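User Behavior Data Warehouse, Part 3: Building the DWD Layer

Previous post: User Behavior Data Warehouse, Part 2: Warehouse Environment Setup and the ODS-Layer Data Loading Script

The DWD layer cleans the ODS-layer data: it removes null values, dirty records, and out-of-range values, converts row-oriented storage to columnar storage, and changes the compression format.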

1. Parsing the DWD-layer start log table

1.1 Creating the start log table

(1) Table DDL

hive (gmall)>

drop table if exists dwd_start_log;

CREATE EXTERNAL TABLE dwd_start_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`entry` string,

`open_ad_type` string,

`action` string,

`loading_time` string,

`detail` string,

`extend1` string

)

PARTITIONED BY (dt string)

location '/user/hive/warehouse/gmall.db';

OK

Time taken: 0.596 seconds

(2) Load data into the start log table

insert overwrite table dwd_start_log

PARTITION (dt='2019-02-10')

select

get_json_object(line,'$.mid') mid_id,

get_json_object(line,'$.uid') user_id,

get_json_object(line,'$.vc') version_code,

get_json_object(line,'$.vn') version_name,

get_json_object(line,'$.l') lang,

get_json_object(line,'$.sr') source,

get_json_object(line,'$.os') os,

get_json_object(line,'$.ar') area,

get_json_object(line,'$.md') model,

get_json_object(line,'$.ba') brand,

get_json_object(line,'$.sv') sdk_version,

get_json_object(line,'$.g') gmail,

get_json_object(line,'$.hw') height_width,

get_json_object(line,'$.t') app_time,

get_json_object(line,'$.nw') network,

get_json_object(line,'$.ln') lng,

get_json_object(line,'$.la') lat,

get_json_object(line,'$.entry') entry,

get_json_object(line,'$.open_ad_type') open_ad_type,

get_json_object(line,'$.action') action,

get_json_object(line,'$.loading_time') loading_time,

get_json_object(line,'$.detail') detail,

get_json_object(line,'$.extend1') extend1

from ods_start_log

where dt='2019-02-10';

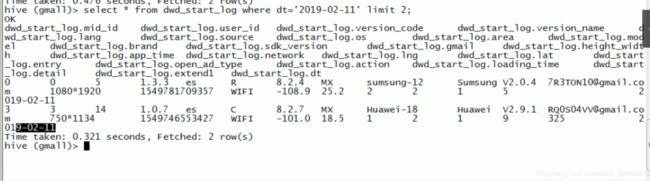

(3) Test

hive (gmall)> select * from dwd_start_log limit 2;

2. DWD-layer start log table loading script

(1) Create the script in the /home/MrZhou/bin directory on hadoop1

[root@hadoop1 bin]# vim dwd_start_log.sh

Put the following content in the script:

#!/bin/bash

# Define variables up front so they are easy to change

APP=gmall

hive=/usr/local/etc/hadoop/module/hive-1.2.1/bin/hive

# Use the date passed as the first argument if there is one; otherwise default to yesterday

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

sql="

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table "$APP".dwd_start_log

PARTITION (dt='$do_date')

select

get_json_object(line,'$.mid') mid_id,

get_json_object(line,'$.uid') user_id,

get_json_object(line,'$.vc') version_code,

get_json_object(line,'$.vn') version_name,

get_json_object(line,'$.l') lang,

get_json_object(line,'$.sr') source,

get_json_object(line,'$.os') os,

get_json_object(line,'$.ar') area,

get_json_object(line,'$.md') model,

get_json_object(line,'$.ba') brand,

get_json_object(line,'$.sv') sdk_version,

get_json_object(line,'$.g') gmail,

get_json_object(line,'$.hw') height_width,

get_json_object(line,'$.t') app_time,

get_json_object(line,'$.nw') network,

get_json_object(line,'$.ln') lng,

get_json_object(line,'$.la') lat,

get_json_object(line,'$.entry') entry,

get_json_object(line,'$.open_ad_type') open_ad_type,

get_json_object(line,'$.action') action,

get_json_object(line,'$.loading_time') loading_time,

get_json_object(line,'$.detail') detail,

get_json_object(line,'$.extend1') extend1

from "$APP".ods_start_log

where dt='$do_date';

"

$hive -e "$sql"

(2) Make the script executable

[root@hadoop1 bin]# chmod 777 dwd_start_log.sh

(3) Run the script

[root@hadoop1 bin]# ./dwd_start_log.sh 2019-02-11

(4) Query the loaded data

select * from dwd_start_log where dt='2019-02-11' limit 2;

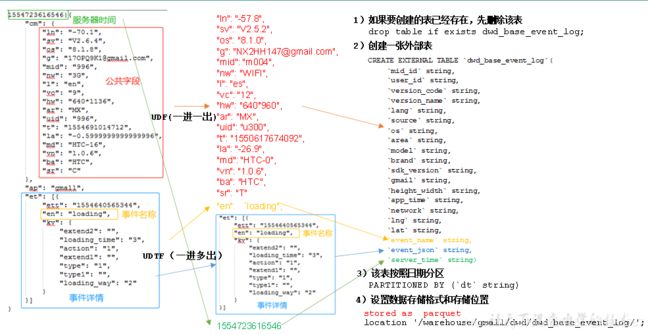

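3. Parsing the DWD-layer event table

Create the base detail table

The detail table stores the detail records converted from the raw ODS-layer table.

(1) Create the event log base detail table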

hive (gmall)>

drop table if exists dwd_base_event_log;

CREATE EXTERNAL TABLE dwd_base_event_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`event_name` string,

`event_json` string,

`server_time` string)

PARTITIONED BY (`dt` string)

stored as parquet

location '/warehouse/gmall/dwd/dwd_base_event_log/';

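(2) Note: event_name and event_json hold the event name and the full event body, respectively. This step splits the one-to-many structure of the raw log, where one log line carries many events, into one row per event. Flattening the raw log this way requires a UDF and a UDTF.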

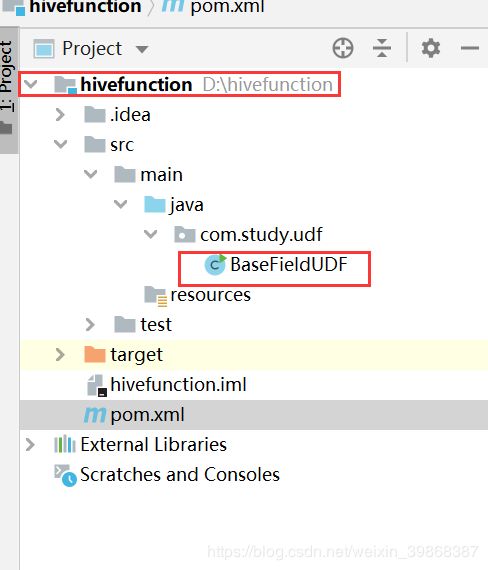

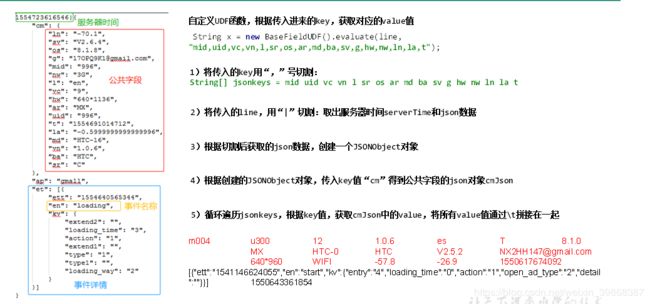
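The flattening described above can be sketched in plain Java. This is only a conceptual illustration, not the Hive implementation: the class name FlattenSketch, the method flatten, and the pre-parsed events array are all assumptions made for the example, and no JSON parsing is done here.

```java
import java.util.ArrayList;
import java.util.List;

// Conceptual sketch: the real job does this in Hive with a UDF plus a UDTF.
final class FlattenSketch {

    // Joins the fields shared by one log line with each of its events,
    // producing one output row per event (the one-to-many split).
    // Each entry of events is {eventName, eventJson}.
    static List<String> flatten(String commonFields, String serverTime, String[][] events) {
        List<String> rows = new ArrayList<>();
        for (String[] event : events) {
            String eventName = event[0];
            String eventJson = event[1];
            rows.add(String.join("\t", commonFields, eventName, eventJson, serverTime));
        }
        return rows;
    }

    public static void main(String[] args) {
        // Hypothetical, already-parsed events taken from a single log line.
        String[][] events = {
            {"display", "{\"en\":\"display\"}"},
            {"loading", "{\"en\":\"loading\"}"},
            {"ad", "{\"en\":\"ad\"}"}
        };
        for (String row : flatten("m7856\tu8739", "1541217850324", events)) {
            System.out.println(row);
        }
    }
}
```

In the Hive version the UDF plays the role of producing commonFields and serverTime, and the UDTF plays the role of the loop that emits one row per event.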

4. Custom UDF (parsing the common fields)

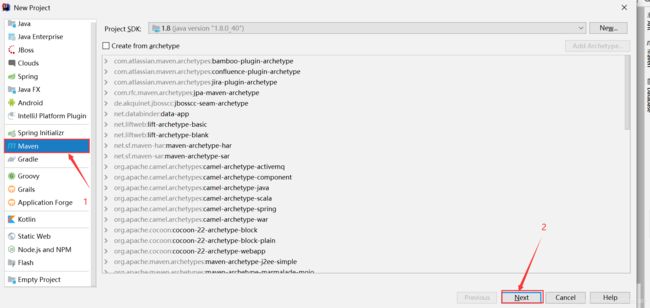

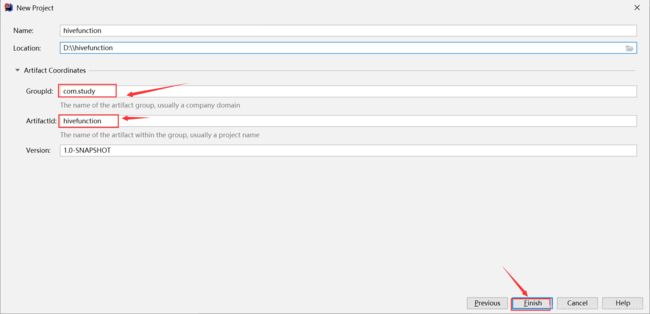

(1) Create a Maven project: hivefunction

(2) Add the following to pom.xml

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<hive.version>1.2.1</hive.version>

</properties>

<dependencies>

<!-- Add the Hive dependency -->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>${hive.version}</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<artifactId>maven-compiler-plugin</artifactId>

<version>2.3.2</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

<plugin>

<artifactId>maven-assembly-plugin</artifactId>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

(3) Create the UDF class BaseFieldUDF.java

package com.study.udf;

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.hive.ql.exec.UDF;

import org.json.JSONException;

import org.json.JSONObject;

public class BaseFieldUDF extends UDF {

// Input parameters: the raw log line and a comma-separated list of keys to extract

public String evaluate(String line, String jsonkeysString) {

StringBuilder sb = new StringBuilder();

// 1. Split out the requested keys: mid, uid, ...

String[] jsonkeys = jsonkeysString.split(",");

// 2. Each line has the form: server time | json

String[] logContents = line.split("\\|");

// 3. Validate

if (logContents.length != 2 || StringUtils.isBlank(logContents[1])) {

return "";

}

try {

// 4. Build a JSON object from logContents[1]

JSONObject jsonObject = new JSONObject(logContents[1]);

// 5. Get the JSON object that holds the common fields

JSONObject cm = jsonObject.getJSONObject("cm");

// 6. Loop over the requested keys

for (int i = 0; i < jsonkeys.length; i++) {

String jsonkey = jsonkeys[i];

if (cm.has(jsonkey)) {

sb.append(cm.getString(jsonkey)).append("\t");

} else {

sb.append("\t");

}

}

// 7. Append the event field and the server time

sb.append(jsonObject.getString("et")).append("\t");

sb.append(logContents[0]).append("\t");

} catch (JSONException e) {

e.printStackTrace();

}

return sb.toString();

}

/**

* Test entry point

* @param args

*/

public static void main(String[] args) {

String line = "1541217850324|{\"cm\":{\"mid\":\"m7856\",\"uid\":\"u8739\",\"ln\":\"-74.8\",\"sv\":\"V2.2.2\",\"os\":\"8.1.3\",\"g\":\"P7XC9126@gmail.com\",\"nw\":\"3G\",\"l\":\"es\",\"vc\":\"6\",\"hw\":\"640*960\",\"ar\":\"MX\",\"t\":\"1541204134250\",\"la\":\"-31.7\",\"md\":\"huawei-17\",\"vn\":\"1.1.2\",\"sr\":\"O\",\"ba\":\"Huawei\"},\"ap\":\"weather\",\"et\":[{\"ett\":\"1541146624055\",\"en\":\"display\",\"kv\":{\"goodsid\":\"n4195\",\"copyright\":\"ESPN\",\"content_provider\":\"CNN\",\"extend2\":\"5\",\"action\":\"2\",\"extend1\":\"2\",\"place\":\"3\",\"showtype\":\"2\",\"category\":\"72\",\"newstype\":\"5\"}},{\"ett\":\"1541213331817\",\"en\":\"loading\",\"kv\":{\"extend2\":\"\",\"loading_time\":\"15\",\"action\":\"3\",\"extend1\":\"\",\"type1\":\"\",\"type\":\"3\",\"loading_way\":\"1\"}},{\"ett\":\"1541126195645\",\"en\":\"ad\",\"kv\":{\"entry\":\"3\",\"show_style\":\"0\",\"action\":\"2\",\"detail\":\"325\",\"source\":\"4\",\"behavior\":\"2\",\"content\":\"1\",\"newstype\":\"5\"}},{\"ett\":\"1541202678812\",\"en\":\"notification\",\"kv\":{\"ap_time\":\"1541184614380\",\"action\":\"3\",\"type\":\"4\",\"content\":\"\"}},{\"ett\":\"1541194686688\",\"en\":\"active_background\",\"kv\":{\"active_source\":\"3\"}}]}";

String x = new BaseFieldUDF().evaluate(line, "mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,nw,ln,la,t");

System.out.println(x);

}

}
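Steps 2 and 3 of the UDF rely on splitting the line on a literal pipe. Because String.split takes a regular expression and an unescaped | means alternation, the pipe is written as "\\|". The sketch below isolates that step; the class name PipeSplitSketch and the method splitLogLine are assumptions made for the example, and trim().isEmpty() only approximates StringUtils.isBlank.

```java
// Sketch of the "server time | json" split and the validation that follows it.
final class PipeSplitSketch {

    // Returns {serverTime, json} for a well-formed line, or an empty array
    // when the line does not split into exactly two non-blank parts.
    static String[] splitLogLine(String line) {
        // "\\|" is the regex for a literal pipe character.
        String[] parts = line.split("\\|");
        // Approximation of StringUtils.isBlank(parts[1]).
        if (parts.length != 2 || parts[1].trim().isEmpty()) {
            return new String[0];
        }
        return parts;
    }

    public static void main(String[] args) {
        String[] ok = splitLogLine("1541217850324|{\"cm\":{}}");
        System.out.println(ok.length + " parts, server time = " + ok[0]);
        System.out.println(splitLogLine("1541217850324|").length + " parts for a line with no JSON");
    }
}
```

One consequence of this check is that a line whose JSON payload itself contains a pipe character splits into more than two parts and is therefore rejected.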

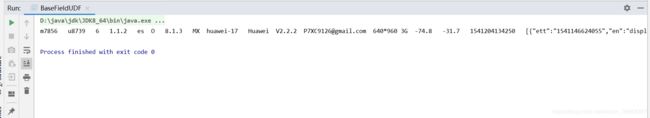

(4) Run the program; the console prints

m7856 u8739 6 1.1.2 es O 8.1.3 MX huawei-17 Huawei V2.2.2 P7XC9126@gmail.com 640*960 3G -74.8 -31.7 1541204134250 [{"ett":"1541146624055","en":"display","kv":{"copyright":"ESPN","content_provider":"CNN","extend2":"5","goodsid":"n4195","action":"2","extend1":"2","place":"3","showtype":"2","category":"72","newstype":"5"}},{"ett":"1541213331817","en":"loading","kv":{"extend2":"","loading_time":"15","action":"3","extend1":"","type1":"","type":"3","loading_way":"1"}},{"ett":"1541126195645","en":"ad","kv":{"entry":"3","show_style":"0","action":"2","detail":"325","source":"4","behavior":"2","content":"1","newstype":"5"}},{"ett":"1541202678812","en":"notification","kv":{"ap_time":"1541184614380","action":"3","type":"4","content":""}},{"ett":"1541194686688","en":"active_background","kv":{"active_source":"3"}}] 1541217850324

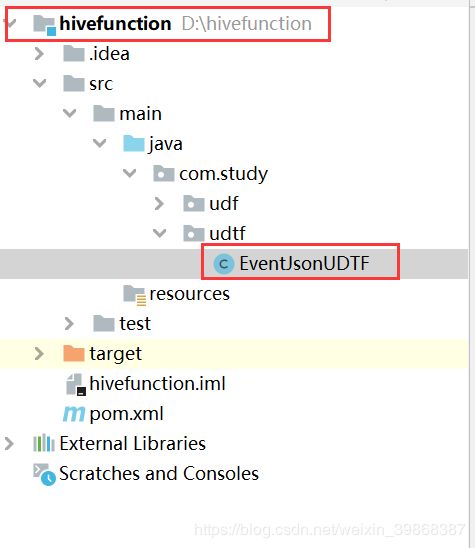
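The UDF output is positional: values are joined with tabs in the order of the key list, and a missing key still contributes an empty slot, so a consumer reads a field by its index. A minimal sketch of that contract follows; the class name PositionalFieldsSketch and both method names are assumptions made for the example, and a plain Map stands in for the cm JSON object.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch of the tab-joined, position-based encoding used by the UDF.
final class PositionalFieldsSketch {

    // One value (or an empty string for a missing key) per requested key,
    // each followed by a tab, in the order the keys were listed.
    static String encode(Map<String, String> cm, String keys) {
        StringBuilder sb = new StringBuilder();
        for (String key : keys.split(",")) {
            sb.append(cm.getOrDefault(key, "")).append("\t");
        }
        return sb.toString();
    }

    // Reads a field by position; the limit of -1 keeps empty fields
    // so that later indexes do not shift.
    static String fieldAt(String encoded, int index) {
        return encoded.split("\t", -1)[index];
    }

    public static void main(String[] args) {
        Map<String, String> cm = new LinkedHashMap<>();
        cm.put("mid", "m7856");
        cm.put("uid", "u8739");
        cm.put("t", "1541204134250");
        String encoded = encode(cm, "mid,uid,vc,t"); // "vc" is absent on purpose
        System.out.println("field 3 = " + fieldAt(encoded, 3));
    }
}
```

Because the index is the only link between a value and its name, the key list passed to the function and the indexes used to read the result have to agree. The test call in main above lists t last, while the Hive query later in this post lists t right after hw, and each uses indexes that match its own order.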

5. Custom UDTF (parsing the individual event fields)

EventJsonUDTF.java

package com.study.udtf;

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;

import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;

import org.json.JSONArray;

import org.json.JSONException;

import java.util.ArrayList;

import java.util.List;

public class EventJsonUDTF extends GenericUDTF {

@Deprecated

public StructObjectInspector initialize(ObjectInspector[] argOIs) throws UDFArgumentException {

List<String> fieldNames = new ArrayList<>();

List<ObjectInspector> fieldTypes = new ArrayList<>();

fieldNames.add("event_name");

fieldTypes.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

fieldNames.add("event_json");

fieldTypes.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

return ObjectInspectorFactory.getStandardStructObjectInspector(fieldNames,fieldTypes);

}

@Override

public void process(Object[] objects) throws HiveException {

// 1. Get the input data

String input = objects[0].toString();

// 2. Validate

if (StringUtils.isBlank(input)){

return;

}else {

try {

JSONArray jsonArray = new JSONArray(input);

if (jsonArray == null){

return;

}

for (int i = 0; i < jsonArray.length(); i++) {

String[] results = new String[2];

try {

// Get the event name

results[0]= jsonArray.getJSONObject(i).getString("en");

results[1] =jsonArray.getString(i);

} catch (JSONException e) {

e.printStackTrace();

continue;

}

// Emit the result row

forward(results);

}

} catch (JSONException e) {

e.printStackTrace();

}

}

}

@Override

public void close() throws HiveException {

}

}


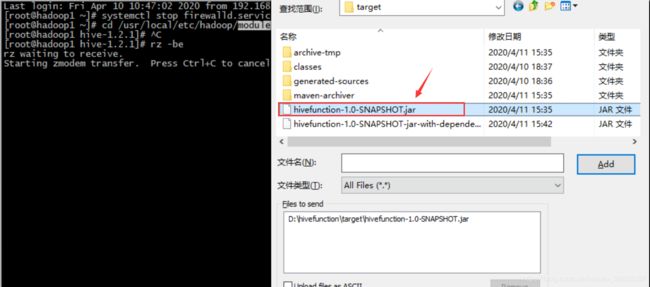

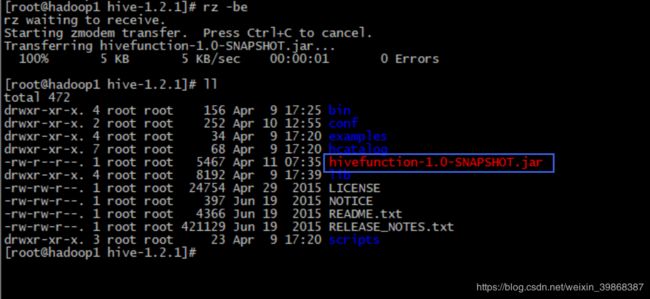

(1) Package the project and upload the jar to the module/hive-1.2.1/ directory on the Linux host, then check that the file is there

(2) Add the jar to Hive's classpath

hive (gmall)> add jar /usr/local/etc/hadoop/module/hive-1.2.1/hivefunction-1.0-SNAPSHOT.jar;

Added [/usr/local/etc/hadoop/module/hive-1.2.1/hivefunction-1.0-SNAPSHOT.jar] to class path

Added resources: [/usr/local/etc/hadoop/module/hive-1.2.1/hivefunction-1.0-SNAPSHOT.jar]

(3) Create temporary functions bound to the compiled Java classes

hive (gmall)> create temporary function base_analizer as 'com.study.udf.BaseFieldUDF';

create temporary function flat_analizer as 'com.study.udtf.EventJsonUDTF';

6. Populating the event log base detail table

(1) Parse the event logs into the base detail table

hive (gmall)>

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_base_event_log

PARTITION (dt='2019-02-10')

select

mid_id,

user_id,

version_code,

version_name,

lang,

source,

os,

area,

model,

brand,

sdk_version,

gmail,

height_width,

app_time,

network,

lng,

lat,

event_name,

event_json,

server_time

from

(

select

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[0] as mid_id,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[1] as user_id,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[2] as version_code,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[3] as version_name,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[4] as lang,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[5] as source,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[6] as os,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[7] as area,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[8] as model,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[9] as brand,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[10] as sdk_version,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[11] as gmail,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[12] as height_width,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[13] as app_time,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[14] as network,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[15] as lng,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[16] as lat,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[17] as ops,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[18] as server_time

from ods_event_log where dt='2019-02-10' and base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la')<>''

) sdk_log lateral view flat_analizer(ops) tmp_k as event_name, event_json;

(2) Test

hive (gmall)> select * from dwd_base_event_log limit 2;