deeplearning_class4: Machine Translation, Attention Mechanisms, and seq2seq Models

1 Machine Translation

Because the resource package for this part was incomplete, I did not study it in much depth and relied on the Tencent AI platform.

2 Attention Mechanisms

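The attention mechanism imitates the internal process of biological observation: it aligns internal experience with external sensation to increase the level of detail observed in certain regions. Attention can quickly extract the important features of sparse data, so it is widely used in natural language processing tasks, especially machine translation. Self-attention is a refinement of attention that reduces the dependence on external information and is better at capturing the internal correlations of data or features. This article uses a text sentiment analysis case to explain how self-attention is applied to weighting word-pair representations in sparse text and how it effectively improves model efficiency.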

2.1 Attention Mechanism Framework

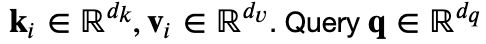

Attention is a general weighted pooling method. Its input consists of two parts:

- a query

- key-value pairs

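The output of the attention layer has the same dimension as the values, $o \in \mathbb{R}^{d_v}$. For a given query, the attention layer computes an attention score against every key and normalizes the scores into weights. The output vector $o$ is the weighted sum of the values, with the weight computed from each key applied to the corresponding value.

Given a score function $\alpha$ and $n$ key-value pairs $(k_1, v_1), \ldots, (k_n, v_n)$, the score for the query $q$ and the $i$-th key is:

$$a_i = \alpha(q, k_i)$$

Applying the softmax function gives the attention weights:

$$b_1, \ldots, b_n = \mathrm{softmax}(a_1, \ldots, a_n)$$

The final output is the weighted sum of the values:

$$o = \sum_{i=1}^{n} b_i v_i$$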
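The three steps above (score, softmax, weighted sum) can be traced by hand on a tiny example. The following is a minimal sketch in plain Python for a single query; the helper names `softmax`, `attention_pool`, and `dot_score`, as well as the choice of a plain dot product for $\alpha$, are assumptions made for illustration and do not come from the original text.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(query, keys, values, score_fn):
    """Return (output, weights) for one query over n key-value pairs."""
    # a_i = alpha(q, k_i)
    scores = [score_fn(query, k) for k in keys]
    # b_1, ..., b_n = softmax(a_1, ..., a_n)
    weights = softmax(scores)
    # o = sum_i b_i * v_i, computed per value dimension
    dim_v = len(values[0])
    output = [sum(b * v[j] for b, v in zip(weights, values)) for j in range(dim_v)]
    return output, weights


def dot_score(q, k):
    # Assumed score function for this sketch: a plain dot product.
    return sum(qi * ki for qi, ki in zip(q, k))


query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
values = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
output, weights = attention_pool(query, keys, values, dot_score)
print(weights)  # three weights that sum to 1
print(output)   # a vector with the same dimension as each value
```

The first and third keys match the query equally well, so they receive equal weights, and the output has the same length as a value vector, in line with $o \in \mathbb{R}^{d_v}$.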

2.2 Masked softmax

    import torch
    from torch import nn


    def SequenceMask(X, X_len, value=-1e6):
        # X: (rows, maxlen) scores; X_len: (rows,) number of valid positions per row.
        maxlen = X.size(1)
        # mask[i, j] is True when position j lies at or beyond the valid length of row i.
        positions = torch.arange(maxlen, dtype=torch.float, device=X.device)
        mask = positions[None, :] >= X_len[:, None]
        # masked_fill returns a new tensor, so the caller's scores are not modified.
        return X.masked_fill(mask, value)


    def masked_softmax(X, valid_length):
        # X: 3-D tensor, valid_length: 1-D or 2-D tensor
        softmax = nn.Softmax(dim=-1)
        if valid_length is None:
            return softmax(X)
        shape = X.shape
        if valid_length.dim() == 1:
            # Repeat each length once per query row, e.g. [2, 3] -> [2, 2, 3, 3].
            valid_length = torch.repeat_interleave(valid_length, shape[1], dim=0)
        else:
            valid_length = valid_length.reshape((-1,))
        # fill masked elements with a large negative, whose exp is 0
        X = SequenceMask(X.reshape((-1, shape[-1])), valid_length.to(X.device))
        return softmax(X).reshape(shape)


    masked_softmax(torch.rand((2, 2, 4), dtype=torch.float), torch.FloatTensor([2, 3]))
    # Example output (the nonzero values vary because the input is random):
    # tensor([[[0.5423, 0.4577, 0.0000, 0.0000],
    #          [0.5290, 0.4710, 0.0000, 0.0000]],
    #
    #         [[0.2969, 0.2966, 0.4065, 0.0000],
    #          [0.3607, 0.2203, 0.4190, 0.0000]]])

2.3 Dot-Product Attention

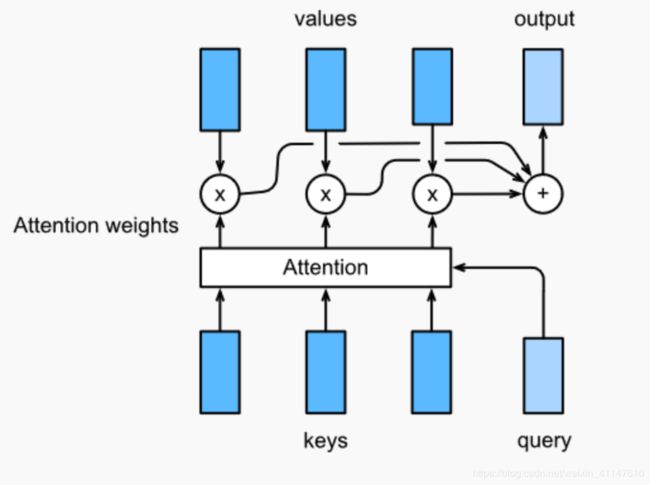

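Dot-product attention assumes that the query and the keys have the same dimension, that is, $q, k_i \in \mathbb{R}^d$ for all $i$. The attention score is the product of the query and the transposed key, usually divided by $\sqrt{d}$ so that the score depends less on the dimension $d$:

$$\alpha(q, k) = \frac{\langle q, k \rangle}{\sqrt{d}}$$

Suppose $Q \in \mathbb{R}^{m \times d}$ holds $m$ queries and $K \in \mathbb{R}^{n \times d}$ holds $n$ keys. All $mn$ scores can then be computed with a single matrix operation:

$$\alpha(Q, K) = \frac{Q K^T}{\sqrt{d}}$$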

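An implementation of this layer should support a batch of queries and key-value pairs. It can also randomly drop some attention weights (dropout) as a form of regularization.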
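Such a layer can be sketched as follows. This is a minimal sketch assuming PyTorch, not code from the original text: the class name `DotProductAttention`, its `dropout` argument, and the convention that `valid_length` gives the number of valid key positions per batch element are all assumptions for illustration. Masking is done inline with `masked_fill` so that the block is self-contained.

```python
import math

import torch
from torch import nn


class DotProductAttention(nn.Module):
    """Scaled dot-product attention with optional length masking (sketch)."""

    def __init__(self, dropout=0.0):
        super().__init__()
        self.dropout = nn.Dropout(dropout)

    def forward(self, query, key, value, valid_length=None):
        # query: (batch, m, d); key: (batch, n, d); value: (batch, n, d_v)
        # valid_length: None, or a (batch,) tensor of valid key counts (assumed convention)
        d = query.shape[-1]
        # scores[b, i, j] = <q_i, k_j> / sqrt(d), shape (batch, m, n)
        scores = torch.bmm(query, key.transpose(1, 2)) / math.sqrt(d)
        if valid_length is not None:
            n = key.shape[1]
            positions = torch.arange(n, device=scores.device)
            lengths = valid_length.to(scores.device)
            # mask[b, 0, j] is True where key position j is padding for batch b
            mask = positions[None, None, :] >= lengths[:, None, None]
            # A large negative score becomes a zero weight after softmax
            scores = scores.masked_fill(mask, -1e6)
        # Dropout on the attention weights acts as regularization during training
        weights = self.dropout(torch.softmax(scores, dim=-1))
        # output: (batch, m, d_v)
        return torch.bmm(weights, value)


attention = DotProductAttention(dropout=0.0)
attention.eval()  # disable dropout so the result is deterministic
keys = torch.ones(2, 10, 2)
values = torch.arange(40, dtype=torch.float32).view(1, 10, 4).repeat(2, 1, 1)
queries = torch.ones(2, 1, 2)
output = attention(queries, keys, values, torch.tensor([2, 6]))
print(output.shape)
```

Because every key in this toy input is identical, the weights are uniform over the valid positions, so each output row is simply the mean of the first 2 (respectively 6) value rows.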

2.4 Multilayer Perceptron Attention

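In multilayer perceptron attention, the query and the keys are first projected into $\mathbb{R}^h$.

For example, with the learnable parameters $W_k \in \mathbb{R}^{h \times d_k}$, $W_q \in \mathbb{R}^{h \times d_q}$, and $v \in \mathbb{R}^h$, the score function is defined as

$$\alpha(k, q) = v^T \tanh(W_k k + W_q q)$$

Since $W_k k + W_q q = [W_k, W_q][k; q]$, this amounts to concatenating the key and the query along the feature dimension and feeding the result to a single-hidden-layer perceptron with hidden size $h$ and output size 1. The hidden activation function is tanh, and no bias is used.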

    # Save to the d2l package.
    class MLPAttention(nn.Module):
        def __init__(self, units, ipt_dim, dropout, **kwargs):
            super(MLPAttention, self).__init__(**kwargs)
            # Project keys and queries to `units` hidden features, without bias.
            # Both projections take `ipt_dim` inputs, so the query and key
            # feature sizes must be equal in this implementation.
            self.W_k = nn.Linear(ipt_dim, units, bias=False)
            self.W_q = nn.Linear(ipt_dim, units, bias=False)
            self.v = nn.Linear(units, 1, bias=False)
            self.dropout = nn.Dropout(dropout)

        def forward(self, query, key, value, valid_length):
            # Apply W_q to the queries and W_k to the keys.
            query, key = self.W_q(query), self.W_k(key)
            # expand query to (batch_size, #queries, 1, units), and key to
            # (batch_size, 1, #kv_pairs, units), then add them with broadcasting.
            # tanh is the hidden activation from the score function above.
            features = torch.tanh(query.unsqueeze(2) + key.unsqueeze(1))
            # scores: (batch_size, #queries, #kv_pairs)
            scores = self.v(features).squeeze(-1)
            attention_weights = self.dropout(masked_softmax(scores, valid_length))
            return torch.bmm(attention_weights, value)