Production Environment: Multi-Node K8S Deployment from Binaries

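Contents

- 1. Overview of the K8S multi-master cluster environment

- 2. Master02 server configuration

- 3. Front-end load balancer configuration

- 4. Pointing the node configuration at the VIP address

- 5. Cluster verification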

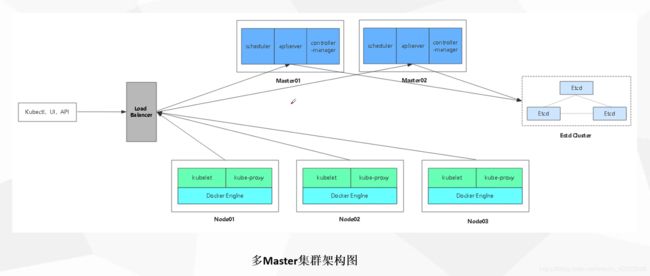

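1. Overview of the K8S multi-master cluster environment

This cluster is an upgrade of the earlier single-master K8S cluster. It adds a second master node and places nginx in front of the masters as a proxy, which improves the cluster's availability and its capacity to handle API server load.

Reference: the complete single-master K8S deployment (earlier article).

Cluster topology: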

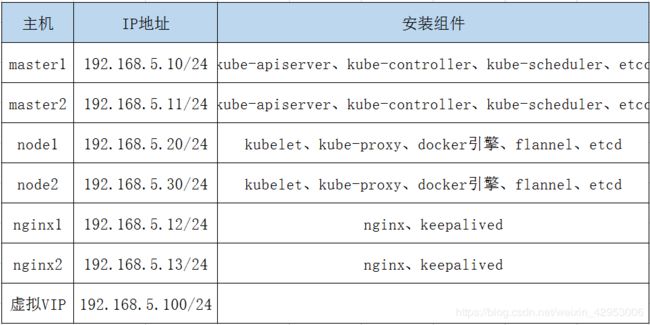

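Server plan:

All IP addresses were already listed in the server certificate during the earlier single-master K8S deployment and cannot be changed.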

2. Master02 server configuration

- Copy the configuration files and startup scripts from master01 to master02

### Set SELinux to permissive mode

[root@k8s_master ~]# setenforce 0

## Copy the configuration files to master02

[root@k8s_master ~]# scp -r /opt/kubernetes/ root@192.168.5.11:/opt

## Copy the systemd unit files to master02

[root@k8s_master ~]# scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@192.168.5.11:/usr/lib/systemd/system/

### Copy the etcd directory (including its certificates) to master02

[root@k8s_master ~]# scp -r /opt/etcd/ root@192.168.5.11:/opt

- On master02, edit the configuration file copied from master01

[root@k8s_master ~]# cd /opt/kubernetes/cfg

[root@k8s_master cfg]# vi kube-apiserver

--bind-address=192.168.5.11 \

--advertise-address=192.168.5.11 \
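The two address edits above can also be scripted. The following is a minimal sketch, assuming the flags appear in the file as `--bind-address=<IPv4>` and `--advertise-address=<IPv4>`; the function name `set_apiserver_address` and the temporary demo file are hypothetical and not part of the original procedure.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite --bind-address and --advertise-address in a
# kube-apiserver options file so that both carry this master's own IP.
set_apiserver_address() {
  local cfg="$1" ip="$2"
  # Write through a temp file and copy back, so the original file keeps its
  # owner and mode.
  sed -E "s/(--(bind|advertise)-address=)[0-9.]+/\1${ip}/g" "$cfg" > "$cfg.tmp" \
    && cat "$cfg.tmp" > "$cfg" \
    && rm -f "$cfg.tmp"
}

# Demo on a throwaway file; on master02 the real file would be
# /opt/kubernetes/cfg/kube-apiserver.
demo_cfg="$(mktemp)"
printf '%s\n' \
  '--etcd-servers=https://192.168.5.10:2379 \' \
  '--bind-address=192.168.5.10 \' \
  '--advertise-address=192.168.5.10 \' > "$demo_cfg"

set_apiserver_address "$demo_cfg" 192.168.5.11
cat "$demo_cfg"
```

Only the two listen/advertise flags are touched; other flags that mention an IP, such as the etcd endpoints, are left as they were.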

- Start the three control-plane services on master02

[root@k8s_master cfg]# systemctl start kube-apiserver.service

[root@k8s_master cfg]# systemctl start kube-controller-manager

[root@k8s_master cfg]# systemctl start kube-scheduler

### Add the Kubernetes binaries to PATH so the shell can find them

[root@k8s_master cfg]# echo 'PATH=/opt/kubernetes/bin:$PATH' >> /etc/profile

[root@k8s_master cfg]# source /etc/profile

### Check the cluster status

[root@k8s_master cfg]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.5.20 Ready <none> 5d20h v1.12.3

192.168.5.30 Ready <none> 5d20h v1.12.3
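When this check is repeated on several masters, it can help to filter the listing for nodes that are not ready. The sketch below assumes the column layout shown above (NAME first, STATUS second); the function name `not_ready_nodes` and the `NotReady` sample row are invented for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Canned sample in the same layout as the listing above; the NotReady row is
# made up for the demo.
sample='NAME           STATUS     ROLES    AGE     VERSION
192.168.5.20   Ready      <none>   5d20h   v1.12.3
192.168.5.30   NotReady   <none>   5d20h   v1.12.3'

printf '%s\n' "$sample" | not_ready_nodes

# On a master the live form would be:
#   kubectl get nodes | not_ready_nodes
```

An empty result means every listed node reports `Ready`. A compound status such as `Ready,SchedulingDisabled` is also reported by this filter, which may or may not be what is wanted.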

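3. Front-end load balancer configuration

- Configuration for both nginx servers

## Stop the firewall and set SELinux to permissive mode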

systemctl stop firewalld

setenforce 0

## Install the build dependencies and download nginx

yum install -y wget pcre-devel gcc gcc-c++ make zlib zlib-devel

wget http://nginx.org/download/nginx-1.12.2.tar.gz

## Extract and compile

tar zxvf nginx-1.12.2.tar.gz -C /opt

cd /opt/nginx-1.12.2/

./configure --prefix=/usr/local/nginx --with-stream

make && make install

### Add layer-4 forwarding (the stream block goes at the top level of nginx.conf, outside the http block)

vim /usr/local/nginx/conf/nginx.conf

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /usr/local/nginx/logs/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.5.10:6443;

server 192.168.5.11:6443;

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}
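If the list of masters changes later, the upstream part of this block is the only piece that needs to grow. As a rough sketch, the block can be generated from a port and a list of master IPs; the function name `render_stream_block` is hypothetical, and the logging directives from the configuration above are left out to keep the example short.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an nginx stream block that balances one TCP port
# across the given API server IPs.
render_stream_block() {
  local port="$1" ip
  shift
  printf 'stream {\n'
  printf '    upstream k8s-apiserver {\n'
  for ip in "$@"; do
    printf '        server %s:%s;\n' "$ip" "$port"
  done
  printf '    }\n'
  printf '    server {\n'
  printf '        listen %s;\n' "$port"
  printf '        proxy_pass k8s-apiserver;\n'
  printf '    }\n'
  printf '}\n'
}

# The two masters used in this article.
render_stream_block 6443 192.168.5.10 192.168.5.11
```

After pasting the result into nginx.conf, running `/usr/local/nginx/sbin/nginx -t` checks the syntax before the service is started or reloaded.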

- Start nginx

[root@nginx2 nginx-1.12.2]# /usr/local/nginx/sbin/nginx

[root@nginx2 nginx-1.12.2]# ss -napt | grep nginx

LISTEN 0 128 *:6443 *:* users:(("nginx",pid=16825,fd=7),("nginx",pid=16824,fd=7))

LISTEN 0 128 *:80 *:* users:(("nginx",pid=16825,fd=8),("nginx",pid=16824,fd=8))

- Configure keepalived: nginx1 configuration

## Install keepalived

yum install keepalived -y

## Edit the keepalived configuration

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# Notification recipient addresses

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

# Notification sender address

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/usr/local/nginx/sbin/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

interface ens33

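virtual_router_id 51 # VRRP router ID; unique per VRRP instance, identical on master and backup

priority 100 # Priority; the backup server uses 90

advert_int 1 # VRRP advertisement (heartbeat) interval; default is 1 second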

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.5.100/24

}

track_script {

check_nginx

}

}

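### Create the nginx health-check script

vim /usr/local/nginx/sbin/check_nginx.sh

#!/bin/bash

# Count nginx processes, excluding the grep itself and this script's own PID

count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

    # No nginx process left: stop keepalived so the VIP moves to the other node

    systemctl stop keepalived

fi

## Make the script executable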

chmod +x /usr/local/nginx/sbin/check_nginx.sh
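The check above boils down to "is at least one nginx process present, and if not, stop keepalived". A small sketch of the same idea that matches process names exactly instead of filtering `grep` output follows; the function names `count_nginx` and `nginx_alive` are hypothetical, and the demo feeds canned process lists rather than live `ps` output.

```shell
#!/usr/bin/env bash
# Hypothetical helpers. Both read one process name per line on stdin.

# Print how many lines are exactly "nginx" (prints 0 when there are none).
count_nginx() {
  grep -cx 'nginx' || true
}

# Exit 0 when at least one nginx process is listed, non-zero otherwise.
nginx_alive() {
  [ "$(count_nginx)" -gt 0 ]
}

# Demo with canned process lists.
printf 'systemd\nnginx\nnginx\nsshd\n' | nginx_alive && echo "nginx running"
printf 'systemd\nsshd\n' | nginx_alive || echo "nginx down"

# On the load balancer the live form would be something like:
#   ps -eo comm= | nginx_alive || systemctl stop keepalived
```

Matching on the command name alone avoids having to exclude the `grep` process and the script's own PID, since neither is named `nginx`.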

- nginx2 configuration

## Install keepalived

yum install keepalived -y

## Edit the keepalived configuration

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# Notification recipient addresses

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

# Notification sender address

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_BACKUP

}

vrrp_script check_nginx {

script "/usr/local/nginx/sbin/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

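virtual_router_id 51 # VRRP router ID; unique per VRRP instance, identical on master and backup

priority 90 # Priority; lower than the master's 100

advert_int 1 # VRRP advertisement (heartbeat) interval; default is 1 second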

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.5.100/24

}

track_script {

check_nginx

}

}

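### Create the nginx health-check script

vim /usr/local/nginx/sbin/check_nginx.sh

#!/bin/bash

# Count nginx processes, excluding the grep itself and this script's own PID

count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

    # No nginx process left: stop keepalived so the VIP moves to the other node

    systemctl stop keepalived

fi

## Make the script executable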

chmod +x /usr/local/nginx/sbin/check_nginx.sh

- Start keepalived on both hosts and check the floating address

## Start the service

systemctl restart keepalived.service

## The floating address 192.168.5.100 should now be visible on nginx1

[root@nginx1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:15:d7:e0 brd ff:ff:ff:ff:ff:ff

inet 192.168.5.12/24 brd 192.168.5.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.5.100/24 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe15:d7e0/64 scope link

valid_lft forever preferred_lft forever
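Reading the full `ip a` listing by eye works, but during failover tests it is handy to ask a yes/no question instead. The sketch below assumes `ip` output in the format shown above; the function name `holds_vip` is hypothetical, and the demo uses canned lines rather than a live interface.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ip addr` output on stdin and exit 0 when the
# given IPv4 address is configured (any prefix length), non-zero otherwise.
holds_vip() {
  local vip="$1"
  # Fixed-string match on "inet <vip>/" so that 192.168.5.10 does not match
  # 192.168.5.100.
  grep -Fq "inet ${vip}/"
}

# Canned sample taken from the nginx1 listing above.
sample='    inet 192.168.5.12/24 brd 192.168.5.255 scope global ens33
    inet 192.168.5.100/24 scope global secondary ens33'

if printf '%s\n' "$sample" | holds_vip 192.168.5.100; then
  echo "this host currently holds the VIP"
fi

# On nginx1 or nginx2 the live form would be:
#   ip -4 addr show dev ens33 | holds_vip 192.168.5.100
```

Stopping nginx on nginx1 and running the live form on both hosts should show the address moving to nginx2, provided the health-check script is in place.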

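4. Pointing the node configuration at the VIP address

- Edit the configuration files on both nodes

## On both nodes, edit the following three kubeconfig files and change the API server address to the VIP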

vim /opt/kubernetes/cfg/bootstrap.kubeconfig

vim /opt/kubernetes/cfg/kube-proxy.kubeconfig

vim /opt/kubernetes/cfg/kubelet.kubeconfig

// Change the server line to: server: https://192.168.5.100:6443
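Editing three files by hand on every node invites typos, so the same change can be applied with `sed`. This is a minimal sketch, assuming each kubeconfig has a single `server:` line; the function name `point_at_vip` and the temporary demo file are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set the `server:` line of each given kubeconfig file to
# the supplied API endpoint URL, keeping the line's indentation.
point_at_vip() {
  local url="$1" f
  shift
  for f in "$@"; do
    # Write through a temp file and copy back, so the file keeps its owner
    # and mode.
    sed -E "s#^([[:space:]]*server:[[:space:]]*).*#\1${url}#" "$f" > "$f.tmp" \
      && cat "$f.tmp" > "$f" \
      && rm -f "$f.tmp"
  done
}

# Demo on a throwaway file shaped like a kubeconfig cluster entry.
demo="$(mktemp)"
printf '%s\n' \
  'clusters:' \
  '- cluster:' \
  '    server: https://192.168.5.10:6443' \
  '  name: kubernetes' > "$demo"

point_at_vip https://192.168.5.100:6443 "$demo"
grep 'server:' "$demo"
```

On a node, the call would take the three files listed above as arguments. The services then need a restart to pick up the new address; assuming the units are named `kubelet` and `kube-proxy` (the unit names are not shown in this article), that would be `systemctl restart kubelet kube-proxy`.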

- Restart the services on both nodes and verify the change

[root@k8s_node2 ~]# cd /opt/kubernetes/cfg/

[root@k8s_node2 cfg]# grep 100 *

bootstrap.kubeconfig: server: https://192.168.5.100:6443

kubelet.kubeconfig: server: https://192.168.5.100:6443

kube-proxy.kubeconfig: server: https://192.168.5.100:6443

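5. Cluster verification

- Verify load balancing

Because the nodes now point at the VIP, restarting kubelet sends its requests to the VIP, and nginx forwards them to one of the master nodes behind it. The nginx access log records this: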

[root@localhost ~]# tail /usr/local/nginx/logs/k8s-access.log

192.168.5.30 192.168.5.10:6443, 192.168.5.11:6443 - [06/May/2020:14:05:06 +0800] 502 0, 0

192.168.5.30 192.168.5.11:6443, 192.168.5.10:6443 - [06/May/2020:14:36:38 +0800] 200 0, 1566
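With more traffic, a per-status summary of this log is easier to read than the raw lines. The sketch below relies on the `log_format` defined earlier, where the status is the first field after the closing bracket of the timestamp; the function name `count_by_status` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read k8s-access.log lines (from stdin or from files
# given as arguments) and print "<status> <count>" per status code, sorted.
count_by_status() {
  awk '{
    i = index($0, "] ")                 # end of the [time_local] field
    if (i == 0) next                    # skip lines in another format
    split(substr($0, i + 2), rest, " ") # rest[1] is the status
    n[rest[1]]++
  }
  END { for (s in n) print s, n[s] }' "$@" | sort
}

# The two log lines shown above.
sample='192.168.5.30 192.168.5.10:6443, 192.168.5.11:6443 - [06/May/2020:14:05:06 +0800] 502 0, 0
192.168.5.30 192.168.5.11:6443, 192.168.5.10:6443 - [06/May/2020:14:36:38 +0800] 200 0, 1566'

printf '%s\n' "$sample" | count_by_status
# prints:
# 200 1
# 502 1

# On the load balancer:
#   count_by_status /usr/local/nginx/logs/k8s-access.log
```

A line that lists two upstream addresses means nginx tried the first one and then moved on to the second, so the upstream field cannot be split on whitespace alone; anchoring on the timestamp bracket avoids that problem.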

- Create a test pod on master01

[root@k8s_master ~]# kubectl run nginx --image=nginx

[root@k8s_master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-dbddb74b8-4xbcz 1/1 Running 0 17m

- Check which node the pod was scheduled on

[root@k8s_master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-dbddb74b8-4xbcz 1/1 Running 0 18m 172.17.56.2 192.168.5.20 <none>

- Access the pod from a node, then view its log

[root@k8s_node1 cfg]# curl 172.17.56.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

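## Bind the anonymous user to cluster-admin so the log can be read (this grants full access to unauthenticated requests; test environments only)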

[root@k8s_master ~]# kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

## View the pod log from the master node

[root@k8s_master ~]# kubectl logs nginx-dbddb74b8-4xbcz

172.17.56.1 - - [05/May/2020:17:46:01 +0000] "GET / HTTP/1.1" 200 612 "-" "Mozilla/5.0 (X11; Linux x86_64; rv:52.0) Gecko/20100101 Firefox/52.0" "-"