To Let Everyone See a K8S Dashboard Demo, I Created a "Read-Only User"

Preface

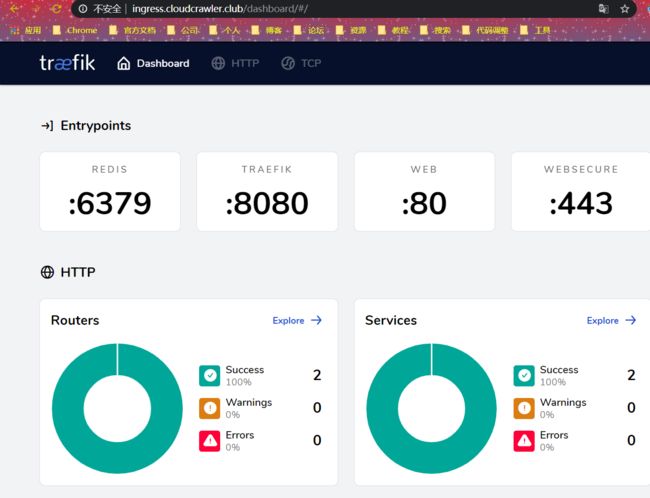

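Over the past couple of days I found some time to play with a Kubernetes cluster and Traefik. Many friends messaged me asking to see a live Kubernetes Dashboard demo, so I've simply opened these two services up to the public!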

PS: All the related code is in the GitHub repository https://github.com/lateautumn4lin/KubernetesResearch/tree/master/ClusterEcology/generate_readonly

1. Two steps to expose the services

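To expose services from the Kubernetes cluster I used nothing more than the simplest approach (experts, please go easy on me). Making a service reachable from the public internet takes just two steps, described below. (PS: under the hood I expose the cluster through Traefik. Reaching Traefik by domain name would normally require a local hosts-file entry on your machine, so to save you that step I added subdomain DNS records to my own domain instead.)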

- Subdomain DNS records

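My domain is fixed, namely cloudcrawler.club, so every service I provide from now on lives under it, for example xxx.cloudcrawler.club. So that requests to xxx.cloudcrawler.club reach my services, I added a subdomain record in the DNS settings whose value is the IP address of my Kubernetes cluster's master node. This guarantees that visiting xxx.cloudcrawler.club lands on the cluster's services after Traefik has routed the request.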

- Service setup

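Strictly speaking, I've listed these steps in the wrong order: the services should be created first and DNS configured afterwards. You can also look at it the other way round, though. Every service has a client side and a server side. The client side is the user, who only knows how to reach the service (via xxx.cloudcrawler.club) and doesn't care how the server side is built, so setting up that user-facing entry point first isn't really backwards. Plenty of companies announce a feature first and build it afterwards, haha.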

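Enough of that; back to setting up the services. There are two dashboards to expose. The Traefik Dashboard is itself read-only, so exposing it cannot affect the applications. The Kubernetes Dashboard is different: it lets users perform operations that affect the cluster and its applications, so I need to create a "read-only user" for visitors to browse with.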
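Both dashboards end up behind the same IP: each subdomain record points at the master node, and Traefik decides which backend to serve by looking at the requested hostname. As a rough illustration of that host-based routing (a toy model, not Traefik's actual code), here is a minimal Python sketch; the subdomains and backend names in ROUTES are hypothetical placeholders, not the real addresses of this demo.

```python
from typing import Optional

# Hypothetical routing table: every subdomain resolves (via DNS) to the same
# master-node IP, and the proxy then picks a backend by the Host header.
ROUTES = {
    "traefik.cloudcrawler.club": "traefik-dashboard",
    "dashboard.cloudcrawler.club": "kubernetes-dashboard",
}


def route(host_header: str) -> Optional[str]:
    """Return the backend for a Host header, or None if no rule matches."""
    # Host headers may carry a port ("name:80") and are case-insensitive;
    # this simple split only handles domain names, not IPv6 literals.
    host = host_header.split(":", 1)[0].strip().lower()
    return ROUTES.get(host)
```

This is also why no extra public IPs or ports are needed per service: adding a service means adding one DNS record and one routing rule.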

2. Understanding how services and roles relate in a Kubernetes cluster

Before creating the "read-only user", let's first understand how the official documentation defines users in a Kubernetes cluster.

In short, Kubernetes adopts RBAC (Role-Based Access Control): it separates Roles from Subjects and then connects the two through Bindings, which makes it easier to control user permissions at a fine granularity.
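To make the Role / Subject / Binding split concrete, here is a toy Python model of the idea. It is a simplified sketch with names of my own choosing, not the API server's authorizer: it ignores wildcards (*), resourceNames and namespaces. It does reflect one real property of Kubernetes RBAC: rules are purely additive, so a request is allowed as soon as any binding grants it, and denied otherwise.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple

# A subject is identified as (kind, namespace, name); namespace is "" for Users.
Subject = Tuple[str, str, str]


@dataclass(frozen=True)
class Rule:
    """One policy rule: these verbs on these resources in these API groups."""
    api_groups: FrozenSet[str]
    resources: FrozenSet[str]
    verbs: FrozenSet[str]


@dataclass
class ClusterRole:
    name: str
    rules: List[Rule]


@dataclass
class ClusterRoleBinding:
    """Links one role to any number of subjects."""
    role: ClusterRole
    subjects: List[Subject]


def is_allowed(bindings: List[ClusterRoleBinding], subject: Subject,
               verb: str, api_group: str, resource: str) -> bool:
    """Allow if any binding for `subject` has a rule matching the request."""
    for binding in bindings:
        if subject not in binding.subjects:
            continue
        for rule in binding.role.rules:
            if (api_group in rule.api_groups
                    and resource in rule.resources
                    and verb in rule.verbs):
                return True
    return False  # nothing matched: denied by default
```

For example, binding a get/list/watch rule on apps/deployments to a dashboard-readonly ServiceAccount allows list but not delete, which matches the behaviour we will see in the Dashboard later.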

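Kubernetes further distinguishes by scope: Role and RoleBinding apply within a namespace, while ClusterRole and ClusterRoleBinding apply cluster-wide. It also distinguishes between people and services: a Subject can be a User, a Group, or a ServiceAccount. Below we will use ClusterRole, ServiceAccount and ClusterRoleBinding. The idea is to create a ClusterRole with read-only permissions and bind it to a new ServiceAccount through a ClusterRoleBinding. When logging in, what we actually do is fetch the ServiceAccount's token and hand it to the Dashboard service, which then uses it to access the cluster and retrieve information.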
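The token you paste into the Dashboard is presented to the API server as a bearer token. The sketch below only builds such a request with Python's standard library to show where the token goes; the API server address is a hypothetical placeholder, and nothing is sent.

```python
import urllib.request

# Hypothetical API server address; a real one comes from your kubeconfig.
API_SERVER = "https://192.0.2.10:6443"


def build_list_nodes_request(token: str) -> urllib.request.Request:
    """Build (but do not send) GET /api/v1/nodes authenticated by a
    ServiceAccount token in the standard Authorization: Bearer header."""
    return urllib.request.Request(
        f"{API_SERVER}/api/v1/nodes",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )
```

With the dashboard-readonly account from section 3, the API server would answer this particular request with 403 Forbidden, because the view role does not cover nodes.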

3. Creating a read-only user with the built-in view role

Kubernetes ships with a default ClusterRole called view, which is essentially a read-only role. Let's look at exactly which permissions it has:

```
[root@master1 readonly]# kubectl describe clusterrole view
Name:         view
Labels:       kubernetes.io/bootstrapping=rbac-defaults
              rbac.authorization.k8s.io/aggregate-to-edit=true
Annotations:  rbac.authorization.kubernetes.io/autoupdate: true
PolicyRule:
  Resources                                    Non-Resource URLs  Resource Names  Verbs
  ---------                                    -----------------  --------------  -----
  bindings                                     []                 []              [get list watch]
  configmaps                                   []                 []              [get list watch]
  endpoints                                    []                 []              [get list watch]
  events                                       []                 []              [get list watch]
  limitranges                                  []                 []              [get list watch]
  namespaces/status                            []                 []              [get list watch]
  namespaces                                   []                 []              [get list watch]
  persistentvolumeclaims/status                []                 []              [get list watch]
  persistentvolumeclaims                       []                 []              [get list watch]
  pods/log                                     []                 []              [get list watch]
  pods/status                                  []                 []              [get list watch]
  pods                                         []                 []              [get list watch]
  replicationcontrollers/scale                 []                 []              [get list watch]
  replicationcontrollers/status                []                 []              [get list watch]
  replicationcontrollers                       []                 []              [get list watch]
  resourcequotas/status                        []                 []              [get list watch]
  resourcequotas                               []                 []              [get list watch]
  serviceaccounts                              []                 []              [get list watch]
  services/status                              []                 []              [get list watch]
  services                                     []                 []              [get list watch]
  controllerrevisions.apps                     []                 []              [get list watch]
  daemonsets.apps/status                       []                 []              [get list watch]
  daemonsets.apps                              []                 []              [get list watch]
  deployments.apps/scale                       []                 []              [get list watch]
  deployments.apps/status                      []                 []              [get list watch]
  deployments.apps                             []                 []              [get list watch]
  replicasets.apps/scale                       []                 []              [get list watch]
  replicasets.apps/status                      []                 []              [get list watch]
  replicasets.apps                             []                 []              [get list watch]
  statefulsets.apps/scale                      []                 []              [get list watch]
  statefulsets.apps/status                     []                 []              [get list watch]
  statefulsets.apps                            []                 []              [get list watch]
  horizontalpodautoscalers.autoscaling/status  []                 []              [get list watch]
  horizontalpodautoscalers.autoscaling         []                 []              [get list watch]
  cronjobs.batch/status                        []                 []              [get list watch]
  cronjobs.batch                               []                 []              [get list watch]
  jobs.batch/status                            []                 []              [get list watch]
  jobs.batch                                   []                 []              [get list watch]
  daemonsets.extensions/status                 []                 []              [get list watch]
  daemonsets.extensions                        []                 []              [get list watch]
  deployments.extensions/scale                 []                 []              [get list watch]
  deployments.extensions/status                []                 []              [get list watch]
  deployments.extensions                       []                 []              [get list watch]
  ingresses.extensions/status                  []                 []              [get list watch]
  ingresses.extensions                         []                 []              [get list watch]
  networkpolicies.extensions                   []                 []              [get list watch]
  replicasets.extensions/scale                 []                 []              [get list watch]
  replicasets.extensions/status                []                 []              [get list watch]
  replicasets.extensions                       []                 []              [get list watch]
  replicationcontrollers.extensions/scale      []                 []              [get list watch]
  nodes.metrics.k8s.io                         []                 []              [get list watch]
  pods.metrics.k8s.io                          []                 []              [get list watch]
  ingresses.networking.k8s.io/status           []                 []              [get list watch]
  ingresses.networking.k8s.io                  []                 []              [get list watch]
  networkpolicies.networking.k8s.io            []                 []              [get list watch]
  poddisruptionbudgets.policy/status           []                 []              [get list watch]
  poddisruptionbudgets.policy                  []                 []              [get list watch]
```

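As you can see, for every resource it covers, its access is limited to get, list and watch, none of which allow writes. So, just as we originally bound a user to cluster-admin, we can create a new user and bind it to the default view role: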
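Checking a table this long by eye is error-prone, so here is a small Python sketch that parses the PolicyRule table of kubectl describe clusterrole output and reports any resource whose verbs go beyond get/list/watch. It assumes the column layout shown above and only handles resource rows (not Non-Resource URL rows); the function names are hypothetical.

```python
from typing import Dict, Set

READ_ONLY_VERBS = {"get", "list", "watch"}


def parse_policy_rules(describe_output: str) -> Dict[str, Set[str]]:
    """Map each resource row of `kubectl describe clusterrole` to its verbs."""
    rules: Dict[str, Set[str]] = {}
    in_table = False
    for line in describe_output.splitlines():
        stripped = line.strip()
        if stripped.startswith("---------"):
            in_table = True  # rule rows start after the dashed separator
            continue
        if not in_table or not stripped:
            continue
        resource = stripped.split()[0]
        # The Verbs column is the last bracketed group, e.g. "[get list watch]".
        verbs = stripped[stripped.rindex("[") + 1:stripped.rindex("]")].split()
        rules[resource] = set(verbs)
    return rules


def write_verbs(rules: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Keep only resources that grant anything beyond get/list/watch."""
    return {res: verbs - READ_ONLY_VERBS
            for res, verbs in rules.items()
            if verbs - READ_ONLY_VERBS}
```

Fed the view output above, write_verbs(parse_policy_rules(text)) should return an empty dict.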

```shell
kubectl create sa dashboard-readonly -n kube-system
kubectl create clusterrolebinding dashboard-readonly --clusterrole=view --serviceaccount=kube-system:dashboard-readonly
```

These commands create a ServiceAccount named dashboard-readonly and bind it to the view role. To see its secret, run kubectl describe secret -n=kube-system dashboard-readonly-token-<random suffix> (list all secrets with kubectl get secret -n=kube-system to find the exact name). The secret contains a token; copy that token into the Dashboard login page to log in.
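If you would rather script this than read kubectl describe, note that kubectl get secret <name> -n kube-system -o json returns the token base64-encoded under .data.token. The Python sketch below (helper names are hypothetical) picks the <serviceaccount>-token-<suffix> secret from a list of names and decodes its token. One caveat: from Kubernetes 1.24 on, these token Secrets are no longer created automatically for ServiceAccounts; on such clusters, kubectl create token dashboard-readonly -n kube-system issues a short-lived token instead.

```python
import base64
import json
from typing import Iterable, Optional


def find_token_secret(secret_names: Iterable[str], service_account: str) -> Optional[str]:
    """Return the first '<service_account>-token-<suffix>' name, if any."""
    prefix = f"{service_account}-token-"
    for name in secret_names:
        if name.startswith(prefix):
            return name
    return None


def token_from_secret_json(secret_json: str) -> str:
    """Decode .data.token from `kubectl get secret <name> -o json` output.

    Values under .data are base64-encoded in the API object.
    """
    secret = json.loads(secret_json)
    return base64.b64decode(secret["data"]["token"]).decode("utf-8")
```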

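After logging in, open any Deployment and you will see that the Scale, Edit and Delete actions are still shown in the upper-left corner. Don't worry: if you try Edit or Scale, the operation does not succeed, even though no message is shown. If you click Delete, an error appears, as in the screenshot below, saying that the dashboard-readonly user has no permission to delete.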
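Instead of clicking through the Dashboard, the same permissions can be checked from the command line with kubectl auth can-i plus kubectl's --as impersonation flag. Here is a small Python helper (hypothetical names) that builds those commands for a ServiceAccount; running them requires a kubeconfig that is allowed to impersonate users, such as the cluster-admin one.

```python
from typing import List, Sequence, Tuple


def sa_username(namespace: str, name: str) -> str:
    """The username the API server uses for a ServiceAccount."""
    return f"system:serviceaccount:{namespace}:{name}"


def can_i_commands(namespace: str, name: str,
                   checks: Sequence[Tuple[str, str]]) -> List[List[str]]:
    """Build `kubectl auth can-i VERB RESOURCE --as=...` argument lists.

    Each (verb, resource) pair becomes one command that impersonates the
    ServiceAccount.
    """
    user = sa_username(namespace, name)
    return [["kubectl", "auth", "can-i", verb, resource, f"--as={user}"]
            for verb, resource in checks]
```

Running each list with subprocess.run prints yes or no; with the view binding, list pods should come back yes and delete deployments no.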

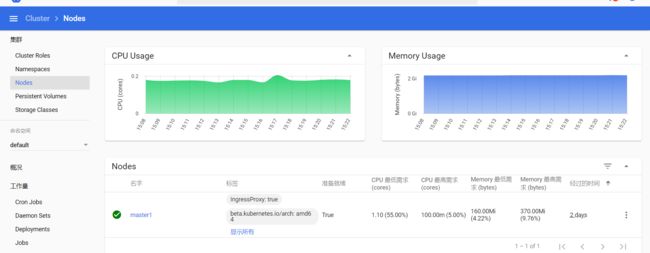

4. Creating a truly read-only user

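Above, we created a user with read-only permissions by binding it to the view role. In practice, however, you will find that it is not a read-only user in the full sense: it lacks permissions on some cluster-level resources, such as Nodes and Persistent Volumes. For example, clicking the Nodes tab in the left sidebar produces a permission error.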
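The root cause is that view simply contains no rules for these cluster-scoped resources. A quick set comparison in Python makes the gap visible. The view resource list is copied from the describe output earlier in this post, while the page-to-resource mapping is my own assumption for illustration.

```python
from typing import Dict, List, Set, Tuple

# Core-group ("") resources granted by the built-in view ClusterRole, taken
# from the `kubectl describe clusterrole view` output above. Per that output,
# view grants nothing in storage.k8s.io or rbac.authorization.k8s.io.
VIEW_CORE_RESOURCES = {
    "bindings", "configmaps", "endpoints", "events", "limitranges",
    "namespaces", "namespaces/status", "persistentvolumeclaims",
    "persistentvolumeclaims/status", "pods", "pods/log", "pods/status",
    "replicationcontrollers", "replicationcontrollers/scale",
    "replicationcontrollers/status", "resourcequotas",
    "resourcequotas/status", "serviceaccounts", "services", "services/status",
}
VIEW_GRANTS: Set[Tuple[str, str]] = {("", r) for r in VIEW_CORE_RESOURCES}

# Assumed mapping of some cluster-scoped Dashboard pages to (apiGroup, resource);
# the exact pages depend on the Dashboard version.
CLUSTER_PAGES: Dict[str, Tuple[str, str]] = {
    "Namespaces": ("", "namespaces"),
    "Nodes": ("", "nodes"),
    "Persistent Volumes": ("", "persistentvolumes"),
    "Storage Classes": ("storage.k8s.io", "storageclasses"),
    "Cluster Roles": ("rbac.authorization.k8s.io", "clusterroles"),
}


def pages_denied(grants: Set[Tuple[str, str]] = VIEW_GRANTS) -> List[str]:
    """List the pages whose backing resource is missing from `grants`."""
    return sorted(page for page, key in CLUSTER_PAGES.items() if key not in grants)
```

Namespaces is cluster-scoped too, but view does include it, which is why that page works while Nodes does not.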

Next, let's create by hand a user that also has read-only access to cluster-level resources.

First, create a ServiceAccount named dashboard-real-readonly:

```shell
kubectl create sa dashboard-real-readonly -n kube-system
```

Next, create a ClusterRole named dashboard-viewonly:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: dashboard-viewonly
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - persistentvolumeclaims
  - pods
  - replicationcontrollers
  - replicationcontrollers/scale
  - serviceaccounts
  - services
  - nodes               # cluster-scoped, missing from view
  - persistentvolumes   # cluster-scoped, missing from view
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - bindings
  - events
  - limitranges
  - namespaces/status
  - pods/log
  - pods/status
  - replicationcontrollers/status
  - resourcequotas
  - resourcequotas/status
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - namespaces
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - apps
  resources:
  - daemonsets
  - deployments
  - deployments/scale
  - replicasets
  - replicasets/scale
  - statefulsets
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - autoscaling
  resources:
  - horizontalpodautoscalers
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - batch
  resources:
  - cronjobs
  - jobs
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - extensions   # legacy group: only older clusters still serve these resources here
  resources:
  - daemonsets
  - deployments
  - deployments/scale
  - ingresses
  - networkpolicies
  - replicasets
  - replicasets/scale
  - replicationcontrollers/scale
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - policy
  resources:
  - poddisruptionbudgets
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses        # since Kubernetes 1.22, Ingress is served only from this group
  - networkpolicies
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - storage.k8s.io
  resources:
  - storageclasses
  - volumeattachments
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - rbac.authorization.k8s.io
  resources:
  - clusterrolebindings
  - clusterroles
  - roles
  - rolebindings
  verbs:
  - get
  - list
  - watch
```
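A role file this long is easy to get subtly wrong. The Python sketch below (a hypothetical helper, not part of kubectl) lints rules given in the same shape as the YAML, flagging any verb beyond get/list/watch and any resource listed twice within one rule.

```python
from collections import Counter
from typing import Any, Dict, List

READ_ONLY_VERBS = {"get", "list", "watch"}


def lint_rules(rules: List[Dict[str, Any]]) -> List[str]:
    """Return human-readable problems found in a ClusterRole's rules.

    `rules` mirrors the YAML: a list of dicts with apiGroups/resources/verbs.
    """
    problems = []
    for i, rule in enumerate(rules):
        extra = set(rule["verbs"]) - READ_ONLY_VERBS  # also catches "*"
        if extra:
            problems.append(f"rule {i}: non-read verbs {sorted(extra)}")
        for res, count in Counter(rule["resources"]).items():
            if count > 1:
                problems.append(f"rule {i}: '{res}' listed {count} times")
    return problems
```

To run it against the manifest above you could load it with PyYAML, e.g. yaml.safe_load(text)["rules"]; note that PyYAML is a third-party package, not part of the standard library.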

Then bind it to the dashboard-real-readonly ServiceAccount:

```yaml
apiVersion: rbac.authorization.k8s.io/v1   # v1beta1 was removed in Kubernetes 1.22
kind: ClusterRoleBinding
metadata:
  name: dashboard-real-readonly   # distinct name, so it cannot clash with an existing kubernetes-dashboard binding
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: dashboard-viewonly
subjects:
- kind: ServiceAccount
  name: dashboard-real-readonly
  namespace: kube-system
```
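The API server does not check that a binding's subject ServiceAccount actually exists, so a typo in its name or namespace silently grants nothing. A small Python check (hypothetical helper) can confirm that a binding, loaded as a dict, really connects the intended ClusterRole and ServiceAccount.

```python
from typing import Any, Dict


def binding_matches(binding: Dict[str, Any], role_name: str,
                    sa_namespace: str, sa_name: str) -> bool:
    """True if the ClusterRoleBinding grants ClusterRole `role_name` to the SA."""
    ref = binding.get("roleRef", {})
    if ref.get("kind") != "ClusterRole" or ref.get("name") != role_name:
        return False
    # Any one matching subject is enough; bindings may list several.
    return any(
        s.get("kind") == "ServiceAccount"
        and s.get("name") == sa_name
        and s.get("namespace") == sa_namespace
        for s in binding.get("subjects", [])
    )
```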

All that's left is to fetch this ServiceAccount's token and log in. The Dashboard can now display Nodes information as well.

5. Accessing the services

At this point everything is in place, so let's test whether the services can be reached through the subdomains.

My service addresses are