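Kaggle competition practice (CIFAR-10 - Object Recognition in Images)

Competition overview

CIFAR-10 is a Kaggle competition from five years ago. It is a plain image classification task with 10 classes: predict the class of each of the 300,000 images in the test set and submit the predictions. The data can be downloaded from the competition link; the whole dataset is about 700 MB.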

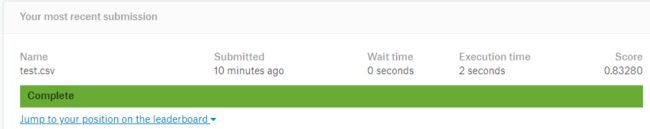

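I used a VGG16 network under TensorFlow 2.0 and, after some tuning, reached 83% accuracy. Training for longer should improve this further.

The complete code is available on GitHub: https://github.com/fengshilin/cifar-10

Inspecting the data

Code

1. Configuration file

Prepare the configuration file config.py.

I wrote two classes here: one holds the paths and the other holds the hyperparameters.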

    class PATH:
        csv_path = '/home/dennis/competition/classify/cifar-10/dataset/trainLabels.csv'
        train_root = '/home/dennis/competition/classify/cifar-10/dataset/train'
        weight_path = '/home/dennis/competition/classify/cifar-10/weight_path/cifar_vgg16_not_trained_20191216.ckpt'
        test_root = '/home/dennis/competition/classify/cifar-10/dataset/test'
        result_csv = '/home/dennis/competition/classify/cifar-10/test.csv'


    class CONFIG:
        image_shape = (32, 32)
        train_per = 0.999  # fraction of the data used for training
        n_class = 10
        lr = 1e-3
        decay_lr = 1e-4
        num_epochs = 100
        batch_size = 200
        label_class = ["airplane", "automobile", "bird", "cat", "deer", "dog", "frog", "horse", "ship", "truck"]
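
The paths above are absolute and tied to one machine. As a minimal sketch of one alternative, assuming the same directory layout under a single project root, every path can be derived from that root with `os.path.join`. The `build_paths` helper and its `root` argument are hypothetical names used for illustration; they are not part of the original config.py.

```python
import os


def build_paths(root):
    """Return the five paths used in config.py, derived from one project root.

    `build_paths` is a hypothetical helper; the layout mirrors the PATH class.
    """
    dataset_dir = os.path.join(root, "dataset")
    return {
        "csv_path": os.path.join(dataset_dir, "trainLabels.csv"),
        "train_root": os.path.join(dataset_dir, "train"),
        "test_root": os.path.join(dataset_dir, "test"),
        "weight_path": os.path.join(
            root, "weight_path", "cifar_vgg16_not_trained_20191216.ckpt"),
        "result_csv": os.path.join(root, "test.csv"),
    }


paths = build_paths("/home/dennis/competition/classify/cifar-10")
for name, path in paths.items():
    print(name, path)
```

Moving the project then only requires changing the one `root` string.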

2. Data processing

dataloader.py reads the csv file and pairs each image with its label so that they can be loaded as one dataset.

    import os
    import random
    import sys

    import cv2
    import pandas as pd
    import tensorflow as tf

    sys.path.append('/home/dennis/competition/classify/cifar-10')
    from config import PATH, CONFIG


    def load_csv(csv_path):
        """Read the csv file and return [(id1, label1), (id2, label2), ...]."""
        csv_data = pd.read_csv(csv_path)
        id_list = [str(x) for x in csv_data['id']]
        label_list = list(csv_data['label'])
        return list(zip(id_list, label_list))


    def split_dataset(data_list):
        """Randomly split the data into a training set and a validation set."""
        random.seed(3)  # fixed seed, so the split is the same on every run
        # Sample CONFIG.train_per of data_list for training; the rest is validation.
        train_list = random.sample(data_list, int(len(data_list) * CONFIG.train_per))
        val_list = list(set(data_list) - set(train_list))
        return train_list, val_list


    def unzip_data(data_list):
        """Split [(a1, b1), (a2, b2)] into ([a1, a2], [b1, b2])."""
        id_list = [data[0] for data in data_list]
        label_list = [data[1] for data in data_list]
        return id_list, label_list


    def load_image(image_id, label):
        """Load one image. Called through tf.py_function, so both arguments are eager tensors."""
        image_path = os.path.join(PATH.train_root, image_id.numpy().decode('utf-8') + ".png")
        image = cv2.imread(image_path)  # OpenCV returns the channels in BGR order
        # Scale the pixel values to [0, 1]
        image = image / 255
        # Map the class name to its integer index
        label = CONFIG.label_class.index(label.numpy().decode('utf-8'))
        return tf.convert_to_tensor(image, dtype=tf.float32), tf.convert_to_tensor(label, dtype=tf.int32)


    def set_shape(image, label):
        """Restore the static shapes that are lost after tf.py_function."""
        image.set_shape([CONFIG.image_shape[0], CONFIG.image_shape[1], 3])
        label.set_shape([])
        return image, label


    if __name__ == "__main__":
        result = load_csv(PATH.csv_path)
        train_list, val_list = split_dataset(result)
        train_data = unzip_data(train_list)
        dataset = tf.data.Dataset.from_tensor_slices(train_data)
        # load_image calls .numpy(), so it has to be wrapped in tf.py_function
        dataset = dataset.shuffle(len(train_list)).map(
            lambda x, y: tf.py_function(load_image, [x, y], [tf.float32, tf.int32]))
        # Print the first few samples as a sanity check
        for image, label in dataset.take(10):
            print(image.shape, label)
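
To make the split concrete, here is a small self-contained sketch of the same sample-then-subtract idea on synthetic `(id, label)` pairs. `split_pairs` and the synthetic data are illustrative only, and the sketch uses a local `random.Random` instead of seeding the global generator, which is a design choice of this example rather than of dataloader.py.

```python
import random


def split_pairs(pairs, train_fraction, seed=3):
    """Sample train_fraction of the pairs for training; the rest is validation."""
    rng = random.Random(seed)  # local generator, leaves the global seed untouched
    n_train = int(len(pairs) * train_fraction)
    train = rng.sample(pairs, n_train)
    train_set = set(train)
    # Keep the original order of the remaining pairs so the result is deterministic
    val = [p for p in pairs if p not in train_set]
    return train, val


# Synthetic stand-in for the (id, label) list returned by load_csv
labels = ["airplane", "automobile", "bird", "cat", "deer",
          "dog", "frog", "horse", "ship", "truck"]
pairs = [(str(i), labels[i % 10]) for i in range(1, 1001)]

train, val = split_pairs(pairs, train_fraction=0.9)
print(len(train), len(val))
print(val[:3])
```

With the 50,000 Kaggle training images and `train_per = 0.999`, the same arithmetic leaves 49,950 images for training and only 50 for validation, so the validation accuracy is a fairly noisy estimate.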

train.py calls dataloader.py to build the dataset objects that TensorFlow consumes.

    import sys

    import tensorflow as tf

    sys.path.append('/home/dennis/competition/classify/cifar-10')
    from config import PATH, CONFIG
    from dataset.dataloader import load_csv, load_image, split_dataset, unzip_data, set_shape

    # Read the csv
    csv_data = load_csv(PATH.csv_path)
    train_list, val_list = split_dataset(csv_data)
    train_list = unzip_data(train_list)

    # Build the dataset from train_list. from_tensor_slices receives a tuple
    # whose elements are lists: (id_list, label_list).
    train_dataset = tf.data.Dataset.from_tensor_slices(train_list)
    tf.random.set_seed(1)  # fixed seed, so the shuffle is reproducible

    # Map over the dataset to load the images, then augment them.
    # train_list is the tuple (id_list, label_list), so the number of samples
    # is len(train_list[0]); len(train_list) would only be 2.
    # Note that shuffle sits before cache(), so the cached order is reused in later epochs.
    train_dataset = train_dataset.shuffle(len(train_list[0])).map(
        lambda x, y: tf.py_function(load_image, [x, y], [tf.float32, tf.int32])
    ).map(set_shape).map(
        # The images are already float32, so this conversion leaves them unchanged
        lambda image, label: (tf.image.convert_image_dtype(image, tf.float32), label)
    ).cache().map(
        lambda image, label: (tf.image.random_flip_left_right(image), label)
    ).map(
        lambda image, label: (tf.image.random_contrast(image, lower=0.0, upper=1.0), label)
    ).batch(CONFIG.batch_size)

    # Process the validation set the same way, but without data augmentation
    val_list = unzip_data(val_list)
    val_dataset = tf.data.Dataset.from_tensor_slices(val_list)
    tf.random.set_seed(2)
    val_dataset = val_dataset.shuffle(len(val_list[0])).map(
        lambda x, y: tf.py_function(load_image, [x, y], [tf.float32, tf.int32])
    ).map(set_shape).batch(CONFIG.batch_size)
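
The argument to `shuffle` is a buffer size, and it limits how far an element can move from its original position. The following plain-Python sketch imitates buffer-based shuffling (fill a buffer, emit a random element from it, refill that slot from the input). `buffered_shuffle` is a hypothetical stand-in written for illustration; it is not the `tf.data` implementation.

```python
import itertools
import random


def buffered_shuffle(items, buffer_size, seed=0):
    """Shuffle `items` using only a fixed-size buffer of candidates."""
    rng = random.Random(seed)
    iterator = iter(items)
    # Fill the buffer with the first buffer_size elements
    buffer = list(itertools.islice(iterator, buffer_size))
    output = []
    for next_item in iterator:
        # Emit a random element from the buffer and refill its slot
        index = rng.randrange(len(buffer))
        output.append(buffer[index])
        buffer[index] = next_item
    # Input exhausted: emit what is left in random order
    rng.shuffle(buffer)
    output.extend(buffer)
    return output


data = list(range(12))
for size in (1, 2, len(data)):
    print(size, buffered_shuffle(data, size))
```

With a buffer of 1 the order is unchanged, and a small buffer only mixes neighbouring elements. Passing the number of samples as the buffer size gives a full shuffle.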

3. Building the model

mymodel.py uses the VGG16 model, with the fully connected layers at the end replaced by my own.

    import sys

    import tensorflow as tf
    from tensorflow.keras.layers import (Activation, BatchNormalization, Dense,
                                         Dropout, GlobalAveragePooling2D, Input)

    sys.path.append('/home/dennis/competition/classify/cifar-10')
    from config import CONFIG

    image_shape = CONFIG.image_shape


    class Vgg16net(tf.keras.Model):
        def __init__(self, n_class=2):
            super().__init__()
            self.n_class = n_class
            # VGG16 convolutional base with ImageNet weights
            self.vgg16_model = self.load_vgg16()
            self.global_pool = GlobalAveragePooling2D()
            # Custom head: Dense(256) -> BN -> ReLU -> Dropout
            self.conv_vgg = Dense(256, use_bias=False,
                                  kernel_initializer='uniform')
            self.batch_normalize = BatchNormalization()
            self.relu = Activation("relu")
            self.dropout_1 = Dropout(0.2)
            # Dense(64) -> BN -> ReLU -> Dropout
            self.conv_1 = Dense(64, use_bias=False, kernel_initializer='uniform')
            self.batch_normalize_1 = BatchNormalization()
            self.relu_1 = Activation("relu")
            self.dropout_2 = Dropout(0.2)
            # Softmax classifier over n_class classes
            self.classify = Dense(
                n_class, kernel_initializer='uniform', activation="softmax")

        def call(self, inputs):
            x = self.vgg16_model(inputs)
            x = self.global_pool(x)
            x = self.conv_vgg(x)
            x = self.batch_normalize(x)
            x = self.relu(x)
            x = self.dropout_1(x)
            x = self.conv_1(x)
            x = self.batch_normalize_1(x)
            x = self.relu_1(x)
            x = self.dropout_2(x)
            x = self.classify(x)
            return x

        def load_vgg16(self):
            # include_top=False drops VGG16's own fully connected layers;
            # the `classes` argument only applies to that top, so it is omitted.
            vgg16 = tf.keras.applications.vgg16.VGG16(
                weights='imagenet', include_top=False,
                input_tensor=Input(shape=(image_shape[0], image_shape[1], 3)))
            # Uncomment to freeze the first 15 layers:
            # for layer in vgg16.layers[:15]:
            #     layer.trainable = False
            return vgg16
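
The shapes flowing through this model can be checked by hand. The sketch below is plain arithmetic, assuming the standard VGG16 convolutional base (five blocks, each ending in a 2x2 max-pool with stride 2, with 512 channels in the last block). The helper names are made up for illustration.

```python
def vgg16_feature_map_size(input_size, n_pool_layers=5):
    """Spatial size after the VGG16 base: each max-pool halves height and width."""
    size = input_size
    for _ in range(n_pool_layers):
        size //= 2
    return size


def head_parameter_count(in_features=512, n_class=10):
    """Count the parameters of the custom head defined in Vgg16net."""
    dense_256 = in_features * 256        # use_bias=False, so weights only
    bn_256 = 4 * 256                     # gamma, beta, moving mean, moving variance
    dense_64 = 256 * 64                  # use_bias=False
    bn_64 = 4 * 64
    classify = 64 * n_class + n_class    # weights plus bias
    return dense_256 + bn_256 + dense_64 + bn_64 + classify


print(vgg16_feature_map_size(32))    # 32 -> 16 -> 8 -> 4 -> 2 -> 1
print(head_parameter_count())
```

For a 32x32 input the base outputs a 1x1x512 feature map, so the global average pooling simply flattens it into a 512-vector before the dense layers.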

4. Training the model

There is not much to say about training; the standard Keras compile and fit workflow applies directly.

    import sys

    import tensorflow as tf
    from tensorflow.keras.callbacks import ModelCheckpoint

    sys.path.append('/home/dennis/competition/classify/cifar-10')
    from config import PATH, CONFIG
    from dataset.dataloader import load_csv, load_image, split_dataset, unzip_data, set_shape
    from model.mymodel import Vgg16net

    # Read the csv
    csv_data = load_csv(PATH.csv_path)
    train_list, val_list = split_dataset(csv_data)

    # Training set: load, cache, augment, batch.
    # train_list becomes the tuple (id_list, label_list), so the sample count is len(train_list[0]).
    train_list = unzip_data(train_list)
    train_dataset = tf.data.Dataset.from_tensor_slices(train_list)
    tf.random.set_seed(1)
    train_dataset = train_dataset.shuffle(len(train_list[0])).map(
        lambda x, y: tf.py_function(load_image, [x, y], [tf.float32, tf.int32])
    ).map(set_shape).map(
        lambda image, label: (tf.image.convert_image_dtype(image, tf.float32), label)
    ).cache().map(
        lambda image, label: (tf.image.random_flip_left_right(image), label)
    ).map(
        lambda image, label: (tf.image.random_contrast(image, lower=0.0, upper=1.0), label)
    ).batch(CONFIG.batch_size)

    # Validation set: same loading steps, no augmentation
    val_list = unzip_data(val_list)
    val_dataset = tf.data.Dataset.from_tensor_slices(val_list)
    tf.random.set_seed(2)
    val_dataset = val_dataset.shuffle(len(val_list[0])).map(
        lambda x, y: tf.py_function(load_image, [x, y], [tf.float32, tf.int32])
    ).map(set_shape).batch(CONFIG.batch_size)

    model = Vgg16net(CONFIG.n_class)

    # Loading previously trained weights can speed up convergence
    # model.load_weights(PATH.weight_path)

    # Save the weights after every epoch. The metric used below is logged as
    # 'val_sparse_categorical_accuracy' on the validation set.
    checkpoint_callback = ModelCheckpoint(
        PATH.weight_path, monitor='val_sparse_categorical_accuracy', verbose=1,
        save_best_only=False, save_weights_only=True,
        save_freq='epoch')

    # `decay` is the time-based learning-rate decay argument of the TF 2.0 optimizers
    optimizer = tf.keras.optimizers.SGD(
        learning_rate=CONFIG.lr, decay=CONFIG.decay_lr)

    model.compile(
        optimizer=optimizer,
        loss=tf.keras.losses.sparse_categorical_crossentropy,
        metrics=[tf.keras.metrics.SparseCategoricalAccuracy()]
    )

    model.fit(train_dataset, validation_data=val_dataset,
              epochs=CONFIG.num_epochs, callbacks=[checkpoint_callback])
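
How fast does the learning rate fall with these settings? In the TF 2.0-era Keras optimizers, the `decay` argument applies time-based decay, `lr / (1 + decay * step)`, where `step` counts batches rather than epochs. The sketch below evaluates that formula. It assumes the 50,000-image Kaggle training set together with the `train_per`, `batch_size`, `lr` and `decay_lr` values from config.py, and `decayed_lr` is a hypothetical helper.

```python
import math


def decayed_lr(initial_lr, decay, step):
    """Time-based decay: lr / (1 + decay * step), with step counted in batches."""
    return initial_lr / (1.0 + decay * step)


n_train = int(50000 * 0.999)                 # images left for training after the split
steps_per_epoch = math.ceil(n_train / 200)   # the last, smaller batch is kept
for epoch in (1, 10, 50, 100):
    step = epoch * steps_per_epoch
    print(epoch, step, decayed_lr(1e-3, 1e-4, step))
```

After 100 epochs (25,000 steps) the learning rate is 1e-3 / 3.5, roughly 2.9e-4, so the schedule is gentle over this training run.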