kubernetes(k8s):kubernetes调度机制、亲和、反亲和、污点、容忍

文章目录

- 1. Kuberbetes调度

- (1)nodename

- (2)nodeSelector

- (3)亲和与反亲和

- 节点亲和

- pod亲和与反亲和

- (4)污点

- 1 打污点NoSchedule

- 2 在PodSpec中为容器设定容忍标签

- 3 删除污点

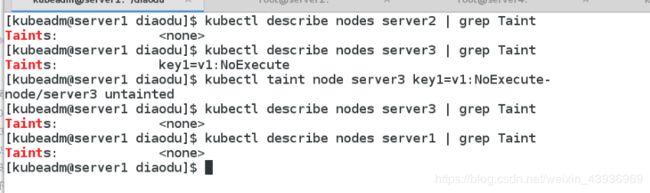

- 4 打污点:NoExecute

- tolerations容忍的设置

- 5 容忍所有的污点

- 6 影响Pod调度的指令还有:cordon、drain、delete

- cordon 停止调度

- drain驱逐节点:

- delete删除节点

1. Kuberbetes调度

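The scheduler uses the Kubernetes watch mechanism to discover newly created Pods that have not yet been assigned to a Node. It then places each unscheduled Pod it finds onto a suitable Node.

kube-scheduler is the default scheduler of a Kubernetes cluster and is part of the control plane. If you want or need to, you can write your own scheduling component and use it in place of kube-scheduler; the design explicitly allows this.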
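
When a second scheduler runs alongside kube-scheduler, a Pod can ask for it through the spec.schedulerName field. The manifest below is a minimal sketch that assumes a scheduler registered under the hypothetical name my-scheduler is already running in the cluster; the Pod name is also made up for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-custom-scheduler     # hypothetical Pod name
spec:
  schedulerName: my-scheduler      # hypothetical scheduler name; "default-scheduler" is used when omitted
  containers:
  - name: nginx
    image: nginx
```

If no scheduler with that name is running, nothing picks the Pod up and it stays Pending.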

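Factors taken into account when making a scheduling decision include individual and collective resource requests, hardware/software/policy constraints, affinity and anti-affinity requirements, data locality, interference between workloads, and so on.

For Kubernetes scheduling, see the official documentation:

https://kubernetes.io/zh/docs/concepts/scheduling/kube-scheduler/

Scheduling framework: https://kubernetes.io/zh/docs/concepts/configuration/scheduling-framework/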

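(1) nodeName

nodeName is the simplest form of node selection constraint, but it is generally not recommended. If nodeName is specified in the PodSpec, it takes precedence over every other node selection method.

Limitations of using nodeName to select a node:

- If the named node does not exist, the Pod will not run.

- If the named node does not have enough resources to accommodate the Pod, scheduling fails.

- Node names in cloud environments are not always predictable or stable.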

    [kubeadm@server1 scheduler]$ cat pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
      nodeName: server3    # bind the Pod to server3

(2) nodeSelector

nodeSelector is the simplest recommended form of node selection constraint.

Add a label to the node you want to select:

    kubectl label nodes <node name> disktype=ssd

    [kubeadm@server1 scheduler]$ cat pod2.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      labels:
        env: test
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
      nodeSelector:
        disktype: ssd

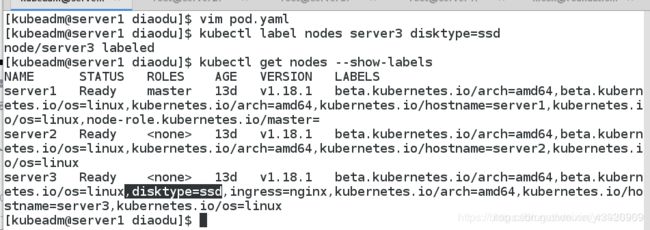

Label server3.

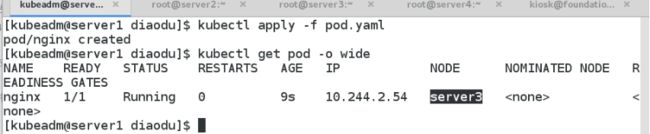

The Pod runs on server3 because of the label.

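(3) Affinity and anti-affinity

- nodeSelector offers a very simple way to constrain Pods to nodes with particular labels. The affinity/anti-affinity feature greatly expands the kinds of constraints you can express.

- You can mark a rule as "soft" (a preference) rather than a hard requirement, so that the Pod is still scheduled even if the scheduler cannot satisfy the rule.

- You can constrain a Pod against the labels of other Pods running on a node, rather than against the labels of the node itself, which lets you control which Pods may or may not be placed together.

Node affinity

Put simply, node affinity uses label rules to constrain where a Pod is scheduled.

requiredDuringSchedulingIgnoredDuringExecution: must be satisfied

preferredDuringSchedulingIgnoredDuringExecution: satisfied if possible

IgnoredDuringExecution means that if a node's labels change while the Pod is running, so that the affinity rule is no longer met, the Pod keeps running.

Reference:

https://kubernetes.io/zh/docs/concepts/configuration/assign-pod-node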

1 requiredDuringSchedulingIgnoredDuringExecution: must be satisfied

    [kubeadm@server1 scheduler]$ cat pod2.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: node-affinity
    spec:
      containers:
      - name: nginx
        image: nginx
      affinity:                  # affinity
        nodeAffinity:            # node affinity
          requiredDuringSchedulingIgnoredDuringExecution:    # hard requirement
            nodeSelectorTerms:
            - matchExpressions:
              - key: disktype    # the node must carry this label (storage type)
                operator: In
                values:
                - ssd            # and its value must be ssd

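nodeAffinity supports several match operators:

- In: the label value is in the list

- NotIn: the label value is not in the list

- Gt: the label value is greater than the given value (not supported for Pod affinity)

- Lt: the label value is less than the given value (not supported for Pod affinity)

- Exists: the label exists

- DoesNotExist: the label does not exist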
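
The operators other than In are easy to get wrong in a manifest, so here is a minimal sketch combining Exists and Gt. The Pod name and the cpu-count label are assumptions made up for illustration; only disktype appears elsewhere in this article.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: node-affinity-operators    # hypothetical Pod name
spec:
  containers:
  - name: nginx
    image: nginx
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: disktype          # Exists: the label must be present, any value; no values list allowed
            operator: Exists
          - key: cpu-count         # hypothetical label whose value is an integer string
            operator: Gt
            values:
            - "8"                  # Gt and Lt take exactly one value, interpreted as an integer
```

Both expressions sit in the same matchExpressions list, so a node has to satisfy both of them.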

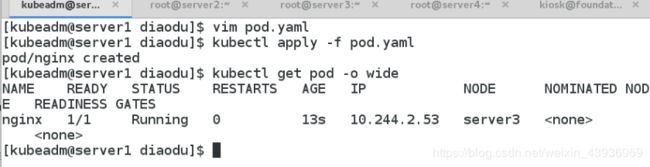

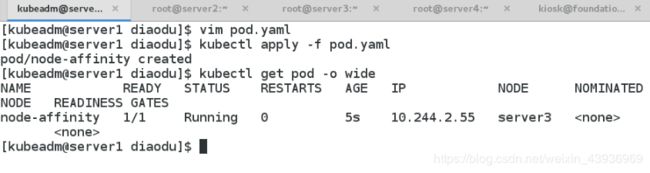

Because of the label, the Pod is scheduled onto server3:

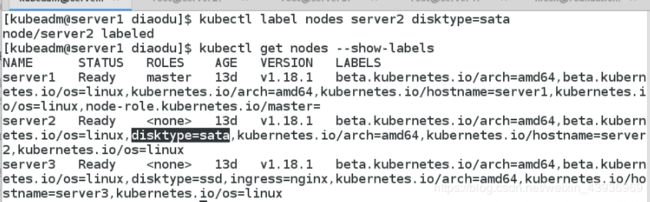

Label server2.

Update the manifest:

    [kubeadm@server1 scheduler]$ cat pod2.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: node-affinity
    spec:
      containers:
      - name: nginx
        image: nginx
      affinity:
        nodeAffinity:            # node affinity
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:   # node selection
            - matchExpressions:
              - key: disktype
                operator: In
                values:
                - sata

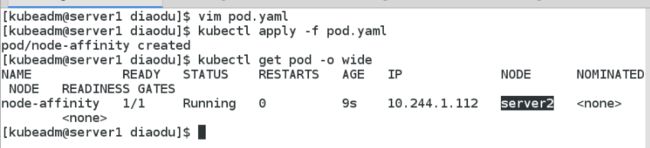

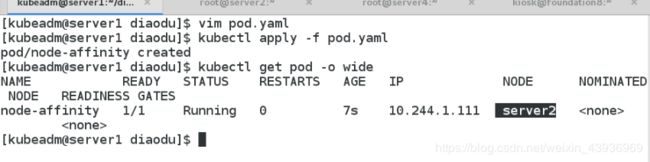

The Pod is now scheduled onto server2.

Next, list sata as a second value; a node matches if its label is either ssd or sata.

    [kubeadm@server1 node]$ cat pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: node-affinity
    spec:
      containers:
      - name: nginx
        image: nginx
      affinity:
        nodeAffinity:            # node affinity
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:   # node selection
            - matchExpressions:
              - key: disktype
                operator: In
                values:
                - ssd
                - sata
    [kubeadm@server1 node]$ kubectl apply -f pod.yaml
    pod/node-affinity created
    [kubeadm@server1 node]$ kubectl get pod -o wide
    NAME            READY   STATUS    RESTARTS   AGE   IP             NODE      NOMINATED NODE   READINESS GATES
    node-affinity   1/1     Running   0          8s    10.244.1.122   server3   <none>           <none>
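
Listing several values under one In expression is one way to say "either of these". Alternatives can also be written as separate nodeSelectorTerms: the terms are ORed, while the expressions inside a single term are ANDed. The fragment below is a sketch of that structure, reusing the labels from this article; it belongs under a Pod's spec.

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      # Term 1: disktype is ssd AND the node is not server3
      - matchExpressions:
        - key: disktype
          operator: In
          values:
          - ssd
        - key: kubernetes.io/hostname
          operator: NotIn
          values:
          - server3
      # Term 2 (alternative to term 1): disktype is sata
      - matchExpressions:
        - key: disktype
          operator: In
          values:
          - sata
```

A node is eligible as soon as it satisfies every expression of at least one term.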

2 preferredDuringSchedulingIgnoredDuringExecution: satisfied if possible

    [kubeadm@server1 diaodu]$ cat pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: node-affinity
    spec:
      containers:
      - name: nginx
        image: nginx
      affinity:
        nodeAffinity:            # node affinity
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:   # node selection
            - matchExpressions:
              - key: kubernetes.io/hostname
                operator: NotIn
                values:
                - server3
          preferredDuringSchedulingIgnoredDuringExecution:    # soft preference
          - weight: 1
            preference:
              matchExpressions:
              - key: disktype
                operator: In
                values:
                - ssd

server3 carries the label disktype=ssd.

As before, the Pod runs on server2. It is not scheduled onto server3, because the hard rule excludes server3 and the disktype=ssd rule is only a preference.
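
Several preferences can be listed, each with its own weight in the range 1 to 100. For every node that passes the hard rules, the scheduler sums the weights of the preferences that node satisfies and adds the sum to the node's score. The fragment below is a sketch with assumed weights (80 and 20); it belongs under a Pod's spec.

```yaml
affinity:
  nodeAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 80                 # assumed weight: strongly prefer ssd nodes
      preference:
        matchExpressions:
        - key: disktype
          operator: In
          values:
          - ssd
    - weight: 20                 # assumed weight: sata nodes are an acceptable second choice
      preference:
        matchExpressions:
        - key: disktype
          operator: In
          values:
          - sata
```

Nodes that match neither preference are still eligible; they simply receive no bonus from these rules.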

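Pod affinity and anti-affinity

- podAffinity answers the question of which Pods a Pod may share a topology domain with. A topology domain is defined by a node label and can be a single host or a group of hosts such as a cluster or a zone.

- podAntiAffinity answers the question of which Pods a Pod must not share a topology domain with. Both deal with the relationships between Pods inside the Kubernetes cluster.

- Inter-Pod affinity and anti-affinity are often most useful together with higher-level collections such as ReplicaSets, StatefulSets, and Deployments, where they make it easy to place a set of workloads in the same defined topology (for example, the same node).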

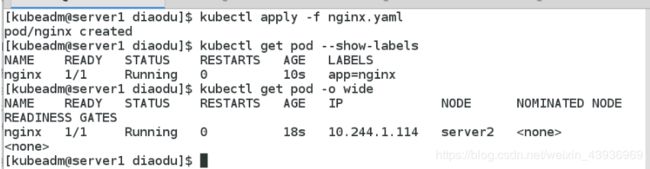

Pod affinity example

Label the nginx Pod:

    [kubeadm@server1 diaodu]$ cat nginx.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      labels:                    # labels
        app: nginx
    spec:
      containers:
      - name: nginx
        image: k8s/nginx
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: mysql
      labels:
        app: mysql
    spec:
      affinity:
        podAffinity:             # Pod affinity
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:       # label selector
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx
            topologyKey: kubernetes.io/hostname
      containers:
      - name: mysql
        image: mysql:5.7
        env:
        - name: "MYSQL_ROOT_PASSWORD"
          value: "westos"

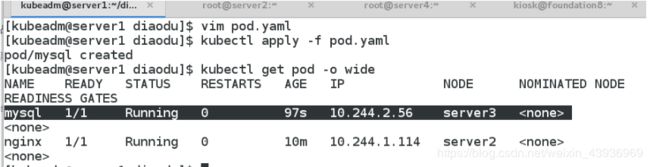

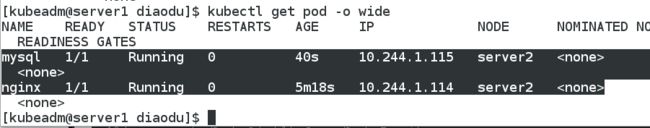

Because the mysql Pod has affinity to the label app=nginx, it is placed on the same host as the nginx Pod.
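
The topology domain does not have to be a single host. As a sketch, the same rule can be widened to a zone by changing topologyKey; this assumes the nodes carry the well-known topology.kubernetes.io/zone label, which the cluster in this article may not set. The fragment belongs under a Pod's spec.

```yaml
affinity:
  podAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
    - labelSelector:
        matchLabels:
          app: nginx                             # same selector as above, written with matchLabels
      topologyKey: topology.kubernetes.io/zone   # assumption: nodes are labelled with their zone
```

With this key the Pod may land on any node in the same zone as an app=nginx Pod, not only on the same host.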

Pod anti-affinity

    apiVersion: v1
    kind: Pod
    metadata:
      name: mysql
      labels:
        app: mysql
    spec:
      affinity:
        podAntiAffinity:         # the only difference from the affinity example
          requiredDuringSchedulingIgnoredDuringExecution:    # must be satisfied
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx
            topologyKey: kubernetes.io/hostname
      containers:
      - name: mysql
        image: mysql:5.7
        env:
        - name: "MYSQL_ROOT_PASSWORD"
          value: "westos"
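
Anti-affinity is commonly combined with a Deployment so that its replicas spread across nodes. The manifest below is a sketch of that pattern using the soft (preferred) form, so that replicas can still be placed when there are fewer nodes than replicas; the weight of 100 is an assumed value.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-server
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100                  # assumed weight
            podAffinityTerm:             # the preferred form wraps the term in podAffinityTerm
              labelSelector:
                matchLabels:
                  app: nginx             # avoid nodes already running a replica of this Deployment
              topologyKey: kubernetes.io/hostname
      containers:
      - name: nginx
        image: nginx
```

Switching to requiredDuringSchedulingIgnoredDuringExecution would enforce at most one replica per node, at the cost of leaving surplus replicas Pending.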

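(4) Taints

Node affinity is a property defined on a Pod that lets the Pod be scheduled onto the nodes we want. Taints do the opposite: they let a Node refuse to run Pods, or even evict them.

A taint is a property of a Node. Once a taint is set, Kubernetes will not schedule Pods onto that Node. Pods therefore have a matching property, tolerations: as long as a Pod tolerates the taints on a Node, Kubernetes ignores those taints and may (but is not required to) schedule the Pod there.

- A taint is a marker on a node that keeps Pods from being scheduled there, or even evicts Pods that are already running.

- Tolerations are the counterpart of taints: a Pod that tolerates a node's taints can be scheduled onto it.

Use the kubectl taint command to add a taint to a node:

    kubectl taint nodes nodename key=value:NoSchedule    # add
    kubectl describe nodes nodename | grep Taint         # query
    kubectl taint nodes nodename key:NoSchedule-         # remove

The effect can be one of: NoSchedule | PreferNoSchedule | NoExecute

- NoSchedule: Pods are not scheduled onto the tainted node.

- PreferNoSchedule: the soft version of NoSchedule; the scheduler tries to avoid the node but may still use it.

- NoExecute: once the taint takes effect, Pods already running on the node that have no matching toleration are evicted immediately.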
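
kubectl taint stores its result in the Node object's spec.taints list, which is also what kubectl describe reads back. The excerpt below is a sketch of how two taints would look there; the keys and values (dedicated=batch, maintenance=planned) are hypothetical and do not come from the cluster in this article.

```yaml
apiVersion: v1
kind: Node
metadata:
  name: server3
spec:
  taints:
  - key: dedicated               # hypothetical key
    value: batch                 # hypothetical value
    effect: NoSchedule           # hard rule: Pods without a matching toleration are not placed here
  - key: maintenance             # hypothetical key
    value: planned               # hypothetical value
    effect: PreferNoSchedule     # soft rule: avoided when other nodes are available
```

A node can carry several taints at once; a Pod must tolerate every NoSchedule and NoExecute taint to be placed on it.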

示例:

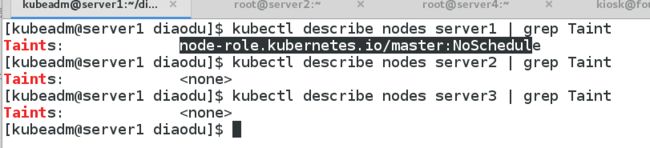

server1不参加调度的原因是因为有污点:

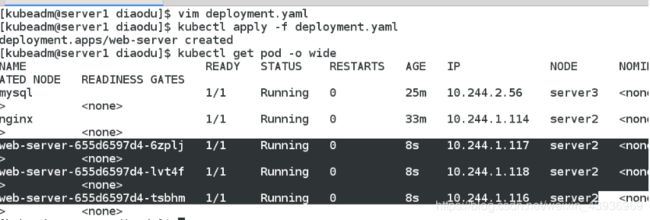

创建一个控制器:

创建一个控制器:

    [kubeadm@server2 scheduler]$ cat deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-server
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx

可以看到server1没有参加调度:

为什么master节点不参与调度?因为master节点上有污点

让server1参加调度的方法:

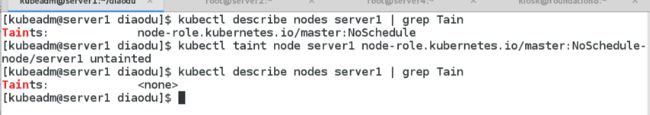

让server1参加调度的方法:

- 删除server1污点,重新调度

一般情况下因为server1启动pod太多,不会参与调度(如果pod基数太大就会参与调度)

一般情况下因为server1启动pod太多,不会参与调度(如果pod基数太大就会参与调度)

1 Adding a NoSchedule taint

    [kubeadm@server1 node]$ kubectl delete pod nginx
    pod "nginx" deleted
    [kubeadm@server1 node]$ kubectl delete pod mysql
    pod "mysql" deleted
    # Taint server3 so that it no longer takes part in scheduling
    [kubeadm@server1 node]$ kubectl taint node server3 key1=v1:NoSchedule
    node/server3 tainted
    [kubeadm@server1 node]$ kubectl describe nodes server3 |grep Taint
    Taints: key1=v1:NoSchedule
    [kubeadm@server1 node]$ cat pod.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-server
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
    [kubeadm@server1 node]$ kubectl apply -f pod.yaml
    deployment.apps/web-server created
    # server3 is excluded from scheduling, so every Pod runs on server4
    [kubeadm@server1 node]$ kubectl get pod -o wide
    NAME                                      READY   STATUS              RESTARTS   AGE    IP             NODE      NOMINATED NODE   READINESS GATES
    nfs-client-provisioner-55d87b5996-8clxq   1/1     Running             1          3d2h   10.244.2.112   server4   <none>           <none>
    web-server-f89759699-646r2                1/1     Running             0          9s     10.244.2.117   server4   <none>           <none>
    web-server-f89759699-lbw8d                1/1     Running             0          9s     10.244.2.116   server4   <none>           <none>
    web-server-f89759699-pzn5l                0/1     ContainerCreating   0          10s    <none>         server4   <none>           <none>

2 Setting tolerations in the PodSpec

    [kubeadm@server1 node]$ cat deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-server
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
          tolerations:           # tolerations
          - key: "key1"
            operator: "Equal"
            value: "v1"
            effect: "NoSchedule"
    [kubeadm@server1 node]$ kubectl apply -f deployment.yaml
    deployment.apps/web-server created
    # The Pods tolerate the taint key1=v1:NoSchedule on server3, so server3 takes part in scheduling again
    [kubeadm@server1 node]$ kubectl get pod -o wide
    NAME                                      READY   STATUS    RESTARTS   AGE    IP             NODE      NOMINATED NODE   READINESS GATES
    nfs-client-provisioner-55d87b5996-8clxq   1/1     Running   1          3d2h   10.244.2.112   server4   <none>           <none>
    web-server-b98886d79-5hts6                1/1     Running   0          95s    10.244.2.118   server4   <none>           <none>
    web-server-b98886d79-ksv74                1/1     Running   0          95s    10.244.1.126   server3   <none>           <none>
    web-server-b98886d79-vcn7k                1/1     Running   0          95s    10.244.1.125   server3   <none>           <none>

3 Removing a taint

    # Remove the taint from server3 (the key must match the one that was set, key1)
    [kubeadm@server1 node]$ kubectl taint nodes server3 key1:NoSchedule-
    [kubeadm@server1 node]$ kubectl describe nodes server3 |grep Taint
    Taints: <none>
    # Remove the node-role.kubernetes.io/master taint from all nodes
    [kubeadm@server1 node]$ kubectl taint nodes --all node-role.kubernetes.io/master-

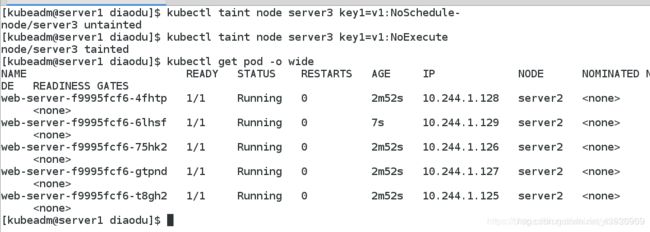

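4 Adding a NoExecute taint

Add a NoExecute taint to server3; the Pods running on server3 are evicted and rescheduled onto server2.

    # Taint server3
    $ kubectl taint node server3 key1=v1:NoExecute
    node/server3 tainted

A toleration for this taint must use the matching effect:

    effect: "NoExecute"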
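
For NoExecute taints a toleration can also limit how long the Pod stays on the node after the taint appears, through the tolerationSeconds field. The fragment below is a sketch for the key1=v1 taint used here; the value of 60 seconds is an assumption chosen for illustration. It belongs under a Pod's spec.

```yaml
tolerations:
- key: "key1"
  operator: "Equal"
  value: "v1"
  effect: "NoExecute"
  tolerationSeconds: 60          # assumed value: the Pod is evicted 60s after the taint is added
```

Without tolerationSeconds, a Pod that tolerates the taint stays on the node indefinitely.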

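Configuring tolerations

The key, value, and effect defined in a toleration must match the taint set on the node:

- If operator is Exists, value can be omitted.

- If operator is Equal, the toleration's value must equal the taint's value.

- If operator is not specified, it defaults to Equal.

There are two special cases:

- An omitted key combined with operator Exists matches every key and value, so the Pod tolerates all taints.

- An omitted effect matches all effects.

Toleration examples:

    tolerations:
    - key: "key"
      operator: "Equal"     # Equal: the taint's key and value must both match
      value: "value"
      effect: "NoSchedule"
    ---
    tolerations:
    - key: "key"
      operator: "Exists"    # Exists: value can be omitted
      effect: "NoSchedule"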
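
The second special case, leaving out effect, has no example above. A minimal sketch, assuming a taint with key key1 exists on some node; the fragment belongs under a Pod's spec.

```yaml
tolerations:
- key: "key1"
  operator: "Exists"             # no value and no effect: tolerates key1 with any value and any effect
```

This single entry covers key1 taints whether they are NoSchedule, PreferNoSchedule, or NoExecute.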

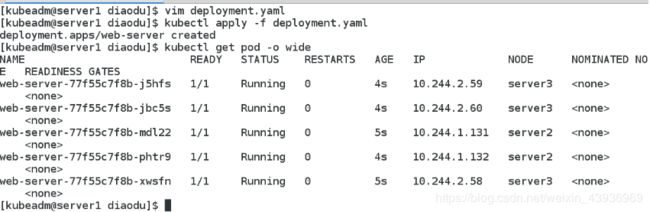

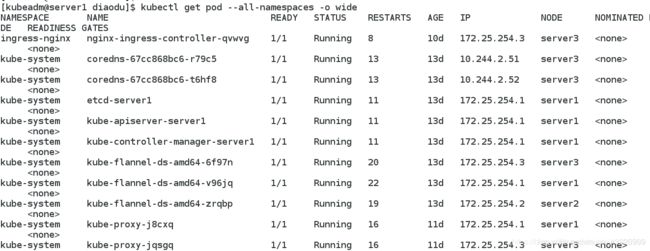

5 Tolerating all taints

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-server
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 6
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
          tolerations:
          - operator: "Exists"
    [kubeadm@server1 node]$ kubectl apply -f deployment.yaml
    deployment.apps/web-server created
    # Both server3 and server4 can be scheduled, because the Pods tolerate every taint
    [kubeadm@server1 node]$ kubectl get pod -o wide
    NAME                                      READY   STATUS              RESTARTS   AGE    IP             NODE      NOMINATED NODE   READINESS GATES
    nfs-client-provisioner-55d87b5996-8clxq   1/1     Running             1          3d3h   10.244.2.112   server4   <none>           <none>
    web-server-54dd87666-284q9                0/1     ContainerCreating   0          7s     <none>         server3   <none>           <none>
    web-server-54dd87666-nqd5p                0/1     ContainerCreating   0          7s     <none>         server4   <none>           <none>
    web-server-54dd87666-xkshd                0/1     ContainerCreating   0          7s     <none>         server3   <none>           <none>

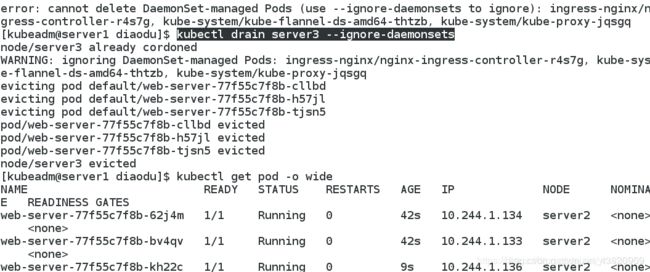

6 影响Pod调度的指令还有:cordon、drain、delete

影响Pod调度的指令还有:cordon、drain、delete,后期创建的pod都不会被调度到该节点上,但操作的暴力程度不一样。

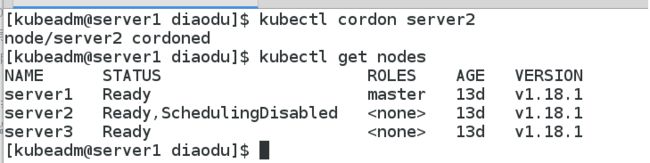

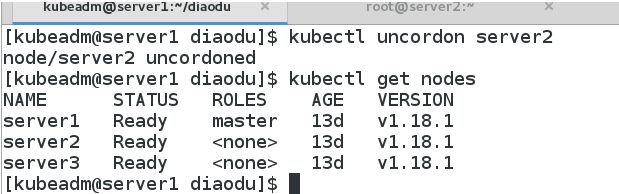

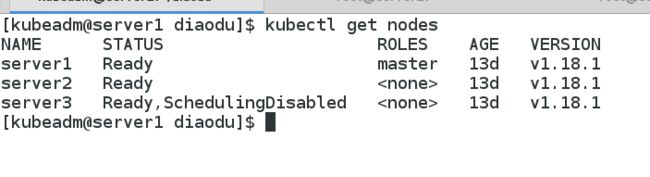

cordon 停止调度

影响最小,只会将node调为SchedulingDisabled,新创建pod,不会被调度到该节点,节点原有pod不受影响,仍正常对外提供服务。

kubectl cordon server2
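
Under the hood, kubectl cordon sets the spec.unschedulable field on the Node object, and kubectl uncordon clears it again. The excerpt below is a sketch of the relevant part of the Node after cordoning server2; all other fields are left out.

```yaml
apiVersion: v1
kind: Node
metadata:
  name: server2
spec:
  unschedulable: true            # set by kubectl cordon; shown as SchedulingDisabled in kubectl get nodes
```

Because only this flag changes, Pods that are already running on the node are not touched.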

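drain: evict the node

drain first marks the node SchedulingDisabled, then evicts the Pods running on it; Pods managed by a controller are recreated on other nodes.

Before draining, remove the taints from server2 and server3.

    kubectl drain server3 --ignore-daemonsets    # skip Pods managed by a DaemonSet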

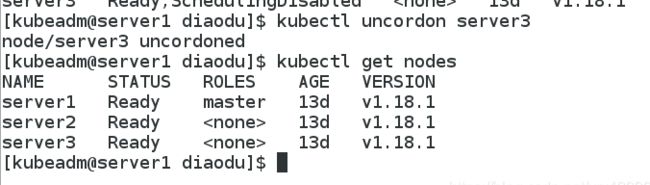

kubectl uncordon server3

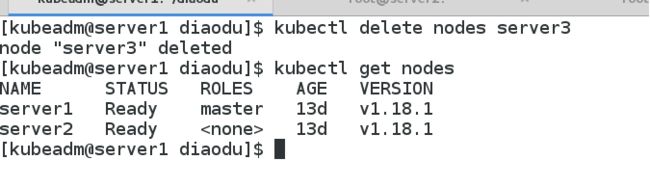

delete删除节点

最暴力的参数

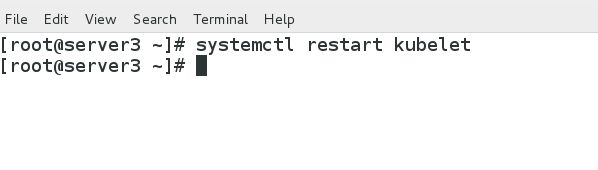

从master节点删除node,master失去对该节点控制,也就是该节点上的pod,如果想要恢复,需要在该节点重启kubelet服务

删除:

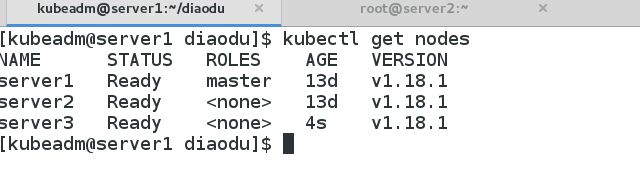

$ kubectl delete node server3

# systemctl restart kubelet //基于node的自注册功能,恢复使用,在你删除节点所在主机上执行