HBase Pseudo-Distributed Installation and HBase Examples

I. Installing HBase

1. Download

wget https://mirrors.tuna.tsinghua.edu.cn/apache/hbase/stable/hbase-2.2.4-bin.tar.gz

2. Extract the archive and rename the directory

tar xzvf hbase-2.2.4-bin.tar.gz -C /usr/local/

mv /usr/local/hbase-2.2.4 /usr/local/hbase

3. Edit the system profile and apply the changes

vi /etc/profile

Add the following lines:

export HBASE_HOME=/usr/local/hbase

export PATH=$PATH:$HBASE_HOME/bin

Apply the changes:

source /etc/profile

4. Edit hbase-env.sh

Add the following line to $HBASE_HOME/conf/hbase-env.sh, adjusting the path to your JDK location:

export JAVA_HOME=/usr/lib/jvm/java

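5. Configure hbase-site.xml

Add the following properties inside the <configuration> element of $HBASE_HOME/conf/hbase-site.xml. The host and port in hbase.rootdir must match the HDFS NameNode address (fs.defaultFS in core-site.xml):

<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://node2:9000/hbase</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>node2</value>
  </property>
</configuration>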
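As a quick sanity check of the properties above, the following Java sketch parses a Hadoop-style configuration document with the JDK's built-in XML parser and lists its name/value pairs. The class name HbaseSiteCheck and the embedded SAMPLE string are assumptions made for illustration; this is not how HBase itself loads its configuration.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

final class HbaseSiteCheck {
    // Hypothetical sample mirroring the three properties from step 5.
    static final String SAMPLE =
        "<configuration>"
        + "<property><name>hbase.rootdir</name><value>hdfs://node2:9000/hbase</value></property>"
        + "<property><name>hbase.cluster.distributed</name><value>true</value></property>"
        + "<property><name>hbase.zookeeper.quorum</name><value>node2</value></property>"
        + "</configuration>";

    // Collects every <property><name/><value/></property> entry into an ordered map.
    static Map<String, String> readProperties(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            Map<String, String> props = new LinkedHashMap<>();
            NodeList nodes = doc.getElementsByTagName("property");
            for (int i = 0; i < nodes.getLength(); i++) {
                Element p = (Element) nodes.item(i);
                String name = p.getElementsByTagName("name").item(0).getTextContent().trim();
                String value = p.getElementsByTagName("value").item(0).getTextContent().trim();
                props.put(name, value);
            }
            return props;
        } catch (Exception e) {
            // Malformed XML (for example a missing closing tag) ends up here.
            throw new IllegalArgumentException("Cannot parse configuration", e);
        }
    }

    public static void main(String[] args) {
        readProperties(SAMPLE).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```

To check a real file, its contents could be read into a string and passed to readProperties; a parse failure usually points to an unbalanced tag.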

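6. Start HBase

Run the start script from $HBASE_HOME. HDFS must already be running, because hbase.rootdir points to it:

./bin/start-hbase.sh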

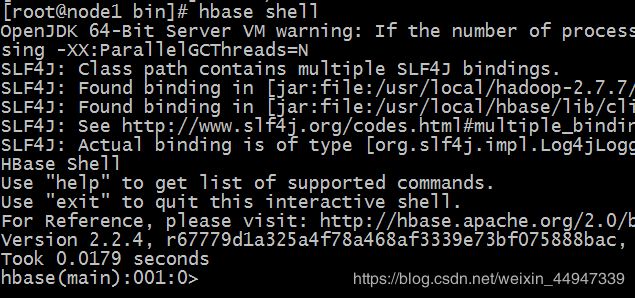

7. Open the HBase shell

hbase shell

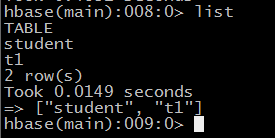

II. HBase Shell Examples

1. Create a table

create 'student','info'

2. Insert data into the table

put 'student','1001','info:sex','male'

put 'student','1001','info:age','18'

put 'student','1002','info:name','fu'

put 'student','1002','info:sex','female'

put 'student','1002','info:age','20'

3. Scan the table

scan 'student'

scan 'student',{STARTROW => '1001', STOPROW =>'1001'}

scan 'student',{STARTROW => '1001'}
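HBase keeps rows sorted by row key in byte order, and by default a scan range includes STARTROW and excludes STOPROW. The Java sketch below models only that ordering and range rule with a TreeMap; it does not call HBase. The class RowKeyRangeDemo and its scan method are hypothetical names, and String ordering stands in for byte ordering, which holds for the ASCII keys used here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

final class RowKeyRangeDemo {
    // Returns the row keys in [startRow, stopRow); a null stopRow scans to the end.
    static List<String> scan(TreeMap<String, String> table, String startRow, String stopRow) {
        if (stopRow == null) {
            return new ArrayList<>(table.tailMap(startRow, true).keySet());
        }
        return new ArrayList<>(table.subMap(startRow, true, stopRow, false).keySet());
    }

    // Builds a small in-memory stand-in for a table; values are irrelevant here.
    static TreeMap<String, String> sampleTable() {
        TreeMap<String, String> table = new TreeMap<>();
        for (String key : new String[] {"2", "1002", "11", "1001"}) {
            table.put(key, "row-" + key);
        }
        return table;
    }

    public static void main(String[] args) {
        TreeMap<String, String> table = sampleTable();
        System.out.println(scan(table, "1001", null));   // [1001, 1002, 11, 2]
        System.out.println(scan(table, "1001", "1002")); // [1001]
        System.out.println(scan(table, "1002", "2"));    // [1002, 11]
    }
}
```

Note that "11" sorts after "1002": keys are compared byte by byte, not as numbers, which is why fixed-width row keys such as 1001 and 1002 are convenient.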

4. View the table schema

describe 'student'

5. Update the value of a specific column

put 'student','1001','info:name','chen'

put 'student','1001','info:age','21'
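HBase has no separate update command: a put to an existing row and column writes a new timestamped version, and a read returns the newest version by default. The following Java sketch models that behaviour with plain collections. CellVersionDemo and its methods are hypothetical names, the timestamps are made up, and version retention limits are not modelled.

```java
import java.util.Comparator;
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

final class CellVersionDemo {
    // Cell coordinate ("row/family:qualifier") -> versions, newest timestamp first.
    private final Map<String, TreeMap<Long, String>> cells = new HashMap<>();

    // Stores a value as a new version instead of overwriting the previous one.
    void put(String row, String column, long timestamp, String value) {
        cells.computeIfAbsent(row + "/" + column,
                k -> new TreeMap<Long, String>(Comparator.<Long>reverseOrder()))
             .put(timestamp, value);
    }

    // Returns the newest version, or null when the cell was never written.
    String get(String row, String column) {
        TreeMap<Long, String> versions = cells.get(row + "/" + column);
        return versions == null ? null : versions.firstEntry().getValue();
    }

    public static void main(String[] args) {
        CellVersionDemo table = new CellVersionDemo();
        table.put("1001", "info:age", 1L, "18");
        table.put("1001", "info:age", 2L, "21"); // the "update" from step 5
        System.out.println(table.get("1001", "info:age"));  // 21
        System.out.println(table.get("1001", "info:name")); // null
    }
}
```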

6. Get a specific row, or a specific column (family:qualifier) of a row

get 'student','1001'

get 'student','1001','info:name'

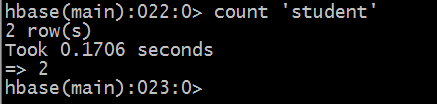

7. Count the rows in the table

count 'student'

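8. Delete all data for a row key, for example row 1001

deleteall 'student','1001'

9. Delete a single column, for example info:sex of row 1002

delete 'student','1002','info:sex'

10. Drop the table (a table must be disabled before it can be dropped)

disable 'student'

drop 'student'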

III. Java API

1. Configure pom.xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>org.example</groupId>
  <artifactId>hd-hbase</artifactId>
  <version>1.0-SNAPSHOT</version>
  <dependencies>
    <dependency>
      <groupId>org.apache.hbase</groupId>
      <artifactId>hbase-server</artifactId>
      <version>2.2.4</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hbase</groupId>
      <artifactId>hbase-client</artifactId>
      <version>2.2.4</version>
    </dependency>
    <dependency>
      <groupId>jdk.tools</groupId>
      <artifactId>jdk.tools</artifactId>
      <version>1.8</version>
      <scope>system</scope>
      <systemPath>C:/Program Files/Java/jdk1.8.0_131/lib/tools.jar</systemPath>
    </dependency>
  </dependencies>
</project>

2. Create table t1

package com.hadoop.hbase;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;

import java.io.IOException;

public class HbaseCreateTable {
    public static void main(String[] args) throws IOException {
        Configuration conf = HBaseConfiguration.create();
        // ZooKeeper address of the HBase virtual machine
        conf.set("hbase.zookeeper.quorum", "192.168.100.102:2181");
        // try-with-resources closes the admin handle and the connection on exit
        try (Connection conn = ConnectionFactory.createConnection(conf);
             Admin admin = conn.getAdmin()) {
            TableName tableName = TableName.valueOf("t1");
            // HTableDescriptor and HColumnDescriptor are deprecated in HBase 2.x but still work in 2.2.4
            HTableDescriptor desc = new HTableDescriptor(tableName);
            desc.addFamily(new HColumnDescriptor("f1"));
            admin.createTable(desc);
            System.out.println("Create table success!");
        }
    }
}

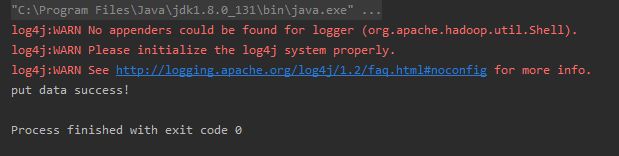

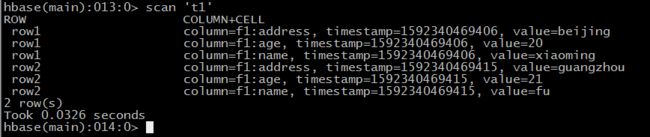

3. Insert data into t1

package com.hadoop.hbase;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

public class HbasePutData {
    public static void main(String[] args) throws IOException {
        Configuration conf = HBaseConfiguration.create();
        conf.set("hbase.zookeeper.quorum", "192.168.100.102:2181");
        // try-with-resources closes the table and the connection on exit
        try (Connection conn = ConnectionFactory.createConnection(conf);
             Table table = conn.getTable(TableName.valueOf("t1"))) {
            byte[] family = Bytes.toBytes("f1");

            // One Put per row; each addColumn call sets one family:qualifier cell
            Put put = new Put(Bytes.toBytes("row1"));
            put.addColumn(family, Bytes.toBytes("name"), Bytes.toBytes("xiaoming"));
            put.addColumn(family, Bytes.toBytes("age"), Bytes.toBytes("20"));
            put.addColumn(family, Bytes.toBytes("address"), Bytes.toBytes("beijing"));

            Put put2 = new Put(Bytes.toBytes("row2"));
            put2.addColumn(family, Bytes.toBytes("name"), Bytes.toBytes("fu"));
            put2.addColumn(family, Bytes.toBytes("age"), Bytes.toBytes("21"));
            put2.addColumn(family, Bytes.toBytes("address"), Bytes.toBytes("guangzhou"));

            table.put(put);
            table.put(put2);
            System.out.println("put data success!");
        }
    }
}

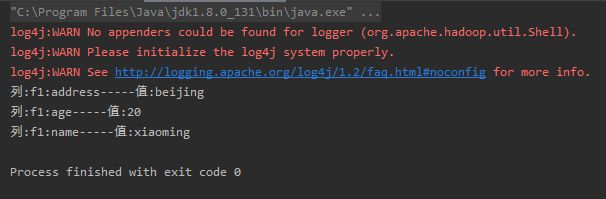
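The program above stores the age as the string "20", so the cell holds the UTF-8 bytes of the two characters rather than a binary integer. HBase's Bytes.toBytes(String) encodes UTF-8, while Bytes.toBytes(int) produces four big-endian bytes. The sketch below reproduces both encodings with the JDK only, so it runs without the HBase jars; ValueEncodingDemo and its method names are hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

final class ValueEncodingDemo {
    // Same byte layout as encoding a String value as UTF-8.
    static byte[] encodeString(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    // Same byte layout as a 4-byte big-endian int (ByteBuffer defaults to big-endian).
    static byte[] encodeInt(int v) {
        return ByteBuffer.allocate(4).putInt(v).array();
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(encodeString("20"))); // [50, 48]
        System.out.println(Arrays.toString(encodeInt(20)));      // [0, 0, 0, 20]
    }
}
```

Storing numbers as strings keeps them readable in the HBase shell. Whichever encoding is chosen, the reader must decode the value the same way the writer encoded it.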

4. Read row1 from t1

package com.hadoop.hbase;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

public class HbaseGetData {
    public static void main(String[] args) throws IOException {
        Configuration conf = HBaseConfiguration.create();
        conf.set("hbase.zookeeper.quorum", "192.168.100.102:2181");
        // try-with-resources closes the table and the connection on exit
        try (Connection conn = ConnectionFactory.createConnection(conf);
             Table table = conn.getTable(TableName.valueOf("t1"))) {
            Get get = new Get(Bytes.toBytes("row1"));
            Result r = table.get(get);
            // Print every cell of the row as family:qualifier and value
            for (Cell cell : r.rawCells()) {
                String family = Bytes.toString(CellUtil.cloneFamily(cell));
                String qualifier = Bytes.toString(CellUtil.cloneQualifier(cell));
                String value = Bytes.toString(CellUtil.cloneValue(cell));
                System.out.println("Column: " + family + ":" + qualifier + " ----- Value: " + value);
            }
        }
    }
}

5. Delete data

package com.hadoop.hbase;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

public class HbaseDelete {
    public static void main(String[] args) throws IOException {
        Configuration conf = HBaseConfiguration.create();
        conf.set("hbase.zookeeper.quorum", "192.168.100.102:2181");
        // try-with-resources closes the table and the connection on exit
        try (Connection conn = ConnectionFactory.createConnection(conf);
             Table table = conn.getTable(TableName.valueOf("t1"))) {
            // A Delete built from only a row key removes the whole row
            Delete delete = new Delete(Bytes.toBytes("row1"));
            table.delete(delete);
            System.out.println("delete data success!!");
        }
    }
}

Run the program:

Checking in the HBase shell confirms that the row with row key row1 has been deleted from t1.

IV. Errors and Fixes

Exception in thread "main" org.apache.hadoop.hbase.client.RetriesExhaustedException: Failed after attempts=16, exceptions:

Caused by: org.apache.hadoop.hbase.MasterNotRunningException: java.net.UnknownHostException: can not resolve master,16000,1592301072837

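The client obtains the HBase master's hostname (here, master) from ZooKeeper, and the Windows machine running the Java program cannot resolve it. To fix this, edit the Windows hosts file (C:\Windows\System32\drivers\etc\hosts) and add an entry that maps the virtual machine's IP address to that hostname, for example 192.168.100.102 master if that is the VM's address.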
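To confirm that the hosts entry has taken effect before rerunning the HBase programs, a small check with the JDK's InetAddress can be used. This is a minimal sketch: HostResolveCheck is a hypothetical class name, and the default hostname master is taken from the error message above.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

final class HostResolveCheck {
    // Returns true when the local resolver (hosts file or DNS) knows the name.
    static boolean canResolve(String host) {
        try {
            InetAddress.getByName(host);
            return true;
        } catch (UnknownHostException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Pass hostnames as arguments, or fall back to the name from the error message.
        String[] hosts = args.length > 0 ? args : new String[] {"master"};
        for (String host : hosts) {
            System.out.println(host + " resolvable: " + canResolve(host));
        }
    }
}
```

If the check still reports false after editing the hosts file, the entry is probably misspelled or the file was saved without administrator rights.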