OpenCV4 Study Notes (76): Augmented Reality (AR) with the ArUco Module and Qt

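In *OpenCV4 Study Notes (75)* I wrote up how to add a simple augmented reality effect to a single static image. This time we combine the ArUco module with Qt to apply the AR effect to a live video stream.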

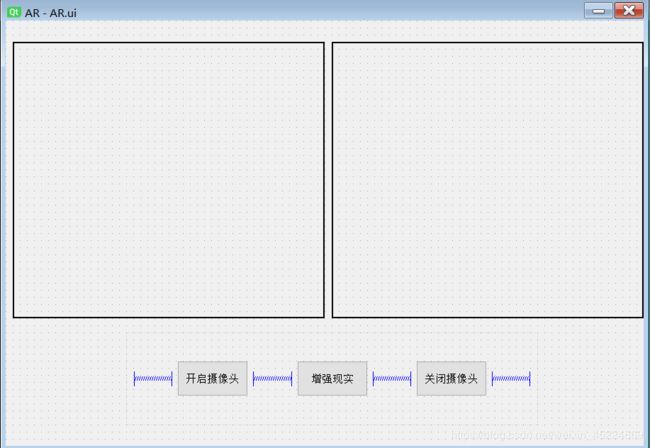

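First create a Qt project (I developed mine in Visual Studio 2019), then design the GUI in Qt Designer. The layout is simple: the left pane shows the raw video captured by the camera, and the right pane shows the video with the AR overlay applied. Once this GUI is in place, we can move on to the code.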

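As before, the first step is to load the camera's intrinsic matrix and distortion coefficients. I again assume the camera has already been calibrated, so the values are simply hard-coded: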

```cpp
static cv::Mat cameraMatrix, distCoeffs;

// fx, 0, cx;  0, fy, cy;  0, 0, 1
vector<double> cameraVec = { 657.1548323619423, 0, 291.8582472145741,
                             0, 647.384819351103, 391.254810476919,
                             0, 0, 1 };
// Mat(vec, true) copies the data; without the copy the static Mat would point
// into a local vector that is destroyed when this function returns
cameraMatrix = Mat(cameraVec, true).reshape(1, 3);   // 3x3, CV_64F

// k1, k2, p1, p2, k3
vector<double> dist = { 0.1961793476399528, -1.38146317350581, -0.002301820186177369,
                        -0.001054637905895881, 2.458286937422959 };
distCoeffs = Mat(dist, true).reshape(1, 1);          // 1x5
```
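The following standalone sketch (plain C++, no OpenCV) shows what these numbers mean. It follows the pinhole model with radial coefficients k1, k2, k3 and tangential coefficients p1, p2 as OpenCV documents it. The helper name `projectPoint` is hypothetical. In a real program, `cv::projectPoints` is the library routine that performs this projection.

```cpp
#include <array>

struct Pixel { double u, v; };

// Hypothetical helper: project a 3D point given in camera coordinates (Z > 0)
// to pixel coordinates using the pinhole model plus the 5-coefficient
// distortion model (k1, k2, p1, p2, k3).
Pixel projectPoint(double X, double Y, double Z,
                   const std::array<double, 9>& K,    // row-major 3x3 intrinsics
                   const std::array<double, 5>& d) {  // k1, k2, p1, p2, k3
    const double x = X / Z, y = Y / Z;                // normalized image coordinates
    const double r2 = x * x + y * y;
    const double radial = 1 + d[0] * r2 + d[1] * r2 * r2 + d[4] * r2 * r2 * r2;
    const double xd = x * radial + 2 * d[2] * x * y + d[3] * (r2 + 2 * x * x);
    const double yd = y * radial + d[2] * (r2 + 2 * y * y) + 2 * d[3] * x * y;
    return { K[0] * xd + K[2], K[4] * yd + K[5] };    // u = fx*x'' + cx, v = fy*y'' + cy
}
```

For example, a point on the optical axis such as `projectPoint(0, 0, 1, K, dist)` lands exactly on the principal point (cx, cy), because the distortion terms all vanish at r = 0.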

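Next, set the initial state of the two display panes and the buttons. At startup only the "Open camera" button is clickable, and the other buttons are disabled.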

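```cpp
// Black placeholder for both panes (a new QPixmap holds uninitialized data until filled)
QPixmap black(ui.label1->size());
black.fill(Qt::black);
ui.label1->setPixmap(black);
ui.label2->setPixmap(black);

// Only "Open camera" (pushButton1) is clickable at startup
ui.pushButton2->setDisabled(true);
ui.pushButton3->setDisabled(true);
```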
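The enable/disable logic is spread across the three button handlers in this note. It can be summarized as a small state model. The sketch below is hypothetical and Qt-free (the `ButtonState` and `Action` names are mine). It mirrors what the handlers in this note do to `pushButton1` (open camera), `pushButton2` (AR) and `pushButton3` (close camera).

```cpp
// Hypothetical model of the three buttons' "enabled" flags
struct ButtonState {
    bool open = true;    // pushButton1: only button enabled at startup
    bool ar = false;     // pushButton2
    bool close = false;  // pushButton3
};

enum class Action { OpenCamera, StartAR, CloseCamera };

// Returns the button state after one click, following the handlers' setDisabled calls
ButtonState apply(ButtonState s, Action a) {
    switch (a) {
    case Action::OpenCamera:  s.ar = true;    s.close = true; break;  // enables AR and close
    case Action::StartAR:     s.open = false; s.ar = false;   break;  // locks open and AR
    case Action::CloseCamera: s.open = true;  s.ar = false;   break;  // close itself stays enabled
    }
    return s;
}
```

Tracing `apply` through open, then AR, then close makes one detail visible. The close handler never disables `pushButton3`, so that button stays clickable after closing. Clicking it again is harmless, because releasing an already released `VideoCapture` does nothing.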

Next we open the camera and the video file. I use a phone camera, accessed through its HTTP video stream:

```cpp
static VideoCapture camera, video;   // also used inside the timer lambdas

video.open("D:\\opencv_c++\\opencv_tutorial\\data\\images\\Megamind.avi");
if (!video.isOpened())
{
    cout << "can't open video" << endl;
    exit(-1);
}

// Phone camera exposed as an HTTP video stream
camera.open("http://192.168.3.2:8081");
if (!camera.isOpened())
{
    cout << "can't open camera" << endl;
    exit(-1);
}
```

Now set up the "Open camera" button and a timer that displays the video stream:

```cpp
QTimer* timer1 = new QTimer(this);   // parented to the window so Qt deletes it
timer1->setInterval(30);             // refresh about every 30 ms

connect(timer1, &QTimer::timeout, ui.label1, [=]() {
    if (!camera.read(frame) || frame.empty())
        return;                      // dropped frame or stream not ready yet
    // Pass frame.step as bytesPerLine: without it QImage assumes 32-bit aligned rows.
    // Format_BGR888 requires Qt 5.14 or later.
    QImage qframe(frame.data, frame.cols, frame.rows, static_cast<int>(frame.step),
                  QImage::Format_BGR888);
    ui.label1->setPixmap(QPixmap::fromImage(qframe.scaled(ui.label1->size(), Qt::KeepAspectRatio)));
});

connect(ui.pushButton1, &QPushButton::clicked, ui.label1, [=]() {
    ui.pushButton2->setDisabled(false);
    ui.pushButton3->setDisabled(false);
    if (!camera.isOpened())
    {
        camera.open("http://192.168.3.2:8081");
    }
    timer1->start();
});
```
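Why pass `frame.step` to the `QImage` constructor? According to the Qt documentation, when `QImage` wraps existing memory without a `bytesPerLine` argument, every scanline must be 32-bit aligned. A continuous 8-bit BGR `cv::Mat`, by contrast, stores exactly `cols * 3` bytes per row. The hypothetical helpers below (plain C++, no Qt or OpenCV) compute both strides and show when they disagree.

```cpp
// Bytes per scanline that QImage assumes when no bytesPerLine is given:
// each row is padded up to a multiple of 4 bytes (32 bits).
int qtAssumedBytesPerLine(int width, int bitsPerPixel) {
    return ((width * bitsPerPixel + 31) / 32) * 4;
}

// Row stride of a continuous CV_8UC3 Mat: no padding, 3 bytes per pixel.
int continuousBgrStep(int width) {
    return width * 3;
}

// True when wrapping a continuous BGR Mat without an explicit stride
// would still read its rows correctly.
bool implicitStrideIsSafe(int width) {
    return qtAssumedBytesPerLine(width, 24) == continuousBgrStep(width);
}
```

With a 640-pixel-wide stream both strides are 1920 bytes, so code that omits the stride happens to work. At 641 pixels Qt would expect 1924 bytes per row while the Mat uses 1923. The image would then come out sheared.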

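Next, initialize timer 2, which drives the AR video stream. Then define the handler for the "AR" button. It opens the video file, reads its first frame, and records that frame's four corner coordinates: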

```cpp
QTimer* timer2 = new QTimer(this);
timer2->setInterval(30);

connect(ui.pushButton2, &QPushButton::clicked, ui.label2, [=]() {
    ui.pushButton1->setDisabled(true);
    ui.pushButton2->setDisabled(true);
    if (!video.isOpened())
    {
        video.open("D:\\opencv_c++\\opencv_tutorial\\data\\images\\Megamind.avi");
    }

    // Read one frame only to learn the video's size
    Mat firstFrame;
    if (!video.read(firstFrame) || firstFrame.empty())
        return;
    int width = firstFrame.cols;
    int height = firstFrame.rows;

    // Four corners of the replacement image, clockwise from the top-left
    new_image_box = { Point2f(0, 0), Point2f(width, 0),
                      Point2f(width, height), Point2f(0, height) };
    timer2->start();
});
```

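The key step is timer 2's handler. It runs ArUco marker detection on the camera frame. If all four expected markers are found, it takes the four outer vertices of the quadrilateral they form. It then computes the perspective transform (homography) that maps the corners of the video frame onto that quadrilateral and warps the video frame with it. A mask is built from the warped region. Finally, the masked camera frame and the warped video frame are blended with weights to produce the AR image.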
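Here `findHomography` is called with exactly four point pairs, so the homography is fully determined, up to scale, by those pairs. Each pair (x, y) → (u, v) gives two linear equations in the eight unknowns once h33 is fixed to 1. The sketch below (plain C++, hypothetical names) solves that 8x8 direct linear transform (DLT) system with Gauss-Jordan elimination. OpenCV's own implementation differs in detail (point normalization, least squares over more points, optional RANSAC), so treat this as an illustration of the math rather than a replacement.

```cpp
#include <array>
#include <cmath>
#include <utility>

using Mat3 = std::array<double, 9>;   // row-major 3x3
struct Pt { double x, y; };

// Gauss-Jordan elimination with partial pivoting on an 8x9 augmented matrix
// (column 8 holds the right-hand side). Returns false if the system is singular.
static bool solve8(std::array<std::array<double, 9>, 8>& A) {
    for (int col = 0; col < 8; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(A[r][col]) > std::fabs(A[pivot][col])) pivot = r;
        if (std::fabs(A[pivot][col]) < 1e-12) return false;
        std::swap(A[col], A[pivot]);
        for (int r = 0; r < 8; ++r) {           // clear this column in every other row
            if (r == col) continue;
            const double f = A[r][col] / A[col][col];
            for (int c = col; c < 9; ++c) A[r][c] -= f * A[col][c];
        }
    }
    for (int r = 0; r < 8; ++r) A[r][8] /= A[r][r];   // column 8 now holds the solution
    return true;
}

// Homography H (with H[8] = 1) mapping src[i] to dst[i] for four point pairs,
// no three of which are collinear.
bool homographyFrom4(const std::array<Pt, 4>& src, const std::array<Pt, 4>& dst, Mat3& H) {
    std::array<std::array<double, 9>, 8> A{};
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        // u * (h31 x + h32 y + 1) = h11 x + h12 y + h13, and likewise for v
        A[2 * i]     = { x, y, 1, 0, 0, 0, -x * u, -y * u, u };
        A[2 * i + 1] = { 0, 0, 0, x, y, 1, -x * v, -y * v, v };
    }
    if (!solve8(A)) return false;
    for (int k = 0; k < 8; ++k) H[k] = A[k][8];
    H[8] = 1.0;
    return true;
}

// Map a point through H in homogeneous coordinates.
Pt applyH(const Mat3& H, Pt p) {
    const double w = H[6] * p.x + H[7] * p.y + H[8];
    return { (H[0] * p.x + H[1] * p.y + H[2]) / w,
             (H[3] * p.x + H[4] * p.y + H[5]) / w };
}
```

Feeding in the four corners of a 640x480 frame as `src` and the four marker corners as `dst`, `applyH(H, src[i])` reproduces each `dst[i]`. That is the correspondence `warpPerspective` then applies to the whole frame.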

```cpp
connect(timer2, &QTimer::timeout, ui.label2, [=]() {
    if (!camera.read(frame) || frame.empty())
        return;
    video.read(videoFrame);
    if (videoFrame.empty())
    {
        timer2->stop();   // end of the video file
        return;           // never warp an empty frame
    }

    // Marker detection (OpenCV 4.x contrib aruco API, before the 4.7 ArucoDetector class)
    auto dictionary = aruco::getPredefinedDictionary(aruco::DICT_6X6_250);
    auto parameters = aruco::DetectorParameters::create();
    vector<vector<Point2f>> corners, rejectedImgPoints;
    vector<int> ids;
    aruco::detectMarkers(frame, dictionary, corners, ids, parameters, rejectedImgPoints, cameraMatrix, distCoeffs);

    // Marker IDs placed at the frame corners: 2 top-left, 1 top-right, 0 bottom-right, 4 bottom-left
    vector<vector<Point2f>> cornersList(4);
    for (size_t i = 0; i < ids.size(); i++)
    {
        switch (ids[i])
        {
        case 2: cornersList[0] = corners[i]; break;
        case 1: cornersList[1] = corners[i]; break;
        case 0: cornersList[2] = corners[i]; break;
        case 4: cornersList[3] = corners[i]; break;
        default: break;
        }
    }
    // Require exactly the four expected markers; otherwise cornersList[k][k] would be out of range
    bool allFound = (ids.size() == 4);
    for (const auto& c : cornersList)
        allFound = allFound && c.size() == 4;

    if (!allFound)
    {
        // Show the unmodified camera frame
        QImage qframe(frame.data, frame.cols, frame.rows, static_cast<int>(frame.step), QImage::Format_BGR888);
        ui.label2->setPixmap(QPixmap::fromImage(qframe.scaled(ui.label2->size(), Qt::KeepAspectRatio)));
        return;
    }

    //aruco::drawDetectedMarkers(frame, corners, ids, Scalar(0, 255, 0));

    // ArUco lists each marker's corners clockwise from its top-left, so (assuming upright
    // markers) take the outer corner of each: top-left, top-right, bottom-right, bottom-left
    vector<Point2f> roi_box_pt = { cornersList[0][0], cornersList[1][1],
                                   cornersList[2][2], cornersList[3][3] };

    // 3x3 homography from the replacement image's corners to the target ROI
    H = findHomography(new_image_box, roi_box_pt);

    // Perspective warp into a camera-sized image
    Mat warped;
    cv::warpPerspective(videoFrame, warped, H, frame.size(), INTER_CUBIC);

    // Mask: white where the warped video is exactly black (outside the ROI), black inside.
    // inRange does the same job as a per-pixel loop comparing each pixel with (0, 0, 0).
    Mat outside;
    inRange(warped, Scalar(0, 0, 0), Scalar(0, 0, 0), outside);
    cvtColor(outside, mask_mat, COLOR_GRAY2BGR);
    Mat kernel = getStructuringElement(MORPH_RECT, Size(10, 10));
    cv::morphologyEx(mask_mat, mask_mat, MORPH_OPEN, kernel);   // remove small white specks

    // Replace the ROI in the camera frame with the warped video frame
    Mat newFrame, result;
    bitwise_and(frame, mask_mat, newFrame);
    addWeighted(newFrame, 1, warped, 0.7, 1.0, result);

    QImage qresult(result.data, result.cols, result.rows, static_cast<int>(result.step), QImage::Format_BGR888);
    ui.label2->setPixmap(QPixmap::fromImage(qresult.scaled(ui.label2->size(), Qt::KeepAspectRatio)));
});
```
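It helps to see what the masking and blending lines compute for a single pixel. The hypothetical `composite` helper below (plain C++, ignoring the morphological opening) mirrors three steps: the mask rule, `bitwise_and`, and `addWeighted(..., 1, ..., 0.7, 1.0, ...)`. Outside the ROI the output is the camera pixel plus the gamma term of 1. Inside the ROI the camera pixel has been zeroed, so the video shows at 70% of its brightness rather than as a translucent overlay.

```cpp
#include <cmath>
#include <cstdint>

// Hypothetical helper: one channel of one output pixel of the timer 2 pipeline.
std::uint8_t composite(std::uint8_t camera, const std::uint8_t warpedBgr[3], int channel) {
    // Mask rule: 0 where the warped video pixel is not pure black, 255 elsewhere
    const bool insideRoi = warpedBgr[0] != 0 || warpedBgr[1] != 0 || warpedBgr[2] != 0;
    const int mask = insideRoi ? 0 : 255;
    const int kept = camera & mask;                                // bitwise_and with the mask
    const double v = 1.0 * kept + 0.7 * warpedBgr[channel] + 1.0;  // alpha = 1, beta = 0.7, gamma = 1
    long r = std::lrint(v);                                        // round to nearest...
    if (r < 0) r = 0;                                              // ...and saturate to [0, 255]
    if (r > 255) r = 255;
    return static_cast<std::uint8_t>(r);
}
```

For instance, a camera value of 200 under black video becomes 201, while a video channel of 100 inside the ROI becomes 71. Setting the second weight to 1.0 and gamma to 0 would paste the warped video in unchanged.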

Finally, define the "Close camera" button. It stops all timers, releases both VideoCapture objects, and resets the panes:

```cpp
connect(ui.pushButton3, &QPushButton::clicked, ui.label1, [=]() {
    // Stop the timers before releasing the captures they read from
    timer1->stop();
    timer2->stop();
    camera.release();
    video.release();
    ui.pushButton1->setDisabled(false);
    ui.pushButton2->setDisabled(true);
    ui.label1->setPixmap(black);
    ui.label2->setPixmap(black);
});
```

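Imagine cutting out these four ArUco markers and sticking them onto a picture frame: you would have an ever-changing AR photo frame. Pretty fun, right? That's all for this note. Thanks for reading!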

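PS: My notes mix my own observations with material I excerpted while researching online, so any similarity is my tribute to those I learned from. If you feel that any part of these notes infringes your intellectual property, please contact me and I will remove the relevant content. Thank you!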