MFS (Distributed) Deployment and High Availability

| IP (hostname) | Role |
|---|---|
| 172.25.32.4 (server4) | MFS master, corosync + pacemaker |
| 172.25.32.5 (server5) | chunkserver |
| 172.25.32.6 (server6) | chunkserver |
| 172.25.32.7 (server7) | MFS master, corosync + pacemaker |
| Physical host (foundation32) | MFS client, fence_virtd host |

[root@server4 ~]# yum install -y moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cli-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cgiserv-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cgi-3.0.103-1.rhsystemd.x86_64.rpm

[root@server4 mfs]# vim /etc/hosts

172.25.32.4 server4 mfsmaster

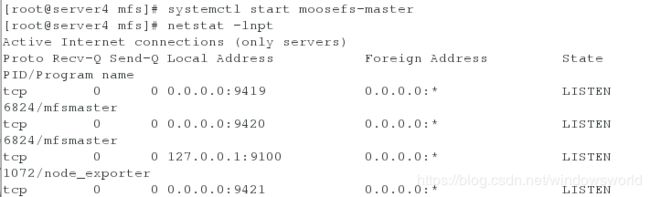

[root@server4 mfs]# systemctl start moosefs-master

[root@server4 mfs]# netstat -lnpt

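9419: port the master listens on for metaloggers, which use it together with the metadata changelogs to synchronize with the master periodically

9420: port the master listens on for chunkserver connections

9421: port the master listens on for client connections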
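As a quick check of the three ports, the listing from `netstat -lnt` can be piped through a small filter. This is only a sketch: the function names `mfs_port_role` and `check_mfs_ports` are made up for this illustration and are not part of MooseFS.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical, not part of MooseFS.

# Map a master port to the peer it serves, as listed above.
mfs_port_role() {
    case "$1" in
        9419) echo "metalogger" ;;
        9420) echo "chunkserver" ;;
        9421) echo "client" ;;
        *)    echo "unknown" ;;
    esac
}

# Read a `netstat -lnt` style listing on stdin and report each master port.
check_mfs_ports() {
    listing=$(cat)
    for port in 9419 9420 9421; do
        if printf '%s\n' "$listing" | grep -Eq "[:.]${port}[[:space:]]"; then
            state="listening"
        else
            state="NOT listening"
        fi
        echo "${port} ($(mfs_port_role "$port")): ${state}"
    done
}
```

On the master it could be used as `netstat -lnt | check_mfs_ports`.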

Start the web monitoring interface

[root@server4 mfs]# systemctl start moosefs-cgiserv

Set up the chunkservers

server5

[root@server5 ~]# yum install -y moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm

[root@server5 ~]# vim /etc/hosts

172.25.32.4 server4 mfsmaster

[root@server5 ~]# cd /etc/mfs

[root@server5 mfs]# vim mfshdd.cfg

/mnt/chunk1

[root@server5 mfs]# cd /mnt

[root@server5 mnt]# mkdir chunk1

[root@server5 mnt]# chown mfs.mfs chunk1/

[root@server5 mnt]# systemctl start moosefs-chunkserver

server6

[root@server6 ~]# yum install -y moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm

[root@server6 ~]# vim /etc/hosts

172.25.32.4 server4 mfsmaster

[root@server6 ~]# cd /etc/mfs

[root@server6 mfs]# vim mfshdd.cfg

/mnt/chunk2

[root@server6 mfs]# cd /mnt

[root@server6 mnt]# mkdir chunk2

[root@server6 mnt]# chown mfs.mfs chunk2/

[root@server6 mnt]# systemctl start moosefs-chunkserver

[kiosk@foundation32 3.0.103]$ sudo yum install -y moosefs-client-3.0.103-1.rhsystemd.x86_64.rpm  ## install the client package

[root@foundation32 ~]# vim /etc/hosts  ## configure name resolution

172.25.32.4 server4 mfsmaster

Create the mount point on the physical host and add it to the mount configuration file

[root@foundation32 ~]# mkdir /mnt/mfs

[root@foundation32 ~]# vim /etc/mfs/mfsmount.cfg

/mnt/mfs

Create directories under the client mount point and check their goals

[root@foundation32 ~]# cd /mnt/mfs

[root@foundation32 mfs]# mkdir dir1

[root@foundation32 mfs]# mkdir dir2

[root@foundation32 mfs]# mfsgetgoal dir1/

dir1/: 2

[root@foundation32 mfs]# mfsgetgoal dir2/

dir2/: 2

Set the goal (number of copies) for dir1 to 1

[root@foundation32 mfs]# mfssetgoal -r 1 dir1/

dir1/:

inodes with goal changed: 1

inodes with goal not changed: 0

inodes with permission denied: 0

[root@foundation32 mfs]# mfsgetgoal dir1/

dir1/: 1

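After copying a file into each directory, the file in dir1 has only one copy (on server6), while the file in dir2 has two copies (one each on server5 and server6).

Stop the chunkserver on server6 and check the copies again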

[root@server6 chunk2]# systemctl stop moosefs-chunkserver

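With server6 stopped, the copy of the file in dir1 is no longer available, while one copy of the file in dir2 still exists on server5.

## Because the chunkserver that stores passwd is down, its data can no longer be found. Opening the file at this point makes the client hang, because no running chunkserver holds the data.

Start the service again and the data becomes available again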

[root@server6 chunk2]# systemctl start moosefs-chunkserver

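- Testing distributed (chunked) storage from the client

Chunkservers store data in chunks; MooseFS splits a file into chunks of up to 64 MiB, so a large file is spread over several chunks.

- dir1

[root@foundation32 dir2]# cd ../dir1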

[root@foundation32 dir1]# dd if=/dev/zero of=bigfile bs=1M count=200

- dir2

[root@foundation32 dir1]# cd ../dir2

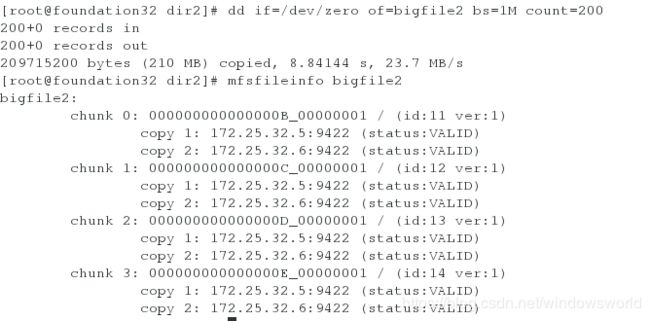

[root@foundation32 dir2]# dd if=/dev/zero of=bigfile2 bs=1M count=200

Files in dir2 have a copy on every chunkserver

- Test with server6 stopped

[root@server6 chunk2]# systemctl stop moosefs-chunkserver

With server6 stopped, the data in dir2 can still be read, while the data in dir1 cannot.
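To get a feel for how the 200 MB test files written with dd are laid out, the following sketch estimates the number of chunks and chunk copies. It assumes the maximum chunk size of 64 MiB given in the MooseFS documentation; the function names are hypothetical.

```shell
#!/bin/sh
# Sketch only: assumes a maximum chunk size of 64 MiB; helper names are hypothetical.
CHUNK_MIB=64

# Number of chunks needed for a file of the given size in MiB (rounded up).
chunks_for_mib() {
    echo $(( ($1 + CHUNK_MIB - 1) / CHUNK_MIB ))
}

# Total chunk copies stored across all chunkservers for a given size and goal.
chunk_copies() {
    echo $(( $(chunks_for_mib "$1") * $2 ))
}
```

For example, `chunks_for_mib 200` gives the chunk count for bigfile, and `chunk_copies 200 2` gives the number of chunk copies for bigfile2 in dir2 with goal 2. With goal 1, every chunk exists only once, which is why a single stopped chunkserver can make the file unreadable.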

Problems starting moosefs-master

Starting moosefs-master depends on the metadata.mfs file under /var/lib/mfs. While moosefs-master is running, metadata.mfs is renamed to metadata.mfs.back; after a clean shutdown it becomes metadata.mfs again.

[root@server4 mfs]# ls

changelog.1.mfs changelog.2.mfs changelog.3.mfs metadata.crc metadata.mfs metadata.mfs.back.1 metadata.mfs.empty stats.mfs

[root@server4 mfs]# systemctl start moosefs-master

[root@server4 mfs]# ls

changelog.1.mfs changelog.2.mfs changelog.3.mfs metadata.crc metadata.mfs.back metadata.mfs.back.1 metadata.mfs.empty stats.mfs

[root@server4 mfs]# systemctl stop moosefs-master

[root@server4 mfs]# ls

changelog.2.mfs changelog.3.mfs changelog.4.mfs metadata.crc metadata.mfs metadata.mfs.back.1 metadata.mfs.empty stats.mfs

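However, when moosefs-master stops abnormally (power loss, kernel crash, and so on), metadata.mfs.back is not converted back to metadata.mfs, and moosefs-master fails to start the next time.

After killing the mfsmaster process, the next start fails, and metadata.mfs has not been regenerated.

So how can moosefs-master recover from an abnormal shutdown?

[root@server4 mfs]# vim /usr/lib/systemd/system/moosefs-master.service  ## edit the moosefs-master unit file and add the -a option

ExecStart=/usr/sbin/mfsmaster -a start

With -a, moosefs-master recovers its metadata automatically and starts regardless of how it was shut down. Run systemctl daemon-reload after editing the unit file so that systemd picks up the change.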
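The file naming described above can be turned into a small pre-start check. This is a sketch under the assumption that only the two file names matter; `mfs_metadata_state` is a hypothetical helper, not a MooseFS tool.

```shell
#!/bin/sh
# Sketch only: classify the metadata directory by the file names described above.
# mfs_metadata_state is a hypothetical helper name.
mfs_metadata_state() {
    dir=${1:-/var/lib/mfs}
    if [ -f "$dir/metadata.mfs" ]; then
        # Present after a clean shutdown; a normal start should work.
        echo "clean"
    elif [ -f "$dir/metadata.mfs.back" ]; then
        # Master is running, or it stopped abnormally and needs -a to start.
        echo "running-or-unclean"
    else
        echo "missing"
    fi
}
```

Calling `mfs_metadata_state` without arguments inspects /var/lib/mfs; a result of `running-or-unclean` while the service is stopped matches the failed-start case above.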

Data recovery

- Delete the passwd file

[root@foundation32 dir1]# ls

bigfile passwd

[root@foundation32 dir1]# rm -fr passwd

[root@foundation32 dir1]# ls

bigfile

Deleted files are kept for a retention period (the trash time, in seconds) and can be recovered within that period

[root@foundation32 dir1]# mfsgettrashtime .

.: 86400

[root@foundation32 dir1]# ls

bigfile

- Recover

[root@foundation32 mfs]# mkdir /mnt/mfsmeta

[root@foundation32 mfsmeta]# mfsmount -m /mnt/mfsmeta/

mfsmaster accepted connection with parameters: read-write,restricted_ip

[root@foundation32 mfsmeta]# ls

[root@foundation32 mfsmeta]# cd /mnt/mfsmeta

[root@foundation32 mfsmeta]# ls

sustained trash

[root@foundation32 mfsmeta]# cd trash

[root@foundation32 trash]# ls

Find the deleted file in trash and move it into the undel directory; the file is then restored

[root@foundation32 trash]# find -name '*passwd*'

./004/00000004|dir1|passwd

[root@foundation32 trash]# mv ./004/00000004\|dir1\|passwd undel/

[root@foundation32 trash]# cd /mnt/mfs/dir1

[root@foundation32 dir1]# ls

bigfile passwd

The data has been recovered
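The trash entry name seen above, `00000004|dir1|passwd`, appears to consist of an inode number followed by the original path with `|` in place of `/`. Under that assumption, the sketch below splits such a name; both function names are hypothetical.

```shell
#!/bin/sh
# Sketch only: assumes trash entries are named <inode>|<path with '|' for '/'>.
# Helper names are hypothetical.

# Print the original path (relative to the MFS root) of a trash entry.
trash_entry_path() {
    name=${1##*/}            # drop a leading directory such as ./004/
    rest=${name#*"|"}        # drop the inode prefix up to the first '|'
    printf '%s\n' "$rest" | tr '|' '/'
}

# Print the inode part of a trash entry name.
trash_entry_inode() {
    name=${1##*/}
    printf '%s\n' "${name%%"|"*}"
}
```

For the entry found by `find`, `trash_entry_path './004/00000004|dir1|passwd'` yields the path where the file reappears after it is moved into undel.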

High availability with pacemaker + corosync + iSCSI + fence

1. Install the services

[root@server7 ~]# vim /etc/hosts

172.25.32.4 server4 mfsmaster

[root@server7 ~]# yum install -y moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm

Edit /usr/lib/systemd/system/moosefs-master.service on server7 as on server4, adding the -a option:

ExecStart=/usr/sbin/mfsmaster -a start

[root@server7 system]# systemctl daemon-reload

[root@server7 system]# systemctl start moosefs-master

Check the listening ports

2. Configure the HighAvailability yum repository on server4 and server7

[root@server7 system]# vim /etc/yum.repos.d/cn.repo

[HighAvailability]

name=HighAvailability

baseurl=http://172.25.32.250/cn/addons/HighAvailability/

gpgcheck=0

[root@server7 system]# scp /etc/yum.repos.d/cn.repo server4:/etc/yum.repos.d/cn.repo

3. Install pacemaker + corosync on server4 and server7

[root@server4 system]# yum install pacemaker corosync -y

[root@server7 system]# yum install pacemaker corosync -y

4. Set up passwordless SSH login

[root@server4 mfs]# ssh-keygen

[root@server4 mfs]# ssh-copy-id server7

[root@server4 mfs]# ssh server7

Last login: Sun Aug 18 08:51:51 2019 from 172.25.32.250

[root@server7 ~]# logout

Connection to server7 closed.

5. Install the cluster management tool on server4 and server7 and start its service

[root@server4 mfs]# yum install -y pcs

[root@server4 mfs]# systemctl start pcsd

[root@server4 mfs]# systemctl enable pcsd

[root@server4 mfs]# passwd hacluster

[root@server7 mfs]# yum install -y pcs

[root@server7 mfs]# systemctl start pcsd

[root@server7 mfs]# systemctl enable pcsd

[root@server7 mfs]# passwd hacluster

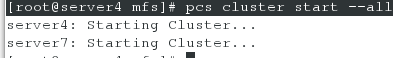

6. On server4, use the cluster management tool to create the mfsmaster cluster and start it

[root@server4 mfs]# pcs cluster auth server4 server7

Username: hacluster

Password:

server4: Authorized

server7: Authorized

[root@server4 mfs]# pcs cluster setup --name mycluster server4 server7

[root@server4 mfs]# pcs cluster start --all

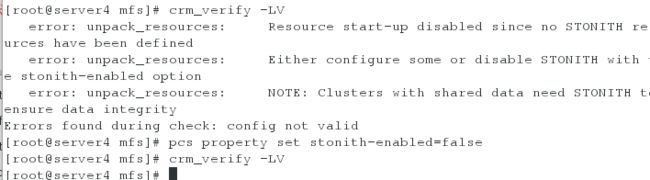

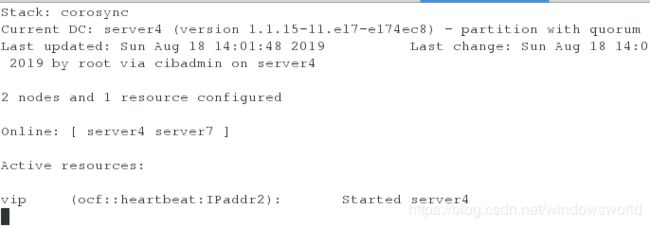

7. Check the cluster status

[root@server4 mfs]# pcs status  ## an error is reported because no fence device has been configured yet

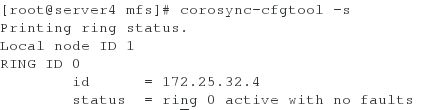

[root@server4 mfs]# corosync-cfgtool -s

[root@server4 mfs]# pcs status corosync

9. Add resources to the MFS high-availability cluster; this time, add a VIP

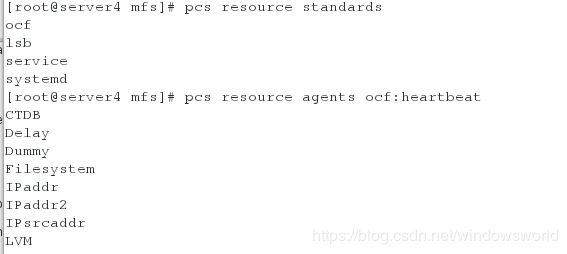

[root@server4 mfs]# pcs resource standards

[root@server4 mfs]# pcs resource agents ocf:heartbeat

[root@server4 mfs]# pcs resource create vip ocf:heartbeat:IPaddr2 ip=172.25.32.100 cidr_netmask=32 op monitor interval=30s

[root@server4 mfs]# pcs resource show

Check the cluster status on server7

[root@server7 system]# crm_mon

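10. High-availability test

The VIP initially runs on server4

[root@server4 mfs]# pcs cluster stop server4  ## after the cluster is stopped on server4, the VIP moves to server7

[root@server4 mfs]# pcs cluster start server4  ## start server4 again so that the VIP can migrate back to server4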

[root@server7 system]# pcs cluster stop server7

[root@server7 system]# pcs cluster start server7

Sharing data over iSCSI after setting up pacemaker + corosync high availability

| IP (hostname) | Role |
|---|---|
| 172.25.32.4 | iSCSI client (initiator) |
| 172.25.32.6 | iSCSI server (target) |
| 172.25.32.7 | iSCSI client (initiator) |

1. Unmount on the client

[root@foundation32 html]# umount /mnt/mfsmeta

2. Stop the services in preparation for iSCSI

[root@server4 mfs]# systemctl stop moosefs-master

[root@server5 chunk5]# systemctl stop moosefs-chunkserver

[root@server6 chunk6]# systemctl stop moosefs-chunkserver

[root@server7 system]# systemctl stop moosefs-master

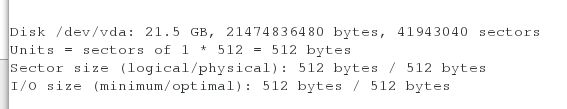

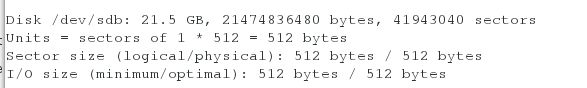

1. On server6, which is a chunkserver and the actual data node, add the disk that will hold the shared data

[root@server6 chunk2]# fdisk -l

[root@server6 chunk2]# yum install -y targetcli

[root@server6 chunk2]# targetcli

Warning: Could not load preferences file /root/.targetcli/prefs.bin.

targetcli shell version 2.1.fb41

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> ls

o- / ..................................................................... [...]

o- backstores .......................................................... [...]

| o- block .............................................. [Storage Objects: 0]

| o- fileio ............................................. [Storage Objects: 0]

| o- pscsi .............................................. [Storage Objects: 0]

| o- ramdisk ............................................ [Storage Objects: 0]

o- iscsi ........................................................ [Targets: 0]

o- loopback ..................................................... [Targets: 0]

/> cd backstores/block

/backstores/block> create my_disk1 /dev/vda

Created block storage object my_disk1 using /dev/vda.

/backstores/block> cd /

/> cd iscsi

/iscsi> create iqn.2019-08.com.example:server6

Created target iqn.2019-08.com.example:server6.

Created TPG 1.

Global pref auto_add_default_portal=true

Created default portal listening on all IPs (0.0.0.0), port 3260.

/iscsi> cd iqn.2019-08.com.example:server6

/iscsi/iqn.20...ample:server6> cd tpg1/luns

/iscsi/iqn.20...er6/tpg1/luns> create /backstores/block/my_disk1

Created LUN 0.

/iscsi/iqn.20...er6/tpg1/luns> cd /iscsi/iqn.2019-08.com.example:server6/tpg1/acls

/iscsi/iqn.20...er6/tpg1/acls> create iqn.2019-08.com.example:client

Created Node ACL for iqn.2019-08.com.example:client

Created mapped LUN 0.

/iscsi/iqn.20...er6/tpg1/acls>

/iscsi/iqn.20...er6/tpg1/acls> exit

Global pref auto_save_on_exit=true

Last 10 configs saved in /etc/target/backup.

Configuration saved to /etc/target/saveconfig.json

[root@server4 system]# yum install -y iscsi-*

[root@server4 mfs]# vim /etc/iscsi/initiatorname.iscsi

[root@server4 mfs]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2019-08.com.example:client

[root@server4 mfs]# iscsiadm -m discovery -t st -p 172.25.32.6

172.25.32.6:3260,1 iqn.2019-08.com.example:server6

[root@server4 mfs]# systemctl restart iscsid

[root@server4 mfs]# iscsiadm -m node -l

Logging in to [iface: default, target: iqn.2019-08.com.example:server6, portal: 172.25.32.6,3260] (multiple)

Login to [iface: default, target: iqn.2019-08.com.example:server6, portal: 172.25.32.6,3260] successful.

[root@server4 mfs]# fdisk -l

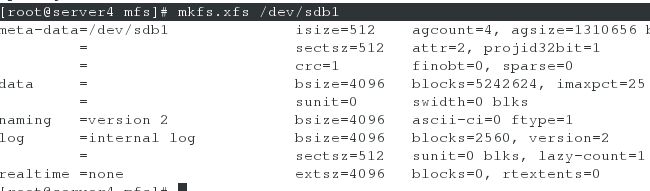

2. On server4, partition the shared disk, create a filesystem, mount it, and verify

[root@server4 mfs]# fdisk /dev/sdb

[root@server4 mfs]# mkfs.xfs /dev/sdb1

[root@server4 mfs]# mount /dev/sdb1 /mnt

[root@server4 mfs]# cd /var/lib/mfs/

[root@server4 mfs]# ls

changelog.1.mfs changelog.5.mfs metadata.crc metadata.mfs.empty

changelog.2.mfs changelog.6.mfs metadata.mfs stats.mfs

changelog.3.mfs changelog.7.mfs metadata.mfs.back.1

[root@server4 mfs]# cp -p * /mnt/

[root@server4 mfs]# cd /mnt/

[root@server4 mnt]# ls

changelog.1.mfs changelog.5.mfs metadata.crc metadata.mfs.empty

changelog.2.mfs changelog.6.mfs metadata.mfs stats.mfs

changelog.3.mfs changelog.7.mfs metadata.mfs.back.1

[root@server4 mnt]# chown mfs.mfs /mnt

[root@server4 mnt]# cd

[root@server4 ~]# umount /mnt

[root@server4 ~]# mount /dev/sdb1 /var/lib/mfs/

[root@server4 ~]# systemctl start moosefs-master

[root@server4 ~]# systemctl stop moosefs-master

server7 as iSCSI client

The steps are the same as on server4

[root@server7 system]# yum install -y iscsi-*

[root@server7 ~]# systemctl restart iscsid

[root@server7 system]# vim /etc/iscsi/initiatorname.iscsi

[root@server7 system]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2019-08.com.example:client

[root@server7 system]# iscsiadm -m discovery -t st -p 172.25.32.6

172.25.32.6:3260,1 iqn.2019-08.com.example:server6

[root@server7 system]# iscsiadm -m node -l

Logging in to [iface: default, target: iqn.2019-08.com.example:server6, portal: 172.25.32.6,3260] (multiple)

Login to [iface: default, target: iqn.2019-08.com.example:server6, portal: 172.25.32.6,3260] successful.

[root@server7 ~]# cd /var/lib/mfs/

[root@server7 mfs]# ls

changelog.2.mfs metadata.mfs metadata.mfs.empty

metadata.crc metadata.mfs.back.1 stats.mfs

[root@server7 mfs]# cp -p * /mnt/

[root@server7 mfs]# cd /mnt

[root@server7 mnt]# ls

changelog.2.mfs metadata.crc metadata.mfs.back.1 stats.mfs

database.sh metadata.mfs metadata.mfs.empty

[root@server7 mnt]# chown mfs.mfs /mnt

[root@server7 mnt]# cd

[root@server7 ~]# umount /mnt

umount: /mnt: not mounted

[root@server7 ~]# systemctl start moosefs-master

[root@server7 ~]# systemctl stop moosefs-master

Check whether the mfs user and group IDs are the same on server7 and server4; if they differ, change them so that they match

[root@server4 ~]# id mfs

uid=991(mfs) gid=988(mfs) groups=988(mfs)

[root@server7 ~]# id mfs

uid=995(mfs) gid=993(mfs) groups=993(mfs)

[root@server7 ~]# vim /etc/passwd

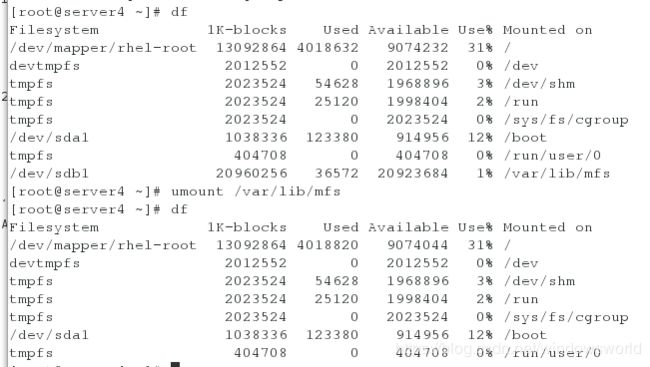
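File ownership on the shared disk is recorded by numeric UID and GID, so the comparison above can be scripted. The sketch below parses two lines of `id` output; `uid_gid_of` and `same_uid_gid` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch only: compare the numeric UID/GID in two lines of `id` output.
# Helper names are hypothetical.

# Print "UID:GID" extracted from a line such as
# "uid=991(mfs) gid=988(mfs) groups=988(mfs)".
uid_gid_of() {
    printf '%s\n' "$1" | sed -n 's/^uid=\([0-9]*\)[^ ]* gid=\([0-9]*\).*/\1:\2/p'
}

# Succeed only when both lines carry the same UID and GID.
same_uid_gid() {
    [ "$(uid_gid_of "$1")" = "$(uid_gid_of "$2")" ]
}
```

With the passwordless SSH set up earlier, a check from server4 could look like `same_uid_gid "$(id mfs)" "$(ssh server7 id mfs)" || echo "mfs IDs differ"`.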

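- Create the cluster resource for the iSCSI-backed filesystem

The cluster manages the iSCSI-backed filesystem used by MFS, so if /var/lib/mfs is mounted manually on server4 or server7, unmount it first; the goal is to let the cluster mount it automatically.

[root@server4 ~]# pcs resource create mfsdata ocf:heartbeat:Filesystem device=/dev/sdb1 directory=/var/lib/mfs/ fstype=xfs op monitor interval=30s

Check the created resource

Create the mfsd service resource and put the resources in one group so that they run on the same host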

[root@server4 ~]# pcs resource create mfsd systemd:moosefs-master op monitor interval=1min

[root@server4 ~]# pcs resource group add mfsgroup vip mfsdata mfsd

[root@server4 ~]# crm_mon

All resources run on the same node, and checking the mounts there shows that /var/lib/mfs has been mounted automatically.

Using fencing to solve split-brain

[root@server5 chunk1]# systemctl start moosefs-chunkserver

[root@server6 chunk1]# systemctl start moosefs-chunkserver

- VIP location

- Service status

- Test distributed storage from the client

[root@foundation32 dir1]# dd if=/dev/zero of=bigfile2 bs=1M count=2000  # take server4 down while the client is writing; the write still completes

[root@server4 ~]# pcs cluster stop server4

[root@foundation32 dir1]# ls

bigfile bigfile2 passwd

- To prevent the backup master and the master from competing for the same files after server4 comes back up, fencing is needed

[root@server4 ~]# yum install -y fence-virt*

[root@server4 ~]# mkdir /etc/cluster

[root@server7 ~]# yum install -y fence-virt*

[root@server7 ~]# mkdir /etc/cluster

Install the fence service on the physical host, generate a fence key file, and send it to the cluster nodes

[root@foundation32 cluster]# yum install -y fence-virtd

[root@foundation32 cluster]# mkdir /etc/cluster/

[root@foundation32 cluster]# cd /etc/cluster/

[root@foundation32 cluster]# fence_virtd -c

[root@foundation32 cluster]# dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=128 count=1

[root@foundation32 cluster]# scp fence_xvm.key [email protected]:/etc/cluster/

[root@foundation32 cluster]# scp fence_xvm.key [email protected]:/etc/cluster/
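The key generation step above can be wrapped so that the key is created with restrictive permissions and its size is verified before it is copied. This is a sketch only; `make_fence_key` and `fence_key_ok` are hypothetical helper names, and the 128-byte size simply mirrors the dd command above.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical.

# Create a 128-byte random key readable only by its owner.
make_fence_key() {
    key=${1:-/etc/cluster/fence_xvm.key}
    ( umask 077 && dd if=/dev/urandom of="$key" bs=128 count=1 2>/dev/null )
}

# Succeed only when the key file exists and is exactly 128 bytes long.
fence_key_ok() {
    [ -f "$1" ] && [ "$(wc -c < "$1" | tr -d ' ')" -eq 128 ]
}
```

Running `make_fence_key` followed by `fence_key_ok /etc/cluster/fence_xvm.key` on the physical host gives a quick sanity check before the scp step. All cluster nodes must hold an identical copy of the key.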
