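Approach:

1. Generate L1.
2. Generate Lk from Ck.
3. Generate Ck+1 from Lk.
4. Obtain the frequent itemsets (steps 1 to 4 use only support).
5. Generate association rules from the frequent itemsets (this step uses confidence).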
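The two thresholds in the outline can be stated precisely. In the formulas below, $D$ is the list of transactions, $T$ is one transaction, and $\sigma(X)$ is the support count of an itemset $X$. These symbols are notation chosen for this note and do not appear in the code. Step 3 is safe to prune because every subset of a frequent itemset is itself frequent.

$$
\sigma(X) = \bigl|\{\, T \in D : X \subseteq T \,\}\bigr|, \qquad X \text{ is frequent} \iff \sigma(X) \ge \texttt{min\_support}
$$

$$
\operatorname{conf}(A \Rightarrow B) = \frac{\sigma(A \cup B)}{\sigma(A)}, \qquad \text{keep the rule} \iff \operatorname{conf}(A \Rightarrow B) \ge \texttt{min\_confidence}
$$

With the `datasets` list below, $\sigma(\{I1, I2\}) = 4$ and $\sigma(\{I1, I2, I3\}) = 2$. That gives $\operatorname{conf}(\{I1, I2\} \Rightarrow \{I3\}) = 2/4 = 0.5$, which just meets `min_confidence = 0.5`.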

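```python
import collections

# Transaction database: each inner list is one transaction.
datasets = [
    ["I1", "I2", "I5"],
    ["I2", "I4"],
    ["I2", "I3"],
    ["I1", "I2", "I4"],
    ["I1", "I3"],
    ["I2", "I3"],
    ["I1", "I3"],
    ["I1", "I2", "I3", "I5"],
    ["I1", "I2", "I3"],
]

min_support = 2        # minimum support count (number of transactions)
min_confidence = 0.5   # minimum confidence for a rule to be kept

Lk_all = {}  # every frequent itemset found so far -> its support count
```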

```python
def getdatasetsString():
    # Serialize each transaction as one comma-separated string, e.g. "I1,I2,I5".
    result = []
    for transaction in datasets:
        result.append(",".join(transaction))
    return result
```
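Joining each transaction into a single string is convenient, but it invites substring matching later on. The short sketch below uses a hypothetical item named `I10`, which is not in this dataset, to show why membership should be tested on whole items rather than on the joined string:

```python
# Hypothetical transaction in which one item name is a prefix of another.
transaction = ["I10", "I2"]
joined = ",".join(transaction)  # "I10,I2", the format getdatasetsString produces

# A plain substring test on the joined string reports a false match for "I1".
substring_hit = "I1" in joined

# Splitting back into a set compares whole item names instead.
set_hit = "I1" in set(joined.split(","))

print(substring_hit, set_hit)  # prints: True False
```

With the item names used in this post (`I1` to `I5`) the substring test happens to work, but it breaks as soon as the catalog grows past nine items.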

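```python
def getL1():
    # Count every single item over all transactions and keep the items whose
    # support count reaches min_support (keys inserted in sorted order).
    tmp = []
    L1 = {}
    for transaction in datasets:
        for item in transaction:
            tmp.append(item)
    rs = collections.Counter(tmp)
    for key in sorted(rs):
        if rs[key] >= min_support:
            L1[key] = rs[key]
    return L1
```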
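The same L1 can be computed in one pass with `collections.Counter` and `itertools.chain.from_iterable`. This is a compact alternative sketch, not the post's code; the dataset is copied from above so the block runs on its own:

```python
import collections
from itertools import chain

datasets = [
    ["I1", "I2", "I5"], ["I2", "I4"], ["I2", "I3"],
    ["I1", "I2", "I4"], ["I1", "I3"], ["I2", "I3"],
    ["I1", "I3"], ["I1", "I2", "I3", "I5"], ["I1", "I2", "I3"],
]
min_support = 2

# Flatten all transactions, count each item, then keep the frequent ones.
counts = collections.Counter(chain.from_iterable(datasets))
L1 = {item: n for item, n in sorted(counts.items()) if n >= min_support}
print(L1)  # {'I1': 6, 'I2': 7, 'I3': 6, 'I4': 2, 'I5': 2}
```

Every single item reaches the threshold of 2 here, so L1 keeps all five items.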

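```python
def getLk(Ck):
    # Count the support of each candidate itemset in Ck and keep those that
    # reach min_support.
    Lk = {}
    result = getdatasetsString()
    for items in Ck:
        tmpitems = items.split(",")
        count = 0
        for r in result:
            # Compare whole items, not substrings ("I1" in "I10,I2" is True).
            transaction_items = set(r.split(","))
            if all(item in transaction_items for item in tmpitems):
                count += 1
        if count >= min_support:
            Lk[items] = count
    return Lk
```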
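Support counting is a subset test: an itemset is supported by a transaction when all of its items occur in it. A minimal sketch with frozensets, where `support_count` is a hypothetical helper name introduced here:

```python
datasets = [
    ["I1", "I2", "I5"], ["I2", "I4"], ["I2", "I3"],
    ["I1", "I2", "I4"], ["I1", "I3"], ["I2", "I3"],
    ["I1", "I3"], ["I1", "I2", "I3", "I5"], ["I1", "I2", "I3"],
]

# Store transactions as frozensets so membership is checked per whole item.
transactions = [frozenset(t) for t in datasets]


def support_count(itemset, transactions):
    """Number of transactions containing every item of itemset."""
    items = frozenset(itemset)
    return sum(1 for t in transactions if items <= t)  # <= means "is subset of"


print(support_count(["I1", "I2"], transactions))        # 4
print(support_count(["I1", "I2", "I3"], transactions))  # 2
```

Building the frozensets once avoids re-splitting strings for every candidate, which `getLk` does on each call.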

```python
def getCk1(Lk):
    # Join step: merge two frequent k-itemsets that share their first k-1 items.
    # Prune step: keep the new (k+1)-itemset only if all of its k-subsets are
    # frequent (checked by recounting them with getLk).
    Ck = []
    keys = list(Lk.keys())
    for i, key in enumerate(keys):
        for key1 in keys[i + 1:]:
            key_items = key.split(",")
            key1_items = key1.split(",")
            if key_items[:-1] == key1_items[:-1]:
                # Shared prefix followed by the two last items in sorted order.
                s = ",".join(key_items[:-1] + sorted([key_items[-1], key1_items[-1]]))
                subc = getSubString(s)
                subl = getLk(subc)
                if len(subc) == len(subl):
                    Ck.append(s)
    return Ck
```
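The join and prune steps can also be written on sorted tuples. This sketch (the name `apriori_gen` is an assumption for illustration) swaps the post's recount via `getLk` for a membership test against the frequent k-itemsets. The two are equivalent because Lk already contains every frequent k-itemset, but the membership test avoids rescanning the data. The `L2` below is the frequent 2-itemset list for this post's dataset:

```python
from itertools import combinations


def apriori_gen(frequent_k):
    """Join + prune on a set of sorted, frequent k-tuples; returns sorted (k+1)-tuples."""
    candidates = set()
    for a in frequent_k:
        for b in frequent_k:
            # Join: identical first k-1 items, and a's last item precedes b's.
            if a[:-1] == b[:-1] and a[-1] < b[-1]:
                c = a + (b[-1],)
                # Prune: every k-subset of c must already be frequent.
                if all(sub in frequent_k for sub in combinations(c, len(c) - 1)):
                    candidates.add(c)
    return sorted(candidates)


L2 = {("I1", "I2"), ("I1", "I3"), ("I1", "I5"),
      ("I2", "I3"), ("I2", "I4"), ("I2", "I5")}
print(apriori_gen(L2))  # [('I1', 'I2', 'I3'), ('I1', 'I2', 'I5')]
```

Candidates such as `('I1', 'I3', 'I5')` are joined but then pruned, because `('I3', 'I5')` is not frequent.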

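```python
def getFiallyLk(L1):
    # Level-wise search: build Ck+1 from Lk, count it to get Lk+1, and stop when
    # no frequent (k+1)-itemsets remain. Every level is recorded in Lk_all.
    Lk = L1
    while True:
        Ck1 = getCk1(Lk)
        Lk1 = getLk(Ck1)
        for key in Lk:
            Lk_all[key] = Lk[key]
        if len(Lk1) == 0:
            break
        Lk = Lk1
    return Lk  # the largest frequent itemsets found
```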
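For comparison, the whole level-wise loop fits in one self-contained function. This is a sketch using tuples and frozensets rather than comma-joined strings; `apriori` and its return format (all frequent itemsets, not only the last level) are choices made for this illustration:

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {sorted item tuple: support count} for every frequent itemset."""
    tsets = [frozenset(t) for t in transactions]

    def count(cands):
        # Support count of each candidate; keep only the frequent ones.
        result = {}
        for c in cands:
            n = sum(1 for t in tsets if set(c) <= t)
            if n >= min_support:
                result[c] = n
        return result

    items = sorted({i for t in tsets for i in t})
    level = count([(i,) for i in items])  # L1
    all_frequent = {}
    while level:
        all_frequent.update(level)
        keys = sorted(level)
        # Join step: same prefix, increasing last item.
        cands = [a + (b[-1],) for a in keys for b in keys
                 if a[:-1] == b[:-1] and a[-1] < b[-1]]
        # Prune step: all k-subsets must be frequent at the current level.
        cands = [c for c in cands
                 if all(s in level for s in combinations(c, len(c) - 1))]
        level = count(cands)
    return all_frequent


datasets = [
    ["I1", "I2", "I5"], ["I2", "I4"], ["I2", "I3"],
    ["I1", "I2", "I4"], ["I1", "I3"], ["I2", "I3"],
    ["I1", "I3"], ["I1", "I2", "I3", "I5"], ["I1", "I2", "I3"],
]
frequent = apriori(datasets, 2)
print(max(frequent, key=len))
```

Returning every level at once plays the role of the global `Lk_all` in the post, without relying on module-level state.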

```python
def getSubString(s):
    # Return every subset of itemset s with exactly one item removed,
    # e.g. "I1,I2,I3" -> ["I2,I3", "I1,I3", "I1,I2"].
    items = s.split(",")
    return [",".join(items[:i] + items[i + 1:]) for i in range(len(items))]
```
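The same one-item-removed subsets can be produced with `itertools.combinations`, choosing `len(items) - 1` items at a time. A sketch with a hypothetical name `drop_one_subsets`:

```python
from itertools import combinations


def drop_one_subsets(s):
    """All subsets of the comma-separated itemset s with one item removed."""
    items = s.split(",")
    return [",".join(c) for c in combinations(items, len(items) - 1)]


print(drop_one_subsets("I1,I2,I3"))  # ['I1,I2', 'I1,I3', 'I2,I3']
```

The order differs from `getSubString`, which does not matter in `getCk1`, since it only compares how many subsets survive counting.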

```python
from itertools import combinations


def f(s):
    # All non-empty proper subsets of s (sizes 1 .. len(s) - 1), each as a list,
    # smallest first. These are the candidate left-hand sides of rules.
    return [list(c) for l in range(1, len(s)) for c in combinations(s, l)]
```

```python
def getRule(Lk):
    # For each frequent itemset, try every proper subset A as the left-hand side
    # and keep A => (itemset - A) if count(itemset) / count(A) >= min_confidence.
    # Lk_all must already be filled by getFiallyLk.
    rules = {}
    for Lk_item in Lk:
        items = Lk_item.split(",")
        N = Lk[Lk_item]  # support count of the whole itemset
        for pre_item in f(items):
            back_item = sorted(set(items) - set(pre_item))
            pre_s = ",".join(pre_item)
            back_s = ",".join(back_item)
            N1 = Lk_all[pre_s]  # support count of the left-hand side
            p = N / N1
            if p >= min_confidence:
                rules[pre_s + " => " + back_s] = p
    return rules
```
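Rule generation only needs the support counts of a frequent itemset and its subsets. The sketch below uses the counts for {I1, I2, I5} taken from this post's dataset; `generate_rules` and the tuple-keyed `support` dict are illustrative assumptions rather than the post's data structures:

```python
from itertools import combinations


def generate_rules(itemset, support, min_confidence):
    """Rules A => B from one frequent itemset with confidence sigma(A u B) / sigma(A).

    support maps sorted item tuples to support counts and must contain every
    subset of itemset (true for Apriori output).
    """
    itemset = tuple(sorted(itemset))
    rules = {}
    for size in range(1, len(itemset)):
        for antecedent in combinations(itemset, size):
            consequent = tuple(i for i in itemset if i not in antecedent)
            conf = support[itemset] / support[antecedent]
            if conf >= min_confidence:
                rules[(antecedent, consequent)] = conf
    return rules


# Support counts of {I1, I2, I5} and its subsets in the post's dataset.
support = {
    ("I1",): 6, ("I2",): 7, ("I5",): 2,
    ("I1", "I2"): 4, ("I1", "I5"): 2, ("I2", "I5"): 2,
    ("I1", "I2", "I5"): 2,
}
rules = generate_rules(("I1", "I2", "I5"), support, 0.5)
for (a, b), c in rules.items():
    print(",".join(a), "=>", ",".join(b), ":", c)
```

Single-item left-hand sides such as `I1` (2/6) and `I2` (2/7) fall below 0.5, while `I5 => I1,I2` reaches a confidence of 1.0.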

if __name__ == "__main__":

L1 = getL1()

Lk = getFiallyLk(L1)

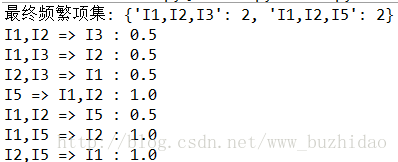

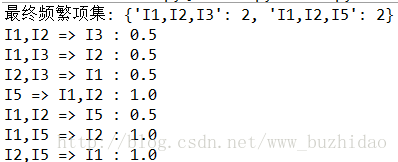

print("最终频繁项集:",Lk)

rules = getRule(Lk)

for rule in rules:

print(rule,":",rules[rule])

'''

C2 = getCk1(L1)

print("C2:",C2)

L2 = getLk(C2)

print("L2:",L2)

C3 = getCk1(L2)

print("C3:",C3)

L3 = getLk(C3)

print("L3:",L3)

'''

最终输出结果截图: