Codis 3.0.3: data sync, high availability, and stress testing

I. Basic information

Three machines: 192.168.30.113, 192.168.30.114, 192.168.30.115

zk1 zk2 zk3

codis-dashboard

codis-fe codis-ha

codis-proxy1 codis-proxy2 codis-proxy3

codis_group1_M(6379) codis_group2_M(6379) codis_group3_M(6379)

codis_group2_S(6380) codis_group3_S(6380) codis_group1_S(6380)

II. Master-replica data sync test

group1:

redis-cli -h 192.168.30.113 -p 6379

auth xxx

192.168.30.113:6379> auth xxxx

OK

192.168.30.113:6379> set a 1

OK

redis-cli -h 192.168.30.115 -p 6380

192.168.30.115:6380> auth xxx

OK

192.168.30.115:6380> get a

"1"

group2:

redis-cli -h 192.168.30.114 -p 6379

auth xxx

192.168.30.114:6379> set b 2

OK

redis-cli -h 192.168.30.113 -p 6380

auth xxx

192.168.30.113:6380> get b

"2"

group3:

redis-cli -h 192.168.30.115 -p 6379

auth xxx

192.168.30.115:6379> set c 3

OK

redis-cli -h 192.168.30.114 -p 6380

auth xxx

192.168.30.114:6380> get c

"3"
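
The three manual checks above can also be scripted. The following is a minimal sketch, not part of the original test: it writes a probe key to each master and reads it back from the matching replica, using the host and port pairs from the transcripts above. `REDIS_PASS` is a hypothetical environment variable holding the password, and `pairs`, `cli_args`, and `check_sync` are illustrative names.

```shell
# Master/replica pairs taken from the transcripts: "group master replica".
pairs() {
  cat <<'EOF'
group1 192.168.30.113:6379 192.168.30.115:6380
group2 192.168.30.114:6379 192.168.30.113:6380
group3 192.168.30.115:6379 192.168.30.114:6380
EOF
}

# Turn "host:port" into redis-cli connection arguments.
cli_args() {
  printf -- '-h %s -p %s' "${1%:*}" "${1#*:}"
}

# Set a probe key on each master, then read it back from the replica.
# REDIS_PASS is an assumed environment variable, not something Codis defines.
check_sync() {
  pairs | while read -r group master replica; do
    key="synccheck:$group"
    value=$(date +%s)
    # cli_args output is left unquoted on purpose so it splits into words.
    redis-cli $(cli_args "$master") -a "$REDIS_PASS" set "$key" "$value" \
      >/dev/null 2>&1 </dev/null
    sleep 1  # replication is asynchronous, so allow a short delay
    got=$(redis-cli $(cli_args "$replica") -a "$REDIS_PASS" get "$key" \
      2>/dev/null </dev/null)
    if [ "$got" = "$value" ]; then
      echo "$group OK"
    else
      echo "$group MISMATCH"
    fi
  done
}
```

Calling `check_sync` with `REDIS_PASS` exported prints one line per group. The one-second delay is an arbitrary choice for this sketch.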

III. High-availability test

1. Take proxy1 (192.168.30.113) offline, then verify that redis is still reachable through port 19000 on proxy2 (192.168.30.114)

Stop proxy1:

[codisapp@mvxl2579 codis]$ chmod u+x *

[codisapp@mvxl2579 codis]$ ps -ef|grep proxy

codisapp 10479 8345 0 13:36 pts/0 00:00:00 grep proxy

codisapp 30898 1 0 May31 ? 00:08:46 /codisapp/svr/codis/bin/codis-proxy --ncpu=4 --config=/codisapp/conf/codis/proxy.toml --log=/codisapp/logs/codis/proxy.log --log-level=WARN

[codisapp@mvxl2579 codis]$ ./stop_codis_proxy.sh

[codisapp@mvxl2579 codis]$ ps -ef|grep proxy

codisapp 10520 8345 0 13:36 pts/0 00:00:00 grep proxy

[codisapp@mvxl2580 ~]$ redis-cli -h 192.168.30.114 -p 19000

192.168.30.114:19000> auth xxxx

OK

192.168.30.114:19000> set d 4

OK

192.168.30.114:19000> get d

"4"

2. With proxy1 offline, also stop the 6379 and 6380 instances on codis server 1, then verify that redis is reachable through port 19000 on proxy3

Next, stop the redis instances on server 1:

[codisapp@mvxl2579 codis]$ ps -ef|grep codis-server

codisapp 10584 8345 0 13:44 pts/0 00:00:00 grep codis-server

codisapp 28919 1 0 May31 ? 00:01:28 /codisapp/svr/codis/bin/codis-server *:6379

codisapp 29151 1 0 May31 ? 00:01:18 /codisapp/svr/codis/bin/codis-server *:6380

[codisapp@mvxl2579 codis]$ ./stop_codis_server.sh

[codisapp@mvxl2579 codis]$ ps -ef|grep codis-server

codisapp 10626 8345 0 13:44 pts/0 00:00:00 grep codis-server

Access through proxy3 works normally:

[codisapp@mvxl2580 ~]$ redis-cli -h 192.168.30.115 -p 19000

192.168.30.115:19000> auth xxxxxxx

OK

192.168.30.115:19000> set e 5

OK

192.168.30.115:19000> get e

"5"
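
The two proxy checks above can be repeated quickly with a small loop. This is a sketch under assumptions: every proxy listens on port 19000 as in the transcripts, the password is in a hypothetical `REDIS_PASS` environment variable, and `proxy_state` and `probe_proxies` are illustrative names.

```shell
PROXIES="192.168.30.113 192.168.30.114 192.168.30.115"

# Map the reply to PING onto a state: "up" only for an exact PONG.
proxy_state() {
  if [ "$1" = "PONG" ]; then
    echo up
  else
    echo down
  fi
}

# Send PING to every proxy on port 19000 and print its state.
# REDIS_PASS is an assumed environment variable holding the password.
probe_proxies() {
  for host in $PROXIES; do
    reply=$(redis-cli -h "$host" -p 19000 -a "$REDIS_PASS" ping \
      2>/dev/null </dev/null)
    echo "$host $(proxy_state "$reply")"
  done
}
```

With proxy1 stopped as in the test above, `probe_proxies` would be expected to report 192.168.30.113 as down and the other two proxies as up.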

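3. HA automatic master-replica failover test

Kill the 6379 process on server group 3 (192.168.30.115) and check whether HA performs the master-replica switch automatically, and what has to be done once the original master is started again.

On host mvxl2581, kill the process listening on 6379: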

[codisapp@mvxl2581 codis]$ ps -ef|grep codis-server

codisapp 813 454 0 15:58 pts/0 00:00:00 grep codis-server

codisapp 21682 1 0 May31 ? 00:01:17 /codisapp/svr/codis/bin/codis-server *:6379

codisapp 21701 1 0 May31 ? 00:01:14 /codisapp/svr/codis/bin/codis-server *:6380

[codisapp@mvxl2581 codis]$ kill 21682

[codisapp@mvxl2581 codis]$ ps -ef|grep codis-server

codisapp 849 454 0 15:58 pts/0 00:00:00 grep codis-server

codisapp 21701 1 0 May31 ? 00:01:14 /codisapp/svr/codis/bin/codis-server *:6380

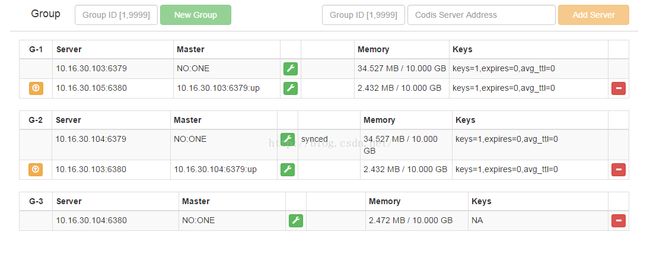

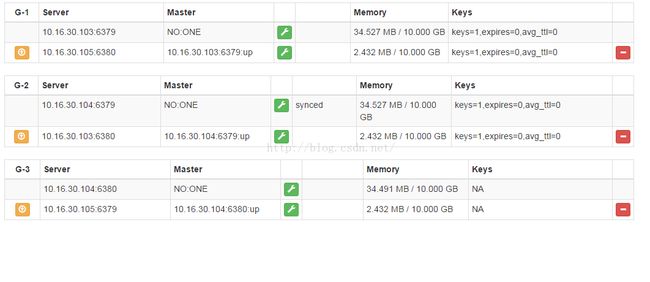

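The dashboard shows that the replica in group 3 has been automatically promoted to master, and that the original master is offline and has been removed from the group.

To bring the original master back, first start its redis service, then add it to the group again, and finally click the small wrench icon to synchronize it: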

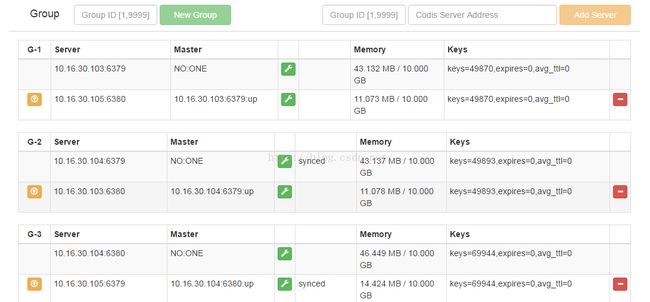
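
The result of the failover can also be checked from the command line by reading the `role` field of `INFO replication` on both instances of group 3. The following is a sketch, not part of the original test; `REDIS_PASS` is a hypothetical environment variable, and `parse_role` and `show_roles` are illustrative names.

```shell
# Extract the value of the "role" field from INFO replication output on stdin.
# INFO lines end in CRLF, so carriage returns are stripped first.
parse_role() {
  tr -d '\r' | awk -F: '$1 == "role" { print $2 }'
}

# Print the current role of both group 3 instances.
# REDIS_PASS is an assumed environment variable holding the password.
show_roles() {
  for addr in 192.168.30.115:6379 192.168.30.114:6380; do
    role=$(redis-cli -h "${addr%:*}" -p "${addr#*:}" -a "$REDIS_PASS" \
      info replication 2>/dev/null </dev/null | parse_role)
    echo "$addr ${role:-unreachable}"
  done
}
```

Right after the kill, `show_roles` would be expected to print `master` for 192.168.30.114:6380 and `unreachable` for 192.168.30.115:6379. Once the old master has been restarted, added back, and synchronized, it should report `slave`.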

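IV. Master-replica sync test with a large data volume

Insert 200,000 keys: the loop runs 50,000 times and writes 4 keys per iteration. Change INSTANCE_NAME before each test run.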

vim redis-key.sh

#!/bin/bash

# Write through the codis-proxy on 192.168.30.113:19000 (password redacted).

REDISCLT="redis-cli -h 192.168.30.113 -p 19000 -a xxxxxxx -n 0 set"

ID=1

while [ "$ID" -le 50000 ]

do

INSTANCE_NAME="i-2-$ID-VM"

UUID=$(cat /proc/sys/kernel/random/uuid)

CREATED=$(date "+%Y-%m-%d %H:%M:%S")

# $REDISCLT is left unquoted on purpose so that it splits into command and arguments.

$REDISCLT "vm_instance:$ID:instance_name" "$INSTANCE_NAME"

$REDISCLT "vm_instance:$ID:uuid" "$UUID"

$REDISCLT "vm_instance:$ID:created" "$CREATED"

$REDISCLT "vm_instance:$INSTANCE_NAME:id" "$ID"

ID=$((ID + 1))

done

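After running the script above, the dashboard shows that master-replica data sync is normal in every group and that QPS reached more than 800.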
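
The script above starts a new redis-cli process for every SET, which probably limits the write rate more than Codis itself does. A possible alternative, sketched here under assumptions, is to generate all commands as text and feed them to a single redis-cli connection on standard input. `gen_commands` is an illustrative name, and this variant was not part of the original test.

```shell
# Emit the SET commands for IDs 1..N, four per ID, one command per line.
gen_commands() {
  n=$1
  id=1
  # One timestamp for the whole run avoids forking `date` per iteration.
  created=$(date "+%Y-%m-%d %H:%M:%S")
  while [ "$id" -le "$n" ]; do
    name="i-2-$id-VM"
    # Linux-specific: each read of this file returns a fresh random UUID.
    read -r uuid < /proc/sys/kernel/random/uuid
    echo "SET vm_instance:$id:instance_name $name"
    echo "SET vm_instance:$id:uuid $uuid"
    # The timestamp contains a space, so it is quoted for redis-cli.
    echo "SET vm_instance:$id:created \"$created\""
    echo "SET vm_instance:$name:id $id"
    id=$((id + 1))
  done
}
```

A run equivalent to the original would be `gen_commands 50000 | redis-cli -h 192.168.30.113 -p 19000 -a xxxxxxx > /dev/null`. How much faster this is through codis-proxy has not been measured here.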

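V. Stress test

1. /codisapp/svr/codis/bin/redis-benchmark -h 192.168.30.113 -p 19000 -a "xxxxxx" -c 100 -n 100000

100 concurrent connections and 100,000 requests, measuring the performance of the codis-proxy at 192.168.30.113, port 19000.

The output below shows that 100% of requests completed within 1 ms for PING_INLINE and within 20 ms for every other test except LRANGE_300, LRANGE_500, and LRANGE_600, which took up to 53 ms, 61 ms, and 74 ms.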

[codisapp@mvxl2579 codis]$ /codisapp/svr/codis/bin/redis-benchmark -h 192.168.30.113 -p 19000 -a "xxxxxxx" -c 100 -n 100000

====== PING_INLINE ======

100000 requests completed in 0.83 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 1 milliseconds

120627.27 requests per second

====== PING_BULK ======

100000 requests completed in 0.79 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 20 milliseconds

127064.80 requests per second

====== SET ======

100000 requests completed in 1.08 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 14 milliseconds

92506.94 requests per second

====== GET ======

100000 requests completed in 1.02 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 15 milliseconds

97560.98 requests per second

====== INCR ======

100000 requests completed in 0.80 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 19 milliseconds

124843.95 requests per second

====== LPUSH ======

100000 requests completed in 0.81 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 18 milliseconds

123762.38 requests per second

====== LPOP ======

100000 requests completed in 0.82 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 6 milliseconds

121212.12 requests per second

====== SADD ======

100000 requests completed in 0.93 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 6 milliseconds

108108.11 requests per second

====== SPOP ======

100000 requests completed in 0.91 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 16 milliseconds

110011.00 requests per second

====== LPUSH (needed to benchmark LRANGE) ======

100000 requests completed in 0.88 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 15 milliseconds

114155.25 requests per second

====== LRANGE_100 (first 100 elements) ======

100000 requests completed in 3.76 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 19 milliseconds

26588.67 requests per second

====== LRANGE_300 (first 300 elements) ======

100000 requests completed in 11.85 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 53 milliseconds

8438.82 requests per second

====== LRANGE_500 (first 450 elements) ======

100000 requests completed in 17.56 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 61 milliseconds

5693.46 requests per second

====== LRANGE_600 (first 600 elements) ======

100000 requests completed in 22.23 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 74 milliseconds

4499.44 requests per second

====== MSET (10 keys) ======

100000 requests completed in 3.24 seconds

100 parallel clients

3 bytes payload

keep alive: 1

100.00% <= 20 milliseconds

30873.73 requests per second

2. /codisapp/svr/codis/bin/redis-benchmark -h 192.168.30.113 -p 19000 -a "xxxxxxx" -q -d 100

Test read and write performance with a 100-byte payload.

[codisapp@mvxl2579 codis]$ /codisapp/svr/codis/bin/redis-benchmark -h 192.168.30.113 -p 19000 -a "xxxxxxx" -q -d 100

PING_INLINE: 124223.60 requests per second

PING_BULK: 129533.68 requests per second

SET: 59772.86 requests per second

GET: 68446.27 requests per second

INCR: 104166.67 requests per second

LPUSH: 121359.23 requests per second

LPOP: 125156.45 requests per second

SADD: 78926.60 requests per second

SPOP: 79113.92 requests per second

LPUSH (needed to benchmark LRANGE): 125628.14 requests per second

LRANGE_100 (first 100 elements): 19149.75 requests per second

LRANGE_300 (first 300 elements): 6358.90 requests per second

LRANGE_500 (first 450 elements): 4207.87 requests per second

LRANGE_600 (first 600 elements): 3238.34 requests per second

MSET (10 keys): 23430.18 requests per second

3. /codisapp/svr/codis/bin/redis-benchmark -h 192.168.30.113 -p 19000 -a "xxxxxxx" -t set,lpush -n 100000 -q

Benchmark only a subset of operations, here SET and LPUSH.

[codisapp@mvxl2579 codis]$ /codisapp/svr/codis/bin/redis-benchmark -h 192.168.30.113 -p 19000 -a "xxxxxxx" -t set,lpush -n 100000 -q

SET: 65231.57 requests per second

LPUSH: 119189.52 requests per second
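
To compare runs such as the two `-q` outputs above, the quiet output can be reduced to `name,requests_per_second` pairs. The filter below is a sketch; `bench_to_csv` is an illustrative name. It assumes the quiet output may contain carriage-return progress updates, which are dropped.

```shell
# Convert `redis-benchmark -q` output on stdin to "test,requests_per_second".
# Carriage returns become newlines so that only the final
# "... requests per second" line of each test is kept.
bench_to_csv() {
  tr '\r' '\n' |
    awk -F': ' '/requests per second/ { split($2, f, " "); print $1 "," f[1] }'
}
```

For example, piping the third benchmark command above into `bench_to_csv > set_lpush.csv` would save one line each for SET and LPUSH. The file name is arbitrary.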