Flume 1.9.0 Developer Guide

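Overview

Architecture

Data flow model

Reliability

Building Flume

Getting the source

Compile/test Flume

Updating the Protocol Buffers version

Developing custom components

Client

Client SDK

RPC client interface

RPC clients - Avro and Thrift

Secure RPC client - Thrift

Failover Client

LoadBalancing RPC client

Embedded agent

Transaction interface

Sink

Source

Channel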

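Overview

Apache Flume is a distributed, reliable, and available system for efficiently collecting, aggregating, and moving large amounts of log data from many different sources to a centralized data store.

Apache Flume is a top-level project of the Apache Software Foundation. There are currently two release code lines available, versions 0.9.x and 1.x. This documentation applies to the 1.x code line. For the 0.9.x code line, please see the Flume 0.9.x Developer Guide.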

Architecture

Data flow model

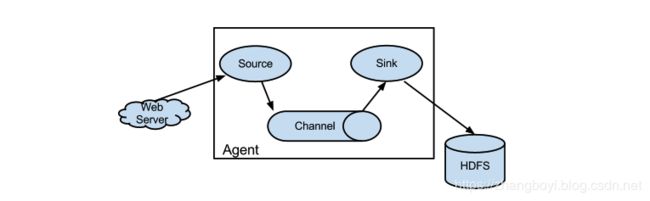

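An Event is a unit of data that flows through a Flume agent. The Event flows from Source to Channel to Sink, and is represented by an implementation of the Event interface. An Event carries a payload (a byte array) that is accompanied by an optional set of headers (string attributes). A Flume agent is a process (JVM) that hosts the components that allow Events to flow from an external source to an external destination.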
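To make the Event shape concrete, here is a minimal sketch of a payload-plus-headers value type. SketchEvent is a hypothetical name used only for illustration; real code would use Flume's own Event implementations (for example through EventBuilder.withBody(), as in the RPC client example below).

```java
import java.nio.charset.StandardCharsets;
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical, simplified stand-in for an Event: a byte-array payload plus
// an optional set of string headers. This is not Flume's Event interface.
final class SketchEvent {
    private final byte[] body;
    private final Map<String, String> headers;

    SketchEvent(byte[] body, Map<String, String> headers) {
        this.body = Objects.requireNonNull(body, "body").clone(); // defensive copy
        Map<String, String> copy = new HashMap<>();
        if (headers != null) {
            copy.putAll(headers); // headers are optional
        }
        this.headers = Collections.unmodifiableMap(copy);
    }

    // Convenience factory: UTF-8 encoded text body, no headers.
    static SketchEvent ofText(String text) {
        return new SketchEvent(text.getBytes(StandardCharsets.UTF_8), null);
    }

    byte[] getBody() {
        return body.clone();
    }

    Map<String, String> getHeaders() {
        return headers;
    }
}
```

For example, SketchEvent.ofText("Hello Flume!") holds a 12-byte body and an empty header map.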

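A Source consumes Events delivered to it by an external source, such as a web server, in a format that the Source recognizes. For example, an Avro Source can be used to receive Avro Events from clients or from other Flume agents in the flow. When a Source receives an Event, it stores it into one or more Channels. The Channel is a passive store that holds the Event until that Event is consumed by a Sink. One type of Channel available in Flume is the FileChannel, which uses the local filesystem as its backing store. A Sink is responsible for removing an Event from the Channel and either putting it into an external repository like HDFS (in the case of the HDFSEventSink) or forwarding it to the Source at the next hop of the flow. The Source and Sink within a given agent run asynchronously, with the Events staged in the Channel.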
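The decoupling described above can be sketched with two threads and a bounded queue standing in for the Channel. This is a hypothetical toy (ToyAgent and its end-of-input sentinel are illustrative names), not how Flume implements Sources, Channels, or Sinks; it only shows that the Source and Sink never call each other and that the Channel absorbs differences in their pace.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical toy agent: a Source thread and a Sink thread that never call
// each other. A bounded queue plays the Channel that stages events between
// them. This is an illustration only, not Flume's implementation.
final class ToyAgent {
    // Identity-compared sentinel that marks the end of the input.
    private static final String END = new String("__END__");

    static List<String> run(List<String> input, int channelCapacity) {
        BlockingQueue<String> channel = new LinkedBlockingQueue<>(channelCapacity);
        List<String> delivered = new ArrayList<>();

        Thread source = new Thread(() -> {
            try {
                for (String event : input) {
                    channel.put(event); // blocks while the Channel is full
                }
                channel.put(END);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        Thread sink = new Thread(() -> {
            try {
                while (true) {
                    String event = channel.take(); // blocks while the Channel is empty
                    if (event == END) {
                        break;
                    }
                    delivered.add(event); // stand-in for HDFS or the next hop
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        source.start();
        sink.start();
        try {
            source.join();
            sink.join(); // join() makes the sink thread's writes visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for the toy agent", e);
        }
        return delivered;
    }
}
```

With a channel capacity of 2, running five events through still returns all five in their original order, because the Source thread simply blocks whenever the Channel is full.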

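Reliability

An Event is staged in a Flume agent's Channel. It is then the Sink's responsibility to deliver the Event to the next agent or to the terminal repository (like HDFS) in the flow. The Sink removes an Event from the Channel only after the Event has been stored into the Channel of the next agent or into the terminal repository. This is how the single-hop message delivery semantics in Flume provide end-to-end reliability of the flow. Flume uses a transactional approach to guarantee the reliable delivery of Events. Sources and Sinks encapsulate the storage and retrieval of Events in a Transaction provided by the Channel. This ensures that a set of Events is reliably passed from point to point in the flow. In the case of a multi-hop flow, the Sink of the previous hop and the Source of the next hop both have their Transactions open, to ensure that the Event data is safely stored in the Channel of the next hop.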
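The single-hop rule (remove an Event from this Channel only after the next hop has stored it) can be illustrated with a toy transactional channel. ToyTxChannel and ToyHop are hypothetical, single-threaded illustrations, not Flume classes; what matters is the commit order: downstream first, upstream second, and a rollback of both on failure.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical single-threaded toy channel with one transaction at a time.
// It only illustrates commit ordering across a hop; Flume's real channels
// (MemoryChannel, FileChannel) work differently.
final class ToyTxChannel {
    private final Deque<String> stored = new ArrayDeque<>();
    private final List<String> pendingPuts = new ArrayList<>();
    private final Deque<String> pendingTakes = new ArrayDeque<>();
    private boolean failNextCommit; // lets a caller simulate a failing store

    void put(String event) {
        pendingPuts.add(event); // not visible until commit()
    }

    String take() {
        String event = stored.pollFirst();
        if (event != null) {
            pendingTakes.push(event); // remembered so rollback() can restore it
        }
        return event;
    }

    void commit() {
        if (failNextCommit) {
            failNextCommit = false;
            throw new IllegalStateException("simulated commit failure");
        }
        stored.addAll(pendingPuts); // puts become visible
        pendingPuts.clear();
        pendingTakes.clear();       // takes become permanent
    }

    void rollback() {
        pendingPuts.clear();
        while (!pendingTakes.isEmpty()) {
            stored.addFirst(pendingTakes.pop()); // restores the original order
        }
    }

    void simulateCommitFailure() {
        failNextCommit = true;
    }

    int size() {
        return stored.size();
    }
}

final class ToyHop {
    // Moves up to batchSize events from upstream to downstream. Returns the
    // number moved, or 0 if the hop failed and everything was rolled back.
    static int transfer(ToyTxChannel upstream, ToyTxChannel downstream, int batchSize) {
        int moved = 0;
        try {
            for (int i = 0; i < batchSize; i++) {
                String event = upstream.take();
                if (event == null) {
                    break;
                }
                downstream.put(event);
                moved++;
            }
            downstream.commit(); // 1. store the events at the next hop
            upstream.commit();   // 2. only then remove them here
            return moved;
        } catch (RuntimeException e) {
            downstream.rollback();
            upstream.rollback(); // events go back to the upstream channel
            return 0;
        }
    }
}
```

If the downstream commit fails, the events stay in the upstream channel and can be retried. If the upstream commit failed after the downstream one succeeded, the same events could be delivered twice, so in this toy the ordering gives at-least-once rather than exactly-once delivery.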

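Building Flume

Getting the source

Check out the code using Git from the Flume git repository.

Flume 1.x development happens under the branch "trunk", so this command line can be used: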

git clone https://git-wip-us.apache.org/repos/asf/flume.git

Compile/test Flume

The Flume build is mavenized. You can compile Flume using the standard Maven commands:

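- Compile only: mvn clean compile

- Compile and run unit tests: mvn clean test

- Run individual test(s): mvn clean test -Dtest=<Test1>,<Test2>,... -DfailIfNoTests=false

- Create a tarball package: mvn clean install

- Create a tarball package (skipping unit tests): mvn clean install -DskipTests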

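Please note that the Flume build requires the Google Protocol Buffers compiler to be on the path. You can download and install it by following the Protocol Buffers installation instructions.

Updating the Protocol Buffers version

The File Channel depends on Protocol Buffers. When updating the version of Protocol Buffers used by Flume, you must regenerate the data access classes with the protoc compiler that ships with Protocol Buffers, as follows.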

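- Install the desired version of Protocol Buffers on your local machine

- Update the version of Protocol Buffers in pom.xml

- Generate the new Protocol Buffers data access classes in Flume: cd flume-ng-channels/flume-file-channel; mvn -P compile-proto clean package -DskipTests

- Add the Apache license header to any generated files that are missing it

- Rebuild and test Flume: cd ../..; mvn clean install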

Developing custom components

Client

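The client operates at the point of origin of events and delivers them to a Flume agent. Clients typically run in the process space of the application whose data they are consuming. Flume currently supports Avro, log4j, syslog, and HTTP POST (with a JSON body) as ways to transfer data from an external source. Additionally, there is an ExecSource that can consume the output of a local process as input to Flume.

It is quite possible to have a use case where these existing options are not sufficient. In that case you can build a custom mechanism to send data to Flume. There are two ways of achieving this. The first option is to create a custom client that communicates with one of Flume's existing Sources, such as AvroSource or SyslogTcpSource. Here the client should convert its data into messages understood by these Flume Sources. The other option is to write a custom Flume Source that talks directly with your existing client application using some IPC or RPC protocol, and then converts the client data into Flume Events to be sent downstream. Note that all events stored within the Channel of a Flume agent must exist as Flume Events.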

Client SDK

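Though Flume contains a number of built-in mechanisms (i.e. Sources) to ingest data, often one wants the ability to communicate with Flume directly from a custom application. The Flume Client SDK is a library that enables applications to connect to Flume and send data into Flume's data flow over RPC.

RPC client interface

Flume's implementations of the RpcClient interface encapsulate the RPC mechanisms supported by Flume. The user's application can simply call the Flume Client SDK's append(Event) or appendBatch(List<Event>) to send data, without worrying about the details of the underlying message exchange. The user can provide the required Event argument by directly implementing the Event interface, by using a convenience implementation such as the SimpleEvent class, or by using EventBuilder's overloaded withBody() static helper methods.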
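appendBatch(List<Event>) sends several events per call. The helper below is a hypothetical sketch of splitting a larger list into batches of at most batchSize (100 is the default batch-size listed for the clients in this guide) before handing each one to a sender. Batcher and BatchSender are illustrative names, not Flume API; in real code the sender could wrap RpcClient.appendBatch.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: splits events into chunks of at most batchSize and
// passes each chunk to a caller-supplied sender. Not part of Flume.
final class Batcher {
    interface BatchSender<T> {
        void send(List<T> batch);
    }

    // Returns the number of batches sent.
    static <T> int sendInBatches(List<T> events, int batchSize, BatchSender<T> sender) {
        if (batchSize < 1) {
            throw new IllegalArgumentException("batchSize must be >= 1");
        }
        int calls = 0;
        for (int from = 0; from < events.size(); from += batchSize) {
            int to = Math.min(from + batchSize, events.size());
            // Copy the sublist so the sender may safely keep a reference to it.
            sender.send(new ArrayList<>(events.subList(from, to)));
            calls++;
        }
        return calls;
    }
}
```

With 7 events and a batch size of 3, the sender is called three times, with batches of 3, 3, and 1 events.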

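RPC clients - Avro and Thrift

As of Flume 1.4.0, Avro is the default RPC protocol. The NettyAvroRpcClient and ThriftRpcClient implement the RpcClient interface. The client needs to create this object with the host and port of the target Flume agent, and can then use the RpcClient to send data into the agent. The following example shows how to use the Flume Client SDK API within a user's data-generating application: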

import org.apache.flume.Event;
import org.apache.flume.EventDeliveryException;
import org.apache.flume.api.RpcClient;
import org.apache.flume.api.RpcClientFactory;
import org.apache.flume.event.EventBuilder;
import java.nio.charset.Charset;

public class MyApp {
  public static void main(String[] args) {
    MyRpcClientFacade client = new MyRpcClientFacade();
    // Initialize client with the remote Flume agent's host and port
    client.init("host.example.org", 41414);

    // Send 10 events to the remote Flume agent. That agent should be
    // configured to listen with an AvroSource.
    String sampleData = "Hello Flume!";
    for (int i = 0; i < 10; i++) {
      client.sendDataToFlume(sampleData);
    }

    client.cleanUp();
  }
}

class MyRpcClientFacade {
  private RpcClient client;
  private String hostname;
  private int port;

  public void init(String hostname, int port) {
    // Setup the RPC connection
    this.hostname = hostname;
    this.port = port;
    this.client = RpcClientFactory.getDefaultInstance(hostname, port);
    // Use the following method to create a thrift client (instead of the above line):
    // this.client = RpcClientFactory.getThriftInstance(hostname, port);
  }

  public void sendDataToFlume(String data) {
    // Create a Flume Event object that encapsulates the sample data
    Event event = EventBuilder.withBody(data, Charset.forName("UTF-8"));

    // Send the event
    try {
      client.append(event);
    } catch (EventDeliveryException e) {
      // clean up and recreate the client
      // (this example does not resend the event that failed)
      client.close();
      client = null;
      client = RpcClientFactory.getDefaultInstance(hostname, port);
      // Use the following method to create a thrift client (instead of the above line):
      // this.client = RpcClientFactory.getThriftInstance(hostname, port);
    }
  }

  public void cleanUp() {
    // Close the RPC connection
    client.close();
  }
}

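The remote Flume agent needs to have an AvroSource (or a ThriftSource if you are using a Thrift client) listening on some port. Below is an example Flume agent configuration that waits for a connection from MyApp: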

a1.channels = c1
a1.sources = r1
a1.sinks = k1

a1.channels.c1.type = memory

a1.sources.r1.channels = c1
a1.sources.r1.type = avro
# For using a thrift source set the following instead of the above line.
# a1.sources.r1.type = thrift
a1.sources.r1.bind = 0.0.0.0
a1.sources.r1.port = 41414

a1.sinks.k1.channel = c1
a1.sinks.k1.type = logger

For more flexibility, the default Flume client implementations (NettyAvroRpcClient and ThriftRpcClient) can be configured with these properties:

client.type = default (for avro) or thrift (for thrift)

hosts = h1                           # default client accepts only 1 host
                                     # (additional hosts will be ignored)

hosts.h1 = host1.example.org:41414   # host and port must both be specified
                                     # (neither has a default)

batch-size = 100                     # Must be >=1 (default: 100)

connect-timeout = 20000              # Must be >=1000 (default: 20000)

request-timeout = 20000              # Must be >=1000 (default: 20000)
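The constraints in the comments above can be expressed as a small validation routine. The sketch below is hypothetical (DefaultClientConfig is not a Flume class): it takes only the first alias in hosts, requires host:port, applies the listed defaults, and rejects out-of-range values outright. Flume's own handling of out-of-range values is not described here and may differ, for example by falling back to defaults.

```java
import java.util.Properties;

// Hypothetical validator for the default/thrift client properties listed
// above. It illustrates the documented rules; it is not Flume's parser.
final class DefaultClientConfig {
    final String host;
    final int port;
    final int batchSize;
    final long connectTimeoutMs;
    final long requestTimeoutMs;

    private DefaultClientConfig(String host, int port, int batchSize,
                                long connectTimeoutMs, long requestTimeoutMs) {
        this.host = host;
        this.port = port;
        this.batchSize = batchSize;
        this.connectTimeoutMs = connectTimeoutMs;
        this.requestTimeoutMs = requestTimeoutMs;
    }

    static DefaultClientConfig parse(Properties p) {
        String hosts = p.getProperty("hosts", "").trim();
        if (hosts.isEmpty()) {
            throw new IllegalArgumentException("hosts must name at least one alias");
        }
        String alias = hosts.split("\\s+")[0]; // additional aliases are ignored
        String hostAndPort = p.getProperty("hosts." + alias);
        int colon = hostAndPort == null ? -1 : hostAndPort.lastIndexOf(':');
        if (colon <= 0) {
            throw new IllegalArgumentException("hosts." + alias + " must be host:port");
        }
        String host = hostAndPort.substring(0, colon);
        int port = Integer.parseInt(hostAndPort.substring(colon + 1));

        int batchSize = Integer.parseInt(p.getProperty("batch-size", "100"));
        long connect = Long.parseLong(p.getProperty("connect-timeout", "20000"));
        long request = Long.parseLong(p.getProperty("request-timeout", "20000"));
        if (batchSize < 1) {
            throw new IllegalArgumentException("batch-size must be >= 1");
        }
        if (connect < 1000 || request < 1000) {
            throw new IllegalArgumentException("timeouts must be >= 1000 ms");
        }
        return new DefaultClientConfig(host, port, batchSize, connect, request);
    }
}
```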

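Secure RPC client - Thrift

As of Flume 1.6.0, the Thrift Source and Sink support Kerberos-based authentication. The client needs to use the getThriftInstance method of SecureRpcClientFactory to get hold of a SecureThriftRpcClient. SecureThriftRpcClient extends ThriftRpcClient, which implements the RpcClient interface. The Kerberos authentication module resides in the flume-ng-auth module, which must be on the classpath when SecureRpcClientFactory is used. Both the client principal and the client keytab should be passed in as parameters through the properties; they reflect the credentials the client uses to authenticate against the Kerberos KDC. In addition, the server principal of the destination ThriftSource to which this client connects should also be provided. The following example shows how to use SecureRpcClientFactory within a user's data-generating application: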

import org.apache.flume.Event;
import org.apache.flume.EventDeliveryException;
import org.apache.flume.event.EventBuilder;
import org.apache.flume.api.SecureRpcClientFactory;
import org.apache.flume.api.RpcClientConfigurationConstants;
import org.apache.flume.api.RpcClient;
import java.nio.charset.Charset;
import java.util.Properties;

public class MyApp {
  public static void main(String[] args) {
    MySecureRpcClientFacade client = new MySecureRpcClientFacade();
    // Initialize client with the remote Flume agent's host, port
    Properties props = new Properties();
    props.setProperty(RpcClientConfigurationConstants.CONFIG_CLIENT_TYPE, "thrift");
    props.setProperty("hosts", "h1");
    props.setProperty("hosts.h1", "client.example.org" + ":" + String.valueOf(41414));

    // Initialize client with the kerberos authentication related properties
    props.setProperty("kerberos", "true");
    props.setProperty("client-principal", "flumeclient/client.example.org@EXAMPLE.ORG");
    props.setProperty("client-keytab", "/tmp/flumeclient.keytab");
    props.setProperty("server-principal", "flume/server.example.org@EXAMPLE.ORG");
    client.init(props);

    // Send 10 events to the remote Flume agent. That agent should be
    // configured to listen with a ThriftSource in kerberos mode.
    String sampleData = "Hello Flume!";
    for (int i = 0; i < 10; i++) {
      client.sendDataToFlume(sampleData);
    }

    client.cleanUp();
  }
}

class MySecureRpcClientFacade {
  private RpcClient client;
  private Properties properties;

  public void init(Properties properties) {
    // Setup the RPC connection
    this.properties = properties;
    // Create the SecureThriftRpcClient instance by using SecureRpcClientFactory
    this.client = SecureRpcClientFactory.getThriftInstance(properties);
  }

  public void sendDataToFlume(String data) {
    // Create a Flume Event object that encapsulates the sample data
    Event event = EventBuilder.withBody(data, Charset.forName("UTF-8"));

    // Send the event
    try {
      client.append(event);
    } catch (EventDeliveryException e) {
      // clean up and recreate the client
      // (this example does not resend the event that failed)
      client.close();
      client = null;
      client = SecureRpcClientFactory.getThriftInstance(properties);
    }
  }

  public void cleanUp() {
    // Close the RPC connection
    client.close();
  }
}

The remote ThriftSource should be started in Kerberos mode. Below is an example Flume agent configuration that waits for a connection from MyApp:

a1.channels = c1
a1.sources = r1
a1.sinks = k1

a1.channels.c1.type = memory

a1.sources.r1.channels = c1
a1.sources.r1.type = thrift
a1.sources.r1.bind = 0.0.0.0
a1.sources.r1.port = 41414
a1.sources.r1.kerberos = true
a1.sources.r1.agent-principal = flume/server.example.org@EXAMPLE.ORG
a1.sources.r1.agent-keytab = /tmp/flume.keytab

a1.sinks.k1.channel = c1
a1.sinks.k1.type = logger

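Failover Client

This class wraps the default Avro RPC client to provide failover handling capability to clients. It takes a whitespace-separated list of <host>:<port> pairs representing the Flume agents that make up a failover group. The Failover RPC Client currently does not support Thrift. If there is a communication error with the currently selected host (i.e. agent), the failover client automatically fails over to the next host in the list. For example: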

// Setup properties for the failover
Properties props = new Properties();
props.put("client.type", "default_failover");

// List of hosts (space-separated list of user-chosen host aliases)
props.put("hosts", "h1 h2 h3");

// host/port pair for each host alias
String host1 = "host1.example.org:41414";
String host2 = "host2.example.org:41414";
String host3 = "host3.example.org:41414";
props.put("hosts.h1", host1);
props.put("hosts.h2", host2);
props.put("hosts.h3", host3);

// create the client with failover properties
RpcClient client = RpcClientFactory.getInstance(props);

For more flexibility, the failover Flume client implementation (FailoverRpcClient) can be configured with these properties:

client.type = default_failover

hosts = h1 h2 h3                     # at least one is required, but 2 or
                                     # more makes better sense

hosts.h1 = host1.example.org:41414

hosts.h2 = host2.example.org:41414

hosts.h3 = host3.example.org:41414

max-attempts = 3                     # Must be >=0 (default: number of hosts
                                     # specified, 3 in this case). A '0'
                                     # value doesn't make much sense because
                                     # it will just cause an append call to
                                     # immediately fail. A '1' value means
                                     # that the failover client will try only
                                     # once to send the Event, and if it
                                     # fails then there will be no failover
                                     # to a second client, so this value
                                     # causes the failover client to
                                     # degenerate into just a default client.
                                     # It makes sense to set this value to at
                                     # least the number of hosts that you
                                     # specified.

batch-size = 100                     # Must be >=1 (default: 100)

connect-timeout = 20000              # Must be >=1000 (default: 20000)

request-timeout = 20000              # Must be >=1000 (default: 20000)
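The failover behavior can be sketched as a loop over the configured hosts that stops at the first success or after max-attempts attempts. FailoverSketch and its Sender interface are hypothetical; wrapping around to the first host when max-attempts exceeds the number of hosts is an assumption of this sketch, not documented FailoverRpcClient behavior.

```java
import java.util.List;

// Hypothetical sketch of failover: try hosts in order, moving on after each
// failure, for at most maxAttempts attempts. Not FailoverRpcClient's code.
final class FailoverSketch {
    interface Sender {
        void send(String host, String event) throws Exception;
    }

    // Returns the host that accepted the event, or throws once all attempts fail.
    static String appendWithFailover(List<String> hosts, int maxAttempts,
                                     Sender sender, String event) {
        if (hosts.isEmpty()) {
            throw new IllegalArgumentException("at least one host is required");
        }
        Exception last = null;
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            // Assumption: wrap around if maxAttempts exceeds the host count.
            String host = hosts.get(attempt % hosts.size());
            try {
                sender.send(host, event);
                return host;
            } catch (Exception e) {
                last = e; // fail over to the next host
            }
        }
        throw new IllegalStateException("all " + maxAttempts + " attempts failed", last);
    }
}
```

With maxAttempts = 1 the loop makes a single attempt and never fails over, matching the note above that such a client degenerates into a default client; with 0 every call fails immediately.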

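LoadBalancing RPC client

The Flume Client SDK also supports an RpcClient that load-balances among multiple hosts. This type of client takes a whitespace-separated list of <host>:<port> pairs representing the Flume agents that make up a load-balancing group. The client can be configured with a load-balancing strategy that either randomly selects one of the configured hosts or selects a host in a round-robin fashion. You can also specify your own custom class that implements the LoadBalancingRpcClient$HostSelector interface so that a custom selection order is used; in that case, the FQCN of the custom class is given as the value of the host-selector property. The LoadBalancing RPC Client currently does not support Thrift.

If backoff is enabled, the client temporarily blacklists hosts that fail, which excludes them from being selected as a failover host until a given timeout elapses. When the timeout elapses, if the host is still unresponsive, this is considered a sequential failure, and the timeout is increased exponentially to avoid getting stuck in long waits on unresponsive hosts.

The maximum backoff time can be configured by setting maxBackoff (in milliseconds). The maxBackoff default is 30 seconds (specified in the OrderSelector class, which is the superclass of both load-balancing strategies). The backoff timeout increases exponentially with each sequential failure, up to the maximum possible backoff timeout. The maximum possible backoff is limited to 65536 seconds (about 18.2 hours). For example: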

// Setup properties for the load balancing
Properties props = new Properties();
props.put("client.type", "default_loadbalance");

// List of hosts (space-separated list of user-chosen host aliases)
props.put("hosts", "h1 h2 h3");

// host/port pair for each host alias
String host1 = "host1.example.org:41414";
String host2 = "host2.example.org:41414";
String host3 = "host3.example.org:41414";
props.put("hosts.h1", host1);
props.put("hosts.h2", host2);
props.put("hosts.h3", host3);

props.put("host-selector", "random"); // For random host selection
// props.put("host-selector", "round_robin"); // For round-robin host selection

props.put("backoff", "true"); // Disabled by default.
props.put("maxBackoff", "10000"); // Defaults 0, which effectively becomes 30000 ms

// Create the client with load balancing properties
RpcClient client = RpcClientFactory.getInstance(props);
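The exponential backoff described above can be sketched as a pure function of the number of sequential failures. The constants are assumptions chosen to match the numbers in the text: the timeout is 1000 ms * 2^n after n sequential failures, capped at maxBackoff (30000 ms when maxBackoff is 0), and the doubling stops at n = 16, so the largest possible timeout is 1000 ms * 2^16 = 65536 s. BackoffSketch is hypothetical and is not Flume's OrderSelector code.

```java
// Hypothetical capped exponential backoff, matching the limits described in
// the text under stated assumptions. Not Flume's OrderSelector implementation.
final class BackoffSketch {
    static final long BASE_MS = 1000L;
    static final int MAX_EXPONENT = 16;                 // 1000 ms * 2^16 = 65536 s
    static final long DEFAULT_MAX_BACKOFF_MS = 30_000L; // used when maxBackoff <= 0

    // Timeout in ms after `sequentialFailures` sequential failures.
    static long backoffMs(int sequentialFailures, long maxBackoffMs) {
        if (sequentialFailures < 1) {
            return 0L; // a host that has not failed is not blacklisted
        }
        long cap = maxBackoffMs > 0 ? maxBackoffMs : DEFAULT_MAX_BACKOFF_MS;
        int exponent = Math.min(sequentialFailures, MAX_EXPONENT);
        return Math.min(cap, BASE_MS * (1L << exponent));
    }
}
```

With maxBackoff = 10000 as in the example, this sketch yields timeouts of 2, 4, 8, and 10 seconds after 1, 2, 3, and 4 sequential failures.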

For more flexibility, the load-balancing Flume client implementation (LoadBalancingRpcClient) can be configured with these properties:

client.type = default_loadbalance

hosts = h1 h2 h3                     # At least 2 hosts are required

hosts.h1 = host1.example.org:41414

hosts.h2 = host2.example.org:41414

hosts.h3 = host3.example.org:41414

backoff = false                      # Specifies whether the client should
                                     # back-off from (i.e. temporarily
                                     # blacklist) a failed host
                                     # (default: false).

maxBackoff = 0                       # Max timeout in millis that a host will
                                     # remain inactive due to a previous
                                     # failure with that host (default: 0,
                                     # which effectively becomes 30000)

host-selector = round_robin          # The host selection strategy used
                                     # when load-balancing among hosts
                                     # (default: round_robin).
                                     # Other values include "random"
                                     # or the FQCN of a custom class
                                     # that implements
                                     # LoadBalancingRpcClient$HostSelector

batch-size = 100                     # Must be >=1 (default: 100)

connect-timeout = 20000              # Must be >=1000 (default: 20000)

request-timeout = 20000              # Must be >=1000 (default: 20000)
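A round-robin host selector with backoff can be sketched as follows: walk the hosts in order, skip any host whose blacklist timeout has not yet expired, and resume after the last host returned. RoundRobinSketch is hypothetical and does not implement the LoadBalancingRpcClient$HostSelector interface; the current time is passed in explicitly so the behavior is deterministic.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical round-robin selector that skips temporarily blacklisted hosts.
// Not Flume's RoundRobinOrderSelector or HostSelector implementation.
final class RoundRobinSketch {
    private final List<String> hosts;
    private final Map<String, Long> blacklistedUntil = new HashMap<>();
    private int next = 0;

    RoundRobinSketch(List<String> hosts) {
        this.hosts = new ArrayList<>(hosts);
    }

    // Exclude a host from selection until nowMs + backoffMs.
    void informFailure(String host, long nowMs, long backoffMs) {
        blacklistedUntil.put(host, nowMs + backoffMs);
    }

    // Next available host in round-robin order, or null if all are blacklisted.
    String select(long nowMs) {
        for (int i = 0; i < hosts.size(); i++) {
            String candidate = hosts.get((next + i) % hosts.size());
            Long until = blacklistedUntil.get(candidate);
            if (until == null || until <= nowMs) {
                next = (next + i + 1) % hosts.size(); // resume after this host
                return candidate;
            }
        }
        return null;
    }
}
```

A random strategy would differ only in how the next candidate is picked; the blacklist check stays the same.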

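Embedded agent

Flume has an embedded agent API that allows users to embed an agent in their application. This agent is meant to be lightweight, and as such not all Sources, Sinks, and Channels are allowed. Specifically, the Source used is a special embedded Source, and events should be sent to the Source via the put and putAll methods on the EmbeddedAgent object. Only the File Channel and the Memory Channel are allowed as Channels, while the Avro Sink is the only supported Sink. Interceptors are also supported by the embedded agent.

Note: The embedded agent has a dependency on hadoop-core.jar.

Configuration of an embedded agent is similar to configuration of a full agent. The following is an exhaustive list of configuration options: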

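Required properties are in bold.

| Property Name | Default | Description |
|---|---|---|
| source.type | embedded | The only available source is the embedded source. |
| **channel.type** | - | Either memory or file, which correspond to MemoryChannel and FileChannel respectively. |
| channel.* | - | Configuration options for the requested channel type; see the MemoryChannel or FileChannel user guide for an exhaustive list. |
| **sinks** | - | List of sink names |
| **sink.type** | - | Property name must match a name in the list of sinks. Value must be avro. |
| sink.* | - | Configuration options for the sink. See the AvroSink user guide for an exhaustive list; note that AvroSink requires at least hostname and port. |
| **processor.type** | - | Either failover or load_balance, which correspond to FailoverSinkProcessor and LoadBalancingSinkProcessor respectively. |
| processor.* | - | Configuration options for the selected sink processor. See the FailoverSinkProcessor and LoadBalancingSinkProcessor user guides for an exhaustive list. |
| source.interceptors | - | Space-separated list of interceptors |
| source.interceptors.* | - | Configuration options for the individual interceptors specified in the source.interceptors property |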

Below is an example of how to use the agent:

Map<String, String> properties = new HashMap<String, String>();
properties.put("channel.type", "memory");
properties.put("channel.capacity", "200");
properties.put("sinks", "sink1 sink2");
properties.put("sink1.type", "avro");
properties.put("sink2.type", "avro");
properties.put("sink1.hostname", "collector1.apache.org");
properties.put("sink1.port", "5564");
properties.put("sink2.hostname", "collector2.apache.org");
properties.put("sink2.port", "5565");
properties.put("processor.type", "load_balance");
properties.put("source.interceptors", "i1");
properties.put("source.interceptors.i1.type", "static");
properties.put("source.interceptors.i1.key", "key1");
properties.put("source.interceptors.i1.value", "value1");

EmbeddedAgent agent = new EmbeddedAgent("myagent");

agent.configure(properties);
agent.start();

// A sample event to send through the embedded agent
Event event = EventBuilder.withBody("Hello Flume!", Charset.forName("UTF-8"));

List<Event> events = Lists.newArrayList();

events.add(event);
events.add(event);
events.add(event);
events.add(event);

agent.putAll(events);

...

agent.stop();
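Before calling configure(), an application might want to check its property map against the rules in the table above. The helper below is a hypothetical sketch (EmbeddedConfigCheck is not part of Flume) that collects problems instead of throwing; EmbeddedAgent performs its own validation, which may be stricter or report errors differently.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical pre-flight check for an embedded agent configuration map,
// encoding the rules from the table above. Not part of Flume.
final class EmbeddedConfigCheck {
    private static final Set<String> CHANNEL_TYPES =
            new HashSet<>(Arrays.asList("memory", "file"));
    private static final Set<String> PROCESSOR_TYPES =
            new HashSet<>(Arrays.asList("failover", "load_balance"));

    static List<String> problems(Map<String, String> props) {
        List<String> problems = new ArrayList<>();
        String sourceType = props.get("source.type");
        if (sourceType != null && !sourceType.equals("embedded")) {
            problems.add("source.type must be embedded");
        }
        if (!CHANNEL_TYPES.contains(props.get("channel.type"))) {
            problems.add("channel.type must be memory or file");
        }
        String sinks = props.getOrDefault("sinks", "").trim();
        if (sinks.isEmpty()) {
            problems.add("sinks must list at least one sink");
        } else {
            for (String sink : sinks.split("\\s+")) {
                if (!"avro".equals(props.get(sink + ".type"))) {
                    problems.add(sink + ".type must be avro");
                }
                // AvroSink needs at least a hostname and a port.
                if (!props.containsKey(sink + ".hostname") || !props.containsKey(sink + ".port")) {
                    problems.add(sink + " needs hostname and port");
                }
            }
        }
        String processor = props.get("processor.type");
        if (processor != null && !PROCESSOR_TYPES.contains(processor)) {
            problems.add("processor.type must be failover or load_balance");
        }
        return problems;
    }
}
```

For the property map in the example above, this check reports no problems.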

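Transaction interface

The Transaction interface is the basis of reliability for Flume. All the major components (i.e. Sources, Sinks, and Channels) must use a Flume Transaction.

A Transaction is implemented within a Channel implementation. Each Source and Sink that is connected to a Channel must obtain a Transaction object. Sources use a ChannelProcessor to manage their Transactions; Sinks manage them explicitly via their configured Channel. The operation to stage an Event (put it into a Channel) or extract an Event (take it out of a Channel) is done inside an active Transaction. For example: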

Channel ch = new MemoryChannel();
// MemoryChannel must be configured before use; an empty Context keeps its defaults
Configurables.configure(ch, new Context());
Transaction txn = ch.getTransaction();
txn.begin();
try {
  // This try clause includes whatever Channel operations you want to do

  Event eventToStage = EventBuilder.withBody("Hello Flume!",
                       Charset.forName("UTF-8"));
  ch.put(eventToStage);
  // Event takenEvent = ch.take();
  // ...
  txn.commit();
} catch (Throwable t) {
  txn.rollback();

  // Log exception, handle individual exceptions as needed

  // re-throw all Errors
  if (t instanceof Error) {
    throw (Error) t;
  }
} finally {
  txn.close();
}

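Here we get hold of a Transaction from a Channel. After begin() returns, the Transaction is active (open), and the Event is then put into the Channel. If the put succeeds, the Transaction is committed; if anything throws, it is rolled back. In both cases the finally block closes the Transaction.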
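The begin, commit-or-rollback, close ordering in the example can be captured as a small state machine. ToyTransaction is a hypothetical illustration, not Flume's transaction implementation; it throws if the calls arrive out of order, for instance if close() is called while the transaction is still open.

```java
// Hypothetical toy transaction enforcing the call order used in the example:
// begin(), then commit() or rollback(), then close(). Not Flume's code.
final class ToyTransaction {
    enum State { NEW, OPEN, COMPLETED, CLOSED }

    private State state = State.NEW;

    void begin() {
        require(State.OPEN == next(State.NEW), "begin");
        state = State.OPEN;
    }

    void commit() {
        require(state == State.OPEN, "commit");
        state = State.COMPLETED;
    }

    void rollback() {
        require(state == State.OPEN, "rollback");
        state = State.COMPLETED;
    }

    // Closing is allowed before begin() or after commit()/rollback(), not while open.
    void close() {
        require(state == State.NEW || state == State.COMPLETED, "close");
        state = State.CLOSED;
    }

    State state() {
        return state;
    }

    // begin() is only legal from NEW; returns OPEN in that case.
    private State next(State expected) {
        return state == expected ? State.OPEN : state;
    }

    private void require(boolean allowed, String op) {
        if (!allowed) {
            throw new IllegalStateException(op + "() not allowed in state " + state);
        }
    }
}
```

Driving it with the same begin / try-commit / catch-rollback / finally-close pattern as the example keeps every transition legal.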

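Sink

The purpose of a Sink is to extract Events from the Channel and forward them to the next Flume agent in the flow or store them in an external repository. A Sink is associated with exactly one Channel, as configured in the Flume properties file. There is one SinkRunner instance associated with every configured Sink, and when the Flume framework calls SinkRunner.start(), a new thread is created to drive the Sink (using SinkRunner.PollingRunner as the thread's Runnable). This thread manages the Sink's lifecycle. The Sink needs to implement the start() and stop() methods that are part of the LifecycleAware interface. The Sink.start() method should initialize the Sink and bring it to a state where it can forward Events to its next destination. The Sink.process() method should do the core processing of extracting an Event from the Channel and forwarding it. The Sink.stop() method should do the necessary cleanup (e.g. releasing resources). The Sink implementation also needs to implement the Configurable interface for processing its own configuration settings. For example: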

import org.apache.flume.Channel;
import org.apache.flume.Context;
import org.apache.flume.Event;
import org.apache.flume.EventDeliveryException;
import org.apache.flume.Transaction;
import org.apache.flume.conf.Configurable;
import org.apache.flume.sink.AbstractSink;

public class MySink extends AbstractSink implements Configurable {
  private String myProp;

  @Override
  public void configure(Context context) {
    String myProp = context.getString("myProp", "defaultValue");

    // Process the myProp value (e.g. validation)

    // Store myProp for later retrieval by process() method
    this.myProp = myProp;
  }

  @Override
  public void start() {
    // Initialize the connection to the external repository (e.g. HDFS) that
    // this Sink will forward Events to ..
    super.start();
  }

  @Override
  public void stop() {
    // Disconnect from the external repository and do any
    // additional cleanup (e.g. releasing resources or nulling-out
    // field values) ..
    super.stop();
  }

  @Override
  public Status process() throws EventDeliveryException {
    Status status = null;

    // Start transaction
    Channel ch = getChannel();
    Transaction txn = ch.getTransaction();
    txn.begin();
    try {
      // This try clause includes whatever Channel operations you want to do

      Event event = ch.take();
      if (event == null) {
        // The Channel is empty: commit the empty transaction and back off
        txn.commit();
        return Status.BACKOFF;
      }

      // Send the Event to the external repository.
      // storeSomeData(event);

      txn.commit();
      status = Status.READY;
    } catch (Throwable t) {
      txn.rollback();

      // Log exception, handle individual exceptions as needed

      status = Status.BACKOFF;

      // re-throw all Errors
      if (t instanceof Error) {
        throw (Error) t;
      }
    } finally {
      // Always release the transaction, whether committed or rolled back
      txn.close();
    }
    return status;
  }
}
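What a polling runner does with the Status returned by process() can be sketched as follows: on READY it polls again immediately, and on BACKOFF it pauses for a period that grows with consecutive BACKOFF results, up to a cap. PollingLoopSketch is hypothetical, and the linear, capped pause is an assumption of this sketch rather than a description of SinkRunner.PollingRunner; the sleep is injected so the loop can be exercised without waiting.

```java
import java.util.function.LongConsumer;
import java.util.function.Supplier;

// Hypothetical polling loop illustrating READY vs BACKOFF handling.
// The pause policy is an assumption; this is not SinkRunner.PollingRunner.
final class PollingLoopSketch {
    enum Status { READY, BACKOFF }

    // Runs `iterations` polls and returns how many returned READY.
    // `sleeper` receives each pause in milliseconds instead of sleeping.
    static int run(Supplier<Status> process, int iterations,
                   long backoffStepMs, long maxBackoffMs, LongConsumer sleeper) {
        int consecutiveBackoffs = 0;
        int readyCount = 0;
        for (int i = 0; i < iterations; i++) {
            if (process.get() == Status.READY) {
                consecutiveBackoffs = 0; // a productive call resets the pause
                readyCount++;
            } else {
                consecutiveBackoffs++;
                sleeper.accept(Math.min(backoffStepMs * consecutiveBackoffs, maxBackoffMs));
            }
        }
        return readyCount;
    }
}
```

A real runner would loop until stopped and call Thread.sleep with the computed pause.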

Source

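The purpose of a Source is to receive data from an external client and store it into the configured Channels. A Source can get an instance of its own ChannelProcessor to process an Event, committed within a Channel-local transaction, one Channel at a time. In the case of an exception, required Channels propagate the exception and all Channels roll back their current transactions, but Events already processed on other Channels remain committed.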
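The per-channel semantics described above can be sketched with toy channels that either accept an event or fail. FanOutSketch and its Chan class are hypothetical stand-ins for ChannelProcessor and real Channels: each channel stores the event in its own step, a failure stops processing and propagates to the caller, and channels that already committed keep the event.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of serial, per-channel commits. Not the ChannelProcessor API.
final class FanOutSketch {
    static final class Chan {
        final String name;
        final boolean failing; // simulates a channel whose commit fails
        final List<String> committed = new ArrayList<>();

        Chan(String name, boolean failing) {
            this.name = name;
            this.failing = failing;
        }

        // One channel-local "transaction": either the event is committed or nothing is stored.
        void putAndCommit(String event) {
            if (failing) {
                throw new IllegalStateException(name + " failed; transaction rolled back");
            }
            committed.add(event);
        }
    }

    // Writes the event to each channel in turn; a failure propagates immediately,
    // leaving earlier channels committed and later ones untouched.
    static void processEvent(String event, List<Chan> channels) {
        for (Chan c : channels) {
            c.putAndCommit(event);
        }
    }
}
```

Writing to c1 (healthy), c2 (failing), and c3 (healthy) in that order leaves the event committed in c1 only, and the caller sees the exception from c2.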

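Similar to the SinkRunner.PollingRunner Runnable, there is a PollingRunner Runnable that executes on a thread created when the Flume framework calls PollableSourceRunner.start(). Each configured PollableSource is associated with its own thread that runs a PollingRunner. This thread manages the PollableSource's lifecycle, such as starting and stopping. A PollableSource implementation must implement the start() and stop() methods that are declared in the LifecycleAware interface. The runner of a PollableSource invokes that Source's process() method. The process() method should check for new data and store it into the Channel as Flume Events.

Note that there are actually two types of Sources. The PollableSource was already mentioned. The other is the EventDrivenSource. Unlike the PollableSource, the EventDrivenSource must have its own callback mechanism that captures new data and stores it into the Channel. EventDrivenSources are not each driven by their own thread the way PollableSources are. Below is an example of a custom PollableSource: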

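import org.apache.flume.Context;
import org.apache.flume.Event;
import org.apache.flume.EventDeliveryException;
import org.apache.flume.PollableSource;
import org.apache.flume.conf.Configurable;
import org.apache.flume.event.EventBuilder;
import org.apache.flume.source.AbstractSource;
import java.nio.charset.Charset;

public class MySource extends AbstractSource implements Configurable, PollableSource {
  private String myProp;

  @Override
  public void configure(Context context) {
    String myProp = context.getString("myProp", "defaultValue");

    // Process the myProp value (e.g. validation, convert to another type, ...)

    // Store myProp for later retrieval by process() method
    this.myProp = myProp;
  }

  @Override
  public void start() {
    // Initialize the connection to the external client
    super.start();
  }

  @Override
  public void stop() {
    // Disconnect from external client and do any additional cleanup
    // (e.g. releasing resources or nulling-out field values) ..
    super.stop();
  }

  @Override
  public Status process() throws EventDeliveryException {
    Status status = null;

    try {
      // This try clause includes whatever Channel/Event operations you want to do

      // Receive new data
      Event e = getSomeData();

      // Store the Event into this Source's associated Channel(s);
      // the ChannelProcessor manages the Channel transactions
      getChannelProcessor().processEvent(e);

      status = Status.READY;
    } catch (Throwable t) {
      // Log exception, handle individual exceptions as needed

      status = Status.BACKOFF;

      // re-throw all Errors
      if (t instanceof Error) {
        throw (Error) t;
      }
    }
    return status;
  }

  // PollableSource in Flume 1.9 also declares these two methods;
  // the values below (in ms) are illustrative only
  @Override
  public long getBackOffSleepIncrement() {
    return 1000;
  }

  @Override
  public long getMaxBackOffSleepInterval() {
    return 5000;
  }

  // Hypothetical placeholder for reading data from the external client
  private Event getSomeData() {
    return EventBuilder.withBody("Hello Flume!", Charset.forName("UTF-8"));
  }
}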

Channel

TBD