10 Minutes to pandas

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

In [3]:

dates = pd.date_range('20130101', periods=6)

dates

Out[3]:

DatetimeIndex(['2013-01-01', '2013-01-02', '2013-01-03', '2013-01-04',

'2013-01-05', '2013-01-06'],

dtype='datetime64[ns]', freq='D')

In [4]:

df = pd.DataFrame(np.random.randn(6,4), index=dates, columns=list('ABCD'))

df

Out[4]:

| | A | B | C | D |
|---|---|---|---|---|
| 2013-01-01 | 0.043660 | 0.914219 | 1.364281 | 0.960460 |
| 2013-01-02 | 0.245818 | 0.582317 | 0.456372 | -0.734680 |
| 2013-01-03 | -0.997398 | -0.476202 | 0.967015 | 0.089730 |
| 2013-01-04 | -1.132148 | 0.867161 | 0.458086 | 0.797743 |
| 2013-01-05 | -1.226727 | 1.524988 | -1.980305 | 0.694533 |
| 2013-01-06 | 1.695086 | 0.796078 | -0.688947 | -0.910752 |
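`np.random.randn` draws new values on every run, so your numbers will differ from the table above. The following minimal sketch builds a frame with the same shape using NumPy's `default_rng`. The seed of 0 is arbitrary and is chosen only so the values are reproducible. The sketch then checks the frame's index and columns:

```python
import numpy as np
import pandas as pd

# Seeded generator so the values are reproducible (seed 0 is an arbitrary choice)
rng = np.random.default_rng(0)
dates = pd.date_range("20130101", periods=6)  # six consecutive days, freq='D'
df = pd.DataFrame(rng.standard_normal((6, 4)), index=dates, columns=list("ABCD"))

print(df.shape)          # (6, 4)
print(df.index[0], df.index[-1])
print(list(df.columns))  # ['A', 'B', 'C', 'D']
```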

In [5]:

df2 = pd.DataFrame({ 'A' : 1.,

'B' : pd.Timestamp('20130102'),

'C' : pd.Series(1,index=list(range(4)),dtype='float32'),

'D' : np.array([3] * 4,dtype='int32'),

'E' : pd.Categorical(["test","train","test","train"]),

'F' : 'foo' })

df2

Out[5]:

| | A | B | C | D | E | F |
|---|---|---|---|---|---|---|
| 0 | 1.0 | 2013-01-02 | 1.0 | 3 | test | foo |
| 1 | 1.0 | 2013-01-02 | 1.0 | 3 | train | foo |
| 2 | 1.0 | 2013-01-02 | 1.0 | 3 | test | foo |
| 3 | 1.0 | 2013-01-02 | 1.0 | 3 | train | foo |
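In `df2`, the scalar entries are broadcast to the length of the other columns, and every column keeps its own dtype. This small sketch rebuilds `df2` and uses `select_dtypes` to pick out only the numeric columns:

```python
import numpy as np
import pandas as pd

df2 = pd.DataFrame({
    "A": 1.0,
    "B": pd.Timestamp("20130102"),
    "C": pd.Series(1, index=list(range(4)), dtype="float32"),
    "D": np.array([3] * 4, dtype="int32"),
    "E": pd.Categorical(["test", "train", "test", "train"]),
    "F": "foo",
})

# Scalars ("A", "B", "F") are broadcast to the length of the other columns (4 rows)
print(len(df2))  # 4

# Each column keeps its own dtype; keep only the numeric ones
numeric = df2.select_dtypes(include="number")
print(list(numeric.columns))  # ['A', 'C', 'D']
```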

In [6]:

df2.dtypes

Out[6]:

A float64

B datetime64[ns]

C float32

D int32

E category

F object

dtype: object

In [11]:

df.describe()

Out[11]:

| | A | B | C | D |
|---|---|---|---|---|
| count | 6.000000 | 6.000000 | 6.000000 | 6.000000 |
| mean | -0.228618 | 0.701427 | 0.096084 | 0.149506 |
| std | 1.131678 | 0.657413 | 1.229257 | 0.810483 |
| min | -1.226727 | -0.476202 | -1.980305 | -0.910752 |
| 25% | -1.098461 | 0.635757 | -0.402617 | -0.528577 |
| 50% | -0.476869 | 0.831620 | 0.457229 | 0.392131 |
| 75% | 0.195279 | 0.902454 | 0.839783 | 0.771940 |
| max | 1.695086 | 1.524988 | 1.364281 | 0.960460 |
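The statistics in column A can be reproduced by hand. This sketch copies the six column-A values from the `df` table above, which are rounded to 6 decimals. It shows that `describe()` uses the sample standard deviation (`ddof=1`) and linearly interpolated quantiles. For example, the 50% entry is the mean of the two middle values, (-0.997398 + 0.043660) / 2:

```python
import numpy as np
import pandas as pd

# Column A as displayed in the df table above (rounded to 6 decimals)
a = pd.Series([0.043660, 0.245818, -0.997398, -1.132148, -1.226727, 1.695086])

desc = a.describe()
print(round(desc["mean"], 6))  # -0.228618
print(round(desc["50%"], 6))   # -0.476869, mean of the two middle values

# describe() reports the sample standard deviation (ddof=1)
print(np.isclose(desc["std"], a.std(ddof=1)))  # True
```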

In [12]:

df.T

Out[12]:

| | 2013-01-01 00:00:00 | 2013-01-02 00:00:00 | 2013-01-03 00:00:00 | 2013-01-04 00:00:00 | 2013-01-05 00:00:00 | 2013-01-06 00:00:00 |
|---|---|---|---|---|---|---|
| A | 0.043660 | 0.245818 | -0.997398 | -1.132148 | -1.226727 | 1.695086 |
| B | 0.914219 | 0.582317 | -0.476202 | 0.867161 | 1.524988 | 0.796078 |
| C | 1.364281 | 0.456372 | 0.967015 | 0.458086 | -1.980305 | -0.688947 |
| D | 0.960460 | -0.734680 | 0.089730 | 0.797743 | 0.694533 | -0.910752 |

In [13]:

df.sort_index(axis=1, ascending=False)

Out[13]:

| | D | C | B | A |
|---|---|---|---|---|
| 2013-01-01 | 0.960460 | 1.364281 | 0.914219 | 0.043660 |
| 2013-01-02 | -0.734680 | 0.456372 | 0.582317 | 0.245818 |
| 2013-01-03 | 0.089730 | 0.967015 | -0.476202 | -0.997398 |
| 2013-01-04 | 0.797743 | 0.458086 | 0.867161 | -1.132148 |
| 2013-01-05 | 0.694533 | -1.980305 | 1.524988 | -1.226727 |
| 2013-01-06 | -0.910752 | -0.688947 | 0.796078 | 1.695086 |

In [14]:

df.sort_values(by='B')

Out[14]:

| | A | B | C | D |
|---|---|---|---|---|
| 2013-01-03 | -0.997398 | -0.476202 | 0.967015 | 0.089730 |
| 2013-01-02 | 0.245818 | 0.582317 | 0.456372 | -0.734680 |
| 2013-01-06 | 1.695086 | 0.796078 | -0.688947 | -0.910752 |
| 2013-01-04 | -1.132148 | 0.867161 | 0.458086 | 0.797743 |
| 2013-01-01 | 0.043660 | 0.914219 | 1.364281 | 0.960460 |
| 2013-01-05 | -1.226727 | 1.524988 | -1.980305 | 0.694533 |
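`sort_values` reorders rows by the values in a column, while `sort_index(axis=1)` reorders the column labels. This sketch reuses the column-B values shown above. Columns A, C and D are hypothetical zero placeholders that exist only so the column sort has something to reorder:

```python
import pandas as pd

dates = pd.date_range("20130101", periods=6)
# Column B as displayed in the df table above
b = pd.Series([0.914219, 0.582317, -0.476202, 0.867161, 1.524988, 0.796078], index=dates)

# A, C, D are placeholder columns (all zeros); only B drives the row order
frame = pd.DataFrame({"A": 0.0, "B": b, "C": 0.0, "D": 0.0})

# Rows sorted by B, ascending by default
by_b = frame.sort_values(by="B")
print(list(by_b.index.strftime("%m-%d")))
# ['01-03', '01-02', '01-06', '01-04', '01-01', '01-05']

# Column labels sorted in descending order
print(list(frame.sort_index(axis=1, ascending=False).columns))  # ['D', 'C', 'B', 'A']
```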

In [15]:

df.apply(np.cumsum)

Out[15]:

| | A | B | C | D |
|---|---|---|---|---|
| 2013-01-01 | 0.043660 | 0.914219 | 1.364281 | 0.960460 |
| 2013-01-02 | 0.289478 | 1.496535 | 1.820653 | 0.225781 |
| 2013-01-03 | -0.707920 | 1.020334 | 2.787668 | 0.315511 |
| 2013-01-04 | -1.840068 | 1.887495 | 3.245754 | 1.113254 |
| 2013-01-05 | -3.066794 | 3.412483 | 1.265449 | 1.807786 |
| 2013-01-06 | -1.371708 | 4.208561 | 0.576502 | 0.897035 |
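`apply` passes one column at a time to the function. `apply(np.cumsum)` therefore gives running totals down each column, and the lambda in the next cell gives each column's range (max minus min). This sketch checks both. It uses the displayed column-A values for the range (1.695086 - (-1.226727) = 2.921813), and a seeded random frame (arbitrary seed) for the cumulative sums:

```python
import numpy as np
import pandas as pd

# Column A as displayed in the df table above
a = pd.Series([0.043660, 0.245818, -0.997398, -1.132148, -1.226727, 1.695086])
print(round(a.max() - a.min(), 6))  # 2.921813

rng = np.random.default_rng(1)  # arbitrary seed
frame = pd.DataFrame(rng.standard_normal((6, 4)), columns=list("ABCD"))

# Column-wise apply of np.cumsum matches the built-in cumsum()
print(np.allclose(frame.apply(np.cumsum), frame.cumsum()))  # True
# The last row of the running totals equals the column sums
print(np.allclose(frame.cumsum().iloc[-1], frame.sum()))  # True
```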

In [16]:

df.apply(lambda x: x.max() - x.min())

Out[16]:

A 2.921813

B 2.001190

C 3.344586

D 1.871212

dtype: float64

In [18]:

s = pd.Series(np.random.randint(0, 7, size=10))

s

Out[18]:

0 3

1 3

2 3

3 4

4 3

5 1

6 6

7 3

8 1

9 4

dtype: int32

In [19]:

s.value_counts()

Out[19]:

3 5

4 2

1 2

6 1

dtype: int64

In [20]:

s = pd.Series(['A', 'B', 'C', 'Aaba', 'Baca', np.nan, 'CABA', 'dog', 'cat'])

s.str.lower()

Out[20]:

0 a

1 b

2 c

3 aaba

4 baca

5 NaN

6 caba

7 dog

8 cat

dtype: object

In [21]:

df = pd.DataFrame(np.random.randn(10, 4))

df

Out[21]:

| | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | 0.625507 | -0.587885 | 0.933430 | -1.608638 |
| 1 | -0.570564 | 1.653211 | -0.169102 | 0.278487 |
| 2 | 0.587255 | 0.580736 | 1.738137 | -1.989709 |
| 3 | 1.238611 | 0.867963 | -0.679791 | 1.784103 |
| 4 | -0.190212 | -1.065888 | 0.629625 | 0.968308 |
| 5 | -1.123788 | 0.433555 | 0.613186 | -0.885229 |
| 6 | -0.872940 | 0.117758 | 1.085781 | -1.739964 |
| 7 | -1.064637 | -1.562930 | 0.609092 | 0.591197 |
| 8 | -0.479885 | 0.019224 | -0.071010 | 0.203683 |
| 9 | 1.562199 | -0.084385 | 0.169083 | 0.588641 |

In [24]:

pieces = [df[:3], df[3:7]]

pieces

Out[24]:

[ 0 1 2 3

0 0.625507 -0.587885 0.933430 -1.608638

1 -0.570564 1.653211 -0.169102 0.278487

2 0.587255 0.580736 1.738137 -1.989709,

0 1 2 3

3 1.238611 0.867963 -0.679791 1.784103

4 -0.190212 -1.065888 0.629625 0.968308

5 -1.123788 0.433555 0.613186 -0.885229

6 -0.872940 0.117758 1.085781 -1.739964]

In [25]:

pd.concat(pieces)

Out[25]:

| | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | 0.625507 | -0.587885 | 0.933430 | -1.608638 |
| 1 | -0.570564 | 1.653211 | -0.169102 | 0.278487 |
| 2 | 0.587255 | 0.580736 | 1.738137 | -1.989709 |
| 3 | 1.238611 | 0.867963 | -0.679791 | 1.784103 |
| 4 | -0.190212 | -1.065888 | 0.629625 | 0.968308 |
| 5 | -1.123788 | 0.433555 | 0.613186 | -0.885229 |
| 6 | -0.872940 | 0.117758 | 1.085781 | -1.739964 |
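The cell above concatenates only the first seven rows. When the pieces cover every row, `pd.concat` rebuilds the original frame, because each piece keeps its original index labels. A sketch with a seeded random frame (arbitrary seed):

```python
import numpy as np
import pandas as pd

frame = pd.DataFrame(np.random.default_rng(2).standard_normal((10, 4)))  # arbitrary seed

# Three row blocks that together cover all ten rows
pieces = [frame[:3], frame[3:7], frame[7:]]

# Stacking the pieces along rows (axis=0, the default) restores the original frame
rebuilt = pd.concat(pieces)
print(rebuilt.equals(frame))  # True
```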

In [26]:

left = pd.DataFrame({'key': ['foo', 'foo'], 'lval': [1, 2]})

left

Out[26]:

| | key | lval |
|---|---|---|
| 0 | foo | 1 |
| 1 | foo | 2 |

In [27]:

right = pd.DataFrame({'key': ['foo', 'foo'], 'rval': [4, 5]})

right

Out[27]:

| | key | rval |
|---|---|---|
| 0 | foo | 4 |
| 1 | foo | 5 |

In [28]:

pd.merge(left, right, on='key')

Out[28]:

| | key | lval | rval |
|---|---|---|---|
| 0 | foo | 1 | 4 |
| 1 | foo | 1 | 5 |
| 2 | foo | 2 | 4 |
| 3 | foo | 2 | 5 |
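The merge above returns four rows because the key `foo` is duplicated on both sides, and every left `foo` row pairs with every right `foo` row (2 × 2). With unique keys, each row matches exactly once. This sketch contrasts the two cases. The second pair of frames is a hypothetical example, and `validate="one_to_one"` asks pandas to raise an error if the keys are not unique:

```python
import pandas as pd

# Duplicate keys: 2 left rows x 2 right rows for 'foo'
left = pd.DataFrame({"key": ["foo", "foo"], "lval": [1, 2]})
right = pd.DataFrame({"key": ["foo", "foo"], "rval": [4, 5]})
print(len(pd.merge(left, right, on="key")))  # 4

# Unique keys (hypothetical frames): one match per row
left_u = pd.DataFrame({"key": ["foo", "bar"], "lval": [1, 2]})
right_u = pd.DataFrame({"key": ["foo", "bar"], "rval": [4, 5]})
merged = pd.merge(left_u, right_u, on="key", validate="one_to_one")
print(merged)
```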

Append

In [29]:

df = pd.DataFrame(np.random.randn(8, 4), columns=['A','B','C','D'])

df

Out[29]:

| | A | B | C | D |
|---|---|---|---|---|
| 0 | 0.567299 | -0.835836 | 1.997388 | 1.219204 |
| 1 | 0.901536 | -0.172666 | -0.396798 | 1.102758 |
| 2 | 0.585077 | -0.283219 | 0.685800 | 0.284514 |
| 3 | 0.228130 | 0.916432 | 0.102306 | -1.420889 |
| 4 | 1.196156 | -2.166493 | 1.202548 | 0.198905 |
| 5 | 0.930729 | 0.735202 | 0.780484 | 1.035269 |
| 6 | 0.335974 | -0.364062 | 1.316474 | -0.390350 |
| 7 | 1.611419 | -0.277883 | 0.116712 | -0.781101 |

In [30]:

s = df.iloc[3]

s

Out[30]:

A 0.228130

B 0.916432

C 0.102306

D -1.420889

Name: 3, dtype: float64

In [31]:

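# DataFrame.append was removed in pandas 2.0; concatenating a one-row frame gives the same result
pd.concat([df, s.to_frame().T], ignore_index=True)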

Out[31]:

| | A | B | C | D |
|---|---|---|---|---|
| 0 | 0.567299 | -0.835836 | 1.997388 | 1.219204 |
| 1 | 0.901536 | -0.172666 | -0.396798 | 1.102758 |
| 2 | 0.585077 | -0.283219 | 0.685800 | 0.284514 |
| 3 | 0.228130 | 0.916432 | 0.102306 | -1.420889 |
| 4 | 1.196156 | -2.166493 | 1.202548 | 0.198905 |
| 5 | 0.930729 | 0.735202 | 0.780484 | 1.035269 |
| 6 | 0.335974 | -0.364062 | 1.316474 | -0.390350 |
| 7 | 1.611419 | -0.277883 | 0.116712 | -0.781101 |
| 8 | 0.228130 | 0.916432 | 0.102306 | -1.420889 |
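pandas 2.0 and later no longer provide `DataFrame.append`, and `pd.concat` covers the same use. This sketch uses a seeded random frame (arbitrary seed) to show what `ignore_index=True` changes when row 3 is added back. Without it, the label 3 appears twice. With it, the rows are renumbered 0 to 8:

```python
import numpy as np
import pandas as pd

frame = pd.DataFrame(np.random.default_rng(3).standard_normal((8, 4)), columns=list("ABCD"))
row = frame.iloc[3]  # a Series whose name is 3

# Turn the Series into a one-row frame (index label 3) before concatenating
as_row = row.to_frame().T

kept = pd.concat([frame, as_row])                           # label 3 now appears twice
renumbered = pd.concat([frame, as_row], ignore_index=True)  # labels 0..8

print(list(kept.index))        # [0, 1, 2, 3, 4, 5, 6, 7, 3]
print(list(renumbered.index))  # [0, 1, 2, 3, 4, 5, 6, 7, 8]
```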

Grouping

In [32]:

df = pd.DataFrame({'A' : ['foo', 'bar', 'foo', 'bar',

'foo', 'bar', 'foo', 'foo'],

'B' : ['one', 'one', 'two', 'three',

'two', 'two', 'one', 'three'],

'C' : np.random.randn(8),

'D' : np.random.randn(8)})

df

Out[32]:

| | A | B | C | D |
|---|---|---|---|---|
| 0 | foo | one | 1.946394 | -1.603756 |
| 1 | bar | one | 1.128497 | 0.186203 |
| 2 | foo | two | 0.768784 | -1.485998 |
| 3 | bar | three | 1.530352 | 1.159454 |
| 4 | foo | two | 0.740245 | -0.517180 |
| 5 | bar | two | -1.475944 | 2.510523 |
| 6 | foo | one | -0.822403 | 0.036043 |
| 7 | foo | three | -0.799283 | -2.370425 |

In [33]:

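# Select the numeric columns; B holds strings, which pandas 2.x would otherwise concatenate
df.groupby('A')[['C', 'D']].sum()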

Out[33]:

| A | C | D |
|---|---|---|
| bar | 1.182905 | 3.856180 |
| foo | 1.833737 | -5.941317 |

In [34]:

df.groupby(['A','B']).sum()

Out[34]:

| A | B | C | D |
|---|---|---|---|
| bar | one | 1.128497 | 0.186203 |
| | three | 1.530352 | 1.159454 |
| | two | -1.475944 | 2.510523 |
| foo | one | 1.123991 | -1.567713 |
| | three | -0.799283 | -2.370425 |
| | two | 1.509028 | -2.003179 |
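Both tables above can be checked by hand. This sketch rebuilds the `In [32]` frame from its displayed (rounded) values. For the group `bar` (rows 1, 3 and 5), the C sum is 1.128497 + 1.530352 - 1.475944 = 1.182905. Summing the two-key result back over `B` reproduces the one-key result. Selecting `[["C", "D"]]` keeps the string column `B` out of the sum:

```python
import numpy as np
import pandas as pd

# Values as displayed in the df table for In [32]
df = pd.DataFrame({
    "A": ["foo", "bar", "foo", "bar", "foo", "bar", "foo", "foo"],
    "B": ["one", "one", "two", "three", "two", "two", "one", "three"],
    "C": [1.946394, 1.128497, 0.768784, 1.530352, 0.740245, -1.475944, -0.822403, -0.799283],
    "D": [-1.603756, 0.186203, -1.485998, 1.159454, -0.517180, 2.510523, 0.036043, -2.370425],
})

# Split rows by A, then sum C and D within each group
by_a = df.groupby("A")[["C", "D"]].sum()
print(round(by_a.loc["bar", "C"], 6))  # 1.182905

# Two keys give a MultiIndex (A, B); summing over the inner level B recovers by_a
by_ab = df.groupby(["A", "B"])[["C", "D"]].sum()
print(np.allclose(by_ab.groupby(level="A").sum(), by_a))  # True
```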

Reshaping

In [35]:

tuples = list(zip(*[['bar', 'bar', 'baz', 'baz',

'foo', 'foo', 'qux', 'qux'],

['one', 'two', 'one', 'two',

'one', 'two', 'one', 'two']]))

tuples

Out[35]:

[('bar', 'one'),

('bar', 'two'),

('baz', 'one'),

('baz', 'two'),

('foo', 'one'),

('foo', 'two'),

('qux', 'one'),

('qux', 'two')]

In [36]:

index = pd.MultiIndex.from_tuples(tuples, names=['first', 'second'])

index

Out[36]:

MultiIndex(levels=[['bar', 'baz', 'foo', 'qux'], ['one', 'two']],

labels=[[0, 0, 1, 1, 2, 2, 3, 3], [0, 1, 0, 1, 0, 1, 0, 1]],

names=['first', 'second'])

In [37]:

df = pd.DataFrame(np.random.randn(8, 2), index=index, columns=['A', 'B'])

df

Out[37]:

| first | second | A | B |
|---|---|---|---|
| bar | one | -1.421775 | 0.156963 |
| | two | 1.564838 | 2.088619 |
| baz | one | 0.371071 | 0.103863 |
| | two | -0.789093 | 0.952786 |
| foo | one | 1.931205 | 0.103578 |
| | two | 0.169767 | -0.750791 |
| qux | one | 0.601066 | -0.779490 |
| | two | 1.416660 | 1.744881 |

In [39]:

df2 = df[:4]

df2

Out[39]:

| first | second | A | B |
|---|---|---|---|
| bar | one | -1.421775 | 0.156963 |
| | two | 1.564838 | 2.088619 |
| baz | one | 0.371071 | 0.103863 |
| | two | -0.789093 | 0.952786 |

In [40]:

stacked = df2.stack()

stacked

Out[40]:

first second

bar one A -1.421775

B 0.156963

two A 1.564838

B 2.088619

baz one A 0.371071

B 0.103863

two A -0.789093

B 0.952786

dtype: float64

In [41]:

stacked.unstack()

Out[41]:

| first | second | A | B |
|---|---|---|---|
| bar | one | -1.421775 | 0.156963 |
| | two | 1.564838 | 2.088619 |
| baz | one | 0.371071 | 0.103863 |
| | two | -0.789093 | 0.952786 |

In [42]:

stacked.unstack(1)

Out[42]:

| first | | one | two |
|---|---|---|---|
| bar | A | -1.421775 | 1.564838 |
| | B | 0.156963 | 2.088619 |
| baz | A | 0.371071 | -0.789093 |
| | B | 0.103863 | 0.952786 |

In [43]:

stacked.unstack(0)

Out[43]:

| second | | bar | baz |
|---|---|---|---|
| one | A | -1.421775 | 0.371071 |
| | B | 0.156963 | 0.103863 |
| two | A | 1.564838 | -0.789093 |
| | B | 2.088619 | 0.952786 |
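`stack()` moves the column labels into a new innermost index level, and `unstack(level)` moves an index level back into the columns. This sketch uses deterministic values (`np.arange`) in place of the random data. It checks that the round trip restores the frame and that `unstack(0)` pivots the outer level `first` instead:

```python
import numpy as np
import pandas as pd

tuples = [("bar", "one"), ("bar", "two"), ("baz", "one"), ("baz", "two")]
index = pd.MultiIndex.from_tuples(tuples, names=["first", "second"])
df2 = pd.DataFrame(np.arange(8.0).reshape(4, 2), index=index, columns=["A", "B"])

# 4 x 2 frame -> Series with 8 entries and a 3-level index (first, second, column)
stacked = df2.stack()
print(stacked.index.nlevels, len(stacked))  # 3 8

# unstack() with no argument pivots the innermost level back into columns
round_trip = stacked.unstack()
print(round_trip.index.equals(df2.index), np.allclose(round_trip, df2))  # True True

# unstack(0) pivots the outermost level ('first') into columns
print(list(stacked.unstack(0).columns))  # ['bar', 'baz']
```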

Pivot tables

In [44]:

df = pd.DataFrame({'A' : ['one', 'one', 'two', 'three'] * 3,

'B' : ['A', 'B', 'C'] * 4,

'C' : ['foo', 'foo', 'foo', 'bar', 'bar', 'bar'] * 2,

'D' : np.random.randn(12),

'E' : np.random.randn(12)})

df

Out[44]:

| | A | B | C | D | E |
|---|---|---|---|---|---|
| 0 | one | A | foo | 1.955130 | 0.720242 |
| 1 | one | B | foo | 1.603413 | 1.022816 |
| 2 | two | C | foo | -0.019881 | -0.624567 |
| 3 | three | A | bar | 1.167609 | -1.001875 |
| 4 | one | B | bar | -0.468257 | 0.008671 |
| 5 | one | C | bar | 1.081470 | 1.286024 |
| 6 | two | A | foo | -1.302491 | 0.751544 |
| 7 | three | B | foo | 1.139926 | -0.791500 |
| 8 | one | C | foo | -2.829628 | 0.850286 |
| 9 | one | A | bar | -2.277605 | -0.943648 |
| 10 | two | B | bar | 1.091066 | -0.566744 |
| 11 | three | C | bar | -1.175964 | 0.731061 |

In [45]:

pd.pivot_table(df, values='D', index=['A', 'B'], columns=['C'])

Out[45]:

| A | B | bar | foo |
|---|---|---|---|
| one | A | -2.277605 | 1.955130 |
| | B | -0.468257 | 1.603413 |
| | C | 1.081470 | -2.829628 |
| three | A | 1.167609 | NaN |
| | B | NaN | 1.139926 |
| | C | -1.175964 | NaN |
| two | A | NaN | -1.302491 |
| | B | 1.091066 | NaN |
| | C | NaN | -0.019881 |
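`pivot_table` averages values by default (`aggfunc="mean"`). Because every (A, B, C) combination occurs at most once in this frame, each cell is simply that row's `D` value. Combinations that never occur become NaN: 9 × 2 = 18 cells hold only 12 rows of data. This sketch uses `np.arange(12.0)` as a deterministic stand-in for the random `D` column. It also shows an equivalent `groupby` + `unstack` formulation:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "A": ["one", "one", "two", "three"] * 3,
    "B": ["A", "B", "C"] * 4,
    "C": ["foo", "foo", "foo", "bar", "bar", "bar"] * 2,
    "D": np.arange(12.0),  # stand-in for np.random.randn(12)
})

pivot = pd.pivot_table(df, values="D", index=["A", "B"], columns=["C"])
print(pivot.loc[("one", "A"), "bar"])  # 9.0 (row 9 is one / A / bar)
print(int(pivot.isna().sum().sum()))   # 6 missing combinations

# Same table: mean of D per (A, B, C), then move C into the columns
via_groupby = df.groupby(["A", "B", "C"])["D"].mean().unstack("C")
print(np.allclose(pivot, via_groupby, equal_nan=True))  # True
```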

Time series
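Resampling groups timestamps into fixed-width bins and aggregates each bin. This minimal sketch uses a Series of ones as a deterministic stand-in for the random integers used in `In [48]`, so the bin boundaries are easy to check. All 100 seconds (00:00:00 to 00:01:39) fall into the first 5-minute bin, while 30-second bins split them into 30 + 30 + 30 + 10:

```python
import numpy as np
import pandas as pd

# 100 one-second timestamps, each carrying the value 1
rng = pd.date_range("2012-01-01", periods=100, freq="s")
ts = pd.Series(np.ones(len(rng), dtype="int64"), index=rng)

# One 5-minute bucket holding the total
five_min = ts.resample("5min").sum()
print(len(five_min), five_min.iloc[0])  # 1 100

# Finer 30-second buckets
print(ts.resample("30s").sum().tolist())  # [30, 30, 30, 10]
```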

In [46]:

rng = pd.date_range('1/1/2012', periods=100, freq='S')

rng

Out[46]:

DatetimeIndex(['2012-01-01 00:00:00', '2012-01-01 00:00:01',

'2012-01-01 00:00:02', '2012-01-01 00:00:03',

'2012-01-01 00:00:04', '2012-01-01 00:00:05',

'2012-01-01 00:00:06', '2012-01-01 00:00:07',

'2012-01-01 00:00:08', '2012-01-01 00:00:09',

'2012-01-01 00:00:10', '2012-01-01 00:00:11',

'2012-01-01 00:00:12', '2012-01-01 00:00:13',

'2012-01-01 00:00:14', '2012-01-01 00:00:15',

'2012-01-01 00:00:16', '2012-01-01 00:00:17',

'2012-01-01 00:00:18', '2012-01-01 00:00:19',

'2012-01-01 00:00:20', '2012-01-01 00:00:21',

'2012-01-01 00:00:22', '2012-01-01 00:00:23',

'2012-01-01 00:00:24', '2012-01-01 00:00:25',

'2012-01-01 00:00:26', '2012-01-01 00:00:27',

'2012-01-01 00:00:28', '2012-01-01 00:00:29',

'2012-01-01 00:00:30', '2012-01-01 00:00:31',

'2012-01-01 00:00:32', '2012-01-01 00:00:33',

'2012-01-01 00:00:34', '2012-01-01 00:00:35',

'2012-01-01 00:00:36', '2012-01-01 00:00:37',

'2012-01-01 00:00:38', '2012-01-01 00:00:39',

'2012-01-01 00:00:40', '2012-01-01 00:00:41',

'2012-01-01 00:00:42', '2012-01-01 00:00:43',

'2012-01-01 00:00:44', '2012-01-01 00:00:45',

'2012-01-01 00:00:46', '2012-01-01 00:00:47',

'2012-01-01 00:00:48', '2012-01-01 00:00:49',

'2012-01-01 00:00:50', '2012-01-01 00:00:51',

'2012-01-01 00:00:52', '2012-01-01 00:00:53',

'2012-01-01 00:00:54', '2012-01-01 00:00:55',

'2012-01-01 00:00:56', '2012-01-01 00:00:57',

'2012-01-01 00:00:58', '2012-01-01 00:00:59',

'2012-01-01 00:01:00', '2012-01-01 00:01:01',

'2012-01-01 00:01:02', '2012-01-01 00:01:03',

'2012-01-01 00:01:04', '2012-01-01 00:01:05',

'2012-01-01 00:01:06', '2012-01-01 00:01:07',

'2012-01-01 00:01:08', '2012-01-01 00:01:09',

'2012-01-01 00:01:10', '2012-01-01 00:01:11',

'2012-01-01 00:01:12', '2012-01-01 00:01:13',

'2012-01-01 00:01:14', '2012-01-01 00:01:15',

'2012-01-01 00:01:16', '2012-01-01 00:01:17',

'2012-01-01 00:01:18', '2012-01-01 00:01:19',

'2012-01-01 00:01:20', '2012-01-01 00:01:21',

'2012-01-01 00:01:22', '2012-01-01 00:01:23',

'2012-01-01 00:01:24', '2012-01-01 00:01:25',

'2012-01-01 00:01:26', '2012-01-01 00:01:27',

'2012-01-01 00:01:28', '2012-01-01 00:01:29',

'2012-01-01 00:01:30', '2012-01-01 00:01:31',

'2012-01-01 00:01:32', '2012-01-01 00:01:33',

'2012-01-01 00:01:34', '2012-01-01 00:01:35',

'2012-01-01 00:01:36', '2012-01-01 00:01:37',

'2012-01-01 00:01:38', '2012-01-01 00:01:39'],

dtype='datetime64[ns]', freq='S')

In [48]:

ts = pd.Series(np.random.randint(0, 500, len(rng)), index=rng)

ts

Out[48]:

2012-01-01 00:00:00 478

2012-01-01 00:00:01 350

2012-01-01 00:00:02 17

2012-01-01 00:00:03 439

2012-01-01 00:00:04 84

2012-01-01 00:00:05 164

2012-01-01 00:00:06 17

2012-01-01 00:00:07 182

2012-01-01 00:00:08 482

2012-01-01 00:00:09 198

2012-01-01 00:00:10 231

2012-01-01 00:00:11 446

2012-01-01 00:00:12 271

2012-01-01 00:00:13 453

2012-01-01 00:00:14 418

2012-01-01 00:00:15 235

2012-01-01 00:00:16 231

2012-01-01 00:00:17 95

2012-01-01 00:00:18 132

2012-01-01 00:00:19 364

2012-01-01 00:00:20 93

2012-01-01 00:00:21 225

2012-01-01 00:00:22 255

2012-01-01 00:00:23 120

2012-01-01 00:00:24 387

2012-01-01 00:00:25 175

2012-01-01 00:00:26 174

2012-01-01 00:00:27 426

2012-01-01 00:00:28 185

2012-01-01 00:00:29 44

...

2012-01-01 00:01:10 61

2012-01-01 00:01:11 3

2012-01-01 00:01:12 14

2012-01-01 00:01:13 342

2012-01-01 00:01:14 361

2012-01-01 00:01:15 394

2012-01-01 00:01:16 414

2012-01-01 00:01:17 452

2012-01-01 00:01:18 191

2012-01-01 00:01:19 297

2012-01-01 00:01:20 16

2012-01-01 00:01:21 481

2012-01-01 00:01:22 364

2012-01-01 00:01:23 73

2012-01-01 00:01:24 238

2012-01-01 00:01:25 331

2012-01-01 00:01:26 111

2012-01-01 00:01:27 347

2012-01-01 00:01:28 28

2012-01-01 00:01:29 276

2012-01-01 00:01:30 33

2012-01-01 00:01:31 315

2012-01-01 00:01:32 177

2012-01-01 00:01:33 39

2012-01-01 00:01:34 211

2012-01-01 00:01:35 214

2012-01-01 00:01:36 65

2012-01-01 00:01:37 151

2012-01-01 00:01:38 418

2012-01-01 00:01:39 256

Freq: S, Length: 100, dtype: int32

In [49]:

ts.resample('5Min').sum()

Out[49]:

2012-01-01 25315

Freq: 5T, dtype: int32

In [50]:

rng = pd.date_range('3/6/2012 00:00', periods=5, freq='D')

ts = pd.Series(np.random.randn(len(rng)), rng)

ts

Out[50]:

2012-03-06 -0.844716

2012-03-07 -0.354952

2012-03-08 0.847330

2012-03-09 0.199636

2012-03-10 -0.157474

Freq: D, dtype: float64

In [51]:

ts_utc = ts.tz_localize('UTC')

ts_utc

Out[51]:

2012-03-06 00:00:00+00:00 -0.844716

2012-03-07 00:00:00+00:00 -0.354952

2012-03-08 00:00:00+00:00 0.847330

2012-03-09 00:00:00+00:00 0.199636

2012-03-10 00:00:00+00:00 -0.157474

Freq: D, dtype: float64

Convert to another time zone:
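`tz_localize` attaches a time zone without changing the wall-clock times, while `tz_convert` keeps the same instants and shows them in another zone. This sketch uses fixed values and the zone name `America/New_York`, the canonical IANA name that `US/Eastern` refers to. In early March 2012 that zone is UTC-5, because the DST switch comes on March 11:

```python
import pandas as pd

rng = pd.date_range("3/6/2012 00:00", periods=5, freq="D")
ts = pd.Series(range(5), index=rng)

ts_utc = ts.tz_localize("UTC")  # same wall-clock times, now labeled UTC
print(ts_utc.index[0])          # 2012-03-06 00:00:00+00:00

eastern = ts_utc.tz_convert("America/New_York")  # same instants, local wall clock
print(eastern.index[0])                          # 2012-03-05 19:00:00-05:00

# Converting back to UTC recovers the original index exactly
print(eastern.tz_convert("UTC").index.equals(ts_utc.index))  # True
```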

In [52]:

ts_utc.tz_convert('US/Eastern')

Out[52]:

2012-03-05 19:00:00-05:00 -0.844716

2012-03-06 19:00:00-05:00 -0.354952

2012-03-07 19:00:00-05:00 0.847330

2012-03-08 19:00:00-05:00 0.199636

2012-03-09 19:00:00-05:00 -0.157474

Freq: D, dtype: float64

Convert between time span representations:
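Timestamps mark instants and periods mark spans. The sketch below converts month-end timestamps to monthly periods and back. It then walks through the fiscal-quarter chain of `In [61]` one step at a time. With `Q-NOV` (quarters ending in November), 1990Q1 runs from December 1989 to February 1990. Its last month plus one is March 1990, and the first hour of that month plus 9 hours is 09:00:

```python
import pandas as pd

# Month-end timestamps <-> monthly periods
stamps = pd.date_range("2012-01-31", periods=3, freq=pd.offsets.MonthEnd())
periods = stamps.to_period("M")
print(list(periods.astype(str)))  # ['2012-01', '2012-02', '2012-03']
print(list(periods.to_timestamp().strftime("%Y-%m-%d")))  # first day of each month

# Fiscal quarter ending in November
q = pd.Period("1990Q1", freq="Q-NOV")
print(q.start_time.date(), q.end_time.date())  # 1989-12-01 1990-02-28

last_month = q.asfreq("M", "e")              # last month of the quarter: 1990-02
next_month = last_month + 1                  # 1990-03
first_hour = next_month.asfreq("h", "s") + 9
print(first_hour)                            # 1990-03-01 09:00
```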

In [53]:

rng = pd.date_range('1/1/2012', periods=5, freq='M')

ts = pd.Series(np.random.randn(len(rng)), index=rng)

ts

Out[53]:

2012-01-31 -0.081224

2012-02-29 1.507458

2012-03-31 0.892793

2012-04-30 -1.667747

2012-05-31 1.100369

Freq: M, dtype: float64

In [55]:

ps = ts.to_period()

ps

Out[55]:

2012-01 -0.081224

2012-02 1.507458

2012-03 0.892793

2012-04 -1.667747

2012-05 1.100369

Freq: M, dtype: float64

In [56]:

ps.to_timestamp()

Out[56]:

2012-01-01 -0.081224

2012-02-01 1.507458

2012-03-01 0.892793

2012-04-01 -1.667747

2012-05-01 1.100369

Freq: MS, dtype: float64

In [57]:

prng = pd.period_range('1990Q1', '2000Q4', freq='Q-NOV')

prng

Out[57]:

PeriodIndex(['1990Q1', '1990Q2', '1990Q3', '1990Q4', '1991Q1', '1991Q2',

'1991Q3', '1991Q4', '1992Q1', '1992Q2', '1992Q3', '1992Q4',

'1993Q1', '1993Q2', '1993Q3', '1993Q4', '1994Q1', '1994Q2',

'1994Q3', '1994Q4', '1995Q1', '1995Q2', '1995Q3', '1995Q4',

'1996Q1', '1996Q2', '1996Q3', '1996Q4', '1997Q1', '1997Q2',

'1997Q3', '1997Q4', '1998Q1', '1998Q2', '1998Q3', '1998Q4',

'1999Q1', '1999Q2', '1999Q3', '1999Q4', '2000Q1', '2000Q2',

'2000Q3', '2000Q4'],

dtype='period[Q-NOV]', freq='Q-NOV')

In [58]:

ts = pd.Series(np.random.randn(len(prng)), prng)

ts

Out[58]:

1990Q1 1.115171

1990Q2 -0.910567

1990Q3 0.572898

1990Q4 0.122395

1991Q1 -0.742167

1991Q2 -0.249733

1991Q3 0.008649

1991Q4 0.922641

1992Q1 -0.377793

1992Q2 -0.810604

1992Q3 1.347122

1992Q4 -1.089756

1993Q1 0.921470

1993Q2 -0.315505

1993Q3 0.054035

1993Q4 0.196308

1994Q1 0.158628

1994Q2 -1.547737

1994Q3 0.805891

1994Q4 -2.249038

1995Q1 2.174847

1995Q2 -1.205269

1995Q3 -0.251231

1995Q4 -0.810176

1996Q1 -0.044167

1996Q2 0.893497

1996Q3 1.552094

1996Q4 -0.612805

1997Q1 0.918409

1997Q2 0.980157

1997Q3 1.509811

1997Q4 0.059969

1998Q1 -0.359608

1998Q2 1.643402

1998Q3 1.616181

1998Q4 1.882245

1999Q1 0.128783

1999Q2 -0.391579

1999Q3 1.448685

1999Q4 1.829124

2000Q1 1.583030

2000Q2 -1.449266

2000Q3 -0.153701

2000Q4 0.726885

Freq: Q-NOV, dtype: float64

In [61]:

ts.index = (prng.asfreq('M', 'e') + 1).asfreq('H', 's') + 9

ts.head()

Out[61]:

1990-03-01 09:00 1.115171

1990-06-01 09:00 -0.910567

1990-09-01 09:00 0.572898

1990-12-01 09:00 0.122395

1991-03-01 09:00 -0.742167

Freq: H, dtype: float64

Categoricals

In [63]:

df = pd.DataFrame({"id":[1,2,3,4,5,6], "raw_grade":['a', 'b', 'b', 'a', 'a', 'e']})

df

Out[63]:

| | id | raw_grade |
|---|---|---|
| 0 | 1 | a |
| 1 | 2 | b |
| 2 | 3 | b |
| 3 | 4 | a |
| 4 | 5 | a |
| 5 | 6 | e |

In [67]:

df["grade"] = df["raw_grade"].astype("category")

df["grade"]

Out[67]:

0 a

1 b

2 b

3 a

4 a

5 e

Name: grade, dtype: category

Categories (3, object): [a, b, e]

In [66]:

df

Out[66]:

| | id | raw_grade | grade |
|---|---|---|---|
| 0 | 1 | a | a |
| 1 | 2 | b | b |
| 2 | 3 | b | b |
| 3 | 4 | a | a |
| 4 | 5 | a | a |
| 5 | 6 | e | e |

In [68]:

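# rename_categories relabels a, b, e in order; assigning to .cat.categories no longer works in pandas 2.x
df["grade"] = df["grade"].cat.rename_categories(["very good", "good", "very bad"])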

df["grade"]

Out[68]:

0 very good

1 good

2 good

3 very good

4 very good

5 very bad

Name: grade, dtype: category

Categories (3, object): [very good, good, very bad]

In [69]:

df["grade"] = df["grade"].cat.set_categories(["very bad", "bad", "medium", "good", "very good"])

df["grade"]

Out[69]:

0 very good

1 good

2 good

3 very good

4 very good

5 very bad

Name: grade, dtype: category

Categories (5, object): [very bad, bad, medium, good, very good]

In [70]:

df

Out[70]:

| | id | raw_grade | grade |
|---|---|---|---|
| 0 | 1 | a | very good |
| 1 | 2 | b | good |
| 2 | 3 | b | good |
| 3 | 4 | a | very good |
| 4 | 5 | a | very good |
| 5 | 6 | e | very bad |
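The categorical steps fit in one short sketch. It uses `rename_categories` to relabel `a, b, e` by position, and `set_categories` to add the unused grades in a fixed order. An explicit `observed=False` makes the groupby list every category, including the empty `bad` and `medium`:

```python
import pandas as pd

df = pd.DataFrame({"id": [1, 2, 3, 4, 5, 6],
                   "raw_grade": ["a", "b", "b", "a", "a", "e"]})
grade = df["raw_grade"].astype("category")
print(list(grade.cat.categories))  # ['a', 'b', 'e']

# Relabel by position: a -> very good, b -> good, e -> very bad
grade = grade.cat.rename_categories(["very good", "good", "very bad"])

# Add unused categories and fix the order
grade = grade.cat.set_categories(["very bad", "bad", "medium", "good", "very good"])

counts = grade.groupby(grade, observed=False).size()
print(counts.tolist())  # [1, 0, 0, 2, 3]
```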

In [71]:

df.groupby("grade").size()

Out[71]:

grade

very bad 1

bad 0

medium 0

good 2

very good 3

dtype: int64

Plotting

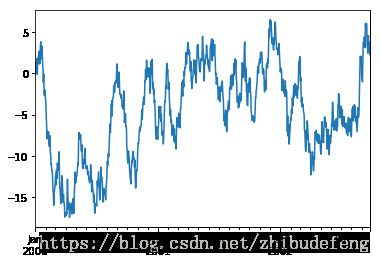

In [81]:

%matplotlib inline

ts = pd.Series(np.random.randn(1000), index=pd.date_range('1/1/2000', periods=1000))

ts

ts = ts.cumsum()

ts

ts.plot()

Out[81]:

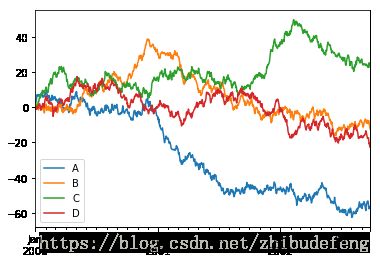

In [93]:

import matplotlib.pyplot as plt

df = pd.DataFrame(np.random.randn(1000, 4), index=ts.index,

columns=['A', 'B', 'C', 'D'])

df = df.cumsum()

plt.figure(); df.plot(); plt.legend(loc='best')

Out[93]:

Getting data in/out

In [94]:

# to_hdf/read_hdf require the optional PyTables package;
# to_excel/read_excel require an Excel engine such as openpyxl
df.to_csv('foo.csv')
pd.read_csv('foo.csv')

df.to_hdf('foo.h5', key='df')
pd.read_hdf('foo.h5', 'df')

df.to_excel('foo.xlsx', sheet_name='Sheet1')
pd.read_excel('foo.xlsx', sheet_name='Sheet1', index_col=None, na_values=['NA'])
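By default, `to_csv` writes the index as the first, unnamed column. When you read the file back without `index_col`, that column reappears as `Unnamed: 0`, and `index_col=0` restores it as the index. This sketch writes to an in-memory `io.StringIO` buffer instead of `foo.csv`, so nothing is written to disk. It also uses a seeded random frame (arbitrary seed):

```python
import io

import numpy as np
import pandas as pd

df = pd.DataFrame(np.random.default_rng(4).standard_normal((5, 4)),
                  columns=["A", "B", "C", "D"])

buf = io.StringIO()
df.to_csv(buf)  # index written as the first column

# Without index_col, the saved index becomes an ordinary column
print(list(pd.read_csv(io.StringIO(buf.getvalue())).columns))
# ['Unnamed: 0', 'A', 'B', 'C', 'D']

# index_col=0 restores it as the index
back = pd.read_csv(io.StringIO(buf.getvalue()), index_col=0)
print(list(back.columns), np.allclose(back, df))  # ['A', 'B', 'C', 'D'] True
```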