Basler Industrial Camera (acA2500-20gm) Parameters

Acquisition Frame Rate

The Acquisition Frame Rate camera feature allows you to set an upper limit for the camera's frame rate.

This is useful if you want to operate the camera at a constant frame rate in free run image acquisition.

How It Works

If the Acquisition Frame Rate feature is enabled, the camera's maximum frame rate is limited by the value you enter for the acquisition frame rate parameter.

For example, setting an acquisition frame rate of 20 frames per second (fps) has the following effects:

- If the other factors limiting the frame rate allow a frame rate of more than 20 fps, the frame rate will be kept at a constant frame rate of 20 fps.

- If the other factors limiting the frame rate only allow a frame rate of less than 20 fps, the frame rate won't be affected by the Acquisition Frame Rate feature.

To determine the actual frame rate, use the Resulting Frame Rate feature.
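The limiting behavior described above amounts to taking the smaller of two rates. The following Python sketch only models that arithmetic. It does not talk to a camera, and the function and argument names are illustrative assumptions, not pylon API names.

```python
def resulting_frame_rate(max_possible_fps, limit_enabled, limit_fps):
    """Model of the frame rate the camera ends up running at.

    max_possible_fps: rate allowed by all other limiting factors.
    limit_enabled:    models AcquisitionFrameRateEnable.
    limit_fps:        models AcquisitionFrameRateAbs.
    """
    if not limit_enabled:
        return max_possible_fps
    # The feature only ever caps the rate; it cannot raise it.
    return min(max_possible_fps, limit_fps)


# Other factors allow 35 fps, so the 20 fps limit applies.
print(resulting_frame_rate(35.0, True, 20.0))   # 20.0
# Other factors allow only 12 fps, so the limit has no effect.
print(resulting_frame_rate(12.0, True, 20.0))   # 12.0
```

On a real camera, the value to trust is the one reported by the Resulting Frame Rate feature, since the other limiting factors are not known to the application in advance.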

Setting the Acquisition Frame Rate

- Set the AcquisitionFrameRateEnable parameter to true.

- Set the AcquisitionFrameRateAbs parameter to the desired upper limit for the camera's frame rate in frames per second.

// Set the upper limit of the camera's frame rate to 30 fps

camera.Parameters[PLCamera.AcquisitionFrameRateEnable].SetValue(true);

camera.Parameters[PLCamera.AcquisitionFrameRateAbs].SetValue(30.0);

Acquisition Mode

The Acquisition Mode camera feature allows you to choose between single frame or continuous image acquisition.

Available Acquisition Modes

In Single Frame acquisition mode, the camera will acquire exactly one image. After the Acquisition Start command has been executed, the camera waits for trigger signals. When a Frame Start trigger signal has been received and an image has been acquired, the camera switches off image acquisition. To acquire another image, you must execute the Acquisition Start command again.

In Continuous acquisition mode, the camera continuously acquires and transfers images until acquisition is switched off. After the Acquisition Start command has been executed, the camera waits for trigger signals. The camera will continue acquiring images until an Acquisition Stop command is executed.
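The difference between the two modes can be pictured as a small state model. The Python sketch below is a toy illustration under that assumption: the class and method names are hypothetical and it does not use the pylon API.

```python
class AcquisitionModel:
    """Toy state model of the two acquisition modes (not pylon code)."""

    def __init__(self, mode):
        if mode not in ("SingleFrame", "Continuous"):
            raise ValueError("unknown acquisition mode: " + mode)
        self.mode = mode
        self.acquiring = False   # True after Acquisition Start
        self.frames = 0

    def acquisition_start(self):
        self.acquiring = True

    def acquisition_stop(self):
        self.acquiring = False

    def frame_start_trigger(self):
        """Return True if the trigger produced an image."""
        if not self.acquiring:
            return False         # triggers are ignored while acquisition is off
        self.frames += 1
        if self.mode == "SingleFrame":
            # The camera switches acquisition off after exactly one image.
            self.acquiring = False
        return True


single = AcquisitionModel("SingleFrame")
single.acquisition_start()
print([single.frame_start_trigger() for _ in range(3)])  # [True, False, False]

cont = AcquisitionModel("Continuous")
cont.acquisition_start()
print([cont.frame_start_trigger() for _ in range(3)])    # [True, True, True]
```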

// Configure single frame acquisition on the camera

camera.Parameters[PLCamera.AcquisitionMode].SetValue(PLCamera.AcquisitionMode.SingleFrame);

// Switch on image acquisition

camera.Parameters[PLCamera.AcquisitionStart].Execute();

// The camera waits for a trigger signal.

// When a Frame Start trigger signal has been received,

// the camera executes an Acquisition Stop command internally.

// Configure continuous image acquisition on the camera

camera.Parameters[PLCamera.AcquisitionMode].SetValue(PLCamera.AcquisitionMode.Continuous);

// Switch on image acquisition

camera.Parameters[PLCamera.AcquisitionStart].Execute();

// The camera waits for trigger signals.

// (...)

// Switch off image acquisition

camera.Parameters[PLCamera.AcquisitionStop].Execute();

Acquisition Start and Stop

Before a camera can start capturing images, image acquisition has to be switched on first. Otherwise, the camera wouldn't react to incoming trigger signals.

After the AcquisitionStop command has been executed, the following occurs:

- If the camera is not currently acquiring a frame, the change becomes effective immediately.

- If the camera is currently exposing a frame, exposure is stopped and readout is started. The readout process will be allowed to finish. Afterwards, image acquisition is switched off.

- If the camera is currently reading out image data, the readout process will be allowed to finish. Afterwards, image acquisition is switched off.

// Configure continuous image acquisition on the camera

camera.Parameters[PLCamera.AcquisitionMode].SetValue(PLCamera.AcquisitionMode.Continuous);

// Switch on image acquisition

camera.Parameters[PLCamera.AcquisitionStart].Execute();

// The camera waits for trigger signals.

// (...)

// Switch off image acquisition

camera.Parameters[PLCamera.AcquisitionStop].Execute();

Acquisition Status

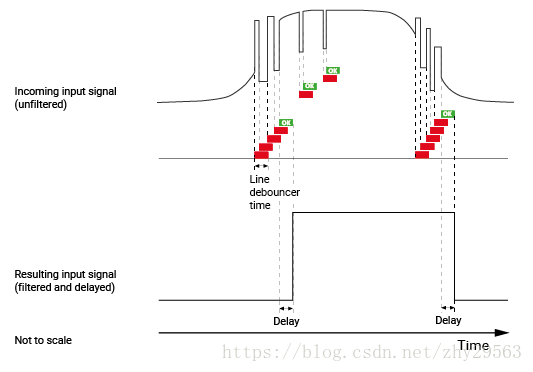

The Acquisition Status camera feature allows you to determine whether the camera is waiting for trigger signals. This is useful if you want to optimize triggered image acquisition and avoid overtriggering.

Basler strongly recommends using the Acquisition Status feature only when the camera is configured for software triggering. When the camera is configured for hardware triggering, Basler recommends monitoring the camera's Trigger Wait signals instead.

To determine if the camera is currently waiting for trigger signals:

- Set the AcquisitionStatusSelector parameter to the desired trigger type. For example, if you want to determine if the camera is waiting for Frame Start trigger signals, set the AcquisitionStatusSelector to FrameTriggerWait.

- Get the value of the AcquisitionStatus parameter.

If the AcquisitionStatus parameter is true, the camera is waiting for a trigger signal of the trigger type selected. If the AcquisitionStatus parameter is false, the camera is busy.
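One way to use this status in a software-triggered application is to poll it and send a trigger only once the camera reports that it is waiting. The Python sketch below illustrates the idea with a stand-in object. `FakeCamera`, its methods, and the "busy for two polls" behavior are all assumptions made for illustration and are not part of the pylon API.

```python
class FakeCamera:
    """Stand-in for a camera; busy for a fixed number of polls after each trigger."""

    def __init__(self, busy_polls=2):
        self._busy_polls = busy_polls
        self._remaining = 0
        self.accepted = 0
        self.overtriggered = 0

    def frame_trigger_wait(self):
        """Models AcquisitionStatus with the selector set to FrameTriggerWait."""
        if self._remaining > 0:
            self._remaining -= 1
            return False          # camera is busy
        return True               # camera is waiting for a trigger

    def software_trigger(self):
        if self._remaining > 0:
            self.overtriggered += 1   # trigger arrived while busy and is lost
        else:
            self.accepted += 1
            self._remaining = self._busy_polls


def trigger_when_ready(camera, max_polls=100):
    """Poll the status and only trigger once the camera reports it is waiting."""
    for _ in range(max_polls):
        if camera.frame_trigger_wait():
            camera.software_trigger()
            return True
    return False


cam = FakeCamera()
for _ in range(5):
    trigger_when_ready(cam)
print(cam.accepted, cam.overtriggered)   # 5 0
```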

// Specify that you want to determine if the camera is waiting for Frame Start trigger signals

camera.Parameters[PLCamera.AcquisitionStatusSelector].SetValue(PLCamera.AcquisitionStatusSelector.FrameTriggerWait);

// Get the acquisition status

bool isWaitingForFrameStart = camera.Parameters[PLCamera.AcquisitionStatus].GetValue();

if(isWaitingForFrameStart){

// It is now safe to apply Frame Start trigger signals

}

Action Commands

The Action Commands camera feature allows you to execute actions on multiple cameras at roughly the same time by using a single broadcast protocol message.

If you want to execute actions on multiple cameras at exactly the same time, use the Scheduled Action Commands feature instead.

You can use action commands to perform the following tasks:

- Synchronously acquire images with multiple cameras

- Synchronously reset the frame counter on multiple cameras

Action commands are broadcast protocol messages that you can send to multiple devices in a GigE network.

Each action protocol message contains the following information:

- Action device key

- Action group key

- Action group mask

- Broadcast address (optional, default: 255.255.255.255)

If the camera is within the specified network segment and if the protocol information matches the action command configuration in the camera, the camera executes the corresponding action.

Action Device Key

A 32-bit number of your choice used to authorize the execution of an action command on the camera. If the action device key on the camera and the action device key in the protocol message are identical, the camera executes the corresponding action. The device key is write-only; it can't be read out of the camera.

Action Group Key

A 32-bit number of your choice used to define a group of devices on which an action should be executed. Each camera can be assigned to one group only. If the action group key on the camera and the action group key in the protocol message are identical, the camera will execute the corresponding action.

Action Group Mask

A 32-bit number of your choice used to filter out a sub-group of cameras belonging to a group of cameras. The cameras belonging to a sub-group execute an action at the same time.

The filtering is done using a logical bitwise AND operation on the group mask number of the action command and the group mask number of a camera. If both binary numbers have at least one common bit set to 1 (i.e., the result of the AND operation is non-zero), the corresponding camera belongs to the sub-group.

Example: Assume that a group of six cameras is installed on an assembly line. To execute actions on specific sub-groups, the following group mask numbers have been assigned to the cameras (sample values):

| Camera | Group Mask Number (Binary) | Group Mask Number (Hexadecimal) |
|---|---|---|
| 1 | 000001 | 0x1 |
| 2 | 000010 | 0x2 |
| 3 | 000100 | 0x4 |
| 4 | 001000 | 0x8 |
| 5 | 010000 | 0x10 |
| 6 | 100000 | 0x20 |

In this example, an action command with an action group mask of 000111 (0x7) executes an action on cameras 1, 2, and 3. And an action command with an action group mask of 101100 (0x2C) executes an action on cameras 3, 4, and 6.
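The filtering rule can be checked with a few lines of Python. This is a minimal sketch of the bitwise AND test only, using the sample mask values from the table. The function name is an illustrative assumption.

```python
# Group mask numbers from the table above (camera number -> mask).
CAMERA_MASKS = {1: 0b000001, 2: 0b000010, 3: 0b000100,
                4: 0b001000, 5: 0b010000, 6: 0b100000}


def cameras_in_subgroup(command_mask, camera_masks=CAMERA_MASKS):
    """Return the cameras whose mask shares at least one set bit with the command mask."""
    return [cam for cam, mask in sorted(camera_masks.items())
            if (command_mask & mask) != 0]


print(cameras_in_subgroup(0x07))   # [1, 2, 3]
print(cameras_in_subgroup(0x2C))   # [3, 4, 6]
```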

Broadcast Address

A string variable used to define where the action command will be broadcast to. When using the pylon API, the broadcast address must be in dot notation, e.g., "255.255.255.255" (all adapters), "192.168.1.255" (all devices in a single subnet 192.168.1.xxx), or "192.168.1.38" (a single device). This parameter is optional. If omitted, "255.255.255.255" will be used.
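If the subnet broadcast address is not known offhand, it can be derived from the address and prefix length of the network adapter. The sketch below uses Python's standard `ipaddress` module. The `/24` prefix is an assumption that matches the 192.168.1.xxx example, and the function name is illustrative.

```python
import ipaddress


def subnet_broadcast(interface_cidr):
    """Return the dotted broadcast address for an interface given as 'ip/prefix'."""
    network = ipaddress.ip_interface(interface_cidr).network
    return str(network.broadcast_address)


print(subnet_broadcast("192.168.1.38/24"))   # 192.168.1.255
```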

Example Setup

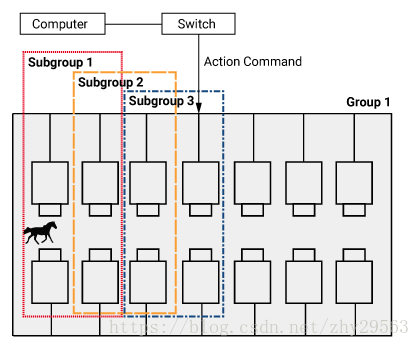

The following example setup will give you an idea of the basic concept of action commands. To analyze the movement of a horse, a group of cameras is installed parallel to a race track.

When the horse passes, four cameras (subgroup 1) synchronously execute an action (image acquisition in this example). As the horse advances, the next four cameras (subgroup 2) synchronously capture images. One after the other, the subgroups continue in this fashion until the horse has reached the end of the race track. The resulting images can be combined and analyzed in a subsequent step.

In this sample use case, the following must be defined:

- A unique device key to authorize the execution of the synchronous image acquisition. The device key must be configured on each camera and it must be the same as the device key for the action command protocol message. To define the device key, use the action device key.

- The group of cameras in a network segment that is addressed by the action command (in this example: group 1). To define the groups, use the action group key.

- The subgroups in the group of cameras that capture images synchronously (in this example: subgroups 1, 2, and 3). To define the subgroups, use the action group mask.
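The three definitions above combine into a single acceptance test on each camera. The Python sketch below models that test for the race track setup with twelve cameras in three subgroups of four. The key and mask values, the class, and the function name are hypothetical, and the code does not use the pylon API.

```python
from dataclasses import dataclass


@dataclass
class CameraActionConfig:
    device_key: int
    group_key: int
    group_mask: int


def executes_action(cam, device_key, group_key, group_mask):
    """True if the camera would act on an action command with these values."""
    return (cam.device_key == device_key
            and cam.group_key == group_key
            and (cam.group_mask & group_mask) != 0)


# Hypothetical values: 12 cameras, group 1, three subgroups of four cameras each.
DEVICE_KEY, GROUP_KEY = 4711, 1
SUBGROUP_MASKS = [0x1, 0x2, 0x4]
cameras = [CameraActionConfig(DEVICE_KEY, GROUP_KEY, SUBGROUP_MASKS[i // 4])
           for i in range(12)]

for mask in SUBGROUP_MASKS:
    hits = [i for i, cam in enumerate(cameras)
            if executes_action(cam, DEVICE_KEY, GROUP_KEY, mask)]
    print(hex(mask), hits)
```

Sending the three masks one after the other triggers the subgroups in sequence, while a command with a wrong device key or group key is ignored by every camera.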

Using Action Commands

Configuring the Cameras

To configure the cameras so that they can receive action commands and perform one or more of the supported tasks, follow the steps below. The same procedure applies if you want to configure Scheduled Action Commands on your cameras.

1. Make sure that the following requirements are met:

   - All cameras on which you want to configure action commands must be installed and configured in the same network segment.

   - The Action Commands feature is supported by all cameras and by the Basler pylon API you are using to configure and send action commands.

2. Open the connection to one of the cameras that you want to control using action commands.

3. If you want to use the Action Commands feature to synchronously acquire images:

   - Set the TriggerSelector parameter to FrameStart.

   - Set the TriggerMode parameter to On.

   - Set the TriggerSource parameter to Action1.

4. If you want to use the Action Commands feature to synchronously reset the frame counter:

   - Set the CounterResetSource parameter to Action1.

5. Configure the following action command-specific parameters:

   - ActionDeviceKey

   - ActionGroupKey

   - ActionGroupMask

6. Repeat steps 2 to 5 on all cameras.

Issuing an Action Command

To issue an action command, call the IssueActionCommand method in your application. A complete example, including the camera setup, is given in the sample code at the end of this section.

Additional Parameters

- ActionCommandCount: Determines how many different action commands can be assigned to the device. Currently, this number is limited to 1 for all Basler cameras.

- ActionSelector: Specifies the action command to be configured.

// Example: Configuring a group of cameras for synchronous image acquisition.

// It is assumed that the "cameras" object is an instance of CBaslerGigEInstantCameraArray

// and that "GigeTL" points to the GigE transport layer object used to send the action command.

//--- Start of camera setup ---

for (size_t i = 0; i < cameras.GetSize(); ++i)

{

// Open the camera connection

cameras[i].Open();

// Configure the trigger selector

cameras[i].TriggerSelector.SetValue(TriggerSelector_FrameStart);

// Select the mode for the selected trigger

cameras[i].TriggerMode.SetValue(TriggerMode_On);

// Configure the source for the selected trigger

cameras[i].TriggerSource.SetValue(TriggerSource_Action1);

// Specify the action device key

cameras[i].ActionDeviceKey.SetValue(4711);

// In this example, all cameras will be in the same group

cameras[i].ActionGroupKey.SetValue(1);

// Specify the action group mask

// In this example, all cameras will respond to any mask other than 0

cameras[i].ActionGroupMask.SetValue(0xffffffff);

}

//--- End of camera setup ---

// Send an action command to the cameras

GigeTL->IssueActionCommand(4711, 1, 0xffffffff, "192.168.1.255");

Auto Functions

Auto functions are particularly useful to maintain good image quality when imaging conditions change frequently. Most auto functions are the automatic counterparts to setting a parameter manually. For example, the Gain Auto feature controls the GainRaw parameter automatically within specified limits.

- Gain Auto

- Exposure Auto

- Balance White Auto

- Pattern Removal Auto

The individual auto functions can be used at the same time. If you are using Exposure Auto and Gain Auto at the same time, you can use the Auto Function Profile feature to specify how the effects of gain and exposure time are balanced. The pixel data for the auto functions can come from one or multiple Auto Function ROIs. To operate properly, at least one Auto Function ROI must be assigned to each auto function.

Auto Function Profile

The Auto Function Profile camera feature allows you to specify how gain and exposure time are balanced when the camera is making automatic adjustments.

To set the auto function profile, set the AutoFunctionProfile parameter to one of the following values:

- GainMinimum (Minimize Gain): Gain is kept as low as possible during the automatic adjustment process.

- ExposureMinimum (Minimize Exposure Time): Exposure time is kept as low as possible during the automatic adjustment process.

- Smart (if available): Gain is kept as low as possible and the frame rate is kept as high as possible during automatic adjustments. This is a four-step process:

  1. The camera adjusts the exposure time to achieve the target brightness value.

  2. If the exposure time must be increased to achieve the target brightness value, the camera increases the exposure time until a lowered frame rate is detected.

  3. If a lowered frame rate is detected, the camera stops increasing the exposure time and increases gain until the AutoGainRawUpperLimit value is reached.

  4. If the AutoGainRawUpperLimit value is reached, the camera stops increasing gain and increases the exposure time until the target brightness value is reached. This results in a lower frame rate.

- AntiFlicker50Hz and AntiFlicker60Hz (if available): Gain and exposure time are optimized to reduce flickering. If the camera is operating in an environment where the lighting flickers at a 50-Hz or a 60-Hz rate, the flickering lights can cause significant changes in brightness from image to image. Enabling the anti-flicker profile may reduce the effect of the flickering in the captured images. Choose the frequency (50 Hz or 60 Hz) according to your local power line frequency (e.g., North America: 60 Hz, Europe: 50 Hz).

// Set the auto function profile to Gain Minimum

camera.Parameters[PLCamera.AutoFunctionProfile].SetValue(PLCamera.AutoFunctionProfile.GainMinimum);

// Set the auto function profile to Exposure Minimum

camera.Parameters[PLCamera.AutoFunctionProfile].SetValue(PLCamera.AutoFunctionProfile.ExposureMinimum);

// Enable Gain and Exposure Auto auto functions and set the operating mode to Continuous

camera.Parameters[PLCamera.GainAuto].SetValue(PLCamera.GainAuto.Continuous);

camera.Parameters[PLCamera.ExposureAuto].SetValue(PLCamera.ExposureAuto.Continuous);

Auto Function ROI

The Auto Function ROI camera feature allows you to specify the part of the sensor array that you want to use to control the camera's auto functions. You can create several Auto Function ROIs, each occupying different parts of the sensor array. The settings for the Auto Function ROI feature are independent of the settings for the Image ROI feature.

Changing Position and Size of an Auto Function ROI

By default, all Auto Function ROIs are set to the full resolution of the camera's sensor. However, you can change their positions and sizes as required. To change the position and size of an Auto Function ROI:

- Set the AutoFunctionAOISelector parameter to one of the available Auto Function ROIs, e.g., AOI1.

- Enter values for the following parameters to specify the position of the Auto Function ROI selected:

- AutoFunctionAOIOffsetX

- AutoFunctionAOIOffsetY

- Enter values for the following parameters to specify the size of the Auto Function ROI selected:

- AutoFunctionAOIWidth

- AutoFunctionAOIHeight

The position of an Auto Function ROI is specified based on the rows and columns of the sensor array.

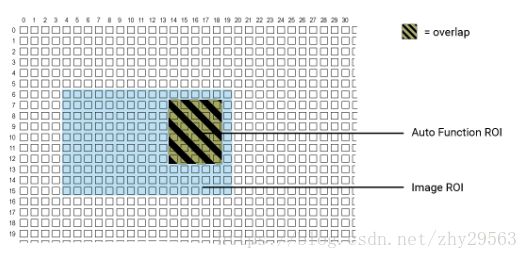

Example: Assume that you have selected Auto Function ROI 1 and specified the following settings:

- AutoFunctionAOIOffsetX = 14

- AutoFunctionAOIOffsetY = 7

- AutoFunctionAOIWidth = 5

- AutoFunctionAOIHeight = 6

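This creates an Auto Function ROI 1 whose top-left corner is offset by 14 columns and 7 rows from the sensor origin and which is 5 columns wide and 6 rows high.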

Only the pixel data from the area of overlap between the Auto Function ROI and the Image ROI will be used by the auto function assigned to it.

- If the Binning feature is enabled, the Auto Function ROI settings refer to the binned lines and columns and not to the physical lines in the sensor.

- If the Reverse X or Reverse Y feature or both are enabled, the position of the Auto Function ROI relative to the sensor remains the same. As a consequence, different regions of the image will be controlled depending on whether or not Reverse X, Reverse Y or both are enabled.

Assigning Auto Functions

By default, each Auto Function ROI is assigned to a specific auto function. For example, the pixel data from Auto Function ROI 2 is used to control the Balance White Auto auto function.

On some camera models, the default assignments can be changed. To do so:

- Set the AutoFunctionAOISelector parameter to one of the available Auto Function ROIs, e.g., AOI1.

- Set the AutoFunctionAOIUsageWhiteBalance parameter to true if you want to assign Balance White Auto to the Auto Function ROI selected.

- Set the AutoFunctionAOIUsageIntensity parameter to true if you want to assign Exposure Auto and Gain Auto to the Auto Function ROI selected. (Exposure Auto and Gain Auto always work together.)

- If you assign one auto function to multiple Auto Function ROIs, the pixel data from all selected Auto Function ROIs will be used for the auto function.

- If you assign multiple auto functions to one Auto Function ROI, the pixel data from the Auto Function ROI will be used for all auto functions selected.

Exposure Auto and Gain Auto Assignments Work Together

When making Auto Function ROI assignments, the Gain Auto auto function and the Exposure Auto auto function always work together. They are considered as a single auto function named "Intensity" or "Brightness", depending on your camera model.

This does not imply, however, that Gain Auto and Exposure Auto must always be enabled at the same time.

Guidelines

When you are setting an Auto Function ROI, you must follow these guidelines:

| Guideline | Example |
|---|---|
| AutoFunctionAOIOffsetX + AutoFunctionAOIWidth ≤ Width of camera sensor | Camera with a 1920 x 1080 pixel sensor: AutoFunctionAOIOffsetX + AutoFunctionAOIWidth ≤ 1920 |
| AutoFunctionAOIOffsetY + AutoFunctionAOIHeight ≤ Height of camera sensor | Camera with a 1920 x 1080 pixel sensor: AutoFunctionAOIOffsetY + AutoFunctionAOIHeight ≤ 1080 |
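Both guidelines can be checked before writing the values to the camera. The following Python sketch shows the two inequalities as a single test. The function name is an illustrative assumption, and the camera itself may enforce further constraints (such as value increments) that are not modeled here.

```python
def auto_function_roi_fits(offset_x, offset_y, width, height,
                           sensor_width, sensor_height):
    """Check the two guidelines: offset + size must not exceed the sensor size."""
    return (offset_x + width <= sensor_width
            and offset_y + height <= sensor_height)


# Camera with a 1920 x 1080 pixel sensor, as in the table above.
print(auto_function_roi_fits(10, 10, 500, 400, 1920, 1080))     # True
print(auto_function_roi_fits(1500, 10, 500, 400, 1920, 1080))   # False: 1500 + 500 > 1920
```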

Overlap Between Auto Function ROI and Image ROI

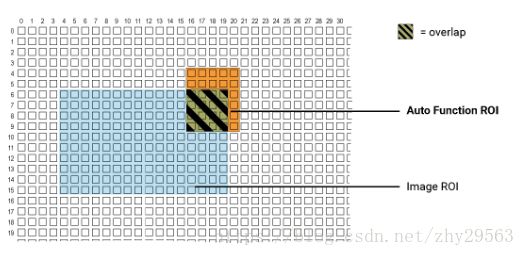

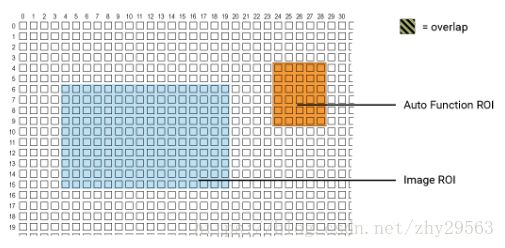

The size and position of an Auto Function ROI can be identical to the size and position of the Image ROI, but this is not a requirement. For an auto function to work, it is sufficient if both ROIs overlap each other partially.

The overlap between Auto Function ROI and Image ROI determines whether and to what extent the auto function will control the related image property. Only the pixel data from the areas of overlap will be used by the auto function to control the image property of the entire image.

- If the Auto Function ROI is completely included in the Image ROI, the pixel data from the Auto Function ROI will be used to control the image property.

- If the Image ROI is completely included in the Auto Function ROI, only the pixel data from the Image ROI will be used to control the image property.

- If the Auto Function ROI overlaps the Image ROI only partially, only the pixel data from the area of partial overlap will be used to control the image property.

- If the Auto Function ROI does not overlap the Image ROI, the related auto function will not work.

Basler strongly recommends completely including the Auto Function ROI within the Image ROI or choosing identical positions and sizes for Auto Function ROI and Image ROI.
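The four overlap cases listed above reduce to a rectangle intersection. The Python sketch below computes the region whose pixel data would be used by the auto function. The tuple layout and function name are assumptions for illustration only.

```python
def roi_overlap(auto_roi, image_roi):
    """Return the overlapping rectangle as (x, y, width, height), or None.

    Both ROIs are (offset_x, offset_y, width, height) tuples.
    """
    ax, ay, aw, ah = auto_roi
    ix, iy, iw, ih = image_roi
    left, top = max(ax, ix), max(ay, iy)
    right = min(ax + aw, ix + iw)
    bottom = min(ay + ah, iy + ih)
    if right <= left or bottom <= top:
        return None               # no overlap: the auto function will not work
    return (left, top, right - left, bottom - top)


image_roi = (100, 100, 800, 600)
print(roi_overlap((200, 200, 100, 100), image_roi))   # (200, 200, 100, 100)
print(roi_overlap((0, 0, 2000, 2000), image_roi))     # (100, 100, 800, 600)
print(roi_overlap((0, 0, 300, 300), image_roi))       # (100, 100, 200, 200)
print(roi_overlap((0, 0, 50, 50), image_roi))         # None
```

The four calls correspond, in order, to an Auto Function ROI inside the Image ROI, an Image ROI inside the Auto Function ROI, a partial overlap, and no overlap.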

Specifics

| Camera Model | Auto Function ROIs | Default Assignments | Assignments Can Be Changed |
|---|---|---|---|
| All ace GigE camera models | AOI 1, AOI 2 | AOI 1: Intensity (Gain Auto + Exposure Auto); AOI 2: White Balance (Balance White Auto) | Yes |

// Select Auto Function AOI 1

camera.Parameters[PLCamera.AutoFunctionAOISelector].SetValue(PLCamera.AutoFunctionAOISelector.AOI1);

// Specify position and size of the Auto Function ROI selected

camera.Parameters[PLCamera.AutoFunctionAOIOffsetX].SetValue(10);

camera.Parameters[PLCamera.AutoFunctionAOIOffsetY].SetValue(10);

camera.Parameters[PLCamera.AutoFunctionAOIWidth].SetValue(500);

camera.Parameters[PLCamera.AutoFunctionAOIHeight].SetValue(400);

// Enable Balance White Auto for the Auto Function ROI selected

camera.Parameters[PLCamera.AutoFunctionAOIUsageWhiteBalance].SetValue(true);

// Enable the 'Intensity' auto function (Gain Auto + Exposure Auto)

// for the Auto Function ROI selected

// Note: On some camera models, you must use AutoFunctionROIUseIntensity instead

camera.Parameters[PLCamera.AutoFunctionAOIUsageIntensity].SetValue(true);

Binning

The Binning camera feature allows you to combine sensor pixel values into a single value. This may increase the signal-to-noise ratio or the camera's response to light. To configure binning, the camera must be idle, i.e., not capturing images.

On monochrome cameras, the camera combines (sums or averages) the pixel values of directly adjacent pixels.

On color cameras, the camera combines (sums or averages) the pixel values of adjacent pixels of the same color.

Specifying a Binning Factor

You can choose between horizontal and vertical binning. You can use both binning directions at the same time or configure only vertical or only horizontal binning.

- With horizontal binning, adjacent pixels from a certain number of columns in the image sensor are combined.

- With vertical binning, adjacent pixels from a certain number of rows in the image sensor are combined.

To specify a horizontal binning factor, enter a value for the BinningHorizontal parameter. To specify the vertical binning factor, enter a value for the BinningVertical parameter. The value of the parameters defines the binning factor. Depending on your camera model, the following values are available:

- 1: Disables binning.

- 2, 3, 4: Specifies the number of binned columns or rows (2, 3, or 4).

For example, entering a value of 3 for BinningHorizontal enables horizontal binning by 3. You can use horizontal and vertical binning at the same time. However, if you use different binning factors, objects will appear distorted in the image.

Choosing a Binning Mode

To select the binning mode for horizontal binning, set the BinningHorizontalMode parameter. To select the binning mode for vertical binning, set the BinningVerticalMode parameter. The binning mode defines how pixels are combined when binning is enabled. Depending on your camera model, the following binning modes are available:

- Sum: The values of the affected pixels are summed. This improves the signal-to-noise ratio, but also increases the camera’s response to light.

- Average: The values of the affected pixels are averaged. This greatly improves the signal-to-noise ratio without affecting the camera’s response to light.

Both modes reduce the amount of image data to be transferred. This may increase the camera's frame rate.
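To make the difference between the two modes concrete, the Python sketch below bins a small monochrome image held in a list of lists. It is a simplified model under stated assumptions: the image size is a multiple of the binning factors, the same mode is used in both directions, averages are rounded down, and clipping of summed values at the maximum pixel value is not modeled.

```python
def bin_pixels(image, h_factor, v_factor, mode):
    """Bin a 2D list of gray values; mode is "Sum" or "Average"."""
    rows, cols = len(image), len(image[0])
    binned = []
    for r in range(0, rows, v_factor):
        out_row = []
        for c in range(0, cols, h_factor):
            # Collect the block of adjacent pixels that is combined into one value.
            block = [image[r + dr][c + dc]
                     for dr in range(v_factor) for dc in range(h_factor)]
            total = sum(block)
            out_row.append(total if mode == "Sum" else total // len(block))
        binned.append(out_row)
    return binned


image = [[10, 12, 20, 22],
         [14, 16, 24, 26]]
print(bin_pixels(image, 2, 2, "Sum"))       # [[52, 92]]
print(bin_pixels(image, 2, 2, "Average"))   # [[13, 23]]
```

The summed values are four times brighter than the averaged ones, which is the increased response to light described above, while both results contain a quarter of the original pixel count.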

Considerations When Using Binning

- Effect on ROI Settings

When you are using binning, the settings for your Image ROIs and Auto Function ROIs refer to the binned rows and columns. For example, assume that you are using a camera with a 1280 x 960 sensor. Horizontal binning by 2 and vertical binning by 2 are enabled. In this case, the maximum ROI width is 640 and the maximum ROI height is 480.

- Increased Response to Light

Using binning with the binning mode set to Sum can significantly increase the camera’s response to light. When pixel values are summed, the acquired images may look overexposed. If this is the case, you can reduce the lens aperture, the intensity of your illumination, the camera’s Exposure Time setting, or the camera’s Gain setting.

- Reduced Resolution

Using binning effectively reduces the resolution of the camera’s imaging sensor. For example, if you enable horizontal binning by 2 and vertical binning by 2 on a camera with a 1280 x 960 sensor, the effective resolution of the sensor is reduced to 640 x 480.

- Possible Image Distortion

Objects will only appear undistorted in the image if the numbers of binned lines and columns are equal. With all other combinations, objects will appear distorted. For example, if you combine vertical binning by 2 with horizontal binning by 4, the target objects will appear squashed.
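The resolution and distortion considerations above are simple arithmetic. The following Python sketch reproduces the 1280 x 960 example. The function names are illustrative, and integer division is an assumption for sensor sizes that are not exact multiples of the binning factor.

```python
def binned_resolution(sensor_width, sensor_height, h_factor, v_factor):
    """Maximum ROI size once binning is enabled (integer division assumed)."""
    return sensor_width // h_factor, sensor_height // v_factor


def is_distorted(h_factor, v_factor):
    """Objects keep their proportions only if both factors are equal."""
    return h_factor != v_factor


print(binned_resolution(1280, 960, 2, 2))   # (640, 480)
print(is_distorted(2, 2))                   # False
print(is_distorted(4, 2))                   # True
```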

Binning Factors

| Camera Model | Horizontal Binning Factors | Vertical Binning Factors | Allowed Combinations (H x V Binning) |
|---|---|---|---|
| acA2500-20gm | 1, 2, 3, 4 | 1, 2, 3, 4 | All combinations |

Binning Modes

| Camera Model | Horizontal Binning Modes | Vertical Binning Modes | Allowed Combinations (H x V Binning Mode) |
|---|---|---|---|
| acA2500-20gm | Average, Sum | Average, Sum | All combinations |

// Enable horizontal binning by 4

camera.Parameters[PLCamera.BinningHorizontal].SetValue(4);

// Enable vertical binning by 2

camera.Parameters[PLCamera.BinningVertical].SetValue(2);

// Set the horizontal binning mode to Average

camera.Parameters[PLCamera.BinningHorizontalMode].SetValue(PLCamera.BinningHorizontalMode.Average);

// Set the vertical binning mode to Sum

camera.Parameters[PLCamera.BinningVerticalMode].SetValue(PLCamera.BinningVerticalMode.Sum);

Black Level

The Black Level camera feature allows you to change the overall brightness of an image by changing the gray values of the pixels by a specified amount. For example, if you set a black level that results in a gray value increase of 3, the gray value of each pixel in the image is increased by 3 (resulting gray value = original gray value + 3).

To adjust the black level, enter a value for the BlackLevel parameter (named BlackLevelRaw in the code sample for this camera). The minimum black level setting is 0.

| Camera Model | Maximum Black Level [DN] |
|---|---|
| acA2500-20gm | 255 |

Black Level Effect

| Camera Model | Change in BlackLevel Parameter Value | Resulting Change in Gray Value |
|---|---|---|
| acA2500-20gm | 8-bit pixel format: +/- 4; 10-bit pixel format: +/- 1; 12-bit pixel format: +/- 1 | +/- 1 |
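Read as a conversion rule, the table says how many parameter steps are needed for one gray value step in each pixel format. The Python sketch below applies that rule to estimate the gray value change relative to a black level of 0. The function name is hypothetical, and rounding down is an assumption because the source does not state how the camera rounds.

```python
# Parameter steps needed for a gray value change of 1, per the table above.
PARAM_STEPS_PER_GRAY_LEVEL = {8: 4, 10: 1, 12: 1}


def gray_value_offset(black_level, pixel_bits):
    """Approximate gray value increase relative to a black level of 0."""
    if not 0 <= black_level <= 255:
        raise ValueError("black level must be between 0 and 255")
    return black_level // PARAM_STEPS_PER_GRAY_LEVEL[pixel_bits]


print(gray_value_offset(32, 8))    # 8
print(gray_value_offset(32, 12))   # 32
```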

// Set the black level to 32

camera.Parameters[PLCamera.BlackLevelRaw].SetValue(32);

Center X and Center Y

Enabling Center X

To enable Center X, set the CenterX parameter to true. The camera automatically adjusts the OffsetX parameter value to center the Image ROI horizontally. The OffsetX parameter becomes read-only.

Enabling Center Y

To enable Center Y, set the CenterY parameter to true. The camera automatically adjusts the OffsetY parameter value to center the Image ROI vertically. The OffsetY parameter becomes read-only.

- You can use Center X and Center Y at the same time.

- When you enable Center X or Center Y, the camera doesn't save the current OffsetX and OffsetY parameter values. To restore the original settings, you must adjust the OffsetX and OffsetY parameters manually.
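The offset that the camera chooses can be approximated as half of the unused sensor width or height. The Python sketch below shows that calculation. Plain integer centering is an assumption, since a real camera may also round the result to its offset increment, and the sensor and ROI sizes are hypothetical sample values.

```python
def centered_offset(sensor_size, roi_size):
    """Offset that centers an ROI of roi_size on a sensor of sensor_size."""
    if roi_size > sensor_size:
        raise ValueError("ROI is larger than the sensor")
    return (sensor_size - roi_size) // 2


# Hypothetical 1920 x 1080 sensor with a 640 x 480 Image ROI.
print(centered_offset(1920, 640))   # 640
print(centered_offset(1080, 480))   # 300
```

Because the camera does not save the previous offsets, an application that needs to restore them later should read and store OffsetX and OffsetY before enabling Center X or Center Y.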

// Enable Center X

camera.Parameters[PLCamera.CenterX].SetValue(true);

// Enable Center Y

camera.Parameters[PLCamera.CenterY].SetValue(true);

Counter

The Counter camera feature allows you to count certain camera events, e.g., the number of images acquired. You can get the current value of a counter by retrieving the related data chunk. If your camera supports the Counter feature, multiple counters are available. With one exception (see below), every counter has the following characteristics:

- It starts at 0.

- It counts a specific type of event (the "event source"). For example, it counts the number of images acquired. The event source is preset and can't be changed.

- Its current value can be determined by retrieving the related data chunk, e.g., the Frame Counter chunk.

- Its maximum value is 4 294 967 295. After reaching the maximum value, the counter is reset to 0 and then continues counting.

- It can be reset manually.

- It is reset to 0 whenever the camera is powered off and on again.

Exception: On some camera models, Counter 2 can be used to control the sequencer. This counter has different characteristics due to its specific purpose.
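The general counter characteristics listed above, in particular the rollover at the maximum value, can be modeled in a few lines. The Python sketch below is a toy model and not pylon code. The class and method names are illustrative assumptions.

```python
COUNTER_MAX = 4_294_967_295   # 2**32 - 1


class Counter:
    """Toy model of a camera counter (not pylon code)."""

    def __init__(self):
        self.value = 0            # starts at 0 (also after a power cycle)

    def count_event(self):
        # After reaching the maximum, the counter is reset to 0 and continues.
        self.value = 0 if self.value == COUNTER_MAX else self.value + 1

    def reset(self):
        self.value = 0            # manual reset


counter = Counter()
counter.value = COUNTER_MAX - 1
counter.count_event()
print(counter.value)   # 4294967295
counter.count_event()
print(counter.value)   # 0
```

An application that computes differences between two counter readings should therefore account for a possible rollover between them.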

Getting the Value of a Counter

To get the current value of a counter, retrieve the related data chunk using the Data Chunks feature.

Resetting a Counter

To reset a counter:

1. Set the CounterSelector parameter to the desired counter, e.g., Counter2.

2. Set the CounterResetSource parameter to a software source (Software) or to a hardware source (e.g., Line1).

3. Depending on the source selected in step 2, do one of the following:

   - If you set a software source, execute the CounterReset command.

   - If you set a hardware source, apply an electrical signal to one of the camera's input lines.

Additional Parameters

- The CounterEventSource parameter allows you to get the event source of the currently selected counter, i.e., determine which type of event increases the counter.

- The CounterResetActivation parameter currently serves no function. It is preset to RisingEdge. This means that if the counter is configured for hardware reset, the counter resets when the hardware trigger signal rises.

| Camera Model | Counter Name | Function | Event Source | Related Data Chunk | Can Be Reset |
|---|---|---|---|---|---|
| All ace GigE camera models | Counter 1 | Counts number of hardware frame start trigger signals received, regardless of whether they cause image acquisitions or not | Frame Trigger | Trigger Input Counter Chunk | Yes |
| All ace GigE camera models | Counter 2 | Counts number of acquired images | Frame Start | Frame Counter Chunk | Yes |

// Reset Counter 1 via software command

camera.Parameters[PLCamera.CounterSelector].SetValue(PLCamera.CounterSelector.Counter1);

camera.Parameters[PLCamera.CounterResetSource].SetValue(PLCamera.CounterResetSource.Software);

camera.Parameters[PLCamera.CounterReset].Execute();

// Get the event source of Counter 1

camera.Parameters[PLCamera.CounterSelector].SetValue(PLCamera.CounterSelector.Counter1);

string e = camera.Parameters[PLCamera.CounterEventSource].GetValue();

Data Chunks

Data chunks allow you to add supplementary information to individual image acquisitions. The desired supplementary information is generated and appended as data chunks to the image data. Image data is also considered a "chunk". This "image data chunk" can't be disabled and is always the first chunk transmitted by the camera. If one or more data chunks are enabled, these chunks are transmitted as chunk 2, 3, and so on.

The figure below shows a set of chunks with the leading image data chunk and appended data chunks. The example assumes that the CRC checksum chunk feature is enabled.

After data chunks have been transmitted to the computer, they must be retrieved to obtain their information. The exact procedure depends on your camera model and the programming language used for your application. For more information about retrieving data chunks, see the Programmer's Guide and Reference Documentation delivered with the Basler pylon Camera Software Suite.

Additional Metadata

Besides the data chunks, the camera adds additional metadata to individual images, e.g., the image height, image width, the Image ROI offset, and the pixel format used. This information can be retrieved by accessing the grab result data via the pylon API.

If all of the following conditions are met, the grab result data doesn't contain any useful information (image height, image width, etc. will be set to -1):

- You are using a Basler ace classic GigE camera.

- You are using the pylon C API, the pylon C .NET API, or the pylon C++ low level API.

- The ChunkModeActive parameter is set to true.

In this case, you must retrieve the additional metadata using the pylon chunk parser. For more information, see the code samples in the Programmer's Guide and Reference Documentation delivered with the Basler pylon Camera Software Suite.

Enabling and Retrieving Data Chunks

1. Set the ChunkModeActive parameter to true.

2. Set the ChunkSelector parameter to the kind of chunk that you want to enable:

   - GainAll

   - ExposureTime

   - Timestamp

   - LineStatusAll

   - TriggerInputCounter (if available)

   - CounterValue (if available)

   - FrameCounter (if available)

   - SequenceSetIndex or SequencerSetActive (if available)

   - PayloadCRC16

3. Enable the selected chunk by setting the ChunkEnable parameter to true.

4. Repeat steps 2 and 3 for every desired chunk.

5. Implement chunk retrieval in your application.

For information about implementing chunk retrieval, see the Programmer's Guide and Reference Documentation delivered with the Basler pylon Camera Software Suite.

Data chunks can also be viewed in the pylon Viewer.

Available Data Chunks

Gain Chunk (= GainAll Chunk)

If this chunk is available and enabled, the camera appends the gain used for image acquisition to every image. The data chunk includes the GainRaw parameter value.

Exposure Time Chunk

If this chunk is enabled, the camera appends the exposure time used for image acquisition to every image. The data chunk includes the ExposureTimeAbs parameter value. When using the Trigger Width exposure mode, the Exposure Time chunk feature is not available.

Timestamp Chunk

If this chunk is enabled, the camera appends the internal timestamp (in ticks) of the moment when image acquisition was triggered to every image.
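Because the timestamp is reported in ticks, it usually has to be converted before it can be compared with wall-clock intervals. The following is a minimal sketch of that conversion, not part of the pylon API. The class and method names are hypothetical, and the tick frequency of 125 MHz used in the usage note is an assumed example value; read the actual tick frequency from your camera.

```csharp
using System;

public static class TimestampTicks
{
    // Converts a tick count (or the difference between two timestamps)
    // to microseconds. tickFrequencyHz is the number of ticks per second
    // and must be obtained from the camera; it is not defined here.
    public static double TicksToMicroseconds(long ticks, long tickFrequencyHz)
    {
        if (tickFrequencyHz <= 0)
        {
            throw new ArgumentOutOfRangeException(nameof(tickFrequencyHz));
        }
        return ticks * 1000000.0 / tickFrequencyHz;
    }
}
```

For example, with an assumed tick frequency of 125000000 Hz, two consecutive images whose timestamps differ by 6250000 ticks were triggered 50000 µs apart.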

Line Status All Chunk

If this chunk is enabled, the camera appends the status of all I/O lines at the moment when image acquisition was triggered to every image.

The data chunk includes the LineStatusAll parameter value.

Trigger Input Counter Chunk

If this chunk is available and enabled, the camera appends the number of hardware frame start trigger signals received to every image.

To do so, the camera retrieves the current value of the Counter 1 counter. On cameras with the Trigger Input Counter chunk, Counter 1 counts the number of hardware trigger signals received.

To manually reset the counter, reset Counter 1.

The trigger input counter only counts hardware trigger signals. If the camera is configured for software triggering or free run, the counter value will not increase.

Counter Value Chunk

If this chunk is available and enabled, the camera appends the number of acquired images to every image.

To do so, the camera retrieves the current value of the Counter 1 counter. On cameras with the Counter Value chunk, Counter 1 counts the number of acquired images.

To manually reset the counter, reset Counter 1.

Frame Counter Chunk

If this chunk is available and enabled, the camera appends the number of acquired images to every image.

To do so, the camera retrieves the current value of the Counter 2 counter. On cameras with the Frame Counter chunk, Counter 2 counts the number of acquired images.

To manually reset the counter, reset Counter 2.

Numbers in the counting sequence may be skipped when the acquisition mode is changed from Continuous to Single Frame. Numbers may also be skipped when overtriggering occurs.
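Since numbers in the counting sequence may be skipped, an application that relies on the frame counter may want to detect gaps. The sketch below shows one way to do this on a list of counter values that has already been retrieved from the chunks. The class and method names are hypothetical, and the code does not use the pylon API.

```csharp
using System;
using System.Collections.Generic;

public static class FrameCounterGaps
{
    // Returns the counter values missing between consecutive entries.
    public static List<long> FindSkipped(IReadOnlyList<long> counterValues)
    {
        var skipped = new List<long>();
        for (int i = 1; i < counterValues.Count; i++)
        {
            long previous = counterValues[i - 1];
            long current = counterValues[i];

            // A value that does not increase is treated as a counter reset,
            // so no gap is reported for it.
            if (current <= previous)
            {
                continue;
            }
            for (long missing = previous + 1; missing < current; missing++)
            {
                skipped.Add(missing);
            }
        }
        return skipped;
    }
}
```

For the counter values 1, 2, 4, 5, 8, the method reports 3, 6, and 7 as skipped.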

Sequencer Set Active Chunk (= Sequence Set Index Chunk)

If this chunk is available and enabled, the camera appends the sequencer set used for image acquisition to every image.

The data chunk includes the SequencerSetActive or SequenceSetIndex parameter value (depending on your camera model).

Enabling this chunk is only useful if the camera's Sequencer feature is used for image acquisition.

CRC Checksum Chunk Feature

If this chunk is enabled, the camera appends a CRC (Cyclic Redundancy Check) checksum to every image.

The checksum is calculated using the X-modem method and includes the image data and all appended chunks, if any, except for the CRC chunk itself.

The CRC checksum chunk is always the last chunk appended to image data.
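To illustrate what "X-modem method" refers to, here is a minimal sketch of the commonly used CRC-16/XMODEM algorithm (polynomial 0x1021, initial value 0x0000, no bit reflection, no final XOR). The class name is hypothetical. How the camera stores the checksum inside the chunk is not described here, so treat this as an illustration of the algorithm rather than a drop-in verification routine.

```csharp
using System;

public static class Crc16Xmodem
{
    // Computes CRC-16/XMODEM bit by bit over the given buffer.
    public static ushort Compute(byte[] data)
    {
        ushort crc = 0;
        foreach (byte b in data)
        {
            // Bring the next byte into the high byte of the register.
            crc ^= (ushort)(b << 8);
            for (int bit = 0; bit < 8; bit++)
            {
                bool highBitSet = (crc & 0x8000) != 0;
                int shifted = (crc << 1) & 0xFFFF;
                crc = (ushort)(highBitSet ? shifted ^ 0x1021 : shifted);
            }
        }
        return crc;
    }
}
```

The standard check value for this algorithm is 0x31C3 for the ASCII string "123456789", which can be used to validate an implementation.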

Specifics

| Camera Model | Available Data Chunks |
|---|---|
| All ace GigE camera models | |

// Enable data chunks

camera.Parameters[PLCamera.ChunkModeActive].SetValue(true);

// Select and enable Gain All chunk

camera.Parameters[PLCamera.ChunkSelector].SetValue(PLCamera.ChunkSelector.GainAll);

camera.Parameters[PLCamera.ChunkEnable].SetValue(true);

// Select and enable Exposure Time chunk

camera.Parameters[PLCamera.ChunkSelector].SetValue(PLCamera.ChunkSelector.ExposureTime);

camera.Parameters[PLCamera.ChunkEnable].SetValue(true);

// Select and enable Timestamp chunk

camera.Parameters[PLCamera.ChunkSelector].SetValue(PLCamera.ChunkSelector.Timestamp);

camera.Parameters[PLCamera.ChunkEnable].SetValue(true);

// Select and enable Line Status All chunk

camera.Parameters[PLCamera.ChunkSelector].SetValue(PLCamera.ChunkSelector.LineStatusAll);

camera.Parameters[PLCamera.ChunkEnable].SetValue(true);

// Select and enable Trigger Input Counter chunk

camera.Parameters[PLCamera.ChunkSelector].SetValue(PLCamera.ChunkSelector.TriggerInputCounter);

camera.Parameters[PLCamera.ChunkEnable].SetValue(true);

// Select and enable Frame Counter chunk

camera.Parameters[PLCamera.ChunkSelector].SetValue(PLCamera.ChunkSelector.FrameCounter);

camera.Parameters[PLCamera.ChunkEnable].SetValue(true);

// Select and enable Sequence Set Index chunk

camera.Parameters[PLCamera.ChunkSelector].SetValue(PLCamera.ChunkSelector.SequenceSetIndex);

camera.Parameters[PLCamera.ChunkEnable].SetValue(true);

// Select and enable CRC checksum chunk

camera.Parameters[PLCamera.ChunkSelector].SetValue(PLCamera.ChunkSelector.PayloadCRC16);

camera.Parameters[PLCamera.ChunkEnable].SetValue(true);

Device Information Parameters

Standard Device Information Parameters

All Basler cameras mentioned in this documentation provide the following device information parameters:

| Parameter Name | Access | Description |
|---|---|---|
| DeviceVendorName | R | The camera's vendor name, e.g., Basler. |
| DeviceModelName | R | The camera's model name, e.g., acA3800-14um. |
| DeviceManufacturerInfo | R | The camera's manufacturer name. Usually contains an empty string. |
| DeviceVersion | R | The camera's version number. |
| DeviceFirmwareVersion | R | The camera's firmware version number. |
| DeviceID | R | The camera's serial number. |
| DeviceUserID | RW | Used to assign a user-defined name to a camera. The name is displayed in the Basler pylon Viewer and the Basler pylon USB Configurator. The name is also visible in the "friendly name" field of the device information objects returned by pylon's device enumeration procedure. |
| DeviceScanType | R | The scan type of the camera's sensor (Areascan or Linescan). |
| SensorWidth | R | The actual width of the camera's sensor in pixels. |
| SensorHeight | R | The actual height of the camera's sensor in pixels. |
| WidthMax | R | The maximum allowed width of the Image ROI in pixels. The value adapts to the current settings for Binning, Decimation, or Scaling (if available). |
| HeightMax | R | The maximum allowed height of the Image ROI in pixels. The value adapts to the current settings for Binning, Decimation, or Scaling (if available). |

Additional Device Information Parameters

Depending on your camera model, the following additional device information parameters are available:

| Parameter Name | Access | Description |
|---|---|---|
| DeviceSFNCVersionMajor | R | If available, the major version of the Standard Features Naming Convention (SFNC) that the camera complies with, e.g., "2" for SFNC 2.3.1. |
| DeviceSFNCVersionMinor | R | If available, the minor version of the Standard Features Naming Convention (SFNC) that the camera complies with, e.g., "3" for SFNC 2.3.1. |
| DeviceSFNCVersionSubMinor | R | If available, the subminor version of the Standard Features Naming Convention (SFNC) that the camera complies with, e.g., "1" for SFNC 2.3.1. |
| DeviceLinkSelector | RW | If available, allows you to select the link for data transmission. The parameter is preset to 0. Do not change this parameter. |
| DeviceLinkSpeed | R | If available, the bandwidth negotiated on the specified link in bytes per second. |
| DeviceLinkThroughputLimitMode | RW | If available, allows you to limit the maximum available bandwidth for data transmission. To enable the limit, set the parameter to On. The bandwidth is limited to the DeviceLinkThroughputLimit parameter value. |
| DeviceLinkThroughputLimit | RW | If available, specifies the maximum available bandwidth for data transmission in bytes per second. To enable the limit, set the DeviceLinkThroughputLimitMode to On. |
| DeviceLinkCurrentThroughput | R | If available, the actual bandwidth currently used for data transmission in bytes per second. |
| DeviceIndicatorMode | RW | If available, allows you to turn the camera's status LED on or off. To turn the status LED on, set the parameter to Active. To turn the status LED off, set the parameter to Inactive. |

// Example: Getting some of the camera's device information parameters

// Get the camera's vendor name

string s = camera.Parameters[PLCamera.DeviceVendorName].GetValue();

// Get the camera's firmware version

s = camera.Parameters[PLCamera.DeviceFirmwareVersion].GetValue();

// Get the camera's model name

s = camera.Parameters[PLCamera.DeviceModelName].GetValue();

// Get the width of the camera's sensor

Int64 i = camera.Parameters[PLCamera.SensorWidth].GetValue();

Error Codes

The camera can detect errors that you can correct yourself. If such an error occurs, the camera assigns an error code to this error and stores the error code in memory. After you have corrected the error, you can clear the error code from the list.

If several different errors have occurred, the camera stores the code for each type of error detected. The camera stores each code only once regardless of how many times it has detected the corresponding error.

Checking and Clearing Error Codes

Checking and clearing error codes is an iterative process, depending on how many errors have occurred.

- To check the last error code in the memory, get the value of the LastError parameter.

- Correct the corresponding error.

- To delete the last error code from the list of error codes, execute the ClearLastError command.

- Continue getting and deleting the last error code until the LastError parameter shows NoError.
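The iterative procedure above can be sketched as a simple loop. To keep the example self-contained, the camera access is passed in as two delegates; the class name, method name, and the iteration guard are hypothetical and not part of the pylon API. The string "NoError" is taken from the description above.

```csharp
using System;
using System.Collections.Generic;

public static class ErrorCodeDrain
{
    // Reads and clears error codes until "NoError" is reported.
    // getLastError stands in for reading the LastError parameter,
    // clearLastError for executing the ClearLastError command.
    public static List<string> DrainErrors(
        Func<string> getLastError,
        Action clearLastError,
        int maxIterations = 16)
    {
        var seen = new List<string>();
        for (int i = 0; i < maxIterations; i++)
        {
            string code = getLastError();
            if (code == "NoError")
            {
                break;
            }
            // Record the code; the application would correct the cause here.
            seen.Add(code);
            clearLastError();
        }
        return seen;
    }
}
```

With a real camera, the two delegates could wrap `camera.Parameters[PLCamera.LastError].GetValue()` and `camera.Parameters[PLCamera.ClearLastError].Execute()`. The guard on the number of iterations is a defensive choice so that the loop ends even if an error condition persists and its code reappears.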

Available Error Codes

| Error Code | Value | Meaning |
|---|---|---|
| 0 | No Error | The camera hasn't detected any errors since the last time the error memory was cleared. |
| 1 | Overtrigger | An overtrigger has occurred. |
| 2 | Userset | An error occurred when attempting to load a user set. Typically, this means that the user set contains an invalid value. Try loading a different user set. |
| 3 | Invalid Parameter | A parameter has been entered that is out of range or otherwise invalid. Typically, this error only occurs when the user sets parameters via direct register access. |
| 4 | Over Temperature | The camera is in the over temperature mode. This error indicates that an over temperature condition exists and that damage to camera components may occur. |
| 5 | Power Failure | This error indicates that the power supply is not sufficient. Check the power supply. |
| 6 | Insufficient Trigger Width | This error is reported in Trigger Width exposure mode when a trigger is shorter than the minimum exposure time. |

Specifics

| Camera Model | Available Error Codes |
|---|---|
| acA2500-20gm | 1, 2, 3, 4, 5, 6 |

// Get the value of the last error code in the memory

string lasterror = camera.Parameters[PLCamera.LastError].GetValue();

// Clear the value of the last error code in the memory

camera.Parameters[PLCamera.ClearLastError].Execute();

Event Notification

Enabling Event Notification

1. Set the EventSelector parameter to one of the following values:

   - FrameStart

   - FrameStartOvertrigger

   - FrameStartWait

   - AcquisitionStart

   - AcquisitionStartOvertrigger

   - AcquisitionStartWait

   - ExposureEnd

   - EventOverrun (if available)

   - CriticalTemperature (if available)

   - OverTemperature (if available)

   - ActionLate (if available)

2. Set the EventNotification parameter to On.

3. Repeat steps 1 and 2 for all types of event notifications that you want to enable.

4. Implement event handling in your application:

   - For a C++ sample implementation, see the "Grab_CameraEvents" and "Grab_CameraEvents_Usb" code samples in the C++ Programmer's Guide and Reference Documentation delivered with the Basler pylon Camera Software Suite.

   - For a C and C .NET sample implementation, see the "Events Sample" code sample in the C Programmer's Guide and Reference Documentation and the pylon C .NET Programmer's Guide and Reference Documentation delivered with the Basler pylon Camera Software Suite.

- Event messages are sent to the computer if there is sufficient bandwidth available. When the camera operates at high frame rates, event messages may be lost. There is no mechanism to monitor the number of event messages lost.

- After the camera has sent an event message, it waits for an acknowledgement. If no acknowledgement is received within a specified time frame, the camera resends the event message. If an acknowledgement is still not received, the resend mechanism repeats until the maximum number of retries is reached. If this maximum number of retries is reached, the message is dropped. While the camera is waiting for an acknowledgement, no new event messages can be transmitted.

- An event message is only useful when its cause still exists at the time when the event is received by the computer.

Available Events

Frame Start Event

The Frame Start event occurs whenever a Frame Start trigger has been generated by the camera (free run) or applied externally (triggered image acquisition).

When this event occurs, the corresponding message contains the following information:

- Timestamp: Time when the event was generated.

- Stream Channel Index: If available, the number of the image data stream used to transfer the image. On Basler cameras, this parameter is always set to 0.

The names of the parameters containing the information vary by camera model.

Frame Start Overtrigger Event

The Frame Start Overtrigger event occurs whenever the Frame Start trigger has been overtriggered. This happens if you apply a Frame Start trigger signal when the camera is not ready to receive the signal.

When this event occurs, the corresponding message contains the following information:

- Timestamp: Time when the event was generated.

- Stream Channel Index: If available, the number of the image data stream used to transfer the image. On Basler cameras, this parameter is always set to 0.

The names of the parameters containing the information vary by camera model.

Frame Start Wait Event

The Frame Start Wait event occurs whenever the camera is ready to receive a Frame Start trigger signal.

When this event occurs, the corresponding message contains the following information:

- Timestamp: Time when the event was generated.

- Stream Channel Index: If available, the number of the image data stream used to transfer the image. On Basler cameras, this parameter is always set to 0.

The names of the parameters containing the information vary by camera model.

Frame Burst Start (= Acquisition Start) Event

The Frame Burst Start event and the Acquisition Start event are identical, only their names differ. The naming depends on your camera model.

In the following, the term "Frame Burst Start event" refers to both.

The Frame Burst Start event occurs whenever a Frame Burst Start trigger has been generated by the camera (free run) or applied externally (triggered image acquisition).

When this event occurs, the corresponding message contains the following information:

- Timestamp: Time when the event was generated.

- Stream Channel Index: If available, the number of the image data stream used to transfer the image. On Basler cameras, this parameter is always set to 0.

The names of the parameters containing the information vary by camera model.

Frame Burst Start Overtrigger (= Acquisition Start Overtrigger) Event

The Frame Burst Start Overtrigger event and the Acquisition Start Overtrigger event are identical, only their names differ. The naming depends on your camera model.

In the following, the term "Frame Burst Start Overtrigger event" refers to both.

The Frame Burst Start Overtrigger event occurs whenever the Frame Burst Start trigger has been overtriggered. This happens if you apply a Frame Burst Start trigger signal when the camera is not ready to receive the signal.

When this event occurs, the corresponding message contains the following information:

- Timestamp: Time when the event was generated.

- Stream Channel Index: If available, the number of the image data stream used to transfer the image. On Basler cameras, this parameter is always set to 0.

The names of the parameters containing the information vary by camera model.

Frame Burst Start Wait (= Acquisition Start Wait) Event

The Frame Burst Start Wait event and the Acquisition Start Wait event are identical, only their names differ. The naming depends on your camera model.

In the following, the term "Frame Burst Start Wait event" refers to both.

The Frame Burst Start Wait event occurs whenever the camera is ready to receive a Frame Burst Start trigger signal.

When this event occurs, the corresponding message contains the following information:

- Timestamp: Time when the event was generated.

- Stream Channel Index: If available, the number of the image data stream used to transfer the image. On Basler cameras, this parameter is always set to 0.

The names of the parameters containing the information vary by camera model.

Exposure End Event

The Exposure End event occurs whenever an image has been exposed.

When this event occurs, the corresponding message contains the following information:

- Timestamp: Time when the event was generated.

- Frame ID: Number of the image that has been exposed.

- Stream Channel Index: If available, the number of the image data stream used to transfer the image. On Basler cameras, this parameter is always set to 0.

The names of the parameters containing the information vary by camera model.

Event Overrun Event

If available, the Event Overrun event occurs if the camera's internal event queue has overrun. This happens if events are generated at a very high frequency and there isn't enough bandwidth available to send the events.

The Event Overrun event is a warning that events are being dropped. The notification contains no specific information about how many or which events have been dropped.

When this event occurs, the corresponding message contains the following information:

- Timestamp: Time when the event was generated.

- Stream Channel Index: If available, the number of the image data stream used to transfer the image. On Basler cameras, this parameter is always set to 0.

The names of the parameters containing the information vary by camera model.

Critical Temperature Event

If available, the Critical Temperature event occurs if the camera’s temperature state has reached a critical level.

When this event occurs, the corresponding message contains the following information:

- Timestamp: Time when the event was generated. The name of the timestamp parameter depends on your camera model.

Over Temperature Event

If available, the Over Temperature event occurs if the camera’s temperature state has reached the over temperature level.

When this event occurs, the corresponding message contains the following information:

- Timestamp: Time when the event was generated. The name of the timestamp parameter depends on your camera model.

Action Late Event

If available, the Action Late event occurs if the camera receives a scheduled action command with a timestamp in the past.

When this event occurs, the corresponding message contains the following information:

- Timestamp: Time when the event was generated.

- Stream Channel Index: If available, the number of the image data stream used to transfer the image. On Basler cameras, this parameter is always set to 0.

Specifics

| Camera Model | Events Available | Event Parameters Available |
|---|---|---|
| acA2500-20gm | | |

// Enable the Exposure End event notification

camera.Parameters[PLCamera.EventSelector].SetValue(PLCamera.EventSelector.ExposureEnd);

camera.Parameters[PLCamera.EventNotification].SetValue(PLCamera.EventNotification.On);

// Enable the Critical Temperature event notification

camera.Parameters[PLCamera.EventSelector].SetValue(PLCamera.EventSelector.CriticalTemperature);

camera.Parameters[PLCamera.EventNotification].SetValue(PLCamera.EventNotification.On);

// Now, you must implement event handling in your application.

// For a C++ sample implementation, see the "Grab_CameraEvents" and "Grab_CameraEvents_Usb"

// code samples in the C++ Programmer's Guide and Reference Documentation delivered

// with the Basler pylon Camera Software Suite.

// For a C and C .NET sample implementation, see the "Events Sample" code sample in

// the C Programmer's Guide and Reference Documentation and the pylon C .NET Programmer's

// Guide and Reference Documentation delivered with the Basler pylon Camera Software Suite.

Exposure Auto

Prerequisites

- If the camera is configured for hardware triggering, the ExposureMode parameter must be set to Timed.

- At least one Auto Function ROI must be assigned to the Exposure Auto auto function.

- The Auto Function ROI assigned must overlap the Image ROI, either partially or completely.

Enabling or Disabling Exposure Auto

To enable or disable the Exposure Auto auto function, set the ExposureAuto parameter to one of the following operating modes:

- Once: The camera adjusts the exposure time until the specified target brightness value has been reached. When this has been achieved, or after a maximum of 30 calculation cycles, the camera sets the auto function to Off. To all following images, the camera applies the exposure time resulting from the last calculation.

- Continuous: The camera adjusts the exposure time continuously while images are acquired. The adjustment process continues until the operating mode is set to Once or Off.

- Off: Disables the Exposure Auto auto function. The exposure time is set to the value resulting from the last automatic or manual adjustment.

When the camera is capturing images continuously, the auto function takes effect with a short delay. The first few images may not be affected by the auto function.

Specifying Lower and Upper Limits

The auto function adjusts the ExposureTimeAbs parameter value within limits specified by you.

To change the limits, set the AutoExposureTimeAbsLowerLimit and the AutoExposureTimeAbsUpperLimit parameters to the desired values (in µs).

Example: Assume you have set the AutoExposureTimeAbsLowerLimit parameter to 1000 and the AutoExposureTimeAbsUpperLimit parameter to 5000. During the automatic adjustment process, the exposure time will never be lower than 1000 µs and never higher than 5000 µs.

If the AutoExposureTimeAbsUpperLimit parameter is set to a high value, the camera’s frame rate may decrease.
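The effect of the two limits, and why a high upper limit can reduce the frame rate, can be sketched with a little arithmetic. The class and method names below are hypothetical, and the code does not model the camera's actual adjustment algorithm. It only shows that an exposure time is kept within the limits and that a frame period cannot be shorter than the exposure time, which caps the frame rate at 1000000 / exposure time in µs.

```csharp
using System;

public static class AutoExposureLimits
{
    // Keeps a proposed exposure time (in µs) within the configured limits.
    public static double Clamp(double proposedUs, double lowerLimitUs, double upperLimitUs)
    {
        return Math.Max(lowerLimitUs, Math.Min(upperLimitUs, proposedUs));
    }

    // Upper bound on the frame rate (in fps) that results from the
    // exposure time alone. Other limiting factors are ignored.
    public static double MaxFrameRateFromExposure(double exposureUs)
    {
        if (exposureUs <= 0)
        {
            throw new ArgumentOutOfRangeException(nameof(exposureUs));
        }
        return 1000000.0 / exposureUs;
    }
}
```

With the limits from the example (1000 µs and 5000 µs), a proposed value of 8000 µs is limited to 5000 µs, which allows at most 200 fps. If the upper limit were 100000 µs instead, the exposure time alone could restrict the camera to 10 fps.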

Specifying the Target Brightness Value

The auto function adjusts the exposure time until a target brightness value, i.e., an average gray value, has been reached.

To specify the target value, use the AutoTargetValue parameter. The parameter's value range depends on the camera model and the pixel format used.

- The target value calculation does not include other image optimizations, e.g., Gamma. Depending on the image optimizations set, images output by the camera may have a significantly lower or higher average gray value than indicated by the target value.

- The camera also uses the AutoTargetValue parameter to control the Gain Auto auto function. If you want to use Exposure Auto and Gain Auto at the same time, use the Auto Function Profile feature to specify how the effects of both are balanced.

On Basler ace GigE camera models, you can also specify a Gray Value Adjustment Damping factor. On Basler dart and pulse camera models, you can specify a Brightness Adjustment Damping factor.

When a damping factor is used, the target value is reached more slowly.

Specifics

On some camera models, you can use the Remove Parameter Limits feature to increase the target value parameter limits.

| Camera Model | Minimum Target Value | Maximum Target Value |
|---|---|---|
| All ace U GigE camera models | 50 / 800a | 205 / 3280a |

// Set the Exposure Auto auto function to its minimum lower limit

// and its maximum upper limit

double minLowerLimit = camera.Parameters[PLCamera.AutoExposureTimeAbsLowerLimit].GetMinimum();

double maxUpperLimit = camera.Parameters[PLCamera.AutoExposureTimeAbsUpperLimit].GetMaximum();

camera.Parameters[PLCamera.AutoExposureTimeAbsLowerLimit].SetValue(minLowerLimit);

camera.Parameters[PLCamera.AutoExposureTimeAbsUpperLimit].SetValue(maxUpperLimit);

// Set the target brightness value to 128

camera.Parameters[PLCamera.AutoTargetValue].SetValue(128);

// Select Auto Function ROI 1

camera.Parameters[PLCamera.AutoFunctionAOISelector].SetValue(PLCamera.AutoFunctionAOISelector.AOI1);

// Enable the 'Intensity' auto function (Gain Auto + Exposure Auto)

// for the Auto Function ROI selected

camera.Parameters[PLCamera.AutoFunctionAOIUsageIntensity].SetValue(true);

// Enable Exposure Auto by setting the operating mode to Continuous

camera.Parameters[PLCamera.ExposureAuto].SetValue(PLCamera.ExposureAuto.Continuous);

Exposure Time

The Exposure Time camera feature specifies how long the image sensor is exposed to light during image acquisition.

To automatically set the exposure time, use the Exposure Auto feature.

Prerequisites

- If the camera is configured for hardware triggering, the ExposureMode parameter must be set to Timed. Otherwise, the ExposureTimeAbs parameter is not available.

- The Exposure Auto auto function must be set to Off. Otherwise, setting the exposure time has no effect.

Setting the Exposure Time

To set the exposure time in microseconds, use the ExposureTimeAbs parameter.

The minimum exposure time, the maximum exposure time, and the increments in which the parameter can be changed vary by camera model.
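Before writing a value to ExposureTimeAbs, an application may want to bring the requested time into the valid range and onto a valid increment. The sketch below shows one way to do that in plain C#. The class and method names are hypothetical; the minimum, maximum, and increment must be taken from the camera or from the Specifics table of this section (137 µs, 1000000 µs, and 1 µs for the acA2500-20gm).

```csharp
using System;

public static class ExposureTimeRange
{
    // Limits the requested exposure time (in µs) to [minUs, maxUs] and
    // rounds it down to the nearest valid increment above the minimum.
    public static double Snap(double requestedUs, double minUs, double maxUs, double incrementUs)
    {
        if (incrementUs <= 0)
        {
            throw new ArgumentOutOfRangeException(nameof(incrementUs));
        }
        double limited = Math.Max(minUs, Math.Min(maxUs, requestedUs));
        double steps = Math.Floor((limited - minUs) / incrementUs);
        return minUs + steps * incrementUs;
    }
}
```

For the acA2500-20gm values, a request of 50 µs becomes 137 µs, a request of 3500.7 µs becomes 3500 µs, and a request of 2000000 µs becomes 1000000 µs. Rounding down rather than to the nearest increment is a design choice of this sketch.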

Determining the Exposure Time

To determine the current exposure time in microseconds, get the value of the ExposureTimeAbs parameter.

This can be useful, for example, if the Exposure Auto auto function is enabled and you want to retrieve the automatically adjusted exposure time.

Exposure Time Mode

Depending on your camera model, the ExposureTimeMode parameter is available. It allows you to choose between the Standard and the Ultra Short exposure time mode. Using the Ultra Short exposure time mode lowers the value range of the ExposureTimeAbs parameter. It allows you to set very short exposure times.

- The ExposureTimeMode parameter can only be used if the prerequisites listed above are met.

- Depending on the exposure time mode, the exposure start delay changes.

- If the Ultra Short exposure time mode is enabled, the Sequencer feature is not available.

You can set the ExposureTimeMode parameter to one of the following values:

- Standard: Enables the Standard exposure time mode. This is the default setting. When you enable this mode, the exposure time is set to the minimum value available in this exposure time mode.

- UltraShort: Allows you to set an ultra short exposure time within the value range available. When you enable this mode, the exposure time is set to the maximum value available in this exposure time mode.

Specifics

On some camera models, you can use the Remove Parameter Limits feature to increase the exposure time parameter limits.

| Camera Model | Minimum Exposure Time [µs] | Maximum Exposure Time [µs] | Increment [µs] | ExposureTimeMode Parameter Available |
|---|---|---|---|---|
| acA2500-20gm | 137 | 1000000 | 1 | No |

// Determine the current exposure time

double d = camera.Parameters[PLCamera.ExposureTimeAbs].GetValue();

// Set the exposure time mode to Standard

// Note: Available on selected camera models only

camera.Parameters[PLCamera.ExposureTimeMode].SetValue(PLCamera.ExposureTimeMode.Standard);

// Set the exposure time to 3500 microseconds

camera.Parameters[PLCamera.ExposureTimeAbs].SetValue(3500.0);

Exposure Mode

The Exposure Mode camera feature allows you to choose a method for determining the length of exposure when the camera is configured for hardware triggering.

The resulting camera behavior also depends on the Trigger Activation setting.

To set the exposure mode:

- Set the TriggerSelector parameter to FrameStart.

- Set the TriggerMode parameter to On.

- Set the TriggerSource parameter to one of the available hardware trigger sources, e.g., Line1.

- Set the ExposureMode parameter to one of the following values:

- Timed

- TriggerWidth (if available)

Available Exposure Modes

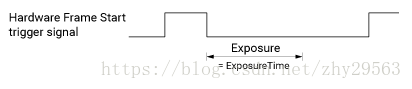

Timed Exposure Mode

Timed exposure mode is available on all camera models.

In this mode, the length of exposure is determined by the value of the camera’s Exposure Time setting.

If the camera is configured for software triggering, exposure starts when the software trigger signal is received and continues until the exposure time has expired.

If the camera is configured for hardware triggering, the following applies:

- If rising edge triggering is enabled, exposure starts when the trigger signal rises and continues until the exposure time has expired.

- If falling edge triggering is enabled, exposure starts when the trigger signal falls and continues until the exposure time has expired.

Avoiding Overtriggering in Timed Exposure Mode

If the Timed exposure mode is enabled, do not attempt to trigger a new exposure start while the previous exposure is still in progress. Otherwise, the trigger signal will be ignored, and a Frame Start Overtrigger event will be generated.

This scenario is illustrated below for rising edge triggering.

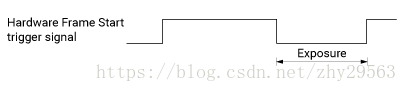
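The rule above can be checked offline for a planned trigger sequence. The following sketch uses a deliberately simplified model in which a trigger is ignored only if it arrives while the previous exposure is still in progress; sensor readout and other factors that may also limit when the camera accepts a trigger are not considered. The class and method names are hypothetical.

```csharp
using System;
using System.Collections.Generic;

public static class TimedOvertriggerCheck
{
    // Returns the indices of triggers that arrive during an ongoing exposure.
    // triggerTimesUs must be sorted in ascending order (times in µs).
    public static List<int> FindIgnoredTriggers(IReadOnlyList<double> triggerTimesUs, double exposureTimeUs)
    {
        var ignored = new List<int>();
        double exposureEndUs = double.NegativeInfinity;
        for (int i = 0; i < triggerTimesUs.Count; i++)
        {
            if (triggerTimesUs[i] < exposureEndUs)
            {
                // Previous exposure still running: this trigger is ignored.
                ignored.Add(i);
            }
            else
            {
                // Trigger accepted: a new exposure starts now.
                exposureEndUs = triggerTimesUs[i] + exposureTimeUs;
            }
        }
        return ignored;
    }
}
```

With an exposure time of 3500 µs and triggers at 0, 2000, 4000, 5000, and 9000 µs, the triggers at 2000 µs and 5000 µs (indices 1 and 3) fall into a running exposure and would be ignored in this model.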

Trigger Width Exposure Mode

Trigger Width exposure mode is available on some camera models.

In this mode, the length of exposure is determined by the width of the hardware trigger signal. This is useful if you intend to vary the length of exposure for each captured frame.

If the camera is configured for rising edge triggering, exposure starts when the trigger signal rises and continues until the trigger signal falls.

If the camera is configured for falling edge triggering, exposure starts when the trigger signal falls and continues until the trigger signal rises.

Exposure Time Offset

On some camera models, when using the Trigger Width exposure mode, the exposure is slightly longer than the width of the trigger signal. This is because an exposure time offset is added automatically to the time determined by the width of the trigger signal.

To achieve the desired exposure time in Trigger Width exposure mode, you must compensate for the exposure time offset. To do so:

- Subtract the exposure time offset from the desired exposure time.

- Use the resulting time as the high or low time for the trigger signal.

Example: To achieve an exposure time of 3000 µs when the exposure time offset is 64 µs, use 3000 - 64 = 2936 µs as the high or low time for the trigger signal.

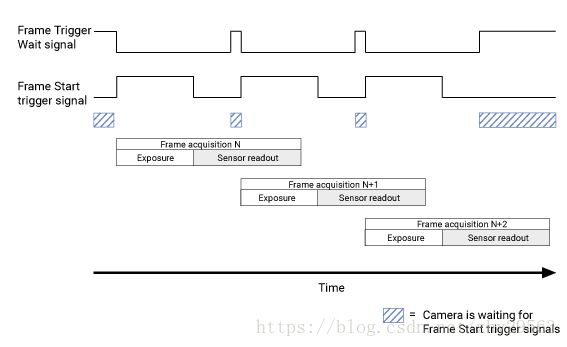
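
The compensation described above is plain subtraction, so it can be checked before a trigger generator is programmed. The following is a minimal sketch, not part of the pylon API: the class and method names are hypothetical, and the offset must be taken from the camera's documentation (for the acA2500-20gm, the table below lists it as not specified).

```csharp
using System;

// Hypothetical helper, not part of the pylon API.
static class TriggerWidthTiming
{
    // Returns the trigger pulse width (in µs) to apply so that the
    // effective exposure equals desiredExposureUs, given that the camera
    // adds offsetUs to the width of the trigger signal.
    public static double PulseWidthUs(double desiredExposureUs, double offsetUs)
    {
        double width = desiredExposureUs - offsetUs;
        if (width <= 0)
        {
            throw new ArgumentOutOfRangeException(nameof(desiredExposureUs),
                "Desired exposure must be longer than the exposure time offset.");
        }
        return width;
    }

    static void Main()
    {
        // Values from the example above: 3000 µs desired, 64 µs offset.
        Console.WriteLine(PulseWidthUs(3000.0, 64.0)); // 2936
    }
}
```

Use the returned value as the high time (rising edge triggering) or low time (falling edge triggering) of the trigger signal.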

Avoiding Overtriggering in Trigger Width Exposure Mode

If the Trigger Width exposure mode is enabled, do not send trigger signals at too high a rate. Otherwise, trigger signals will be ignored, and Frame Start Overtrigger events will be generated.

You can avoid overtriggering in Trigger Width exposure mode by doing the following:

- Monitor the camera’s Frame Trigger Wait signal and only apply a Frame Start trigger signal when the Frame Trigger Wait signal is high.

- If the Exposure Overlap Time Max parameter is available, set it to the smallest exposure time you intend to use.

Specifics

| Camera Model | Available Exposure Modes | Exposure Time Offset [µs] |

|---|---|---|

| acA2500-20gm | Timed, Trigger Width | Not specified |

// Select and enable the Frame Start trigger

camera.Parameters[PLCamera.TriggerSelector].SetValue(PLCamera.TriggerSelector.FrameStart);

camera.Parameters[PLCamera.TriggerMode].SetValue(PLCamera.TriggerMode.On);

// Set the trigger source to Line 1

camera.Parameters[PLCamera.TriggerSource].SetValue(PLCamera.TriggerSource.Line1);

// Enable Timed exposure mode

camera.Parameters[PLCamera.ExposureMode].SetValue(PLCamera.ExposureMode.Timed);

Exposure Overlap Time Max

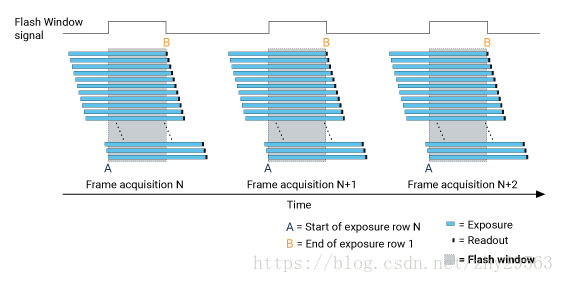

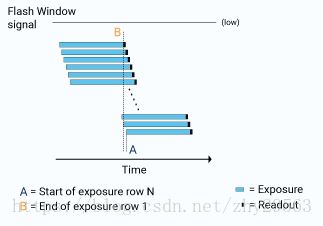

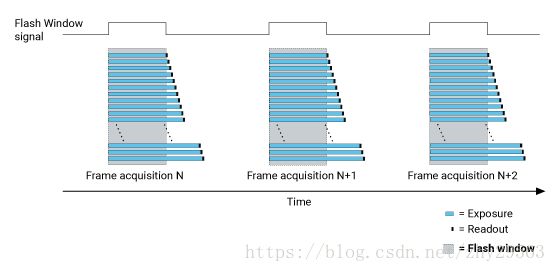

The Exposure Overlap Time Max camera feature allows you to optimize overlapping image acquisition.

Using this parameter is especially useful if you want to maximize the camera's frame rate, i.e., if you want to trigger at the highest rate possible.

The parameter is only available if you operate the camera in Trigger Width exposure mode.

Prerequisites

- The trigger mode of the camera's Frame Start trigger must be set to On.

- The ExposureMode parameter must be set to TriggerWidth.

- If the ExposureOverlapTimeMode parameter is available, the parameter must be set to Manual.

How It Works

You can use overlapping image acquisition to increase the camera's frame rate. With overlapping image acquisition, the exposure of a new image begins while the camera is still reading out the sensor data of the previous image.

In Trigger Width exposure mode, the camera doesn't "know" how long the image will be exposed before the trigger period is complete. Because of that, the camera can't fully optimize overlapping image acquisition.

To avoid this problem, enter a value for the ExposureOverlapTimeMaxAbs parameter that represents the shortest exposure time you intend to use (in µs). This helps the camera to optimize overlapping image acquisition.

If you have entered a value for the ExposureOverlapTimeMaxAbs parameter, make sure to never apply a trigger signal that is shorter than the given parameter value.

Setting the Exposure Overlap Time Max

To optimize the camera's frame rate in Trigger Width exposure mode, enter a value for the ExposureOverlapTimeMaxAbs parameter that represents the shortest exposure time you intend to use (in µs).

Example: Assume that you want to use the Trigger Width exposure mode to apply exposure times in a range from 3000 μs to 5500 μs. In this case, set the camera’s ExposureOverlapTimeMaxAbs parameter to 3000.
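
As a rough illustration of the rule above, the value to enter is simply the minimum of the exposure times you plan to use, and every trigger pulse must be at least that long. The sketch below uses hypothetical helper names and made-up exposure values within the 3000 µs to 5500 µs range of the example. It does not talk to the camera.

```csharp
using System;
using System.Linq;

// Hypothetical planning helper, not part of the pylon API.
static class OverlapPlanning
{
    // Shortest planned exposure time (µs). The text above recommends
    // entering this value for ExposureOverlapTimeMaxAbs.
    public static double OverlapTimeMaxUs(double[] plannedExposuresUs)
    {
        if (plannedExposuresUs == null || plannedExposuresUs.Length == 0)
        {
            throw new ArgumentException("At least one exposure time is required.");
        }
        return plannedExposuresUs.Min();
    }

    // True if a trigger pulse is not shorter than the configured value.
    public static bool IsTriggerWidthAllowed(double triggerWidthUs, double overlapTimeMaxUs)
    {
        return triggerWidthUs >= overlapTimeMaxUs;
    }

    static void Main()
    {
        double[] planned = { 3000.0, 4200.0, 5500.0 };
        double overlapMax = OverlapTimeMaxUs(planned);
        Console.WriteLine(overlapMax);                                // 3000
        Console.WriteLine(IsTriggerWidthAllowed(2500.0, overlapMax)); // False
    }
}
```

A check like `IsTriggerWidthAllowed` can guard the code that drives the trigger source, so that a pulse shorter than the configured value is never sent.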

Additional Parameters

On some camera models, the ExposureOverlapTimeMode parameter is available.

If the parameter is available, you can set it to one of the following values:

- Automatic: The value of the ExposureOverlapTimeMaxAbs parameter is set to the maximum possible value and can't be modified. This is the default setting.

- Manual: You can configure the ExposureOverlapTimeMaxAbs parameter as desired.

If the parameter is not available, the camera always operates in the "Manual" mode.

Specifics

| Camera Model | ExposureOverlapTimeMode Parameter Available |

|---|---|

| acA2500-20gm | No |

// Set the maximum overlap time between sensor

// exposure and sensor readout to 10000 microseconds

camera.Parameters[PLCamera.ExposureOverlapTimeMaxAbs].SetValue(10000.0);

Gamma

Prerequisites

- For best results, set the black level to 0 (zero) before you adjust gamma.

- If the GammaEnable parameter is available, it must be set to true.

How It Works

The camera applies a gamma correction value (γ) to the brightness value of each pixel according to the following formula (red pixel value (R) of a color camera shown as an example):

$$R_{\text{corrected}} = \left( \frac{R_{\text{uncorrected}}}{R_{\max}} \right)^{\gamma} \times R_{\max}$$

The maximum pixel value (Rmax) equals 255 for 8-bit pixel formats or 1023 for 10-bit pixel formats.

Enabling Gamma Correction

To enable gamma correction, use the Gamma parameter. The parameter's value range is 0 to ≈4.

- Gamma = 1: The overall brightness remains unchanged.

- Gamma < 1: The overall brightness increases.

- Gamma > 1: The overall brightness decreases.

In all cases, black pixels (brightness = 0) and white pixels (brightness = maximum) will not be adjusted.
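
The effect of the three cases can be reproduced offline. The sketch below assumes the usual power-law form of gamma correction, corrected = (value / max)^γ × max. It models the behavior described here and is not the camera's internal implementation. The class name is hypothetical.

```csharp
using System;

// Hypothetical model of gamma correction, assuming the power-law form
// corrected = (value / max)^gamma * max.
static class GammaSketch
{
    public static double Apply(double value, double maxValue, double gamma)
    {
        return Math.Pow(value / maxValue, gamma) * maxValue;
    }

    static void Main()
    {
        const double max = 255.0; // 8-bit pixel format

        foreach (double gamma in new[] { 0.5, 1.0, 2.0 })
        {
            // A mid-gray value of 64 gets brighter for gamma < 1 and darker
            // for gamma > 1. Black (0) and white (255) stay where they are.
            Console.WriteLine(
                $"gamma={gamma}: 0 -> {Apply(0, max, gamma)}, " +
                $"64 -> {Apply(64, max, gamma):F1}, " +
                $"255 -> {Apply(255, max, gamma)}");
        }
    }
}
```

Under this model, the end points 0 and 255 map to themselves for any positive gamma, which matches the statement that black and white pixels are not adjusted.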

If you enable gamma correction and the pixel format is set to a 12-bit pixel format, some image information will be lost. Pixel data output will still be 12-bit, but the pixel values will be interpolated during the gamma correction process. Basler does not recommend using the Gamma feature with 12-bit pixel formats.

Additional Parameters

Depending on your camera model, the following additional parameters are available:

- GammaEnable: Enables or disables gamma correction.

- GammaSelector: Allows you to select one of the following gamma correction modes:

  - User: The gamma correction value can be set as desired. (Default.)

  - sRGB: The camera automatically sets a gamma correction value of approximately 0.4. This value is optimized for image display on sRGB monitors.

- BslColorSpaceMode: Allows you to select one of the following gamma correction modes:

  - RGB: No additional gamma correction value is applied.

  - sRGB: The image brightness is optimized for display on an sRGB monitor. An additional gamma correction value of approximately 0.4 is applied. (Default.) The sRGB gamma correction value is applied separately and will not be included in the Gamma parameter value. Example: You have set the color space mode to sRGB and the Gamma parameter value to 1.2. First, an automatic correction value of approximately 0.4 is applied to the pixel values. After that, a gamma correction value of 1.2 is applied to the resulting pixel values.

| Camera Model | Additional Parameters |

|---|---|

| All ace GigE camera models | |

// Enable the Gamma feature

camera.Parameters[PLCamera.GammaEnable].SetValue(true);

// Set the gamma type to User

camera.Parameters[PLCamera.GammaSelector].SetValue(PLCamera.GammaSelector.User);

// Set the Gamma value to 1.2

camera.Parameters[PLCamera.Gamma].SetValue(1.2);

Gain

The Gain camera feature allows you to increase the brightness of the images output by the camera. Increasing the gain increases all pixel values of the image.

To adjust the gain value automatically, use the Gain Auto feature.

Prerequisites

- The Gain Auto auto function must be set to Off. Otherwise, setting the gain has no effect.

Configuring Gain Settings

- If the camera's gain control is user-settable, set the GainSelector to one of the following values:

  - AnalogAll: Selects the analog gain control.

  - DigitalAll: Selects the digital gain control.

- Set the GainRaw parameter to the required value.

The minimum and maximum parameter values vary depending on the camera model, the pixel format chosen, and the Binning settings.

"Raw" and Absolute Gain Values

On some camera models, the gain must be entered as a "raw" value on an integer scale. The camera needs the raw value for its internal processing mechanism. The raw value, however, isn't the same as the actual gain value, which is expressed in decibels (dB).

In the camera-specific Gain Properties table, you can find a formula to calculate the absolute gain (in dB) from the raw gain value.

Analog and Digital Gain

Analog gain is applied before the signal from the camera sensor is converted into digital values. Digital gain is applied after the conversion, i.e., it is basically a multiplication of the digitized values.

Depending on your camera model, the mechanisms to control analog and digital gain can vary:

- For some cameras, gain control is analog up to and including a certain threshold. Above the threshold, gain control is digital.

- For some cameras, gain control is entirely digital.

- For some cameras, you can use the GainSelector parameter to manually switch between analog and digital gain.

Specifics

Gain Properties

| Camera Model | User-Settable Gain Control? | Gain Control Mechanism | Threshold | Gain Must be Entered as ... | Formula to Calculate Gain from Raw Gain Values |

|---|---|---|---|---|---|

| acA2500-20gm | No | Digital gain only | - | Raw | Gain = 20 × log10(GainRaw / 136) |
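
The formula in the table can be evaluated directly. The following sketch converts raw values to decibels for the acA2500-20gm. The inverse conversion is derived from the same formula by rearranging it and rounding to the nearest integer; it is an illustration rather than something the table specifies. The class and method names are hypothetical.

```csharp
using System;

// Hypothetical helper based on the formula Gain = 20 * log10(GainRaw / 136).
static class GainConversion
{
    // Raw value that corresponds to 0 dB for this camera model.
    const double RawReference = 136.0;

    // Absolute gain in dB for a given raw gain value.
    public static double RawToDb(int gainRaw)
    {
        return 20.0 * Math.Log10(gainRaw / RawReference);
    }

    // Nearest raw value for a desired gain in dB (rearranged formula).
    public static int DbToRaw(double gainDb)
    {
        return (int)Math.Round(RawReference * Math.Pow(10.0, gainDb / 20.0));
    }

    static void Main()
    {
        // Minimum setting, the value used in the code sample, maximum setting.
        foreach (int raw in new[] { 136, 400, 542 })
        {
            Console.WriteLine($"GainRaw {raw} -> {RawToDb(raw):F2} dB");
        }
    }
}
```

With these inputs, the raw values 136, 400, and 542 correspond to approximately 0 dB, 9.37 dB, and 12.01 dB. A value returned by `DbToRaw` still has to lie within the minimum and maximum gain settings of the camera.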

Gain Values

On some camera models, you can use the Remove Parameter Limits feature to increase the gain parameter limits.

| Camera Model | Minimum Gain Setting | Minimum Gain Setting with Vertical Binning Enabled | Maximum Gain Setting (8-bit Pixel Formats) | Maximum Gain Setting (10-bit Pixel Formats) | Maximum Gain Setting (12-bit Pixel Formats) |

|---|---|---|---|---|---|

| acA2500-20gm | 136 | 136 | 542 | 542 | - |

// Set the "raw" gain value to 400

// If you want to know the resulting gain in dB, use the formula given in this topic

camera.Parameters[PLCamera.GainRaw].SetValue(400);

Gain Auto

The Gain Auto camera feature automatically adjusts the gain within specified limits until a target brightness value has been reached.

The pixel data for the auto function can come from one or multiple Auto Function ROIs.

If you want to use Gain Auto and Exposure Auto at the same time, use the Auto Function Profile feature to specify how the effects of both are balanced.

To adjust the gain manually, use the Gain feature.

Prerequisites

- At least one Auto Function ROI must be assigned to the Gain Auto auto function.

- The Auto Function ROI assigned must overlap the Image ROI, either partially or completely.

Enabling or Disabling Gain Auto

To enable or disable the Gain Auto auto function, set the GainAuto parameter to one of the following operating modes:

- Once: The camera adjusts the gain until the specified target brightness value has been reached. When this has been achieved, or after a maximum of 30 calculation cycles, the camera sets the auto function to Off and applies the gain resulting from the last calculation to all following images.

- Continuous: The camera adjusts the gain continuously while images are acquired. The adjustment process continues until the operating mode is set to Once or Off.

- Off: Disables the Gain Auto auto function. The gain is set to the value resulting from the last automatic or manual adjustment.

When the camera is capturing images continuously, the auto function takes effect with a short delay. The first few images may not be affected by the auto function.

Specifying Lower and Upper Limits

The auto function adjusts the GainRaw parameter value within limits specified by you.

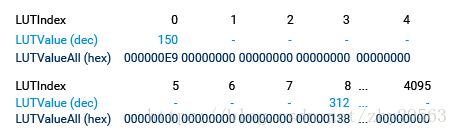

To change the limits, set the AutoGainRawLowerLimit and the AutoGainRawUpperLimit parameters to the desired values.