K8s Beginner Tutorial 1: Installing a k8s Cluster

目录

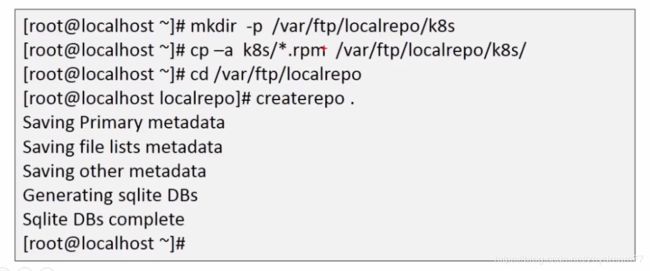

步骤一:将下载的k8s相关的包做成自定义yum源,之后集群好通过网络yum源安装

步骤二:安装etcd并创建/atomic.io/network/config网络配置(注意在master上配置)

步骤三:配置容器网络

步骤四:flannel安装(注意:flannel服务必须在docker服务之前启动)--实现不同机器间容器互通

步骤五:安装master组件

步骤六:安装node节点(此出只写了一个节点的配置,其他的节点是一模一样的配置)

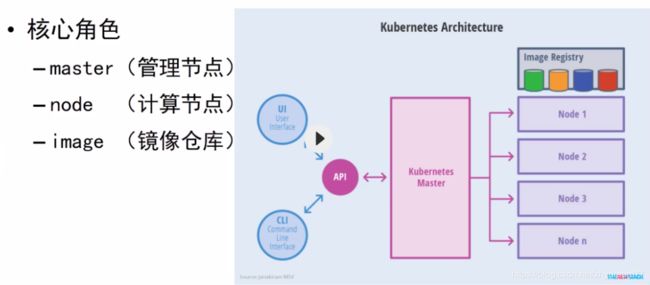

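k8s (Kubernetes) is an open-source platform for managing container clusters. It automates cluster deployment, scaling up and down, and maintenance.

Typical use cases for k8s: managing large numbers of containers across hosts, deploying applications quickly, scaling applications quickly, rolling out new application features seamlessly, and saving resources by making better use of hardware.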

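Master node services

API Server: the external interface of the whole system, called by clients and by the other components

Scheduler: schedules resources inside the cluster, acting as the "dispatch room"

Controller manager: manages the controllers, acting as the "chief steward"

etcd: a key-value store that holds the cluster state. It aims to be a highly available distributed key-value database and is written in Go. In this setup etcd also stores the network configuration, which is what lets containers on different hosts reach each other.

Node services

docker: container management

kubelet: watches the pods assigned to its node and handles creating, modifying, and deleting them

kube-proxy: proxies traffic for pods, implementing Service communication and load balancing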

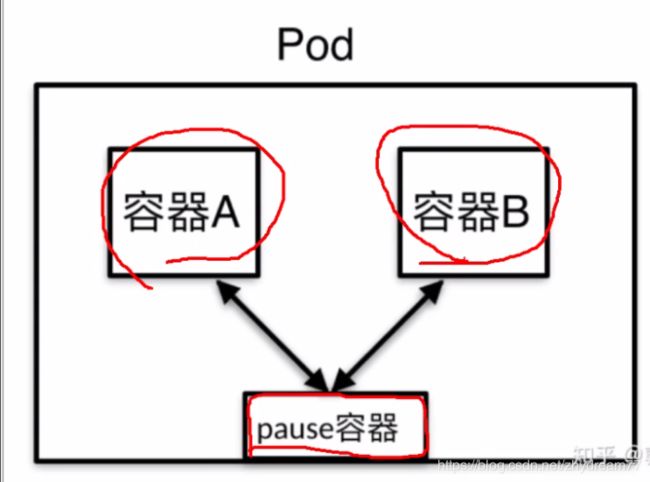

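What is a pod

A pod is the basic unit that Kubernetes schedules

A pod contains one or more containers

These containers share the same network namespace and port space

A pod is the aggregation unit for the multiple processes of one service

A pod is an independent deployment unit that supports horizontal scaling and replication

What a pod provides

A pod is a group made up of several containers

Containers in the same group can share a storage volume

Each pod is assigned to a node and runs there until it finishes or is deleted

Containers in the same pod share the network namespace, IP address, and port range, and can discover and talk to each other through localhost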

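What is a Service?

A Service is a policy for accessing the application that a set of pods provides, often described as a microservice

Pods are replaced frequently, so their addresses keep changing, which makes them inconvenient to access directly. To solve this, a Service provides access through a VIP with load balancing

In a Kubernetes cluster every node runs a kube-proxy process, and kube-proxy implements the Service as a VIP (virtual IP)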
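The idea of one stable virtual IP in front of a changing set of pods can be sketched in a few lines of Python. This is only a toy model: the class name `ServiceProxy`, the round-robin policy, and all IP addresses are assumptions made for illustration. The real kube-proxy does not run code like this; it programs forwarding rules on each node.

```python
class ServiceProxy:
    """Toy model of a Service: one stable VIP in front of changing pod IPs."""

    def __init__(self, vip, endpoints):
        self.vip = vip                    # the address clients keep using
        self._endpoints = list(endpoints)
        self._next = 0

    def update_endpoints(self, endpoints):
        # Pods come and go; only the backend list changes, never the VIP.
        self._endpoints = list(endpoints)
        self._next = 0

    def pick_backend(self):
        # Simplified round-robin choice among the current backends.
        if not self._endpoints:
            raise RuntimeError("no ready endpoints behind " + self.vip)
        backend = self._endpoints[self._next % len(self._endpoints)]
        self._next += 1
        return backend


svc = ServiceProxy("10.254.0.10", ["10.254.62.2", "10.254.71.3"])
print([svc.pick_backend() for _ in range(3)])
```

After `update_endpoints` is called with a new pod list, callers still address the same VIP and simply land on the new backends.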

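Labels and selectors

To associate a service with its pods, Kubernetes first attaches a label to each pod and then defines a label selector on the corresponding service, for example:

... ...
metadata:
  labels:        # declare the labels
    app: nginx   # label key and value
... ...
selector:        # declare the label selector
  app: nginx     # select the backend pods for the service
... ...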
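How an equality-based selector picks pods can be illustrated with a short Python sketch. The pod names and the `tier` label are made up for the example, and `matches` is a hypothetical helper, not a Kubernetes API; it only mirrors the rule that every key/value pair in the selector must appear in the pod's labels.

```python
def matches(selector, labels):
    """Return True when every key/value pair in the selector is in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Hypothetical pods with their labels.
pods = [
    {"name": "web-1", "labels": {"app": "nginx"}},
    {"name": "web-2", "labels": {"app": "nginx", "tier": "frontend"}},
    {"name": "db-1", "labels": {"app": "mysql"}},
]

selector = {"app": "nginx"}
backends = [pod["name"] for pod in pods if matches(selector, pod["labels"])]
print(backends)  # ['web-1', 'web-2']
```

Extra labels on a pod do not prevent a match, which is why `web-2` is selected as well.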

RC/RS/Deployment

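Resource object files

Kubernetes manages pods through a ReplicationController (RC). An RC defines how to start a pod, how to run it, and how many replicas to keep. When we write this information into a file using YAML syntax, that file is a resource object file

ReplicaSet (RS) is the upgraded version of RC

Deployment provides declarative updates for pods and ReplicaSets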
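The "how many replicas to keep" part comes down to comparing the desired count with what is actually running. The sketch below is a simplified illustration of that comparison, not the controller's real code; `reconcile` and the pod names are hypothetical, and the choice of which surplus pods to delete is arbitrary here.

```python
def reconcile(desired_replicas, running_pods):
    """Return (number_to_create, pods_to_delete) to reach the desired count."""
    missing = desired_replicas - len(running_pods)
    if missing > 0:
        # Too few pods: start the missing ones.
        return missing, []
    # Enough or too many: delete the surplus (here simply the tail of the list).
    return 0, running_pods[desired_replicas:]


print(reconcile(3, ["pod-a"]))                    # (2, [])
print(reconcile(1, ["pod-a", "pod-b", "pod-c"]))  # (0, ['pod-b', 'pod-c'])
```

Running this comparison repeatedly is what keeps the replica count steady when a pod dies or a node disappears.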

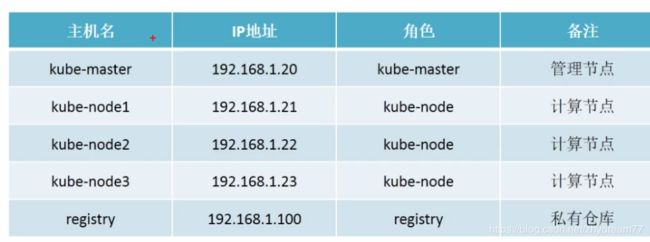

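Setting up k8s

Step 1: Build a custom yum repository from the downloaded k8s packages so the cluster hosts can install them over the network

Step 2: Install etcd and create the /atomic.io/network/config network configuration (on the master)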

[root@kubemaseter ~]# yum install etcd

[root@kubemaseter ~]# vim /etc/etcd/etcd.conf

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"   # change this line

[root@kubemaseter ~]# systemctl enable etcd

[root@kubemaseter ~]# systemctl start etcd

[root@kubemaseter ~]# etcdctl ls /

/atomic.io

[root@kubemaseter ~]# etcdctl mk /atomic.io/network/config '{"Network":"10.254.0.0/16","Backend":{"Type":"vxlan"}}'

[root@kubemaseter ~]# etcdctl ls /atomic.io/network/config

/atomic.io/network/config

[root@kubemaseter ~]# etcdctl get /atomic.io/network/config

{"Network":"10.254.0.0/16","Backend":{"Type":"vxlan"}}

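etcd key management: etcd organizes keys in a hierarchical space, similar to directories in a file system. A key can be a bare name, in which case it is stored directly under the root as /key, or it can include a directory path such as /dir1/dir2/key, in which case the intermediate directories are created as needed. In this setup etcd mainly stores the container network configuration.

etcdctl management commands (v2 API)

Syntax: etcdctl subcommand [options] key [value]

set: set a key-value pair

get: read a key-value pair

update: change an existing key-value pair

mk: create a new key-value pair; rm: delete a key-value pair

mkdir: create a directory; rmdir: delete a directory

ls: list directories and key-value pairs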
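To make the directory-like key space more concrete, here is a small in-memory model in Python. It is a sketch only: `KeyTree` is a hypothetical class, not the etcd client API, and it imitates just a few behaviours of the subcommands above (mk refuses to overwrite, set overwrites, ls shows the immediate children of a directory).

```python
class KeyTree:
    """In-memory model of a hierarchical key space (not the etcd API)."""

    def __init__(self):
        self._data = {}

    def mk(self, key, value):
        # mk only creates; it refuses to overwrite an existing key.
        if key in self._data:
            raise KeyError(key + " already exists")
        self._data[key] = value

    def set(self, key, value):
        # set creates the key or overwrites its value.
        self._data[key] = value

    def get(self, key):
        return self._data[key]

    def ls(self, directory="/"):
        # List the immediate children of a directory, like a file system.
        prefix = directory.rstrip("/") + "/"
        children = set()
        for key in self._data:
            if key.startswith(prefix):
                first = key[len(prefix):].split("/", 1)[0]
                children.add(prefix + first)
        return sorted(children)


tree = KeyTree()
tree.mk("/atomic.io/network/config", '{"Network":"10.254.0.0/16"}')
print(tree.ls("/"))                   # ['/atomic.io']
print(tree.ls("/atomic.io/network"))  # ['/atomic.io/network/config']
```

Creating the key with a full path makes the intermediate directories visible to `ls` without any separate mkdir call, which matches the behaviour described above.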

Step 3: Configure the container network

1. Install docker on the kube-node hosts (apply this on every node)

[root@registry ~]# yum install docker docker-distribution

[root@registry ~]# vim /etc/sysconfig/docker

ADD_REGISTRY='--add-registry 192.168.1.100:5000'

INSECURE_REGISTRY='--insecure-registry 192.168.1.100:5000'

[root@registry ~]# vim /lib/systemd/system/docker.service   # Docker 1.13 sets the FORWARD chain policy to DROP after installation, so add the line below

ExecStartPost=/sbin/iptables -P FORWARD ACCEPT

[root@registry ~]# /sbin/iptables -P FORWARD ACCEPT

[root@registry ~]# systemctl daemon-reload

[root@registry ~]# systemctl restart docker

[root@registry ~]# systemctl enable docker

2. Set up the private registry on the registry host

[root@registry ~]# yum install docker docker-distribution

[root@registry ~]# systemctl enable docker-distribution

[root@registry ~]# systemctl start docker-distribution

[root@registry ~]# docker load -i centos.tar

Loaded image: docker.io/centos:latest

[root@registry ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.io/centos latest 76d6bc25b8a5 19 months ago 199.7 MB

3. Add the myos:latest image to the private registry

[root@registry ~]# docker run -it docker.io/centos:latest

[root@8798e6cd9c81 /]# cd /etc/yum.repos.d/

[root@8798e6cd9c81 yum.repos.d]# ls

CentOS-Base.repo CentOS.repo

[root@8798e6cd9c81 yum.repos.d]# cat CentOS-Base.repo

# CentOS-Base.repo

#

# The mirror system uses the connecting IP address of the client and the

# update status of each mirror to pick mirrors that are updated to and

# geographically close to the client. You should use this for CentOS updates

# unless you are manually picking other mirrors.

#

# If the mirrorlist= does not work for you, as a fall back you can try the

# remarked out baseurl= line instead.

#

#

[base]

name=CentOS-$releasever - Base - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/os/$basearch/

http://mirrors.aliyuncs.com/centos/$releasever/os/$basearch/

http://mirrors.cloud.aliyuncs.com/centos/$releasever/os/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#released updates

[updates]

name=CentOS-$releasever - Updates - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/

http://mirrors.aliyuncs.com/centos/$releasever/updates/$basearch/

http://mirrors.cloud.aliyuncs.com/centos/$releasever/updates/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that may be useful

[extras]

name=CentOS-$releasever - Extras - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/

http://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/

http://mirrors.cloud.aliyuncs.com/centos/$releasever/extras/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#additional packages that extend functionality of existing packages

[centosplus]

name=CentOS-$releasever - Plus - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/centosplus/$basearch/

http://mirrors.aliyuncs.com/centos/$releasever/centosplus/$basearch/

http://mirrors.cloud.aliyuncs.com/centos/$releasever/centosplus/$basearch/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

#contrib - packages by Centos Users

[contrib]

name=CentOS-$releasever - Contrib - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/contrib/$basearch/

http://mirrors.aliyuncs.com/centos/$releasever/contrib/$basearch/

http://mirrors.cloud.aliyuncs.com/centos/$releasever/contrib/$basearch/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

[root@8798e6cd9c81 yum.repos.d]# cat CentOS.repo

[local_repo]

name=CentOS-$releasever - Base

baseurl="ftp://192.168.1.254/centos-1804"

enabled=1

gpgcheck=0

[root@8798e6cd9c81 yum.repos.d]# yum install bash-completion net-tools iproute psmisc rsync lftp vim

[root@8798e6cd9c81 yum.repos.d]# exit

exit

[root@registry ~]# docker commit 8798e6cd9c81 myos:latest

[root@registry ~]# docker images

myos latest a9bf132858d5 9 days ago 285.9 MB

Point docker at the private registry's IP address

[root@registry ~]# vim /etc/sysconfig/docker

ADD_REGISTRY='--add-registry 192.168.1.100:5000'

INSECURE_REGISTRY='--insecure-registry 192.168.1.100:5000'

[root@registry ~]# vim /lib/systemd/system/docker.service   # Docker 1.13 sets the FORWARD chain policy to DROP after installation, so add the line below

ExecStartPost=/sbin/iptables -P FORWARD ACCEPT

[root@registry ~]# /sbin/iptables -P FORWARD ACCEPT

[root@registry ~]# iptables -nL FORWARD

Chain FORWARD (policy ACCEPT)

target prot opt source destination

DOCKER-ISOLATION all -- 0.0.0.0/0 0.0.0.0/0

DOCKER all -- 0.0.0.0/0 0.0.0.0/0

ACCEPT all -- 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED

ACCEPT all -- 0.0.0.0/0 0.0.0.0/0

ACCEPT all -- 0.0.0.0/0 0.0.0.0/0

[root@registry ~]# systemctl daemon-reload

[root@registry ~]# systemctl restart docker

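[root@registry ~]# docker push myos:latest   # push the image; the name carries no registry host, so it goes to the default registry, which is the private registry address configured above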

[root@registry ~]# curl http://192.168.1.100:5000/v2/_catalog

{"repositories":["defaultbackend","k8s-dns-dnsmasq-nanny-amd64","k8s-dns-kube-dns-amd64","k8s-dns-sidecar-amd64","kubernetes-dashboard-amd64","myos","nginx-ingress-controller","pod-infrastructure"]}

[root@registry ~]# curl http://192.168.1.100:5000/v2/myos/tags/list

{"name":"myos","tags":["latest","httpd","phpfpm","nginx"]}
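The two curl checks above can also be scripted. The following Python sketch builds the same registry URLs and inspects a tag-list response; the helper names (`catalog_url`, `tags_url`, `has_tag`) are hypothetical, and the sample response is the one shown above. It deliberately parses a string instead of contacting the registry, so it runs without network access.

```python
import json

REGISTRY = "http://192.168.1.100:5000"  # registry address used in this tutorial


def catalog_url(registry=REGISTRY):
    """URL that lists all repositories in the registry."""
    return registry + "/v2/_catalog"


def tags_url(repository, registry=REGISTRY):
    """URL that lists the tags of one repository."""
    return "{}/v2/{}/tags/list".format(registry, repository)


def has_tag(tags_response, tag):
    """Check the JSON body of a tags/list response for a given tag."""
    body = json.loads(tags_response)
    return tag in (body.get("tags") or [])


sample = '{"name":"myos","tags":["latest","httpd","phpfpm","nginx"]}'
print(tags_url("myos"))
print(has_tag(sample, "latest"))
```

To query a live registry, the body could be fetched with `urllib.request.urlopen(tags_url("myos")).read()` and passed to `has_tag`.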

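Step 4: Install flannel (note: the flannel service must start before the docker service) so that containers on different machines can reach each other

The flannel network

flannel is essentially an overlay network: it wraps the original packets (for example TCP data) inside another kind of network packet for routing and delivery. It currently supports UDP, VXLAN, AWS VPC, and GCE routing as forwarding backends. The goal of using flannel is to let containers on different hosts reach each other.

How it works

A packet leaving the source container is forwarded by the host's docker0 virtual interface to the flannel0 virtual interface. flannel0 is a point-to-point virtual interface, and the flanneld service listens on its other end. Through etcd, flannel maintains a routing table across nodes that records each node's subnet. The flanneld service on the source host wraps the original data in UDP and delivers it, according to its routing table, to the flanneld service on the destination node. There the data is unwrapped and enters the destination node's flannel0 interface, is forwarded to that host's docker0 interface, and is finally routed by docker0 to the target container just like local container traffic. This walk-through describes the UDP backend; with the vxlan backend configured in step 2, the interface is named flannel.1 and the encapsulation is done by the kernel.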
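The routing decision described above, "which node owns the subnet of the destination container", can be sketched with Python's `ipaddress` module. The lease table is an assumption made for illustration: 10.254.62.0/24 matches the flannel.1 address shown for the master later in this step, while the second entry and the /24 per-host subnet size are assumed. In a real cluster flanneld reads this information from etcd.

```python
import ipaddress

# Overlay network from /atomic.io/network/config.
NETWORK = ipaddress.ip_network("10.254.0.0/16")

# Hypothetical lease table: per-host subnet -> host IP.
LEASES = {
    ipaddress.ip_network("10.254.62.0/24"): "192.168.1.20",
    ipaddress.ip_network("10.254.71.0/24"): "192.168.1.21",
}


def next_hop(container_ip):
    """Return the host that owns the subnet containing container_ip."""
    addr = ipaddress.ip_address(container_ip)
    if addr not in NETWORK:
        raise ValueError("{} is outside the overlay network".format(container_ip))
    for subnet, host in LEASES.items():
        if addr in subnet:
            return host
    raise LookupError("no host has leased a subnet for {}".format(container_ip))


print(next_hop("10.254.71.5"))  # 192.168.1.21
```

The encapsulated packet is then sent to the returned host address, where it is unwrapped and handed to the local bridge.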

[root@kubemaseter ~]# yum install flannel

[root@kubemaseter ~]# vim /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://192.168.1.20:2379"   # set this to the IP address of the k8s master

[root@kubemaseter ~]# systemctl start flanneld   # if docker is already running, stop it first and start it again afterwards

[root@kubemaseter ~]# systemctl enable flanneld

[root@kubemaseter ~]# ifconfig flannel.1

flannel.1: flags=4163

inet 10.254.62.0 netmask 255.255.255.255 broadcast 0.0.0.0

ether 5e:88:4c:6c:0d:b5 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

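Note that flannel has to be installed on every machine (all master and node hosts). An Ansible playbook (yml) like the following can be used to roll out the configuration in batch: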

---
- hosts: node
  tasks:
    - name: remove firewalld-*
      yum:
        name: firewalld,firewalld-filesystem
        state: absent
    - name: docker install
      yum:
        name: docker
        state: latest
        update_cache: yes
    - name: sync /etc/sysconfig/docker file
      copy:
        src: "files/{{ item }}"
        dest: "/etc/sysconfig/{{ item }}"
        owner: root
        group: root
        mode: 0644
      with_items:
        - docker
        - flanneld
    - name: sync docker.service file
      copy:
        src: files/docker.service
        dest: /usr/lib/systemd/system/docker.service
        owner: root
        group: root
        mode: 0644
    - name: daemon-reload
      shell: systemctl daemon-reload

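Step 5: Install the master components

What is kube-master

The master components provide the cluster's control plane: they make global decisions for the cluster and detect and respond to cluster events.

The master mainly consists of kube-apiserver, kube-scheduler, kube-controller-manager, and etcd.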

[root@kubemaseter ~]# yum -y install kubernetes-master-1.10.3-0.el7.x86_64 kubernetes-client-1.10.3-0.el7.x86_64

[root@kubemaseter ~]# cd /etc/kubernetes/

[root@kubemaseter kubernetes]# ls

apiserver config controller-manager scheduler

[root@kubemaseter kubernetes]# vim config

KUBE_MASTER="--master=http://192.168.1.20:8080"   # change the IP on this line to the master host's IP

[root@kubemaseter kubernetes]# vim apiserver

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"   # change the IP on this line to 0.0.0.0

KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.1.20:2379"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ResourceQuota"

[root@kubemaseter kubernetes]# vim /etc/hosts

# ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

192.168.1.20 kubemaseter

192.168.1.21 kubenode1

192.168.1.22 kubenode2

192.168.1.23 kubenode3

192.168.1.100 registry

[root@kubemaseter kubernetes]# systemctl enable kube-apiserver kube-controller-manager kube-scheduler

[root@kubemaseter kubernetes]# systemctl start kube-apiserver kube-controller-manager kube-scheduler

[root@kubemaseter kubernetes]# kubectl get componentstatus

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

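Step 6: Install the node components (only one node is shown here; the other nodes are configured identically)

A kube-node is a node that runs containers, also called a compute node. Nodes can be scaled out horizontally; they run and maintain pods and provide the Kubernetes runtime environment.

A kube-node consists of kubelet, kube-proxy, and docker, so those are the packages to install.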

[root@kubenode1 ~]# yum install kubernetes-node-1.10.3-0.el7.x86_64

[root@kubenode1 kubernetes]# ls

config kubelet kubelet.kubeconfig proxy

[root@kubenode1 ~]# vim /etc/kubernetes/config

KUBE_MASTER="--master=http://192.168.1.20:8080"   # change this line

[root@kubenode1 kubernetes]# vim /etc/kubernetes/kubelet

KUBELET_ADDRESS="--address=0.0.0.0"   # change this line

KUBELET_HOSTNAME="--hostname-override=kubenode1"   # set to this node's name

KUBELET_ARGS="--cgroup-driver=systemd --fail-swap-on=false --kubeconfig=/etc/kubernetes/kubelet.kubeconfig --pod-infra-container-image=pod-infrastructure:latest --cluster-dns=10.254.254.253 --cluster-domain=tedu.local."

#####################################################################################

Explanation: --pod-infra-container-image=pod-infrastructure:latest names the pod infrastructure image. This image must be pushed to the private registry in advance:

[root@registry ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

192.168.1.100:5000/pod-infrastructure latest 99965fb98423 2 years ago 208.6 MB

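The file given by --kubeconfig=/etc/kubernetes/kubelet.kubeconfig may not exist, in which case it has to be created. Its content can be generated with the commands below; the result is written to /root/.kube/config by default, and that file can be used as a reference when writing kubelet.kubeconfig.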

[root@kubenode1 ~]# kubectl config set-cluster local --server="http://192.168.1.20:8080"

[root@kubenode1 ~]# kubectl config set-context --cluster="local" local

[root@kubenode1 ~]# kubectl config set current-context local

[root@kubenode1 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

[root@kubenode1 ~]# cat /root/.kube/config

apiVersion: v1
clusters:
- cluster:
    server: http://192.168.1.20:8080
  name: local
contexts:
- context:
    cluster: local
    user: ""
  name: local
current-context: local
kind: Config
preferences: {}
users: []

#################################################################################

[root@kubenode1 kubernetes]# vim /etc/kubernetes/kubelet.kubeconfig

kind: Config
clusters:
- cluster:
    server: http://192.168.1.20:8080
  name: local
contexts:
- context:
    cluster: local
  name: local
current-context: local
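Since every node needs the same small file, it can also be generated rather than typed by hand. The Python sketch below builds the same structure as a dictionary and prints it as JSON; `minimal_kubeconfig` is a hypothetical helper, and relying on JSON output is an assumption that rests on JSON being a subset of YAML, so the result should be checked with kubectl before use.

```python
import json


def minimal_kubeconfig(server, name="local"):
    """Build a kubeconfig structure for an unauthenticated API server."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{"name": name, "cluster": {"server": server}}],
        "contexts": [{"name": name, "context": {"cluster": name}}],
        "current-context": name,
    }


config = minimal_kubeconfig("http://192.168.1.20:8080")
print(json.dumps(config, indent=2))
```

The cluster name, context name, and current-context must all agree, which is the most common mistake when editing the file manually.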

[root@kubenode1 ~]# systemctl enable kubelet kube-proxy

[root@kubenode1 ~]# systemctl start kubelet kube-proxy

[root@kubenode1 ~]# systemctl status kubelet kube-proxy

[root@kubemaseter ~]# kubectl get node   # check the cluster status

NAME STATUS ROLES AGE VERSION

kubenode1 Ready