Flink Cluster Installation and Test Run

Download the installation package

Check your Hadoop and Scala versions; here they are Hadoop 2.6 and Scala 2.11.

http://mirror.bit.edu.cn/apache/flink/flink-1.7.2/flink-1.7.2-bin-scala_2.11.tgz

Extract

tar zxvf ./apps/flink-1.7.2-bin-scala_2.11.tgz -C /home/SP-in-BD/mm/installs

This installs Flink into the installs folder under the mm user.

Edit the Flink configuration file:

vi ./conf/flink-conf.yaml

# Java environment

env.java.home: /usr/java/jdk1.8.0_161

# Hostname of the master node

jobmanager.rpc.address: cdh4

# The RPC port where the JobManager is reachable.

jobmanager.rpc.port: 6124

# The heap size for the JobManager JVM

jobmanager.heap.size: 1024m

# The heap size for the TaskManager JVM

taskmanager.heap.size: 4096m

# The number of task slots that each TaskManager offers. Each slot runs one parallel pipeline.

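# How many slots each machine should run in parallel; as a rule of thumb, one slot per CPU core.

taskmanager.numberOfTaskSlots: 4

# The parallelism used for programs that did not specify any other parallelism.

# Maximum parallelism of the whole cluster: number of slave nodes * CPU cores per node.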

parallelism.default: 12
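
As a rough sanity check on parallelism.default, the number of slots the cluster can offer is the number of worker hosts multiplied by taskmanager.numberOfTaskSlots. The sketch below counts the hosts in a slaves-style file. The function name total_slots is a hypothetical helper for illustration, not part of Flink.

```shell
# Hypothetical helper (not part of Flink): estimate the total number of task
# slots as <worker hosts in the slaves file> * <slots per TaskManager>.
total_slots() {
  slaves_file="$1"
  slots_per_tm="$2"
  # Count non-empty lines that are not comments; each one is a worker host.
  workers=$(grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$slaves_file" | wc -l)
  echo $((workers * slots_per_tm))
}
```

For example, `total_slots ./conf/slaves 4` prints 8 for a slaves file that lists two workers. A job that asks for more parallel subtasks than there are slots generally cannot be scheduled, so it is worth comparing this number with parallelism.default.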

Edit the conf/masters file

vi ./conf/masters

cdh4

Edit the ./conf/slaves file

cdh3

cdh5

Copy to each node

Copy the extracted and configured folder to every node.

scp -r flink-1.7.2 cdh3:/

The master node is cdh4; the TaskExecutors run on cdh3 and cdh5.
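
Since every worker needs its own copy, the scp step can be generated from the slaves file instead of typed per host. This is a minimal sketch: print_scp_commands is a hypothetical helper name, and it only prints the commands so they can be reviewed before anything is copied.

```shell
# Hypothetical helper: print one scp command per worker host listed in a
# slaves-style file. It only prints; pipe the output to sh to actually copy.
print_scp_commands() {
  slaves_file="$1"   # e.g. ./conf/slaves
  src_dir="$2"       # e.g. flink-1.7.2
  dest_dir="$3"      # e.g. / (the destination used above)
  while IFS= read -r host || [ -n "$host" ]; do
    # Skip blank lines and comment lines.
    case "$host" in
      ''|'#'*) continue ;;
    esac
    echo "scp -r $src_dir $host:$dest_dir"
  done < "$slaves_file"
}
```

Running `print_scp_commands ./conf/slaves flink-1.7.2 /` with cdh3 and cdh5 in the file prints one `scp -r flink-1.7.2 <host>:/` line for each of them.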

Start the cluster

Run this on the master node only, which is cdh4 here:

/flink-1.7.2/bin/start-cluster.sh (starts the cluster in standalone mode)

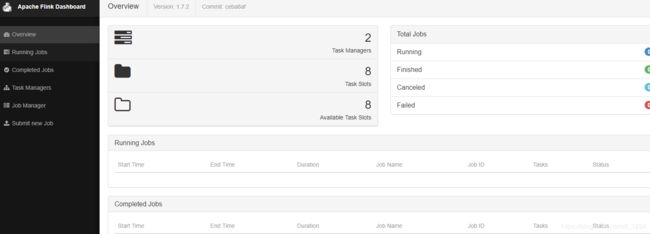

Web UI

http://cdh4:8082

Problems encountered

The cluster fails to start

Check the log: vi flink-zhengss-standalonesession-6-cdh3.log

2019-08-15 10:23:53,612 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Shutting StandaloneSessionClusterEntrypoint down with application status FAILED. Diagnostics java.net.BindException: Could not start actor system on any port in port range 6124

at org.apache.flink.runtime.clusterframework.BootstrapTools.startActorSystem(BootstrapTools.java:181)

at org.apache.flink.runtime.clusterframework.BootstrapTools.startActorSystem(BootstrapTools.java:121)

at org.apache.flink.runtime.clusterframework.BootstrapTools.startActorSystem(BootstrapTools.java:96)

at org.apache.flink.runtime.rpc.akka.AkkaRpcServiceUtils.createRpcService(AkkaRpcServiceUtils.java:78)

at org.apache.flink.runtime.entrypoint.ClusterEntrypoint.createRpcService(ClusterEntrypoint.java:284)

at org.apache.flink.runtime.entrypoint.ClusterEntrypoint.initializeServices(ClusterEntrypoint.java:255)

at org.apache.flink.runtime.entrypoint.ClusterEntrypoint.runCluster(ClusterEntrypoint.java:207)

at org.apache.flink.runtime.entrypoint.ClusterEntrypoint.lambda$startCluster$0(ClusterEntrypoint.java:163)

at org.apache.flink.runtime.security.NoOpSecurityContext.runSecured(NoOpSecurityContext.java:30)

at org.apache.flink.runtime.entrypoint.ClusterEntrypoint.startCluster(ClusterEntrypoint.java:162)

at org.apache.flink.runtime.entrypoint.ClusterEntrypoint.runClusterEntrypoint(ClusterEntrypoint.java:517)

at org.apache.flink.runtime.entrypoint.StandaloneSessionClusterEntrypoint.main(StandaloneSessionClusterEntrypoint.java:65)

.

2019-08-15 10:23:53,615 INFO akka.remote.RemoteActorRefProvider$RemotingTerminator - Shutting down remote daemon.

2019-08-15 10:23:53,617 INFO akka.remote.RemoteActorRefProvider$RemotingTerminator - Remote daemon shut down; proceeding with flushing remote transports.

2019-08-15 10:23:53,618 ERROR akka.remote.Remoting - Remoting system has been terminated abrubtly. Attempting to shut down transports

2019-08-15 10:23:53,618 INFO akka.remote.RemoteActorRefProvider$RemotingTerminator - Remoting shut down.

2019-08-15 10:23:53,621 ERROR org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Could not start cluster entrypoint StandaloneSessionClusterEntrypoint.

org.apache.flink.runtime.entrypoint.ClusterEntrypointException: Failed to initialize the cluster entrypoint StandaloneSessionClusterEntrypoint.

at org.apache.flink.runtime.entrypoint.ClusterEntrypoint.startCluster(ClusterEntrypoint.java:181)

at org.apache.flink.runtime.entrypoint.ClusterEntrypoint.runClusterEntrypoint(ClusterEntrypoint.java:517)

at org.apache.flink.runtime.entrypoint.StandaloneSessionClusterEntrypoint.main(StandaloneSessionClusterEntrypoint.java:65)

Caused by: java.net.BindException: Could not start actor system on any port in port range 6124

at org.apache.flink.runtime.clusterframework.BootstrapTools.startActorSystem(BootstrapTools.java:181)

at org.apache.flink.runtime.clusterframework.BootstrapTools.startActorSystem(BootstrapTools.java:121)

at org.apache.flink.runtime.clusterframework.BootstrapTools.startActorSystem(BootstrapTools.java:96)

at org.apache.flink.runtime.rpc.akka.AkkaRpcServiceUtils.createRpcService(AkkaRpcServiceUtils.java:78)

at org.apache.flink.runtime.entrypoint.ClusterEntrypoint.createRpcService(ClusterEntrypoint.java:284)

at org.apache.flink.runtime.entrypoint.ClusterEntrypoint.initializeServices(ClusterEntrypoint.java:255)

at org.apache.flink.runtime.entrypoint.ClusterEntrypoint.runCluster(ClusterEntrypoint.java:207)

at org.apache.flink.runtime.entrypoint.ClusterEntrypoint.lambda$startCluster$0(ClusterEntrypoint.java:163)

at org.apache.flink.runtime.security.NoOpSecurityContext.runSecured(NoOpSecurityContext.java:30)

at org.apache.flink.runtime.entrypoint.ClusterEntrypoint.startCluster(ClusterEntrypoint.java:162)

... 2 more

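Cause:

In flink-conf.yaml, jobmanager.rpc.port used the default port 6123, which in this environment turned out to be an IPv6 port. The server is hosted on Huawei Cloud, which currently only allows IPv4 traffic.

Solution:

Change jobmanager.rpc.port from the default to 6124.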
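
More generally, a java.net.BindException means the process could not bind the requested address and port, for instance because another process already holds the port. Before and after changing the port it may help to check what is listening. The sketch below filters netstat-style output; port_is_listening is a hypothetical helper name, and it assumes the usual Linux netstat column order (local address in column 4, state in column 6).

```shell
# Hypothetical helper: read `netstat -anlt`-style lines on stdin and succeed
# if any socket in LISTEN state has a local address ending in :<port>.
port_is_listening() {
  port="$1"
  awk -v suffix=":$port" '
    $6 == "LISTEN" && substr($4, length($4) - length(suffix) + 1) == suffix { found = 1 }
    END { exit !found }
  '
}
```

A possible use is `netstat -anlt | port_is_listening 6123 && echo "port 6123 is in use"`. An IPv6 listener shows up with a local address such as `:::6123`, which the suffix match also covers.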

-

Flink测试示例

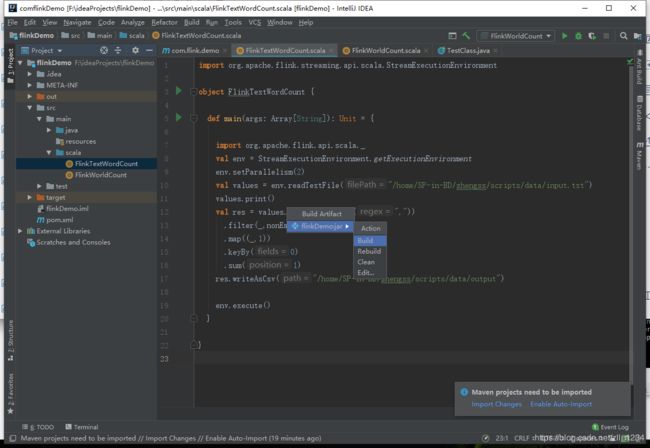

1)测试代码

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

object FlinkTextWordCount {
  def main(args: Array[String]): Unit = {
    // Brings the implicit TypeInformation instances into scope.
    import org.apache.flink.api.scala._

    val env = StreamExecutionEnvironment.getExecutionEnvironment
    env.setParallelism(2)

    // Read the input file line by line and echo each line.
    val values = env.readTextFile("/home/SP-in-BD/zhengss/scripts/data/input.txt")
    values.print()

    // Split on commas, drop empty tokens, and keep a running count per word.
    val res = values.flatMap(_.split(","))
      .filter(_.nonEmpty)
      .map((_, 1))
      .keyBy(0)
      .sum(1)

    res.writeAsCsv("/home/SP-in-BD/zhengss/scripts/data/output")
    env.execute()
  }
}

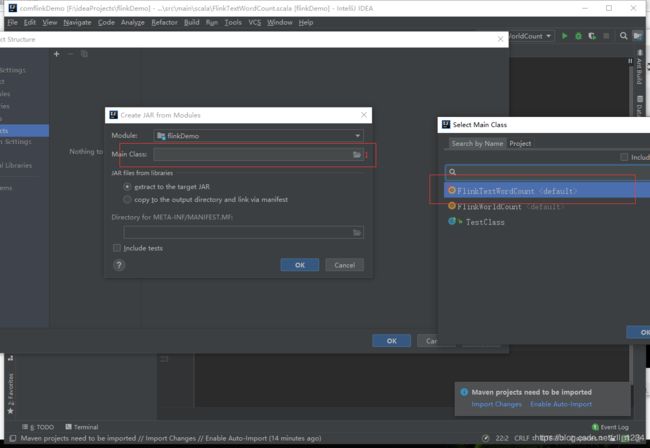

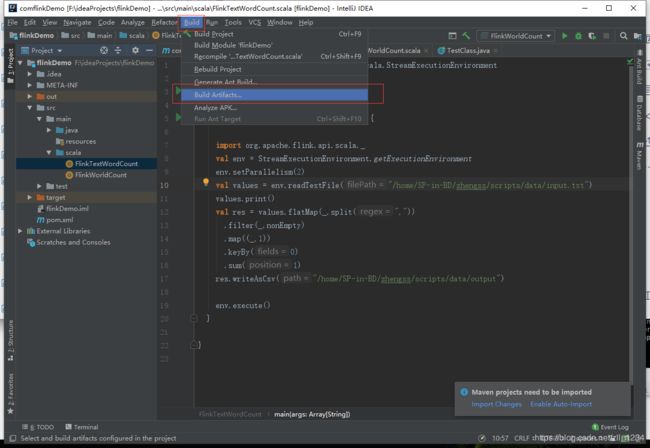

2) Packaging

1. Packaging error

org.apache.flink.client.program.ProgramInvocationException: Neither a 'Main-Class', nor a 'program-class' entry was found in the jar file.

at org.apache.flink.client.program.PackagedProgram.getEntryPointClassNameFromJar(PackagedProgram.java:596)

at org.apache.flink.client.program.PackagedProgram.<init>(PackagedProgram.java:190)

at org.apache.flink.client.program.PackagedProgram.<init>(PackagedProgram.java:128)

at org.apache.flink.client.cli.CliFrontend.buildProgram(CliFrontend.java:862)

at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:204)

at org.apache.flink.client.cli.CliFrontend.parseParameters(CliFrontend.java:1050)

at org.apache.flink.client.cli.CliFrontend.lambda$main$11(CliFrontend.java:1126)

at org.apache.flink.runtime.security.NoOpSecurityContext.runSecured(NoOpSecurityContext.java:30)

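Cause:

The jar was packaged incorrectly in IntelliJ IDEA.

Solution:

The correct way to package is as follows:

Before packaging there are no out, target, or META-INF directories; the build generates all three.

Then upload the resulting jar to the server.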
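
Before uploading, it can be worth confirming that the jar's manifest actually names an entry point, since that is what the error above complains about. The sketch below extracts the Main-Class value from manifest text on stdin; manifest_main_class is a hypothetical helper name used only for illustration.

```shell
# Hypothetical helper: read a MANIFEST.MF on stdin and print the value of its
# Main-Class entry. Prints nothing if the entry is missing.
manifest_main_class() {
  awk -F': ' '/^Main-Class:/ { sub(/\r$/, "", $2); print $2; exit }'
}
```

Assuming unzip is installed, one way to use it is `unzip -p flinkDemo.jar META-INF/MANIFEST.MF | manifest_main_class`. If nothing is printed, the entry class can instead be passed on the command line with the `-c` option of `flink run`, for example `flink run -c FlinkTextWordCount flinkDemo.jar`.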

2. Packaging error

Error:(10, 29) could not find implicit value for evidence parameter of type org.apache.flink.api.common.typeinfo.TypeInformation[String]

val res = values.flatMap(_.split(","))

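Cause:

The implicit TypeInformation instances of Flink's Scala API are not in scope in the current file; adding the following import fixes it: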

import org.apache.flink.api.scala._

3. Run the program

Configure environment variables

vi ~/.bashrc

Add the following:

export FLINK_HOME=/home/SP-in-BD/zhengss/apps/flink-1.7.2/

export PATH=$FLINK_HOME/bin:$PATH
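
Each time ~/.bashrc is sourced again, a plain export of this kind prepends the same directory once more. A small guard avoids the duplicates; prepend_path below is a hypothetical helper, a sketch rather than anything Flink provides.

```shell
# Hypothetical helper: prepend a directory to a PATH-style string unless it
# is already one of its entries.
prepend_path() {
  dir="$1"
  current="$2"
  case ":$current:" in
    *":$dir:"*) echo "$current" ;;          # already present, leave unchanged
    *) echo "$dir${current:+:$current}" ;;  # add in front
  esac
}
```

In ~/.bashrc this could be used as `export PATH=$(prepend_path "$FLINK_HOME/bin" "$PATH")`.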

Run an ordinary Flink jar (this applies to the test code above)

flink run flinkDemo.jar

Run a jar that monitors a socket stream

netstat -anlp | grep 9999

nc -l 9999

flink run flinkDemo.jar --hostname cdh4 --port 9999