Object Detection: the IOU Family (IOU/GIOU/DIOU/CIOU)

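Loss design is a central part of deep learning, and in object detection there is no getting around IOU and its variants. Early detectors used a distance between bbox coordinates as the regression loss; in recent years, IOU variants have been used directly as the training loss, which gives a noticeable accuracy gain and makes it easier for the network to learn box positions.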

IOU(Intersection over Union)
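For reference, the quantity computed in this section can be written as a formula. The symbols $A$ and $B$ (the two boxes viewed as regions) and $|\cdot|$ (area) are notation introduced here for illustration, not taken from the original post.

$$
\mathrm{IOU}(A, B) = \frac{|A \cap B|}{|A \cup B|} = \frac{|A \cap B|}{|A| + |B| - |A \cap B|}
$$

Judging from the slicing in the code, boxes are assumed to be stored as (cx, cy, w, h), so the area of each box is simply w * h, and the corners needed for the intersection are (cx - w/2, cy - h/2) and (cx + w/2, cy + h/2).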

```python
import tensorflow as tf


# Standalone function; add `self` as the first parameter to use it as a method.
def bbox_iou(boxes1, boxes2):
    """IOU of boxes given as (cx, cy, w, h) in the last dimension."""
    boxes1_area = boxes1[..., 2] * boxes1[..., 3]
    boxes2_area = boxes2[..., 2] * boxes2[..., 3]

    # Convert (cx, cy, w, h) to corners (x_min, y_min, x_max, y_max).
    boxes1 = tf.concat([boxes1[..., :2] - boxes1[..., 2:] * 0.5,
                        boxes1[..., :2] + boxes1[..., 2:] * 0.5], axis=-1)
    boxes2 = tf.concat([boxes2[..., :2] - boxes2[..., 2:] * 0.5,
                        boxes2[..., :2] + boxes2[..., 2:] * 0.5], axis=-1)

    # Intersection rectangle; its width and height are clamped at zero
    # when the boxes do not overlap.
    left_up = tf.maximum(boxes1[..., :2], boxes2[..., :2])
    right_down = tf.minimum(boxes1[..., 2:], boxes2[..., 2:])
    inter_section = tf.maximum(right_down - left_up, 0.0)
    inter_area = inter_section[..., 0] * inter_section[..., 1]

    union_area = boxes1_area + boxes2_area - inter_area
    iou = 1.0 * inter_area / union_area
    return iou
```

CVPR2019--GIOU: https://arxiv.org/pdf/1902.09630.pdf

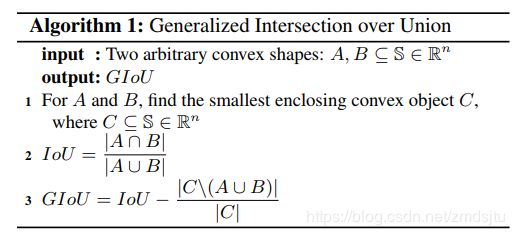

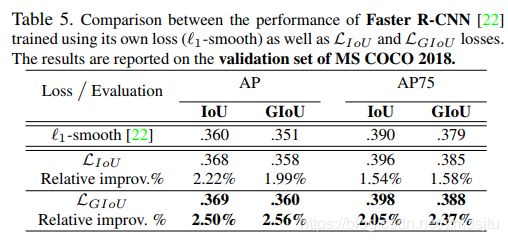

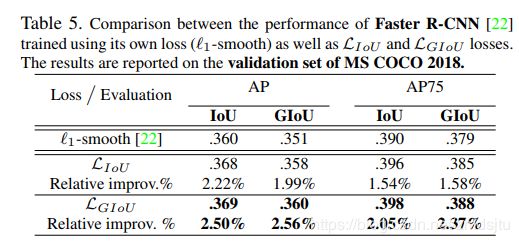

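- GIOU = IOU - (area of the smallest enclosing box not covered by the union) / (area of the enclosing box)

- Using 1 - GIOU as the loss in place of a bbox distance gives better results on the evaluated datasets

- Its range is (-1, 1]. When two rects do not overlap, IOU is 0 no matter how far apart they are, whereas GIOU is still nonzero (negative, and more negative as the rects move apart), which is why it works as a loss

- It reflects the intuitive closeness of two rects better than IOU does; most importantly, of course, it improves accuracy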
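To make the non-overlapping case concrete, here is a minimal pure-Python sketch (no TensorFlow) that evaluates IOU and GIOU for one target box and two disjoint candidates. The helper names `to_corners` and `iou_giou` and the example boxes are made up for illustration, and the (cx, cy, w, h) layout is an assumption carried over from the code in this post.

```python
def to_corners(box):
    """Convert (cx, cy, w, h) to (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def iou_giou(box_a, box_b):
    """Return (IOU, GIOU) for two boxes given as (cx, cy, w, h)."""
    ax1, ay1, ax2, ay2 = to_corners(box_a)
    bx1, by1, bx2, by2 = to_corners(box_b)

    # Intersection: clamp at zero when the boxes do not overlap.
    inter_w = max(min(ax2, bx2) - max(ax1, bx1), 0.0)
    inter_h = max(min(ay2, by2) - max(ay1, by1), 0.0)
    inter = inter_w * inter_h

    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    iou = inter / union

    # Smallest axis-aligned box enclosing both inputs.
    enclose = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))
    giou = iou - (enclose - union) / enclose
    return iou, giou


target = (1.0, 1.0, 2.0, 2.0)  # 2x2 box covering [0, 2] x [0, 2]
near = (4.0, 1.0, 2.0, 2.0)    # disjoint, gap of 1 to the right
far = (9.0, 1.0, 2.0, 2.0)     # disjoint, gap of 6 to the right

iou_near, giou_near = iou_giou(target, near)
iou_far, giou_far = iou_giou(target, far)
print(iou_near, giou_near)  # 0.0 -0.2
print(iou_far, giou_far)    # 0.0 -0.6
```

Both candidates have IOU 0, so an IOU-based loss cannot tell them apart, while GIOU drops from -0.2 to -0.6 as the candidate moves away and therefore still provides a training signal.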

```python
import tensorflow as tf


# Standalone function; add `self` as the first parameter to use it as a method.
def bbox_giou(boxes1, boxes2):
    """GIOU of boxes given as (cx, cy, w, h) in the last dimension."""
    # Convert (cx, cy, w, h) to corners (x_min, y_min, x_max, y_max).
    boxes1 = tf.concat([boxes1[..., :2] - boxes1[..., 2:] * 0.5,
                        boxes1[..., :2] + boxes1[..., 2:] * 0.5], axis=-1)
    boxes2 = tf.concat([boxes2[..., :2] - boxes2[..., 2:] * 0.5,
                        boxes2[..., :2] + boxes2[..., 2:] * 0.5], axis=-1)

    # Make sure the first corner is top-left and the second is bottom-right,
    # even if a predicted width or height came out negative.
    boxes1 = tf.concat([tf.minimum(boxes1[..., :2], boxes1[..., 2:]),
                        tf.maximum(boxes1[..., :2], boxes1[..., 2:])], axis=-1)
    boxes2 = tf.concat([tf.minimum(boxes2[..., :2], boxes2[..., 2:]),
                        tf.maximum(boxes2[..., :2], boxes2[..., 2:])], axis=-1)

    boxes1_area = (boxes1[..., 2] - boxes1[..., 0]) * (boxes1[..., 3] - boxes1[..., 1])
    boxes2_area = (boxes2[..., 2] - boxes2[..., 0]) * (boxes2[..., 3] - boxes2[..., 1])

    # Intersection and union, exactly as for plain IOU.
    left_up = tf.maximum(boxes1[..., :2], boxes2[..., :2])
    right_down = tf.minimum(boxes1[..., 2:], boxes2[..., 2:])
    inter_section = tf.maximum(right_down - left_up, 0.0)
    inter_area = inter_section[..., 0] * inter_section[..., 1]
    union_area = boxes1_area + boxes2_area - inter_area
    iou = inter_area / union_area

    # Smallest box enclosing both inputs.
    enclose_left_up = tf.minimum(boxes1[..., :2], boxes2[..., :2])
    enclose_right_down = tf.maximum(boxes1[..., 2:], boxes2[..., 2:])
    enclose = tf.maximum(enclose_right_down - enclose_left_up, 0.0)
    enclose_area = enclose[..., 0] * enclose[..., 1]

    # Penalty: the share of the enclosing box not covered by the union.
    giou = iou - 1.0 * (enclose_area - union_area) / enclose_area
    return giou
```

Rezatofighi H, Tsoi N, Gwak J Y, et al. Generalized Intersection over Union: A Metric and A Loss for Bounding Box Regression[J]. 2019.

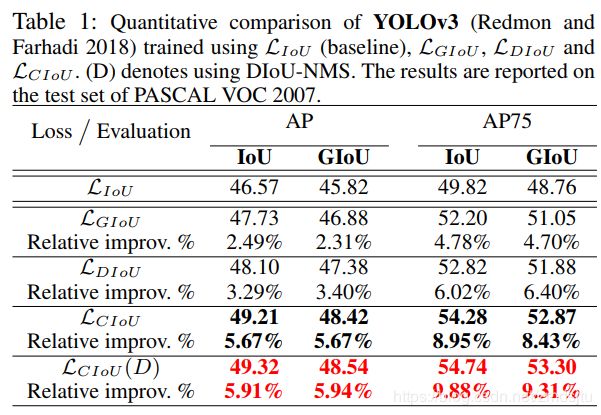

AAAI2020--DIOU/CIOU: https://arxiv.org/pdf/1911.08287.pdf

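- DIOU = IOU - (distance between the box centers / diagonal length of the smallest enclosing box)²

- As a loss it converges faster and gives a further accuracy gain

- In short: just use it

- CIOU goes one step further and also considers the aspect ratio, adding it as a penalty term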
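Written out, the two measures look as follows. This is a sketch of the definitions as given in the linked paper; the symbols are not from the original post. Here $\mathbf{b}$ and $\mathbf{b}^{gt}$ are the centers of the predicted and ground-truth boxes, $\rho$ is the Euclidean distance, $c$ is the diagonal length of the smallest enclosing box, and $w, h$ are box width and height.

$$
\begin{aligned}
\mathrm{DIOU} &= \mathrm{IOU} - \frac{\rho^2(\mathbf{b}, \mathbf{b}^{gt})}{c^2} \\
v &= \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2 \\
\alpha &= \frac{v}{(1 - \mathrm{IOU}) + v} \\
\mathrm{CIOU} &= \mathrm{DIOU} - \alpha v
\end{aligned}
$$

The corresponding losses are $1 - \mathrm{DIOU}$ and $1 - \mathrm{CIOU}$. Note that $4/\pi^2 \approx 0.4053$, which is the constant 0.4052847483961759 that appears in the code.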

The last row corresponds to training with CIOU as the loss and using DIOU for NMS.
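Using DIOU for NMS means the suppression test compares DIOU, rather than plain IOU, against the threshold. A minimal pure-Python sketch of that idea follows. The function names `diou` and `diou_nms`, the threshold value, and the example boxes are all hypothetical, and the greedy loop is a plain illustration rather than the paper's or any library's implementation.

```python
def diou(box_a, box_b):
    """DIOU of two boxes given as (cx, cy, w, h)."""
    ax1, ay1 = box_a[0] - box_a[2] / 2, box_a[1] - box_a[3] / 2
    ax2, ay2 = box_a[0] + box_a[2] / 2, box_a[1] + box_a[3] / 2
    bx1, by1 = box_b[0] - box_b[2] / 2, box_b[1] - box_b[3] / 2
    bx2, by2 = box_b[0] + box_b[2] / 2, box_b[1] + box_b[3] / 2

    inter_w = max(min(ax2, bx2) - max(ax1, bx1), 0.0)
    inter_h = max(min(ay2, by2) - max(ay1, by1), 0.0)
    inter = inter_w * inter_h
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    iou = inter / union

    # Squared center distance over squared diagonal of the enclosing box.
    center_sq = (box_a[0] - box_b[0]) ** 2 + (box_a[1] - box_b[1]) ** 2
    diag_sq = ((max(ax2, bx2) - min(ax1, bx1)) ** 2
               + (max(ay2, by2) - min(ay1, by1)) ** 2)
    return iou - center_sq / diag_sq


def diou_nms(boxes, scores, threshold=0.5):
    """Greedy NMS: drop a box if its DIOU with any kept box is >= threshold.

    Returns the indices of the kept boxes, highest score first.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(diou(boxes[i], boxes[k]) < threshold for k in keep):
            keep.append(i)
    return keep


boxes = [
    (5.0, 5.0, 4.0, 4.0),   # highest score
    (5.2, 5.0, 4.0, 4.0),   # near-duplicate of box 0
    (12.0, 5.0, 4.0, 4.0),  # a separate object
]
scores = [0.9, 0.8, 0.7]
kept = diou_nms(boxes, scores, threshold=0.5)
print(kept)  # [0, 2]
```

Because the center-distance penalty lowers the score of boxes whose centers are far apart, two heavily overlapping boxes with distant centers are less likely to suppress each other than under IOU-based NMS, which is the motivation for using it on crowded scenes.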

```python
import tensorflow as tf


# Standalone function; add `self` as the first parameter to use it as a method.
def bbox_diou_ciou(boxes1, boxes2):
    """DIOU and CIOU of boxes given as (cx, cy, w, h) in the last dimension."""
    # Vector between the two box centers.
    center_vec = boxes1[..., :2] - boxes2[..., :2]
    # Aspect-ratio term v = 4 / pi^2 * (atan(w1 / h1) - atan(w2 / h2))^2.
    v_ = 0.4052847483961759 * (tf.atan(boxes1[..., 2] / boxes1[..., 3])
                               - tf.atan(boxes2[..., 2] / boxes2[..., 3])) ** 2

    # Convert (cx, cy, w, h) to corners (x_min, y_min, x_max, y_max).
    boxes1 = tf.concat([boxes1[..., :2] - boxes1[..., 2:] * 0.5,
                        boxes1[..., :2] + boxes1[..., 2:] * 0.5], axis=-1)
    boxes2 = tf.concat([boxes2[..., :2] - boxes2[..., 2:] * 0.5,
                        boxes2[..., :2] + boxes2[..., 2:] * 0.5], axis=-1)

    # Make sure the first corner is top-left and the second is bottom-right.
    boxes1 = tf.concat([tf.minimum(boxes1[..., :2], boxes1[..., 2:]),
                        tf.maximum(boxes1[..., :2], boxes1[..., 2:])], axis=-1)
    boxes2 = tf.concat([tf.minimum(boxes2[..., :2], boxes2[..., 2:]),
                        tf.maximum(boxes2[..., :2], boxes2[..., 2:])], axis=-1)

    boxes1_area = (boxes1[..., 2] - boxes1[..., 0]) * (boxes1[..., 3] - boxes1[..., 1])
    boxes2_area = (boxes2[..., 2] - boxes2[..., 0]) * (boxes2[..., 3] - boxes2[..., 1])

    # Intersection and union, exactly as for plain IOU.
    left_up = tf.maximum(boxes1[..., :2], boxes2[..., :2])
    right_down = tf.minimum(boxes1[..., 2:], boxes2[..., 2:])
    inter_section = tf.maximum(right_down - left_up, 0.0)
    inter_area = inter_section[..., 0] * inter_section[..., 1]
    union_area = boxes1_area + boxes2_area - inter_area
    iou = inter_area / union_area

    # Width and height of the smallest box enclosing both inputs.
    enclose_left_up = tf.minimum(boxes1[..., :2], boxes2[..., :2])
    enclose_right_down = tf.maximum(boxes1[..., 2:], boxes2[..., 2:])
    enclose_vec = enclose_right_down - enclose_left_up

    # Squared center distance and squared enclosing-box diagonal.
    center_dis_sq = center_vec[..., 0] ** 2 + center_vec[..., 1] ** 2
    enclose_dis_sq = enclose_vec[..., 0] ** 2 + enclose_vec[..., 1] ** 2

    # DIOU subtracts the squared ratio (center distance / diagonal)^2.
    diou = iou - 1.0 * center_dis_sq / enclose_dis_sq

    # Trade-off weight for the aspect-ratio term.
    a_ = v_ / (1 - iou + v_)
    ciou = diou - a_ * v_
    return diou, ciou
```
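As a quick sanity check of the DIOU and CIOU definitions, the following pure-Python sketch evaluates three candidate boxes that all lie inside the same target and all have the same IOU. The function name `iou_diou_ciou`, the example boxes, and the small `eps` guard in the weight are assumptions added for illustration; they are not part of the original code.

```python
import math


def iou_diou_ciou(pred, target, eps=1e-9):
    """Return (IOU, DIOU, CIOU) for two boxes given as (cx, cy, w, h)."""
    px1, py1 = pred[0] - pred[2] / 2, pred[1] - pred[3] / 2
    px2, py2 = pred[0] + pred[2] / 2, pred[1] + pred[3] / 2
    tx1, ty1 = target[0] - target[2] / 2, target[1] - target[3] / 2
    tx2, ty2 = target[0] + target[2] / 2, target[1] + target[3] / 2

    inter_w = max(min(px2, tx2) - max(px1, tx1), 0.0)
    inter_h = max(min(py2, ty2) - max(py1, ty1), 0.0)
    inter = inter_w * inter_h
    union = pred[2] * pred[3] + target[2] * target[3] - inter
    iou = inter / union

    # Center-distance penalty: squared distance over squared diagonal.
    center_sq = (pred[0] - target[0]) ** 2 + (pred[1] - target[1]) ** 2
    diag_sq = ((max(px2, tx2) - min(px1, tx1)) ** 2
               + (max(py2, ty2) - min(py1, ty1)) ** 2)
    diou = iou - center_sq / diag_sq

    # Aspect-ratio term and its trade-off weight.
    v = 4 / math.pi ** 2 * (math.atan(target[2] / target[3])
                            - math.atan(pred[2] / pred[3])) ** 2
    alpha = v / (1 - iou + v + eps)  # eps: added guard against 0/0
    ciou = diou - alpha * v
    return iou, diou, ciou


target = (5.0, 5.0, 4.0, 4.0)
candidates = {
    "centered": (5.0, 5.0, 2.0, 2.0),
    "off-center": (6.0, 6.0, 2.0, 2.0),
    "wrong aspect ratio": (5.0, 5.0, 4.0, 1.0),
}
results = {name: iou_diou_ciou(box, target) for name, box in candidates.items()}
for name, (iou, diou, ciou) in results.items():
    print(f"{name:>18}: IOU={iou:.4f} DIOU={diou:.4f} "
          f"CIOU={ciou:.4f} loss={1 - ciou:.4f}")
```

All three candidates have IOU 0.25 (and, since they sit inside the target, the same GIOU). DIOU falls to 0.1875 for the off-center box, and CIOU additionally penalizes the box with the wrong aspect ratio (to roughly 0.234), so only these two measures rank the centered, correctly shaped box highest.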

Zheng Z, Wang P, Liu W, et al. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression[J]. 2019.

References:

https://zhuanlan.zhihu.com/p/94799295

https://github.com/YunYang1994/tensorflow-yolov3