Paper Study Notes: A Multisource Selective Transfer Framework for Multiobjective Optimization

Multisource Selective Transfer Framework in Multiobjective Optimization Problems

If you find these notes useful, feel free to join the discussion so we can learn from each other.

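- These are my study notes on J. Zhang, W. Zhou, X. Chen, W. Yao and L. Cao, "Multisource Selective Transfer Framework in Multiobjective Optimization Problems," in IEEE Transactions on Evolutionary Computation, vol. 24, no. 3, pp. 424-438, June 2020, doi: 10.1109/TEVC.2019.2926107. They are for study only, not for commercial use, and will be removed on request in case of infringement. My academic background is limited, so corrections are welcome wherever my understanding is wrong.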

Abstract

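- In real-world complex system design, a new optimization instance with a different parameter configuration is usually started from scratch, which wastes a great deal of time repeating similar search processes. Inspired by transfer learning, which reuses past experience to solve related tasks, many researchers have turned to learning from past optimization instances to accelerate a target instance. In practical applications, similar source instances are often already stored in a database. The basic question is how to evaluate transferability so as to avoid negative transfer. To capture the relatedness between source and target instances, the authors develop an optimization instance representation called the centroid distribution, built from the probabilistic model that an estimation of distribution algorithm (EDA) learns from elite candidate solutions during evolution. The Wasserstein distance is used to measure the similarity between the centroid distributions of different optimization instances. On this basis they propose a novel framework, multisource selective transfer optimization, which includes three strategies for selecting sources. To choose a suitable strategy, four selection recommendations are summarized according to the similarity between the source and target centroid distributions. The framework helps select the most suitable sources and thereby improves the efficiency of solving multiobjective optimization problems. To evaluate the framework and the selection strategies, two experiments are conducted:

- a benchmark of complex multiobjective optimization problems

- a practical satellite layout optimization design problem

The experimental results show that the proposed algorithm achieves faster convergence and better hypervolume (HV) values.

- Keywords: estimation of distribution algorithm (EDA), multiobjective optimization, multisource transfer, transfer optimization, Wasserstein distance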

Introduction

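- For complex system design problems in real engineering, take satellite system design as an example: a great deal of past experience exists, for instance satellite layout solutions for different configurations that were stored in a database before a new design starts. Using this experience can improve both the optimization result and the convergence speed. This matters for complex system design, which usually involves computationally expensive multidisciplinary analysis [1]. How to exploit this knowledge to speed up a new design, however, remains a puzzle for satellite designers. Transfer learning is concerned with transferring knowledge from one domain to another. It has been applied successfully to classical machine learning tasks such as classification [2]-[4], sentiment analysis [5], and digit recognition [6]. In recent years, researchers in the evolutionary computation community have tried to apply transfer learning to optimization tasks [7]-[10].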

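- Three questions need attention:

- Q1 (transferability): how to identify whether similar problems are transferable, so as to reduce unsuitable negative transfer.

- Q2 (what to transfer): the component to transfer, such as solutions, structures, or parameters.

- Q3 (how to transfer): the final step is how to reuse the information extracted from the source problem. Most studies focus on one-to-one domain adaptation algorithms, such as transfer component analysis (TCA) [11] and TrAdaBoost [12], evaluated on benchmarks such as the MNIST dataset [13] and the WIFI dataset [14]. Few studies, however, address multisource transfer learning.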

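- In transfer learning research, most work concentrates on Q2 and Q3, especially Q3. Q1 is just as important, because transferring from an unsuitable source causes negative transfer. This paper focuses on transferability in the multisource setting and studies how to measure the similarity between different optimization problems.

- [15] shows that the degree of relatedness between tasks is important for the effectiveness of multitask learning. [16] develops an autoencoder-based multitask optimization algorithm whose task selection depends mainly on 1) the degree of overlap between the Pareto-front solutions and 2) the similarity of the task fitness landscapes; these factors ensure relatedness. In real scenarios, however, this information about the target problem is not available at the start.

- To assess the relatedness of different optimization problems, a representation of optimization instances must be fixed first. Santana et al. [17] treat an optimization problem as a graph by means of an EDA, the estimation of Bayesian network algorithm (EBNA). In that work tasks are represented as graphs, and task relatedness can be obtained through network analysis. The representation, however, depends heavily on hand-crafted graph features and may lose information about the task. Yu et al. [18] devised a new way to represent reinforcement learning tasks whose parameters are sampled from a uniform distribution. They represent a task by the reward vector that prototype policies obtain over a few iterations (called shallow trials), so that past reinforcement learning tasks can be reused. Shallow trials during evolution, however, are too noisy to represent an optimization instance accurately.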

-

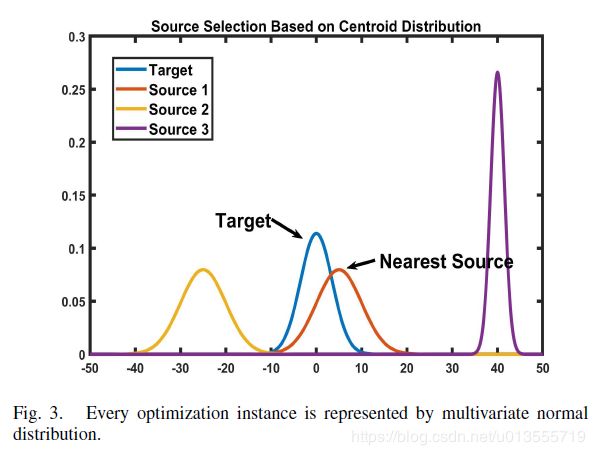

在每一个世代,利用

估计分布算法(EDA)表示种群分布,我们可以利用综合分布来表示实例,在这里称为质心分布。质心表示种群在一个世代中的中心。通过计算实例(任务)分布之间的Wasserstein distance(WD)可以获得任务之间的相似性。有了相似性,可以使用三种不同的基于遗传算法的资源选择策略来获得迁移的知识以加速进化过程中的搜索过程,为了高效使用这种策略,采用四种选择机制 -

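The contributions of the paper are:

- A new representation, the centroid distribution, is proposed to measure the similarity between different optimization instances.

- A new multisource selective transfer optimization framework, built on EDA and NSGA-II with three selection strategies, is proposed for solving multiobjective optimization problems.

- Four recommendations for choosing the source selection strategy are summarized, and on that basis a mixed strategy is proposed to deal with negative transfer.

- The rest of the paper is organized as follows:

- Section II reviews transfer learning, EDA (estimation of distribution algorithms), and evolutionary dynamic optimization (EDO).

- Section III presents the basic framework and flowchart of the multisource selective transfer optimization algorithm based on EDA and NSGA-II.

- Section IV reports experiments on a multiobjective fireworks algorithm benchmark, summarizes the four source selection recommendations, and validates the algorithm on a practical problem.

- Section V concludes the paper.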

Related work

Transfer optimization

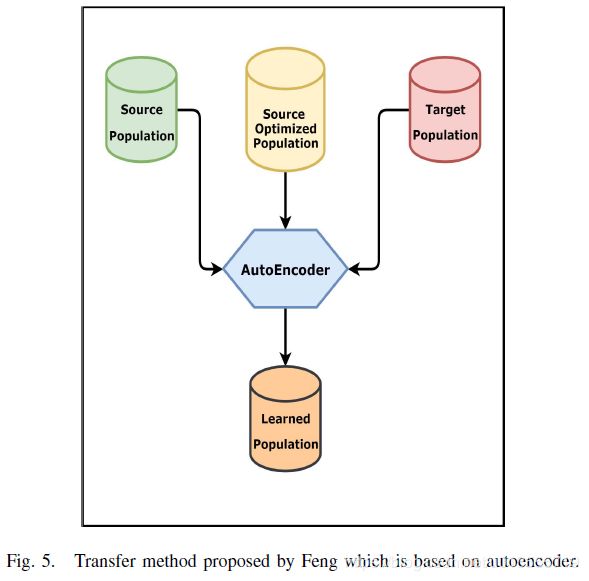

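- Recently, much work has aimed to improve the efficiency of existing evolutionary algorithms with machine learning techniques, in particular by reusing search experience gained on related problems. To solve multiobjective optimization problems, Feng et al. [7] proposed a new transfer method based on an autoencoder model that speeds up the search of the evolutionary algorithm.

- Transfer optimization can be divided into two categories: single-source and multisource. Most current research addresses single-source transfer. Multisource transfer optimization has to consider not only how to transfer but also instance representation and source selection.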

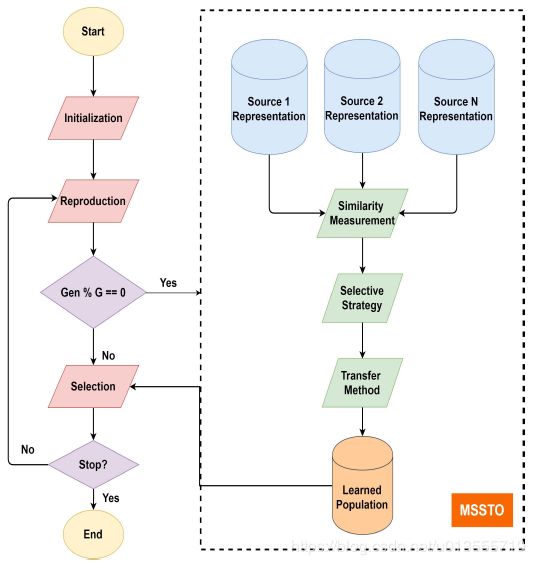

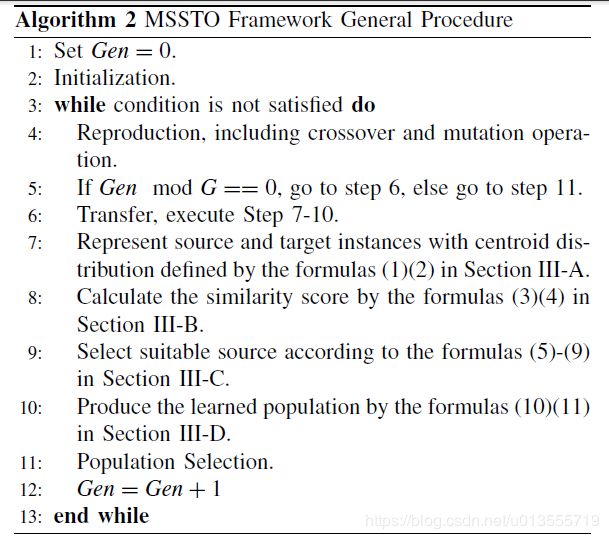

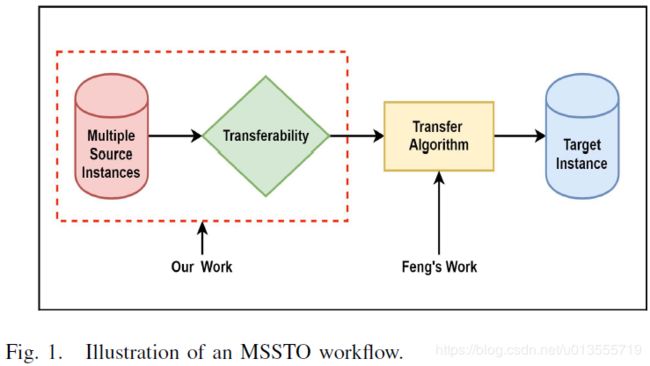

- The detailed procedure and a schematic are shown in Fig. 1.

Estimation of Distribution Algorithms (EDA)

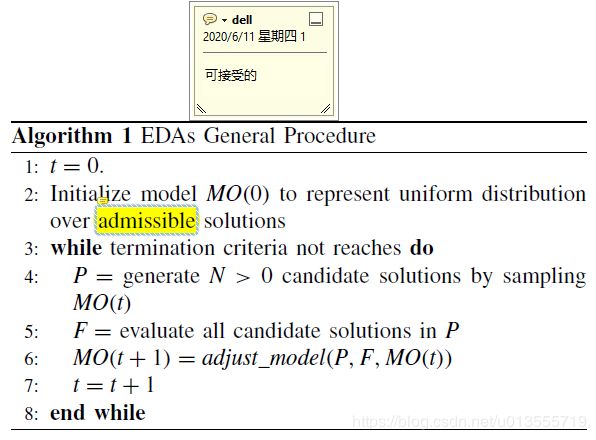

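- An EDA is a stochastic optimization method that guides the search for the optimum by learning an explicit probabilistic model of elite candidate solutions and sampling from it. The main difference between EDAs and conventional evolutionary algorithms (such as genetic algorithms) is that the latter generate new candidate solutions through mutation and crossover, which can be viewed as an implicit distribution, whereas an EDA uses an explicit distribution represented by a probabilistic model, for example a multivariate normal distribution or a Bayesian network.

- The usual structure of an EDA is shown in the accompanying figure.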
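The sample, select, re-estimate loop described above can be sketched as a univariate Gaussian EDA. This is only a minimal illustration, not the algorithm used in the paper: the sphere objective, the function name `gaussian_eda`, and every parameter value are assumptions chosen for the example.

```python
import numpy as np


def gaussian_eda(objective, dim, pop_size=100, elite_frac=0.3,
                 generations=50, seed=0):
    """Minimise `objective` with a univariate (independent) Gaussian EDA."""
    rng = np.random.default_rng(seed)
    mean = rng.uniform(-5.0, 5.0, size=dim)   # initial model: a broad Gaussian
    std = np.full(dim, 5.0)
    n_elite = max(2, int(pop_size * elite_frac))
    best_x, best_f = None, np.inf
    for _ in range(generations):
        # 1) sample candidates from the explicit probabilistic model
        pop = rng.normal(mean, std, size=(pop_size, dim))
        fitness = np.apply_along_axis(objective, 1, pop)
        # 2) select the elite candidates (smallest objective values)
        elite = pop[np.argsort(fitness)[:n_elite]]
        # 3) re-estimate the model from the elite, one Gaussian per variable
        mean = elite.mean(axis=0)
        std = elite.std(axis=0) + 1e-12       # avoid a zero-width model
        if fitness.min() < best_f:
            best_f = float(fitness.min())
            best_x = pop[np.argmin(fitness)].copy()
    return best_x, best_f


def sphere(x):
    """Toy objective (an assumption for this example): sum of squares."""
    return float(np.sum(x ** 2))


best_x, best_f = gaussian_eda(sphere, dim=5)
```

A genetic algorithm would replace steps 1 and 3 with crossover and mutation; here the model parameters `mean` and `std` are the explicit distribution the text refers to.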

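Depending on the interdependence among decision variables, EDAs can be divided into three categories:

- Univariate factorization: every variable is independent of the others. Representative algorithms include population-based incremental learning (PBIL) [22], the univariate marginal distribution algorithm (UMDA) [23], and the compact genetic algorithm (cGA) [24].

- Bivariate factorization: the dependencies among variables form a tree or a forest. Representative algorithms include mutual information maximizing input clustering (MIMIC) [25] and the EDA based on dependency trees and bivariate marginal distributions [26].

- Multivariate factorization: dependencies are represented by a directed acyclic graph or an undirected graph. Bayesian networks [27] and Markov networks [28] are two representative models.

- In recent years EDAs have been applied to many challenging optimization problems, especially multiobjective [29]-[33] and multimodal [34], [35] optimization. With the probabilistic model learned from elite solutions, a number of studies [36] focus on reusing models from past experience to accelerate the search on a target instance. Inspired by this research on transfer learning in EDAs, the authors propose a new way to represent instances, the centroid distribution, and on top of that representation three source selection strategies that produce suitable knowledge for transfer.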

Evolutionary Dynamic Optimization (EDO)

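- EDO is one of the most active research areas in evolutionary computation. In some EDO settings the problems share common patterns as the environment changes. Motivated by this, many studies focus on reusing past search experience from similar environments to accelerate the evolutionary process [37], [38].

- One common approach is to learn from a few special points and predict how the optimum moves. Li et al. [39] proposed a special-point-based prediction strategy (SPPS) that uses, for example, the feed-forward center point, boundary points, and knee points. Ruan et al. [40] proposed a hybrid diversity maintenance method based on the center point to improve prediction accuracy. Zou et al. [41] proposed predicting the nondominated set from knee points so that the population converges quickly to the Pareto front (PF).

- Another approach predicts where individuals or the population should be re-initialized when a change occurs. Zhou et al. [42] use historical search experience to guide the search on similar targets and solve dynamic optimization problems efficiently. Two re-initialization strategies are proposed: one predicts the new locations of individuals from history, and the other perturbs the current population with noise whose variance is estimated from previous changes.

- When the environment changes periodically, a third approach, the memory-based approach, is commonly used: it solves EDO problems by reusing information about the relevant Pareto-optimal sets (POS) stored in memory [43]-[46]. Jiang and Yang [37] proposed a steady-state and generational evolutionary algorithm (SGEA), which reuses well-distributed past solutions and, when the environment changes, relocates solutions related to the new Pareto front using information from the previous and current environments. Multipopulation mechanisms are also a useful way to tackle such problems [47], [48].

- Some recent work integrates transfer learning algorithms (such as TCA) directly into dynamic optimization algorithms. Jiang et al. proposed two algorithmic frameworks of this kind: Tr-DMOEA, which combines transfer learning with population-based evolutionary algorithms [8], and DANE-EDA, which combines domain adaptation with an EDA based on nonparametric estimation [9]. Unlike that work, this paper focuses on how to select suitable sources from multiple source tasks to accelerate evolution.

- The paper argues that dynamic problems do use historical information, but apart from some parameters the problem definition does not change. Transfer learning is different, because the history comes from a problem entirely different from the one being optimized. How to measure the similarity between two problems and choose a suitable transfer source is therefore the focus of the paper.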

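Multisource Selective Transfer Optimization Framework

- Most existing studies are interested in one-to-one transfer optimization and overlook the multisource nature of real scenarios. The paper proposes a multisource selective transfer optimization framework to handle multiple source instances.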

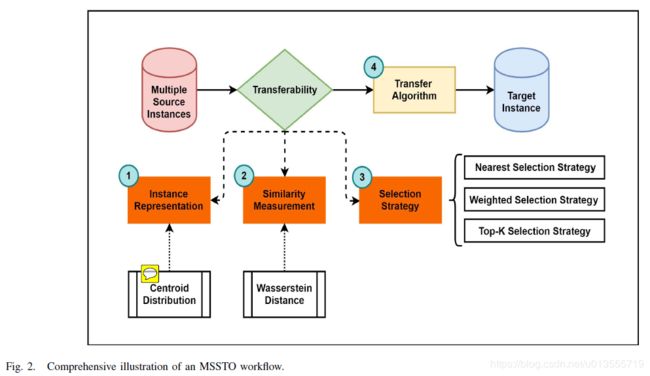

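- As Fig. 2 shows, the framework first introduces the instance representation, then a source-target similarity measure, and finally three source instance selection strategies.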

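Optimization Instance Representation

- Source selection based on the centroid representation

- By running a GA-style algorithm, an elite population is obtained through the selection operation, and the distribution of the selected solutions can be learned as the explicit probabilistic model of an EDA. This learned model is called the centroid distribution; it indicates how the current population tends to spread in the search space.

- The following representation is key and deserves attention!

- To avoid negative transfer, suitable sources should be identified before the transfer algorithm starts. The proposed representation reflects some similarity information between source and target during evolution, which helps the target instance choose suitable sources for transfer.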

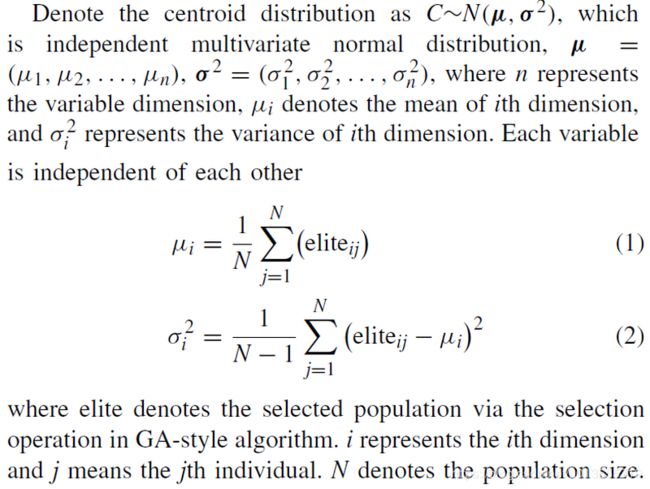
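The defining equations of the centroid distribution are not reproduced in these notes, so the following is only a sketch under an assumption: the selected (elite) solutions are summarized by one independent Gaussian per decision variable. The function name `centroid_distribution` and the toy data are hypothetical.

```python
import numpy as np


def centroid_distribution(elite):
    """Fit an independent Gaussian to an elite population.

    elite: array of shape (n_solutions, n_variables).
    Returns (mean, std), each of shape (n_variables,).
    """
    elite = np.asarray(elite, dtype=float)
    mean = elite.mean(axis=0)   # centroid of the selected solutions
    std = elite.std(axis=0)     # spread along each decision variable
    return mean, std


# Toy elite population (made-up values): three solutions, two variables.
elite = np.array([[0.0, 1.0], [2.0, 1.0], [4.0, 1.0]])
mean, std = centroid_distribution(elite)
```

In the framework the elite set would come from the selection step of the GA-style optimizer, and the same fit would be applied to every source instance and to the target.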

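Source-Target Similarity Measure

- The WD is a distance function defined between probability distributions on a given metric space [50]. The paper uses the WD to compute the distance between the centroid distributions of source and target tasks.

- Because the centroid distribution C is a multivariate normal distribution with independent components, the WD can be computed with the following formula:

- For background on the WD and the Kullback–Leibler divergence, see the following blog posts:

- KL divergence between multivariate Gaussian distributions

- Multivariate Gaussian distributions with uncorrelated dimensions

- The multivariate Gaussian distribution

- KL divergence and WD between the Gaussian distributions of the decision variables of two populations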
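For two Gaussians with diagonal covariance, the squared 2-Wasserstein distance has the standard closed form W² = ‖μ1 − μ2‖² + ‖σ1 − σ2‖². The sketch below implements that closed form. Whether the paper uses exactly this order of the WD, and how it turns a distance into a similarity score, is not shown in these notes; `similarity_from_distance` is a hypothetical mapping added only for illustration.

```python
import numpy as np


def wasserstein2_diag_gaussians(mean_a, std_a, mean_b, std_b):
    """2-Wasserstein distance between N(mean_a, diag(std_a^2)) and N(mean_b, diag(std_b^2))."""
    mean_a, std_a = np.asarray(mean_a, float), np.asarray(std_a, float)
    mean_b, std_b = np.asarray(mean_b, float), np.asarray(std_b, float)
    squared = np.sum((mean_a - mean_b) ** 2) + np.sum((std_a - std_b) ** 2)
    return float(np.sqrt(squared))


def similarity_from_distance(distance):
    """Hypothetical mapping into (0, 1]: a smaller distance gives a larger similarity."""
    return 1.0 / (1.0 + distance)


# Same spread, means 5 apart (a 3-4-5 triangle), so the distance is 5.
d = wasserstein2_diag_gaussians([0.0, 0.0], [1.0, 1.0], [3.0, 4.0], [1.0, 1.0])
s = similarity_from_distance(d)
```

Unlike the KL divergence, this distance is symmetric and stays finite even when one of the standard deviations is zero.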

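Source Selection Strategies

- Different optimization methods and stopping criteria give different results even on the same optimization task. For highly complex, nonlinear, multimodal problems with several disconnected local optima, even the same optimization operators may produce a different result on every run. Sources that look very different may therefore come from similar tasks. In practice, given a large amount of source data and no prior knowledge of the objective function or of the optimizer used for each source, it is hard to judge whether a difference between source and target stems from a difference between the tasks. The paper therefore adopts the selection principle "the closer the centroid distributions, the more related the instances", which is a sufficient but not a necessary condition: if the distance is small, the instances are judged to be related and can be selected; conversely, if the instances are related, nothing guarantees that their distributions are close.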

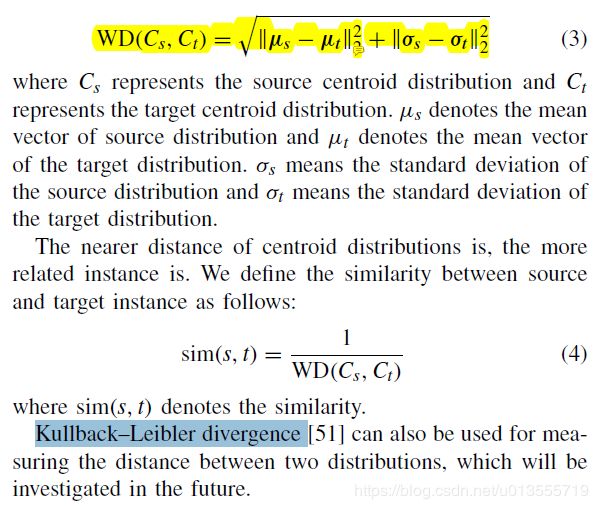

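- Based on the analysis above and on the similarity values, three source selection strategies are proposed to generate the population transferred to the target instance, as shown in Fig. 4.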

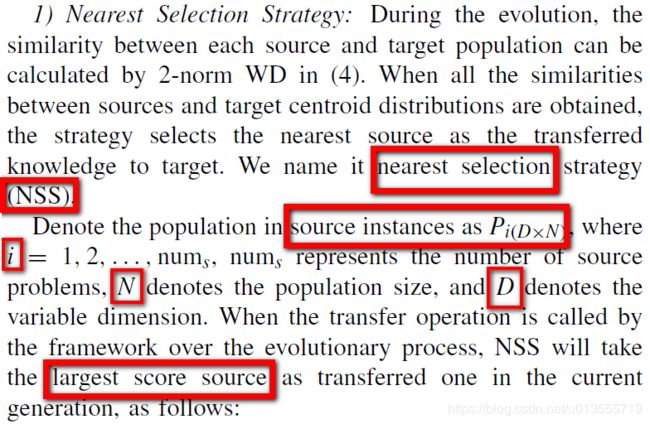

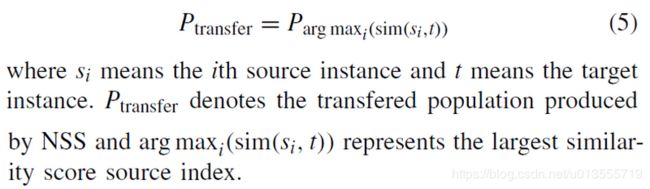

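Nearest Selection Strategy (NSS): transfer from the task with the most similar centroid distribution

- NSS assumes that the most similar task contains the most useful knowledge to transfer.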

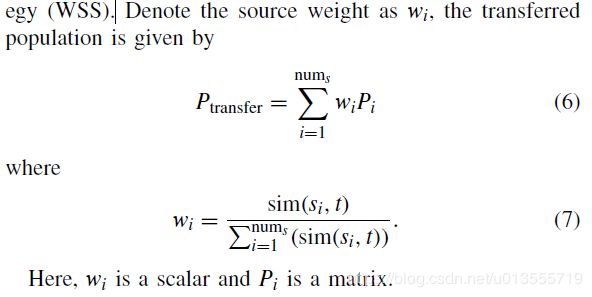
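As a minimal sketch, NSS reduces to an argmax over the similarity scores. The function name `nearest_selection` and the toy populations are hypothetical; the similarity scores are assumed to have been computed beforehand from the centroid distributions.

```python
import numpy as np


def nearest_selection(similarities, source_populations):
    """Return the index and population of the source most similar to the target."""
    best = int(np.argmax(similarities))
    return best, source_populations[best]


# Three toy sources (made-up values); the second one is the most similar.
sims = [0.2, 0.7, 0.1]
pops = [np.zeros((4, 2)), np.ones((4, 2)), np.full((4, 2), 2.0)]
index, chosen = nearest_selection(sims, pops)
```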

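Weighted Selection Strategy (WSS): use the knowledge of all sources

- In some cases several sources lie at almost the same similarity level (that is, they are about equally similar to the target and hard to choose between). Transferring from a single source would then lose useful information from the others. For this situation a new strategy is proposed that uses the knowledge of all sources. Because different sources contribute differently to transfer efficiency, the final source is the weighted sum of all sources in the current generation. This is called the weighted selection strategy (WSS).

- Here wi is a scalar and P is a matrix.

- In WSS every source is useful, but each contributes to the transfer to a different degree.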
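The weighted sum can be sketched as follows. The exact weight formula is not reproduced in these notes, so weights proportional to the similarity scores are an assumption, as is the requirement that all source populations share one shape with rows in a comparable order (for example, sorted the same way). The name `weighted_selection` is hypothetical.

```python
import numpy as np


def weighted_selection(similarities, source_populations):
    """Blend all source populations into one: sum_i w_i * P_i."""
    sims = np.asarray(similarities, dtype=float)
    weights = sims / sims.sum()                 # assumed: w_i proportional to similarity
    stacked = np.stack(source_populations)      # (n_sources, n_solutions, n_variables)
    blended = np.tensordot(weights, stacked, axes=1)  # contracts over the source axis
    return weights, blended


# Two toy sources (made-up values) with similarities 1 and 3.
pops = [np.zeros((4, 2)), np.full((4, 2), 4.0)]
weights, blended = weighted_selection([1.0, 3.0], pops)
```

Each wi is a scalar that scales the whole population matrix Pi, which matches the remark above that wi is a scalar and P is a matrix.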

Top-K Selection Strategy (TopK): pick the top K sources by similarity
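These notes give only the heading for this strategy, so the sketch below covers just the ranking step: keep the K sources with the highest similarity. How the paper then combines the K populations is not shown here, so the function simply returns them. The name `top_k_selection` is hypothetical.

```python
import numpy as np


def top_k_selection(similarities, source_populations, k):
    """Return indices and populations of the k most similar sources, best first."""
    sims = np.asarray(similarities, dtype=float)
    order = np.argsort(sims)[::-1][:k]          # indices sorted by descending similarity
    indices = [int(i) for i in order]
    return indices, [source_populations[i] for i in indices]


# Four toy sources (made-up values); sources 1 and 3 are the two most similar.
sims = [0.2, 0.7, 0.1, 0.5]
pops = [np.full((3, 2), float(i)) for i in range(4)]
indices, selected = top_k_selection(sims, pops, k=2)
```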

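Strategy Selection Recommendations

- This part summarizes four recommendations that guide the choice of a suitable selection strategy under specific conditions.

- Here msr denotes the share of the total similarity over all source tasks that is held by the most similar source task.

- The variance of the similarities guides the choice between WSS and TopK.

- Small similarity variance: WSS. Large similarity variance: TopK.

- Based on the analysis above, the recommendations for choosing a source selection strategy can be summarized as follows:

- msr is large: use NSS and pick the best source.

- msr is small and the similarity variance is small: use WSS.

- msr is small and the similarity variance is large: use TopK.

- msr is low and the variance lies in the borderline range between the WSS and Top-K strategies: invoking transfer is not recommended.

(Could this be overlooking the possibility of a useful jump that does not depend on similarity?!)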
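The four recommendations can be read as a small decision rule. In the sketch below, msr is computed as the largest similarity divided by the sum of all similarities, following the description above. The threshold values `msr_high`, `var_low`, and `var_high` are hypothetical placeholders; the notes do not give the values used in the paper.

```python
import numpy as np


def choose_strategy(similarities, msr_high=0.5, var_low=0.01, var_high=0.05):
    """Pick a source selection strategy from the similarity scores.

    All three thresholds are illustrative assumptions, not values from the paper.
    """
    sims = np.asarray(similarities, dtype=float)
    msr = sims.max() / sims.sum()   # share held by the most similar source
    var = sims.var()                # spread of the similarity scores
    if msr >= msr_high:
        return "NSS"                # one source clearly dominates
    if var <= var_low:
        return "WSS"                # sources are about equally similar
    if var >= var_high:
        return "TopK"               # a few sources stand out from the rest
    return "none"                   # borderline case: skip the transfer


dominant = choose_strategy([0.9, 0.05, 0.05])
uniform = choose_strategy([0.30, 0.31, 0.29, 0.30])
spread = choose_strategy([0.9, 0.8, 0.1, 0.1, 0.1])
borderline = choose_strategy([0.5, 0.3, 0.2, 0.1])
```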

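- Based on these recommendations, a mixed version of the three strategies is proposed, called the mixed selection strategy (MSS). To find out whether transfer is needed in a given generation, the source selection is triggered every time. Whether to transfer is then decided by the recommendations above, using the similarity information between all sources and the target. More similarity computations cost more, but they also use more information.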

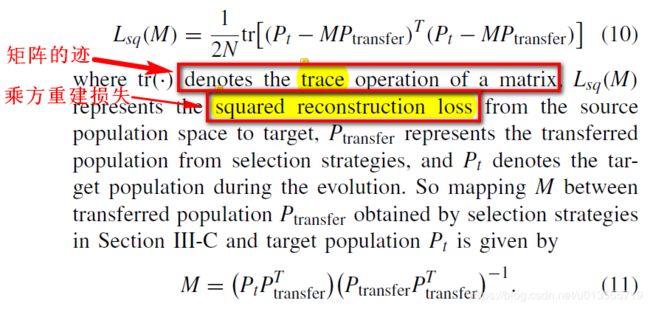

Transfer Method

- Transfer is performed with the autoencoding evolutionary search proposed in [7].

- Specifically:

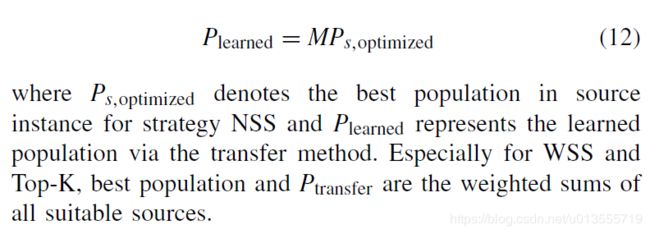

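- The source and target populations can be connected by a single-layer denoising autoencoder [7], which has a sound theoretical derivation. As shown in Fig. 5, the key to the autoencoder-based transfer method is learning the mapping M from the source population to the target population.

- Note that this mapping matrix keeps changing and improving over the iterations, so it has to be rebuilt every certain number of generations. The optimized solutions of past problems carry a great deal of knowledge that can speed up the target search; by learning the mapping, the best solutions from past experience can be transferred to the target.

- The transfer matrix is finally determined by the following formula: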
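The formula itself is missing from these notes. In [7] the single-layer autoencoder has a closed-form least-squares solution, M = (Q Pᵀ)(P Pᵀ)⁻¹, where the columns of P and Q are paired source and target solutions. The sketch below assumes that form, assumes both problems have the same number of variables, and assumes the columns have already been paired (for example by sorting both populations the same way). The function names are hypothetical, and the pseudo-inverse is my own choice for numerical safety.

```python
import numpy as np


def learn_mapping(source_pop, target_pop):
    """Least-squares mapping M such that M @ source_pop approximates target_pop.

    Both populations have shape (n_variables, n_solutions), one solution per column.
    """
    P = np.asarray(source_pop, dtype=float)
    Q = np.asarray(target_pop, dtype=float)
    # M = (Q P^T)(P P^T)^{-1}; pinv guards against a singular P P^T
    return (Q @ P.T) @ np.linalg.pinv(P @ P.T)


def transfer(mapping, source_best):
    """Map the best source solutions (columns) into the target search space."""
    return mapping @ np.asarray(source_best, dtype=float)


# Synthetic check: the target is an exact linear image of the source.
rng = np.random.default_rng(0)
P = rng.normal(size=(3, 20))
A = np.array([[2.0, 0.0, 0.0], [0.0, 1.0, 1.0], [0.0, 0.0, -1.0]])
Q = A @ P
M = learn_mapping(P, Q)
moved = transfer(M, P[:, :2])
```

Because the populations change every generation, M has to be relearned whenever the transfer is invoked, which is the point made above about rebuilding the mapping periodically.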

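Proposed Framework

- the population of the previous generation

- the offspring population after crossover and mutation

- the population obtained by the transfer algorithm

- After initialization, the framework loops over population recombination, information transfer, and selection. To save computation, the transfer operation is invoked only when the generation number is a multiple of G. When the transfer operator is invoked, the source and target instances are first represented by their centroid distributions, defined by (1) and (2). The similarity scores between sources and target are then computed by (3) and (4). Next, suitable sources are selected according to (5)-(9). Finally, the learned population to be transferred is produced by (10) and (11). The loop stops after the final fitness evaluation is completed. The three selection strategies defined in (5)-(9) provide the final source population for the subsequent transfer step. The strategy improves not only the optimization result but also the convergence speed, which reduces the number of fitness evaluations. In practice, fewer evaluations mean fewer simulations or experiments, which saves considerable computational or experimental cost.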
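The control flow of the loop can be sketched with the three operators passed in as plain callables. This shows only the scheduling (transfer every G generations, then selection over parents, offspring, and transferred solutions). The operator names, the 1-based generation counter, and the toy operators are assumptions, and a real implementation would use NSGA-II operators instead.

```python
def run_framework(population, recombine, transfer, select, generations, G):
    """Loop over recombination, periodic transfer, and selection."""
    for gen in range(1, generations + 1):
        offspring = recombine(population)
        # the transfer operator is only invoked when gen is a multiple of G
        transferred = transfer(population) if gen % G == 0 else []
        population = select(population + offspring + transferred, len(population))
    return population


# Toy operators (assumptions for the example): minimise |x| on a list of floats.
calls = []


def toy_recombine(pop):
    return [x * 0.5 for x in pop]


def toy_transfer(pop):
    calls.append(len(calls) + 1)   # record every invocation
    return [0.0]                   # pretend the source knows the optimum


def toy_select(candidates, size):
    return sorted(candidates, key=abs)[:size]


final = run_framework([8.0, -6.0, 4.0], toy_recombine, toy_transfer,
                      toy_select, generations=6, G=3)
```

With `generations=6` and `G=3` the transfer operator runs in generations 3 and 6 only, which is where the saving in similarity and mapping computations comes from.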

References

[1] W. Yao, X. Chen, Q. Ouyang, and M. V. Tooren, “A reliability-based multidisciplinary design optimization procedure based on combined probability and evidence theory,” Struct. Multidiscipl. Optim., vol. 48, no. 2, pp. 339–354, 2013.

[2] C. B. Do and A. Y. Ng, “Transfer learning for text classification,” in Proc. 18th Adv. Neural Inf. Process. Syst., Vancouver, BC, Canada, Dec. 2005, pp. 299–306. [Online]. Available: http://papers.nips.cc/paper/2843-transfer-learning-for-text-classification

[3] A. Quattoni, M. Collins, and T. Darrell, “Transfer learning for image classification with sparse prototype representations,” in Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. (CVPR), Anchorage, AK, USA, Jun. 2008, pp. 1–8. [Online]. Available: https://doi.org/10.1109/CVPR.2008.4587637

[4] S. J. Pan and Q. Yang, “A survey on transfer learning,” IEEE Trans. Knowl. Data Eng., vol. 22, no. 10, pp. 1345–1359, Oct. 2010. [Online]. Available: https://doi.org/10.1109/TKDE.2009.191

[5] Y. Yoshida, T. Hirao, T. Iwata, M. Nagata, and Y. Matsumoto, “Transfer learning for multiple-domain sentiment analysis— Identifying domain dependent/independent word polarity,” in Proc. 25th AAAI Conf. Artif. Intell., San Francisco, CA, USA, Aug. 2011, pp. 1286–1291. [Online]. Available: http://www.aaai.org/ocs/index.php/AAAI/AAAI11/paper/view/3597

[6] H. Hosseinzadeh and F. Razzazi, “LMDT: A weakly-supervised large-margin-domain-transfer for handwritten digit recognition,” Eng. Appl. Artif. Intell., vol. 52, pp. 119–125, Jun. 2016. [Online]. Available: https://doi.org/10.1016/j.engappai.2016.02.014

[7] L. Feng, Y.-S. Ong, S. Jiang, and A. Gupta, “Autoencoding evolutionary search with learning across heterogeneous problems,” IEEE Trans. Evol. Comput., vol. 21, no. 5, pp. 760–772, Oct. 2017. [Online]. Available: https://doi.org/10.1109/TEVC.2017.2682274

[8] M. Jiang, Z. Huang, L. Qiu, W. Huang, and G. G. Yen, “Transfer learning-based dynamic multiobjective optimization algorithms,” IEEE Trans. Evol. Comput., vol. 22, no. 4, pp. 501–514, Aug. 2018. [Online]. Available: https://doi.org/10.1109/TEVC.2017.2771451

[9] M. Jiang, L. Qiu, Z. Huang, and G. G. Yen, “Dynamic multi-objective estimation of distribution algorithm based on domain adaptation and nonparametric estimation,” Inf. Sci., vol. 435, pp. 203–223, Apr. 2018. [Online]. Available: https://doi.org/10.1016/j.ins.2017.12.058

[10] R. Santana, A. Mendiburu, and J. A. Lozano, “Structural transfer using EDAs: An application to multi-marker tagging SNP selection,” in Proc. IEEE Congr. Evol. Comput. (CEC), Brisbane, QLD, Australia, Jun. 2012, pp. 1–8. [Online]. Available: https://doi.org/10.1109/CEC.2012.6252963

[11] S. J. Pan, I. W. Tsang, J. T. Kwok, and Q. Yang, “Domain adaptation via transfer component analysis,” IEEE Trans. Neural Netw., vol. 22, no. 2, pp. 199–210, Feb. 2011. [Online]. Available: https://doi.org/10.1109/TNN.2010.2091281

[12] W. Dai, Q. Yang, G.-R. Xue, and Y. Yu, “Boosting for transfer learning,” in Proc. 24th Int. Conf. Mach. Learn. (ICML), Corvallis, OR, USA, Jun. 2007, pp. 193–200. [Online]. Available: https://doi.org/10.1145/1273496.1273521

[13] Y. Lecun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition,” Proc. IEEE, vol. 86, no. 11, pp. 2278–2324, Nov. 1998.

[14] J. G. Rohra, B. Perumal, S. J. Narayanan, P. Thakur, and R. B. Bhatt, “User localization in an indoor environment using fuzzy hybrid of particle swarm optimization & gravitational search algorithm with neural networks,” in Proc. 6th Int. Conf. Soft Comput. Problem Solving, 2017, pp. 286–295.

[15] T. Liu, Q. Yang, and D. Tao, “Understanding how feature structure transfers in transfer learning,” in Proc. 26th Int. Joint Conf. Artif. Intell. (IJCAI), Melbourne, VIC, Australia, Aug. 2017, pp. 2365–2371. [Online]. Available: https://doi.org/10.24963/ijcai.2017/329

[16] L. Feng et al., “Evolutionary multitasking via explicit autoencoding,” IEEE Trans. Cybern., vol. 49, no. 9, pp. 3457–3470, Sep. 2019.

[17] R. Santana, C. Bielza, and P. Larranaga, “Network measures for re-using problem information in EDAs,” Dept. Artif. Intell., Faculty Informat., Tech. Univ. Madrid, Madrid, Spain, Rep. UPM-FI/DIA/2010–3, 2010.

[18] Y. Yu, S.-Y. Chen, Q. Da, and Z.-H. Zhou, “Reusable reinforcement learning via shallow trails,” IEEE Trans. Neural Netw. Learn. Syst., vol. 29, no. 6, pp. 2204–2215, Jun. 2018. [Online]. Available: https://doi.org/10.1109/TNNLS.2018.2803729

[19] K. Deb, A. Pratap, S. Agarwal, and T. Meyarivan, “A fast and elitist multiobjective genetic algorithm: NSGA-II,” IEEE Trans. Evol. Comput., vol. 6, no. 2, pp. 182–197, Apr. 2002.

[20] A. Gupta, Y.-S. Ong, and L. Feng, “Insights on transfer optimization: Because experience is the best teacher,” IEEE Trans. Emerg. Topics Comput. Intell., vol. 2, no. 1, pp. 51–64, Feb. 2018. [Online]. Available: https://doi.org/10.1109/TETCI.2017.2769104

[21] M. Hauschild and M. Pelikan, “An introduction and survey of estimation of distribution algorithms,” Swarm Evol. Comput., vol. 1, no. 3, pp. 111–128, 2011. [Online]. Available: https://doi.org/10.1016/j.swevo.2011.08.003

[22] S. Baluja, “Population-based incremental learning. A method for integrating genetic search based function optimization and competitive learning,” Tech. Rep., 1994. [Online]. Available: https://dl.acm.org/citation.cfm?id=865123

[23] H. Mühlenbein, “The equation for response to selection and its use for prediction,” Evol. Comput., vol. 5, no. 3, pp. 303–346, 1997. [Online]. Available: https://doi.org/10.1162/evco.1997.5.3.303

[24] G. R. Harik, F. G. Lobo, and D. E. Goldberg, “The compact genetic algorithm,” IEEE Trans. Evol. Comput., vol. 3, no. 4, pp. 287–297, Nov. 1999.

[25] J. S. D. Bonet, C. L. Isbell, and P. Viola, “MIMIC: Finding optima by estimating probability densities,” in Proc. Int. Conf. Neural Inf. Process. Syst., Denver, CO, USA, 1996, pp. 424–430.

[26] M. Pelikan and H. Muehlenbein, The Bivariate Marginal Distribution Algorithm. London, U.K.: Springer, 1999.

[27] M. Pelikan, D. E. Goldberg, and E. Cantú-Paz, “BOA: The Bayesian optimization algorithm,” in Proc. Conf. Genet. Evol. Comput., Orlando, FL, USA, 1999, pp. 525–532.

[28] S. Shakya, J. Mccall, and D. Brown, “Using a Markov network model in a univariate EDA: An empirical cost-benefit analysis,” in Proc. Genet. Evol. Comput. Conf. (GECCO), Washington, DC, USA, Jun. 2005, pp. 727–734.

[29] Y. Gao, L. Peng, F. Li, M. Liu, and X. Hu, “EDA-based multi-objective optimization using preference order ranking and multivariate Gaussian copula,” in Proc. 10th Int. Symp. Neural Netw. Adv. Neural Netw. II (ISNN), Dalian, China, Jul. 2013, pp. 341–350. [Online]. Available: https://doi.org/10.1007/978-3-642-39068-5_42

[30] H. Karshenas, R. Santana, C. Bielza, and P. Larrañaga, “Multiobjective estimation of distribution algorithm based on joint modeling of objectives and variables,” IEEE Trans. Evol. Comput., vol. 18, no. 4, pp. 519–542, Aug. 2014. [Online]. Available: https://doi.org/10.1109/TEVC.2013.2281524

[31] V. A. Shim, K. C. Tan, and C. Y. Cheong, “A hybrid estimation of distribution algorithm with decomposition for solving the multiobjective multiple traveling salesman problem,” IEEE Trans. Syst., Man, Cybern. C, Appl. Rev., vol. 42, no. 5, pp. 682–691, Sep. 2012. [Online]. Available: https://doi.org/10.1109/TSMCC.2012.2188285

[32] V. A. Shim, K. C. Tan, J. Y. Chia, and A. A. Mamun, “Multi-objective optimization with estimation of distribution algorithm in a noisy environment,” Evol. Comput., vol. 21, no. 1, pp. 149–177, Mar. 2013. [Online]. Available: https://doi.org/10.1162/EVCO_a_00066

[33] J. Luo, Y. Qi, J. Xie, and X. Zhang, “A hybrid multi-objective PSO–EDA algorithm for reservoir flood control operation,” Appl. Soft Comput., vol. 34, pp. 526–538, Sep. 2015. [Online]. Available: https://doi.org/10.1016/j.asoc.2015.05.036

[34] P. Lipinski, “Solving the firefighter problem with two elements using a multi-modal estimation of distribution algorithm,” in Proc. IEEE Congr. Evol. Comput. (CEC), Donostia-San Sebastián, Spain, Jun. 2017, pp. 2161–2168. [Online]. Available: https://doi.org/10.1109/CEC.2017.7969566

[35] Q. Yang, W.-N. Chen, Y. Li, C. L. P. Chen, X.-M. Xu, and J. Zhang, “Multimodal estimation of distribution algorithms,” IEEE Trans. Cybern., vol. 47, no. 3, pp. 636–650, Mar. 2017. [Online]. Available: https://doi.org/10.1109/TCYB.2016.2523000

[36] M. Pelikan, M. W. Hauschild, and P. L. Lanzi, “Transfer learning, soft distance-based bias, and the hierarchical BOA,” in Proc. 12th Int. Conf. Parallel Problem Solving Nat. I (PPSN XII), Taormina, Italy, Sep. 2012, pp. 173–183. [Online]. Available: https://doi.org/10.1007/978-3-642-32937-1_18

[37] S. Jiang and S. Yang, “A steady-state and generational evolutionary algorithm for dynamic multiobjective optimization,” IEEE Trans. Evol. Comput., vol. 21, no. 1, pp. 65–82, Feb. 2017.

[38] S. Jiang and S. Yang, “Evolutionary dynamic multiobjective optimization: Benchmarks and algorithm comparisons,” IEEE Trans. Cybern., vol. 47, no. 1, pp. 198–211, Jan. 2017.

[39] Q. Li, J. Zou, S. Yang, J. Zheng, and R. Gan, “A predictive strategy based on special points for evolutionary dynamic multi-objective optimization,” Soft Comput., vol. 23, no. 11, pp. 3723–3739, 2019.

[40] G. Ruan, G. Yu, J. Zheng, J. Zou, and S. Yang, “The effect of diversity maintenance on prediction in dynamic multi-objective optimization,” Appl. Soft Comput., vol. 58, pp. 631–647, Sep. 2017. [Online]. Available: https://doi.org/10.1016/j.asoc.2017.05.008

[41] J. Zou, Q. Li, S. Yang, H. Bai, and J. Zheng, “A prediction strategy based on center points and knee points for evolutionary dynamic multi-objective optimization,” Appl. Soft Comput., vol. 61, pp. 806–818, Dec. 2017. [Online]. Available: https://doi.org/10.1016/j.asoc.2017.08.004

[42] A. Zhou, Y. Jin, Q. Zhang, B. Sendhoff, and E. P. K. Tsang, “Prediction-based population re-initialization for evolutionary dynamic multi-objective optimization,” in Proc. 4th Int. Conf. Evol. Multi Criterion Optim. (EMO), Matsushima, Japan, Mar. 2007, pp. 832–846. [Online]. Available: https://doi.org/10.1007/978-3-540-70928-2_62

[43] J. Branke, “Memory enhanced evolutionary algorithms for changing optimization problems,” in Proc. Congr. Evol. Comput. (CEC), vol. 3. Washington, DC, USA, 1999, pp. 1875–1882.

[44] C. K. Goh and K. C. Tan, “A competitive-cooperative coevolutionary paradigm for dynamic multiobjective optimization,” IEEE Trans. Evol. Comput., vol. 13, no. 1, pp. 103–127, Feb. 2009.

[45] I. Hatzakis and D. Wallace, “Dynamic multi-objective optimization with evolutionary algorithms: A forward-looking approach,” in Proc. 8th Annu. Conf. Genet. Evol. Comput., Seattle, WA, USA, 2006, pp. 1201–1208.

[46] Z. Zhang and S. Qian, “Artificial immune system in dynamic environments solving time-varying non-linear constrained multi-objective problems,” Soft Comput., vol. 15, no. 7, pp. 1333–1349, 2011.

[47] L. T. Bui, Z. Michalewicz, E. Parkinson, and M. B. Abello, “Adaptation in dynamic environments: A case study in mission planning,” IEEE Trans. Evol. Comput., vol. 16, no. 2, pp. 190–209, Apr. 2012.

[48] R. Shang, L. Jiao, Y. Ren, L. Li, and L. Wang, “Quantum immune clonal coevolutionary algorithm for dynamic multiobjective optimization,” Soft Comput., vol. 18, no. 4, pp. 743–756, 2014.

[49] Y. Netzer, T. Wang, A. Coates, A. Bissacco, B. Wu, and A. Y. Ng, “Reading digits in natural images with unsupervised feature learning,” 2011. [Online]. Available: https://ai.google/research/pubs/pub37648

[50] I. Olkin and F. Pukelsheim, “The distance between two random vectors with given dispersion matrices,” Linear Algebra Appl., vol. 48, no. 82, pp. 257–263, Dec. 1982.