Introduction to Data Analysis -- Building a Data Science Framework -- 03 Data Preprocessing

This article is translated from a gold-medal Kernel for Kaggle's introductory Titanic project, with annotations added to its code.

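1. Define the Problem

For this project, the problem statement is handed to us on a golden platter: develop an algorithm to predict the survival outcome of passengers on the Titanic.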

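Project summary: The sinking of the RMS Titanic is one of the most infamous shipwrecks in history. On April 15, 1912, during her maiden voyage, the Titanic sank after colliding with an iceberg, killing 1502 out of 2224 passengers and crew. This sensational tragedy shocked the international community and led to better safety regulations for ships.

One of the reasons the shipwreck led to such loss of life was that there were not enough lifeboats for the passengers and crew. Although there was some element of luck involved in surviving, some groups of people were more likely to survive than others, such as women, children, and the upper class.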

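Since step 2 is provided to us on Kaggle, so is step 3. Therefore, the usual data wrangling processes (such as data architecture, governance, and extraction) are out of scope here, and only data cleaning is required.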

3.1 Importing Libraries

# This Python 3 environment comes with many helpful analytics libraries installed

# It is defined by the kaggle/python docker image: https://github.com/kaggle/docker-python

#load packages

import sys #access to system parameters https://docs.python.org/3/library/sys.html

print("Python version: {}". format(sys.version))

import pandas as pd #collection of functions for data processing and analysis modeled after R dataframes with SQL like features

print("pandas version: {}". format(pd.__version__))

import matplotlib #collection of functions for scientific and publication-ready visualization

print("matplotlib version: {}". format(matplotlib.__version__))

import numpy as np #foundational package for scientific computing

print("NumPy version: {}". format(np.__version__))

import scipy as sp #collection of functions for scientific computing and advanced mathematics

print("SciPy version: {}". format(sp.__version__))

import IPython

from IPython import display #pretty printing of dataframes in Jupyter notebook

print("IPython version: {}". format(IPython.__version__))

import sklearn #collection of machine learning algorithms

print("scikit-learn version: {}". format(sklearn.__version__))

#misc libraries

import random

import time

#ignore warnings

import warnings

warnings.filterwarnings('ignore')

print('-'*25)

# Input data files are available in the "../input/" directory.

# For example, running this (by clicking run or pressing Shift+Enter) will list the files in the input directory

from subprocess import check_output

print(check_output(["ls", "../input"]).decode("utf8"))

# Any results you write to the current directory are saved as output.

output:

Python version: 3.6.3 |Anaconda custom (64-bit)| (default, Nov 20 2017, 20:41:42)

[GCC 7.2.0]

pandas version: 0.20.3

matplotlib version: 2.1.1

NumPy version: 1.13.0

SciPy version: 1.0.0

IPython version: 5.3.0

scikit-learn version: 0.19.1

-------------------------

gender_submission.csv

test.csv

train.csv

#Common Model Algorithms

from sklearn import svm, tree, linear_model, neighbors, naive_bayes, ensemble, discriminant_analysis, gaussian_process

from xgboost import XGBClassifier

#Common Model Helpers

from sklearn.preprocessing import OneHotEncoder, LabelEncoder

from sklearn import feature_selection

from sklearn import model_selection

from sklearn import metrics

#Visualization

import matplotlib as mpl

import matplotlib.pyplot as plt

import matplotlib.pylab as pylab

import seaborn as sns

from pandas.plotting import scatter_matrix #pandas.tools.plotting was deprecated in pandas 0.20 and later removed

#Configure Visualization Defaults

#%matplotlib inline = show plots in Jupyter Notebook browser

%matplotlib inline

mpl.style.use('ggplot')

sns.set_style('white')

pylab.rcParams['figure.figsize'] = 12,8

3.2 Meet and Greet the Data

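This is the meet and greet step. Get to know your data on a first-name basis and learn a little about it: what it looks like (datatypes and values), what makes it tick (independent/feature variables), and what its goals in life are (dependent/target variable(s)). Think of it as a first date, before you jump in and start poking at it.

To begin this step, we first import the data. Next, we use the info() and sample() functions to get a quick and dirty overview of the variable datatypes (i.e. qualitative vs. quantitative). Consult the source data dictionary on Kaggle for the field definitions.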

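1. The Survived variable is our outcome or dependent variable. It is a binary nominal datatype: 1 for survived and 0 for did not survive. All other variables are potential predictor or independent variables. It is important to note that more predictor variables do not make a better model; the right variables do.

2. The PassengerId and Ticket variables are assumed to be random unique identifiers that have no impact on the outcome variable. Thus, they will be excluded from the analysis. The Pclass variable is an ordinal datatype for the ticket class and a proxy for socio-economic status (SES), where 1 = upper class, 2 = middle class, and 3 = lower class.

3. The Name variable is a nominal datatype. It could be used in feature engineering to derive gender from the title, family size from the surname, and SES from titles such as Doctor or Master. Since those variables already exist, we will use Name only to see whether a title, such as Master, makes a difference.

4. The Sex and Embarked variables are nominal datatypes (both are imported as object columns), while the Age and Fare variables are continuous quantitative datatypes.

5. SibSp represents the number of related siblings/spouses aboard, and Parch represents the number of related parents/children aboard. Both are discrete quantitative datatypes. They can be used in feature engineering to create a family size variable.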
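As a rough sketch of the quick overview described above, the snippet below builds a small made-up frame (the name `toy` and all of its values are illustrative assumptions, not rows from train.csv) and separates columns by dtype. Dtype is only a first hint: Survived and Pclass are stored as integers, yet the list above treats them as nominal and ordinal.

```python
import pandas as pd

# Toy rows shaped like a few Titanic columns; the values are made up for illustration.
toy = pd.DataFrame({
    "Survived": [0, 1, 1],
    "Pclass": [3, 1, 2],
    "Sex": ["male", "female", "female"],
    "Age": [22.0, 38.0, None],
    "Fare": [7.25, 71.2833, 13.0],
    "Embarked": ["S", "C", None],
})

# Non-numeric columns are candidates for qualitative (nominal) variables.
qualitative = toy.select_dtypes(exclude="number").columns.tolist()

# Numeric columns are candidates for quantitative variables, but the dtype
# alone does not settle it: Survived and Pclass are integer-coded categories.
quantitative = toy.select_dtypes(include="number").columns.tolist()

print(qualitative)
print(quantitative)
```

Reading the two lists next to the data dictionary is what turns a dtype listing into the qualitative/quantitative classification used in the rest of the article.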

#import data from file: https://pandas.pydata.org/pandas-docs/stable/generated/pandas.read_csv.html

data_raw = pd.read_csv('../input/train.csv')

#a dataset should be broken into 3 splits: train, test, and (final) validation

#the test file provided is the validation file for competition submission

#we will split the train set into train and test data in future sections

data_val = pd.read_csv('../input/test.csv')

#to play with our data we'll create a copy

#remember python assignment or equal passes by reference vs values, so we use the copy function: https://stackoverflow.com/questions/46327494/python-pandas-dataframe-copydeep-false-vs-copydeep-true-vs

data1 = data_raw.copy(deep = True)

#however passing by reference is convenient, because we can clean both datasets at once

data_cleaner = [data1, data_val]

#preview data

print (data_raw.info()) #https://pandas.pydata.org/pandas-docs/stable/generated/pandas.DataFrame.info.html

#data_raw.head() #https://pandas.pydata.org/pandas-docs/stable/generated/pandas.DataFrame.head.html

#data_raw.tail() #https://pandas.pydata.org/pandas-docs/stable/generated/pandas.DataFrame.tail.html

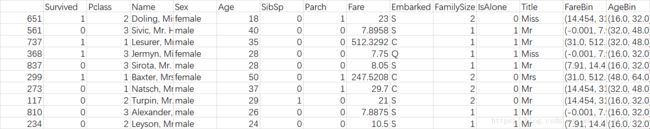

data_raw.sample(10) #https://pandas.pydata.org/pandas-docs/stable/generated/pandas.DataFrame.sample.html

output:

RangeIndex: 891 entries, 0 to 890

Data columns (total 12 columns):

PassengerId 891 non-null int64

Survived 891 non-null int64

Pclass 891 non-null int64

Name 891 non-null object

Sex 891 non-null object

Age 714 non-null float64

SibSp 891 non-null int64

Parch 891 non-null int64

Ticket 891 non-null object

Fare 891 non-null float64

Cabin 204 non-null object

Embarked 889 non-null object

dtypes: float64(2), int64(5), object(5)

memory usage: 83.6+ KB

None
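The `data_cleaner` list in the code above relies on Python storing references rather than copies, while `copy(deep = True)` protects the raw data. A minimal sketch of both behaviors, using made-up frames (`raw`, `val`, `work`, and `cleaner` are hypothetical stand-ins for `data_raw`, `data_val`, `data1`, and `data_cleaner`):

```python
import pandas as pd

raw = pd.DataFrame({"Age": [22.0, None, 30.0]})
val = pd.DataFrame({"Age": [None, 40.0]})

work = raw.copy(deep=True)  # independent copy: edits to work leave raw untouched
cleaner = [work, val]       # the list stores references to the frames, not copies

for dataset in cleaner:
    # Assigning a column through the loop variable mutates the frame the list refers to.
    dataset["Age"] = dataset["Age"].fillna(dataset["Age"].median())

print(work["Age"].tolist())       # filled through the list
print(val["Age"].tolist())        # filled through the list
print(raw["Age"].isnull().sum())  # raw still has its missing value
```

Each frame is filled with its own median here, which mirrors how the loop-based cleaning later in the article treats the train and validation sets separately.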

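3.21 The 4 C's of Data Cleaning: Correcting, Completing, Creating, and Converting

In this stage, we will clean our data by 1) correcting aberrant values and outliers, 2) completing missing information, 3) creating new features for analysis, and 4) converting fields to the correct format for calculation and presentation.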

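1. Correcting: Reviewing the data, there do not appear to be any aberrant or unacceptable data inputs. We do see potential outliers in age and fare. However, since they are reasonable values, we will wait until the exploratory analysis is complete to decide whether to include or exclude them from the dataset. Note that if they were unreasonable values, for example age = 800 instead of 80, fixing them now would probably be a safe decision. Still, we should be cautious whenever we modify data from its original value, because the original values may be needed to build an accurate model.

2. Completing: There are null values or missing data in the age, cabin, and embarked fields. Missing values can be harmful because some algorithms do not know how to handle nulls and will fail, while others, such as decision trees, can handle them. Since we will compare several models, it is important to fix missing values before modeling. There are two common methods: delete the record, or populate the missing value with a reasonable input. Deleting records is not recommended, especially a large share of them, unless a record is truly incomplete. Instead, it is best to impute missing values. A basic method for qualitative data is to impute with the mode. A basic method for quantitative data is to impute with the mean, the median, or the mean plus a randomized standard deviation. An intermediate method applies the basic method within specific criteria, such as the average age by class, or the embarkation port by fare and SES. More complex methods exist, but before deploying one it should be compared with the base model to determine whether the complexity truly adds value. For this dataset, age will be imputed with the median, the cabin attribute will be dropped, and embarked will be imputed with the mode. Subsequent model iterations may revisit these decisions to determine whether they improve the model's accuracy.

3. Creating: Feature engineering is when we use existing features to create new features and determine whether they provide new signals for predicting the outcome. For this dataset, we will create a title feature to determine whether it played a role in survival.

4. Converting: Last, but certainly not least, we deal with formatting. There are no date or currency formats to handle, but there are datatype formats. Our categorical data is imported as objects, which makes mathematical calculations difficult. For this dataset, we will convert object datatypes to categorical dummy variables.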
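The imputation options listed under Completing can be sketched on a small made-up frame. The frame `toy`, its values, and the result names are illustrative assumptions; the article's own cleaning code uses only the overall median and the mode.

```python
import pandas as pd

toy = pd.DataFrame({
    "Pclass": [1, 1, 1, 3, 3, 3],
    "Age": [40.0, 50.0, None, 20.0, None, 24.0],
    "Embarked": ["S", "S", "C", None, "S", "Q"],
})

# Basic, quantitative: fill with the overall median (32.0 for these values).
age_median = toy["Age"].fillna(toy["Age"].median())

# Basic, qualitative: fill with the mode, the most frequent value ("S" here).
embarked_mode = toy["Embarked"].fillna(toy["Embarked"].mode()[0])

# Intermediate: fill with the median age of the passenger's own class.
class_median = toy.groupby("Pclass")["Age"].transform("median")
age_by_class = toy["Age"].fillna(class_median)

print(age_median.tolist())
print(embarked_mode.tolist())
print(age_by_class.tolist())
```

The group-wise version fills the first-class gap with 45.0 and the third-class gap with 22.0 instead of a single 32.0, which is the kind of difference worth checking against the base model before adopting it.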

print('Train columns with null values:\n', data1.isnull().sum())

print("-"*10)

print('Test/Validation columns with null values:\n', data_val.isnull().sum())

print("-"*10)

data_raw.describe(include = 'all')

output:

Train columns with null values:

PassengerId 0

Survived 0

Pclass 0

Name 0

Sex 0

Age 177

SibSp 0

Parch 0

Ticket 0

Fare 0

Cabin 687

Embarked 2

dtype: int64

----------

Test/Validation columns with null values:

PassengerId 0

Pclass 0

Name 0

Sex 0

Age 86

SibSp 0

Parch 0

Ticket 0

Fare 1

Cabin 327

Embarked 0

dtype: int64

----------

3.22 Clean Data

###COMPLETING: complete or delete missing values in train and test/validation dataset

for dataset in data_cleaner:

    #complete missing age with median
    #(assign the result back: fillna(inplace = True) on a selected column may not update the frame in newer pandas versions)

    dataset['Age'] = dataset['Age'].fillna(dataset['Age'].median())

    #complete embarked with mode

    dataset['Embarked'] = dataset['Embarked'].fillna(dataset['Embarked'].mode()[0])

    #complete missing fare with median

    dataset['Fare'] = dataset['Fare'].fillna(dataset['Fare'].median())

#delete the cabin feature/column and others previously stated to exclude in train dataset

drop_column = ['PassengerId','Cabin', 'Ticket']

data1.drop(drop_column, axis=1, inplace = True)

print(data1.isnull().sum())

print("-"*10)

print(data_val.isnull().sum())

output:

Survived 0

Pclass 0

Name 0

Sex 0

Age 0

SibSp 0

Parch 0

Fare 0

Embarked 0

dtype: int64

----------

PassengerId 0

Pclass 0

Name 0

Sex 0

Age 0

SibSp 0

Parch 0

Ticket 0

Fare 0

Cabin 327

Embarked 0

dtype: int64

###CREATE: Feature Engineering for train and test/validation dataset

for dataset in data_cleaner:

    #Discrete variables

    dataset['FamilySize'] = dataset['SibSp'] + dataset['Parch'] + 1

    dataset['IsAlone'] = 1 #initialize to yes/1 is alone

    dataset.loc[dataset['FamilySize'] > 1, 'IsAlone'] = 0 # now update to no/0 if family size is greater than 1 (single .loc call avoids chained assignment)

    #quick and dirty code split title from name: http://www.pythonforbeginners.com/dictionary/python-split

    dataset['Title'] = dataset['Name'].str.split(", ", expand=True)[1].str.split(".", expand=True)[0]

    #Continuous variable bins; qcut vs cut: https://stackoverflow.com/questions/30211923/what-is-the-difference-between-pandas-qcut-and-pandas-cut

    #Fare Bins/Buckets using qcut or frequency bins: https://pandas.pydata.org/pandas-docs/stable/generated/pandas.qcut.html

    dataset['FareBin'] = pd.qcut(dataset['Fare'], 4)

    #Age Bins/Buckets using cut or value bins: https://pandas.pydata.org/pandas-docs/stable/generated/pandas.cut.html

    dataset['AgeBin'] = pd.cut(dataset['Age'].astype(int), 5)

#cleanup rare title names

#print(data1['Title'].value_counts())

stat_min = 10 #while small is arbitrary, we'll use the common minimum in statistics: http://nicholasjjackson.com/2012/03/08/sample-size-is-10-a-magic-number/

title_names = (data1['Title'].value_counts() < stat_min) #this will create a true false series with title name as index

#apply and lambda functions are quick and dirty code to find and replace with fewer lines of code: https://community.modeanalytics.com/python/tutorial/pandas-groupby-and-python-lambda-functions/

data1['Title'] = data1['Title'].apply(lambda x: 'Misc' if title_names.loc[x] == True else x)

print(data1['Title'].value_counts())

print("-"*10)

#preview data again

data1.info()

data_val.info()

data1.sample(10)

output:

Mr 517

Miss 182

Mrs 125

Master 40

Misc 27

Name: Title, dtype: int64

----------

RangeIndex: 891 entries, 0 to 890

Data columns (total 14 columns):

Survived 891 non-null int64

Pclass 891 non-null int64

Name 891 non-null object

Sex 891 non-null object

Age 891 non-null float64

SibSp 891 non-null int64

Parch 891 non-null int64

Fare 891 non-null float64

Embarked 891 non-null object

FamilySize 891 non-null int64

IsAlone 891 non-null int64

Title 891 non-null object

FareBin 891 non-null category

AgeBin 891 non-null category

dtypes: category(2), float64(2), int64(6), object(4)

memory usage: 85.5+ KB

RangeIndex: 418 entries, 0 to 417

Data columns (total 16 columns):

PassengerId 418 non-null int64

Pclass 418 non-null int64

Name 418 non-null object

Sex 418 non-null object

Age 418 non-null float64

SibSp 418 non-null int64

Parch 418 non-null int64

Ticket 418 non-null object

Fare 418 non-null float64

Cabin 91 non-null object

Embarked 418 non-null object

FamilySize 418 non-null int64

IsAlone 418 non-null int64

Title 418 non-null object

FareBin 418 non-null category

AgeBin 418 non-null category

dtypes: category(2), float64(2), int64(6), object(6)

memory usage: 46.8+ KB
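The feature engineering code in this section bins Fare with qcut and Age with cut. To illustrate the difference between the two, here is a small sketch on made-up fares (the values and variable names are illustrative assumptions). `labels=False` is used only so that the bin assignments print as integers rather than intervals.

```python
import pandas as pd

# Skewed toy values: most are small, one is very large.
fares = pd.Series([5.0, 7.0, 8.0, 10.0, 15.0, 30.0, 80.0, 500.0])

# cut: 4 bins of equal width over the value range (5 to 500).
width_codes = pd.cut(fares, 4, labels=False)

# qcut: 4 bins whose edges are quartiles, so each bin holds a similar count.
freq_codes = pd.qcut(fares, 4, labels=False)

print(width_codes.tolist())  # almost everything lands in the first bin
print(freq_codes.tolist())   # two values per bin
```

On skewed data such as fares, equal-width bins can leave most rows in one bucket, which is one plausible reason to prefer frequency bins there and value bins for the more evenly spread ages.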

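3.23 Convert Formats

We will convert categorical data to dummy variables for mathematical analysis. There are multiple ways to encode categorical variables; we will use the sklearn and pandas functions.

In this step, we will also define our x (independent/features/explanatory/predictor/etc.) and y (dependent/target/outcome/response/etc.) variables for data modeling.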
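To make the two encodings concrete, here is a minimal sketch on made-up frames (`train`, `val`, and their values are illustrative assumptions). It also shows one variation that differs from the code in this section: the encoder is fitted once and reused, whereas the article's loop refits it on each dataset, so codes agree across datasets only when both contain the same set of categories.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

train = pd.DataFrame({"Embarked": ["S", "C", "Q", "S"]})
val = pd.DataFrame({"Embarked": ["Q", "S"]})

# Label encoding: one integer per category, assigned in sorted order (C=0, Q=1, S=2).
encoder = LabelEncoder()
train["Embarked_Code"] = encoder.fit_transform(train["Embarked"])

# Reusing the fitted encoder keeps the same mapping on the second frame.
# Refitting on val alone would give Q=0, S=1, which no longer matches train.
val["Embarked_Code"] = encoder.transform(val["Embarked"])

# Dummy (one-hot) encoding: one indicator column per category.
dummies = pd.get_dummies(train[["Embarked"]])

print(train["Embarked_Code"].tolist())
print(val["Embarked_Code"].tolist())
print(dummies.columns.tolist())
```

Label codes imply an order that nominal categories do not have, while dummy columns avoid that at the cost of more features; that trade-off is why both variants are kept for modeling.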

#CONVERT: convert objects to category using Label Encoder for train and test/validation dataset

#code categorical data

label = LabelEncoder()

for dataset in data_cleaner:

    dataset['Sex_Code'] = label.fit_transform(dataset['Sex'])

    dataset['Embarked_Code'] = label.fit_transform(dataset['Embarked'])

    dataset['Title_Code'] = label.fit_transform(dataset['Title'])

    dataset['AgeBin_Code'] = label.fit_transform(dataset['AgeBin'])

    dataset['FareBin_Code'] = label.fit_transform(dataset['FareBin'])

#define y variable aka target/outcome

Target = ['Survived']

#define x variables for original features aka feature selection

data1_x = ['Sex','Pclass', 'Embarked', 'Title','SibSp', 'Parch', 'Age', 'Fare', 'FamilySize', 'IsAlone'] #pretty name/values for charts

data1_x_calc = ['Sex_Code','Pclass', 'Embarked_Code', 'Title_Code','SibSp', 'Parch', 'Age', 'Fare'] #coded for algorithm calculation

data1_xy = Target + data1_x

print('Original X Y: ', data1_xy, '\n')

#define x variables for original w/bin features to remove continuous variables

data1_x_bin = ['Sex_Code','Pclass', 'Embarked_Code', 'Title_Code', 'FamilySize', 'AgeBin_Code', 'FareBin_Code']

data1_xy_bin = Target + data1_x_bin

print('Bin X Y: ', data1_xy_bin, '\n')

#define x and y variables for dummy features original

data1_dummy = pd.get_dummies(data1[data1_x])

data1_x_dummy = data1_dummy.columns.tolist()

data1_xy_dummy = Target + data1_x_dummy

print('Dummy X Y: ', data1_xy_dummy, '\n')

data1_dummy.head()

output:

Original X Y: ['Survived', 'Sex', 'Pclass', 'Embarked', 'Title', 'SibSp', 'Parch', 'Age', 'Fare', 'FamilySize', 'IsAlone']

Bin X Y: ['Survived', 'Sex_Code', 'Pclass', 'Embarked_Code', 'Title_Code', 'FamilySize', 'AgeBin_Code', 'FareBin_Code']

Dummy X Y: ['Survived', 'Pclass', 'SibSp', 'Parch', 'Age', 'Fare', 'FamilySize', 'IsAlone', 'Sex_female', 'Sex_male', 'Embarked_C', 'Embarked_Q', 'Embarked_S', 'Title_Master', 'Title_Misc', 'Title_Miss', 'Title_Mr', 'Title_Mrs']


3.24 Double-Check the Cleaned Data

print('Train columns with null values: \n', data1.isnull().sum())

print("-"*10)

print (data1.info())

print("-"*10)

print('Test/Validation columns with null values: \n', data_val.isnull().sum())

print("-"*10)

print (data_val.info())

print("-"*10)

data_raw.describe(include = 'all')

output:

Train columns with null values:

Survived 0

Pclass 0

Name 0

Sex 0

Age 0

SibSp 0

Parch 0

Fare 0

Embarked 0

FamilySize 0

IsAlone 0

Title 0

FareBin 0

AgeBin 0

Sex_Code 0

Embarked_Code 0

Title_Code 0

AgeBin_Code 0

FareBin_Code 0

dtype: int64

----------

RangeIndex: 891 entries, 0 to 890

Data columns (total 19 columns):

Survived 891 non-null int64

Pclass 891 non-null int64

Name 891 non-null object

Sex 891 non-null object

Age 891 non-null float64

SibSp 891 non-null int64

Parch 891 non-null int64

Fare 891 non-null float64

Embarked 891 non-null object

FamilySize 891 non-null int64

IsAlone 891 non-null int64

Title 891 non-null object

FareBin 891 non-null category

AgeBin 891 non-null category

Sex_Code 891 non-null int64

Embarked_Code 891 non-null int64

Title_Code 891 non-null int64

AgeBin_Code 891 non-null int64

FareBin_Code 891 non-null int64

dtypes: category(2), float64(2), int64(11), object(4)

memory usage: 120.3+ KB

None

----------

Test/Validation columns with null values:

PassengerId 0

Pclass 0

Name 0

Sex 0

Age 0

SibSp 0

Parch 0

Ticket 0

Fare 0

Cabin 327

Embarked 0

FamilySize 0

IsAlone 0

Title 0

FareBin 0

AgeBin 0

Sex_Code 0

Embarked_Code 0

Title_Code 0

AgeBin_Code 0

FareBin_Code 0

dtype: int64

----------

RangeIndex: 418 entries, 0 to 417

Data columns (total 21 columns):

PassengerId 418 non-null int64

Pclass 418 non-null int64

Name 418 non-null object

Sex 418 non-null object

Age 418 non-null float64

SibSp 418 non-null int64

Parch 418 non-null int64

Ticket 418 non-null object

Fare 418 non-null float64

Cabin 91 non-null object

Embarked 418 non-null object

FamilySize 418 non-null int64

IsAlone 418 non-null int64

Title 418 non-null object

FareBin 418 non-null category

AgeBin 418 non-null category

Sex_Code 418 non-null int64

Embarked_Code 418 non-null int64

Title_Code 418 non-null int64

AgeBin_Code 418 non-null int64

FareBin_Code 418 non-null int64

dtypes: category(2), float64(2), int64(11), object(6)

memory usage: 63.1+ KB

None

----------

| | PassengerId | Survived | Pclass | Name | Sex | Age | SibSp | Parch | Ticket | Fare | Cabin | Embarked |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| count | 891.000000 | 891.000000 | 891.000000 | 891 | 891 | 714.000000 | 891.000000 | 891.000000 | 891 | 891.000000 | 204 | 889 |
| unique | NaN | NaN | NaN | 891 | 2 | NaN | NaN | NaN | 681 | NaN | 147 | 3 |
| top | NaN | NaN | NaN | Lindahl, Miss. Agda Thorilda Viktoria | male | NaN | NaN | NaN | 1601 | NaN | C23 C25 C27 | S |
| freq | NaN | NaN | NaN | 1 | 577 | NaN | NaN | NaN | 7 | NaN | 4 | 644 |
| mean | 446.000000 | 0.383838 | 2.308642 | NaN | NaN | 29.699118 | 0.523008 | 0.381594 | NaN | 32.204208 | NaN | NaN |
| std | 257.353842 | 0.486592 | 0.836071 | NaN | NaN | 14.526497 | 1.102743 | 0.806057 | NaN | 49.693429 | NaN | NaN |
| min | 1.000000 | 0.000000 | 1.000000 | NaN | NaN | 0.420000 | 0.000000 | 0.000000 | NaN | 0.000000 | NaN | NaN |
| 25% | 223.500000 | 0.000000 | 2.000000 | NaN | NaN | 20.125000 | 0.000000 | 0.000000 | NaN | 7.910400 | NaN | NaN |
| 50% | 446.000000 | 0.000000 | 3.000000 | NaN | NaN | 28.000000 | 0.000000 | 0.000000 | NaN | 14.454200 | NaN | NaN |
| 75% | 668.500000 | 1.000000 | 3.000000 | NaN | NaN | 38.000000 | 1.000000 | 0.000000 | NaN | 31.000000 | NaN | NaN |
| max | 891.000000 | 1.000000 | 3.000000 | NaN | NaN | 80.000000 | 8.000000 | 6.000000 | NaN | 512.329200 | NaN | NaN |

3.25 Split Training and Testing Data

What do the original, bin, and dummy versions of the dataset each mean?

#split train and test data with function defaults

#random_state -> seed or control random number generator: https://www.quora.com/What-is-seed-in-random-number-generation

train1_x, test1_x, train1_y, test1_y = model_selection.train_test_split(data1[data1_x_calc], data1[Target], random_state = 0)

train1_x_bin, test1_x_bin, train1_y_bin, test1_y_bin = model_selection.train_test_split(data1[data1_x_bin], data1[Target] , random_state = 0)

train1_x_dummy, test1_x_dummy, train1_y_dummy, test1_y_dummy = model_selection.train_test_split(data1_dummy[data1_x_dummy], data1[Target], random_state = 0)

print("Data1 Shape: {}".format(data1.shape))

print("Train1 Shape: {}".format(train1_x.shape))

print("Test1 Shape: {}".format(test1_x.shape))

train1_x_bin.head()

output:

Data1 Shape: (891, 19)

Train1 Shape: (668, 8)

Test1 Shape: (223, 8)
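The 668/223 shapes above are consistent with train_test_split holding out 25% of the rows when no test_size is given (891 × 0.25 = 222.75, rounded up to 223). A small sketch that checks this on synthetic data with the same row count (the frame `toy` and its random values are assumptions made for illustration, not the Titanic data):

```python
import numpy as np
import pandas as pd
from sklearn import model_selection

# Synthetic stand-in with the same number of rows as the training file.
rng = np.random.RandomState(0)
toy = pd.DataFrame({
    "feature": rng.rand(891),
    "Survived": rng.randint(0, 2, size=891),
})

# No test_size is passed, so the default hold-out fraction applies.
train_x, test_x, train_y, test_y = model_selection.train_test_split(
    toy[["feature"]], toy[["Survived"]], random_state=0
)
print(train_x.shape, test_x.shape)

# Repeating the call with the same random_state reproduces the same partition.
train_again, _, _, _ = model_selection.train_test_split(
    toy[["feature"]], toy[["Survived"]], random_state=0
)
print(train_x.index.equals(train_again.index))
```

Because the three calls in the article all use random_state = 0 on frames with the same 891 rows, the original, bin, and dummy feature sets should be split on the same row partition, which keeps later model comparisons like for like.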