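- Breaking Through the Real-Time Data Flood: An Architectural Revolution for Millisecond-Level Analytics with Flink + Paimon (with Load-Test Report). An Industrial-Grade Solution That Cuts Processing Costs by 60% at Around Ten Billion Records per Day

Lucas55555555

flink big-data

Introduction: a turning point for unified stream and batch processing. According to Alibaba Cloud's 2025 white paper, demand for real-time data processing is growing 240% per year, yet resource consumption in traditional Lambda architectures accounts for more than 70% of operations costs. After one e-commerce platform rebuilt its real-time data warehouse on Flink + Paimon, end-to-end latency dropped from minutes to 800 ms, and it saved 56,000 compute cores per month. The window of technical opportunity: Apache Paimon 1.0, officially released in 2025, supports second-level snapshots and lakehouse integration and has become a new paradigm replacing Iceberg. 1. Digging into the pain points: the real-time data warehouse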

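- A Panorama of Flink 2.0 DataStream Operators

Edingbrugh.南空

big-data flink flink AI

In real-time stream processing, the operators of Apache Flink's DataStream API are the basic building blocks of a streaming pipeline. Based on Flink 2.0, this article focuses on the core concepts, categories, and advanced features of operators. 1. Core operator concepts: the "atomic operations" of stream processing. 1. Stream topology: every Flink application can be abstracted as a directed acyclic graph (DAG) made up of source nodes (Source), operator nodes (Operator), and sink nodes (Sink). Operators are connected through data streams (S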

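- Flink SQL User-Defined Functions Explained

Tit先生

basics flink sql big-data java

Flink SQL functions explained: user-defined functions. Besides its built-in functions, Flink SQL supports user-defined functions, which let us extend the set of available functions. User-defined functions in Flink SQL fall into four main categories. 1. ScalarFunction (scalar function). Characteristics: it receives one row of data at a time and outputs a result of one row and one column. Typical scalar functions include upper(str), lower(str), and abs(salary). 2. TableFunction (table-generating function). Characteristics: at runtime, each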
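The difference between the first two categories can be sketched in plain Java, without Flink on the classpath. This is a model of the semantics only. The class and method names are hypothetical. In Flink itself, each function would be a subclass of ScalarFunction or TableFunction that exposes public eval(...) methods, and a table function emits its rows through collect(...).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/**
 * Plain-Java model of two UDF categories (not the Flink API):
 * a scalar function maps one input row to exactly one value, while a
 * table-generating function may emit zero or more rows per input row.
 * Names are hypothetical.
 */
class UdfCategories {

    /** Scalar: one row in, one value out (like upper(str)). */
    static String upper(String s) {
        return s == null ? null : s.toUpperCase(Locale.ROOT);
    }

    /** Table-generating: one row in, several rows out (split a tag list). */
    static List<String> splitTags(String csv) {
        List<String> rows = new ArrayList<>();
        if (csv == null || csv.isBlank()) {
            return rows;                      // zero output rows
        }
        for (String part : csv.split(",")) {
            rows.add(part.trim());            // one output row per tag
        }
        return rows;
    }

    public static void main(String[] args) {
        System.out.println(upper("flink"));              // FLINK
        System.out.println(splitTags("sql, java ,udf")); // [sql, java, udf]
    }
}
```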

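- Flink User-Defined Functions: Aggregate Functions (UDAGG)

土豆马铃薯

Flink flink big-data

1. The concept of an aggregate function. An aggregate function reduces one or more rows of a table, each with one or more attributes, to a scalar value. To understand it, imagine a table of beverages with three fields (id, name, and price) and 5 rows of data. Suppose you need to find the price of the most expensive beverage, that is, perform a max() aggregation. You must iterate over all 5 rows, yet the result is a single value. 2. Implementing an aggregate function. Aggregate functions are mainly implemented by extending the AggregateFunction class. AggregateF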
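The max() example above follows an accumulator pattern: create an empty accumulator, fold each row into it, then read out the final value. The sketch below is plain Java. It only mirrors the createAccumulator / accumulate / getValue method names used by Flink's AggregateFunction and does not extend the Flink class. The Beverage record and its five sample rows are hypothetical.

```java
import java.util.List;

/**
 * Plain-Java sketch of the accumulator pattern behind a max() aggregate.
 * Method names mirror Flink's AggregateFunction convention, but this class
 * does not extend Flink. Beverage and the sample rows are hypothetical.
 */
class MaxPriceAggregate {

    /** One row of the hypothetical beverage table (id, name, price). */
    record Beverage(int id, String name, double price) {}

    /** Mutable accumulator holding the intermediate result. */
    static class MaxAccumulator {
        double max = Double.NEGATIVE_INFINITY;
        boolean seen = false;
    }

    MaxAccumulator createAccumulator() {
        return new MaxAccumulator();
    }

    /** Called once per input row; folds the row's price into the accumulator. */
    void accumulate(MaxAccumulator acc, double price) {
        if (!acc.seen || price > acc.max) {
            acc.max = price;
            acc.seen = true;
        }
    }

    /** Produces the final scalar from the accumulator (null when there were no rows). */
    Double getValue(MaxAccumulator acc) {
        return acc.seen ? acc.max : null;
    }

    /** Drives the three steps over all rows, as the runtime would. */
    static Double maxPrice(List<Beverage> rows) {
        MaxPriceAggregate agg = new MaxPriceAggregate();
        MaxAccumulator acc = agg.createAccumulator();
        for (Beverage b : rows) {
            agg.accumulate(acc, b.price());
        }
        return agg.getValue(acc);
    }

    public static void main(String[] args) {
        List<Beverage> table = List.of(
                new Beverage(1, "Latte", 3.0),
                new Beverage(2, "Milk Tea", 4.0),
                new Beverage(3, "Orange Juice", 2.5),
                new Beverage(4, "Cola", 1.5),
                new Beverage(5, "Mocha", 3.5));
        System.out.println(maxPrice(table)); // 4.0
    }
}
```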

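- Flink Time Windows Explained

bxlj_jcj

Flink flink big-data

1. Introduction. In big-data stream processing, Flink's time window is an essential technique. Imagine you want to count the orders on an e-commerce site every hour. Because order data is generated continuously, it forms an unbounded data stream. Without the concept of a time window, you would have to process an endless stream of data, which makes meaningful statistical analysis difficult. A time window slices this unbounded stream along the time dimension into finite "chunks" of data, so the data becomes easy to process and analyze. For example, we can define a 1-hour tim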
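Slicing an unbounded stream into 1-hour tumbling windows comes down to assigning each event timestamp to the window that contains it. The sketch below is plain Java and assumes epoch-millisecond event times with no window offset. The window start is the timestamp minus its remainder modulo the window size, which is similar in spirit to how Flink aligns tumbling windows. The order timestamps are hypothetical, and watermarks and late data are ignored.

```java
import java.util.Map;
import java.util.TreeMap;

/**
 * Sketch of tumbling event-time windows: each timestamp belongs to the
 * window [start, start + size) whose start is a multiple of size.
 * Plain Java, not the Flink API; timestamps are hypothetical.
 */
class HourlyOrderCount {

    static final long HOUR_MS = 60L * 60 * 1000;

    /** Start of the tumbling window containing ts (floorMod keeps negatives correct). */
    static long windowStart(long ts, long size) {
        return ts - Math.floorMod(ts, size);
    }

    /** Counts events per window, keyed by window start, in time order. */
    static Map<Long, Integer> countPerWindow(long[] timestamps, long size) {
        Map<Long, Integer> counts = new TreeMap<>();
        for (long ts : timestamps) {
            counts.merge(windowStart(ts, size), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        long[] orders = {
            10 * 60 * 1000L,            // 00:10
            50 * 60 * 1000L,            // 00:50
            HOUR_MS + 5 * 60 * 1000L,   // 01:05
        };
        System.out.println(countPerWindow(orders, HOUR_MS)); // {0=2, 3600000=1}
    }
}
```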

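- Flink DataStream API Explained (Part 1)

bxlj_jcj

Flink flink big-data

1. Introduction. Flink's DataStream API is a core tool that shines in stream processing. It plays a key role in many real-time scenarios, such as e-commerce platforms analyzing user shopping behavior in real time to make precise recommendations, or financial institutions monitoring transactions in real time to manage risk, because it processes and analyzes never-ending data streams efficiently. Let's dive into the Flink DataStream API. 2. Setting up the basics of DataStream programming. Before starting to use Flink Da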

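- Flink User-Defined Functions

逆风飞翔的小叔

flink-from-beginner-to-expert flink big-data bigdata

Preface. Flink provides a rich set of transformation operator APIs, such as map() and filter(), for developers to process data in various ways. In practice, however, they still cannot cover every scenario, so developers need to write user-defined functions on top of the common transformation operators. 1. A common operation. The raw file to be read. Core code: import org.apache.flink.api.common.functions.FilterFunction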

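- Common Ways to Write Flink User-Defined Functions

飞Link

Water flink java big-data

1. Implement an interface provided by Flink: // user-defined function class MyMapFunction implements MapFunction { public Integer map(String value) { return Integer.parseInt(value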
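The three usual ways of supplying such a function (a named class, an anonymous class, and a lambda) can be compared in a self-contained sketch. To keep it runnable without Flink, java.util.function.Function stands in for Flink's MapFunction, so the method is apply rather than map. The class names are hypothetical.

```java
import java.util.List;
import java.util.function.Function;
import java.util.stream.Collectors;

/**
 * Sketch of three ways to supply a user-defined map function.
 * java.util.function.Function stands in for Flink's MapFunction so the
 * example runs without Flink; names are hypothetical.
 */
class MapFunctionStyles {

    /** Style 1: a named class implementing the function interface. */
    static class MyMapFunction implements Function<String, Integer> {
        @Override
        public Integer apply(String value) {
            return Integer.parseInt(value.trim());
        }
    }

    /** Applies fn to every element, like a map operator over a bounded input. */
    static List<Integer> mapAll(List<String> input, Function<String, Integer> fn) {
        return input.stream().map(fn).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> input = List.of("1", " 2", "3 ");

        // Style 1: named class
        System.out.println(mapAll(input, new MyMapFunction()));

        // Style 2: anonymous class
        System.out.println(mapAll(input, new Function<String, Integer>() {
            @Override
            public Integer apply(String value) {
                return Integer.parseInt(value.trim());
            }
        }));

        // Style 3: lambda
        System.out.println(mapAll(input, v -> Integer.parseInt(v.trim())));
    }
}
```

With Flink's own MapFunction, a lambda whose result type is generic can lose its type information to Java type erasure. In that case Flink asks for an explicit type hint via returns(...) on the resulting stream.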

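- Flink DataStream API Explained (Part 2)

1. Introduction. Picking up where we left off: the previous article covered basic usage of the DataStream API, mainly in single-stream scenarios. In real stream processing, however, you often need to merge, split, and otherwise combine multiple streams to meet complex business requirements. Flink's DataStream API provides a set of powerful multi-stream transformation operators, such as union, connect, and split. Below, we look at what each one does and how to use it. 2. Multi-stream transformations. 2.1 The union operator. The union oper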

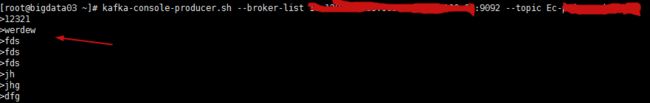

- 【Kafka】Failed to send data to Kafka: Expiring 30 record(s) for xxx 732453 ms has passed since last

九师兄

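kafka bigdata zookeeper

Contents: 1. A picture 2. Background 2. Attempted solution 1 3. Attempted fix 2 4. Reproducing the scenario 2 5. Scenario 4 6. Scenario 5 7. Scenario 7 8. Scenario 8 M. Further reading. This is an original article by blogger 九师兄 (QQ: 541711153, technical discussion welcome); it may not be reposted without permission. 1. A picture. This problem duplicates "[Flink] Flink writing to Kafka fails with: Failed to send data to Kafka: Expiring 4 record(s) for 20001 ms has passed". 2. Background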

- [Flink] Flink Kafka error: Failed to send data to Kafka: This server is not the leader for that topic-pa

九师兄

flink kafka big-data

1. Background. For the background of this problem, see: [Kafka] Failed to send data to Kafka: Expiring 30 record(s) for xxx 732453 ms has passed since last [2020-09-05 13:16:09

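- The "Five Layers of Protection" in Security Operations: Building an All-Round Security System

KKKlucifer

security-operations

In digital operations, challenges such as complex heterogeneous systems and stealthy attack techniques are becoming more and more prominent. 保旺达 builds an end-to-end security operations system on a five-layer protection architecture: full-domain management, identity authentication, behavior monitoring, automated response, and audit tracing. The architecture combines AI, zero trust, and other technologies. The analysis below covers both the technical logic and the practical implementation. Layer 1: Full-domain asset management, laying the security foundation. Challenge: cloud and network infrastructure includes heterogeneous components such as distributed computing (Hadoop/Spark) and stream processing (Storm/Flink). These components use many different communication protocols, which makes it hard for traditional solutions to manage them all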

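- A Single Kafka Producer Writing to a Topic with Multiple Partitions (Partitioning Strategies)

I recently observed the following in production: a Flink job with parallelism 1 (a single producer) writes to a topic with 8 partitions, and every partition receives the produced data. Here are the relevant points. When a producer writes messages to a topic, Kafka assigns the data to partitions according to one of several strategies: 1. round-robin partitioning, 2. random partitioning, 3. partitioning by key, 4. custom partitioning. 1.1 Round-robin partitioning. This is the default and most widely used strategy; it distributes messages across the partitions as evenly as possible. If, when producing a message, the ke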
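The four strategies can be compared in a small plain-Java sketch. This is not the Kafka client API. The key-based strategy uses String.hashCode() for simplicity, whereas Kafka's default partitioner hashes the serialized key with murmur2, so real partition numbers differ. Also, since Kafka 2.4 (KIP-480), keyless records use a "sticky" partitioner that fills a batch per partition rather than strict per-record round-robin. The "vip-" rule in the custom strategy is purely hypothetical; in the real client, a custom strategy plugs in by implementing the producer's Partitioner interface.

```java
import java.util.Random;
import java.util.concurrent.atomic.AtomicInteger;

/**
 * Sketch of producer-side partitioning strategies (plain Java, not the
 * Kafka client). Hash function and custom rule are simplifications.
 */
class PartitionStrategies {

    private final int numPartitions;
    private final AtomicInteger counter = new AtomicInteger();
    private final Random rng;

    PartitionStrategies(int numPartitions, long seed) {
        this.numPartitions = numPartitions;
        this.rng = new Random(seed);
    }

    /** 1. Round-robin: cycle through partitions 0..n-1 in order. */
    int roundRobin() {
        return Math.floorMod(counter.getAndIncrement(), numPartitions);
    }

    /** 2. Random: pick any partition with equal probability. */
    int randomPartition() {
        return rng.nextInt(numPartitions);
    }

    /** 3. By key: the same key always lands in the same partition. */
    int byKey(String key) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    /** 4. Custom (hypothetical rule): "vip-" keys go to partition 0, others to 1..n-1. */
    int custom(String key) {
        return key.startsWith("vip-") ? 0 : 1 + Math.floorMod(key.hashCode(), numPartitions - 1);
    }

    public static void main(String[] args) {
        PartitionStrategies p = new PartitionStrategies(8, 42L);
        StringBuilder rr = new StringBuilder();
        for (int i = 0; i < 10; i++) {
            rr.append(p.roundRobin()).append(' ');
        }
        System.out.println("round-robin: " + rr.toString().trim()); // 0 1 2 3 4 5 6 7 0 1
        System.out.println("random:      " + p.randomPartition());
        System.out.println("by key:      " + p.byKey("order-1001") + " " + p.byKey("order-1001"));
        System.out.println("custom:      " + p.custom("vip-7")); // 0
    }
}
```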

- Cloud Native: Microservices, CI/CD, SaaS, PaaS, IaaS

青秋.

cloud-native docker cloud-native microservices kubernetes serverless service_mesh ci/cd

Contents: 1. Cloud-native concepts and characteristics 2. Common cloud models 3. Architectural models for cloud services 3.1 IaaS (Infrastructure-as-a-Service) 3.2 PaaS (Platform-as-a-Servi

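- Apache Iceberg Data Lake Basics

Aurora_NeAr

apache

Introducing Apache Iceberg. The evolution and challenges of data lakes. Shortcomings of traditional data lakes (the Hive table format): Partition lock-in: queries must explicitly specify the partition column (e.g. WHERE dt='2025-07-01'). No atomicity: concurrent writes cause data to be overwritten or partially visible. Inefficient metadata: LIST operations scan every partition directory, which is expensive on cloud storage. Iceberg's goals: decouple the compute engine from the storage format (supporting Spark, Flink, Trino, etc.); provide ACID transactions, schema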

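- The Flink ClickHouse Connector: Seamless Integration Between Flink and ClickHouse

Edingbrugh.南空

big-data flink flink clickhouse big-data

Introduction. In big-data processing, Apache Flink is a powerful stream and batch processing framework, while ClickHouse is a high-performance columnar database designed for online analytical processing (OLAP). The Flink ClickHouse connector bridges the two, so users can read from and write to a ClickHouse database directly from Flink. This article covers the Flink ClickHouse connector in detail, including its features, usage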

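- Big Data Technology: Flink

Chapter 1 Flink Overview. 1.1 What Is Flink. 1.2 Flink Features. 1.3 Flink vs. Spark Streaming

Table: Comparison of Flink and Spark Streaming

| | Flink | Spark Streaming |
|---|---|---|
| Computation model | Stream processing | Micro-batch processing |
| Time semantics | Event time, processing time | Processing time |
| Windows | Many, flexible | Few, inflexible (windows must be integer multiples of the batch interval) |
| State | Yes | No |
| Streaming SQL | Yes | No |

1.4 Flink Use Cases. 1.5 Flink's Layered APIs. Chapter 2 Getting Started with Flink. 2.1 Creating a Project. While preparing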

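- The Most Complete Introduction to Hadoop's Core Components

Cachel wood

big-data-development hadoop big-data distributed spark database computer-networking

Contents: I. Hadoop core components: 1. HDFS (Hadoop Distributed File System) 2. YARN (Yet Another Resource Negotiator) 3. MapReduce. II. Data storage and management: 1. HBase 2. Hive 3. HCatalog 4. Phoenix. III. Data processing and computation: 1. Spark 2. Flink 3. Tez 4. Storm 5. Presto 6. Impala. IV. Resource scheduling and cluster manage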

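- Syncing MySQL Data to Hive with Flink: Real-Time Incremental Data Sync Based on Canal and Flink (Part 2)

Background. In data warehouse modeling, raw business-layer data that has not been processed in any way is called ODS (Operational Data Store) data. At internet companies, ODS data usually falls into two categories: business log data (Log) and business database data (DB). For business DB data, collecting data from relational databases such as MySQL and importing it into Hive is an important step in data warehouse production. How can MySQL data be synced to Hive accurately and efficiently? The usual solution is batch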

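- Flink OceanBase CDC: Environment Setup and Verification

Edingbrugh.南空

operations big-data flink flink oceanbase big-data

1. Core OceanBase database configuration. 1. Environment preparation and version requirements. Version requirements: OceanBase CE 4.0+ or OceanBase EE 2.2+. Component dependency: the LogProxy service must be deployed (deployment differs between the community and enterprise editions). Compatibility mode: MySQL mode (the default) and Oracle mode are supported. 2. Creating a user and configuring privileges. Create an admin user in the sys tenant (community edition example): -- connect to the sys tenant (default port 2881) mysql -h127.0.0.1 -P2881 -ur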

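- Flink MongoDB CDC: Environment Setup and Verification

Edingbrugh.南空

operations big-data flink flink mongodb big-data

1. Core MongoDB database configuration. 1. Environment preparation and cluster requirements. MongoDB CDC relies on the Change Streams feature and requires the following. Version: MongoDB ≥ 3.6. Cluster mode: replica set or sharded cluster. Storage engine: WiredTiger (the default since 3.2). Replica set protocol: pv1 (the default since MongoDB 4.0). Verify the cluster configuration: # connect to the MongoDB shell mongo --h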

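- Writing Flink Data Streams to Kafka, Redis, ES, and MySQL

浅唱战无双

flink mysql es redis kafka

Writing from Flink to different data stores: writing to MySQL, writing to ES, writing to Redis, writing to Kafka. Import the common dependencies: org.slf4j:slf4j-simple:1.7.25 (scope compile), org.apache.flink:flink-java:1.10.1, org.apache.flink:flink-streaming-java_2.12:1.10.1. Writing to MySQL: import the dependency mysql:mysql-connector-java:5.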

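- Flink TiDB CDC: Environment Setup and Verification

1. Core TiDB database configuration. 1. Enable the TiCDC service. Make sure the TiDB cluster has the TiCDC component deployed (its version must be compatible with Flink CDC 3.0.1), and start the sync service: # Example: start a TiCDC capture changefeed: cdc cli changefeed create \ --pd="localhost:2379" \ --sink-uri="blackhole://" \ --changefeed-id="flink-cdc-demo" 2. Veri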

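- Does Flink CDC Support Real-Time Data Sync for Oracle RAC in CDB+PDB Mode, and Is It Production-Ready?

智海观潮

Flink flink-cdc oracle flink data-sync big-data

Flink CDC is a streaming data integration tool that supports real-time data sync from many sources, including well-known ones such as MySQL and MongoDB. It originally ran as a Flink sub-project and was later donated to the Apache Software Foundation. Its underlying implementation relies heavily on the Flink ecosystem, and its data-sync layer in particular relies on Debezium. Users who need real-time Oracle data sync often ask questions such as: does Flink CDC support real-time Oracle sync, and can it be used in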

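- Flink Oracle CDC: Environment Setup and Verification

1. Core Oracle database configuration in detail. 1. Enable archive logging. Oracle CDC relies on archived logs to capture incremental changes; enable archive logging as follows. Non-CDB database configuration: -- connect to the database as DBA: CONNECT sys/password AS SYSDBA; -- configure the archive destination path and size: ALTER SYSTEM SET db_recovery_file_dest_size = 10G; ALTER SYSTEM SET db_re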

- Reading Data from Kafka with Flink, Processing It, and Writing It to Redis

JinVijay

flink kafka redis flink

/** Read data from Kafka, process it, and write it to Redis */ public class KafkaToRedis { public static void main(String[] args) throws Exception { StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment(); // enable checkpointing

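- Alibaba Cloud Flink: Opening a New Era of Real-Time Big Data Processing

云资源服务商

alibaba-cloud big-data cloud-computing

Getting to know Alibaba Cloud Flink. Alibaba Cloud Flink holds a prominent position in big data processing. As data volumes grow exponentially, enterprises face unprecedented demands for real-time, efficient, and accurate data processing. Traditional approaches increasingly struggle to meet these demands. Alibaba Cloud Flink has become a key technology for many enterprises to extract value from their data and drive business innovation. It inherits the strengths of open-source Flink and also incorporates Alibaba Cloud's own in-house innovative tech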

- Big Data Cluster Architecture: Hadoop Cluster, HBase Cluster, ZooKeeper, Kafka, Spark, Flink, Doris, dataeas (Part 2)

争取不加班!

hadoop hbase zookeeper big-data operations

ZooKeeper single-node deployment: wget -c https://dlcdn.apache.org/zookeeper/zookeeper-3.8.4/apache-zookeeper-3.8.4-bin.tar.gz (download URL) tar xf apache-zookeeper-3.8.4-bin.tar.gz -C /data/ && mv /data/apache-zookeeper-3.8.4-bin/ /data/zoo

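- Hadoop, Spark, and Flink: Capabilities and Use Cases of the Three Major Big Data Processing Frameworks

1. Comparison of technical capabilities and use cases

| Product | Capabilities | Use cases |
|---|---|---|
| Hadoop | MapReduce-based batch processing framework; HDFS distributed storage; strong fault tolerance, suited to offline analysis; job scheduling with YARN | Offline log analysis; data warehouse storage; T+1 report analysis; massive-scale data processing |
| Spark | In-memory computation, fast; batch and stream processing (Structured Streaming); SQL, ML, graph computation, etc.; multiple languages (Scala, Java, Python) | Near-real-time proc |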

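- Comparing Data Sync Tools: Canal, DataX, and Flink CDC

智慧源点

big-data flink big-data

In modern data architectures, data synchronization is a key step in building data warehouses, enabling real-time analytics, and supporting business decisions. Canal, DataX, and Flink CDC are three mainstream data sync tools, each with its own design philosophy and suitable scenarios. This article examines their technical characteristics, use cases, and practical differences to help developers choose the right tool for their needs. 1. Tool overview. 1.1 Canal. Canal is an open-source component from Alibaba that parses the incremental logs (binlog) of MySQL databases; it is mainly used for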

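- Combining Enums and Annotations in a Project

飞翔的马甲

java enum annotation

Preface: version compatibility has always been a headache in iterative development. Our latest release added support for new question types. If a newly created questionnaire contains a new question type, older clients cannot support it; if it contains no new question types, both old and new clients support it. We solved this by adding a custom annotation to the questionnaire-type enum, and reviewed enums and annotations along the way.

1. Enums

1. When you create an enum type, it already extends java.lang.Enum, so a custom enum cannot extend any other class, but it can implement interfaces.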
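The compatibility check described in the preface can be sketched by putting a custom annotation on each enum constant and reading it back through reflection. Everything here is an assumption for illustration, not the author's code: the MinClientVersion annotation, the QuestionType constants, and the version numbers are all hypothetical. The annotation needs RUNTIME retention to be visible through reflection, and enum constants are annotated as fields.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.List;

/**
 * Sketch: tag enum constants with a custom annotation and read it via
 * reflection. Annotation name, constants, and versions are hypothetical.
 */
class EnumAnnotationDemo {

    /** Minimum client version that can render a question type. */
    @Retention(RetentionPolicy.RUNTIME)   // must survive to runtime for reflection
    @Target(ElementType.FIELD)            // enum constants are fields
    @interface MinClientVersion {
        int value();
    }

    enum QuestionType {
        @MinClientVersion(1) SINGLE_CHOICE,
        @MinClientVersion(1) MULTIPLE_CHOICE,
        @MinClientVersion(2) MATRIX;      // new type added in client version 2

        /** Reads the annotation on this constant's field (defaults to 1 if absent). */
        int minClientVersion() {
            try {
                MinClientVersion ann = QuestionType.class
                        .getField(name())
                        .getAnnotation(MinClientVersion.class);
                return ann == null ? 1 : ann.value();
            } catch (NoSuchFieldException e) {
                throw new IllegalStateException(e);
            }
        }
    }

    /** A questionnaire is supported only if every question type is supported. */
    static boolean isSupported(List<QuestionType> questions, int clientVersion) {
        for (QuestionType q : questions) {
            if (q.minClientVersion() > clientVersion) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        List<QuestionType> oldStyle = List.of(QuestionType.SINGLE_CHOICE);
        List<QuestionType> newStyle = List.of(QuestionType.SINGLE_CHOICE, QuestionType.MATRIX);
        System.out.println(isSupported(oldStyle, 1)); // true
        System.out.println(isSupported(newStyle, 1)); // false
        System.out.println(isSupported(newStyle, 2)); // true
    }
}
```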

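- [Scala 17] Scala Core 11: Uses of the Underscore _

bit1129

scala

The underscore _ is used widely in Scala, and its basic meaning is a placeholder. It is also a frequent source of problems, so this article will keep adding _'s usage scenarios and what each one means.

1. In higher-order functions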

scala> val list = List(-3,8,7,9)

list: List[Int] = List(-3, 8, 7, 9)

scala> list.filter(_ > 7)

r

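- Web Caching Basics: Terminology, HTTP Headers, and Caching Strategies

dalan_123

Web

If a site uses intelligent content caching to improve the user experience, visitors will keep coming back. Caching, the temporary storage of responses to earlier requests, is one of the core content-delivery strategies in HTTP implementations. Any component along the delivery path can cache content to speed up subsequent requests, subject to the caching policy declared for that content. Next we discuss the basic concepts of web content caching strategies, including how to choose a caching policy so that caches across the internet handle your content correctly, and we also talk about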

- A crontab Problem

周凡杨

linux crontab unix

1: 0481-079 Reached a symbol that is not expected.

Background:

*/5 * * * * /usr/IBMIHS/rsync.sh

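- Sharing Sessions Across Subdomains in Tomcat

g21121

session

By default, Tomcat does not share sessions across subdomains. As a result, after logging in on the main domain and then jumping to a subdomain, you may end up with a different session. Only a few configuration changes are needed to fix this.

Open the context.xml file in Tomcat's conf directory.

Find the Context element and change it as follows.

If your domain is www.test.com: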

<Context sessionCookiePath="/path&q

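- A Summary of Common Functions in the FineReport Web Reporting Tool (Math and Trigonometric Functions)

老A不折腾

Web finereport summary

ABS

ABS(number): returns the absolute value of the given number. The absolute value is the number without its sign.

Number: any real number whose absolute value you want.

Examples:

ABS(-1.5) equals 1.5.

ABS(0) equals 0.

ABS(2.5) equals 2.5.

ACOS

ACOS(number): returns the arccosine of the given number. The arccosine is an angle, returned in radians.

Number: the value for which to return the angle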

- A Linux sh Script for Starting Java Processes

墙头上一根草

linux shell jar

#!/bin/bash

# Initialize the server process PID variables

user_pid=0;

robot_pid=0;

loadlort_pid=0;

gateway_pid=0;

#########

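# Check whether the related servers started successfully

# Notes:

# Combine the JDK's jps command with grep to find the PID precisely

# jps -l shows the full package name of each Java process's main class

# Use awk to split out the PID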

- My Spring Study Notes 5: How to Replace BeanFactory with ApplicationContext

aijuans

Spring 3 series

How do you replace BeanFactory with ApplicationContext?

package onlyfun.caterpillar.device;

import org.springframework.beans.factory.BeanFactory;

import org.springframework.beans.factory.xml.XmlBeanFactory;

import

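- A Detailed Analysis of How Linux Uses Memory

annan211

linux memory Linux-memory-analysis

Source: http://blog.jobbole.com/45748/

I'm a programmer, so I'll explain Linux memory usage from a programmer's point of view.

When memory management comes up, two concepts come to mind: virtual memory and physical memory. Both are provided mainly by the Linux kernel.

Linux divides memory management into two levels. One level is the linear region, such as 00c73000-00c88000, which corresponds to virtual memory and does not actually occupy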

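- Common Commands and Usage for Single-Table Database Queries (Part 1)

百合不是茶

oracle functions single-table-query

Create the database:

-- create the table

create table bloguser(username varchar2(20),userage number(10),usersex char(2));

This creates the bloguser table with three columns.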


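- Multithreading Basics

bijian1013

java multithreading thread java multithreading

1. Processes and threads

A process is a program running independently in memory, with its own address space. For example, a running WordPad program is a process.

"Multitasking" means the operating system can run multiple processes (programs) at the same time. For example, Windows can run WordPad, Paint, Word, Eclipse, and so on simultaneously.

A thread is a single sequential flow of control within a process.

Threads vs. processes

a. Each process has its own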
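Because a thread is a flow of control inside a process, the threads of one process share its memory, while separate processes do not. A minimal Java sketch of this: two threads increment a single shared counter object. AtomicInteger keeps the concurrent increments from being lost. The thread names are arbitrary.

```java
import java.util.concurrent.atomic.AtomicInteger;

/**
 * Sketch: two threads inside one process update the same counter object,
 * showing that threads share their process's memory.
 */
class SharedMemoryThreads {

    static int runTwoThreads(int incrementsPerThread) {
        AtomicInteger counter = new AtomicInteger();   // shared by both threads

        Runnable task = () -> {
            for (int i = 0; i < incrementsPerThread; i++) {
                counter.incrementAndGet();             // atomic, so no lost updates
            }
        };

        Thread t1 = new Thread(task, "worker-1");
        Thread t2 = new Thread(task, "worker-2");
        t1.start();
        t2.start();
        try {
            t1.join();   // wait for both flows of control to finish
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runTwoThreads(1000)); // 2000
    }
}
```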

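- A Simple fastjson Usage Example

bijian1013

fastjson

1. Introduction

Alibaba's fastjson is a high-performance, full-featured JSON library written in Java. It uses an "assumed-order fast matching" algorithm to push JSON parsing performance as far as possible, and it claims to be the fastest JSON library for Java. It covers both serialization and deserialization and has the following features: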

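- [RPC Framework Burlap] Integrating Burlap with Spring

bit1129

spring

Burlap and Hessian are both RPC frameworks from Caucho, but Burlap has not been updated in years, and Spring 4.0 deprecated its Burlap support. When choosing an RPC framework, you should no longer consider Burlap.

This article still records how to use Burlap. It is mostly adapted from my Hessian-Spring integration article, [RPC Framework Hessian 4] Integrating Hessian with Spring.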

- [Mahout 1] The Meaning of Mahout Command Parameters

bit1129

Mahout

1. mahout seqdirectory

$ mahout seqdirectory

--input (-i) input Path to job input directory (raw text files).

--output (-o) output The directory pathna

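- Using flock File Locks in Linux to Prevent Scripts from Running Twice

ronin47

linux lock duplicate-execution

Linux's crontab command runs tasks on a schedule, with a minimum period of one minute. (To run a task every second with crontab, see my earlier article "Running Tasks Every Second with Linux crontab".) Here is the problem: if a task is scheduled to run every minute but one run does not finish within that minute, the system starts the task again anyway, so two instances of the same task end up running at once.

For example: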

<?

// test.php
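The same guard can be sketched in Java as an analogue of `flock -n`: take a non-blocking exclusive lock on a lock file, and skip the run if another instance already holds it. This swaps in java.nio's FileChannel.tryLock() for the shell flock utility. The lock-file name is an assumption. Within a single JVM, a second lock attempt on the same file raises OverlappingFileLockException instead of returning null, so both cases are treated as "already running".

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.FileChannel;
import java.nio.channels.FileLock;
import java.nio.channels.OverlappingFileLockException;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

/**
 * Java analogue of `flock -n lockfile command`: run the task only if an
 * exclusive lock on the lock file can be taken immediately.
 */
class SingleInstanceRunner {

    /** Runs task only if the lock is free; returns false if the run was skipped. */
    static boolean runExclusively(Path lockFile, Runnable task) {
        try (FileChannel channel = FileChannel.open(lockFile,
                StandardOpenOption.CREATE, StandardOpenOption.WRITE)) {
            FileLock lock;
            try {
                lock = channel.tryLock();          // null if another process holds it
            } catch (OverlappingFileLockException e) {
                lock = null;                       // already held within this JVM
            }
            if (lock == null) {
                return false;                      // previous run still in progress
            }
            try {
                task.run();
                return true;
            } finally {
                lock.release();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path lockFile = Path.of(System.getProperty("java.io.tmpdir"), "test-php-job.lock");
        boolean ran = runExclusively(lockFile, () -> {
            // A second attempt while the first still holds the lock is skipped.
            boolean nested = runExclusively(lockFile, () -> { });
            System.out.println("nested run executed: " + nested); // false
        });
        System.out.println("outer run executed: " + ran); // true
    }
}
```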

- java-74: A Number Appears More Than Half the Time in an Array; Find It

bylijinnan

java

public class OcuppyMoreThanHalf {

/**

* Q74 One number in the array occurs more than half as many times as the array's length; find this number

* two solutions:

* 1.O(n)

* see <beauty of coding>: removing two different numbers at a time does not change this property of the array

* 2.O(nlogn)

* sort, then the middle
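Here is a complete version of the two approaches listed in the comment, assuming (as the problem states) that a majority element really exists. The class name MoreThanHalf is hypothetical. The O(n) method is the Boyer-Moore majority vote, which implements "remove two different numbers at a time" with a candidate and a counter.

```java
import java.util.Arrays;

/**
 * Sketch of both approaches; assumes some number occurs more than n/2 times.
 */
class MoreThanHalf {

    /** O(n): Boyer-Moore majority vote (cancel out pairs of different numbers). */
    static int byVoting(int[] a) {
        int candidate = 0;
        int count = 0;
        for (int x : a) {
            if (count == 0) {
                candidate = x;     // start a new run with x as the candidate
                count = 1;
            } else if (x == candidate) {
                count++;
            } else {
                count--;           // pair x with one copy of candidate and drop both
            }
        }
        return candidate;
    }

    /** O(n log n): after sorting, the majority element occupies the middle slot. */
    static int bySorting(int[] a) {
        int[] copy = Arrays.copyOf(a, a.length);   // leave the caller's array untouched
        Arrays.sort(copy);
        return copy[copy.length / 2];
    }

    public static void main(String[] args) {
        int[] a = {1, 2, 3, 2, 2, 2, 5, 4, 2};
        System.out.println(byVoting(a));  // 2
        System.out.println(bySorting(a)); // 2
    }
}
```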

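- Linux System Commands

candiio

linux

System parameters

cat /proc/cpuinfo: CPU parameters

cat /proc/meminfo: memory parameters

cat /proc/loadavg: system load

Performance parameters

1)top

M: sort by memory usage

P: sort by CPU usage

1: show usage for each CPU

k: kill a process

o: more sort rules

Enter: refresh the data

2)ulimit

ulimit -a: show the current user's system limits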

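- [Business and Assets] Why Independence and Stability Matter in Software Development

comsci

software-development

A software architecture goes through many rounds of revision and rework between its creation and maturity.

If, during this process, capital from other industries keeps getting involved in upgrading the architecture,

then the software developers' original design ideas and development roadmap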

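- Building OpenJDK 6 on CentOS 5.5

Cwind

linux OpenJDK

After some trial and error, I finally built OpenJDK 6 on my CentOS 5.5 machine. Here is a brief record of the build process and the problems I ran into, for future reference.

0. About OpenJDK

OpenJDK is the GPL-licensed implementation of the Java platform released by Sun (now Oracle). Its advantages:

1. Its core code is essentially the same as the Sun (now Oracle) product release of the same period, so its lineage is pure. You need not worry about performance, and there are basically no compatibility issues. (The main code differences are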

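- Garbled Characters in Java

dashuaifu

java garbled-text js chinese-garbled-text

When uploading files with swfupload, Chinese parameter values arrive garbled on the backend. In JS, use setPostParams({"tag" : encodeURI(document.getElementById("filetag").value)});

Then in the servlet, String t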
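Here is a sketch of two common server-side fixes, assuming the client uses UTF-8 (encodeURI percent-encodes as UTF-8). If the servlet receives the still percent-encoded value, for example when the client encodes twice, it can decode that value explicitly as UTF-8. If the container has already decoded the bytes as ISO-8859-1, re-reading those bytes as UTF-8 recovers the original string. The servlet wiring is omitted. The sample value is hypothetical and is written as Unicode escapes so the source compiles the same under any default encoding.

```java
import java.net.URLDecoder;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

/**
 * Sketch of decoding a Chinese request parameter correctly on the server.
 * Requires Java 10+ for the Charset overloads of URLEncoder/URLDecoder.
 */
class ChineseParamDecoding {

    /** Fix 1: decode a percent-encoded value explicitly as UTF-8. */
    static String decodeUtf8(String raw) {
        return URLDecoder.decode(raw, StandardCharsets.UTF_8);
    }

    /** Fix 2: undo a container that decoded UTF-8 bytes as ISO-8859-1. */
    static String repairLatin1(String misdecoded) {
        byte[] originalBytes = misdecoded.getBytes(StandardCharsets.ISO_8859_1);
        return new String(originalBytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String original = "\u6807\u7b7e";   // the two Chinese characters for "tag"
        String onTheWire = URLEncoder.encode(original, StandardCharsets.UTF_8);
        System.out.println(onTheWire);                                 // %E6%A0%87%E7%AD%BE
        System.out.println(decodeUtf8(onTheWire).equals(original));    // true

        String garbled = new String(original.getBytes(StandardCharsets.UTF_8),
                StandardCharsets.ISO_8859_1);                           // what a Latin-1 container sees
        System.out.println(repairLatin1(garbled).equals(original));    // true
    }
}
```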

- Fixing "command not found" for Many Commands in Cygwin

dcj3sjt126com

cygwin


Modify cygwin.BAT as follows:

@echo off

D:

set CYGWIN=tty notitle glob

set PATH=%PATH%;d:\cygwin\bin;d:\cygwin\sbin;d:\cygwin\usr\bin;d:\cygwin\usr\sbin;d:\cygwin\us

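- [Intro] Upgrading from Yii 1.1

dcj3sjt126com

PHP yii2

Version 2.0 of the framework is a complete rewrite, and there are many differences between 1.1 and 2.0. Upgrading from 1.1 is therefore not as simple as moving between minor versions. This guide explains the main differences between the two versions.

If you have never used Yii 1.1, you can skip this section and go straight to "Getting Started".

Note that Yii 2.0 introduces many new features not covered in this section. We strongly recommend reading the entire Definitive Guide to learn about all of them. Doing so, you may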

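- Summary of Passwordless SSH Login Configuration on Linux

eksliang

ssh-keygen Linux-SSH-passwordless-auth Linux-SSH-mutual-trust

When reposting, please cite the source: http://eksliang.iteye.com/blog/2187265 1. How it works

Use ssh-keygen on ServerA to generate a private key and a public key. After you copy the generated public key to the remote machine ServerB, you can log in to ServerB with the ssh command without a password.

There are two encryption methods for generating the key pair. The first is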

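- Closing an Activity with a Swipe Gesture

gundumw100

android

Always imitating iOS; being an Android developer is rough!

The requirement: close an Activity with a swipe gesture.

What to do? Write it myself?

No need; ask Baidu first.

Result:

http://blog.csdn.net/xiaanming/article/details/20934541

First, make the Activity extend SwipeBackActivity. It adds a SwipeBackLay layer at the root of your layout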

- Changing Table Border Colors with JavaScript

ini

JavaScript html Web html5 css

See the effect at http://hovertree.com/texiao/js/2.htm. The code follows; you can also save it to an HTML file to see the effect:

<html>

<head>

<meta charset="utf-8">

<title>Table Border Color Change Code - 何问起</title>

</head>

<body&

- Kafka Rest : Confluent

kane_xie

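kafka REST confluent

I recently got a requirement for a Kafka REST interface, but Kafka does not yet provide a REST API (it is probably in development, given how popular REST is). Searching online, I found the Confluent Platform; this article briefly describes how to install it.

A quick aside: I recommend 九尾搜索 (formerly 谷粉SOSO) to anyone who doesn't want to get around the firewall to use Google. I got used to Google while working at a foreign company, and after I left, Baidu's results for technical questions were painfully poor.

Environment: Ubu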

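- Calendar Is Not a Singleton

men4661273

singleton Calendar

When we use Calendar, we obtain a date object through Calendar.getInstance(). This looks just like the way a singleton is obtained, so is Calendar a singleton? If it were, one thread modifying the object would corrupt the data seen by another thread. Experiments and the source code both show that Calendar is not a singleton.

Test: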

Calendar c1 =
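A minimal version of that experiment: Calendar.getInstance() returns a new object on every call, so the two references differ, and changing one instance leaves the other untouched. The years used are arbitrary.

```java
import java.util.Calendar;

/**
 * Sketch of the experiment showing Calendar.getInstance() is not a singleton.
 */
class CalendarNotSingleton {

    /** Reference comparison of two getInstance() results. */
    static boolean sameInstance() {
        Calendar c1 = Calendar.getInstance();
        Calendar c2 = Calendar.getInstance();
        return c1 == c2;
    }

    /** Modifying one instance does not affect the other. */
    static boolean independentAfterChange() {
        Calendar c1 = Calendar.getInstance();
        Calendar c2 = Calendar.getInstance();
        c1.set(Calendar.YEAR, 2000);
        c2.set(Calendar.YEAR, 2020);
        return c1.get(Calendar.YEAR) == 2000 && c2.get(Calendar.YEAR) == 2020;
    }

    public static void main(String[] args) {
        System.out.println(sameInstance());           // false
        System.out.println(independentAfterChange()); // true
    }
}
```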

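- How Thread Working Memory Relates to Main Memory

qifeifei

java thread

1. When Java threads share a variable in main memory, the interaction goes through several stages:

lock: locks a variable in main memory so that one thread owns it exclusively.

unlock: releases the lock acquired by lock, after which other threads can access the variable.

read: reads a variable's value from main memory so it can be transferred to working memory.

load: stores the value obtained by read into the variable's copy in working memory.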
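The practical consequence of these rules can be sketched with a volatile flag. In the main-memory/working-memory model above, the reader spins on its copy of `ready` until the writer's update becomes visible. Declaring the flag volatile guarantees the reader eventually sees the write. It also guarantees that the ordinary write to `data` made before setting the flag is visible afterwards, because of the volatile happens-before rule. Without volatile, the loop would be allowed to spin forever. The field names are arbitrary.

```java
/**
 * Sketch of visibility between threads via a volatile flag (Java 9+ for onSpinWait).
 */
class VisibilityDemo {

    private int data = 0;
    private volatile boolean ready = false;

    int runOnce() {
        Thread writer = new Thread(() -> {
            data = 42;       // ordinary write...
            ready = true;    // ...published by the volatile write
        });
        writer.start();
        while (!ready) {
            Thread.onSpinWait();   // each iteration re-reads the volatile flag
        }
        return data;         // guaranteed to see 42 once ready is observed as true
    }

    public static void main(String[] args) {
        System.out.println(new VisibilityDemo().runOnce()); // 42
    }
}
```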

- schedule vs. scheduleAtFixedRate

tangqi609567707

java timer schedule

Original article: http://blog.csdn.net/weidan1121/article/details/527307

import java.util.Timer;

import java.util.TimerTask;

import java.util.Date;

/** @author vincent */

public class TimerTest {
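The difference between the two methods shows up most clearly when the first execution time is already in the past. The sketch below is a simulation, not java.util.Timer itself, and it assumes each task run takes no time. Per the Timer Javadoc, schedule() is fixed-delay: each run is timed from the previous actual run, and a past start time means an immediate first run. scheduleAtFixedRate() is timed from the originally scheduled start, so missed runs are executed immediately to "catch up". The times used are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Simulation of fixed-delay vs. fixed-rate execution times when the first
 * scheduled time is in the past; task runs are assumed to take zero time.
 */
class TimerPolicies {

    /** Like schedule(task, firstTime, period): start now, then every period. */
    static List<Long> fixedDelay(long firstTime, long period, long now, int runs) {
        List<Long> times = new ArrayList<>();
        long next = Math.max(firstTime, now);
        for (int i = 0; i < runs; i++) {
            times.add(next);
            next += period;             // measured from the actual run
        }
        return times;
    }

    /** Like scheduleAtFixedRate(task, firstTime, period): keep the original time grid. */
    static List<Long> fixedRate(long firstTime, long period, long now, int runs) {
        List<Long> times = new ArrayList<>();
        long actual = now;
        for (int i = 0; i < runs; i++) {
            long scheduled = firstTime + i * period;
            actual = Math.max(scheduled, actual);   // overdue runs fire immediately
            times.add(actual);
        }
        return times;
    }

    public static void main(String[] args) {
        // First execution was due 2500 ms ago: firstTime = 7500, now = 10000, period = 1000.
        long first = 7500, period = 1000, now = 10000;
        System.out.println(fixedDelay(first, period, now, 5)); // [10000, 11000, 12000, 13000, 14000]
        System.out.println(fixedRate(first, period, now, 5));  // [10000, 10000, 10000, 10500, 11500]
    }
}
```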

- Erlang Deployment

wudixiaotie

erlang

1. If you get this error when starting a node:

{"init terminating in do_boot",{'cannot load',elf_format,get_files}}

add the following to reltool.config:

{app, hipe, [{incl_cond, exclude}]},

2. When running generate, you encounter:

ERROR