Rolling back from CUDA 10.1 to 10.0 and adjusting related software (TensorFlow, TensorRT)

System:

Ubuntu 16.04 LTS

Hardware:

GeForce GTX 1060 (6078MiB)

Installed GPU driver:

NVIDIA-SMI 418.56 Driver Version: 418.56

+-----------------------------------------------------------------------------+
| NVIDIA-SMI 418.56       Driver Version: 418.56       CUDA Version: 10.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  GeForce GTX 108...  Off  | 00000000:01:00.0 Off |                  N/A |
| 33%   36C    P5    22W / 250W |    297MiB / 11178MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|    0      1321      G   /usr/lib/xorg/Xorg                           226MiB |
|    0      2059      G   compiz                                        62MiB |
|    0      3418      G   /usr/lib/firefox/firefox                       2MiB |
|    0      3824      G   /usr/lib/firefox/firefox                       2MiB |
+-----------------------------------------------------------------------------+

CUDA 10.0

Download the runfile:

Archived Releases: CUDA Toolkit 10.0

https://developer.nvidia.com/cuda-10.0-download-archive (login required)

Install:

1. Run sudo ./cuda_10.0.130_410.48_linux.run

2. Follow the command-line prompts

Do you accept the previously read EULA?

accept/decline/quit: accept

Install NVIDIA Accelerated Graphics Driver for Linux-x86_64 410.48?

(y)es/(n)o/(q)uit: n

Install the CUDA 10.0 Toolkit?

(y)es/(n)o/(q)uit: y

Enter Toolkit Location

[ default is /usr/local/cuda-10.0 ]:

Do you want to install a symbolic link at /usr/local/cuda?

(y)es/(n)o/(q)uit: y

Install the CUDA 10.0 Samples?

(y)es/(n)o/(q)uit: y

Enter CUDA Samples Location

[ default is /home/toson ]:
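
The prompts above decline the driver bundled with the runfile (410.48, per the file name) while the machine already runs 418.56. A likely reason is that the installed driver is newer than the bundled one. The comparison can be sketched as follows; the helper name `version_ge` is hypothetical, and `sort -V` assumes GNU coreutils, as shipped with Ubuntu.

```shell
# Return success when version $1 is greater than or equal to version $2.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

installed="418.56"   # driver version reported by nvidia-smi in this post
bundled="410.48"     # driver version in the name cuda_10.0.130_410.48_linux.run

if version_ge "$installed" "$bundled"; then
    echo "keep installed driver $installed"
else
    echo "installed driver $installed is older than bundled $bundled"
fi
```

On a different machine, replace `installed` with the version printed in the first line of the `nvidia-smi` table.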

Uninstall CUDA 10.1:

$ cd /usr/local/cuda-10.1/bin

$ sudo ./cuda-uninstaller
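
After removing 10.1, it may be worth confirming that the /usr/local/cuda symbolic link created by the 10.0 installer still resolves to the 10.0 toolkit. This is a minimal sketch; the helper name `cuda_link_is` is hypothetical, and the paths are the installer defaults shown earlier.

```shell
# Succeed only when both paths exist and resolve to the same directory.
# $1: symlink to check, $2: expected toolkit directory.
cuda_link_is() {
    [ -d "$1" ] && [ -d "$2" ] && [ "$(readlink -f "$1")" = "$(readlink -f "$2")" ]
}

if cuda_link_is /usr/local/cuda /usr/local/cuda-10.0; then
    echo "/usr/local/cuda resolves to $(readlink -f /usr/local/cuda)"
else
    echo "/usr/local/cuda does not resolve to /usr/local/cuda-10.0"
fi
```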

cuDNN 7.5

Download version 7.5: https://developer.nvidia.com/rdp/cudnn-archive

Extract it into the CUDA directory:

$ sudo tar -zxvf cudnn-10.0-linux-x64-v7.5.0.56.tgz -C /usr/local/
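
To check which cuDNN version ended up in the CUDA directory, the version macros in cudnn.h can be read back. This is a sketch under two assumptions: that the archive unpacks into a top-level cuda/ folder, so the header lands at /usr/local/cuda/include/cudnn.h, and that the header defines CUDNN_MAJOR, CUDNN_MINOR and CUDNN_PATCHLEVEL as cuDNN 7 releases do. The function name `cudnn_version` is hypothetical.

```shell
# Print "major.minor.patch" from the version macros of a cudnn.h file.
# $1: path to cudnn.h
cudnn_version() {
    awk '/^#define CUDNN_MAJOR /      { major = $3 }
         /^#define CUDNN_MINOR /      { minor = $3 }
         /^#define CUDNN_PATCHLEVEL / { patch = $3 }
         END { if (major != "") print major "." minor "." patch }' "$1"
}

header=/usr/local/cuda/include/cudnn.h   # assumed location, see above
if [ -f "$header" ]; then
    echo "cuDNN $(cudnn_version "$header")"
else
    echo "cudnn.h not found at $header"
fi
```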

Other software

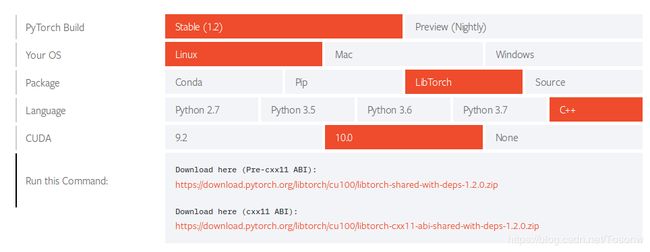

1. PyTorch

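The PyTorch C++ library (libtorch) can be downloaded directly from the official site; just extract it, no installation is needed.

A build for CUDA 10.0 has to be downloaded again: https://pytorch.org/get-started/locally/

I downloaded the cxx11 ABI build:

https://download.pytorch.org/libtorch/cu100/libtorch-cxx11-abi-shared-with-deps-1.2.0.zip

After downloading, extract it and reference it in CMakeLists.txt: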

set(Torch_DIR /home/toson/download_libs/libtorch/share/cmake/Torch)

find_package(Torch REQUIRED)
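
If find_package(Torch) fails, a quick thing to check is whether Torch_DIR really contains TorchConfig.cmake, the file CMake looks for in config mode. A minimal sketch, using the same path as the CMake lines above; the helper name `has_torch_config` is hypothetical.

```shell
# Succeed when the directory contains the package config file that
# find_package(Torch) searches for.
has_torch_config() {
    [ -f "$1/TorchConfig.cmake" ]
}

# Same path as Torch_DIR in the CMakeLists.txt lines above.
torch_dir=/home/toson/download_libs/libtorch/share/cmake/Torch
if has_torch_config "$torch_dir"; then
    echo "TorchConfig.cmake found in $torch_dir"
else
    echo "TorchConfig.cmake missing in $torch_dir"
fi
```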

2. Rebuilding TensorFlow

I downloaded version 1.12: https://github.com/tosonw/tensorflow/archive/v1.12.0.tar.gz

After extracting, enter the directory on the command line:

$ mkdir build

$ cd build

$ ../configure

WARNING: --batch mode is deprecated. Please instead explicitly shut down your Bazel server using the command "bazel shutdown".

You have bazel 0.15.2 installed.

Please specify the location of python. [Default is /home/toson/anaconda3/bin/python]:

Found possible Python library paths:

/home/toson/anaconda3/lib/python3.6/site-packages

Please input the desired Python library path to use. Default is [/home/toson/anaconda3/lib/python3.6/site-packages]

Do you wish to build TensorFlow with Apache Ignite support? [Y/n]: n

No Apache Ignite support will be enabled for TensorFlow.

Do you wish to build TensorFlow with XLA JIT support? [Y/n]: n

No XLA JIT support will be enabled for TensorFlow.

Do you wish to build TensorFlow with OpenCL SYCL support? [y/N]: n

No OpenCL SYCL support will be enabled for TensorFlow.

Do you wish to build TensorFlow with ROCm support? [y/N]: n

No ROCm support will be enabled for TensorFlow.

Do you wish to build TensorFlow with CUDA support? [y/N]: y

CUDA support will be enabled for TensorFlow.

Please specify the CUDA SDK version you want to use. [Leave empty to default to CUDA 9.0]: 10.0

Please specify the location where CUDA 10.0 toolkit is installed. Refer to README.md for more details. [Default is /usr/local/cuda]:

Please specify the cuDNN version you want to use. [Leave empty to default to cuDNN 7]:

Please specify the location where cuDNN 7 library is installed. Refer to README.md for more details. [Default is /usr/local/cuda]:

Do you wish to build TensorFlow with TensorRT support? [y/N]: n

No TensorRT support will be enabled for TensorFlow.

Please specify the NCCL version you want to use. If NCCL 2.2 is not installed, then you can use version 1.3 that can be fetched automatically but it may have worse performance with multiple GPUs. [Default is 2.2]: 1.3

Please specify a list of comma-separated Cuda compute capabilities you want to build with.

You can find the compute capability of your device at: https://developer.nvidia.com/cuda-gpus.

Please note that each additional compute capability significantly increases your build time and binary size. [Default is: 6.1]:

Do you want to use clang as CUDA compiler? [y/N]: n

nvcc will be used as CUDA compiler.

Please specify which gcc should be used by nvcc as the host compiler. [Default is /usr/bin/gcc]:

Do you wish to build TensorFlow with MPI support? [y/N]: n

No MPI support will be enabled for TensorFlow.

Please specify optimization flags to use during compilation when bazel option "--config=opt" is specified [Default is -march=native]:

Would you like to interactively configure ./WORKSPACE for Android builds? [y/N]:

Not configuring the WORKSPACE for Android builds.

Preconfigured Bazel build configs. You can use any of the below by adding "--config=<>" to your build command. See tools/bazel.rc for more details.

--config=mkl # Build with MKL support.

--config=monolithic # Config for mostly static monolithic build.

--config=gdr # Build with GDR support.

--config=verbs # Build with libverbs support.

--config=ngraph # Build with Intel nGraph support.

Configuration finished
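
The CUDA-related answers from the session above can also be written down as environment variables, which makes the configuration easier to repeat. This is only a sketch: the variable names are an assumption based on configure.py in TensorFlow 1.12 and should be checked against the copy in your source tree before relying on them.

```shell
# Answers from the interactive session above, expressed as environment
# variables. The names are assumed from TensorFlow 1.12's configure.py.
export TF_NEED_CUDA=1
export TF_CUDA_VERSION=10.0
export CUDA_TOOLKIT_PATH=/usr/local/cuda
export TF_CUDNN_VERSION=7
export CUDNN_INSTALL_PATH=/usr/local/cuda
export TF_CUDA_COMPUTE_CAPABILITIES=6.1
export TF_NEED_TENSORRT=0
export TF_NCCL_VERSION=1.3
export TF_CUDA_CLANG=0
export TF_NEED_MPI=0

# Print the settings so they can be reviewed before running ../configure.
env | grep -E '^(TF_|CUDA_TOOLKIT_PATH|CUDNN_INSTALL_PATH)' | sort
```

If the names match, running ../configure in the same shell should skip the corresponding prompts and ask only the remaining questions.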

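Build:

# Note: "-D_GLIBCXX_USE_CXX11_ABI=0" is commonly added because protobuf is built against the GCC 4 ABI, among other reasons. The command below does not pass it.

$ bazel build --config=opt --config=cuda //tensorflow:libtensorflow_cc.so

# This takes a while; the build has succeeded once output like the following appears: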

Target //tensorflow:libtensorflow_cc.so up-to-date:

bazel-bin/tensorflow/libtensorflow_cc.so

INFO: Elapsed time: 1206.366s, Critical Path: 162.98s

INFO: 4956 processes: 4956 local.

INFO: Build completed successfully, 5069 total actions

After a successful build, libtensorflow_cc.so appears in the bazel-bin/tensorflow directory under the source root.
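
To confirm that the new library picks up CUDA 10.0 rather than a leftover 10.1 install, the CUDA entries in its `ldd` output can be inspected. A minimal sketch, assuming the CUDA libraries are linked dynamically; the helper name `cuda_deps` is hypothetical.

```shell
# Keep only the CUDA-related lines of `ldd` output read from stdin.
cuda_deps() {
    grep -E 'lib(cudart|cublas|cufft|curand|cusolver|cudnn)\.so' || true
}

lib=bazel-bin/tensorflow/libtensorflow_cc.so
if [ -f "$lib" ]; then
    ldd "$lib" | cuda_deps
else
    echo "$lib not found; run this from the TensorFlow source root"
fi
```

Library paths under /usr/local/cuda-10.0 in the output would indicate that the intended toolkit is being used.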

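C version: bazel build //tensorflow:libtensorflow.so

C++ version: bazel build //tensorflow:libtensorflow_cc.so

The required header files have to be copied out of the source tree:

bazel-genfiles/..., eigen/..., include/..., tf/...

Once everything needed has been copied out, you can run bazel clean to remove the intermediate build files, which take up a lot of space.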

3. TensorRT

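My previous TensorRT install was built for CUDA 10.1.

A TensorRT build for CUDA 10.0 has to be downloaded again:

Download (login required): https://developer.nvidia.com/tensorrt

TensorRT 6.0.1.5 GA for Ubuntu 16.04 and CUDA 10.0 tar package

Then extract it to a directory of your choice and reference it in CMakeLists.txt.

Note: TensorRT model files must be regenerated with the TensorRT version in use.

To use TensorRT from Python, install the wheel: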

$ cd TensorRT-6.0.1.5/python

$ pip install tensorrt-6.0.1.5-cp36-none-linux_x86_64.whl
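
With the tar package, the Python module usually also needs the TensorRT lib directory on LD_LIBRARY_PATH before it can be imported. The sketch below adds it and then prints the version Python sees. `TENSORRT_ROOT` and the helper `add_lib_dir` are hypothetical names; point `TENSORRT_ROOT` at wherever the package was extracted.

```shell
# Append a directory to LD_LIBRARY_PATH unless it is already listed.
add_lib_dir() {
    case ":${LD_LIBRARY_PATH:-}:" in
        *":$1:"*) ;;   # already present, nothing to do
        *) LD_LIBRARY_PATH="${LD_LIBRARY_PATH:+$LD_LIBRARY_PATH:}$1" ;;
    esac
    export LD_LIBRARY_PATH
}

# Hypothetical location; use the directory the tar package was extracted to.
TENSORRT_ROOT="${TENSORRT_ROOT:-$HOME/TensorRT-6.0.1.5}"
add_lib_dir "$TENSORRT_ROOT/lib"

# Report the version seen by Python, or a hint when the import fails.
python -c 'import tensorrt; print(tensorrt.__version__)' 2>/dev/null \
    || echo "tensorrt is not importable in this Python environment"
```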