AI-NLP-3.Word2Vec实战案例课

目录

安装notebooks

1.文本情感分析 英文 && 中文

Data Set

File descriptions

Data fields

第一种方式:bag_of_words_model

用pandas读入训练数据

对影评数据做预处理,大概有以下环节:

清洗数据添加到dataframe里

抽取bag of words特征(TF,用sklearn的CountVectorizer)

训练分类器

删除不用的占内容变量

读取测试数据进行预测

第二种方式:Word2Vec

读入无标签数据

和第一个ipython notebook一样做数据的预处理

用gensim训练词嵌入模型

看看训练的词向量结果如何

第二种方式 续:使用Word2Vec训练后的数据

和之前的操作一致

读入之前训练好的Word2Vec模型

我们可以根据word2vec的结果去对影评文本进行编码

用随机森林构建分类器

清理占用内容的变量

预测测试集结果并上传kaggle

2.中文应用:chinese-sentiment-analysis

安装notebooks

ipynb,顾名思义,ipython notebook

C:\Users\Administrator>python -m pip install jupyter notebook

Collecting jupyter

Downloading https://files.pythonhosted.org/packages/83/df/0f5dd132200728a86190397e1ea87cd76244e42d39ec5e88efd25b2abd7e/jupyter-1.0.0-py2.py3-none-any.whl

Collecting notebook

Downloading https://files.pythonhosted.org/packages/5e/7c/7fd8e9584779d65dfcad9fa2e09c76131a41f999f853a9c7026ed8585586/notebook-5.6.0-py2.py3-none-any.whl (8.9MB)

100% |████████████████████████████████| 8.9MB 227kB/s之后cmd中输入jupyter notebook会打开一个页面,先upload这个.ipynb后缀的文件

C:\Users\Administrator>jupyter notebook

[I 16:47:35.666 NotebookApp] Writing notebook server cookie secret to C:\Users\Administrator\AppData\Roaming\jupyter\runtime\notebook_cookie_secret然后点击上传后的.ipynb文件,点击下面的红色方框中的第一个按钮,运行,运行后,网页的下面部分会输出结果。

选中一个框,方框变成蓝色,表示选中。

如果鼠标点击代码,方框变成绿色,表示处于编辑状态。

选中方框变蓝色后,按下键盘上的小写L可以显示行数。

在cell中可以直接按tab键,可以自动补全,超级实用

1.文本情感分析 英文 && 中文

第一个示例:https://www.kaggle.com/c/word2vec-nlp-tutorial/data

bag of words meets bags of popcorn

1.基本的文本预处理技术 (网页解析, 文本抽取, 正则表达式等)

2.word2vec词向量编码与机器学习建模情感分析

数据

Data Set

The labeled data set consists of 50,000 IMDB movie reviews, specially selected for sentiment analysis. The sentiment of reviews is binary, meaning the IMDB rating < 5 results in a sentiment score of 0, and rating >=7 have a sentiment score of 1. No individual movie has more than 30 reviews. The 25,000 review labeled training set does not include any of the same movies as the 25,000 review test set. In addition, there are another 50,000 IMDB reviews provided without any rating labels.

File descriptions

- labeledTrainData - The labeled training set. The file is tab-delimited and has a header row followed by 25,000 rows containing an id, sentiment, and text for each review.

- testData - The test set. The tab-delimited file has a header row followed by 25,000 rows containing an id and text for each review. Your task is to predict the sentiment for each one.

- unlabeledTrainData - An extra training set with no labels. The tab-delimited file has a header row followed by 50,000 rows containing an id and text for each review.

- sampleSubmission - A comma-delimited sample submission file in the correct format.

Data fields

- id - Unique ID of each review

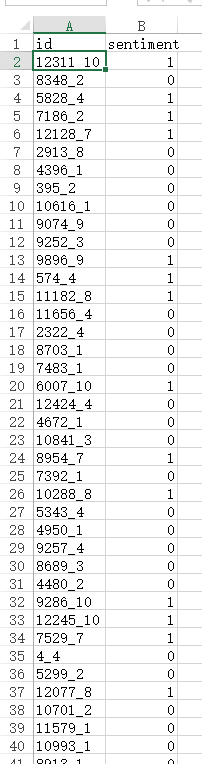

- sentiment - Sentiment of the review; 1 for positive reviews and 0 for negative reviews

- review - Text of the review

第一种方式:bag_of_words_model

import os

import re

import numpy as np

import pandas as pd

from bs4 import BeautifulSoup

import warnings #过滤掉sklearn的警告

warnings.filterwarnings(action='ignore',category=UserWarning,module='sklearn')

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import confusion_matrixpandas可以把csv转换成excel类似的,BeautifulSoup用于解析网页,sklearn用于抽取文本特征。

import nltk

#nltk.download()

from nltk.corpus import stopwords用pandas读入训练数据

datafile = os.path.join('E:/AI/NLP/NLTK/Python/3/', 'data', 'labeledTrainData.tsv')

df = pd.read_csv(datafile, sep='\t', escapechar='\\')

print('Number of reviews: {}'.format(len(df)))

df.head()Number of reviews: 25000

Out[19]:

| id | sentiment | review | |

|---|---|---|---|

| 0 | 5814_8 | 1 | With all this stuff going down at the moment w... |

| 1 | 2381_9 | 1 | "The Classic War of the Worlds" by Timothy Hin... |

简单看下第一条评论:

df['review'][0]"With all this stuff going down at the moment with MJ i've started listening to his music, watching the odd documentary here and there, watched The Wiz and watched Moonwalker again. Maybe i just want to get a certain insight into this guy who i thought was really cool in the eighties just to maybe make up my mind whether he is guilty or innocent. Moonwalker is part biography, part feature film which i remember going to see at the cinema when it was originally released. Some of it has subtle messages about MJ's feeling towards the press and also the obvious message of drugs are bad m'kay.

Visually impressive but of course this is all about Michael Jackson so unless you remotely like MJ in anyway then you are going to hate this and find it boring. Some may call MJ an egotist for consenting to the making of this movie BUT MJ and most of his fans would say that he made it for the fans which if true is really nice of him.

The actual feature film bit when it finally starts is only on for 20 minutes or so excluding the Smooth Criminal sequence and Joe Pesci is convincing as a psychopathic all powerful drug lord. Why he wants MJ dead so bad is beyond me. Because MJ overheard his plans? Nah, Joe Pesci's character ranted that he wanted people to know it is he who is supplying drugs etc so i dunno, maybe he just hates MJ's music.

Lots of cool things in this like MJ turning into a car and a robot and the whole Speed Demon sequence. Also, the director must have had the patience of a saint when it came to filming the kiddy Bad sequence as usually directors hate working with one kid let alone a whole bunch of them performing a complex dance scene.

Bottom line, this movie is for people who like MJ on one level or another (which i think is most people). If not, then stay away. It does try and give off a wholesome message and ironically MJ's bestest buddy in this movie is a girl! Michael Jackson is truly one of the most talented people ever to grace this planet but is he guilty? Well, with all the attention i've gave this subject....hmmm well i don't know because people can be different behind closed doors, i know this for a fact. He is either an extremely nice but stupid guy or one of the most sickest liars. I hope he is not the latter."对影评数据做预处理,大概有以下环节:

- 去掉html标签

- 移除标点

- 切分成词/token

- 去掉停用词

- 重组为新的句子

def display(text, title):

print(title)

print("\n----------我是分割线-------------\n")

print(text) 我们来继续显示第2个review的数据:

raw_example = df['review'][1]

display(raw_example, '原始数据')原始数据

----------我是分割线-------------

"The Classic War of the Worlds" by Timothy Hines is a very entertaining film that obviously goes to great effort and lengths to faithfully recreate H. G. Wells' classic book. Mr. Hines succeeds in doing so. I, and those who watched his film with me, appreciated the fact that it was not the standard, predictable Hollywood fare that comes out every year, e.g. the Spielberg version with Tom Cruise that had only the slightest resemblance to the book. Obviously, everyone looks for different things in a movie. Those who envision themselves as amateur "critics" look only to criticize everything they can. Others rate a movie on more important bases,like being entertained, which is why most people never agree with the "critics". We enjoyed the effort Mr. Hines put into being faithful to H.G. Wells' classic novel, and we found it to be very entertaining. This made it easy to overlook what the "critics" perceive to be its shortcomings.去掉HTML标签的数据example = BeautifulSoup(raw_example, 'html.parser').get_text()

display(example, '去掉HTML标签的数据')去掉HTML标签的数据

----------我是分割线-------------

"The Classic War of the Worlds" by Timothy Hines is a very entertaining film that obviously goes to great effort and lengths to faithfully recreate H. G. Wells' classic book. Mr. Hines succeeds in doing so. I, and those who watched his film with me, appreciated the fact that it was not the standard, predictable Hollywood fare that comes out every year, e.g. the Spielberg version with Tom Cruise that had only the slightest resemblance to the book. Obviously, everyone looks for different things in a movie. Those who envision themselves as amateur "critics" look only to criticize everything they can. Others rate a movie on more important bases,like being entertained, which is why most people never agree with the "critics". We enjoyed the effort Mr. Hines put into being faithful to H.G. Wells' classic novel, and we found it to be very entertaining. This made it easy to overlook what the "critics" perceive to be its shortcomings.

Press any key to continue . . .去掉标点的数据example_letters = re.sub(r'[^a-zA-Z]', ' ', example)

display(example_letters, '去掉标点的数据')去掉标点的数据

----------我是分割线-------------

The Classic War of the Worlds by Timothy Hines is a very entertaining film that obviously goes to great effort and lengths to faithfully recreate H G Wells classic book Mr Hines succeeds in doing so I and those who watched his film with me appreciated the fact that it was not the standard predictable Hollywood fare that comes out every year e g the Spielberg version with Tom Cruise that had only the slightest resemblance to the book Obviously everyone looks for different things in a movie Those who envision themselves as amateur critics look only to criticize everything they can Others rate a movie on more important bases like being entertained which is why most people never agree with the critics We enjoyed the effort Mr Hines put into being faithful to H G Wells classic novel and we found it to be very entertaining This made it easy to overlook what the critics perceive to be its shortcomings纯词列表数据words = example_letters.lower().split()#全转成小写并分隔

display(words, '纯词列表数据')纯词列表数据

----------我是分割线-------------

['the', 'classic', 'war', 'of', 'the', 'worlds', 'by', 'timothy', 'hines', 'is', 'a', 'very', 'entertaining', 'film', 'that', 'obviously', 'goes', 'to', 'great', 'effort', 'and', 'lengths', 'to', 'faithfully', 'recreate', 'h', 'g', 'wells', 'classic', 'book', 'mr', 'hines', 'succeeds', 'in', 'doing', 'so', 'i', 'and', 'those', 'who', 'watched', 'his', 'film', 'with', 'me', 'appreciated', 'the', 'fact', 'that', 'it', 'was', 'not', 'the', 'standard', 'predictable', 'hollywood', 'fare', 'that', 'comes', 'out', 'every', 'year', 'e', 'g', 'the', 'spielberg', 'version', 'with', 'tom', 'cruise', 'that', 'had', 'only', 'the', 'slightest', 'resemblance', 'to', 'the', 'book', 'obviously', 'everyone', 'looks', 'for', 'different', 'things', 'in', 'a', 'movie', 'those', 'who', 'envision', 'themselves', 'as', 'amateur', 'critics', 'look', 'only', 'to', 'criticize', 'everything', 'they', 'can', 'others', 'rate', 'a', 'movie', 'on', 'more', 'important', 'bases', 'like', 'being', 'entertained', 'which', 'is', 'why', 'most', 'people', 'never', 'agree', 'with', 'the', 'critics', 'we', 'enjoyed', 'the', 'effort', 'mr', 'hines', 'put', 'into', 'being', 'faithful', 'to', 'h', 'g', 'wells', 'classic', 'novel', 'and', 'we', 'found', 'it', 'to', 'be', 'very', 'entertaining', 'this', 'made', 'it', 'easy', 'to', 'overlook', 'what', 'the', 'critics', 'perceive', 'to', 'be', 'its', 'shortcomings']去掉停用词数据from nltk.corpus import stopwords

words_nostop = [w for w in words if w not in stopwords.words('english')]去掉停用词数据

----------我是分割线-------------

['classic', 'war', 'worlds', 'timothy', 'hines', 'entertaining', 'film', 'obviously', 'goes', 'great', 'effort', 'lengths', 'faithfully', 'recreate', 'h', 'g', 'wells', 'classic', 'book', 'mr', 'hines', 'succeeds', 'watched', 'film', 'appreciated', 'fact', 'standard', 'predictable', 'hollywood', 'fare', 'comes', 'every', 'year', 'e', 'g', 'spielberg', 'version', 'tom', 'cruise', 'slightest', 'resemblance', 'book', 'obviously', 'everyone', 'looks', 'different', 'things', 'movie', 'envision', 'amateur', 'critics', 'look', 'criticize', 'everything', 'others', 'rate', 'movie', 'important', 'bases', 'like', 'entertained', 'people', 'never', 'agree', 'critics', 'enjoyed', 'effort', 'mr', 'hines', 'put', 'faithful', 'h', 'g', 'wells', 'classic', 'novel', 'found', 'entertaining', 'made', 'easy', 'overlook', 'critics', 'perceive', 'shortcomings']前面的所有操作可以写成一个清洗函数:

eng_stopwords = set(stopwords.words('english'))

def clean_text(text):

text = BeautifulSoup(text, 'html.parser').get_text()

text = re.sub(r'[^a-zA-Z]', ' ', text)

words = text.lower().split()

words = [w for w in words if w not in eng_stopwords]

return ' '.join(words)

display(clean_text(raw_example),'清洗数据')清洗数据

----------我是分割线-------------

classic war worlds timothy hines entertaining film obviously goes great effort lengths faithfully recreate h g wells classic book mr hines succeeds watched film appreciated fact standard predictable hollywood fare comes every year e g spielberg version tom cruise slightest resemblance book obviously everyone looks different things movie envision amateur critics look criticize everything others rate movie important bases like entertained people never agree critics enjoyed effort mr hines put faithful h g wells classic novel found entertaining made easy overlook critics perceive shortcomings清洗数据添加到dataframe里

对每一行review进行清洗数据

df['clean_review'] = df.review.apply(clean_text)

print(df.head()) id ... clean_review

0 5814_8 ... stuff going moment mj started listening music ...

1 2381_9 ... classic war worlds timothy hines entertaining ...

2 7759_3 ... film starts manager nicholas bell giving welco...

3 3630_4 ... must assumed praised film greatest filmed oper...

4 9495_8 ... superbly trashy wondrously unpretentious explo...抽取bag of words特征(TF,用sklearn的CountVectorizer)

vectorizer = CountVectorizer(max_features = 5000) #对所有关键词的term frequency(tf)进行降序排序,只取前5000个做为关键词集

train_data_features = vectorizer.fit_transform(df.clean_review).toarray()#fit_transform转换成文档词频矩阵,toarray转成数组

print(train_data_features.shape)(25000, 5000)也就是5000行,每行25000个.

train_data_featuresarray([[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

...,

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0]], dtype=int64)训练分类器

forest = RandomForestClassifier(n_estimators=100)#随机森林分类

forest = forest.fit(train_data_features, df.sentiment)#开始数据训练在训练集上做个predict看看效果如何

print (confusion_matrix(df.sentiment, forest.predict(train_data_features)))array([[12500, 0],

[ 0, 12500]], dtype=int64)删除不用的占内容变量

del df

del train_data_features读取测试数据进行预测

datafile = os.path.join('..', 'data', 'testData.tsv')

df = pd.read_csv(datafile, sep='\t', escapechar='\\')

print('Number of reviews: {}'.format(len(df)))

df['clean_review'] = df.review.apply(clean_text)

df.head()

test_data_features = vectorizer.transform(df.clean_review).toarray()

test_data_features.shape

result = forest.predict(test_data_features)#预测结果

output = pd.DataFrame({'id':df.id, 'sentiment':result})

print(output.head())

output.to_csv(os.path.join('..', 'data', 'Bag_of_Words_model.csv'), index=False)

del df

del test_data_featuresNumber of reviews: 25000

Out[84]:

| id | review | clean_review | |

|---|---|---|---|

| 0 | 12311_10 | Naturally in a film who's main themes are of m... | naturally film main themes mortality nostalgia... |

| 1 | 8348_2 | This movie is a disaster within a disaster fil... | movie disaster within disaster film full great... |

| 2 | 5828_4 | All in all, this is a movie for kids. We saw i... | movie kids saw tonight child loved one point k... |

Bag_of_Words_model.csv如下:

第二种方式:Word2Vec

import os

import re

import numpy as np

import pandas as pd

import warnings

warnings.filterwarnings(action='ignore',category=UserWarning,module="gensim")

from bs4 import BeautifulSoup

from gensim.models.word2vec import Word2Vec

定义一个读取CSV的函数

def load_dataset(name, nrows=None):

datasets = {

'unlabeled_train': 'unlabeledTrainData.tsv',

'labeled_train': 'labeledTrainData.tsv',

'test': 'testData.tsv'

}

if name not in datasets:

raise ValueError(name)

data_file = os.path.join('..', 'data', datasets[name])

df = pd.read_csv(data_file, sep='\t', escapechar='\\', nrows=nrows)

print('Number of reviews: {}'.format(len(df)))

return df读入无标签数据

用于训练生成word2vec词向量

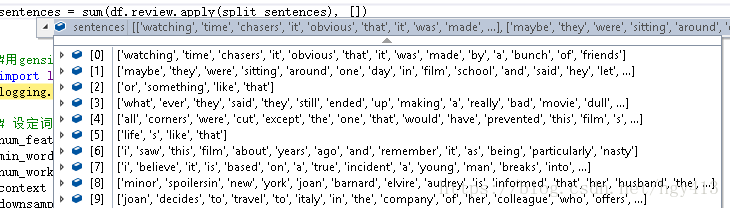

df = load_dataset('unlabeled_train')

print(df.head())Number of reviews: 50000

id review

0 9999_0 Watching Time Chasers, it obvious that it was ...

1 45057_0 I saw this film about 20 years ago and remembe...

2 15561_0 Minor Spoilers

In New York, Joan Ba...

3 7161_0 I went to see this film with a great deal of e...

4 43971_0 Yes, I agree with everyone on this site this m...和第一个ipython notebook一样做数据的预处理

稍稍有一点不一样的是,我们留了个候选,可以去除停用词,也可以不去除停用词

from nltk.corpus import stopwords

eng_stopwods = set(stopwords.words('english'))

def clean_text(text, remove_stopwords=False):

text = BeautifulSoup(text, 'html.parser').get_text()

text = re.sub(r'[^a-zA-Z]', ' ', text)

words = text.lower().split()

if remove_stopwords:

words = [w for w in words if w not in eng_stopwods]

return words

tokenizer = nltk.data.load('tokenizers/punkt/english.pickle')

def print_call_counts(f):

n = 0

def wrapped(*args, **kwargs):

nonlocal n

n += 1

if n % 1000 == 1:

print('method {} called {} times'.format(f.__name__, n))

return f(*args, **kwargs)

return wrapped

@print_call_counts#当解释器读到@的这样的修饰符之后,会先解析@后的内容,直接就把@下一行的函数或者类作为@后边的函数的参数,然后将返回值赋值给下一行修饰的函数对象。

def split_sentences(review):

raw_sentences = tokenizer.tokenizer(review.strip())#strip删除字符串中开头、结尾处,位于 rm删除序列的字符

sentences = [clean_text(s) for s in raw_sentences if s]

return sentences

sentences = sum(df.review.apply(split_sentences), [])

结果:

................

method split_sentences called 46001 times

method split_sentences called 47001 times

method split_sentences called 48001 times

method split_sentences called 49001 times

用gensim训练词嵌入模型

import logging

logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)

# 设定词向量训练的参数

num_features = 300 #300维, 建议300或500

min_word_count = 40

num_workers = 4 #训练时的线程数

context = 10 #上下文窗口大小

downsampling = 1e-3 # Downsample setting for frequent words

model_name = '{}features_{}minwords_{}context.model'.format(num_features, min_word_count,context)#要保存的文件名

print('Training model...')

model = Word2Vec(sentences, workers=num_features, size=num_features, min_count = min_word_count, window = context, sample=downsampling)

# If you don't plan to train the model any further, calling

# init_sims will make the model much more memory-efficient.

model.init_sims(replace=True)

# It can be helpful to create a meaningful model name and

# save the model for later use. You can load it later using Word2Vec.load()

model.save(os.path.join('..', 'data', model_name)).....

2018-08-16 17:10:55,051 : INFO : worker thread finished; awaiting finish of 0 more threads

2018-08-16 17:10:55,052 : INFO : EPOCH - 5 : training on 11877527 raw words (8394318 effective words) took 19.0s, 442045 effective words/s

2018-08-16 17:10:55,053 : INFO : training on a 59387635 raw words (41968004 effective words) took 95.8s, 438035 effective words/s

2018-08-16 17:14:34,696 : INFO : precomputing L2-norms of word weight vectors

2018-08-16 17:14:35,547 : INFO : saving Word2Vec object under ..\data\300features_40minwords_10context.model, separately None

2018-08-16 17:14:35,554 : INFO : not storing attribute vectors_norm

2018-08-16 17:14:35,562 : INFO : not storing attribute cum_table

2018-08-16 17:14:35,886 : INFO : saved ..\data\300features_40minwords_10context.model

看看训练的词向量结果如何

print(model.most_similar("man"))[('woman', 0.6256189346313477),

('lady', 0.5953349471092224),

('lad', 0.576863169670105),

('person', 0.5407935380935669),

('farmer', 0.5382746458053589),

('chap', 0.536788821220398),

('soldier', 0.5292650461196899),

('men', 0.5261573791503906),

('monk', 0.5237958431243896),

('guy', 0.5213091373443604)]

第二种方式 续:使用Word2Vec训练后的数据

训练后的数据文件名为300features_40minwords_10context.model,前面保存的.

import warnings

warnings.filterwarnings('ignore')

import os

import re

import numpy as np

import pandas as pd

from bs4 import BeautifulSoup

from nltk.corpus import stopwords

from gensim.models.word2vec import Word2Vec

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import confusion_matrix

from sklearn.cluster import KMeans

和之前的操作一致

def load_dataset(name, nrows=None):

datasets = {

'unlabeled_train': 'unlabeledTrainData.tsv',

'labeled_train': 'labeledTrainData.tsv',

'test': 'testData.tsv'

}

if name not in datasets:

raise ValueError(name)

data_file = os.path.join('..', 'data', datasets[name])

df = pd.read_csv(data_file, sep='\t', escapechar='\\', nrows=nrows)

print('Number of reviews: {}'.format(len(df)))

return df

eng_stopwords = set(stopwords.words('english'))

def clean_text(text, remove_stopwords=False):

text = BeautifulSoup(text, 'html.parser').get_text()

text = re.sub(r'[^a-zA-Z]', ' ', text)

words = text.lower().split()

if remove_stopwords:

words = [w for w in words if w not in eng_stopwords]

return words

读入之前训练好的Word2Vec模型

model_name = '300features_40minwords_10context.model'

model = Word2Vec.load(os.path.join('..', 'data', model_name))我们可以根据word2vec的结果去对影评文本进行编码

编码方式有一点粗暴,简单说来就是把这句话中的词的词向量做平均

df = load_dataset('labeled_train')

df.head()Number of reviews: 25000

Out[14]:

| id | sentiment | review | |

|---|---|---|---|

| 0 | 5814_8 | 1 | With all this stuff going down at the moment w... |

| 1 | 2381_9 | 1 | "The Classic War of the Worlds" by Timothy Hin... |

| 2 | 7759_3 | 0 | The film starts with a manager (Nicholas Bell)... |

def to_review_vector(review):

words = clean_text(review, remove_stopwords=True)

array = np.array([model[w] for w in words if w in model])

return pd.Series(array.mean(axis=0))

train_data_features = df.review.apply(to_review_vector)

print(train_data_features.head()) 0 1 2 3 4 ... 295 296 297 298 299

0 -0.002746 0.005741 0.004646 -0.001938 0.009835 ... -0.005903 0.010316 0.000723 -0.014974 -0.007718

1 -0.003350 -0.006660 0.000073 0.004966 0.001066 ... 0.014924 0.002365 0.012350 -0.006034 -0.025690

2 -0.016884 -0.006035 0.000061 0.003758 0.008695 ... 0.006264 0.002883 0.002217 -0.026501 -0.041674

3 -0.009798 -0.000712 0.006659 -0.017110 0.006017 ... 0.015451 0.011731 0.008902 -0.020935 -0.036668

4 -0.008019 -0.006775 0.009767 0.002874 0.014989 ... -0.000688 -0.000424 -0.003103 -0.031588 -0.019807

[5 rows x 300 columns]用随机森林构建分类器

forest = RandomForestClassifier(n_estimators = 100, random_state=42)

forest = forest.fit(train_data_features, df.sentiment)同样在训练集上试试,确保模型能正常work

confusion_matrix(df.sentiment, forest.predict(train_data_features))清理占用内容的变量

del df

del train_data_features预测测试集结果并上传kaggle

df = load_dataset('test')

df.head()

test_data_features = df.review.apply(to_review_vector)

print(test_data_features.head())

result = forest.predict(test_data_features)

output = pd.DataFrame({'id':df.id, 'sentiment':result})

output.to_csv(os.path.join('..', 'data', 'Word2Vec_model.csv'), index=False)

output.head()

del df

del test_data_features

del forestNumber of reviews: 25000

0 1 2 3 4 ... 295 296 297 298 299

0 0.003222 -0.002921 0.009352 -0.027743 0.018592 ... 0.011904 0.004627 0.015087 -0.016692 -0.018632

1 -0.013426 0.003515 0.002579 -0.022269 -0.009693 ... -0.008517 -0.005674 -0.007146 -0.026965 -0.019395

2 0.001031 -0.001867 0.021952 -0.033233 0.005209 ... -0.004877 0.008913 0.017697 -0.007476 -0.006233

3 -0.014347 0.002951 0.022032 -0.009660 0.005736 ... 0.003137 0.004633 0.020197 -0.016389 -0.033783

4 -0.000612 -0.006142 0.000142 -0.000970 0.011840 ... 0.007408 -0.011372 0.014652 -0.018350 -0.011623

[5 rows x 300 columns]

2.中文应用:chinese-sentiment-analysis

#我们依旧会用gensim去做word2vec的处理,会用sklearn当中的SVM进行建模

import warnings

warnings.filterwarnings("ignore")

from sklearn.model_selection import train_test_split

from gensim.models.word2vec import Word2Vec

import numpy as np

import pandas as pd

import jieba

from sklearn.externals import joblib

from sklearn.svm import SVC

import sys

#载入数据,做预处理(分词),切分训练集与测试集

def load_file_and_preprocessing():

neg = pd.read_excel("../data/neg.xls",header=None,index=None)

pos = pd.read_excel("../data/pos.xls",header=None,index=None)

cw = lambda x: list(jieba.cut(x))

pos['words'] = pos[0].apply(cw)

neg['words'] = neg[0].apply(cw)

#print (pos['words'])

#use 1 for positive sentiment, 0 for negative

y = np.concatenate((np.ones(len(pos)), np.zeros(len(neg))))#数组拼接,ones用来构造全一矩阵,zeros用来构造全零矩阵

x_train, x_test, y_train, y_test = train_test_split(np.concatenate((pos['words'], neg['words'])), y, test_size=0.2)#train_test_split函数用于将矩阵随机划分为训练子集和测试子集,并返回划分好的训练集测试集样本和训练集测试集标签。

np.save('../data/y_train.npy',y_train)

np.save('../data/y_test.npy',y_test)

return x_train,x_test

#对每个句子的所有词向量取均值,来生成一个句子的vector

def build_sentence_vector(text,size,imdb_w2v):

vec = np.zeros(size).reshape((1,size))

count = 0

for word in text:

try:

vec += imdb_w2v[word].reshape((1,size))#逐点求和

count += 1.

except KeyError:

continue

if count != 0:

vec /= count

return vec

#计算词向量

def get_train_vecs(x_train,x_test):

n_dim = 300

#初始化模型和词表

imdb_w2v = Word2Vec(size=n_dim, min_count=10)

imdb_w2v.build_vocab(x_train)

#在评论训练集上建模(可能会花费几分钟)

imdb_w2v.train(x_train,total_examples=imdb_w2v.corpus_count,epochs=imdb_w2v.epochs)

train_vecs = np.concatenate([build_sentence_vector(z, n_dim, imdb_w2v) for z in x_train])

np.save("../data/train_vecs.npy",train_vecs)

print (train_vecs.shape)

#在测试集上训练

imdb_w2v.train(x_test,total_examples=imdb_w2v.corpus_count,epochs=imdb_w2v.epochs)

imdb_w2v.save("../data/w2v_model.pkl")

#Build test tweet vectors then scale

test_vecs = np.concatenate([build_sentence_vector(z, n_dim,imdb_w2v) for z in x_test])

np.save("../data/test_vecs.npy",test_vecs)

print (test_vecs.shape)

def get_data():

train_vecs=np.load('../data/train_vecs.npy')

y_train=np.load('../data/y_train.npy')

test_vecs=np.load('../data/test_vecs.npy')

y_test=np.load('../data/y_test.npy')

return train_vecs,y_train,test_vecs,y_test

#训练svm模型

def svm_train(train_vecs, y_train, test_vecs, y_test):

clf=SVC(kernel='rbf',verbose=True)

clf.fit(train_vecs,y_train)

joblib.dump(clf, '../data/model.pkl')

print (clf.score(test_vecs, y_test))

#构建待预测句子的向量

def get_predict_vecs(words):

n_dim = 300

imdb_w2v = Word2Vec.load('../data/w2v_model.pkl')

train_vecs = build_sentence_vector(words, n_dim, imdb_w2v)

return train_vecs

#对单个句子进行情感判断

def svm_predict(string):

words=jieba.lcut(string)

words_vecs = get_predict_vecs(words)

clf = joblib.load('../data/model.pkl')

result = clf.predict(words_vecs)

if int(result[0])==1:

print (string,' positive')

else:

print (string,' negative')

#初始化训练,第一次调用时生成model.pkl,后面就可以不用跑了

#x_train,x_test = load_file_and_preprocessing()

#get_train_vecs(x_train,x_test)

#train_vecs,y_train,test_vecs,y_test = get_data()

#svm_train(train_vecs, y_train, test_vecs, y_test)

##对输入句子情感进行判断

string='电池充完了电连手机都打不开.简直烂的要命.真是金玉其外,败絮其中!连5号电池都不如'

svm_predict(string)

string='牛逼的手机,从3米高的地方摔下去都没坏,质量非常好'

svm_predict(string)

Loading model cost 0.742 seconds.

Prefix dict has been built succesfully.

电池充完了电连手机都打不开.简直烂的要命.真是金玉其外,败絮其中!连5号电池都不如 negative

牛逼的手机,从3米高的地方摔下去都没坏,质量非常好 positive