Datawhale_数据分析组队学习task6

数据聚合与分组运算

- GroupBy机制

- 对分组进行迭代

- 选取一列或列的子集

- 通过字典或Series进行分组

- 通过函数进行分组

- 根据索引级别分组

- 数据聚合

- 面向列的多函数应用

- apply:一般性的“拆分-应用-合并”

- 禁止分组键

- 分位数和桶分析

- 用特定于分组的值填充缺失值

- 透视表

GroupBy机制

import pandas as pd

import numpy as np

df = pd.DataFrame({'key1' : ['a', 'a', 'b', 'b', 'a'],'key2' : ['one', 'two', 'one', 'two','one'],

'data1' : np.random.randn(5),'data2' : np.random.randn(5)})

df

>>>

key1 key2 data1 data2

0 a one 1.612273 -1.761942

1 a two 0.422988 1.186852

2 b one 0.630012 0.317237

3 b two -0.096043 -1.286106

4 a one 1.170058 -0.112766

In [3]:

# 按key1进行分组,并计算data1列的平均值

grouped = df['data1'].groupby(df['key1'])

grouped

Out[3]:

In [5]:

grouped.mean()

Out[5]:

key1

a 1.068440

b 0.266985

Name: data1, dtype: float64

In [10]:

# 传入多个数组的列表

means = df['data1'].groupby([df['key1'],df['key2']]).mean()

means

Out[10]:

key1 key2

a one 1.391165

two 0.422988

b one 0.630012

two -0.096043

Name: data1, dtype: float64

In [11]:

means.unstack()

Out[11]:

key2 one two

key1

a 1.391165 0.422988

b 0.630012 -0.096043

In [12]:

states = np.array(['Ohio', 'California', 'California','Ohio', 'Ohio'])

years = np.array([2005, 2005, 2006, 2005, 2006])

df['data1'].groupby([states,years]).mean()

Out[12]:

California 2005 0.422988

2006 0.630012

Ohio 2005 0.758115

2006 1.170058

Name: data1, dtype: float64

In [14]:

# groupby的size方法可以返回含有分组大小的Series

df.groupby(['key1','key2']).size()

Out[14]:

key1 key2

a one 2

two 1

b one 1

two 1

dtype: int64

对分组进行迭代

for name,group in df.groupby('key1'):

print(name)

print(group)

>>>

a

key1 key2 data1 data2

0 a one 1.612273 -1.761942

1 a two 0.422988 1.186852

4 a one 1.170058 -0.112766

b

key1 key2 data1 data2

2 b one 0.630012 0.317237

3 b two -0.096043 -1.286106

In [16]:

# 多重键的情况,元组的第一个元素是由键值组成的元组

for (k1,k2),group in df.groupby(['key1','key2']):

print(k1,k2)

print(group)

a one

key1 key2 data1 data2

0 a one 1.612273 -1.761942

4 a one 1.170058 -0.112766

a two

key1 key2 data1 data2

1 a two 0.422988 1.186852

b one

key1 key2 data1 data2

2 b one 0.630012 0.317237

b two

key1 key2 data1 data2

3 b two -0.096043 -1.286106

In [17]:

pieces = dict(list(df.groupby('key1'))) # groupby默认在axis = 0上分组

pieces['b']

Out[17]:

key1 key2 data1 data2

2 b one 0.630012 0.317237

3 b two -0.096043 -1.286106

In [18]:

df.dtypes

Out[18]:

key1 object

key2 object

data1 float64

data2 float64

dtype: object

In [20]:

grouped = df.groupby(df.dtypes,axis = 1)

for dtype,group in grouped:

print(dtype)

print(group)

float64

data1 data2

0 1.612273 -1.761942

1 0.422988 1.186852

2 0.630012 0.317237

3 -0.096043 -1.286106

4 1.170058 -0.112766

object

key1 key2

0 a one

1 a two

2 b one

3 b two

4 a one

选取一列或列的子集

df.groupby('key1')['data1']

df.groupby('key1')[['data2']]

等价于

df['data1'].groupby(df['key1'])

df[['data2']].groupby(df['key1'])

df

>>>

key1 key2 data1 data2

0 a one 1.612273 -1.761942

1 a two 0.422988 1.186852

2 b one 0.630012 0.317237

3 b two -0.096043 -1.286106

4 a one 1.170058 -0.112766

In [22]:

# 计算data2列的平均值并以DataFrame形式的到结果

df.groupby(['key1','key2'])[['data2']].mean()

Out[22]:

data2

key1 key2

a one -0.937354

two 1.186852

b one 0.317237

two -1.286106

In [24]:

s_grouped = df.groupby(['key1','key2'])['data2']

s_grouped

Out[24]:

In [25]:

s_grouped.mean()

Out[25]:

key1 key2

a one -0.937354

two 1.186852

b one 0.317237

two -1.286106

Name: data2, dtype: float64

通过字典或Series进行分组

people = pd.DataFrame(np.random.randn(5, 5),

columns=['a', 'b', 'c', 'd', 'e'],

index=['Joe', 'Steve', 'Wes', 'Jim','Travis'])

people

>>>

a b c d e

Joe 0.003533 -1.037063 0.955879 -0.782302 1.168097

Steve -0.684024 1.088523 -0.885087 0.388805 1.128606

Wes 0.255142 -1.447020 0.027178 0.038802 0.028278

Jim 0.755857 1.357942 -0.719431 0.035387 0.621884

Travis -0.599465 0.702602 0.076018 0.115762 -1.593297

In [27]:

people.iloc[2:3,[1,2]] = np.nan

people

Out[27]:

a b c d e

Joe 0.003533 -1.037063 0.955879 -0.782302 1.168097

Steve -0.684024 1.088523 -0.885087 0.388805 1.128606

Wes 0.255142 NaN NaN 0.038802 0.028278

Jim 0.755857 1.357942 -0.719431 0.035387 0.621884

Travis -0.599465 0.702602 0.076018 0.115762 -1.593297

In [30]:

mapping = {'a': 'red', 'b': 'red', 'c': 'blue','d': 'blue', 'e': 'red', 'f' : 'orange'}

by_column = people.groupby(mapping,axis = 1)

by_column.sum()

Out[30]:

blue red

Joe 0.173578 0.134567

Steve -0.496283 1.533104

Wes 0.038802 0.283420

Jim -0.684044 2.735684

Travis 0.191780 -1.490160

In [31]:

map_series = pd.Series(mapping)

map_series

Out[31]:

a red

b red

c blue

d blue

e red

f orange

dtype: object

In [33]:

people.groupby(map_series,axis = 1).count()

Out[33]:

blue red

Joe 2 3

Steve 2 3

Wes 1 2

Jim 2 3

Travis 2 3

通过函数进行分组

# 计算一个字符串长度的数组

people.groupby(len).sum()

Out[34]:

a b c d e

3 1.014532 0.320880 0.236449 -0.708113 1.818260

5 -0.684024 1.088523 -0.885087 0.388805 1.128606

6 -0.599465 0.702602 0.076018 0.115762 -1.593297

In [35]:

key_list = ['one', 'one', 'one', 'two', 'two']

people.groupby([len,key_list]).min()

Out[35]:

a b c d e

3 one 0.003533 -1.037063 0.955879 -0.782302 0.028278

two 0.755857 1.357942 -0.719431 0.035387 0.621884

5 one -0.684024 1.088523 -0.885087 0.388805 1.128606

6 two -0.599465 0.702602 0.076018 0.115762 -1.593297

根据索引级别分组

columns = pd.MultiIndex.from_arrays([['US', 'US', 'US','JP', 'JP'],[1, 3, 5, 1, 3]],names=['cty', 'tenor'])

hier_df = pd.DataFrame(np.random.randn(4, 5),columns=columns)

hier_df

>>>

cty US JP

tenor 1 3 5 1 3

0 -1.170114 -1.302459 0.227997 0.829014 0.411373

1 -1.146333 -0.495806 0.416194 -2.010128 1.923935

2 -0.497282 -0.528917 -1.807007 0.355747 0.243122

3 -0.449244 0.357974 2.179747 -0.098429 -0.989450

In [39]:

# level关键字传递级别序号或名字

hier_df.groupby(level = 'cty',axis = 1).count()

Out[39]:

cty JP US

0 2 3

1 2 3

2 2 3

3 2 3

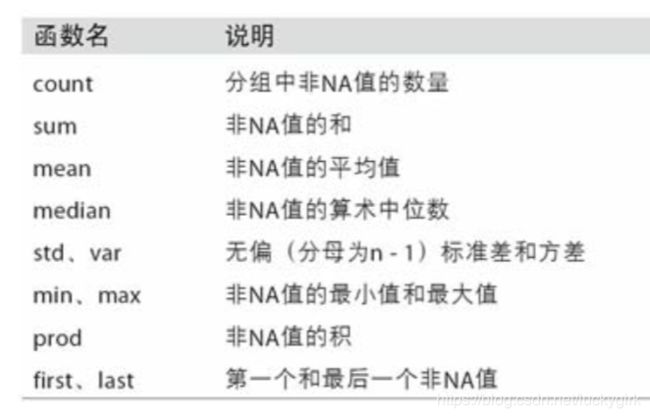

数据聚合

df

>>>

key1 key2 data1 data2

0 a one 1.612273 -1.761942

1 a two 0.422988 1.186852

2 b one 0.630012 0.317237

3 b two -0.096043 -1.286106

4 a one 1.170058 -0.112766

In [42]:

# quantile计算样本分位数

grouped = df.groupby('key1')

grouped['data1'].quantile(0.9)

Out[42]:

key1

a 1.523830

b 0.557406

Name: data1, dtype: float64

In [44]:

# aggregate或agg方法使用自己的聚合函数

def peak_to_peak(arr):

return arr.max() - arr.min()

grouped.agg(peak_to_peak)

Out[44]:

data1 data2

key1

a 1.189284 2.948793

b 0.726054 1.603343

In [45]:

grouped.describe()

Out[45]:

data1 data2

count mean std min 25% 50% 75% max count mean std min 25% 50% 75% max

key1

a 3.0 1.068440 0.601119 0.422988 0.796523 1.170058 1.391165 1.612273 3.0 -0.229286 1.477846 -1.761942 -0.937354 -0.112766 0.537043 1.186852

b 2.0 0.266985 0.513398 -0.096043 0.085471 0.266985 0.448498 0.630012 2.0 -0.484434 1.133734 -1.286106 -0.885270 -0.484434 -0.083599 0.317237

面向列的多函数应用

tips = pd.read_csv(r'/Users/faye/Desktop/examples/tips.csv')

tips['tip_pct'] = tips['tip']/ tips['total_bill']

tips[:6]

>>>

total_bill tip smoker day time size tip_pct

0 16.99 1.01 No Sun Dinner 2 0.059447

1 10.34 1.66 No Sun Dinner 3 0.160542

2 21.01 3.50 No Sun Dinner 3 0.166587

3 23.68 3.31 No Sun Dinner 2 0.139780

4 24.59 3.61 No Sun Dinner 4 0.146808

5 25.29 4.71 No Sun Dinner 4 0.186240

In [49]:

grouped = tips.groupby(['day','smoker'])

grouped_pct = grouped['tip_pct']

grouped_pct.agg('mean')

Out[49]:

day smoker

Fri No 0.151650

Yes 0.174783

Sat No 0.158048

Yes 0.147906

Sun No 0.160113

Yes 0.187250

Thur No 0.160298

Yes 0.163863

Name: tip_pct, dtype: float64

In [50]:

# 传入一组函数或函数名

grouped_pct.agg(['mean','std',peak_to_peak])

Out[50]:

mean std peak_to_peak

day smoker

Fri No 0.151650 0.028123 0.067349

Yes 0.174783 0.051293 0.159925

Sat No 0.158048 0.039767 0.235193

Yes 0.147906 0.061375 0.290095

Sun No 0.160113 0.042347 0.193226

Yes 0.187250 0.154134 0.644685

Thur No 0.160298 0.038774 0.193350

Yes 0.163863 0.039389 0.151240

In [51]:

grouped_pct.agg([('foo', 'mean'), ('bar', np.std)])

Out[51]:

foo bar

day smoker

Fri No 0.151650 0.028123

Yes 0.174783 0.051293

Sat No 0.158048 0.039767

Yes 0.147906 0.061375

Sun No 0.160113 0.042347

Yes 0.187250 0.154134

Thur No 0.160298 0.038774

Yes 0.163863 0.039389

In [52]:

functions = ['count', 'mean', 'max']

result = grouped['tip_pct', 'total_bill'].agg(functions)

result

Out[52]:

tip_pct total_bill

count mean max count mean max

day smoker

Fri No 4 0.151650 0.187735 4 18.420000 22.75

Yes 15 0.174783 0.263480 15 16.813333 40.17

Sat No 45 0.158048 0.291990 45 19.661778 48.33

Yes 42 0.147906 0.325733 42 21.276667 50.81

Sun No 57 0.160113 0.252672 57 20.506667 48.17

Yes 19 0.187250 0.710345 19 24.120000 45.35

Thur No 45 0.160298 0.266312 45 17.113111 41.19

Yes 17 0.163863 0.241255 17 19.190588 43.11

In [53]:

result['tip_pct']

Out[53]:

count mean max

day smoker

Fri No 4 0.151650 0.187735

Yes 15 0.174783 0.263480

Sat No 45 0.158048 0.291990

Yes 42 0.147906 0.325733

Sun No 57 0.160113 0.252672

Yes 19 0.187250 0.710345

Thur No 45 0.160298 0.266312

Yes 17 0.163863 0.241255

In [54]:

ftuples = [('Durchschnitt', 'mean'),('Abweichung', np.var)]

grouped['tip_pct', 'total_bill'].agg(ftuples)

Out[54]:

tip_pct total_bill

Durchschnitt Abweichung Durchschnitt Abweichung

day smoker

Fri No 0.151650 0.000791 18.420000 25.596333

Yes 0.174783 0.002631 16.813333 82.562438

Sat No 0.158048 0.001581 19.661778 79.908965

Yes 0.147906 0.003767 21.276667 101.387535

Sun No 0.160113 0.001793 20.506667 66.099980

Yes 0.187250 0.023757 24.120000 109.046044

Thur No 0.160298 0.001503 17.113111 59.625081

Yes 0.163863 0.001551 19.190588 69.808518

In [55]:

grouped.agg({'tip' : np.max, 'size' : 'sum'})

Out[55]:

tip size

day smoker

Fri No 3.50 9

Yes 4.73 31

Sat No 9.00 115

Yes 10.00 104

Sun No 6.00 167

Yes 6.50 49

Thur No 6.70 112

Yes 5.00 40

In [56]:

grouped.agg({'tip_pct' : ['min', 'max', 'mean', 'std'],'size' : 'sum'})

Out[56]:

tip_pct size

min max mean std sum

day smoker

Fri No 0.120385 0.187735 0.151650 0.028123 9

Yes 0.103555 0.263480 0.174783 0.051293 31

Sat No 0.056797 0.291990 0.158048 0.039767 115

Yes 0.035638 0.325733 0.147906 0.061375 104

Sun No 0.059447 0.252672 0.160113 0.042347 167

Yes 0.065660 0.710345 0.187250 0.154134 49

Thur No 0.072961 0.266312 0.160298 0.038774 112

Yes 0.090014 0.241255 0.163863 0.039389 40

In [57]:

# 以“没有行索引”的形式返回聚合数据

tips.groupby(['day', 'smoker'], as_index=False).mean()

Out[57]:

day smoker total_bill tip size tip_pct

0 Fri No 18.420000 2.812500 2.250000 0.151650

1 Fri Yes 16.813333 2.714000 2.066667 0.174783

2 Sat No 19.661778 3.102889 2.555556 0.158048

3 Sat Yes 21.276667 2.875476 2.476190 0.147906

4 Sun No 20.506667 3.167895 2.929825 0.160113

5 Sun Yes 24.120000 3.516842 2.578947 0.187250

6 Thur No 17.113111 2.673778 2.488889 0.160298

7 Thur Yes 19.190588 3.030000 2.352941 0.163863

apply:一般性的“拆分-应用-合并”

def top(df, n=5, column='tip_pct'):

return df.sort_values(by=column)[-n:]

top(tips,n = 6)

>>>

total_bill tip smoker day time size tip_pct

109 14.31 4.00 Yes Sat Dinner 2 0.279525

183 23.17 6.50 Yes Sun Dinner 4 0.280535

232 11.61 3.39 No Sat Dinner 2 0.291990

67 3.07 1.00 Yes Sat Dinner 1 0.325733

178 9.60 4.00 Yes Sun Dinner 2 0.416667

172 7.25 5.15 Yes Sun Dinner 2 0.710345

In [61]:

tips.groupby('smoker').apply(top)

Out[61]:

total_bill tip smoker day time size tip_pct

smoker

No 88 24.71 5.85 No Thur Lunch 2 0.236746

185 20.69 5.00 No Sun Dinner 5 0.241663

51 10.29 2.60 No Sun Dinner 2 0.252672

149 7.51 2.00 No Thur Lunch 2 0.266312

232 11.61 3.39 No Sat Dinner 2 0.291990

Yes 109 14.31 4.00 Yes Sat Dinner 2 0.279525

183 23.17 6.50 Yes Sun Dinner 4 0.280535

67 3.07 1.00 Yes Sat Dinner 1 0.325733

178 9.60 4.00 Yes Sun Dinner 2 0.416667

172 7.25 5.15 Yes Sun Dinner 2 0.710345

In [62]:

tips.groupby(['smoker', 'day']).apply(top, n=1,column='total_bill')

Out[62]:

total_bill tip smoker day time size tip_pct

smoker day

No Fri 94 22.75 3.25 No Fri Dinner 2 0.142857

Sat 212 48.33 9.00 No Sat Dinner 4 0.186220

Sun 156 48.17 5.00 No Sun Dinner 6 0.103799

Thur 142 41.19 5.00 No Thur Lunch 5 0.121389

Yes Fri 95 40.17 4.73 Yes Fri Dinner 4 0.117750

Sat 170 50.81 10.00 Yes Sat Dinner 3 0.196812

Sun 182 45.35 3.50 Yes Sun Dinner 3 0.077178

Thur 197 43.11 5.00 Yes Thur Lunch 4 0.115982

In [63]:

result = tips.groupby('smoker')['tip_pct'].describe()

result

Out[63]:

count mean std min 25% 50% 75% max

smoker

No 151.0 0.159328 0.039910 0.056797 0.136906 0.155625 0.185014 0.291990

Yes 93.0 0.163196 0.085119 0.035638 0.106771 0.153846 0.195059 0.710345

In [64]:

result.unstack('smoker')

Out[64]:

smoker

count No 151.000000

Yes 93.000000

mean No 0.159328

Yes 0.163196

std No 0.039910

Yes 0.085119

min No 0.056797

Yes 0.035638

25% No 0.136906

Yes 0.106771

50% No 0.155625

Yes 0.153846

75% No 0.185014

Yes 0.195059

max No 0.291990

Yes 0.710345

dtype: float64

禁止分组键

tips.groupby('smoker',group_keys = False).apply(top)

Out[66]:

total_bill tip smoker day time size tip_pct

88 24.71 5.85 No Thur Lunch 2 0.236746

185 20.69 5.00 No Sun Dinner 5 0.241663

51 10.29 2.60 No Sun Dinner 2 0.252672

149 7.51 2.00 No Thur Lunch 2 0.266312

232 11.61 3.39 No Sat Dinner 2 0.291990

109 14.31 4.00 Yes Sat Dinner 2 0.279525

183 23.17 6.50 Yes Sun Dinner 4 0.280535

67 3.07 1.00 Yes Sat Dinner 1 0.325733

178 9.60 4.00 Yes Sun Dinner 2 0.416667

172 7.25 5.15 Yes Sun Dinner 2 0.710345

分位数和桶分析

frame = pd.DataFrame({'data1':np.random.randn(1000),'data2':np.random.randn(1000)})

quartiles = pd.cut(frame.data1,4)

quartiles[:10]

>>>

0 (-1.615, -0.000613]

1 (-1.615, -0.000613]

2 (-0.000613, 1.614]

3 (-1.615, -0.000613]

4 (-0.000613, 1.614]

5 (-1.615, -0.000613]

6 (-1.615, -0.000613]

7 (1.614, 3.228]

8 (-1.615, -0.000613]

9 (-1.615, -0.000613]

Name: data1, dtype: category

Categories (4, interval[float64]): [(-3.235, -1.615] < (-1.615, -0.000613] < (-0.000613, 1.614] < (1.614, 3.228]]

In [68]:

def get_stats(group):

return {'min': group.min(), 'max': group.max(),'count': group.count(), 'mean': group.mean()}

grouped = frame.data2.groupby(quartiles)

grouped.apply(get_stats).unstack()

Out[68]:

count max mean min

data1

(-3.235, -1.615] 43.0 2.142106 0.070060 -1.430279

(-1.615, -0.000613] 407.0 2.906171 0.135426 -2.743878

(-0.000613, 1.614] 499.0 2.784244 0.081631 -3.205625

(1.614, 3.228] 51.0 2.499800 0.054287 -2.490635

In [69]:

grouping = pd.qcut(frame.data1, 10, labels=False)

grouped = frame.data2.groupby(grouping)

grouped.apply(get_stats).unstack()

Out[69]:

count max mean min

data1

0 100.0 2.339063 0.141428 -2.244534

1 100.0 2.711519 0.153914 -2.057574

2 100.0 2.906171 0.171634 -2.743878

3 100.0 2.073188 0.149568 -2.395140

4 100.0 2.784675 -0.022247 -2.388540

5 100.0 2.531319 0.201157 -2.391423

6 100.0 2.784244 0.141187 -2.595624

7 100.0 2.622564 -0.049855 -3.205625

8 100.0 2.366410 0.072830 -2.209372

9 100.0 2.501468 0.056716 -2.870079

用特定于分组的值填充缺失值

s = pd.Series(np.random.randn(6))

s[::2] = np.nan

s

>>>

0 NaN

1 0.270764

2 NaN

3 -0.465053

4 NaN

5 1.378137

dtype: float64

In [71]:

s.fillna(s.mean())

Out[71]:

0 0.394616

1 0.270764

2 0.394616

3 -0.465053

4 0.394616

5 1.378137

dtype: float64

In [72]:

# 对不同的分组填充不同的值

states = ['Ohio', 'New York', 'Vermont', 'Florida', 'Oregon', 'Nevada', 'California', 'Idaho']

group_key = ['East']*4 + ['West']*4

data = pd.Series(np.random.randn(8),index = states)

data

Out[72]:

Ohio -0.688042

New York -1.234445

Vermont -0.008028

Florida 0.670283

Oregon 0.899524

Nevada 0.178562

California -0.083762

Idaho -0.395532

dtype: float64

In [73]:

# 将一些值设为缺失值

data[['Vermont', 'Nevada', 'Idaho']] = np.nan

data

Out[73]:

Ohio -0.688042

New York -1.234445

Vermont NaN

Florida 0.670283

Oregon 0.899524

Nevada NaN

California -0.083762

Idaho NaN

dtype: float64

In [74]:

data.groupby(group_key).mean()

Out[74]:

East -0.417402

West 0.407881

dtype: float64

In [75]:

# 分组平均值填充缺失值

fill_mean = lambda g: g.fillna(g.mean())

data.groupby(group_key).apply(fill_mean)

Out[75]:

Ohio -0.688042

New York -1.234445

Vermont -0.417402

Florida 0.670283

Oregon 0.899524

Nevada 0.407881

California -0.083762

Idaho 0.407881

dtype: float64

In [76]:

fill_values = {'East': 0.5, 'West': -1}

fill_func = lambda g: g.fillna(fill_values[g.name])

data.groupby(group_key).apply(fill_func)

Out[76]:

Ohio -0.688042

New York -1.234445

Vermont 0.500000

Florida 0.670283

Oregon 0.899524

Nevada -1.000000

California -0.083762

Idaho -1.000000

dtype: float64

透视表

tips.pivot_table(index=['day', 'smoker'])

size tip tip_pct total_bill

day smoker

Fri No 2.250000 2.812500 0.151650 18.420000

Yes 2.066667 2.714000 0.174783 16.813333

Sat No 2.555556 3.102889 0.158048 19.661778

Yes 2.476190 2.875476 0.147906 21.276667

Sun No 2.929825 3.167895 0.160113 20.506667

Yes 2.578947 3.516842 0.187250 24.120000

Thur No 2.488889 2.673778 0.160298 17.113111

Yes 2.352941 3.030000 0.163863 19.190588

In [79]:

tips.pivot_table(['tip_pct', 'size'], index=['time','day'], columns='smoker')

Out[79]:

size tip_pct

smoker No Yes No Yes

time day

Dinner Fri 2.000000 2.222222 0.139622 0.165347

Sat 2.555556 2.476190 0.158048 0.147906

Sun 2.929825 2.578947 0.160113 0.187250

Thur 2.000000 NaN 0.159744 NaN

Lunch Fri 3.000000 1.833333 0.187735 0.188937

Thur 2.500000 2.352941 0.160311 0.163863

In [80]:

tips.pivot_table(['tip_pct', 'size'], index=['time','day'],columns='smoker', margins=True)

Out[80]:

size tip_pct

smoker No Yes All No Yes All

time day

Dinner Fri 2.000000 2.222222 2.166667 0.139622 0.165347 0.158916

Sat 2.555556 2.476190 2.517241 0.158048 0.147906 0.153152

Sun 2.929825 2.578947 2.842105 0.160113 0.187250 0.166897

Thur 2.000000 NaN 2.000000 0.159744 NaN 0.159744

Lunch Fri 3.000000 1.833333 2.000000 0.187735 0.188937 0.188765

Thur 2.500000 2.352941 2.459016 0.160311 0.163863 0.161301

All 2.668874 2.408602 2.569672 0.159328 0.163196 0.160803

In [81]:

tips.pivot_table(['tip_pct', 'size'], index=['time','day'],columns='smoker', margins=True)

Out[81]:

size tip_pct

smoker No Yes All No Yes All

time day

Dinner Fri 2.000000 2.222222 2.166667 0.139622 0.165347 0.158916

Sat 2.555556 2.476190 2.517241 0.158048 0.147906 0.153152

Sun 2.929825 2.578947 2.842105 0.160113 0.187250 0.166897

Thur 2.000000 NaN 2.000000 0.159744 NaN 0.159744

Lunch Fri 3.000000 1.833333 2.000000 0.187735 0.188937 0.188765

Thur 2.500000 2.352941 2.459016 0.160311 0.163863 0.161301

All 2.668874 2.408602 2.569672 0.159328 0.163196 0.160803

In [82]:

tips.pivot_table('tip_pct', index=['time', 'smoker'],columns='day',aggfunc=len, margins=True)

Out[82]:

day Fri Sat Sun Thur All

time smoker

Dinner No 3.0 45.0 57.0 1.0 106.0

Yes 9.0 42.0 19.0 NaN 70.0

Lunch No 1.0 NaN NaN 44.0 45.0

Yes 6.0 NaN NaN 17.0 23.0

All 19.0 87.0 76.0 62.0 244.0

In [83]:

tips.pivot_table('tip_pct', index=['time', 'size','smoker'],columns='day', aggfunc='mean',fill_value=0)

Out[83]:

day Fri Sat Sun Thur

time size smoker

Dinner 1 No 0.000000 0.137931 0.000000 0.000000

Yes 0.000000 0.325733 0.000000 0.000000

2 No 0.139622 0.162705 0.168859 0.159744

Yes 0.171297 0.148668 0.207893 0.000000

3 No 0.000000 0.154661 0.152663 0.000000

Yes 0.000000 0.144995 0.152660 0.000000

4 No 0.000000 0.150096 0.148143 0.000000

Yes 0.117750 0.124515 0.193370 0.000000

5 No 0.000000 0.000000 0.206928 0.000000

Yes 0.000000 0.106572 0.065660 0.000000

6 No 0.000000 0.000000 0.103799 0.000000

Lunch 1 No 0.000000 0.000000 0.000000 0.181728

Yes 0.223776 0.000000 0.000000 0.000000

2 No 0.000000 0.000000 0.000000 0.166005

Yes 0.181969 0.000000 0.000000 0.158843

3 No 0.187735 0.000000 0.000000 0.084246

Yes 0.000000 0.000000 0.000000 0.204952

4 No 0.000000 0.000000 0.000000 0.138919

Yes 0.000000 0.000000 0.000000 0.155410

5 No 0.000000 0.000000 0.000000 0.121389

6 No 0.000000 0.000000 0.000000 0.173706