Web Crawling: Multithreaded Scraping of Zhaopin Job Listings

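Preface:

This article crawls the results of a Zhaopin (智联招聘) search for big data positions and saves the information to a local database.

The HttpClientUtils utility class and the Dao layer used in this example are both described in the previous article: https://blog.csdn.net/qq_15076569/article/details/83015273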

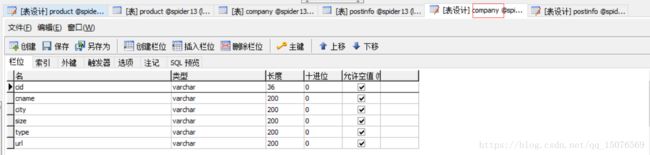

1) Create the database tables locally: company and postinfo

2) The pom.xml file used in this example

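    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <parent>
            <artifactId>spiderParent</artifactId>
            <groupId>com.xucj</groupId>
            <version>1.0-SNAPSHOT</version>
        </parent>
        <modelVersion>4.0.0</modelVersion>
        <artifactId>spiderDay02</artifactId>

        <dependencies>
            <dependency>
                <groupId>org.apache.httpcomponents</groupId>
                <artifactId>httpclient</artifactId>
                <version>4.5.3</version>
            </dependency>
            <dependency>
                <groupId>org.jsoup</groupId>
                <artifactId>jsoup</artifactId>
                <version>1.7.2</version>
            </dependency>
            <dependency>
                <groupId>mysql</groupId>
                <artifactId>mysql-connector-java</artifactId>
                <version>5.1.34</version>
            </dependency>
            <dependency>
                <groupId>com.google.code.gson</groupId>
                <artifactId>gson</artifactId>
                <version>2.8.0</version>
            </dependency>
            <dependency>
                <groupId>org.springframework</groupId>
                <artifactId>spring-jdbc</artifactId>
                <version>4.0.6.RELEASE</version>
            </dependency>
            <dependency>
                <groupId>c3p0</groupId>
                <artifactId>c3p0</artifactId>
                <version>0.9.1.2</version>
            </dependency>
            <dependency>
                <groupId>org.projectlombok</groupId>
                <artifactId>lombok</artifactId>
                <version>1.16.18</version>
            </dependency>
            <dependency>
                <groupId>org.apache.commons</groupId>
                <artifactId>commons-lang3</artifactId>
                <version>3.3.2</version>
            </dependency>
        </dependencies>

        <build>
            <plugins>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-compiler-plugin</artifactId>
                    <version>3.1</version>
                    <configuration>
                        <source>1.8</source>
                        <target>1.8</target>
                        <encoding>utf-8</encoding>
                    </configuration>
                </plugin>
                <plugin>
                    <artifactId>maven-assembly-plugin</artifactId>
                    <configuration>
                        <archive>
                            <manifest>
                                <mainClass>com.xucj.index.IndexJdSpider</mainClass>
                            </manifest>
                        </archive>
                        <descriptorRefs>
                            <descriptorRef>jar-with-dependencies</descriptorRef>
                        </descriptorRefs>
                    </configuration>
                </plugin>
            </plugins>
        </build>
    </project>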

3) The Company and PostInfo entity classes

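    import java.io.Serializable;

    import lombok.AllArgsConstructor;
    import lombok.Data;
    import lombok.NoArgsConstructor;
    import lombok.experimental.Accessors;

    /**
     * Company entity.
     */
    @SuppressWarnings("serial")
    @AllArgsConstructor
    @NoArgsConstructor
    @Data
    @Accessors(chain = true)
    public class Company implements Serializable {
        private String cid;   // company ID
        private String cname; // company name
        private String city;  // city where the company is located
        private String size;  // number of employees
        private String type;  // company type
        private String url;   // company website
    }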

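    import java.io.Serializable;
    import java.util.Date;

    import lombok.AllArgsConstructor;
    import lombok.Data;
    import lombok.NoArgsConstructor;
    import lombok.experimental.Accessors;

    /**
     * Job posting entity.
     */
    @SuppressWarnings("serial")
    @AllArgsConstructor
    @NoArgsConstructor
    @Data
    @Accessors(chain = true)
    public class PostInfo implements Serializable {
        private String pid;          // posting ID
        private String jobName;      // job title
        private String salary;       // salary
        private String postAddres;   // work location
        private String workingExp;   // years of experience required
        private String eduLevel;     // education level required
        private String emplType;     // employment type
        private String peopleNum;    // number of openings
        private String welfare;      // benefits
        private String positionInfo; // job description
        private String cid;          // ID of the company that published the posting
        private String positionURL;  // URL of the posting
        private Date updateDate;     // time the posting was last updated
        private Date createDate;     // time the posting was created
        private Date endDate;        // time the posting closes
        private String introduce;    // company profile
    }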
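
Both entities carry `@Accessors(chain = true)`, which makes the Lombok-generated setters return `this` instead of `void`, so an object can be populated in a single expression. The following is a hand-written approximation of what Lombok generates for two of the Company fields. The class name `CompanySketch` is hypothetical and exists only for this illustration.

```java
// Hand-written approximation of the accessors that Lombok's @Data together with
// @Accessors(chain = true) would generate. CompanySketch is a hypothetical
// stand-in for the Company entity, reduced to two fields.
class CompanySketch {
    private String cid;
    private String cname;

    public String getCid() {
        return cid;
    }

    public String getCname() {
        return cname;
    }

    // With chain = true each setter returns this rather than void.
    public CompanySketch setCid(String cid) {
        this.cid = cid;
        return this;
    }

    public CompanySketch setCname(String cname) {
        this.cname = cname;
        return this;
    }

    public static void main(String[] args) {
        CompanySketch company = new CompanySketch().setCid("C001").setCname("Example Co.");
        System.out.println(company.getCid() + " " + company.getCname());
    }
}
```

The crawler further down calls the setters one statement at a time, which works with chained setters as well; the return value is simply ignored.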

4) The crawler class

    import com.google.gson.*;
    import com.xucj.dao.ProductDao;
    import com.xucj.entity.Company;
    import com.xucj.entity.PostInfo;
    import com.xucj.utils.HttpClientUtils;
    import org.apache.commons.lang3.ArrayUtils;
    import org.apache.commons.lang3.StringUtils;
    import org.jsoup.Jsoup;
    import org.jsoup.nodes.Document;
    import org.jsoup.select.Elements;

    import java.io.IOException;
    import java.text.ParseException;
    import java.text.SimpleDateFormat;
    import java.util.ArrayList;
    import java.util.List;
    import java.util.Map;
    import java.util.UUID;
    import java.util.concurrent.ArrayBlockingQueue;
    import java.util.concurrent.BlockingQueue;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

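    /**
     * Zhaopin crawler (big data positions).
     */
    public class AdvertiseForSpider {

        // Note: SimpleDateFormat is not thread-safe, yet this instance is shared by all worker threads.
        private static SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
        private static ProductDao productDao = new ProductDao();
        private static int numFound;

        // Blocking queue that hands search results from the page parser to the worker threads
        private static BlockingQueue<Map<String, Object>> blockingQueue = new ArrayBlockingQueue<>(1000);

        // Thread pool for the worker threads
        private static ExecutorService executorService = Executors.newFixedThreadPool(35);

        public static void main(String[] args) throws IOException, ParseException, InterruptedException {
            watch();      // start the queue monitor
            manyThread(); // start the consumer threads
            manyPage();   // fetch the result pages and fill the queue
        }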

        /**
         * Prints the current queue size once per second.
         */
        public static void watch() {
            new Thread(new Runnable() {
                @Override
                public void run() {
                    while (true) {
                        try {
                            Thread.sleep(1000);
                            System.out.println("Current queue size: " + blockingQueue.size());
                        } catch (InterruptedException e) {
                            e.printStackTrace();
                        }
                    }
                }
            }).start();
        }
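
The monitor thread in watch() is a regular (non-daemon) thread running an infinite loop, so it keeps the JVM alive even after all work is done. One possible alternative, sketched here under the assumption that a periodic report is all that is needed, is a ScheduledExecutorService backed by a daemon thread. The names `QueueMonitor` and `start` are hypothetical and not part of the original code.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.IntConsumer;

// Hypothetical helper: periodic queue-size reporting on a daemon thread.
class QueueMonitor {

    /**
     * Passes queue.size() to the sink at a fixed period. The scheduler thread is a
     * daemon, so the monitor alone never prevents the JVM from exiting.
     */
    static ScheduledExecutorService start(BlockingQueue<?> queue, long periodMillis, IntConsumer sink) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor(runnable -> {
            Thread thread = new Thread(runnable, "queue-monitor");
            thread.setDaemon(true);
            return thread;
        });
        scheduler.scheduleAtFixedRate(() -> sink.accept(queue.size()),
                periodMillis, periodMillis, TimeUnit.MILLISECONDS);
        return scheduler;
    }

    public static void main(String[] args) throws InterruptedException {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(10);
        queue.put("job");
        ScheduledExecutorService monitor =
                start(queue, 200, size -> System.out.println("Current queue size: " + size));
        Thread.sleep(700);     // let the monitor report a few times
        monitor.shutdownNow(); // stop reporting explicitly when done
    }
}
```

Returning the scheduler lets the caller stop the monitor explicitly instead of relying on process exit.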

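        /**
         * Starts 30 consumer tasks. Each one takes search results off the queue
         * and saves the company and the job posting through the DAO.
         */
        public static void manyThread() {
            for (int i = 0; i < 30; i++) {
                executorService.execute(new Runnable() {
                    @Override
                    public void run() {
                        while (true) {
                            try {
                                Map map = blockingQueue.take(); // blocks until a result is available
                                productDao.addCompany(getCompany(map));
                                productDao.addPostInfo(getPostInfo(map));
                            } catch (Exception e) {
                                e.printStackTrace();
                            }
                        }
                    }
                });
            }
        }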
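
The workers in manyThread() loop forever on take(), so the thread pool never terminates once the last page has been queued. A common way to end such a producer-consumer setup is a "poison pill": a sentinel object the producer enqueues once per worker after the real work. The sketch below illustrates the pattern with plain strings instead of result maps and a list in place of the DAO calls. `PoisonPillDemo`, `run`, and `POISON` are hypothetical names, and this is not how the original code shuts down.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical demo of the poison-pill shutdown pattern for a blocking queue.
class PoisonPillDemo {
    // Sentinel compared by identity; new String(...) guarantees a distinct object.
    private static final String POISON = new String("POISON");

    static List<String> run(List<String> items, int workers) throws InterruptedException {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(16);
        List<String> processed = new CopyOnWriteArrayList<>();
        ExecutorService pool = Executors.newFixedThreadPool(workers);

        for (int i = 0; i < workers; i++) {
            pool.execute(() -> {
                try {
                    while (true) {
                        String item = queue.take();
                        if (item == POISON) {
                            return;          // this worker is done
                        }
                        processed.add(item); // stands in for the DAO calls
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }

        for (String item : items) {
            queue.put(item);                 // producer side
        }
        for (int i = 0; i < workers; i++) {
            queue.put(POISON);               // one pill per worker
        }

        pool.shutdown();
        pool.awaitTermination(30, TimeUnit.SECONDS);
        return processed;
    }

    public static void main(String[] args) throws InterruptedException {
        List<String> done = run(Arrays.asList("a", "b", "c", "d"), 3);
        System.out.println("processed " + done.size() + " items");
    }
}
```

Each worker consumes exactly one pill and then returns, which is why the producer enqueues as many pills as there are workers.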

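        /**
         * Parses the result pages one by one and puts every search result on the queue.
         *
         * @throws IOException
         * @throws ParseException
         */
        public static void manyPage() throws IOException, ParseException, InterruptedException {
            Gson gson = new Gson();
            // NOTE: part of this loop is missing from the source text of the article:
            // the loop bound, the request for page i, and the code that obtains the
            // `results` JSON string are not recoverable and are left elided here.
            for (int i = 0; i < ...; i++) {
                ...
                List<Map<String, Object>> list = gson.fromJson(results, List.class);
                for (Map map : list) {
                    blockingQueue.put(map); // blocks while the queue is full
                }
            }
        }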
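
Because the loop bound and the request code of manyPage() are missing from the text, the following is only a generic illustration of how a paging loop over a search API is often driven: from a total hit count and a page size, compute the start offset of each page. Whether the original code worked this way is an assumption. `Paging`, `pageOffsets`, and the page size of 60 are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: derives page start offsets from a total hit count.
class Paging {

    /** Returns the start offset of every page needed to cover totalHits results. */
    static List<Integer> pageOffsets(int totalHits, int pageSize) {
        if (pageSize <= 0) {
            throw new IllegalArgumentException("pageSize must be positive");
        }
        List<Integer> offsets = new ArrayList<>();
        for (int start = 0; start < totalHits; start += pageSize) {
            offsets.add(start);
        }
        return offsets;
    }

    public static void main(String[] args) {
        // 130 hits at 60 per page need three requests.
        System.out.println(pageOffsets(130, 60)); // prints [0, 60, 120]
    }
}
```

Each offset would then be substituted into the search request for that page before the response is parsed and its results are queued.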

        /**
         * Builds the company entity from one search result.
         *
         * @param map one search result
         * @return the populated Company
         */
        public static Company getCompany(Map map) {
            Company company = new Company();
            Map city = (Map) map.get("city");
            map = (Map) map.get("company"); // from here on, map is the nested company object
            company.setCid(map.get("number").toString());
            company.setCname(map.get("name").toString());
            company.setUrl(map.get("url").toString());
            company.setSize(((Map) map.get("size")).get("name").toString());
            company.setType(((Map) map.get("type")).get("name").toString());
            company.setCity(city.get("display").toString());
            return company;
        }
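
getCompany() calls toString() directly on nested map values, so a missing key anywhere along the way raises a NullPointerException, and the worker then skips the whole record. A minimal sketch of a null-tolerant lookup for nested maps follows. `MapPaths` and `text` are hypothetical names and not part of the original code.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper for reading values out of nested maps without NPEs.
class MapPaths {

    /**
     * Walks nested maps along the given keys and returns the final value as a
     * string, or null if any step is missing or is not a map.
     */
    static String text(Map<?, ?> root, String... path) {
        Object current = root;
        for (String key : path) {
            if (!(current instanceof Map)) {
                return null;
            }
            current = ((Map<?, ?>) current).get(key);
        }
        return current == null ? null : current.toString();
    }

    public static void main(String[] args) {
        Map<String, Object> size = new HashMap<>();
        size.put("name", "100-499");
        Map<String, Object> company = new HashMap<>();
        company.put("size", size);
        Map<String, Object> result = new HashMap<>();
        result.put("company", company);

        System.out.println(text(result, "company", "size", "name")); // prints 100-499
        System.out.println(text(result, "company", "type", "name")); // prints null
    }
}
```

With such a helper, a line like `company.setSize(text(map, "size", "name"))` would store null for a missing field instead of aborting the record.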

        /**
         * Builds the job posting entity from one search result and its detail page.
         *
         * @param map one search result
         * @return the populated PostInfo
         */
        public static PostInfo getPostInfo(Map map) throws IOException, ParseException {
            // Campus-recruitment postings ("校招" / "校园") use a different page layout
            if ("校招".equals(map.get("salary").toString()) || "校园".equals(map.get("emplType").toString())) {
                return getPostInfo2(map);
            }
            PostInfo postInfo = new PostInfo();
            String positionURL = map.get("positionURL").toString();
            postInfo.setPid(UUID.randomUUID().toString());
            // Posting URL
            postInfo.setPositionURL(positionURL);
            String html = HttpClientUtils.doGet(positionURL);
            Document document = Jsoup.parse(html);
            // Job title
            Elements name = document.select("[class=inner-left fl] h1");
            postInfo.setJobName(name.text());
            // Salary
            Elements price = document.select(".terminalpage-left>ul>li:first-child strong");
            postInfo.setSalary(price.text());
            // Work location
            Elements addres = document.select(".tab-inner-cont:first-child h2");
            postInfo.setPostAddres(addres.text());
            // Years of experience required
            Elements workingExp = document.select(".terminalpage-left>ul>li:nth-child(5) strong");
            postInfo.setWorkingExp(workingExp.text());
            // Education level required
            Elements eduLevel = document.select(".terminalpage-left>ul>li:nth-child(6) strong");
            postInfo.setEduLevel(eduLevel.text());
            // Employment type
            Elements emplType = document.select(".terminalpage-left>ul>li:nth-child(4) strong");
            postInfo.setEmplType(emplType.text());
            // Number of openings
            Elements peopleNum = document.select(".terminalpage-left>ul>li:nth-child(7) strong");
            postInfo.setPeopleNum(peopleNum.text());
            // Benefits, joined with commas. The original called ArrayUtils.toString(welfares, ","),
            // whose second argument is the text returned for null, not a separator.
            ArrayList welfares = (ArrayList) map.get("welfare");
            postInfo.setWelfare(StringUtils.join(welfares, ","));
            // Job description
            Elements positionInfo = document.select("[class=terminalpage-main clearfix] .tab-inner-cont p");
            postInfo.setPositionInfo(positionInfo.text());
            // Company ID
            postInfo.setCid(((Map) map.get("company")).get("number").toString());
            // Time the posting was created
            postInfo.setCreateDate(sdf.parse(map.get("createDate").toString()));
            // Time the posting was last updated
            postInfo.setUpdateDate(sdf.parse(map.get("updateDate").toString()));
            // Time the posting closes
            postInfo.setEndDate(sdf.parse(map.get("endDate").toString()));
            // Company profile
            Elements introduce = document.select(".tab-inner-cont:nth-child(2) p");
            postInfo.setIntroduce(introduce.text());
            return postInfo;
        }
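
getPostInfo() runs on many worker threads at once, and all of them parse dates through the single static SimpleDateFormat, which is not thread-safe and can return wrong dates or throw under concurrent use. One possible alternative, sketched here rather than taken from the original code, is the immutable DateTimeFormatter from java.time, converted back to java.util.Date because the entities use that type. `PostDates`, `parse`, and `toDate` are hypothetical names.

```java
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;
import java.util.Date;

// Hypothetical helper: thread-safe parsing of "yyyy-MM-dd HH:mm:ss" timestamps.
class PostDates {
    // DateTimeFormatter is immutable, so one shared instance is safe across threads.
    private static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    static LocalDateTime parse(String text) {
        return LocalDateTime.parse(text, FORMAT);
    }

    /** Converts to java.util.Date, interpreting the value in the system time zone. */
    static Date toDate(LocalDateTime dateTime) {
        return Date.from(dateTime.atZone(ZoneId.systemDefault()).toInstant());
    }

    public static void main(String[] args) {
        LocalDateTime created = parse("2018-10-12 09:30:00");
        System.out.println(created); // prints 2018-10-12T09:30
        System.out.println(toDate(created));
    }
}
```

Creating a new SimpleDateFormat per call, or holding one per thread in a ThreadLocal, would be other ways to avoid sharing the formatter.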

        /**
         * Builds the job posting entity for campus-recruitment postings,
         * whose detail pages use a different layout.
         *
         * @param map one search result
         * @return the populated PostInfo
         */
        public static PostInfo getPostInfo2(Map map) throws IOException, ParseException {
            PostInfo postInfo = new PostInfo();
            postInfo.setPid(UUID.randomUUID().toString());
            postInfo.setJobName(map.get("jobName").toString());
            postInfo.setSalary(map.get("salary") != null ? map.get("salary").toString() : null);
            postInfo.setPostAddres(((Map) map.get("city")).get("display").toString());
            postInfo.setWorkingExp(((Map) map.get("workingExp")).get("name").toString());
            postInfo.setEmplType(map.get("emplType").toString());
            String positionURL = map.get("positionURL").toString();
            postInfo.setPositionURL(positionURL);
            String html = HttpClientUtils.doGet(positionURL);
            Document document = Jsoup.parse(html);
            // Number of openings
            Elements peopleNum = document.select(".cJobDetailInforBotWrap>li:nth-child(6)");
            postInfo.setPeopleNum(peopleNum.text());
            // Benefits, joined with commas (see the note in getPostInfo about ArrayUtils.toString)
            List welfares = (List) map.get("welfare");
            postInfo.setWelfare(StringUtils.join(welfares, ","));
            // Job description
            Elements positionInfo = document.select("[class=cJob_Detail f14] p");
            postInfo.setPositionInfo(positionInfo.text());
            postInfo.setCid(((Map) map.get("company")).get("number").toString());
            postInfo.setCreateDate(sdf.parse(map.get("createDate").toString()));
            postInfo.setUpdateDate(sdf.parse(map.get("updateDate").toString()));
            postInfo.setEndDate(sdf.parse(map.get("endDate").toString()));
            // Education level required
            Elements edulevel = document.select(".cJobDetailInforBotWrap>li:last-child");
            postInfo.setEduLevel(edulevel.text());
            // The company profile comes from a separate endpoint, keyed by the last segment of the company URL
            String[] urls = ((Map) map.get("company")).get("url").toString().split("/");
            String htmlDetail = HttpClientUtils.doGet("https://xiaoyuan.zhaopin.com/jobdetail/GetCompanyIntro/" + postInfo.getCid() + "?subcompanyid=" + urls[urls.length - 1] + "&showtype=2");
            JsonObject object = new JsonParser().parse(htmlDetail).getAsJsonObject();
            // getAsString() yields the raw string value; toString() would keep the surrounding JSON quotes
            postInfo.setIntroduce(java.net.URLDecoder.decode(object.get("intro").getAsString(), "UTF-8"));
            return postInfo;
        }
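
Two small string operations in getPostInfo2() are easy to get wrong: taking the last path segment of the company URL, and URL-decoding the returned profile text. The sketch below isolates both using only the JDK. `UrlBits`, `lastSegment`, and `decode` are hypothetical names, and the sample inputs are made up for the illustration.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLDecoder;

// Hypothetical helpers isolating two string steps used for campus postings.
class UrlBits {

    /** Returns the text after the last '/', ignoring a single trailing slash. */
    static String lastSegment(String url) {
        String trimmed = url.endsWith("/") ? url.substring(0, url.length() - 1) : url;
        return trimmed.substring(trimmed.lastIndexOf('/') + 1);
    }

    /** Decodes percent-encoded text as UTF-8. */
    static String decode(String encoded) {
        try {
            return URLDecoder.decode(encoded, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is guaranteed to be available on every JVM.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(lastSegment("http://company.example/CC123.htm")); // prints CC123.htm
        System.out.println(decode("%3Cp%3EHello%3C%2Fp%3E"));                // prints <p>Hello</p>
    }
}
```

Note that URLDecoder also turns `+` into a space, which matters if the encoded text contains literal plus signs.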

    }

5) Crawl results

Company results:

Job posting results: