Integrating Phoenix, HBase, and Spark: writing Spark-processed data into HBase through Phoenix, and using Phoenix from Spring Cloud

1 Version requirements

Spark: spark-2.3.0-bin-hadoop2.7

Phoenix: apache-phoenix-4.14.1-HBase-1.4-bin

HBase: hbase-1.4.2

These versions must be compatible with one another; a mismatched combination causes errors at runtime.

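2 Integrating Phoenix + HBase + Spark

A: Install HBase. This step is omitted here and assumed to be familiar.

B: Integrate Phoenix with HBase; see https://blog.csdn.net/tototuzuoquan/article/details/81506285. Note that the supported combination is apache-phoenix-4.14.1-HBase-1.4-bin + spark-2.3.0-bin-hadoop2.7 + hadoop-3.0.1; older Phoenix versions cause Spark + Phoenix programs to fail. The apache-phoenix-4.14.1-HBase-1.4-bin release, updated in mid-November 2018, can be downloaded from the official site.

C: When integrating Phoenix with Spark, remember to add the following to $SPARK_HOME/conf/spark-defaults.conf: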

spark.driver.extraClassPath /data/installed/apache-phoenix-4.14.1-HBase-1.4-bin/phoenix-spark-4.14.1-HBase-1.4.jar:/data/installed/apache-phoenix-4.14.1-HBase-1.4-bin/phoenix-4.14.1-HBase-1.4-client.jar

spark.executor.extraClassPath /data/installed/apache-phoenix-4.14.1-HBase-1.4-bin/phoenix-spark-4.14.1-HBase-1.4.jar:/data/installed/apache-phoenix-4.14.1-HBase-1.4-bin/phoenix-4.14.1-HBase-1.4-client.jar

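Without this configuration, the Spark + Phoenix job fails at runtime because the Phoenix classes are missing from the driver and executor classpaths.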
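Before submitting a job, it can help to confirm that the Phoenix client jar is actually visible to the JVM. The following is a minimal sketch, not part of the original project: the class name `ClasspathCheck` and its helper are hypothetical, and the check only covers the JVM it runs in (for example the driver), not the executors.

```java
final class ClasspathCheck {

    /** Returns true if the named class can be located by this class's class loader. */
    static boolean isOnClasspath(String className) {
        try {
            // initialize = false: only locate the class, do not run static initializers
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // The driver class used later in this article's datasource configuration.
        String driver = "org.apache.phoenix.jdbc.PhoenixDriver";
        System.out.println(driver + " on classpath: " + isOnClasspath(driver));
    }
}
```

If this prints `false` when run with the same classpath as the Spark driver, the `extraClassPath` entries above are probably not being picked up.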

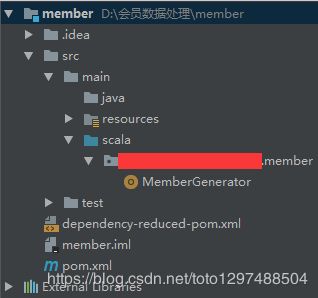

3 Spark + Phoenix project

3.1 Project structure

3.2 pom configuration

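    <!-- The beginning of the pom (project header, <properties>) is not shown in the source. -->
    <dependencies>
        <dependency>
            <groupId>joda-time</groupId>
            <artifactId>joda-time</artifactId>
            <version>${joda-time.version}</version>
        </dependency>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-library</artifactId>
            <version>${scala-library.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-core_2.11</artifactId>
            <version>${spark-core_2.11.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-streaming_2.11</artifactId>
            <version>${spark-streaming_2.11.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>${hadoop-hdfs.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>${hadoop-client.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>${hadoop-common.version}</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>${fastjson.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-sql_2.11</artifactId>
            <version>${spark-sql_2.11.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-hive_2.11</artifactId>
            <version>${spark-hive_2.11.version}</version>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>${mysql.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-client</artifactId>
            <version>${hbase.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-server</artifactId>
            <version>${hbase.version}</version>
        </dependency>
        <dependency>
            <groupId>com.lmax</groupId>
            <artifactId>disruptor</artifactId>
            <version>3.3.6</version>
        </dependency>
        <dependency>
            <groupId>org.apache.phoenix</groupId>
            <artifactId>phoenix-core</artifactId>
            <version>${phoenix.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.phoenix</groupId>
            <artifactId>phoenix-spark</artifactId>
            <version>${phoenix.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>${junit.version}</version>
        </dependency>
    </dependencies>

    <build>
        <sourceDirectory>src/main/scala</sourceDirectory>
        <testSourceDirectory>src/test/scala</testSourceDirectory>
        <plugins>
            <plugin>
                <groupId>net.alchim31.maven</groupId>
                <artifactId>scala-maven-plugin</artifactId>
                <version>3.2.0</version>
                <executions>
                    <execution>
                        <goals>
                            <goal>compile</goal>
                            <goal>testCompile</goal>
                        </goals>
                        <configuration>
                            <args>
                                <arg>-dependencyfile</arg>
                                <arg>${project.build.directory}/.scala_dependencies</arg>
                            </args>
                        </configuration>
                    </execution>
                </executions>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-surefire-plugin</artifactId>
                <version>2.18.1</version>
                <configuration>
                    <useFile>false</useFile>
                    <disableXmlReport>true</disableXmlReport>
                    <includes>
                        <include>**/*Test.*</include>
                        <include>**/*Suite.*</include>
                    </includes>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-shade-plugin</artifactId>
                <version>2.3</version>
                <executions>
                    <execution>
                        <phase>package</phase>
                        <goals>
                            <goal>shade</goal>
                        </goals>
                        <configuration>
                            <filters>
                                <filter>
                                    <artifact>*:*</artifact>
                                    <excludes>
                                        <exclude>META-INF/*.SF</exclude>
                                        <exclude>META-INF/*.DSA</exclude>
                                        <exclude>META-INF/*.RSA</exclude>
                                    </excludes>
                                </filter>
                            </filters>
                            <transformers>
                                <!-- The transformer's implementation attribute was lost in the source; ManifestResourceTransformer is assumed. -->
                                <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                                    <mainClass>xxx.xxx.bigdata.xxxx.member.MemberGenerator</mainClass>
                                </transformer>
                            </transformers>
                        </configuration>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>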

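The following two dependencies are the key to the Phoenix integration:

    <dependency>
        <groupId>org.apache.phoenix</groupId>
        <artifactId>phoenix-core</artifactId>
        <version>${phoenix.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.phoenix</groupId>
        <artifactId>phoenix-spark</artifactId>
        <version>${phoenix.version}</version>
        <scope>provided</scope>
    </dependency>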

3.3 Creating the Phoenix table

    DROP TABLE DATA_CENTER_MEMBER;

    CREATE TABLE IF NOT EXISTS DATA_CENTER_MEMBER(
        PK VARCHAR PRIMARY KEY,
        AREA_CODE VARCHAR(10),
        AREA_NAME VARCHAR(30),
        AGENT_ID VARCHAR(30),
        AGENT_NAME VARCHAR(20),
        SHOP_ID VARCHAR(40),
        USER_ID VARCHAR(40),
        BUYBACK_CYCLE DECIMAL(15,4),
        PURCHASE_NUM BIGINT,
        FIRST_PAY_TIME BIGINT,
        LAST_PAY_TIME BIGINT,
        UNPURCHASE_TIME BIGINT,
        COMMON TINYINT,
        STORED TINYINT,
        POPULARIZE TINYINT,
        VERSION_DATE INTEGER,
        ADD_TIME BIGINT,
        CREATE_DATE INTEGER
    ) COMPRESSION='GZ',DATA_BLOCK_ENCODING=NONE SPLIT ON ('0|','1|','2|','3|','4|','5|','6|','7|','8|','9|','10|','11|','12|','13|','14|', '15|','16|','17|','18|','19|','20|','21|','22|','23|','24|','25|','26|','27|','28|','29|','30|','31|','32|','33|','34|','35|','36|','37|','38|','39|', '40|','41|','42|','43|','44|','45|','46|','47|','48|','49|');

    -- Use a covered index
    CREATE index idx_version_date on DATA_CENTER_MEMBER(VERSION_DATE) include(PK,LAST_PAY_TIME,FIRST_PAY_TIME,PURCHASE_NUM);

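The rowkey has the following format:

PK is AREA_CODE%50|AREA_CODE|AGENT_ID|ADD_TIME|SHOP_ID|USER_ID (the table is pre-split into 50 partitions)

Queries should be based on the rowkey wherever possible; rowkey lookups generally return within milliseconds.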
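The rowkey format above can be sketched as a small helper. This is an illustrative sketch only: the class `MemberRowKey` and its method names are hypothetical, and it assumes AREA_CODE is a numeric string, as the Spark code in this article treats it.

```java
final class MemberRowKey {

    /** Number of pre-split partitions, matching the '0|' .. '49|' split points. */
    static final int BUCKETS = 50;

    /** Builds AREA_CODE%50|AREA_CODE|AGENT_ID|ADD_TIME|SHOP_ID|USER_ID. */
    static String build(String areaCode, String agentId, long addTime,
                        String shopId, String userId) {
        int bucket = Math.floorMod(Integer.parseInt(areaCode), BUCKETS);
        return String.join("|",
                Integer.toString(bucket), areaCode, agentId,
                Long.toString(addTime), shopId, userId);
    }

    /** Prefix shared by all rows of one area and agent, usable for a rowkey range scan. */
    static String prefix(String areaCode, String agentId) {
        int bucket = Math.floorMod(Integer.parseInt(areaCode), BUCKETS);
        return bucket + "|" + areaCode + "|" + agentId + "|";
    }

    public static void main(String[] args) {
        // Sample values are made up for illustration.
        System.out.println(build("320123", "A01", 1543593600L, "S1", "U1"));
        System.out.println(prefix("320123", "A01"));
    }
}
```

Because the leading components are fixed per area and agent, a query such as `WHERE PK LIKE '23|320123|A01|%'` can be served as a range scan over the rowkey rather than a full table scan.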

3.4 Spark (Scala) + Phoenix code

    package xxx.xxx.bigdata.xxxx.member

    import java.util.Date

    import xxx.xxx.bigdata.common.utils.DateUtils
    // Brings saveToPhoenix into scope as an implicit on RDDs
    import org.apache.phoenix.spark._
    import org.apache.spark.sql.SparkSession

    import scala.collection.mutable.ListBuffer

    object MemberGenerator {

      /**
       * Returns the first argument if one was passed; otherwise returns
       * yesterday's date formatted with `pattern`.
       * @param args    program arguments
       * @param pattern date format
       * @return the day to process
       */
      def gainDayByArgsOrSysCreate(args: Array[String], pattern: String): String = {
        if (args.length > 0) {
          args(0)
        } else {
          val previousDay = DateUtils.addOrMinusDay(new Date(), -1)
          DateUtils.dateFormat(previousDay, pattern)
        }
      }

      /**
       * Processes member data.
       * @param args args(0): the day to process (the code below also uses it as the
       *             input JSON path); args(1): the Phoenix ZooKeeper connection URL
       */
      def main(args: Array[String]): Unit = {
        val spark = SparkSession
          .builder()
          .appName("MemberGenerator")
          //.master("local[*]")
          .master("spark://bigdata1:7077")
          .config("spark.sql.warehouse.dir", "/user/hive/warehouse")
          // Memory used by the driver process
          .config("spark.driver.memory", "2g")
          // Memory used by each executor process; same format as JVM memory strings (e.g. 512m, 2g)
          .config("spark.executor.memory", "2g")
          .enableHiveSupport()
          .getOrCreate()

        val previousDayStr = gainDayByArgsOrSysCreate(args, "yyyy-MM-dd")

        // Yesterday as yyyyMMdd, computed as today - 1. On initialization the data is
        // yesterday's; after processing it carries the processing date.
        // Note: subtracting 1 from a yyyyMMdd number is not calendar-aware and
        // yields an invalid date on the first day of a month.
        val nowDate = DateUtils.getNowDateSimple().toLong - 1

        // val df1 = spark.read.json("/xxxx/data-center/member/" + previousDayStr + "/member.json")
        val df1 = spark.read.json(args(0))

        spark.sql("use data_center")

        // Only run the steps below when there is data
        if (df1.count() > 0) {
          df1.createOrReplaceTempView("member_temp")

          // Put the small table first to improve execution efficiency
          val df2 = spark.sql(
            "SELECT " +
              " ts.areacode as AREA_CODE, " +
              " ts.areaname as AREA_NAME, " +
              " ts.agentid as AGENT_ID, " +
              " ts.agentname as AGENT_NAME, " +
              " mt.shopId as SHOP_ID, " +
              " mt.userId as USER_ID, " +
              " mt.commonMember as COMMON, " +
              " mt.storedMember as STORED, " +
              " mt.popularizeMember as POPULARIZE," +
              " mt.addTime as ADD_TIME " +
              "FROM " +
              " tb_shop ts," +
              " member_temp mt " +
              "WHERE " +
              " ts.shopId = mt.shopId ")

          df2.createOrReplaceTempView("member_temp2")
          //df2.show()

          // Note: to modify data through Spark, first run the load below,
          // then run saveToPhoenix further down
          val df = spark.read
            .format("org.apache.phoenix.spark")
            .options(
              Map("table" -> "DATA_CENTER_MEMBER",
                "zkUrl" -> args(1))
              //"zkUrl" -> "jdbc:phoenix:bigdata3:2181")
            ).load

          df.show()

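          val list = new ListBuffer[(Any, Any, Any, Any, Any, Any, Any, Any, Any, Any, Any, Any, Any, Any, Any, Any)]

          // df4 is not defined in the code shown here: the original post omits the
          // steps that derive it from member_temp2. It is expected to have 13 columns,
          // in the order AREA_CODE, AREA_NAME, AGENT_ID, AGENT_NAME, SHOP_ID, USER_ID,
          // COMMON, STORED, POPULARIZE, PURCHASE_NUM, ADD_TIME, FIRST_PAY_TIME, LAST_PAY_TIME.
          df4.collect().foreach(
            x => {
              // Date the user became a member, as an integer in yyyyMMdd format
              val CREATE_DATE = Integer.parseInt(DateUtils.getLongToString(x.get(10).asInstanceOf[Long] * 1000, DateUtils.PATTERN_DATE_SIMPLE))

              //println(">>>>>>>>>>" + x.get(5).toString + " " + x.get(6).toString + " " + x.get(9))

              // PK is AREA_CODE%50|AREA_CODE|AGENT_ID|ADD_TIME|SHOP_ID|USER_ID (50 pre-split partitions).
              // The original line used "% 20" and only SHOP_ID|USER_ID, which did not match this format.
              val PK = (x.get(0).toString.toInt % 50) + "|" + x.get(0).toString + "|" + x.get(2).toString + "|" +
                x.get(10).toString + "|" + x.get(4).toString + "|" + x.get(5).toString

              val temp = (
                PK,
                x.get(0),
                x.get(1),
                x.get(2),
                x.get(3),
                x.get(4),
                x.get(5),
                x.get(6),
                x.get(7),
                x.get(8),
                x.get(9),
                x.get(10),
                x.get(11),
                x.get(12),
                nowDate,
                CREATE_DATE
              )

              list += temp

              // Save or update the data once 10000 records have accumulated
              if (list.length >= 10000) {
                spark.sparkContext.parallelize(list)
                  .saveToPhoenix(
                    "DATA_CENTER_MEMBER",
                    Seq("PK", "AREA_CODE", "AREA_NAME", "AGENT_ID", "AGENT_NAME", "SHOP_ID", "USER_ID", "COMMON", "STORED", "POPULARIZE", "PURCHASE_NUM", "ADD_TIME", "FIRST_PAY_TIME", "LAST_PAY_TIME", "VERSION_DATE", "CREATE_DATE"),
                    zkUrl = Some(args(1)))

                // Clear the list once the batch has been written
                list.clear()

                // Pause briefly between batches
                println("Sleeping for 200 ms")
                Thread.sleep(200)
              }
            })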

          // Write whatever is left in the list after the loop
          if (list.nonEmpty) {
            spark.sparkContext.parallelize(list)
              .saveToPhoenix(
                "DATA_CENTER_MEMBER",
                Seq("PK", "AREA_CODE", "AREA_NAME", "AGENT_ID", "AGENT_NAME", "SHOP_ID", "USER_ID", "COMMON", "STORED", "POPULARIZE", "PURCHASE_NUM", "ADD_TIME", "FIRST_PAY_TIME", "LAST_PAY_TIME", "VERSION_DATE", "CREATE_DATE"),
                zkUrl = Some(args(1)))
            list.clear()
          }
        }

        spark.stop()

        // Normal program exit
        System.exit(0)
      }
    }
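The buffering pattern used by the job (collect rows, write a batch every 10,000 records, then write the remainder) can be isolated from Spark and Phoenix. The sketch below is a hypothetical illustration in plain Java: `BatchWriter` and its `sink` callback are made-up names, with the sink standing in for the `saveToPhoenix` call.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

final class BatchWriter<T> {
    private final int batchSize;
    private final Consumer<List<T>> sink; // stand-in for the actual write
    private final List<T> buffer = new ArrayList<>();

    BatchWriter(int batchSize, Consumer<List<T>> sink) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        this.batchSize = batchSize;
        this.sink = sink;
    }

    /** Buffers one row and writes a batch as soon as the buffer is full. */
    void add(T row) {
        buffer.add(row);
        if (buffer.size() >= batchSize) {
            flush();
        }
    }

    /** Writes any buffered rows; call once more after the last add. */
    void flush() {
        if (buffer.isEmpty()) {
            return;
        }
        sink.accept(new ArrayList<>(buffer)); // hand over a copy, then reuse the buffer
        buffer.clear();
    }

    public static void main(String[] args) {
        BatchWriter<Integer> writer =
                new BatchWriter<>(10, batch -> System.out.println("writing " + batch.size() + " rows"));
        for (int i = 0; i < 25; i++) {
            writer.add(i);
        }
        writer.flush(); // remainder
    }
}
```

Comparing with `>=` rather than testing for an exact size keeps the flush condition correct even if the buffer ever grows past the threshold.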

4 Spring Cloud + Phoenix code

The Spring Cloud configuration code is not listed here; only the Phoenix-related parts are shown.

4.1 Project structure

4.2 pom.xml

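    <modelVersion>4.0.0</modelVersion>

    <parent>
        <groupId>xxx.xxx.bigdata.xxxx.datacenter</groupId>
        <artifactId>project-pom</artifactId>
        <version>1.0.1-SNAPSHOT</version>
        <relativePath>../project-pom/pom.xml</relativePath>
    </parent>

    <artifactId>member</artifactId>
    <packaging>jar</packaging>
    <name>member</name>
    <description>Member data module</description>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
        <java.version>1.8</java.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>xxx.xxx.frame3</groupId>
            <artifactId>common-utils</artifactId>
            <version>${youx-frame3-common.version}</version>
        </dependency>
        <dependency>
            <groupId>xxx.xxx.frame3</groupId>
            <artifactId>common-dao</artifactId>
            <version>${youx-frame3-common.version}</version>
        </dependency>
        <dependency>
            <groupId>xxx.xxx.frame3</groupId>
            <artifactId>common-service</artifactId>
            <version>${youx-frame3-common.version}</version>
        </dependency>
        <dependency>
            <groupId>xxx.xxx.frame3</groupId>
            <artifactId>common-web</artifactId>
            <version>${youx-frame3-common.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.phoenix</groupId>
            <artifactId>phoenix-core</artifactId>
            <version>${phoenix-core.version}</version>
            <exclusions>
                <exclusion>
                    <groupId>org.slf4j</groupId>
                    <artifactId>slf4j-log4j12</artifactId>
                </exclusion>
                <exclusion>
                    <groupId>log4j</groupId>
                    <artifactId>log4j</artifactId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-devtools</artifactId>
            <optional>true</optional>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-jar-plugin</artifactId>
                <configuration>
                    <classesDirectory>${project.build.directory}/classes</classesDirectory>
                    <archive>
                        <manifest>
                            <mainClass>xxx.xxx.bigdata.xxxx.datacenter.member.MemberApplication</mainClass>
                            <useUniqueVersions>false</useUniqueVersions>
                            <addClasspath>true</addClasspath>
                            <classpathPrefix>lib/</classpathPrefix>
                        </manifest>
                    </archive>
                    <!-- The element enclosing "bootstrap.properties" was lost in the source; an exclude is assumed here. -->
                    <excludes>
                        <exclude>bootstrap.properties</exclude>
                    </excludes>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-dependency-plugin</artifactId>
                <executions>
                    <execution>
                        <id>copy-dependencies</id>
                        <phase>package</phase>
                        <goals>
                            <goal>copy-dependencies</goal>
                        </goals>
                        <configuration>
                            <type>jar</type>
                            <includeTypes>jar</includeTypes>
                            <outputDirectory>${project.build.directory}/lib</outputDirectory>
                        </configuration>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>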

4.3 Contents of bootstrap.properties

    server.port=6022
    spring.application.name=member

    #=================== Service registry (Spring Cloud Eureka) =============================
    eureka.client.service-url.defaultZone=http://127.0.0.1:5000/eureka

    #=================== Feign (Spring Cloud Feign) =================================
    # Enable the circuit breaker in Feign
    feign.hystrix.enabled=true

    #=================== Config client (Spring Cloud Config) ===========================
    # For a highly available config center, discover the config-server group by service id
    spring.cloud.config.discovery.enabled=true
    spring.cloud.config.discovery.service-id=config-server
    # Config files are named {spring.cloud.config.name}-{spring.cloud.config.profile}.properties;
    # this sets the file name prefix (every project's config file name must start with application-dev)
    spring.cloud.config.name=application
    spring.cloud.config.profile=dev-sfsadas
    # Branch
    spring.cloud.config.label=trunk
    # Config server address; not needed here because service-id is set for high availability
    #spring.cloud.config.uri=http://127.0.0.1:5002/

    #============= Message bus parameters (Spring Cloud Bus) =================================
    # Spring Cloud Bus links distributed nodes through a lightweight message broker. It can be used
    # to broadcast configuration changes or for communication between services, and also for monitoring.
    spring.rabbitmq.host=xxx.xxx.xxx.xxx
    spring.rabbitmq.port=5672
    spring.rabbitmq.username=guest
    spring.rabbitmq.password=guest
    spring.cloud.bus.enabled=true
    spring.cloud.bus.trace.enabled=true
    management.endpoints.web.exposure.include=bus-refresh

    #=================== Distributed tracing (Spring Cloud Sleuth) =============================
    spring.sleuth.web.client.enabled=true
    # Sample everything by setting the ratio to 1.0; the default is 0.1
    spring.sleuth.sampler.probability=1.0
    spring.zipkin.base-url=http://localhost:9411

    #=================== Database connection =============================
    spring.datasource.name=member
    # Use the Druid data source
    spring.datasource.type=com.alibaba.druid.pool.DruidDataSource
    # Data source connection URL
    spring.datasource.url=jdbc:phoenix:xxx.xxx.xxx.xxx:2181
    spring.datasource.username=root
    spring.datasource.password=123456
    spring.datasource.driver-class-name=org.apache.phoenix.jdbc.PhoenixDriver
    spring.datasource.filters=stat
    spring.datasource.maxActive=60
    spring.datasource.initialSize=10
    spring.datasource.maxWait=60000
    spring.datasource.minIdle=10
    spring.datasource.timeBetweenEvictionRunsMillis=60000
    spring.datasource.minEvictableIdleTimeMillis=300000
    spring.datasource.validationQuery=SELECT 1
    spring.datasource.testWhileIdle=true
    spring.datasource.testOnBorrow=false
    spring.datasource.testOnReturn=false
    spring.datasource.poolPreparedStatements=true
    spring.datasource.maxOpenPreparedStatements=10

    # MyBatis parameters: https://www.cnblogs.com/lianggp/p/7573653.html
    # Location of the XML files for the MyBatis mappers. Required if a mapper has custom
    # methods implemented in XML, so that MyBatis can find the corresponding XML file.
    mybatis-plus.mapper-locations=classpath*:/mapper/*.xml
    # Package to scan for MyBatis type aliases. Classes in this package are registered as aliases,
    # so mapper XML files can use the simple class name instead of the fully qualified name.
    mybatis-plus.type-aliases-package=xxx.xxx.bigdata.xxx.datacenter.member.entity
    # Whether to check at startup that the MyBatis XML files exist; not checked by default
    mybatis-plus.check-config-location=false
    # Database type; defaults to unknown. If set to OTHER, the type is detected from the
    # connection URL at startup; otherwise it is not detected automatically.
    mybatis-plus.global-config.db-config.db-type=mysql
    # Print the executed SQL
    mybatis-plus.configuration.log-impl=org.apache.ibatis.logging.stdout.StdOutImpl
    # Map result columns to camel case by default
    #mybatis-plus.configuration.map-underscore-to-camel-case=true

    # PageHelper pagination plugin
    pagehelper.helper-dialect=mysql
    pagehelper.reasonable=true
    pagehelper.support-methods-arguments=true
    pagehelper.params=count=countSql

The key part of the configuration above is the database connection pool (the spring.datasource.* settings).
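To see what the datasource settings amount to without Spring or Druid, here is a minimal plain-JDBC sketch. It assumes the Phoenix client jar is on the classpath and a reachable ZooKeeper quorum; the class `PhoenixQuery`, its helper names, and the sample values are hypothetical and not part of the original project.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;

final class PhoenixQuery {

    /** Builds a Phoenix JDBC URL of the form jdbc:phoenix:<zk quorum>:<zk port>. */
    static String jdbcUrl(String zkQuorum, int zkPort) {
        return "jdbc:phoenix:" + zkQuorum + ":" + zkPort;
    }

    /** Counts members for one VERSION_DATE (the column covered by idx_version_date). */
    static long countByVersionDate(Connection conn, int versionDate) throws SQLException {
        String sql = "SELECT COUNT(*) FROM DATA_CENTER_MEMBER WHERE VERSION_DATE = ?";
        try (PreparedStatement ps = conn.prepareStatement(sql)) {
            ps.setInt(1, versionDate);
            try (ResultSet rs = ps.executeQuery()) {
                return rs.next() ? rs.getLong(1) : 0L;
            }
        }
    }

    public static void main(String[] args) throws SQLException {
        // args[0]: ZooKeeper host, e.g. the host used as zkUrl for the Spark job
        try (Connection conn = DriverManager.getConnection(jdbcUrl(args[0], 2181))) {
            System.out.println(countByVersionDate(conn, 20181130));
        }
    }
}
```

In the Spring application the pool hands out connections built from the same URL and driver class, so a query that works here should behave the same way through MyBatis.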

4.4 Contents of MemberApplication

    package xxx.xxx.bigdata.xxxx.datacenter.member;

    import org.mybatis.spring.annotation.MapperScan;
    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.EnableAutoConfiguration;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.cloud.client.discovery.EnableDiscoveryClient;
    import org.springframework.cloud.context.config.annotation.RefreshScope;
    import org.springframework.cloud.netflix.eureka.EnableEurekaClient;
    import org.springframework.cloud.netflix.hystrix.EnableHystrix;
    import org.springframework.cloud.openfeign.EnableFeignClients;

    @SpringBootApplication
    @MapperScan("xxx.xxx.bigdata.xxxx.datacenter.member.mapper")
    @EnableAutoConfiguration
    @EnableEurekaClient
    @EnableDiscoveryClient
    @EnableFeignClients
    @EnableHystrix
    @RefreshScope
    public class MemberApplication {

        public static void main(String[] args) {
            SpringApplication.run(MemberApplication.class, args);
        }
    }

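4.5 Other code

The rest of the project code is omitted; it follows the usual conventions.

Note that Phoenix does not support transactions in this setup, so do not use annotations such as @Transactional; otherwise errors are reported.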
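Without declarative transactions, writes have to be committed explicitly. As far as I know, Phoenix JDBC connections have auto-commit turned off by default, so UPSERT statements are buffered on the client until `commit()` is called. The sketch below illustrates that pattern under those assumptions; `PhoenixUpsert` and its method names are hypothetical.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

final class PhoenixUpsert {

    /** Builds "UPSERT INTO <table> (c1, c2) VALUES (?, ?)" for the given columns. */
    static String upsertSql(String table, List<String> columns) {
        String cols = String.join(", ", columns);
        String marks = String.join(", ", Collections.nCopies(columns.size(), "?"));
        return "UPSERT INTO " + table + " (" + cols + ") VALUES (" + marks + ")";
    }

    /** Upserts one row and commits explicitly instead of relying on @Transactional. */
    static void upsertAndCommit(Connection conn, String pk, int versionDate) throws SQLException {
        String sql = upsertSql("DATA_CENTER_MEMBER", Arrays.asList("PK", "VERSION_DATE"));
        try (PreparedStatement ps = conn.prepareStatement(sql)) {
            ps.setString(1, pk);
            ps.setInt(2, versionDate);
            ps.executeUpdate();
        }
        conn.commit(); // flushes the buffered mutation to HBase
    }

    public static void main(String[] args) {
        System.out.println(upsertSql("DATA_CENTER_MEMBER", Arrays.asList("PK", "VERSION_DATE")));
    }
}
```

A commit here is not atomic across rows in the relational sense; it only sends the pending mutations, which is why service methods should not assume rollback semantics.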