电商数仓项目

文章目录

- 一.数仓采集

- 1.数仓的概念

- 2.项目需求及框架

- 3.数据生成模块

- 4.数据采集模块

- 二.用户行为数据仓库

- 1.数仓分层概念

- 2.数仓搭建环境准备

- 3. 数仓环境之ODS层

- 4.数仓搭建之DWD层

- 5.业务知识储备

- 6.用户活跃主题

- 7.用户新增主题

- 8.用户留存主题

- 9.新数据准备

- 10.沉默用户数

- 11.本周回流用户数

- 12.本周流失用户数

- 13.最近连续三周活跃用户数

- 14.最近七天内连续三天活跃用户数

- 三.系统业务数据仓库

- 1.电商业务与数据结构简介

- 2.数仓理论

- 3.数仓搭建

- 4.GMV成交总额

- 5. 转化率及漏斗分析

- 6 .品牌复购率

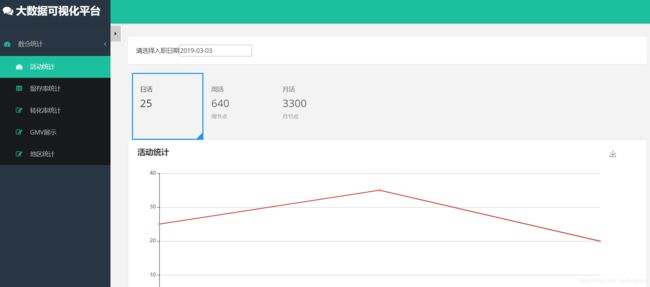

- 7.数据可视化

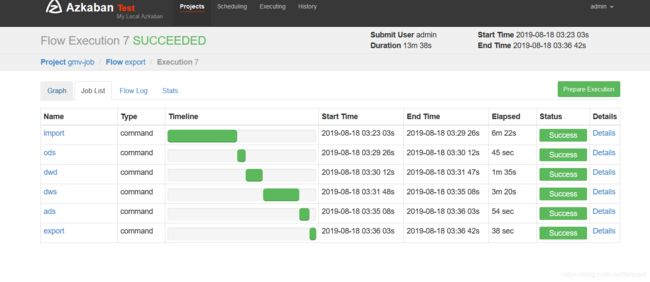

- 8 Azkaban调度器

- 9.订单表拉链表

- 四.实时数仓

- 1.Presto

- 2.Druid

一.数仓采集

1.数仓的概念

数据仓库是为企业所有决策制定过程,提供所有系统数据支持的战略集合。包括对数据的:清洗,转义,分类,合并,拆分,统计等等。

2.项目需求及框架

A. 需求分析.

1)数据采集平台搭建

2)实现用户行为数据仓库的分层搭建

3)实现业务数据仓库的分层搭建

4)针对数据仓库中的数据进行留存、转化率分析、GMV、复购率、活跃等报表分析。

B. 技术选型

1)数据采集传输:Flume,Kafka,Sqoop

2) 数据存储:Mysql,HDFS

3)数据计算:Hive,Tez,Spark

4) 数据查询:Presto,Druid

C. 系统数据流程设计

D. 框架版本选择

E. 服务器选型:

物理机 or 云主机

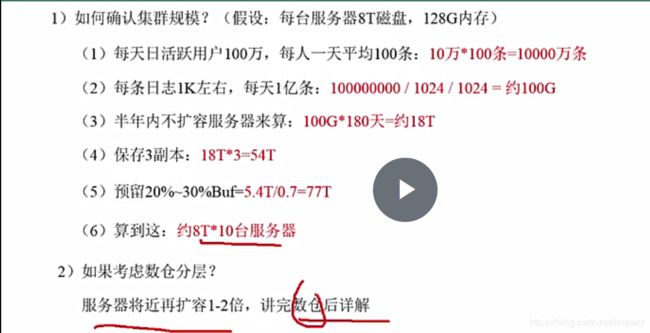

F. 集群资源规划设计

1)确认集群规模

2) 集群服务器规划

本项目采用3台虚拟机模拟测试集群。

3.数据生成模块

A . 埋点数据基本格式

B.事件日志数据

1)商品列表页

2)商品点击页

3)商品详情页

4)广告

5)消息通知

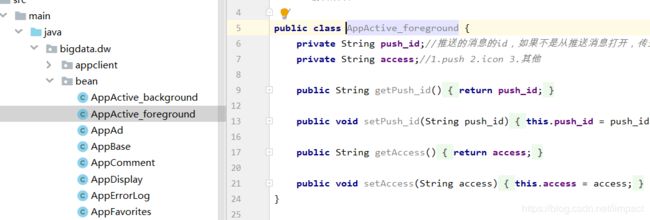

6)用户前台活跃

7)用户后台活跃

8)评论

9)收藏

10)点赞

11)错误日志

C. 启动日志数据

与事件日志格式不同,结构更简单。

D.数据生成脚本

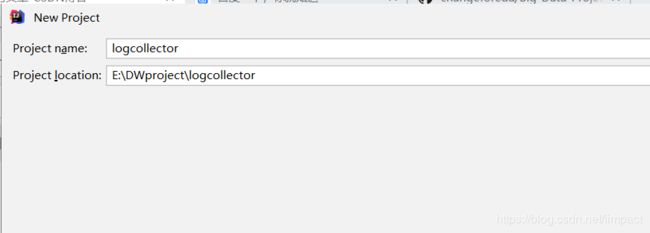

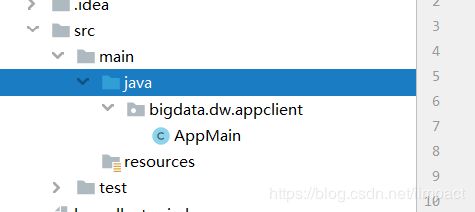

b) 创建一个包名:bigdata.dw

c) 在com.atguigu.appclient包下创建一个类,AppMain

4.0.0

bigdata.dw

log-collector

1.0-SNAPSHOT

1.7.20

1.0.7

com.alibaba

fastjson

1.2.51

ch.qos.logback

logback-core

${logback.version}

ch.qos.logback

logback-classic

${logback.version}

maven-compiler-plugin

2.3.2

1.8

1.8

maven-assembly-plugin

jar-with-dependencies

bigdata.dw.appclient.AppMain

make-assembly

package

single

2)模拟生成日志数据

a)bean类对象编写

创建beab包,编写bean程序

b) main函数编写

在AppMain类中加入以下代码:

package bigdata.dw.appclient;

import java.io.UnsupportedEncodingException;

import java.util.Random;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.JSONArray;

import com.alibaba.fastjson.JSONObject;

import bigdata.dw.bean.*;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

/**

* 日志行为数据模拟

*/

public class AppMain {

private final static Logger logger = LoggerFactory.getLogger(AppMain.class);

private static Random rand = new Random();

// 设备id

private static int s_mid = 0;

// 用户id

private static int s_uid = 0;

// 商品id

private static int s_goodsid = 0;

public static void main(String[] args) {

// 参数一:控制发送每条的延时时间,默认是0

Long delay = args.length > 0 ? Long.parseLong(args[0]) : 0L;

// 参数二:循环遍历次数

int loop_len = args.length > 1 ? Integer.parseInt(args[1]) : 1000;

// 生成数据

generateLog(delay, loop_len);

}

private static void generateLog(Long delay, int loop_len) {

for (int i = 0; i < loop_len; i++) {

int flag = rand.nextInt(2);

switch (flag) {

case (0):

//应用启动

AppStart appStart = generateStart();

String jsonString = JSON.toJSONString(appStart);

//控制台打印

logger.info(jsonString);

break;

case (1):

JSONObject json = new JSONObject();

json.put("ap", "app");

json.put("cm", generateComFields());

JSONArray eventsArray = new JSONArray();

// 事件日志

// 商品点击,展示

if (rand.nextBoolean()) {

eventsArray.add(generateDisplay());

json.put("et", eventsArray);

}

// 商品详情页

if (rand.nextBoolean()) {

eventsArray.add(generateNewsDetail());

json.put("et", eventsArray);

}

// 商品列表页

if (rand.nextBoolean()) {

eventsArray.add(generateNewList());

json.put("et", eventsArray);

}

// 广告

if (rand.nextBoolean()) {

eventsArray.add(generateAd());

json.put("et", eventsArray);

}

// 消息通知

if (rand.nextBoolean()) {

eventsArray.add(generateNotification());

json.put("et", eventsArray);

}

// 用户前台活跃

if (rand.nextBoolean()) {

eventsArray.add(generatbeforeground());

json.put("et", eventsArray);

}

// 用户后台活跃

if (rand.nextBoolean()) {

eventsArray.add(generateBackground());

json.put("et", eventsArray);

}

//故障日志

if (rand.nextBoolean()) {

eventsArray.add(generateError());

json.put("et", eventsArray);

}

// 用户评论

if (rand.nextBoolean()) {

eventsArray.add(generateComment());

json.put("et", eventsArray);

}

// 用户收藏

if (rand.nextBoolean()) {

eventsArray.add(generateFavorites());

json.put("et", eventsArray);

}

// 用户点赞

if (rand.nextBoolean()) {

eventsArray.add(generatePraise());

json.put("et", eventsArray);

}

//时间

long millis = System.currentTimeMillis();

//控制台打印

logger.info(millis + "|" + json.toJSONString());

break;

}

// 延迟

try {

Thread.sleep(delay);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

/**

* 公共字段设置

*/

private static JSONObject generateComFields() {

AppBase appBase = new AppBase();

//设备id

appBase.setMid(s_mid + "");

s_mid++;

// 用户id

appBase.setUid(s_uid + "");

s_uid++;

// 程序版本号 5,6等

appBase.setVc("" + rand.nextInt(20));

//程序版本名 v1.1.1

appBase.setVn("1." + rand.nextInt(4) + "." + rand.nextInt(10));

// 安卓系统版本

appBase.setOs("8." + rand.nextInt(3) + "." + rand.nextInt(10));

// 语言 es,en,pt

int flag = rand.nextInt(3);

switch (flag) {

case (0):

appBase.setL("es");

break;

case (1):

appBase.setL("en");

break;

case (2):

appBase.setL("pt");

break;

}

// 渠道号 从哪个渠道来的

appBase.setSr(getRandomChar(1));

// 区域

flag = rand.nextInt(2);

switch (flag) {

case 0:

appBase.setAr("BR");

case 1:

appBase.setAr("MX");

}

// 手机品牌 ba ,手机型号 md,就取2位数字了

flag = rand.nextInt(3);

switch (flag) {

case 0:

appBase.setBa("Sumsung");

appBase.setMd("sumsung-" + rand.nextInt(20));

break;

case 1:

appBase.setBa("Huawei");

appBase.setMd("Huawei-" + rand.nextInt(20));

break;

case 2:

appBase.setBa("HTC");

appBase.setMd("HTC-" + rand.nextInt(20));

break;

}

// 嵌入sdk的版本

appBase.setSv("V2." + rand.nextInt(10) + "." + rand.nextInt(10));

// gmail

appBase.setG(getRandomCharAndNumr(8) + "@gmail.com");

// 屏幕宽高 hw

flag = rand.nextInt(4);

switch (flag) {

case 0:

appBase.setHw("640*960");

break;

case 1:

appBase.setHw("640*1136");

break;

case 2:

appBase.setHw("750*1134");

break;

case 3:

appBase.setHw("1080*1920");

break;

}

// 客户端产生日志时间

long millis = System.currentTimeMillis();

appBase.setT("" + (millis - rand.nextInt(99999999)));

// 手机网络模式 3G,4G,WIFI

flag = rand.nextInt(3);

switch (flag) {

case 0:

appBase.setNw("3G");

break;

case 1:

appBase.setNw("4G");

break;

case 2:

appBase.setNw("WIFI");

break;

}

// 拉丁美洲 西经34°46′至西经117°09;北纬32°42′至南纬53°54′

// 经度

appBase.setLn((-34 - rand.nextInt(83) - rand.nextInt(60) / 10.0) + "");

// 纬度

appBase.setLa((32 - rand.nextInt(85) - rand.nextInt(60) / 10.0) + "");

return (JSONObject) JSON.toJSON(appBase);

}

/**

* 商品展示事件

*/

private static JSONObject generateDisplay() {

AppDisplay appDisplay = new AppDisplay();

boolean boolFlag = rand.nextInt(10) < 7;

// 动作:曝光商品=1,点击商品=2,

if (boolFlag) {

appDisplay.setAction("1");

} else {

appDisplay.setAction("2");

}

// 商品id

String goodsId = s_goodsid + "";

s_goodsid++;

appDisplay.setGoodsid(goodsId);

// 顺序 设置成6条吧

int flag = rand.nextInt(6);

appDisplay.setPlace("" + flag);

// 曝光类型

flag = 1 + rand.nextInt(2);

appDisplay.setExtend1("" + flag);

// 分类

flag = 1 + rand.nextInt(100);

appDisplay.setCategory("" + flag);

JSONObject jsonObject = (JSONObject) JSON.toJSON(appDisplay);

return packEventJson("display", jsonObject);

}

/**

* 商品详情页

*/

private static JSONObject generateNewsDetail() {

AppNewsDetail appNewsDetail = new AppNewsDetail();

// 页面入口来源

int flag = 1 + rand.nextInt(3);

appNewsDetail.setEntry(flag + "");

// 动作

appNewsDetail.setAction("" + (rand.nextInt(4) + 1));

// 商品id

appNewsDetail.setGoodsid(s_goodsid + "");

// 商品来源类型

flag = 1 + rand.nextInt(3);

appNewsDetail.setShowtype(flag + "");

// 商品样式

flag = rand.nextInt(6);

appNewsDetail.setShowtype("" + flag);

// 页面停留时长

flag = rand.nextInt(10) * rand.nextInt(7);

appNewsDetail.setNews_staytime(flag + "");

// 加载时长

flag = rand.nextInt(10) * rand.nextInt(7);

appNewsDetail.setLoading_time(flag + "");

// 加载失败码

flag = rand.nextInt(10);

switch (flag) {

case 1:

appNewsDetail.setType1("102");

break;

case 2:

appNewsDetail.setType1("201");

break;

case 3:

appNewsDetail.setType1("325");

break;

case 4:

appNewsDetail.setType1("433");

break;

case 5:

appNewsDetail.setType1("542");

break;

default:

appNewsDetail.setType1("");

break;

}

// 分类

flag = 1 + rand.nextInt(100);

appNewsDetail.setCategory("" + flag);

JSONObject eventJson = (JSONObject) JSON.toJSON(appNewsDetail);

return packEventJson("newsdetail", eventJson);

}

/**

* 商品列表

*/

private static JSONObject generateNewList() {

AppLoading appLoading = new AppLoading();

// 动作

int flag = rand.nextInt(3) + 1;

appLoading.setAction(flag + "");

// 加载时长

flag = rand.nextInt(10) * rand.nextInt(7);

appLoading.setLoading_time(flag + "");

// 失败码

flag = rand.nextInt(10);

switch (flag) {

case 1:

appLoading.setType1("102");

break;

case 2:

appLoading.setType1("201");

break;

case 3:

appLoading.setType1("325");

break;

case 4:

appLoading.setType1("433");

break;

case 5:

appLoading.setType1("542");

break;

default:

appLoading.setType1("");

break;

}

// 页面 加载类型

flag = 1 + rand.nextInt(2);

appLoading.setLoading_way("" + flag);

// 扩展字段1

appLoading.setExtend1("");

// 扩展字段2

appLoading.setExtend2("");

// 用户加载类型

flag = 1 + rand.nextInt(3);

appLoading.setType("" + flag);

JSONObject jsonObject = (JSONObject) JSON.toJSON(appLoading);

return packEventJson("loading", jsonObject);

}

/**

* 广告相关字段

*/

private static JSONObject generateAd() {

AppAd appAd = new AppAd();

// 入口

int flag = rand.nextInt(3) + 1;

appAd.setEntry(flag + "");

// 动作

flag = rand.nextInt(5) + 1;

appAd.setAction(flag + "");

// 状态

flag = rand.nextInt(10) > 6 ? 2 : 1;

appAd.setContent(flag + "");

// 失败码

flag = rand.nextInt(10);

switch (flag) {

case 1:

appAd.setDetail("102");

break;

case 2:

appAd.setDetail("201");

break;

case 3:

appAd.setDetail("325");

break;

case 4:

appAd.setDetail("433");

break;

case 5:

appAd.setDetail("542");

break;

default:

appAd.setDetail("");

break;

}

// 广告来源

flag = rand.nextInt(4) + 1;

appAd.setSource(flag + "");

// 用户行为

flag = rand.nextInt(2) + 1;

appAd.setBehavior(flag + "");

// 商品类型

flag = rand.nextInt(10);

appAd.setNewstype("" + flag);

// 展示样式

flag = rand.nextInt(6);

appAd.setShow_style("" + flag);

JSONObject jsonObject = (JSONObject) JSON.toJSON(appAd);

return packEventJson("ad", jsonObject);

}

/**

* 启动日志

*/

private static AppStart generateStart() {

AppStart appStart = new AppStart();

//设备id

appStart.setMid(s_mid + "");

s_mid++;

// 用户id

appStart.setUid(s_uid + "");

s_uid++;

// 程序版本号 5,6等

appStart.setVc("" + rand.nextInt(20));

//程序版本名 v1.1.1

appStart.setVn("1." + rand.nextInt(4) + "." + rand.nextInt(10));

// 安卓系统版本

appStart.setOs("8." + rand.nextInt(3) + "." + rand.nextInt(10));

//设置日志类型

appStart.setEn("start");

// 语言 es,en,pt

int flag = rand.nextInt(3);

switch (flag) {

case (0):

appStart.setL("es");

break;

case (1):

appStart.setL("en");

break;

case (2):

appStart.setL("pt");

break;

}

// 渠道号 从哪个渠道来的

appStart.setSr(getRandomChar(1));

// 区域

flag = rand.nextInt(2);

switch (flag) {

case 0:

appStart.setAr("BR");

case 1:

appStart.setAr("MX");

}

// 手机品牌 ba ,手机型号 md,就取2位数字了

flag = rand.nextInt(3);

switch (flag) {

case 0:

appStart.setBa("Sumsung");

appStart.setMd("sumsung-" + rand.nextInt(20));

break;

case 1:

appStart.setBa("Huawei");

appStart.setMd("Huawei-" + rand.nextInt(20));

break;

case 2:

appStart.setBa("HTC");

appStart.setMd("HTC-" + rand.nextInt(20));

break;

}

// 嵌入sdk的版本

appStart.setSv("V2." + rand.nextInt(10) + "." + rand.nextInt(10));

// gmail

appStart.setG(getRandomCharAndNumr(8) + "@gmail.com");

// 屏幕宽高 hw

flag = rand.nextInt(4);

switch (flag) {

case 0:

appStart.setHw("640*960");

break;

case 1:

appStart.setHw("640*1136");

break;

case 2:

appStart.setHw("750*1134");

break;

case 3:

appStart.setHw("1080*1920");

break;

}

// 客户端产生日志时间

long millis = System.currentTimeMillis();

appStart.setT("" + (millis - rand.nextInt(99999999)));

// 手机网络模式 3G,4G,WIFI

flag = rand.nextInt(3);

switch (flag) {

case 0:

appStart.setNw("3G");

break;

case 1:

appStart.setNw("4G");

break;

case 2:

appStart.setNw("WIFI");

break;

}

// 拉丁美洲 西经34°46′至西经117°09;北纬32°42′至南纬53°54′

// 经度

appStart.setLn((-34 - rand.nextInt(83) - rand.nextInt(60) / 10.0) + "");

// 纬度

appStart.setLa((32 - rand.nextInt(85) - rand.nextInt(60) / 10.0) + "");

// 入口

flag = rand.nextInt(5) + 1;

appStart.setEntry(flag + "");

// 开屏广告类型

flag = rand.nextInt(2) + 1;

appStart.setOpen_ad_type(flag + "");

// 状态

flag = rand.nextInt(10) > 8 ? 2 : 1;

appStart.setAction(flag + "");

// 加载时长

appStart.setLoading_time(rand.nextInt(20) + "");

// 失败码

flag = rand.nextInt(10);

switch (flag) {

case 1:

appStart.setDetail("102");

break;

case 2:

appStart.setDetail("201");

break;

case 3:

appStart.setDetail("325");

break;

case 4:

appStart.setDetail("433");

break;

case 5:

appStart.setDetail("542");

break;

default:

appStart.setDetail("");

break;

}

// 扩展字段

appStart.setExtend1("");

return appStart;

}

/**

* 消息通知

*/

private static JSONObject generateNotification() {

AppNotification appNotification = new AppNotification();

int flag = rand.nextInt(4) + 1;

// 动作

appNotification.setAction(flag + "");

// 通知id

flag = rand.nextInt(4) + 1;

appNotification.setType(flag + "");

// 客户端弹时间

appNotification.setAp_time((System.currentTimeMillis() - rand.nextInt(99999999)) + "");

// 备用字段

appNotification.setContent("");

JSONObject jsonObject = (JSONObject) JSON.toJSON(appNotification);

return packEventJson("notification", jsonObject);

}

/**

* 前台活跃

*/

private static JSONObject generatbeforeground() {

AppActive_foreground appActive_foreground = new AppActive_foreground();

// 推送消息的id

int flag = rand.nextInt(2);

switch (flag) {

case 1:

appActive_foreground.setAccess(flag + "");

break;

default:

appActive_foreground.setAccess("");

break;

}

// 1.push 2.icon 3.其他

flag = rand.nextInt(3) + 1;

appActive_foreground.setPush_id(flag + "");

JSONObject jsonObject = (JSONObject) JSON.toJSON(appActive_foreground);

return packEventJson("active_foreground", jsonObject);

}

/**

* 后台活跃

*/

private static JSONObject generateBackground() {

AppActive_background appActive_background = new AppActive_background();

// 启动源

int flag = rand.nextInt(3) + 1;

appActive_background.setActive_source(flag + "");

JSONObject jsonObject = (JSONObject) JSON.toJSON(appActive_background);

return packEventJson("active_background", jsonObject);

}

/**

* 错误日志数据

*/

private static JSONObject generateError() {

AppErrorLog appErrorLog = new AppErrorLog();

String[] errorBriefs = {"at cn.lift.dfdf.web.AbstractBaseController.validInbound(AbstractBaseController.java:72)", "at cn.lift.appIn.control.CommandUtil.getInfo(CommandUtil.java:67)"}; //错误摘要

String[] errorDetails = {"java.lang.NullPointerException\\n " + "at cn.lift.appIn.web.AbstractBaseController.validInbound(AbstractBaseController.java:72)\\n " + "at cn.lift.dfdf.web.AbstractBaseController.validInbound", "at cn.lift.dfdfdf.control.CommandUtil.getInfo(CommandUtil.java:67)\\n " + "at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)\\n" + " at java.lang.reflect.Method.invoke(Method.java:606)\\n"}; //错误详情

//错误摘要

appErrorLog.setErrorBrief(errorBriefs[rand.nextInt(errorBriefs.length)]);

//错误详情

appErrorLog.setErrorDetail(errorDetails[rand.nextInt(errorDetails.length)]);

JSONObject jsonObject = (JSONObject) JSON.toJSON(appErrorLog);

return packEventJson("error", jsonObject);

}

/**

* 为各个事件类型的公共字段(时间、事件类型、Json数据)拼接

*/

private static JSONObject packEventJson(String eventName, JSONObject jsonObject) {

JSONObject eventJson = new JSONObject();

eventJson.put("ett", (System.currentTimeMillis() - rand.nextInt(99999999)) + "");

eventJson.put("en", eventName);

eventJson.put("kv", jsonObject);

return eventJson;

}

/**

* 获取随机字母组合

*

* @param length 字符串长度

*/

private static String getRandomChar(Integer length) {

StringBuilder str = new StringBuilder();

Random random = new Random();

for (int i = 0; i < length; i++) {

// 字符串

str.append((char) (65 + random.nextInt(26)));// 取得大写字母

}

return str.toString();

}

/**

* 获取随机字母数字组合

*

* @param length 字符串长度

*/

private static String getRandomCharAndNumr(Integer length) {

StringBuilder str = new StringBuilder();

Random random = new Random();

for (int i = 0; i < length; i++) {

boolean b = random.nextBoolean();

if (b) { // 字符串

// int choice = random.nextBoolean() ? 65 : 97; 取得65大写字母还是97小写字母

str.append((char) (65 + random.nextInt(26)));// 取得大写字母

} else { // 数字

str.append(String.valueOf(random.nextInt(10)));

}

}

return str.toString();

}

/**

* 收藏

*/

private static JSONObject generateFavorites() {

AppFavorites favorites = new AppFavorites();

favorites.setCourse_id(rand.nextInt(10));

favorites.setUserid(rand.nextInt(10));

favorites.setAdd_time((System.currentTimeMillis() - rand.nextInt(99999999)) + "");

JSONObject jsonObject = (JSONObject) JSON.toJSON(favorites);

return packEventJson("favorites", jsonObject);

}

/**

* 点赞

*/

private static JSONObject generatePraise() {

AppPraise praise = new AppPraise();

praise.setId(rand.nextInt(10));

praise.setUserid(rand.nextInt(10));

praise.setTarget_id(rand.nextInt(10));

praise.setType(rand.nextInt(4) + 1);

praise.setAdd_time((System.currentTimeMillis() - rand.nextInt(99999999)) + "");

JSONObject jsonObject = (JSONObject) JSON.toJSON(praise);

return packEventJson("praise", jsonObject);

}

/**

* 评论

*/

private static JSONObject generateComment() {

AppComment comment = new AppComment();

comment.setComment_id(rand.nextInt(10));

comment.setUserid(rand.nextInt(10));

comment.setP_comment_id(rand.nextInt(5));

comment.setContent(getCONTENT());

comment.setAddtime((System.currentTimeMillis() - rand.nextInt(99999999)) + "");

comment.setOther_id(rand.nextInt(10));

comment.setPraise_count(rand.nextInt(1000));

comment.setReply_count(rand.nextInt(200));

JSONObject jsonObject = (JSONObject) JSON.toJSON(comment);

return packEventJson("comment", jsonObject);

}

/**

* 生成单个汉字

*/

private static char getRandomChar() {

String str = "";

int hightPos; //

int lowPos;

Random random = new Random();

//随机生成汉子的两个字节

hightPos = (176 + Math.abs(random.nextInt(39)));

lowPos = (161 + Math.abs(random.nextInt(93)));

byte[] b = new byte[2];

b[0] = (Integer.valueOf(hightPos)).byteValue();

b[1] = (Integer.valueOf(lowPos)).byteValue();

try {

str = new String(b, "GBK");

} catch (UnsupportedEncodingException e) {

e.printStackTrace();

System.out.println("错误");

}

return str.charAt(0);

}

/**

* 拼接成多个汉字

*/

private static String getCONTENT() {

StringBuilder str = new StringBuilder();

for (int i = 0; i < rand.nextInt(100); i++) {

str.append(getRandomChar());

}

return str.toString();

}

}

4.数据采集模块

A. 服务器准备

1)下载VMware及centos镜像,安装centos虚拟机

2)配置虚拟机

a.cd /etc/sysconfig/network-scripts

sudo vi ifcfg-eth0

sudo chkconfig iptables off

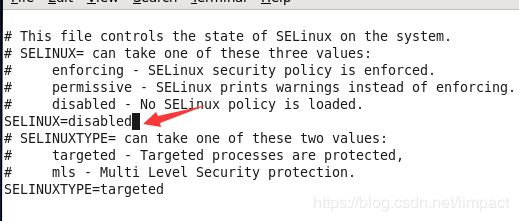

c.关闭linux安全加载机制

cd /etc/selinux/

sudo vi config

sudo vi /etc/hosts

cd /etc/udev/rules.d/

sudo rm -f 70-persistent-net.rules

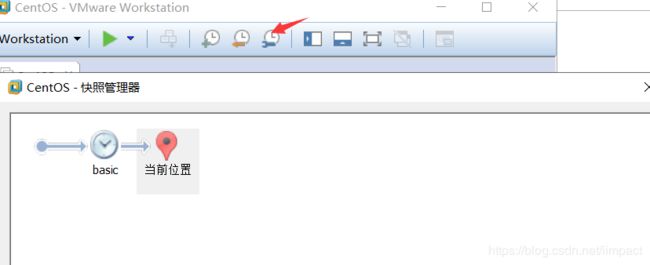

f.关闭虚拟机

拍摄快照

克隆3台basic虚拟机,

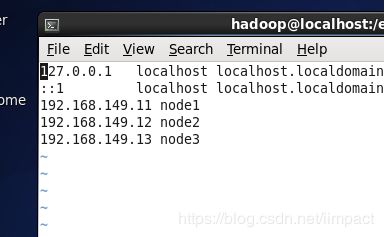

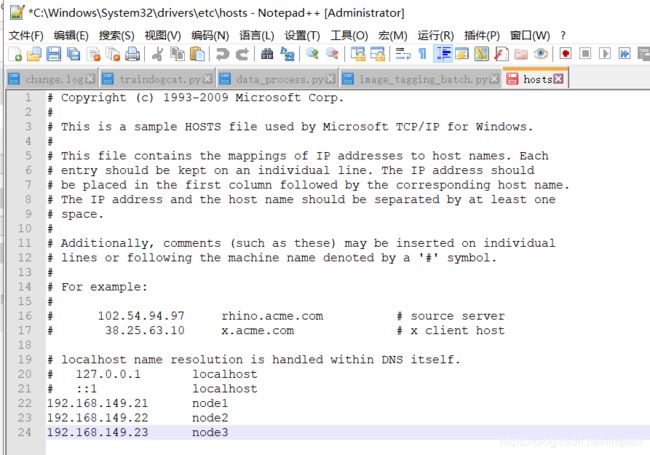

g.配置克隆出的3台虚拟机的ip地址和主机名

sudo vi /etc/sysconfig/network-scripts/ifcfg-eth0

sudo vi /etc/sysconfig/network

配置windows本机的hosts文件:

h.禁用图形界面节省资源

sudo vi /etc/inittab

i. 关机,拍摄快照副本。

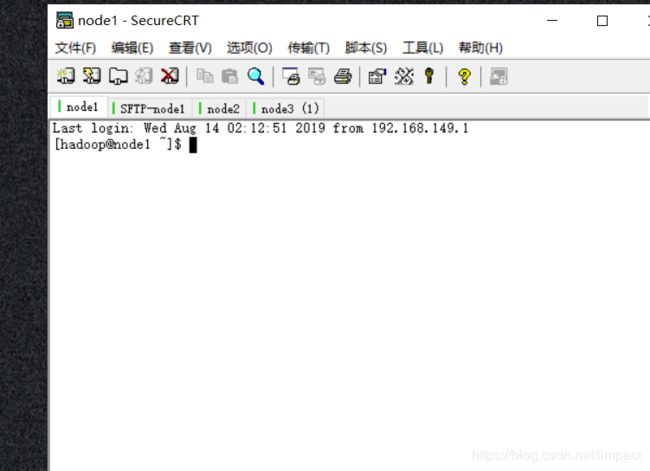

j.安装securecrt,连接3台虚拟机

B. Hadoop安装(配置8个文件)

1)在node1 的/opt目录下创建module、software文件夹

cd /opt

sudo mkdir module

sudo mkdir software

在node2,node3/opt目录下创建module文件夹

修改module、software文件夹的所有者

sudo chown hadoop:hadoop module/ software/

2)安装java

a.上传jdk压缩包和hadoop jar包,hadoop lzo jar包

b.安装java

卸载现有JDK

(1)查询是否安装Java软件:

rpm -qa | grep java

(2)如果安装的版本低于1.7,卸载该JDK:

sudo rpm -e 软件包

安装jdk

tar -zxvf jdk-8u144-linux-x64.tar.gz -C /opt/module/

配置jdk环境变量:

sudo vi /etc/profile加入以下内容:

#JAVA_HOME

export JAVA_HOME=/opt/module/jdk1.8.0_144

export PATH= P A T H : PATH: PATH:JAVA_HOME/bin

c. 安装hadoop

tar -zxvf hadoop-2.7.2.tar.gz -C /opt/module/

配置hadoop 环境变量

sudo vi /etc/profile

加入以下内容:

#HADOOP_HOME

export HADOOP_HOME=/opt/module/hadoop-2.7.2

export PATH= P A T H : PATH: PATH:HADOOP_HOME/bin

export PATH= P A T H : PATH: PATH:HADOOP_HOME/sbin

d. 编写集群分发脚本:

在家目录下创建bin文件夹

mkdir bin

编写xsync脚本:

vim xsnc;

加入以下内容:

#!/bin/bash

#1 获取输入参数个数,如果没有参数,直接退出

pcount=$#

if((pcount==0)); then

echo no args;

exit;

fi

#2 获取文件名称

p1=$1

fname=basename $p1

echo fname=$fname

#3 获取上级目录到绝对路径

pdir=cd -P $(dirname $p1); pwd

echo pdir=$pdir

#4 获取当前用户名称

user=whoami

#5 循环

for((host=2; host<4; host++)); do

echo ------------------- node$host --------------

rsync -rvl p d i r / pdir/ pdir/fname u s e r @ n o d e user@node user@nodehost:$pdir

done

赋予权限:

chmod 777 xsync

3)配置集群:

a.配置core-site.xml:

vim core-site.xml,加入以下内容:

fs.defaultFS

hdfs://node1:9000

hadoop.tmp.dir

/opt/module/hadoop-2.7.2/data/tmp

b.hdfs-site.xml

配置hadoop-env.sh

export JAVA_HOME=/opt/module/jdk1.8.0_144

配置hdfs-site.xml

dfs.replication

1

dfs.namenode.secondary.http-address

node3:50090

c.YARN配置文件

配置yarn-env.sh

export JAVA_HOME=/opt/module/jdk1.8.0_144

配置yarn-site.xml

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.resourcemanager.hostname

node2

d. MapReduce配置文件

配置mapred-env.sh

export JAVA_HOME=/opt/module/jdk1.8.0_144

配置mapred-site.xml

cp mapred-site.xml.template mapred-site.xml

e.配置slaves

4)分发:

a.配置ssh

node1 上:

cd .ssh

ssh-keygen -t rsa 三次回车

ssh-copy-id node1

ssh-copy-id node2

ssh-copy-id node3

node2(yarn)上:

cd .ssh

ssh-keygen -t rsa 三次回车

ssh-copy-id node1

ssh-copy-id node2

ssh-copy-id node3

b.分发脚本

node1上执行:

xsync jdk1.8.0_144/

xsync hadoop-2.7.2/

sudo scp /etc/profile root@node3:/etc/profile

sudo scp /etc/profile root@node2:/etc/profile

5)启动集群

node1 上执行:

格式化:bin/hdfs namenode format

启动hdfs: sbin/start-dfs.sh

node2上启动yarn: sbin/start-yarn.sh

6)hdfs调优:

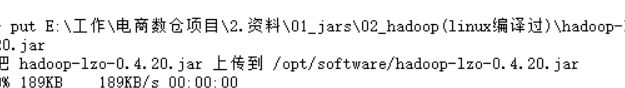

a.支持LZO压缩配置

将之前上传的hadoop-lzo-0.4.20.jar 放入hadoop-2.7.2/share/hadoop/common/

cp hadoop-lzo-0.4.20.jar /opt/module/hadoop-2.7.2/share/hadoop/common/

分发到其他节点:

xsync hadoop-lzo-0.4.20.jar

配置core-site.xml,加入以下内容:

io.compression.codecs

org.apache.hadoop.io.compress.GzipCodec,

org.apache.hadoop.io.compress.DefaultCodec,

org.apache.hadoop.io.compress.BZip2Codec,

org.apache.hadoop.io.compress.SnappyCodec,

com.hadoop.compression.lzo.LzoCodec,

com.hadoop.compression.lzo.LzopCodec

io.compression.codec.lzo.class

com.hadoop.compression.lzo.LzoCodec

分发core-site.xml:

xsync core-site.xml

C. Zookeeper安装

1)上传zookeeper压缩包

sftp> put E:\工作\电商数仓项目\2.资料\01_jars\03_zookeeper\zookeeper-3.4.10.tar.gz

2)解压安装zookeeper:

tar -zxvf zookeeper-3.4.10.tar.gz -C /opt/module/

分发:

xsync zookeeper-3.4.10/

3)配置服务器编号

a.在/opt/module/zookeeper-3.4.10/这个目录下创建zkData

mkdir zkData

b. 在/opt/module/zookeeper-3.4.10/zkData目录下创建一个myid的文件

touch myid

c.编辑myid文件,在文件中添加与server对应的编号(1):

vi myid

d.分发 :

xsync myid

将node2、node3上修改myid文件中内容为2、3

4)配置zoo.cfg文件

a.重命名/opt/module/zookeeper-3.4.10/conf这个目录下的zoo_sample.cfg为zoo.cfg

mv zoo_sample.cfg zoo.cfg

b.打开zoo.cfg文件

vim zoo.cfg

修改数据存储路径配置

dataDir=/opt/module/zookeeper-3.4.10/zkData

增加如下配置

#######################cluster##########################

server.1=node1:2888:3888

server.2=node2:2888:3888

server.3=node3:2888:3888

分发:

xsync zoo.cfg

5)编写zookeeper启动停止脚本:

在node1的/home/hadoop/bin目录下创建脚本:

vim zk.sh

加入以下代码:

#! /bin/bash

case $1 in

"start"){

for i in node1 node2 node3

do

ssh $i "/opt/module/zookeeper-3.4.10/bin/zkServer.sh start"

done

};;

"stop"){

for i in node1 node2 node3

do

ssh $i "/opt/module/zookeeper-3.4.10/bin/zkServer.sh stop"

done

};;

"status"){

for i in node1 node2 node3

do

ssh $i "/opt/module/zookeeper-3.4.10/bin/zkServer.sh status"

done

};;

esac

b.增加脚本执行权限

chmod 777 zk.sh

c.配置linux环境变量:

把/etc/profile里面的环境变量追加到~/.bashrc目录

cat /etc/profile >> ~/.bashrc

cat /etc/profile >> ~/.bashrc

cat /etc/profile >> ~/.bashrc

d.启动停止集群:

zk.sh stop

zk.sh start

zk.sh status

D.日志生成

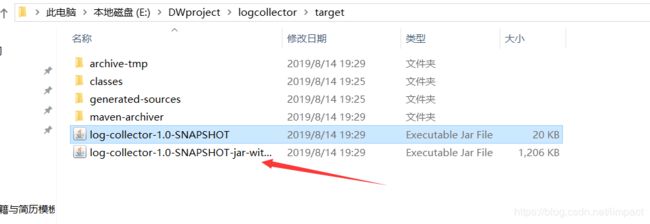

1)将之前生成的jar包log-collector-0.0.1-SNAPSHOT-jar-with-dependencies.jar拷贝到node1 /opt/modul上,

put E:\DWproject\logcollector\target\log-collector-1.0-SNAPSHOT-jar-with-dependencies.jar

分发:

xsync log-collector-1.0-SNAPSHOT-jar-with-dependencies.jar

删除3号节点上的jar包(节省资源)

node3 :rm -rf log-collector-1.0-SNAPSHOT-jar-with-dependencies.jar

2)在node2上执行jar程序:

java -classpath log-collector-1.0-SNAPSHOT-jar-with-dependencies.jar bigdata.dw.appclient.AppMain >/opt/module/test.log

3)在/tmp/logs路径下查看生成的日志文件

cd /tmp/logs/

ls

3)集群日志生成启动脚本:

a.在bin目录下创建脚本:

vim lg.sh

添加如下内容:

#! /bin/bash

for i in hadoop102 hadoop103

do

ssh $i "java -classpath /opt/module/log-collector-1.0-SNAPSHOT-jar-with-dependencies.jar bigdata.dw.appclient.AppMain $1 $2 >/opt/module/test.log &"

done

~

b.修改权限:

chmod 777 lg.sh

c.启动脚本

lg.sh

4)集群时间同步修改脚本

a.在bin目录下创建脚本dt.sh

vim dt .sh

在脚本中编写如下内容:

#!/bin/bash

log_date=$1

for i in node1 node2 node3

do

ssh -t $i “sudo date -s $log_date”

done

chmod 777dt.sh

执行脚本:

dt.sh 2019-3-10

5)集群所有进程查看脚本

vim xcall.sh

在脚本中编写如下内容:

#! /bin/bash

for i in node1 node2 node3

do

echo --------- $i ----------

ssh i " i " i"*"

done

chmod 777 xcall.sh

执行脚本: xcall.sh jps

E.采集日志

1)Flume安装:

a.上传安装包

put E:\工作\电商数仓项目\2.资料\01_jars\04_flume\apache-flume-1.7.0-bin.tar.gz

b.解压apache-flume-1.7.0-bin.tar.gz到/opt/module/目录下

tar -zxvf apache-flume-1.7.0-bin.tar.gz -C /opt/module/

c.修改apache-flume-1.7.0-bin的名称为flume

mv apache-flume-1.7.0-bin flume

d.将flume/conf下的flume-env.sh.template文件修改为flume-env.sh,并配置flume-env.sh文件

mv flume-env.sh.template flume-env.sh

vi flume-env.sh

2)Flume具体配置:

a.在/opt/module/flume/conf目录下创建file-flume-kafka.conf文件

vim file-flume-kafka.conf

加入以下内容:

a1.sources=r1

a1.channels=c1 c2

# configure source

a1.sources.r1.type = TAILDIR

a1.sources.r1.positionFile = /opt/module/flume/test/log_position.json

a1.sources.r1.filegroups = f1

a1.sources.r1.filegroups.f1 = /tmp/logs/app.+

a1.sources.r1.fileHeader = true

a1.sources.r1.channels = c1 c2

#interceptor

a1.sources.r1.interceptors = i1 i2

a1.sources.r1.interceptors.i1.type = bigdata.dw.flume.interceptor.LogETLInterceptor$Builder

a1.sources.r1.interceptors.i2.type = bigdata.dw.flume.interceptor.LogTypeInterceptor$Builder

a1.sources.r1.selector.type = multiplexing

a1.sources.r1.selector.header = topic

a1.sources.r1.selector.mapping.topic_start = c1

a1.sources.r1.selector.mapping.topic_event = c2

# configure channel

a1.channels.c1.type = org.apache.flume.channel.kafka.KafkaChannel

a1.channels.c1.kafka.bootstrap.servers = node1:9092,node2:9092,node3:9092

a1.channels.c1.kafka.topic = topic_start

a1.channels.c1.parseAsFlumeEvent = false

a1.channels.c1.kafka.consumer.group.id = flume-consumer

a1.channels.c2.type = org.apache.flume.channel.kafka.KafkaChannel

a1.channels.c2.kafka.bootstrap.servers = node1:9092,node2:9092,node3:9092

a1.channels.c2.kafka.topic = topic_event

a1.channels.c2.parseAsFlumeEvent = false

a1.channels.c2.kafka.consumer.group.id = flume-consumer

3)Flume的ETL和分类型拦截器

本项目中自定义了两个拦截器,分别是:ETL拦截器、日志类型区分拦截器。

ETL拦截器主要用于,过滤时间戳不合法和Json数据不完整的日志

日志类型区分拦截器主要用于,将启动日志和事件日志区分开来,方便发往Kafka的不同Topic。

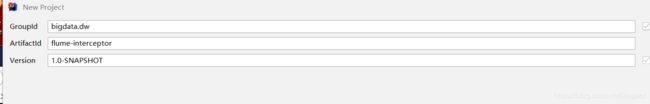

a.创建Maven工程flume-interceptor

b.创建包名:bigdata.dw.flume.interceptor

c.在pom.xml文件中添加如下配置

org.apache.flume

flume-ng-core

1.7.0

maven-compiler-plugin

2.3.2

1.8

1.8

maven-assembly-plugin

jar-with-dependencies

make-assembly

package

single

d.在bigdata.dw.flume.interceptor包下创建LogETLInterceptor类名

Flume ETL拦截器LogETLInterceptor

写入代码:

package com.atguigu.flume.interceptor;

import org.apache.flume.Context;

import org.apache.flume.Event;

import org.apache.flume.interceptor.Interceptor;

import java.nio.charset.Charset;

import java.util.ArrayList;

import java.util.List;

public class LogETLInterceptor implements Interceptor {

@Override

public void initialize() {

}

@Override

public Event intercept(Event event) {

// 1 获取数据

byte[] body = event.getBody();

String log = new String(body, Charset.forName("UTF-8"));

// 2 判断数据类型并向Header中赋值

if (log.contains("start")) {

if (LogUtils.validateStart(log)){

return event;

}

}else {

if (LogUtils.validateEvent(log)){

return event;

}

}

// 3 返回校验结果

return null;

}

@Override

public List intercept(List events) {

ArrayList interceptors = new ArrayList<>();

for (Event event : events) {

Event intercept1 = intercept(event);

if (intercept1 != null){

interceptors.add(intercept1);

}

}

return interceptors;

}

@Override

public void close() {

}

public static class Builder implements Interceptor.Builder{

@Override

public Interceptor build() {

return new LogETLInterceptor();

}

@Override

public void configure(Context context) {

}

}

}

e.创建Flume日志过滤工具类,加入代码:

package com.atguigu.flume.interceptor;

import org.apache.commons.lang.math.NumberUtils;

public class LogUtils {

public static boolean validateEvent(String log) {

// 服务器时间 | json

// 1549696569054 | {"cm":{"ln":"-89.2","sv":"V2.0.4","os":"8.2.0","g":"[email protected]","nw":"4G","l":"en","vc":"18","hw":"1080*1920","ar":"MX","uid":"u8678","t":"1549679122062","la":"-27.4","md":"sumsung-12","vn":"1.1.3","ba":"Sumsung","sr":"Y"},"ap":"weather","et":[]}

// 1 切割

String[] logContents = log.split("\\|");

// 2 校验

if(logContents.length != 2){

return false;

}

//3 校验服务器时间

if (logContents[0].length()!=13 || !NumberUtils.isDigits(logContents[0])){

return false;

}

// 4 校验json

if (!logContents[1].trim().startsWith("{") || !logContents[1].trim().endsWith("}")){

return false;

}

return true;

}

public static boolean validateStart(String log) {

if (log == null){

return false;

}

// 校验json

if (!log.trim().startsWith("{") || !log.trim().endsWith("}")){

return false;

}

return true;

}

}

f.创建Flume日志类型区分拦截器LogTypeInterceptor

package com.atguigu.flume.interceptor;

import org.apache.flume.Context;

import org.apache.flume.Event;

import org.apache.flume.interceptor.Interceptor;

import java.nio.charset.Charset;

import java.util.ArrayList;

import java.util.List;

import java.util.Map;

public class LogTypeInterceptor implements Interceptor {

@Override

public void initialize() {

}

@Override

public Event intercept(Event event) {

// 区分日志类型: body header

// 1 获取body数据

byte[] body = event.getBody();

String log = new String(body, Charset.forName("UTF-8"));

// 2 获取header

Map headers = event.getHeaders();

// 3 判断数据类型并向Header中赋值

if (log.contains("start")) {

headers.put("topic","topic_start");

}else {

headers.put("topic","topic_event");

}

return event;

}

@Override

public List intercept(List events) {

ArrayList interceptors = new ArrayList<>();

for (Event event : events) {

Event intercept1 = intercept(event);

interceptors.add(intercept1);

}

return interceptors;

}

@Override

public void close() {

}

public static class Builder implements Interceptor.Builder{

@Override

public Interceptor build() {

return new LogTypeInterceptor();

}

@Override

public void configure(Context context) {

}

}

}

g.打包:

4)选上面一个jar包放入到node1的/opt/module/flume/lib文件夹下面。

put E:\DWproject\flumeinterceptor\target\flume-interceptor-1.0-SNAPSHOT.jar

5)分发:

xsync flume/

6)日志采集Flume启动停止脚本

bin目录下创建脚本f1.sh

vim f1.sh

在脚本中填写如下内容

#! /bin/bash

case $1 in

"start"){

for i in node1 node2

do

echo " --------启动 $i 采集flume-------"

ssh $i "nohup /opt/module/flume/bin/flume-ng agent --conf-file /opt/module/flume/conf/file-flume-kafka.conf --name a1 -Dflume.root.logger=INFO,LOGFILE >/dev/null 2>&1 &"

done

};;

"stop"){

for i in node1 node2

do

echo " --------停止 $i 采集flume-------"

ssh $i "ps -ef | grep file-flume-kafka | grep -v grep |awk '{print \$2}' | xargs kill"

done

};;

esac

开始采集:

fl.sh start

停止采集:

fl.sh stop

F.Kafka安装:

1) Kafka集群安装

a.上传安装包:

put E:\工作\电商数仓项目\2.资料\01_jars\05_kafka\kafka_2.11-0.11.0.2.tgz

上传manger压缩包

put E:\工作\电商数仓项目\2.资料\01_jars\05_kafka\kafka-manager-1.3.3.22.zip

b.解压安装kafka:

tar -zxvf kafka_2.11-0.11.0.0.tgz -C /opt/module/

修改解压后的文件名称:

mv kafka_2.11-0.11.0.2/ kafka

创建日志目录

mkdir logs

2)进入配置目录

cd kafka/config/

配置server.properties中以下内容:

#broker的全局唯一编号,不能重复

broker.id=0

#删除topic功能使能

delete.topic.enable=true

#kafka运行日志存放的路径

log.dirs=/opt/module/kafka/logs

#配置连接Zookeeper集群地址

zookeeper.connect=node1:2181,node2:2181,node3:2181

3)配置环境变量:

sudo vi /etc/profile

#KAFKA_HOME

export KAFKA_HOME=/opt/module/kafka

export PATH= P A T H : PATH: PATH:KAFKA_HOME/bin

source /etc/profile

4)分发:

xsync kafka/

分发之后记得配置其他机器的环境变量

scp /etc/profile root@node2:/etc/profile

scp /etc/profile root@node3:/etc/profile

5)分别在node1和node2上修改配置文件/opt/module/kafka/config/server.properties中的broker.id=1、broker.id=2

6)Kafka集群启动停止脚本

bin目录下创建脚本kf.sh

vim kf.sh

#! /bin/bash

case $1 in

"start"){

for i in hadoop102 hadoop103 hadoop104

do

echo " --------启动 $i Kafka-------"

# 用于KafkaManager监控

ssh $i "export JMX_PORT=9988 && /opt/module/kafka/bin/kafka-server-start.sh -daemon /opt/module/kafka/config/server.properties "

done

};;

"stop"){

for i in hadoop102 hadoop103 hadoop104

do

echo " --------停止 $i Kafka-------"

ssh $i "/opt/module/kafka/bin/kafka-server-stop.sh stop"

done

};;

esac

chmod 777 kf.sh

b.启动停止脚本:

kf.sh start

kf.sh stop

停止脚本有问题:参考kafka停止脚本不能用

7)查看Kafka Topic列表

bin/kafka-topics.sh --zookeeper node1:2181 --list

8)Kafka消费消息

bin/kafka-console-consumer.sh

–zookeeper node1:2181 --from-beginning --topic topic_start

9)生产消息

bin/kafka-console-producer.sh

–broker-list node1:9092 --topic topic_start

G.Kafka Manager安装

1)Kafka Manager是yahoo的一个Kafka监控管理项目。

a.解压kafka-manager-1.3.3.22.zip到/opt/module目录

mv kafka-manager-1.3.3.22.zip /opt/module/

unzip kafka-manager-1.3.3.22.zip

b.进入到/opt/module/kafka-manager-1.3.3.22/conf目录,在application.conf文件中修改kafka-manager.zkhosts

vim application.conf

kafka-manager.zkhosts=“node1:2181,node2:2181,node3:2181”

c.启动KafkaManager

nohup bin/kafka-manager -Dhttp.port=7456 >/opt/module/kafka-manager-1.3.3.22/start.log 2>&1 &

d.KafkaManager使用

https://blog.csdn.net/u011089412/article/details/87895652

2)Kafka Manager启动停止脚本

bin目录下

vim km.sh

#! /bin/bash

case $1 in

"start"){

echo " -------- 启动 KafkaManager -------"

nohup /opt/module/kafka-manager-1.3.3.22/bin/kafka-manager -Dhttp.port=7456 >start.log 2>&1 &

};;

"stop"){

echo " -------- 停止 KafkaManager -------"

jps | grep ProdServerStart |awk '{print $1}' | xargs kill

};;

esac

chmod 777 km.sh

启动与停止脚本:

km.sh start

km.sh stop

H.消费Kafka数据Flume

(1)在node3的/opt/module/flume/conf目录下创建kafka-flume-hdfs.conf文件

vim kafka-flume-hdfs.conf

a1.sources=r1 r2

a1.channels=c1 c2

a1.sinks=k1 k2

## source1

a1.sources.r1.type = org.apache.flume.source.kafka.KafkaSource

a1.sources.r1.batchSize = 5000

a1.sources.r1.batchDurationMillis = 2000

a1.sources.r1.kafka.bootstrap.servers = node1:9092,node2:9092,node3:9092

a1.sources.r1.kafka.topics=topic_start

## source2

a1.sources.r2.type = org.apache.flume.source.kafka.KafkaSource

a1.sources.r2.batchSize = 5000

a1.sources.r2.batchDurationMillis = 2000

a1.sources.r2.kafka.bootstrap.servers = node1:9092,node2:9092,node3:9092

a1.sources.r2.kafka.topics=topic_event

## channel1

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /opt/module/flume/checkpoint/behavior1

a1.channels.c1.dataDirs = /opt/module/flume/data/behavior1/

a1.channels.c1.maxFileSize = 2146435071

a1.channels.c1.capacity = 1000000

a1.channels.c1.keep-alive = 6

## channel2

a1.channels.c2.type = file

a1.channels.c2.checkpointDir = /opt/module/flume/checkpoint/behavior2

a1.channels.c2.dataDirs = /opt/module/flume/data/behavior2/

a1.channels.c2.maxFileSize = 2146435071

a1.channels.c2.capacity = 1000000

a1.channels.c2.keep-alive = 6

## sink1

a1.sinks.k1.type = hdfs

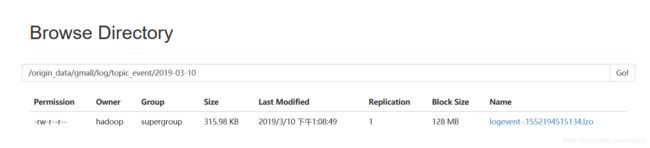

a1.sinks.k1.hdfs.path = /origin_data/gmall/log/topic_start/%Y-%m-%d

a1.sinks.k1.hdfs.filePrefix = logstart-

a1.sinks.k1.hdfs.round = true

a1.sinks.k1.hdfs.roundValue = 10

a1.sinks.k1.hdfs.roundUnit = second

##sink2

a1.sinks.k2.type = hdfs

a1.sinks.k2.hdfs.path = /origin_data/gmall/log/topic_event/%Y-%m-%d

a1.sinks.k2.hdfs.filePrefix = logevent-

a1.sinks.k2.hdfs.round = true

a1.sinks.k2.hdfs.roundValue = 10

a1.sinks.k2.hdfs.roundUnit = second

## 虏禄要虏煤小脦录镁

a1.sinks.k1.hdfs.rollInterval = 10

a1.sinks.k1.hdfs.rollSize = 134217728

a1.sinks.k1.hdfs.rollCount = 0

a1.sinks.k2.hdfs.rollInterval = 10

a1.sinks.k2.hdfs.rollSize = 134217728

a1.sinks.k2.hdfs.rollCount = 0

## 驴脴脝盲脦录镁脢原脡脦录镁隆拢

a1.sinks.k1.hdfs.fileType = CompressedStream

a1.sinks.k2.hdfs.fileType = CompressedStream

a1.sinks.k1.hdfs.codeC = lzop

a1.sinks.k2.hdfs.codeC = lzop

## 拼装

a1.sources.r1.channels = c1

a1.sinks.k1.channel= c1

a1.sources.r2.channels = c2

a1.sinks.k2.channel= c2

2)日志消费Flume启动停止脚本

node1 家用户bin目录下创建脚本f2.sh

vim f2.sh

#! /bin/bash

case $1 in

"start"){

for i in node3

do

echo " --------启动 $i 消费flume-------"

ssh $i "nohup /opt/module/flume/bin/flume-ng agent --conf-file /opt/module/flume/conf/kafka-flume-hdfs.conf --name a1 -Dflume.root.logger=INFO,LOGFILE >/opt/module/flume/log.txt 2>&1 &"

done

};;

"stop"){

for i in node3

do

echo " --------停止 $i 消费flume-------"

ssh $i "ps -ef | grep kafka-flume-hdfs | grep -v grep |awk '{print \$2}' | xargs kill"

done

};;

esac

chmod 777 f2.sh

启动与停止消费脚本

f2.sh start

f2.sh stop

I.采集通道启动/停止脚本

bin目录下创建脚本cluster.sh

在脚本中填写如下内容

#! /bin/bash

case $1 in

"start"){

echo " -------- 启动 集群 -------"

echo " -------- 启动 hadoop集群 -------"

/opt/module/hadoop-2.7.2/sbin/start-dfs.sh

ssh hadoop103 "/opt/module/hadoop-2.7.2/sbin/start-yarn.sh"

#启动 Zookeeper集群

zk.sh start

sleep 4s;

#启动 Flume采集集群

f1.sh start

#启动 Kafka采集集群

kf.sh start

sleep 6s;

#启动 Flume消费集群

f2.sh start

#启动 KafkaManager

km.sh start

};;

"stop"){

echo " -------- 停止 集群 -------"

#停止 KafkaManager

km.sh stop

#停止 Flume消费集群

f2.sh stop

#停止 Kafka采集集群

kf.sh stop

sleep 6s;

#停止 Flume采集集群

f1.sh stop

#停止 Zookeeper集群

zk.sh stop

echo " -------- 停止 hadoop集群 -------"

ssh hadoop103 "/opt/module/hadoop-2.7.2/sbin/stop-yarn.sh"

/opt/module/hadoop-2.7.2/sbin/stop-dfs.sh

};;

esac

chmod 777 cluster.sh

2)集群启动与停止脚本

cluster.sh start

cluster.sh stop

可能要先执行下面两条指令:

日志时间修改:dt.sh 2019-2-10(最先执行)

日志生成:lg.sh

lzo压缩出问题,需要安装lzo,lzo安装

二.用户行为数据仓库

1.数仓分层概念

2.数仓搭建环境准备

A. Hive&MySQL安装

1)上传安装包:

put E:\工作\电商数仓项目\2.资料\01_jars\06_hive\apache-hive-1.2.1-bin.tar.gz

put E:\工作\电商数仓项目\2.资料\01_jars\06_hive\apache-tez-0.9.1-bin.tar.gz

put E:\工作\电商数仓项目\2.资料\01_jars\07_mysql\mysql-libs.zip

2)解压安装:

tar -zxvf apache-hive-1.2.1-bin.tar.gz -C /opt/module

修改apache-hive-1.2.1-bin.tar.gz的名称为hive

mv apache-hive-1.2.1-bin/ hive

3)修改/opt/module/hive/conf目录下的hive-env.sh.template名称为hive-env.sh

mv hive-env.sh.template hive-env.sh

4)配置hive-env.sh文件

(a)配置HADOOP_HOME路径

HADOOP_HOME=/opt/module/hadoop-2.7.2

(b)配置HIVE_CONF_DIR路径

export HIVE_CONF_DIR=/opt/module/hive/conf

5)MySql安装

a.查看mysql是否安装,如果安装了,卸载mysql

rpm -qa|grep mysql

b.卸载

sudo rpm -e --nodeps mysql-libs-5.1.66-2.el6_3.x86_64

c.解压mysql-libs.zip文件到当前目录

unzip mysql-libs.zip

d.进入到mysql-libs文件夹下,安装MySql服务器

rpm -ivh MySQL-server-5.6.24-1.el6.x86_64.rpm

查看产生的随机密码

cat /root/.mysql_secret

查看mysql状态

service mysql status

启动mysql

service mysql start

e.安装MySql客户端

rpm -ivh MySQL-client-5.6.24-1.el6.x86_64.rpm

链接mysql

mysql -uroot -pF2WKrHqrJE7Zfhb2

修改密码

SET PASSWORD=PASSWORD(‘123456’);

退出mysql

exit

f. 修改MySql中user表中主机配置

配置只要是root用户+密码,在任何主机上都能登录MySQL数据库。

1.进入mysql

[root@hadoop102 mysql-libs]# mysql -uroot -p123456

2.显示数据库

mysql>show databases;

3.使用mysql数据库

mysql>use mysql;

4.展示mysql数据库中的所有表

mysql>show tables;

5.展示user表的结构

mysql>desc user;

6.查询user表

mysql>select User, Host, Password from user;

7.修改user表,把Host表内容修改为%

mysql>update user set host=’%’ where host=‘localhost’;

8.删除root用户的其他host

mysql>

delete from user where Host=‘node1’;

delete from user where Host=‘127.0.0.1’;

delete from user where Host=’::1’;

9.刷新

mysql>flush privileges;

10.退出

mysql>quit;

g.Hive元数据配置到MySql

1 驱动拷贝

在/opt/software/mysql-libs目录下解压mysql-connector-java-5.1.27.tar.gz驱动包

tar -zxvf mysql-connector-java-5.1.27.tar.gz

拷贝/opt/software/mysql-libs/mysql-connector-java-5.1.27目录下的mysql-connector-java-5.1.27-bin.jar到/opt/module/hive/lib/

cp mysql-connector-java-5.1.27-bin.jar /opt/module/hive/lib/

6)配置Metastore到MySql

1.在/opt/module/hive/conf目录下创建一个hive-site.xm

vi hive-site.xml

2.根据官方文档配置参数,拷贝数据到hive-site.xml文件中

javax.jdo.option.ConnectionURL

jdbc:mysql://node1:3306/metastore?createDatabaseIfNotExist=true

JDBC connect string for a JDBC metastore

javax.jdo.option.ConnectionDriverName

com.mysql.jdbc.Driver

Driver class name for a JDBC metastore

javax.jdo.option.ConnectionUserName

root

username to use against metastore database

javax.jdo.option.ConnectionPassword

123456

password to use against metastore database

hive.cli.print.header

true

hive.cli.print.current.db

true

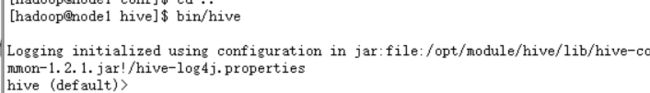

7)启动hive:

bin/hive;

8)关闭元数据检查

vim hive-site.xml

hive.metastore.schema.verification

false

B.Hive运行引擎Tez

Tez可以将多个有依赖的作业转换为一个作业,这样只需写一次HDFS,且中间节点较少,从而大大提升作业的计算性能。

1)a.上传安装包(已完成)

b.解压安装:

tar -zxvf apache-tez-0.9.1-bin.tar.gz -C /opt/module/

c.修改名称:

mv apache-tez-0.9.1-bin/ tez-0.9.1

2)在Hive中配置Tez

a.进入到Hive的配置目录:/opt/module/hive/conf

b.在hive-env.sh文件中添加tez环境变量配置和依赖包环境变量配置

添加如下配置

export TEZ_HOME=/opt/module/tez-0.9.1 #是你的tez的解压目录

export TEZ_JARS=""

for jar in `ls $TEZ_HOME |grep jar`; do

export TEZ_JARS=$TEZ_JARS:$TEZ_HOME/$jar

done

for jar in `ls $TEZ_HOME/lib`; do

export TEZ_JARS=$TEZ_JARS:$TEZ_HOME/lib/$jar

done

export HIVE_AUX_JARS_PATH=/opt/module/hadoop-2.7.2/share/hadoop/common/hadoop-lzo-0.4.20.jar$TEZ_JARS

c.在hive-site.xml文件中添加如下配置,更改hive计算引擎

hive.execution.engine

tez

d.配置Tez

在Hive的/opt/module/hive/conf下面创建一个tez-site.xml文件

tez.lib.uris

${fs.defaultFS}/tez/tez-0.9.1,${fs.defaultFS}/tez/tez-0.9.1/lib

tez.lib.uris.classpath

${fs.defaultFS}/tez/tez-0.9.1,${fs.defaultFS}/tez/tez-0.9.1/lib

tez.use.cluster.hadoop-libs

true

tez.history.logging.service.class

org.apache.tez.dag.history.logging.ats.ATSHistoryLoggingService

e.上传Tez到集群

将/opt/module/tez-0.9.1上传到HDFS的/tez路径

hadoop fs -mkdir /tez

hadoop fs -put /opt/module/tez-0.9.1/ /tez

f.测试

启动hive:

bin/hive

1)运行Tez时检查到用过多内存而被NodeManager杀死进程问题:

关掉虚拟内存检查。我们选这个,修改yarn-site.xml

yarn.nodemanager.vmem-check-enabled

false

xsync yarn-site.xml

重启集群

cluster.sh stop

cluster.sh start

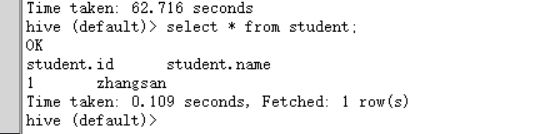

2)创建LZO表

create table student(

id int,

name string);

向表中插入数据:

insert into student values(1,“zhangsan”);

如果没有报错就成功了

select * from student;

3. 数仓环境之ODS层

A.创建数据库

创建gmall数据库

create database gmall;

使用gmall数据库

use gmall;

B.创建启动日志表ods_start_log

1)创建输入数据是lzo输出是text,支持json解析的分区表

hive (gmall)>

drop table if exists ods_start_log;

CREATE EXTERNAL TABLE ods_start_log (`line` string)

PARTITIONED BY (`dt` string)

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_start_log';

2)加载数据

load data inpath '/origin_data/gmall/log/topic_start/2019-03-10' into table gmall.ods_start_log partition(dt='2019-03-10');

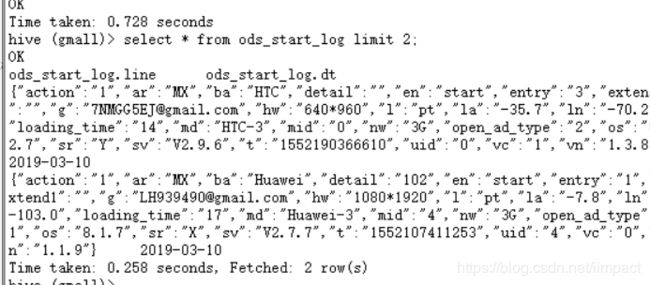

3)查看是否加载成功:

select * from ods_start_log limit 2;

C.创建事件日志表ods_event_log

1)创建输入数据是lzo输出是text,支持json解析的分区表

drop table if exists ods_event_log;

CREATE EXTERNAL TABLE ods_event_log(`line` string)

PARTITIONED BY (`dt` string)

STORED AS

INPUTFORMAT 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION '/warehouse/gmall/ods/ods_event_log';

2)加载数据

load data inpath '/origin_data/gmall/log/topic_event/2019-03-10' into table gmall.ods_event_log partition(dt='2019-03-10');

3)查看是否加载成功

select * from ods_event_log limit 2;

D.ODS层加载数据脚本

vim ods_log.sh

#!/bin/bash

# 定义变量方便修改

APP=gmall

hive=/opt/module/hive/bin/hive

# 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

echo "===日志日期为 $do_date==="

sql="

load data inpath '/origin_data/gmall/log/topic_start/$do_date' into table "$APP".ods_start_log partition(dt='$do_date');

load data inpath '/origin_data/gmall/log/topic_event/$do_date' into table "$APP".ods_event_log partition(dt='$do_date');

"

$hive -e "$sql"

2)脚本使用

ods_log.sh 2019-02-11

3)查看导入数据

select * from ods_start_log where dt='2019-03-11' limit 2;

select * from ods_event_log where dt='2019-03-11' limit 2;

4.数仓搭建之DWD层

对ODS层数据进行清洗(去除空值,脏数据,超过极限范围的数据,行式存储改为列存储,改压缩格式)。

A.创建启动表

1)建表语句:

drop table if exists dwd_start_log;

CREATE EXTERNAL TABLE dwd_start_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`entry` string,

`open_ad_type` string,

`action` string,

`loading_time` string,

`detail` string,

`extend1` string

)

PARTITIONED BY (dt string)

location '/warehouse/gmall/dwd/dwd_start_log/';

2)向启动表导入数据

insert overwrite table dwd_start_log

PARTITION (dt='2019-03-10')

select

get_json_object(line,'$.mid') mid_id,

get_json_object(line,'$.uid') user_id,

get_json_object(line,'$.vc') version_code,

get_json_object(line,'$.vn') version_name,

get_json_object(line,'$.l') lang,

get_json_object(line,'$.sr') source,

get_json_object(line,'$.os') os,

get_json_object(line,'$.ar') area,

get_json_object(line,'$.md') model,

get_json_object(line,'$.ba') brand,

get_json_object(line,'$.sv') sdk_version,

get_json_object(line,'$.g') gmail,

get_json_object(line,'$.hw') height_width,

get_json_object(line,'$.t') app_time,

get_json_object(line,'$.nw') network,

get_json_object(line,'$.ln') lng,

get_json_object(line,'$.la') lat,

get_json_object(line,'$.entry') entry,

get_json_object(line,'$.open_ad_type') open_ad_type,

get_json_object(line,'$.action') action,

get_json_object(line,'$.loading_time') loading_time,

get_json_object(line,'$.detail') detail,

get_json_object(line,'$.extend1') extend1

from ods_start_log

where dt='2019-03-10';

3)测试

select * from dwd_start_log limit 2;

C.DWD层启动表加载数据脚本

1)在bin目录下创建脚本

vim dwd_start_log.sh

#!/bin/bash

# 定义变量方便修改

APP=gmall

hive=/opt/module/hive/bin/hive

# 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

sql="

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table "$APP".dwd_start_log

PARTITION (dt='$do_date')

select

get_json_object(line,'$.mid') mid_id,

get_json_object(line,'$.uid') user_id,

get_json_object(line,'$.vc') version_code,

get_json_object(line,'$.vn') version_name,

get_json_object(line,'$.l') lang,

get_json_object(line,'$.sr') source,

get_json_object(line,'$.os') os,

get_json_object(line,'$.ar') area,

get_json_object(line,'$.md') model,

get_json_object(line,'$.ba') brand,

get_json_object(line,'$.sv') sdk_version,

get_json_object(line,'$.g') gmail,

get_json_object(line,'$.hw') height_width,

get_json_object(line,'$.t') app_time,

get_json_object(line,'$.nw') network,

get_json_object(line,'$.ln') lng,

get_json_object(line,'$.la') lat,

get_json_object(line,'$.entry') entry,

get_json_object(line,'$.open_ad_type') open_ad_type,

get_json_object(line,'$.action') action,

get_json_object(line,'$.loading_time') loading_time,

get_json_object(line,'$.detail') detail,

get_json_object(line,'$.extend1') extend1

from "$APP".ods_start_log

where dt='$do_date';

"

$hive -e "$sql"

2)脚本使用

3)查询导入结果

select * from dwd_start_log where dt=‘2019-03-11’ limit 2;

E.DWD层事件表数据解析

1)创建基础明细表

a.

drop table if exists dwd_base_event_log;

CREATE EXTERNAL TABLE dwd_base_event_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`event_name` string,

`event_json` string,

`server_time` string)

PARTITIONED BY (`dt` string)

stored as parquet

location '/warehouse/gmall/dwd/dwd_base_event_log/';

b.说明:其中event_name和event_json用来对应事件名和整个事件。这个地方将原始日志1对多的形式拆分出来了。操作的时候我们需要将原始日志展平,需要用到UDF和UDTF。

2)自定义UDF和UDTF函数

a.这里直接用现成的hivefunction-1.0-SNAPSHOT上传到node1的/opt/module/hive/

b.将jar包添加到Hive的classpath

hive (gmall)> add jar /opt/module/hive/hivefunction-1.0-SNAPSHOT.jar;

c.创建临时函数与开发好的java class关联

create temporary function base_analizer as ‘com.atguigu.udf.BaseFieldUDF’;

create temporary function flat_analizer as ‘com.atguigu.udtf.EventJsonUDTF’;

4.2.4 解析事件日志基础明细表

1)解析事件日志基础明细表

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_base_event_log

PARTITION (dt='2019-03-10')

select

mid_id,

user_id,

version_code,

version_name,

lang,

source,

os,

area,

model,

brand,

sdk_version,

gmail,

height_width,

app_time,

network,

lng,

lat,

event_name,

event_json,

server_time

from

(

select

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[0] as mid_id,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[1] as user_id,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[2] as version_code,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[3] as version_name,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[4] as lang,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[5] as source,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[6] as os,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[7] as area,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[8] as model,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[9] as brand,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[10] as sdk_version,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[11] as gmail,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[12] as height_width,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[13] as app_time,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[14] as network,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[15] as lng,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[16] as lat,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[17] as ops,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[18] as server_time

from ods_event_log where dt='2019-03-10' and base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la')<>''

) sdk_log lateral view flat_analizer(ops) tmp_k as event_name, event_json;

2)测试:

select * from dwd_base_event_log limit 2;

4.2.5 DWD层数据解析脚本

bin目录下创建脚本

vim dwd_base_log.sh

#!/bin/bash

# 定义变量方便修改

APP=gmall

hive=/opt/module/hive/bin/hive

# 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天

if [ -n "$1" ] ;then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

sql="

add jar /opt/module/hive/hivefunction-1.0-SNAPSHOT.jar;

create temporary function base_analizer as 'com.atguigu.udf.BaseFieldUDF';

create temporary function flat_analizer as 'com.atguigu.udtf.EventJsonUDTF';

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table "$APP".dwd_base_event_log

PARTITION (dt='$do_date')

select

mid_id,

user_id,

version_code,

version_name,

lang,

source ,

os ,

area ,

model ,

brand ,

sdk_version ,

gmail ,

height_width ,

network ,

lng ,

lat ,

app_time ,

event_name ,

event_json ,

server_time

from

(

select

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[0] as mid_id,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[1] as user_id,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[2] as version_code,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[3] as version_name,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[4] as lang,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[5] as source,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[6] as os,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[7] as area,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[8] as model,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[9] as brand,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[10] as sdk_version,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[11] as gmail,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[12] as height_width,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[13] as app_time,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[14] as network,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[15] as lng,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[16] as lat,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[17] as ops,

split(base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'),'\t')[18] as server_time

from "$APP".ods_event_log where dt='$do_date' and base_analizer(line,'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la')<>''

) sdk_log lateral view flat_analizer(ops) tmp_k as event_name, event_json;

"

$hive -e "$sql"

4)查询导入结果

select * from dwd_base_event_log where dt=‘2019-03-11’ limit 2;

4.3DWD层事件表获取

4.3.1 商品点击表

1)建表语句

drop table if exists dwd_display_log;

CREATE EXTERNAL TABLE dwd_display_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`action` string,

`goodsid` string,

`place` string,

`extend1` string,

`category` string,

`server_time` string

)

PARTITIONED BY (dt string)

location '/warehouse/gmall/dwd/dwd_display_log/';

2)导入数据

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_display_log

PARTITION (dt='2019-03-10')

select

mid_id,

user_id,

version_code,

version_name,

lang,

source,

os,

area,

model,

brand,

sdk_version,

gmail,

height_width,

app_time,

network,

lng,

lat,

get_json_object(event_json,'$.kv.action') action,

get_json_object(event_json,'$.kv.goodsid') goodsid,

get_json_object(event_json,'$.kv.place') place,

get_json_object(event_json,'$.kv.extend1') extend1,

get_json_object(event_json,'$.kv.category') category,

server_time

from dwd_base_event_log

where dt='2019-03-10' and event_name='display';

3)测试

select * from dwd_display_log limit 2;

4.3.2 商品详情页表

1)建表语句

drop table if exists dwd_newsdetail_log;

CREATE EXTERNAL TABLE dwd_newsdetail_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`entry` string,

`action` string,

`goodsid` string,

`showtype` string,

`news_staytime` string,

`loading_time` string,

`type1` string,

`category` string,

`server_time` string)

PARTITIONED BY (dt string)

location '/warehouse/gmall/dwd/dwd_newsdetail_log/';

2)导入数据

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_newsdetail_log

PARTITION (dt='2019-03-10')

select

mid_id,

user_id,

version_code,

version_name,

lang,

source,

os,

area,

model,

brand,

sdk_version,

gmail,

height_width,

app_time,

network,

lng,

lat,

get_json_object(event_json,'$.kv.entry') entry,

get_json_object(event_json,'$.kv.action') action,

get_json_object(event_json,'$.kv.goodsid') goodsid,

get_json_object(event_json,'$.kv.showtype') showtype,

get_json_object(event_json,'$.kv.news_staytime') news_staytime,

get_json_object(event_json,'$.kv.loading_time') loading_time,

get_json_object(event_json,'$.kv.type1') type1,

get_json_object(event_json,'$.kv.category') category,

server_time

from dwd_base_event_log

where dt='2019-03-10' and event_name='newsdetail';

3)测试

hive (gmall)> select * from dwd_newsdetail_log limit 2;

4.3.3 商品列表页表

1)建表语句

hive (gmall)>

drop table if exists dwd_loading_log;

CREATE EXTERNAL TABLE dwd_loading_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`action` string,

`loading_time` string,

`loading_way` string,

`extend1` string,

`extend2` string,

`type` string,

`type1` string,

`server_time` string)

PARTITIONED BY (dt string)

location '/warehouse/gmall/dwd/dwd_loading_log/';

2)导入数据

hive (gmall)>

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_loading_log

PARTITION (dt='2019-03-10')

select

mid_id,

user_id,

version_code,

version_name,

lang,

source,

os,

area,

model,

brand,

sdk_version,

gmail,

height_width,

app_time,

network,

lng,

lat,

get_json_object(event_json,'$.kv.action') action,

get_json_object(event_json,'$.kv.loading_time') loading_time,

get_json_object(event_json,'$.kv.loading_way') loading_way,

get_json_object(event_json,'$.kv.extend1') extend1,

get_json_object(event_json,'$.kv.extend2') extend2,

get_json_object(event_json,'$.kv.type') type,

get_json_object(event_json,'$.kv.type1') type1,

server_time

from dwd_base_event_log

where dt='2019-03-10' and event_name='loading';

3)测试

hive (gmall)> select * from dwd_loading_log limit 2;

4.3.4 广告表

1)建表语句

hive (gmall)>

drop table if exists dwd_ad_log;

CREATE EXTERNAL TABLE dwd_ad_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`entry` string,

`action` string,

`content` string,

`detail` string,

`ad_source` string,

`behavior` string,

`newstype` string,

`show_style` string,

`server_time` string)

PARTITIONED BY (dt string)

location '/warehouse/gmall/dwd/dwd_ad_log/';

2)导入数据

hive (gmall)>

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_ad_log

PARTITION (dt='2019-03-10')

select

mid_id,

user_id,

version_code,

version_name,

lang,

source,

os,

area,

model,

brand,

sdk_version,

gmail,

height_width,

app_time,

network,

lng,

lat,

get_json_object(event_json,'$.kv.entry') entry,

get_json_object(event_json,'$.kv.action') action,

get_json_object(event_json,'$.kv.content') content,

get_json_object(event_json,'$.kv.detail') detail,

get_json_object(event_json,'$.kv.source') ad_source,

get_json_object(event_json,'$.kv.behavior') behavior,

get_json_object(event_json,'$.kv.newstype') newstype,

get_json_object(event_json,'$.kv.show_style') show_style,

server_time

from dwd_base_event_log

where dt='2019-03-10' and event_name='ad';

3)测试

hive (gmall)> select * from dwd_ad_log limit 2;、

4.3.5 消息通知表

1)建表语句

hive (gmall)>

drop table if exists dwd_notification_log;

CREATE EXTERNAL TABLE dwd_notification_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`action` string,

`noti_type` string,

`ap_time` string,

`content` string,

`server_time` string

)

PARTITIONED BY (dt string)

location '/warehouse/gmall/dwd/dwd_notification_log/';

2)导入数据

hive (gmall)>

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_notification_log

PARTITION (dt='2019-03-10')

select

mid_id,

user_id,

version_code,

version_name,

lang,

source,

os,

area,

model,

brand,

sdk_version,

gmail,

height_width,

app_time,

network,

lng,

lat,

get_json_object(event_json,'$.kv.action') action,

get_json_object(event_json,'$.kv.noti_type') noti_type,

get_json_object(event_json,'$.kv.ap_time') ap_time,

get_json_object(event_json,'$.kv.content') content,

server_time

from dwd_base_event_log

where dt='2019-03-10' and event_name='notification';

3)测试

hive (gmall)> select * from dwd_notification_log limit 2;

4.3.6 用户前台活跃表

1)建表语句

hive (gmall)>

drop table if exists dwd_active_foreground_log;

CREATE EXTERNAL TABLE dwd_active_foreground_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`push_id` string,

`access` string,

`server_time` string)

PARTITIONED BY (dt string)

location '/warehouse/gmall/dwd/dwd_foreground_log/';

2)导入数据

hive (gmall)>

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_active_foreground_log

PARTITION (dt='2019-03-10')

select

mid_id,

user_id,

version_code,

version_name,

lang,

source,

os,

area,

model,

brand,

sdk_version,

gmail,

height_width,

app_time,

network,

lng,

lat,

get_json_object(event_json,'$.kv.push_id') push_id,

get_json_object(event_json,'$.kv.access') access,

server_time

from dwd_base_event_log

where dt='2019-03-10' and event_name='active_foreground';

3)测试

hive (gmall)> select * from dwd_active_foreground_log limit 2;

4.3.7 用户后台活跃表

1)建表语句

hive (gmall)>

drop table if exists dwd_active_background_log;

CREATE EXTERNAL TABLE dwd_active_background_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`active_source` string,

`server_time` string

)

PARTITIONED BY (dt string)

location '/warehouse/gmall/dwd/dwd_background_log/';

2)导入数据

hive (gmall)>

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_active_background_log

PARTITION (dt='2019-03-10')

select

mid_id,

user_id,

version_code,

version_name,

lang,

source,

os,

area,

model,

brand,

sdk_version,

gmail,

height_width,

app_time,

network,

lng,

lat,

get_json_object(event_json,'$.kv.active_source') active_source,

server_time

from dwd_base_event_log

where dt='2019-03-10' and event_name='active_background';

3)测试

hive (gmall)> select * from dwd_active_background_log limit 2;

4.3.8 评论表

1)建表语句

hive (gmall)>

drop table if exists dwd_comment_log;

CREATE EXTERNAL TABLE dwd_comment_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`comment_id` int,

`userid` int,

`p_comment_id` int,

`content` string,

`addtime` string,

`other_id` int,

`praise_count` int,

`reply_count` int,

`server_time` string

)

PARTITIONED BY (dt string)

location '/warehouse/gmall/dwd/dwd_comment_log/';

2)导入数据

hive (gmall)>

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_comment_log

PARTITION (dt='2019-03-10')

select

mid_id,

user_id,

version_code,

version_name,

lang,

source,

os,

area,

model,

brand,

sdk_version,

gmail,

height_width,

app_time,

network,

lng,

lat,

get_json_object(event_json,'$.kv.comment_id') comment_id,

get_json_object(event_json,'$.kv.userid') userid,

get_json_object(event_json,'$.kv.p_comment_id') p_comment_id,

get_json_object(event_json,'$.kv.content') content,

get_json_object(event_json,'$.kv.addtime') addtime,

get_json_object(event_json,'$.kv.other_id') other_id,

get_json_object(event_json,'$.kv.praise_count') praise_count,

get_json_object(event_json,'$.kv.reply_count') reply_count,

server_time

from dwd_base_event_log

where dt='2019-03-10' and event_name='comment';

3)测试

hive (gmall)> select * from dwd_comment_log limit 2;

4.3.9 收藏表

1)建表语句

hive (gmall)>

drop table if exists dwd_favorites_log;

CREATE EXTERNAL TABLE dwd_favorites_log(

`mid_id` string,

`user_id` string,

`version_code` string,

`version_name` string,

`lang` string,

`source` string,

`os` string,

`area` string,

`model` string,

`brand` string,

`sdk_version` string,

`gmail` string,

`height_width` string,

`app_time` string,

`network` string,

`lng` string,

`lat` string,

`id` int,

`course_id` int,

`userid` int,

`add_time` string,

`server_time` string

)

PARTITIONED BY (dt string)

location '/warehouse/gmall/dwd/dwd_favorites_log/';

2)导入数据

hive (gmall)>

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_favorites_log

PARTITION (dt='2019-03-10')