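iOS Development, OpenCV Study Notes Part 2: Tracking Specific Objects with CascadeClassifier

The previous post covered downloading and installing OpenCV.

If you have successfully built the OpenCV 3.2.0 iOS framework, you can now start using OpenCV's features.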

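1. What is CascadeClassifier?

OpenCV has a powerful feature: object detection and tracking. CascadeClassifier detects a specific kind of object in an image using a trained cascade. Running it on every camera frame lets you follow that object, which can serve as a building block for VR/AR features.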
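
To make the term "cascade" concrete: a cascade classifier chains a series of stages, each a cheap test, and a candidate window is discarded as soon as any stage rejects it, so most non-face windows cost very little. The following is a toy C++ sketch of that early-rejection idea only. The `Stage` type, `passesCascade`, `makeToyCascade`, and the thresholds are all invented for illustration and are not part of OpenCV, whose real stages evaluate Haar-like features loaded from the XML file.

```cpp
#include <cstddef>
#include <functional>
#include <vector>

// One stage of a toy cascade: a cheap test that a candidate window must pass.
// (Hypothetical type for illustration; not an OpenCV class.)
using Stage = std::function<bool(double)>;

// Returns true only if the window's score passes every stage.
// `stagesRun` reports how many stages were evaluated before the decision,
// which makes the early-rejection behaviour visible.
bool passesCascade(const std::vector<Stage>& stages, double windowScore,
                   std::size_t& stagesRun) {
    stagesRun = 0;
    for (const Stage& stage : stages) {
        ++stagesRun;
        if (!stage(windowScore)) {
            return false;  // rejected early; later stages never run
        }
    }
    return true;
}

// Three stages with increasingly strict thresholds (arbitrary toy values).
std::vector<Stage> makeToyCascade() {
    return {
        [](double s) { return s > 0.2; },
        [](double s) { return s > 0.5; },
        [](double s) { return s > 0.8; },
    };
}
```

With this toy cascade, a window scoring 0.1 is rejected after a single stage, while a window scoring 0.9 runs all three stages and is accepted.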

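2. How do we detect and track faces?

First, we need a cascade file. It is an XML file, and you can think of it as OpenCV's memory of what the object looks like.

I found haarcascade_frontalface_alt2.xml, which stores the features used to recognize frontal faces. So let's get started with face detection.

Create an iOS project, copy haarcascade_frontalface_alt2.xml into it, and import the headers: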

#ifdef __cplusplus

#import

#import

#import

#import

#import

#import

#import

#import

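#endif

I recommend creating a PCH file and putting this block in it.

Build a ViewController that shows the camera feed. You are all seasoned iOS developers, so I will skip the details. I do recommend rendering the frames with AVCaptureVideoPreviewLayer rather than a UIImageView.

Initialize and load a CascadeClassifier: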

NSString *path = [[NSBundle mainBundle] pathForResource:@"haarcascade_frontalface_alt2" ofType:@"xml"];

cv::CascadeClassifier clsfr;

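// An ASCII-only conversion returns NULL for non-ASCII paths, so use the file-system representation.

if (!clsfr.load(path.fileSystemRepresentation)) NSLog(@"Failed to load cascade file: %@", path);

In the camera frame callback, convert the CMSampleBufferRef into a UIImage (the bitmap flags below assume the video output delivers kCVPixelFormatType_32BGRA frames):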

+ (UIImage *) imageFromSampleBuffer:(CMSampleBufferRef) sampleBuffer

{

// Get a CMSampleBuffer's Core Video image buffer for the media data

CVImageBufferRef imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);

// Lock the base address of the pixel buffer

CVPixelBufferLockBaseAddress(imageBuffer, 0);

// Get the base address of the pixel buffer

void *baseAddress = CVPixelBufferGetBaseAddress(imageBuffer);

// Get the number of bytes per row for the plane pixel buffer

size_t bytesPerRow = CVPixelBufferGetBytesPerRow(imageBuffer);

// Get the pixel buffer width and height

size_t width = CVPixelBufferGetWidth(imageBuffer);

size_t height = CVPixelBufferGetHeight(imageBuffer);

// Create a device-dependent RGB color space

CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();

// Create a bitmap graphics context with the sample buffer data

CGContextRef context = CGBitmapContextCreate(baseAddress, width, height, 8,

bytesPerRow, colorSpace, kCGBitmapByteOrder32Little | kCGImageAlphaNoneSkipFirst);

// Create a Quartz image from the pixel data in the bitmap graphics context

CGImageRef quartzImage = CGBitmapContextCreateImage(context);

// Unlock the pixel buffer

CVPixelBufferUnlockBaseAddress(imageBuffer,0);

// Free up the context and color space

CGContextRelease(context);

CGColorSpaceRelease(colorSpace);

// Create an image object from the Quartz image

UIImage *image = [UIImage imageWithCGImage:quartzImage scale:1 orientation:UIImageOrientationRight];

// Release the Quartz image

CGImageRelease(quartzImage);

// _frameImage = image;

return (image);

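}

The resulting image may be rotated by 90 degrees, so its orientation needs to be corrected. The method below is written as a UIImage category method: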

- (UIImage *)fixOrientation

{

if (self.imageOrientation == UIImageOrientationUp) return self;

// We need to calculate the proper transformation to make the image upright.

// We do it in 2 steps: Rotate if Left/Right/Down, and then flip if Mirrored.

CGAffineTransform transform = CGAffineTransformIdentity;

switch (self.imageOrientation)

{

case UIImageOrientationDown:

case UIImageOrientationDownMirrored:

transform = CGAffineTransformTranslate(transform, self.size.width, self.size.height);

transform = CGAffineTransformRotate(transform, M_PI);

break;

case UIImageOrientationLeft:

case UIImageOrientationLeftMirrored:

transform = CGAffineTransformTranslate(transform, self.size.width, 0);

transform = CGAffineTransformRotate(transform, M_PI_2);

break;

case UIImageOrientationRight:

case UIImageOrientationRightMirrored:

transform = CGAffineTransformTranslate(transform, 0, self.size.height);

transform = CGAffineTransformRotate(transform, -M_PI_2);

break;

case UIImageOrientationUp:

case UIImageOrientationUpMirrored:

break;

}

switch (self.imageOrientation)

{

case UIImageOrientationUpMirrored:

case UIImageOrientationDownMirrored:

transform = CGAffineTransformTranslate(transform, self.size.width, 0);

transform = CGAffineTransformScale(transform, -1, 1);

break;

case UIImageOrientationLeftMirrored:

case UIImageOrientationRightMirrored:

transform = CGAffineTransformTranslate(transform, self.size.height, 0);

transform = CGAffineTransformScale(transform, -1, 1);

break;

case UIImageOrientationUp:

case UIImageOrientationDown:

case UIImageOrientationLeft:

case UIImageOrientationRight:

break;

}

// Now we draw the underlying CGImage into a new context, applying the transform

// calculated above.

CGContextRef ctx = CGBitmapContextCreate(NULL, self.size.width, self.size.height,

CGImageGetBitsPerComponent(self.CGImage), 0,

CGImageGetColorSpace(self.CGImage),

CGImageGetBitmapInfo(self.CGImage));

CGContextConcatCTM(ctx, transform);

switch (self.imageOrientation)

{

case UIImageOrientationLeft:

case UIImageOrientationLeftMirrored:

case UIImageOrientationRight:

case UIImageOrientationRightMirrored:

CGContextDrawImage(ctx, CGRectMake(0,0,self.size.height,self.size.width), self.CGImage);

break;

default:

CGContextDrawImage(ctx, CGRectMake(0,0,self.size.width,self.size.height), self.CGImage);

break;

}

CGImageRef cgimg = CGBitmapContextCreateImage(ctx);

UIImage *img = [UIImage imageWithCGImage:cgimg];

CGContextRelease(ctx);

CGImageRelease(cgimg);

return img;

}

Then convert this image into a cv::Mat:

+ (void) UIImageToMat:(UIImage *)image mat:(cv::Mat&)m alphaExist:(bool)alphaExist

{

CGColorSpaceRef colorSpace = CGImageGetColorSpace(image.CGImage);

CGFloat cols = image.size.width, rows = image.size.height;

CGContextRef contextRef;

CGBitmapInfo bitmapInfo = kCGImageAlphaPremultipliedLast;

if (CGColorSpaceGetModel(colorSpace) == kCGColorSpaceModelMonochrome)

{

m.create(rows, cols, CV_8UC1); // 8 bits per component, 1 channel

bitmapInfo = kCGImageAlphaNone;

if (!alphaExist)

bitmapInfo = kCGImageAlphaNone;

contextRef = CGBitmapContextCreate(m.data, m.cols, m.rows, 8,

m.step[0], colorSpace,

bitmapInfo);

}

else

{

m.create(rows, cols, CV_8UC4); // 8 bits per component, 4 channels

if (!alphaExist)

bitmapInfo = kCGImageAlphaNoneSkipLast |

kCGBitmapByteOrderDefault;

contextRef = CGBitmapContextCreate(m.data, m.cols, m.rows, 8,

m.step[0], colorSpace,

bitmapInfo);

}

CGContextDrawImage(contextRef, CGRectMake(0, 0, cols, rows),

image.CGImage);

CGContextRelease(contextRef);

}
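
Haar cascades evaluate intensity features, so detection ultimately works on a single-channel image. As a minimal sketch of what that reduction involves, the function below converts an interleaved 8-bit RGBA buffer (the layout of the CV_8UC4 case above) to grayscale while honouring the row stride. `rgbaToGray` is a hypothetical helper written for illustration, using the common Rec. 601 luma weights. In a real project you would more likely call `cv::cvtColor`, whose exact rounding may differ from this sketch.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts an interleaved 8-bit RGBA buffer to single-channel grayscale.
// `stride` is the number of bytes per row; it may exceed width * 4 when
// rows are padded, which is why it is passed separately from `width`.
std::vector<std::uint8_t> rgbaToGray(const std::uint8_t* rgba, int width,
                                     int height, std::size_t stride) {
    std::vector<std::uint8_t> gray(static_cast<std::size_t>(width) * height);
    for (int y = 0; y < height; ++y) {
        const std::uint8_t* row = rgba + static_cast<std::size_t>(y) * stride;
        for (int x = 0; x < width; ++x) {
            const std::uint8_t* px = row + static_cast<std::size_t>(x) * 4;  // R, G, B, A
            // Rec. 601 luma weights; alpha is ignored.
            const double luma = 0.299 * px[0] + 0.587 * px[1] + 0.114 * px[2];
            // Round to the nearest integer.
            gray[static_cast<std::size_t>(y) * width + x] =
                static_cast<std::uint8_t>(luma + 0.5);
        }
    }
    return gray;
}
```

The stride parameter plays the same role as `m.step[0]` in the conversion code above: it tells the reader of the buffer where each row starts, independently of how many pixels the row holds.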

Now we can run the detector:

std::vector<cv::Rect> faces;

double scalingFactor = 1.1;

int minNeighbors = 2;

int flags = 0;

clsfr.detectMultiScale(mat,

faces,

scalingFactor,

minNeighbors,

flags,

cv::Size(30, 30));

if (faces.size() > 0) {

// Create a canvas and outline each detected face

CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();

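CGContextRef contextRef = CGBitmapContextCreate(NULL, img.size.width, img.size.height,8, img.size.width * 4,colorSpace, kCGImageAlphaPremultipliedLast|kCGBitmapByteOrderDefault);

// The context retains the color space, so our reference can be released now.

CGColorSpaceRelease(colorSpace);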

CGContextSetLineWidth(contextRef, 4);

CGContextSetRGBStrokeColor(contextRef, 1.0, 0.0, 0.0, 1);

for(int i = 0; i < faces.size(); i++) {

// Calc the rect of faces

const cv::Rect &cvrect = faces[i];

CGRect face_rect = CGContextConvertRectToDeviceSpace(contextRef, CGRectMake(cvrect.x*scale, cvrect.y*scale , cvrect.width*scale, cvrect.height*scale));

CGContextStrokeRect(contextRef, face_rect);

}

dispatch_async(dispatch_get_main_queue(), ^{

CGImageRef rectImageRef = CGBitmapContextCreateImage(contextRef);

UIImage *rectImage = [UIImage imageWithCGImage:rectImageRef];

_imageView.image = rectImage;

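// CGBitmapContextCreateImage returns an owned image; release it to avoid a leak on every frame.

CGImageRelease(rectImageRef);

CGContextRelease(contextRef);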

});

}

Here, _imageView is a view laid over the camera preview layer, so you will see a small box following the detected face around.
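
The arguments passed to detectMultiScale above control the search. The scale factor (1.1) is the ratio between successive scales, minNeighbors (2) is how many overlapping detections a candidate needs in order to be kept, and cv::Size(30, 30) is the smallest face size considered. As a rough sketch of what the scale factor and minimum size imply for cost, the hypothetical helper below lists the square window sizes a multi-scale search would cover. `searchWindowSizes` is not an OpenCV function, and OpenCV's internals differ in detail (it rescales the image rather than the window), so treat the numbers as an approximation.

```cpp
#include <vector>

// Lists the square search-window sizes a multi-scale detector would cover,
// starting at minSize and growing by scaleFactor until the window no longer
// fits inside the image. Hypothetical helper for illustration only.
std::vector<int> searchWindowSizes(int imageWidth, int imageHeight,
                                   int minSize, double scaleFactor) {
    std::vector<int> sizes;
    if (minSize <= 0 || scaleFactor <= 1.0) {
        return sizes;  // a factor of 1.0 or less would never terminate
    }
    const int limit = imageWidth < imageHeight ? imageWidth : imageHeight;
    for (double size = minSize; static_cast<int>(size) <= limit;
         size *= scaleFactor) {
        sizes.push_back(static_cast<int>(size));
    }
    return sizes;
}
```

For a 480x640 frame with a minimum size of 30 and a factor of 1.1, this yields about 30 scales. Raising the factor or the minimum size reduces the number of scales and speeds up detection, at the cost of possibly missing faces that fall between scales or below the minimum size.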

3. Where can I find haarcascade_frontalface_alt2.xml or other cascade files?

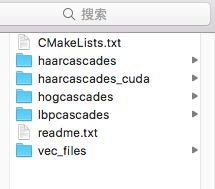

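The data directory of OpenCV contains these directories.

The directories whose names contain "cascades" are full of XML files. These are the cascade files that OpenCV contributors have trained for detecting specific kinds of objects.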