基于DataX的crontab定时同步功能——CentOS7.6下DataX完美详细安装配置使用解析,和crontab灵魂结合

DataX crontab亵玩 学习

0.环境

现在JDK版本是需要1.8了,官方源码都进行了升级

| ITEM | VERSION |

|---|---|

| CentOS | 7.6 |

| JDK | 1.8.0_232 |

| Python | 2.7.15 |

| Maven | 3.6.3 |

1.JDK安装配置【完美】

# 卸载默认环境

yum -y remove java-1.8.0-openjdk*

yum -y remove tzdata-java*

# 下载安装依赖

yum install -y java-1.8.0-openjdk java-1.8.0-openjdk-devel

# 查到系统默认安装jdk的位置/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-0.el7_6.x86_64/jre/bin/java

[root@ansible-server randolph]# ls -lrt /usr/bin/java

lrwxrwxrwx 1 root root 22 8月 23 13:42 /usr/bin/java -> /etc/alternatives/java

[root@ansible-server randolph]# ls -lrt /etc/alternatives/java

lrwxrwxrwx 1 root root 73 8月 23 13:42 /etc/alternatives/java -> /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-0.el7_6.x86_64/jre/bin/java

# 加到环境变量

vim /etc/profile

# jdk

export JAVA_HOME=/usr/lib/jvm/java-1.8.0

export JRE_HOME=$JAVA_HOME/jre

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

source /etc/profile

# 检测

java -version

javac

2.Maven安装配置——用于编译git clone下来的源码

cd /opt/

wget https://mirrors.tuna.tsinghua.edu.cn/apache/maven/maven-3/3.6.3/binaries/apache-maven-3.6.3-bin.tar.gz

tar -zxvf apache-maven-3.6.3-bin.tar.gz

mv apache-maven-3.6.3/ maven # 改名方便操作

vim /etc/profile # 修改配置文件,并在末尾添加

# maven

M2_HOME=/opt/maven # 这里的路径注意下

export PATH=${M2_HOME}/bin:${PATH}

# 重载文件立即生效

source /etc/profile

检查maven安装是否成功

mvn -v

检查maven已经安装

[root@ansible-server opt]# mvn -v

Apache Maven 3.6.3 (cecedd343002696d0abb50b32b541b8a6ba2883f)

Maven home: /opt/maven

Java version: 1.8.0_222, vendor: Oracle Corporation, runtime: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.222.b10-0.el7_6.x86_64/jre

Default locale: zh_CN, platform encoding: UTF-8

OS name: "linux", version: "3.10.0-957.27.2.el7.x86_64", arch: "amd64", family: "unix"

3.检查系统版本、python版本

实际上有python2/3都OK,后面datax的bin文件夹下的三个py文件datax.py也有python2/3版本,在执行命令的时候只需要对应即可。

[root@client-1 ~]# cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

[root@ansible-server DataX]# java -version

openjdk version "1.8.0_232"

OpenJDK Runtime Environment (build 1.8.0_232-b09)

OpenJDK 64-Bit Server VM (build 25.232-b09, mixed mode)

[root@ansible-server ~]# python -V

Python 2.7.15

[root@ansible-server ~]# python3 -V

Python 3.6.8

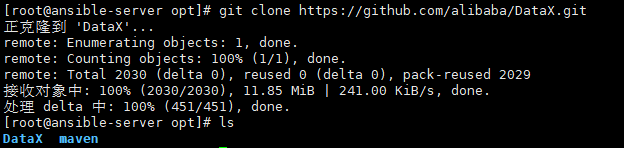

1.DataX安装部署

git clone https://github.com/alibaba/DataX.git

cd DataX

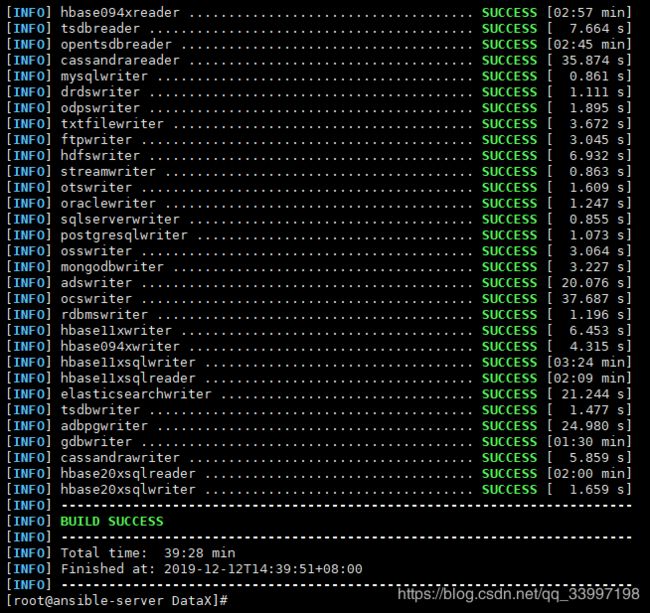

mvn -U clean package assembly:assembly -Dmaven.test.skip=true # maven打包

[INFO] datax/lib/slf4j-api-1.7.10.jar already added, skipping

[INFO] datax/lib/logback-classic-1.0.13.jar already added, skipping

[INFO] datax/lib/logback-core-1.0.13.jar already added, skipping

[INFO] datax/lib/commons-math3-3.1.1.jar already added, skipping

[INFO] datax/lib/hamcrest-core-1.3.jar already added, skipping

[WARNING] Assembly file: /opt/DataX/target/datax is not a regular file (it may be a directory). It cannot be attached to the project build for installation or deployment.

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary for datax-all 0.0.1-SNAPSHOT:

[INFO]

[INFO] datax-all .......................................... SUCCESS [03:23 min]

[INFO] datax-common ....................................... SUCCESS [ 22.458 s]

[INFO] datax-transformer .................................. SUCCESS [ 25.734 s]

[INFO] datax-core ......................................... SUCCESS [01:02 min]

[INFO] plugin-rdbms-util .................................. SUCCESS [ 20.741 s]

[INFO] mysqlreader ........................................ SUCCESS [ 1.096 s]

[INFO] drdsreader ......................................... SUCCESS [ 2.539 s]

[INFO] sqlserverreader .................................... SUCCESS [ 1.885 s]

[INFO] postgresqlreader ................................... SUCCESS [ 5.029 s]

[INFO] oraclereader ....................................... SUCCESS [ 1.047 s]

[INFO] odpsreader ......................................... SUCCESS [ 43.033 s]

[INFO] otsreader .......................................... SUCCESS [ 31.965 s]

[INFO] otsstreamreader .................................... SUCCESS [ 16.498 s]

[INFO] plugin-unstructured-storage-util ................... SUCCESS [03:08 min]

[INFO] txtfilereader ...................................... SUCCESS [ 5.172 s]

[INFO] hdfsreader ......................................... SUCCESS [06:13 min]

[INFO] streamreader ....................................... SUCCESS [ 1.028 s]

[INFO] ossreader .......................................... SUCCESS [ 12.157 s]

[INFO] ftpreader .......................................... SUCCESS [ 6.172 s]

[INFO] mongodbreader ...................................... SUCCESS [ 9.056 s]

[INFO] rdbmsreader ........................................ SUCCESS [ 1.186 s]

[INFO] hbase11xreader ..................................... SUCCESS [04:06 min]

[INFO] hbase094xreader .................................... SUCCESS [02:57 min]

[INFO] tsdbreader ......................................... SUCCESS [ 7.664 s]

[INFO] opentsdbreader ..................................... SUCCESS [02:45 min]

[INFO] cassandrareader .................................... SUCCESS [ 35.874 s]

[INFO] mysqlwriter ........................................ SUCCESS [ 0.861 s]

[INFO] drdswriter ......................................... SUCCESS [ 1.111 s]

[INFO] odpswriter ......................................... SUCCESS [ 1.895 s]

[INFO] txtfilewriter ...................................... SUCCESS [ 3.672 s]

[INFO] ftpwriter .......................................... SUCCESS [ 3.045 s]

[INFO] hdfswriter ......................................... SUCCESS [ 6.932 s]

[INFO] streamwriter ....................................... SUCCESS [ 0.863 s]

[INFO] otswriter .......................................... SUCCESS [ 1.609 s]

[INFO] oraclewriter ....................................... SUCCESS [ 1.247 s]

[INFO] sqlserverwriter .................................... SUCCESS [ 0.855 s]

[INFO] postgresqlwriter ................................... SUCCESS [ 1.073 s]

[INFO] osswriter .......................................... SUCCESS [ 3.064 s]

[INFO] mongodbwriter ...................................... SUCCESS [ 3.227 s]

[INFO] adswriter .......................................... SUCCESS [ 20.076 s]

[INFO] ocswriter .......................................... SUCCESS [ 37.687 s]

[INFO] rdbmswriter ........................................ SUCCESS [ 1.196 s]

[INFO] hbase11xwriter ..................................... SUCCESS [ 6.453 s]

[INFO] hbase094xwriter .................................... SUCCESS [ 4.315 s]

[INFO] hbase11xsqlwriter .................................. SUCCESS [03:24 min]

[INFO] hbase11xsqlreader .................................. SUCCESS [02:09 min]

[INFO] elasticsearchwriter ................................ SUCCESS [ 21.244 s]

[INFO] tsdbwriter ......................................... SUCCESS [ 1.477 s]

[INFO] adbpgwriter ........................................ SUCCESS [ 24.980 s]

[INFO] gdbwriter .......................................... SUCCESS [01:30 min]

[INFO] cassandrawriter .................................... SUCCESS [ 5.859 s]

[INFO] hbase20xsqlreader .................................. SUCCESS [02:00 min]

[INFO] hbase20xsqlwriter .................................. SUCCESS [ 1.659 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 39:28 min

[INFO] Finished at: 2019-12-12T14:39:51+08:00

[INFO] ------------------------------------------------------------------------

[root@ansible-server DataX]#

2.DataX使用/踩坑

1.配置一个简单例子

做什么好呢,正好手上有三个虚机absible-server client-1 client-2;

我们将absible-server mysql数据库test.user同步到client-1数据库test.user吧;

配置的任务是json格式的,我们假设任务的配置文件叫做mysql2mysql.json

需要用datax.py执行,看一眼datax.py就知道项目是python2的;

mysql2mysql.json位置在上一层目录job文件夹下存放;

1.如何跑任务呢:

python /opt/DataX/target/datax/datax/bin/datax.py /opt/DataX/target/datax/datax/job/mysql2mysql.json

成功的话会是这样的:

但是我相信你需要仔细看一下任务的json文件该如何去配置

2.配置文件格式说明:

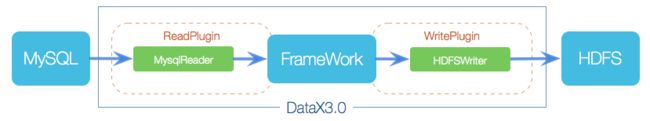

可以看到job分为reader和writer两部分,正体现了datax的架构特征:

阿里云开源离线同步工具DataX3.0介绍

部分参数说明:

- jdbcUrl jdbc:mysql://127.0.0.1:3306/test?characterEncoding=utf8

里面需要配置源IP/目标IP:数据库端口/数据库名?characterEncoding=utf8 控制编码格式,防止写入中文出现错误 - username 这个用户,需要在数据库中创建,并赋予其增删改查等权限

创建的可以用来读取主库/修改从库的用户,我直接使用的root用户,并且后面试错后给root用户【允许所有远程机器访问】的权限

参考MySQL用户授权(GRANT)

/opt/DataX/target/datax/datax/job/mysql2mysql.json :

{

"job": {

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"column": [

"id",

"name"

],

"connection": [

{

"jdbcUrl": ["jdbc:mysql://127.0.0.1:3306/test?characterEncoding=utf8"],

"table": ["user"]

}

],

"password": "asdf",

"username": "root"

}

},

"writer": {

"name": "mysqlwriter",

"parameter": {

"column": [

"id",

"name"

],

"connection": [

{

"jdbcUrl": "jdbc:mysql://192.168.255.134:3306/test?characterEncoding=utf8",

"table": ["user"]

}

],

"password": "asdf",

"username": "root"

}

}

}

],

"setting": {

"speed": {

"channel": "1"

}

}

}

}

上面的文件配置完,就可以用datax.py跑这个json文件了,当然,我第一次跑,遇到了几处错误

3.跑任务出错与测试

1.Host ‘ansible-server’ is not allowed to connect to this MySQL server

原因:mysql服务器出于安全考虑,默认只允许本地登录数据库服务器

因此主服务器133不能用root访问从服务器数据库:

[root@ansible-server job]# mysql -h192.168.255.134 -uroot -p

Enter password:

ERROR 1130 (HY000): Host '192.168.255.133' is not allowed to connect to this MySQL server

从服务器将mysql的root用户的host从"localhost"改成"%",允许所有远程端访问:

主服务器的root用户也作同样修改,进入mysql库,看下root的host,然后改成%,确认下:

mysql> use mysql;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select host, user from user;

+-----------+---------------+

| host | user |

+-----------+---------------+

| localhost | mysql.session |

| localhost | mysql.sys |

| localhost | root |

+-----------+---------------+

3 rows in set (0.28 sec)

mysql> update user set host = '%' where user = 'root';

Query OK, 1 row affected (0.59 sec)

Rows matched: 1 Changed: 1 Warnings: 0

mysql> flush privileges;

Query OK, 0 rows affected (0.14 sec)

mysql> select host, user from user;

+-----------+---------------+

| host | user |

+-----------+---------------+

| % | root |

| localhost | mysql.session |

| localhost | mysql.sys |

+-----------+---------------+

3 rows in set (0.00 sec)

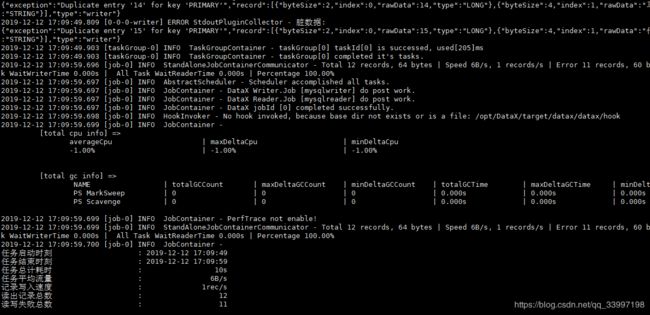

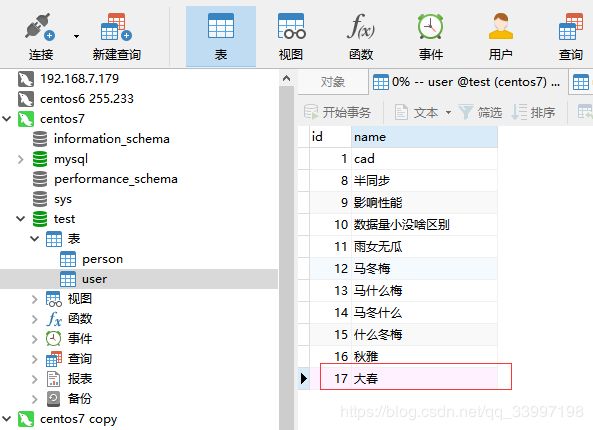

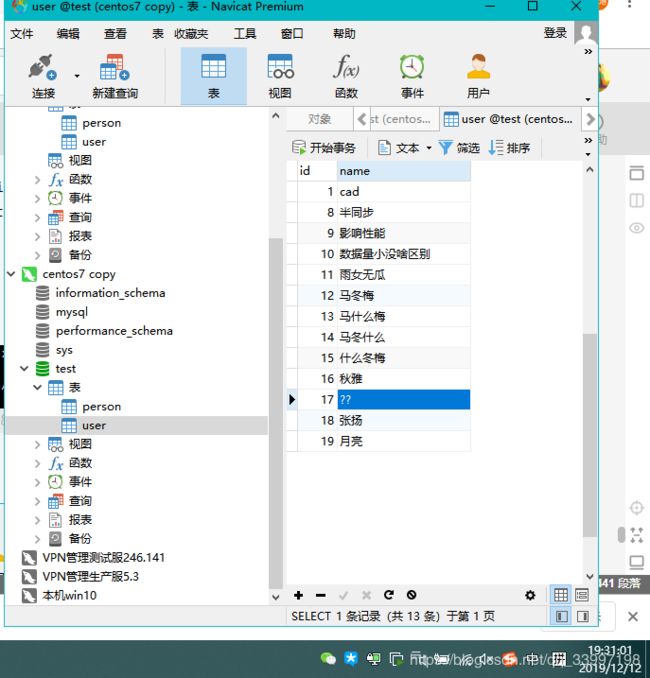

2.跑同步任务成功【但中文插入错误】

检查下有没有将主库的test.user表同步到从库134的test.user表:

出现了错误,将中文数据显示错误,jdbcurl末尾加上?characterEncoding=utf8

主库test.user表插入一条数据:

[root@ansible-server job]# python /opt/DataX/target/datax/datax/bin/datax.py /opt/DataX/target/datax/datax/job/mysql2mysql.json

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !

Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

2019-12-12 17:04:18.115 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl

2019-12-12 17:04:18.122 [main] INFO Engine - the machine info =>

osInfo: Oracle Corporation 1.8 25.232-b09

jvmInfo: Linux amd64 3.10.0-957.27.2.el7.x86_64

cpu num: 4

totalPhysicalMemory: -0.00G

freePhysicalMemory: -0.00G

maxFileDescriptorCount: -1

currentOpenFileDescriptorCount: -1

GC Names [PS MarkSweep, PS Scavenge]

MEMORY_NAME | allocation_size | init_size

PS Eden Space | 256.00MB | 256.00MB

Code Cache | 240.00MB | 2.44MB

Compressed Class Space | 1,024.00MB | 0.00MB

PS Survivor Space | 42.50MB | 42.50MB

PS Old Gen | 683.00MB | 683.00MB

Metaspace | -0.00MB | 0.00MB

2019-12-12 17:04:18.143 [main] INFO Engine -

{

"content":[

{

"reader":{

"name":"mysqlreader",

"parameter":{

"column":[

"id",

"name"

],

"connection":[

{

"jdbcUrl":[

"jdbc:mysql://127.0.0.1:3306/test"

],

"table":[

"user"

]

}

],

"password":"****",

"username":"root"

}

},

"writer":{

"name":"mysqlwriter",

"parameter":{

"column":[

"id",

"name"

],

"connection":[

{

"jdbcUrl":"jdbc:mysql://192.168.255.134:3306/test",

"table":[

"user"

]

}

],

"password":"****",

"username":"root"

}

}

}

],

"setting":{

"speed":{

"channel":"1"

}

}

}

2019-12-12 17:04:18.161 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null

2019-12-12 17:04:18.163 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0

2019-12-12 17:04:18.164 [main] INFO JobContainer - DataX jobContainer starts job.

2019-12-12 17:04:18.165 [main] INFO JobContainer - Set jobId = 0

2019-12-12 17:04:18.467 [job-0] INFO OriginalConfPretreatmentUtil - Available jdbcUrl:jdbc:mysql://127.0.0.1:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true.

2019-12-12 17:04:18.484 [job-0] INFO OriginalConfPretreatmentUtil - table:[user] has columns:[id,name].

2019-12-12 17:04:18.930 [job-0] INFO OriginalConfPretreatmentUtil - table:[user] all columns:[

id,name

].

2019-12-12 17:04:18.967 [job-0] INFO OriginalConfPretreatmentUtil - Write data [

INSERT INTO %s (id,name) VALUES(?,?)

], which jdbcUrl like:[jdbc:mysql://192.168.255.134:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true]

2019-12-12 17:04:18.967 [job-0] INFO JobContainer - jobContainer starts to do prepare ...

2019-12-12 17:04:18.968 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] do prepare work .

2019-12-12 17:04:18.968 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do prepare work .

2019-12-12 17:04:18.968 [job-0] INFO JobContainer - jobContainer starts to do split ...

2019-12-12 17:04:18.969 [job-0] INFO JobContainer - Job set Channel-Number to 1 channels.

2019-12-12 17:04:18.973 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] splits to [1] tasks.

2019-12-12 17:04:18.974 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] splits to [1] tasks.

2019-12-12 17:04:18.990 [job-0] INFO JobContainer - jobContainer starts to do schedule ...

2019-12-12 17:04:18.993 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.

2019-12-12 17:04:18.996 [job-0] INFO JobContainer - Running by standalone Mode.

2019-12-12 17:04:19.007 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.

2019-12-12 17:04:19.010 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.

2019-12-12 17:04:19.010 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.

2019-12-12 17:04:19.019 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started

2019-12-12 17:04:19.024 [0-0-0-reader] INFO CommonRdbmsReader$Task - Begin to read record by Sql: [select id,name from user

] jdbcUrl:[jdbc:mysql://127.0.0.1:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true].

2019-12-12 17:04:19.043 [0-0-0-reader] INFO CommonRdbmsReader$Task - Finished read record by Sql: [select id,name from user

] jdbcUrl:[jdbc:mysql://127.0.0.1:3306/test?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true].

2019-12-12 17:04:19.226 [0-0-0-writer] WARN CommonRdbmsWriter$Task - 回滚此次写入, 采用每次写入一行方式提交. 因为:Duplicate entry '1' for key 'PRIMARY'

2019-12-12 17:04:19.243 [0-0-0-writer] ERROR StdoutPluginCollector -

com.mysql.jdbc.exceptions.jdbc4.MySQLIntegrityConstraintViolationException: Duplicate entry '1' for key 'PRIMARY'

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[na:1.8.0_232]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[na:1.8.0_232]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[na:1.8.0_232]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[na:1.8.0_232]

at com.mysql.jdbc.Util.handleNewInstance(Util.java:377) ~[mysql-connector-java-5.1.34.jar:5.1.34]

at com.mysql.jdbc.Util.getInstance(Util.java:360) ~[mysql-connector-java-5.1.34.jar:5.1.34]

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:971) ~[mysql-connector-java-5.1.34.jar:5.1.34]

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3887) ~[mysql-connector-java-5.1.34.jar:5.1.34]

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3823) ~[mysql-connector-java-5.1.34.jar:5.1.34]

at com.mysql.jdbc.MysqlIO.sendCommand(MysqlIO.java:2435) ~[mysql-connector-java-5.1.34.jar:5.1.34]

at com.mysql.jdbc.MysqlIO.sqlQueryDirect(MysqlIO.java:2582) ~[mysql-connector-java-5.1.34.jar:5.1.34]

at com.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2530) ~[mysql-connector-java-5.1.34.jar:5.1.34]

at com.mysql.jdbc.PreparedStatement.executeInternal(PreparedStatement.java:1907) ~[mysql-connector-java-5.1.34.jar:5.1.34]

at com.mysql.jdbc.PreparedStatement.execute(PreparedStatement.java:1199) ~[mysql-connector-java-5.1.34.jar:5.1.34]

at com.alibaba.datax.plugin.rdbms.writer.CommonRdbmsWriter$Task.doOneInsert(CommonRdbmsWriter.java:382) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.rdbms.writer.CommonRdbmsWriter$Task.doBatchInsert(CommonRdbmsWriter.java:362) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.rdbms.writer.CommonRdbmsWriter$Task.startWriteWithConnection(CommonRdbmsWriter.java:297) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.rdbms.writer.CommonRdbmsWriter$Task.startWrite(CommonRdbmsWriter.java:319) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.plugin.writer.mysqlwriter.MysqlWriter$Task.startWrite(MysqlWriter.java:78) [mysqlwriter-0.0.1-SNAPSHOT.jar:na]

at com.alibaba.datax.core.taskgroup.runner.WriterRunner.run(WriterRunner.java:56) [datax-core-0.0.1-SNAPSHOT.jar:na]

at java.lang.Thread.run(Thread.java:748) [na:1.8.0_232]

2019-12-12 17:04:19.246 [0-0-0-writer] ERROR StdoutPluginCollector - 脏数据:

{"exception":"Duplicate entry '1' for key 'PRIMARY'","record":[{"byteSize":1,"index":0,"rawData":1,"type":"LONG"},{"byteSize":3,"index":1,"rawData":"cad","type":"STRING"}],"type":"writer"}

2019-12-12 17:04:19.257 [0-0-0-writer] ERROR StdoutPluginCollector - 脏数据:

{"exception":"Duplicate entry '8' for key 'PRIMARY'","record":[{"byteSize":1,"index":0,"rawData":8,"type":"LONG"},{"byteSize":3,"index":1,"rawData":"半同步","type":"STRING"}],"type":"writer"}

2019-12-12 17:04:19.263 [0-0-0-writer] ERROR StdoutPluginCollector - 脏数据:

{"exception":"Duplicate entry '9' for key 'PRIMARY'","record":[{"byteSize":1,"index":0,"rawData":9,"type":"LONG"},{"byteSize":4,"index":1,"rawData":"影响性能","type":"STRING"}],"type":"writer"}

2019-12-12 17:04:19.267 [0-0-0-writer] ERROR StdoutPluginCollector - 脏数据:

{"exception":"Duplicate entry '10' for key 'PRIMARY'","record":[{"byteSize":2,"index":0,"rawData":10,"type":"LONG"},{"byteSize":8,"index":1,"rawData":"数据量小没啥区别","type":"STRING"}],"type":"writer"}

2019-12-12 17:04:19.270 [0-0-0-writer] ERROR StdoutPluginCollector - 脏数据:

{"exception":"Duplicate entry '11' for key 'PRIMARY'","record":[{"byteSize":2,"index":0,"rawData":11,"type":"LONG"},{"byteSize":4,"index":1,"rawData":"雨女无瓜","type":"STRING"}],"type":"writer"}

2019-12-12 17:04:19.285 [0-0-0-writer] ERROR StdoutPluginCollector - 脏数据:

{"exception":"Duplicate entry '12' for key 'PRIMARY'","record":[{"byteSize":2,"index":0,"rawData":12,"type":"LONG"},{"byteSize":3,"index":1,"rawData":"马冬梅","type":"STRING"}],"type":"writer"}

2019-12-12 17:04:19.289 [0-0-0-writer] ERROR StdoutPluginCollector - 脏数据:

{"exception":"Duplicate entry '13' for key 'PRIMARY'","record":[{"byteSize":2,"index":0,"rawData":13,"type":"LONG"},{"byteSize":4,"index":1,"rawData":"马什么梅","type":"STRING"}],"type":"writer"}

2019-12-12 17:04:19.294 [0-0-0-writer] ERROR StdoutPluginCollector - 脏数据:

{"exception":"Duplicate entry '14' for key 'PRIMARY'","record":[{"byteSize":2,"index":0,"rawData":14,"type":"LONG"},{"byteSize":4,"index":1,"rawData":"马冬什么","type":"STRING"}],"type":"writer"}

2019-12-12 17:04:19.297 [0-0-0-writer] ERROR StdoutPluginCollector - 脏数据:

{"exception":"Duplicate entry '15' for key 'PRIMARY'","record":[{"byteSize":2,"index":0,"rawData":15,"type":"LONG"},{"byteSize":4,"index":1,"rawData":"什么冬梅","type":"STRING"}],"type":"writer"}

2019-12-12 17:04:19.523 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[508]ms

2019-12-12 17:04:19.524 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.

2019-12-12 17:04:29.015 [job-0] INFO StandAloneJobContainerCommunicator - Total 11 records, 60 bytes | Speed 6B/s, 1 records/s | Error 10 records, 56 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2019-12-12 17:04:29.016 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.

2019-12-12 17:04:29.016 [job-0] INFO JobContainer - DataX Writer.Job [mysqlwriter] do post work.

2019-12-12 17:04:29.016 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] do post work.

2019-12-12 17:04:29.016 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.

2019-12-12 17:04:29.017 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: /opt/DataX/target/datax/datax/hook

2019-12-12 17:04:29.018 [job-0] INFO JobContainer -

[total cpu info] =>

averageCpu | maxDeltaCpu | minDeltaCpu

-1.00% | -1.00% | -1.00%

[total gc info] =>

NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime

PS MarkSweep | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s

PS Scavenge | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s

2019-12-12 17:04:29.018 [job-0] INFO JobContainer - PerfTrace not enable!

2019-12-12 17:04:29.019 [job-0] INFO StandAloneJobContainerCommunicator - Total 11 records, 60 bytes | Speed 6B/s, 1 records/s | Error 10 records, 56 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2019-12-12 17:04:29.020 [job-0] INFO JobContainer -

任务启动时刻 : 2019-12-12 17:04:18

任务结束时刻 : 2019-12-12 17:04:29

任务总计耗时 : 10s

任务平均流量 : 6B/s

记录写入速度 : 1rec/s

读出记录总数 : 11

读写失败总数 : 10

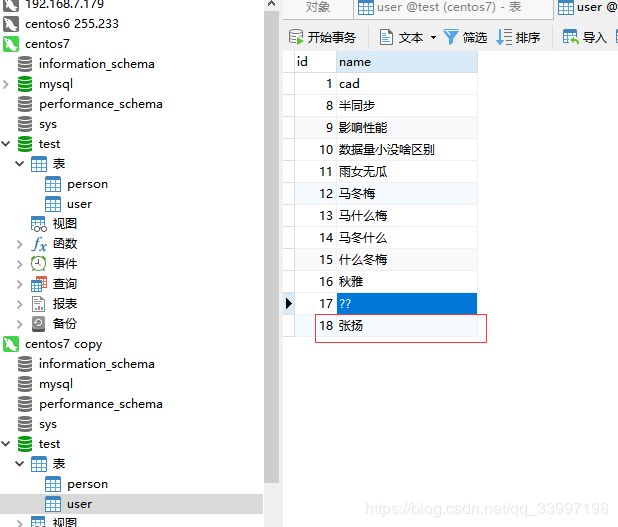

从库结果:

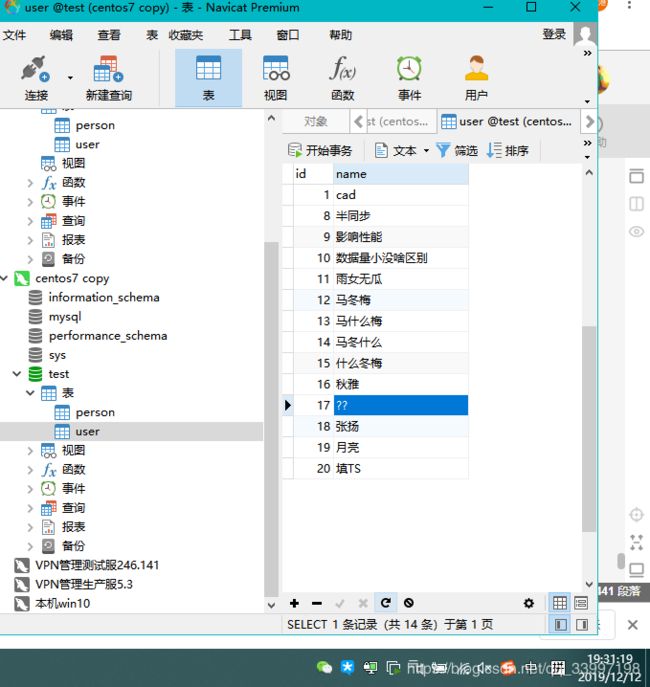

再次尝试:

但是之前错误的没有改正

相较于mysql自带的主从复制功能,这里阿里的DataX同步“主从库”不会因为数据不一致而直接同步不成功,IO线程挂掉,而是选择忽略,接着同步其他数据。蛮像Noteability软件的。

3.结合crontabs,使DataX可以配置定时任务

1.确保安装crontabs

yum install crontabs

# crontabs-1.11-6.20121102git.el7.noarch 已安装并且是最新版本

常用命令:

service crond start # 启服务

service crond stop # 停服务

service crond restart # 重启服务

service crond reload # 重载配置

service crond status # 查看服务状态

crontab -l # 查看当前用户的定时任务

2.如何创建crontab定时任务

我们在/opt/DataX/target/datax/datax/job下创建定时任务文件,因为是crontabs+datax,就叫做crondatax吧:

vim crondatax

30,31,32,33 19 * * * python /opt/DataX/target/datax/datax/bin/datax.py /opt/DataX/target/datax/datax/job/mysql2mysql.json >>/opt/DataX/target/datax/datax/job/log.`date +\%Y\%m\%d\%H\%M\%S` 2>&1

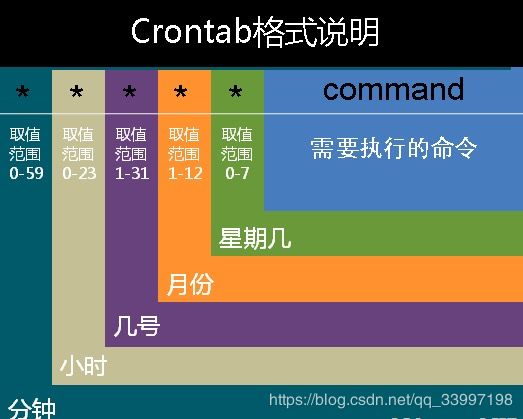

格式说明:参照下图,解释下上面的测试例子:19时的30,31,32,33分分别做一次这个任务python /opt/DataX/target/datax/datax/bin/datax.py /opt/DataX/target/datax/datax/job/mysql2mysql.json,然后将每次执行的日志记录下,下图的log.xxxx就是,内容不赘述,都成功了。

填写说明:再新建一个任务,就在crondatax文件换一行写入即可。

![]()

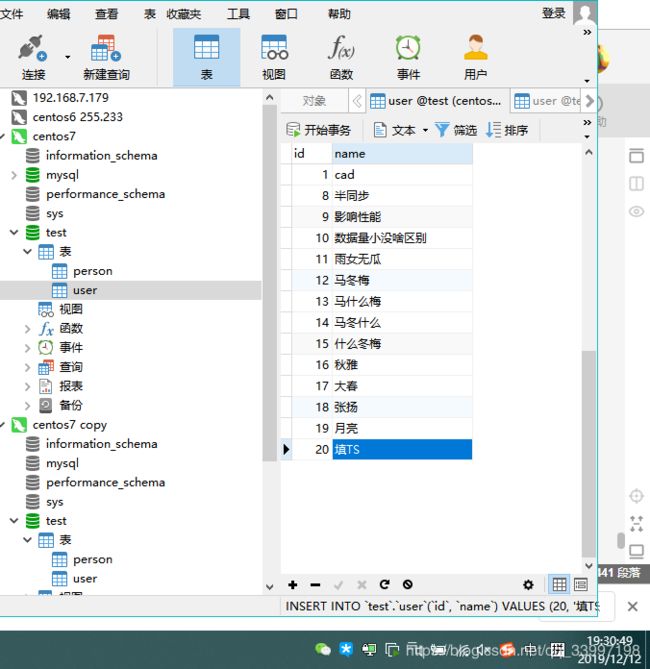

跑任务,看下有没有:

![]()

跑起來了,这主库加的一条:

还未刷新navicat:

刷新,数据已经同步进来了,【我手速太慢,截图】:

4.未来计划

深入使用mysql自带的主从同步功能,并注重了解边界;

结合更常见的场景去使用crontab+datax做定时异步任务,与前者作对比,争取多发现几个各自的特点;

我知乎搜下然后去实验

下面还有基于datax的web项目,我还没看,不过要是我们去开发,调用api实现前端设置对一些库的同步,备份,等操作;

碍于经验和眼光,我暂时没别的想法。

大家都知道 mysql有自带的主从同步功能,也是需要从库去建立连接,然后开启对二进制文件的IO和SQL线程

但是同步过程中若因为同步问题出错,IO线程挂掉,还要人工干预

阿里的datax牺牲了相较于mysql主从同步(半同步模式)实时性较高的优势,提高了同步的稳定性能,再与crontab定时工具灵魂相融合,将运维的数据备份、同步等任务变得个性化,完美化。