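This lab uses nginx to serve a simple web page and combines it with an HA (high-availability) cluster to provide a highly available service. The complete installation and configuration process follows: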

The overall topology is shown below:

 -------               -------
|  HA1  |_____________|  HA2  |
|_______|             |_______|

VIP: 192.168.1.80

HA1: 192.168.1.78 (node1)

HA2: 192.168.1.151 (node2)

I. Initial environment setup

1. Install the development tools and libraries

#yum groupinstall "Development Tools" "Development Libraries"

#yum install gcc openssl-devel zlib-devel

#yum install pcre-devel

2. Create the nginx user and group

#groupadd nginx

#useradd -g nginx -M -s /sbin/nologin nginx

3. Configure the /etc/hosts file

#vim /etc/hosts

192.168.1.78 node1.luowei.com node1

192.168.1.151 node2.luowei.com node2
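If you script this step, it helps to keep it idempotent so that re-running it on either node does not add duplicate lines. A minimal sketch; the function name add_host_entry and its file argument are hypothetical, chosen for illustration:

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME..." to a hosts file unless a line
# for that IP already exists, so the step can be re-run safely.
add_host_entry() {
    file=$1; ip=$2; shift 2
    # Escape the dots so 192.168.1.78 does not match e.g. 192x168x1x78
    pattern=$(printf '%s' "$ip" | sed 's/\./\\./g')
    if grep -Eq "^${pattern}[[:space:]]" "$file" 2>/dev/null; then
        return 0
    fi
    echo "$ip $*" >> "$file"
}

# Usage on each node, as root:
# add_host_entry /etc/hosts 192.168.1.78  node1.luowei.com node1
# add_host_entry /etc/hosts 192.168.1.151 node2.luowei.com node2
```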

4. Set up passwordless SSH between the two HA nodes:

#ssh-keygen -t rsa

#ssh-copy-id -i ~/.ssh/id_rsa.pub root@node2

Perform steps 3 and 4 on HA2 as well (there, copy the key to root@node1).
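To confirm that key-based login really works before the cluster depends on it, ssh can be run in batch mode, which makes it fail instead of prompting for a password. A small sketch; the function name ssh_ok is hypothetical:

```shell
#!/bin/sh
# Succeeds only if "ssh HOST" logs in without a password prompt.
# BatchMode=yes: fail instead of asking for a password.
# ConnectTimeout=5: do not hang for long on an unreachable host.
ssh_ok() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true >/dev/null 2>&1
}

# Usage: on HA1 check node2, on HA2 check node1.
# if ssh_ok node2; then echo "node2: OK"; else echo "node2: key login not working"; fi
```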

II. Install nginx. I use nginx-1.1.3.tar.gz, the latest version at the time of writing (released on 2011-09-14); see the official website http://www.nginx.com for details.

#tar xf nginx-1.1.3.tar.gz

#cd nginx-1.1.3


#./configure \
--prefix=/usr \
--sbin-path=/usr/sbin/nginx \
--conf-path=/etc/nginx/nginx.conf \
--error-log-path=/var/log/nginx/error.log \
--http-log-path=/var/log/nginx/access.log \
--pid-path=/var/run/nginx/nginx.pid \
--lock-path=/var/lock/nginx.lock \
--user=nginx \
--group=nginx \
--with-http_ssl_module \
--with-http_flv_module \
--with-http_stub_status_module \
--with-http_gzip_static_module \
--http-client-body-temp-path=/var/tmp/nginx/client/ \
--http-proxy-temp-path=/var/tmp/nginx/proxy/ \
--http-fastcgi-temp-path=/var/tmp/nginx/fcgi/ \
--with-pcre

The backslash must be the last character of every continued line, so notes cannot be written after it. What the options do: --prefix sets the installation prefix; --sbin-path, --conf-path, --error-log-path and --http-log-path set the paths of the nginx binary, the main configuration file, the error log and the access log; --pid-path sets the PID file and --lock-path the lock file; --user and --group set the account the worker processes run as; --with-http_ssl_module adds SSL support; --with-http_flv_module adds FLV pseudo-streaming; --with-http_stub_status_module adds a basic status page; --with-http_gzip_static_module serves pre-compressed .gz files; the three --http-*-temp-path options set the temporary directories for client request bodies, proxied responses and FastCGI responses; --with-pcre enables PCRE regular-expression support.

#make && make install    # compile and install
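After installation you can check which optional modules the binary was actually built with; nginx -V prints the configure arguments (on stderr). A small filter sketch; the function name built_modules is hypothetical:

```shell
#!/bin/sh
# Print the --with-*_module options found in "nginx -V" output read from stdin.
built_modules() {
    # One argument per line, then keep only the module switches
    tr ' ' '\n' | grep -E '^--with-[a-z_]+_module$'
}

# Usage after "make install" (nginx -V writes to stderr, hence 2>&1):
# /usr/sbin/nginx -V 2>&1 | built_modules
```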

III. Create the service script, then start the service and test it

#vim /etc/init.d/nginx

#!/bin/sh
#
# nginx - this script starts and stops the nginx daemon
#
# chkconfig:   - 85 15
# description: Nginx is an HTTP(S) server, HTTP(S) reverse \
#              proxy and IMAP/POP3 proxy server
# processname: nginx
# config:      /etc/nginx/nginx.conf
# config:      /etc/sysconfig/nginx
# pidfile:     /var/run/nginx/nginx.pid

# Source function library.
. /etc/rc.d/init.d/functions

# Source networking configuration.
. /etc/sysconfig/network

# Check that networking is up.
[ "$NETWORKING" = "no" ] && exit 0

nginx="/usr/sbin/nginx"
prog=$(basename $nginx)

NGINX_CONF_FILE="/etc/nginx/nginx.conf"

[ -f /etc/sysconfig/nginx ] && . /etc/sysconfig/nginx

lockfile=/var/lock/subsys/nginx

make_dirs() {
    # Create every *-temp-path directory given to ./configure and hand it
    # to the worker user (both read from the output of "nginx -V").
    user=`$nginx -V 2>&1 | grep "configure arguments:" | sed 's/[^*]*--user=\([^ ]*\).*/\1/g' -`
    options=`$nginx -V 2>&1 | grep 'configure arguments:'`
    for opt in $options; do
        if [ `echo $opt | grep '.*-temp-path'` ]; then
            value=`echo $opt | cut -d "=" -f 2`
            if [ ! -d "$value" ]; then
                # echo "creating" $value
                mkdir -p $value && chown -R $user $value
            fi
        fi
    done
}

start() {
    [ -x $nginx ] || exit 5
    [ -f $NGINX_CONF_FILE ] || exit 6
    make_dirs
    echo -n $"Starting $prog: "
    daemon $nginx -c $NGINX_CONF_FILE
    retval=$?
    echo
    [ $retval -eq 0 ] && touch $lockfile
    return $retval
}

stop() {
    echo -n $"Stopping $prog: "
    # QUIT: graceful shutdown
    killproc $prog -QUIT
    retval=$?
    echo
    [ $retval -eq 0 ] && rm -f $lockfile
    return $retval
}

restart() {
    configtest || return $?
    stop
    sleep 1
    start
}

reload() {
    configtest || return $?
    echo -n $"Reloading $prog: "
    # HUP: re-read the configuration and replace the worker processes gracefully
    killproc $nginx -HUP
    retval=$?
    echo
    return $retval
}

force_reload() {
    restart
}

configtest() {
    $nginx -t -c $NGINX_CONF_FILE
}

rh_status() {
    status $prog
}

rh_status_q() {
    rh_status >/dev/null 2>&1
}

case "$1" in
    start)
        rh_status_q && exit 0
        $1
        ;;
    stop)
        rh_status_q || exit 0
        $1
        ;;
    restart|configtest)
        $1
        ;;
    reload)
        rh_status_q || exit 7
        $1
        ;;
    force-reload)
        force_reload
        ;;
    status)
        rh_status
        ;;
    condrestart|try-restart)
        rh_status_q || exit 0
        restart
        ;;
    *)
        echo $"Usage: $0 {start|stop|status|restart|condrestart|try-restart|reload|force-reload|configtest}"
        exit 2
esac
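make_dirs in the script above works by scanning the "configure arguments:" line printed by nginx -V for options ending in -temp-path and taking the text after "="; the owner comes from --user. The sketch below reproduces only that parsing (no mkdir or chown) so you can see which directories the script will create; the function names temp_paths and user_of are hypothetical:

```shell
#!/bin/sh
# Print the value of every *-temp-path option in a "configure arguments:" line,
# the same values make_dirs passes to mkdir -p.
temp_paths() {
    for opt in $1; do
        case $opt in
            *-temp-path=*) echo "${opt#*=}" ;;
        esac
    done
}

# Extract the --user value with the same sed expression make_dirs uses.
user_of() {
    echo "$1" | sed 's/[^*]*--user=\([^ ]*\).*/\1/g'
}

# Usage on an installed system:
# args=$(/usr/sbin/nginx -V 2>&1 | grep 'configure arguments:')
# temp_paths "$args"; user_of "$args"
```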


#chmod +x /etc/init.d/nginx    # make the script executable

#chkconfig --add nginx    # register it as a system service

#service nginx start    # start nginx

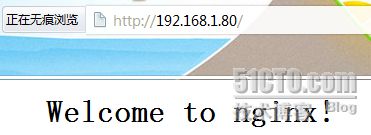

Now open http://192.168.1.78 in a browser and the nginx test page should appear.

IV. Repeat all of the steps above on HA2 as well; I omit them here.

V. Configure corosync

Before configuring corosync, make sure the nginx service is stopped and will not start automatically at boot; from now on the cluster, not the init system, decides on which node nginx runs:

#service nginx stop

#chkconfig nginx off

(Note: do this on both nodes; it is a prerequisite for the HA cluster.)
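Since passwordless SSH from HA1 to node2 was set up in step I.4, the peer can be handled from HA1 (HA1 itself is done with the two local commands). A sketch under that assumption; the function name disable_nginx_on and the RUN dry-run hook are hypothetical (RUN=echo prints the commands instead of running them):

```shell
#!/bin/sh
# Stop nginx and disable it at boot on the given remote nodes, via ssh.
# RUN is a dry-run hook: with RUN=echo the ssh commands are only printed.
RUN=${RUN:-}
disable_nginx_on() {
    for node in "$@"; do
        $RUN ssh "$node" -- 'service nginx stop; chkconfig nginx off'
    done
}

# Usage on HA1:
# service nginx stop; chkconfig nginx off    # the local node
# disable_nginx_on node2                     # the peer
```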

Install and configure corosync:

[root@node1 corosync]# ls

cluster-glue-1.0.6-1.6.el5.i386.rpm

cluster-glue-libs-1.0.6-1.6.el5.i386.rpm

corosync-1.2.7-1.1.el5.i386.rpm

corosynclib-1.2.7-1.1.el5.i386.rpm

heartbeat-3.0.3-2.3.el5.i386.rpm

heartbeat-libs-3.0.3-2.3.el5.i386.rpm

libesmtp-1.0.4-5.el5.i386.rpm

openais-1.1.3-1.6.el5.i386.rpm

openaislib-1.1.3-1.6.el5.i386.rpm

pacemaker-1.0.11-1.2.el5.i386.rpm

pacemaker-libs-1.0.11-1.2.el5.i386.rpm

perl-TimeDate-1.16-5.el5.noarch.rpm

resource-agents-1.0.4-1.1.el5.i386.rpm

These are all the required packages. I install them from the local directory with yum, on both nodes:

#yum localinstall * --nogpgcheck -y


#cd /etc/corosync/

#cp corosync.conf.example corosync.conf

#vim corosync.conf

compatibility: whitetank

totem {
        version: 2
        secauth: on
        threads: 0
        interface {
                ringnumber: 0
                # bindnetaddr: network address of the subnet the cluster uses
                bindnetaddr: 192.168.1.0
                mcastaddr: 226.94.1.1
                mcastport: 5405
        }
}

logging {
        fileline: off
        to_stderr: no
        to_logfile: yes
        to_syslog: no
        logfile: /var/log/cluster/corosync.log
        debug: off
        timestamp: on
        logger_subsys {
                subsys: AMF
                debug: off
        }
}

amf {
        mode: disabled
}

service {
        ver: 0
        name: pacemaker
}

aisexec {
        user: root
        group: root
}

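That is my complete configuration file.

#mkdir /var/log/cluster    # create the log directory

#corosync-keygen    # generate the authentication key /etc/corosync/authkey (needed because secauth is on)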
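The bindnetaddr value in the configuration is the network address of the cluster subnet, that is, the node's IP address ANDed octet by octet with its netmask. Assuming a 255.255.255.0 netmask (not stated in the original), both 192.168.1.78 and 192.168.1.151 give 192.168.1.0. A small sketch of that calculation; the function name network_addr is hypothetical:

```shell
#!/bin/sh
# Network address = IPv4 address AND netmask, computed per octet.
network_addr() {
    ip=$1; mask=$2
    # Split both dotted quads on "." into $1..$4 (address) and $5..$8 (mask)
    old_ifs=$IFS; IFS=.
    set -- $ip $mask
    IFS=$old_ifs
    echo "$(( $1 & $5 )).$(( $2 & $6 )).$(( $3 & $7 )).$(( $4 & $8 ))"
}

# Usage: network_addr 192.168.1.78 255.255.255.0    prints 192.168.1.0
```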


Next, copy these two files to HA2 with scp (-p preserves their modes), and create the log directory there as well:

#scp -p /etc/corosync/authkey /etc/corosync/corosync.conf node2:/etc/corosync/

#ssh node2 -- 'mkdir -p /var/log/cluster'
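corosync-keygen should create the authkey readable by root only (mode 400), and scp -p keeps that mode on node2. To compare both copies, a small sketch; the function name file_mode is hypothetical and it assumes GNU coreutils stat, as shipped with CentOS/RHEL:

```shell
#!/bin/sh
# Print the octal permission bits of a file (GNU stat syntax).
file_mode() {
    stat -c %a "$1"
}

# Usage on HA1; both lines are expected to show the same mode:
# file_mode /etc/corosync/authkey
# ssh node2 -- stat -c %a /etc/corosync/authkey
```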

Start the HA cluster:

#service corosync start

Starting Corosync Cluster Engine (corosync): [ OK ]

#ssh node2 -- 'service corosync start'    # start corosync on node2 from HA1

Starting Corosync Cluster Engine (corosync): [ OK ]

As the output shows, corosync is now running on both nodes.


VI. Configure the nginx resources in the cluster

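Before defining resources, disable STONITH (no fencing device is configured in this lab) and tell the cluster to ignore loss of quorum, because in a two-node cluster the surviving node alone never holds a majority: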

[root@node1 ~]# crm

crm(live)# configure

crm(live)configure# property no-quorum-policy=ignore

crm(live)configure# property stonith-enabled=false
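The reason for no-quorum-policy=ignore is the majority rule: a partition has quorum only when it holds more than half of the expected votes. With 2 expected votes, a lone surviving node has 1 vote, which is not more than half, so without this setting it would stop its resources instead of taking over. A sketch of the rule; the function name has_quorum is hypothetical:

```shell
#!/bin/sh
# Majority rule: quorum iff votes > expected / 2 (integer-safe form: 2*votes > expected).
has_quorum() {
    votes=$1; expected=$2
    [ $(( votes * 2 )) -gt "$expected" ]
}

# Usage:
# has_quorum 1 2 || echo "a single node of a two-node cluster has no quorum"
```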

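Now define the nginx resources. Clients need one IP address to reach the shared web page (the VIP given above), and an nginx process has to run on the node that holds it, so two resources are enough: the VIP and the nginx service. Putting both in a group keeps them on the same node and starts them in order, the IP first and then nginx: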

crm(live)configure# primitive NginxIP ocf:heartbeat:IPaddr params ip="192.168.1.80"

crm(live)configure# primitive NginxServer lsb:nginx

crm(live)configure# group Nginxgroup NginxIP NginxServer

crm(live)configure# verify

crm(live)configure# commit

crm(live)configure# bye
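The same configuration can also be applied from the shell without entering the interactive crm prompt, using the commonly seen one-line `crm configure ...` form; I have not verified this against the exact crm shell version used here, so treat it as a sketch. The function name configure_nginx_cluster and the CRM dry-run hook are hypothetical (CRM=echo prints the commands instead of running them):

```shell
#!/bin/sh
# Apply the cluster settings and nginx resources non-interactively.
# CRM is a dry-run hook: with CRM=echo the commands are only printed.
CRM=${CRM:-crm}
configure_nginx_cluster() {
    $CRM configure property no-quorum-policy=ignore
    $CRM configure property stonith-enabled=false
    $CRM configure primitive NginxIP ocf:heartbeat:IPaddr params ip="192.168.1.80"
    $CRM configure primitive NginxServer lsb:nginx
    # The group must come after its members are defined
    $CRM configure group Nginxgroup NginxIP NginxServer
}

# Usage on one node only (the CIB is replicated to the other node):
# configure_nginx_cluster
```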

[root@node1 ~]# crm status

============

Last updated: Fri Sep 16 23:18:28 2011

Stack: openais

Current DC: node1.luowei.com - partition with quorum

Version: 1.0.11-1554a83db0d3c3e546cfd3aaff6af1184f79ee87

2 Nodes configured, 2 expected votes

1 Resources configured.

============


Online: [ node2.luowei.com node1.luowei.com ]

Resource Group: Nginxgroup

NginxIP (ocf::heartbeat:IPaddr): Started node2.luowei.com

NginxServer (lsb:nginx): Started node2.luowei.com

Both nodes are online and the configured resources have started (here on node2).

Next, look at the resulting cluster configuration:

[root@node2 ~]# crm configure show

INFO: building help index

node node1.luowei.com

node node2.luowei.com

primitive NginxIP ocf:heartbeat:IPaddr \

params ip="192.168.1.80"

primitive NginxServer lsb:nginx

group Nginxgroup NginxIP NginxServer

property $id="cib-bootstrap-options" \

dc-version="1.0.11-1554a83db0d3c3e546cfd3aaff6af1184f79ee87" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

no-quorum-policy="ignore" \

stonith-enabled="false"


Now test the setup:

1. Open http://192.168.1.80 in a browser; the nginx page should appear.


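4. Stop the corosync service on HA2, then check again with the commands above. The web page is still served, the eth0:0 interface (the VIP alias) that was on HA2 has moved to HA1, and the resources have failed over to HA1. The experiment succeeded.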
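To script the failover check, it is enough to read the node name from the "Started" lines of crm status, like the ones shown earlier. A sketch; the function name resource_node is hypothetical, and the 10-second wait in the usage is an arbitrary assumption, not a measured value:

```shell
#!/bin/sh
# Print the node a resource is started on, reading "crm status" output from stdin.
# Matches lines such as: NginxServer (lsb:nginx): Started node2.luowei.com
resource_node() {
    awk -v r="$1" '$1 == r && $(NF-1) == "Started" { print $NF }'
}

# Failover test from HA1:
# crm status | resource_node NginxServer    # node currently running nginx
# ssh node2 -- 'service corosync stop'
# sleep 10                                  # assumed time for the group to move
# crm status | resource_node NginxServer    # should now report node1.luowei.com
```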