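A fairly complete installation guide for Hadoop cluster components

Operating system: CentOS 7.4

Kernel: 3.10.0-693.el7.x86_64

Prerequisite: SELinux and firewalld are disabled

All software packages are uploaded to /usr/local/src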

1. Cluster environment

192.168.217.136 master

192.168.217.137 slave1

192.168.217.138 slave2
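
The host names above have to resolve on every node before commands such as `ssh-copy-id slave1` can work. The original does not show how this was done; a common approach, sketched here as an assumption, is to append the mapping to /etc/hosts on each machine. The helper name `cluster_hosts` is hypothetical.

```shell
# Sketch: print the host mapping used in this tutorial.
# cluster_hosts is a hypothetical helper name, not part of any tool.
cluster_hosts() {
  cat <<'EOF'
192.168.217.136 master
192.168.217.137 slave1
192.168.217.138 slave2
EOF
}

# Preview the lines. To apply them, run on every node as root:
#   cluster_hosts >> /etc/hosts
cluster_hosts
```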

Set up passwordless SSH login:

Run on the master node:

ssh-keygen

ssh-copy-id master

ssh-copy-id slave1

ssh-copy-id slave2

2. Set up the Java environment

tar -xzvf jdk-8u172-linux-x64.tar.gz

vim /etc/profile and append at the end:

export JAVA_HOME=/usr/local/src/jdk1.8.0_172

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

Then reload the environment variables:

source /etc/profile
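
This tutorial edits /etc/profile many times, and pasting the same lines twice leaves duplicates behind. As a minimal sketch (the helper `append_once` and the variable `PROFILE_FILE` are hypothetical names), a line can be appended only when it is not already present. The demonstration writes to a scratch file so it is safe to run; pointing `PROFILE_FILE` at /etc/profile as root would apply it for real.

```shell
# append_once FILE LINE: add LINE to FILE unless an identical line exists.
# Hypothetical helper for illustration.
append_once() {
  local file=$1 line=$2
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Defaults to a scratch file; set PROFILE_FILE=/etc/profile to apply for real.
PROFILE_FILE=${PROFILE_FILE:-$(mktemp)}
append_once "$PROFILE_FILE" 'export JAVA_HOME=/usr/local/src/jdk1.8.0_172'
append_once "$PROFILE_FILE" 'export PATH=${JAVA_HOME}/bin:$PATH'
# Repeating a call leaves the file unchanged.
append_once "$PROFILE_FILE" 'export JAVA_HOME=/usr/local/src/jdk1.8.0_172'
cat "$PROFILE_FILE"
```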

java -version

3. Install Hadoop 2.6.5

#master node

3.1 Extract

cd /usr/local/src

tar -xzvf hadoop-2.6.5.tar.gz

3.2 Edit the Hadoop configuration files

cd /usr/local/src/hadoop-2.6.5/etc/hadoop

vim hadoop-env.sh

export JAVA_HOME=/usr/local/src/jdk1.8.0_172

vim yarn-env.sh

export JAVA_HOME=/usr/local/src/jdk1.8.0_172

vim slaves

slave1

slave2

vim core-site.xml

fs.defaultFS

hdfs://192.168.217.136:9000

hadoop.tmp.dir

file:/usr/local/src/hadoop-2.6.5/tmp/
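
Each name/value pair listed for the *-site.xml files in this section corresponds to one `<property>` element inside `<configuration>`. The sketch below shows that mapping for core-site.xml. The helpers `hadoop_property` and `core_site` are hypothetical names introduced only for illustration, and the helper does no XML escaping, which the values here do not need.

```shell
# hadoop_property NAME VALUE: print one <property> element.
# Hypothetical helper; Hadoop itself ships no such command.
hadoop_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Print a core-site.xml carrying the two settings listed above.
core_site() {
  echo '<?xml version="1.0"?>'
  echo '<configuration>'
  hadoop_property fs.defaultFS 'hdfs://192.168.217.136:9000'
  hadoop_property hadoop.tmp.dir 'file:/usr/local/src/hadoop-2.6.5/tmp/'
  echo '</configuration>'
}

# Preview on stdout; redirect into etc/hadoop/core-site.xml to apply.
core_site
```

The same pattern applies to hdfs-site.xml, mapred-site.xml and yarn-site.xml with their own name/value pairs.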

vim hdfs-site.xml

dfs.namenode.secondary.http-address

master:9001

dfs.namenode.name.dir

file:/usr/local/src/hadoop-2.6.5/dfs/name

dfs.datanode.data.dir

file:/usr/local/src/hadoop-2.6.5/dfs/data

dfs.replication

2

cp mapred-site.xml.template mapred-site.xml

vim mapred-site.xml

mapreduce.framework.name

yarn

vim yarn-site.xml

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.nodemanager.aux-services.mapreduce.shuffle.class

org.apache.hadoop.mapred.ShuffleHandler

yarn.resourcemanager.address

master:8032

yarn.resourcemanager.scheduler.address

master:8030

yarn.resourcemanager.resource-tracker.address

master:8035

yarn.resourcemanager.admin.address

master:8033

yarn.resourcemanager.webapp.address

master:8088

yarn.nodemanager.vmem-check-enabled

false

3.3 Create the temporary and data directories

mkdir /usr/local/src/hadoop-2.6.5/tmp

mkdir -p /usr/local/src/hadoop-2.6.5/dfs/name

mkdir -p /usr/local/src/hadoop-2.6.5/dfs/data

3.4 Configure environment variables

#master, slave1, slave2

vim /etc/profile

export HADOOP_HOME=/usr/local/src/hadoop-2.6.5

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

#Reload the environment variables

source /etc/profile

3.5 Copy the installation to the slave nodes

scp -r /usr/local/src/hadoop-2.6.5 slave1:/usr/local/src/

scp -r /usr/local/src/hadoop-2.6.5 slave2:/usr/local/src/
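
The same copy-to-every-slave step recurs for ZooKeeper, HBase, Kafka, Spark, Storm and Flink. One way to avoid retyping it, sketched here under the assumption that passwordless SSH from section 1 is in place, is a small loop. The helper `distribute` and the `DRY_RUN` switch are hypothetical; by default the sketch only prints the commands it would run.

```shell
# distribute DIR HOST...: copy DIR into /usr/local/src/ on each HOST.
# Hypothetical helper. With DRY_RUN=1 (the default here) it only prints
# the scp commands; set DRY_RUN=0 to actually run them.
DRY_RUN=${DRY_RUN:-1}
distribute() {
  local dir=$1 host
  shift
  for host in "$@"; do
    if [ "$DRY_RUN" = 1 ]; then
      echo "scp -r $dir $host:/usr/local/src/"
    else
      scp -r "$dir" "$host:/usr/local/src/"
    fi
  done
}

distribute /usr/local/src/hadoop-2.6.5 slave1 slave2
```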

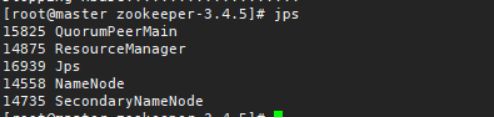

3.6 Start the cluster

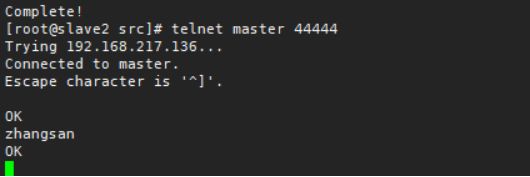

#Format the NameNode

hadoop namenode -format

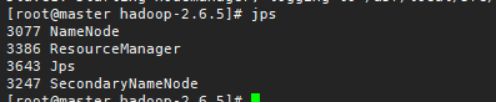

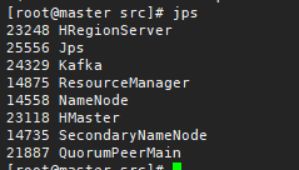

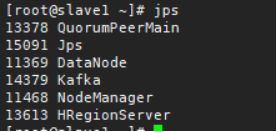

3.7 Cluster status:

#master: jps
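
Reading jps output by eye on three machines is error-prone. The following sketch (the helper `missing_daemons` is a hypothetical name) reads jps output on stdin and prints any expected daemon that is absent. With the configuration above one would expect NameNode, SecondaryNameNode and ResourceManager on the master, and DataNode and NodeManager on the slaves. The sample input is made up for the demonstration.

```shell
# missing_daemons NAME...: read `jps` output on stdin and print every
# expected daemon NAME that is absent. Hypothetical helper.
missing_daemons() {
  local listing name
  listing=$(cat)
  for name in "$@"; do
    printf '%s\n' "$listing" |
      awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' ||
      echo "$name"
  done
}

# On the master:  jps | missing_daemons NameNode SecondaryNameNode ResourceManager
# On slave1/2:    jps | missing_daemons DataNode NodeManager
# Demonstration with invented sample output (the PIDs are not real):
printf '2101 NameNode\n2290 SecondaryNameNode\n2755 Jps\n' |
  missing_daemons NameNode SecondaryNameNode ResourceManager
```

An empty result means every expected daemon was found.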

3.8 Commands: the same as in Hadoop 1.x

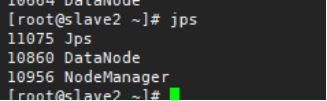

3.9 Stop the cluster

4. Install ZooKeeper 3.4.5

4.1 cd /usr/local/src/

tar -xzvf zookeeper-3.4.5.tar.gz

4.2 Edit the ZooKeeper configuration

#Master

cd zookeeper-3.4.5

#Create the log and data directories

mkdir data

mkdir log

#Edit the configuration

cd conf

mv zoo_sample.cfg zoo.cfg

vim zoo.cfg

dataDir=/usr/local/src/zookeeper-3.4.5/data

dataLogDir=/usr/local/src/zookeeper-3.4.5/log

server.1=master:2888:3888

server.2=slave1:2888:3888

server.3=slave2:2888:3888

4.3 Configure environment variables

#master/slave1/2

vim /etc/profile

ZOOKEEPER_HOME=/usr/local/src/zookeeper-3.4.5

export PATH=$PATH:$ZOOKEEPER_HOME/bin

source /etc/profile

4.4 Copy the installation to slave1/2

#Master

scp -r /usr/local/src/zookeeper-3.4.5 root@slave1:/usr/local/src/

scp -r /usr/local/src/zookeeper-3.4.5 root@slave2:/usr/local/src/

4.5 Write the id on each node

#Master

echo "1" > /usr/local/src/zookeeper-3.4.5/data/myid

#Slave1

echo "2" > /usr/local/src/zookeeper-3.4.5/data/myid

#Slave2

echo "3" > /usr/local/src/zookeeper-3.4.5/data/myid
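
The number written to myid must match the N of that host's `server.N=` line in zoo.cfg. Instead of typing it by hand on each node, it can be derived from zoo.cfg, as in this sketch. The helper `myid_for` is a hypothetical name, and the approach assumes each node's hostname is exactly the name used in zoo.cfg.

```shell
# myid_for HOST CFG: print the N from the "server.N=HOST:..." line in CFG.
# Hypothetical helper, not part of ZooKeeper.
myid_for() {
  sed -n "s/^server\.\([0-9][0-9]*\)=$1:.*/\1/p" "$2"
}

# Demonstration against a scratch copy of the server lines from zoo.cfg.
cfg=$(mktemp)
printf 'server.1=master:2888:3888\nserver.2=slave1:2888:3888\nserver.3=slave2:2888:3888\n' > "$cfg"
myid_for slave1 "$cfg"

# On a real node one might run (paths as in this tutorial):
#   myid_for "$(hostname)" /usr/local/src/zookeeper-3.4.5/conf/zoo.cfg \
#     > /usr/local/src/zookeeper-3.4.5/data/myid
```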

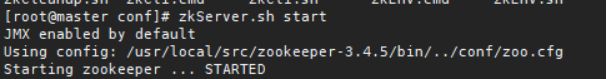

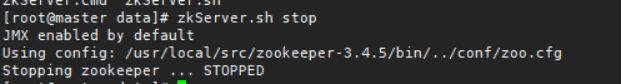

4.6 Start the ZooKeeper service

#Master, Slave1, Slave2

zkServer.sh start

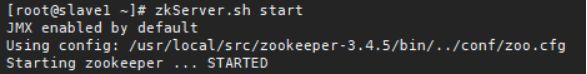

4.7 Check the running status

zkServer.sh status
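
In a healthy three-node ensemble, `zkServer.sh status` reports `Mode: leader` on one node and `Mode: follower` on the other two. The sketch below tallies those lines from collected output. The helper `count_modes` is a hypothetical name, and the sample input is invented for the demonstration.

```shell
# count_modes: read concatenated `zkServer.sh status` output on stdin and
# print "<leaders> <followers>". Hypothetical helper.
count_modes() {
  awk '/^Mode: leader/ { l++ } /^Mode: follower/ { f++ } END { print l + 0, f + 0 }'
}

# Demonstration with sample status lines:
printf 'Mode: follower\nMode: leader\nMode: follower\n' | count_modes

# Against the real cluster one might collect the output over ssh, using the
# full path because a non-interactive ssh session may not load /etc/profile:
#   for h in master slave1 slave2; do
#     ssh "$h" /usr/local/src/zookeeper-3.4.5/bin/zkServer.sh status 2>&1
#   done | count_modes
```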

#Master

#Slave1

#Slave2

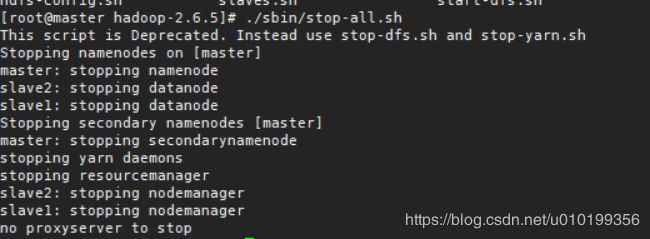

4.8 Check the process status

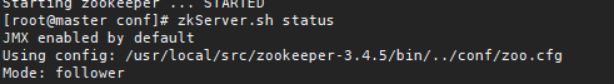

4.9 Stop the cluster

#master/slave1/2

zkServer.sh stop

5. Install HBase 1.3.1

Note: ZooKeeper is not started automatically, so start Hadoop and ZooKeeper first, then HBase.

5.1 cd /usr/local/src/

tar -xzvf hbase-1.3.1-bin.tar.gz

5.2 Edit the HBase configuration files

cd hbase-1.3.1/conf

vim regionservers

master

slave1

slave2

vim hbase-env.sh

export JAVA_HOME=/usr/local/src/jdk1.8.0_172

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib

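Uncomment the following line (remove the leading #) and set it to false:

export HBASE_MANAGES_ZK=false

With HBASE_MANAGES_ZK=false HBase uses the standalone ZooKeeper cluster; with true it uses the ZooKeeper instance bundled with HBase.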

vim hbase-site.xml

hbase.tmp.dir

/var/hbase

hbase.rootdir

hdfs://master:9000/hbase

hbase.cluster.distributed

true

hbase.zookeeper.quorum

master,slave1,slave2

hbase.zookeeper.property.dataDir

/usr/local/src/zookeeper-3.4.5

hbase.master.info.port

60010

5.3 Add environment variables

#Master, Slave1, Slave2

vim /etc/profile

HBASE_HOME=/usr/local/src/hbase-1.3.1

export HBASE_CLASSPATH=$HBASE_HOME/lib

HBASE_LOG_DIR=$HBASE_HOME/logs

export PATH=$PATH:$HBASE_HOME/bin

#Reload the environment variables

source /etc/profile

#Create directories inside the HBase directory (/usr/local/src/hbase-1.3.1)

mkdir logs

mkdir zookeeper

mkdir -p /var/hbase

5.4 Copy the installation

#Master

scp -r /usr/local/src/hbase-1.3.1 root@slave1:/usr/local/src/

scp -r /usr/local/src/hbase-1.3.1 root@slave2:/usr/local/src/

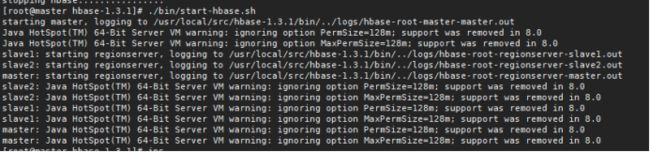

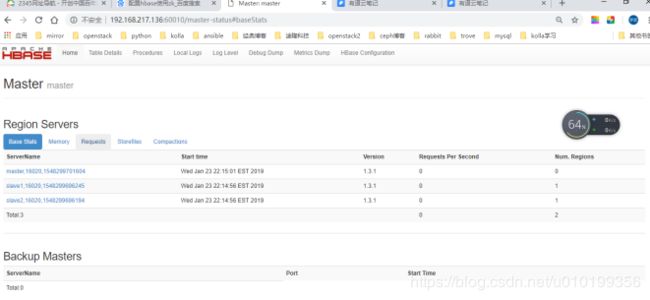

5.5 Start the cluster

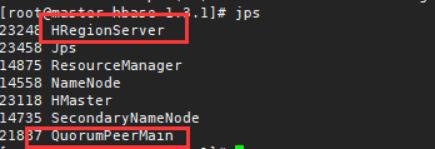

5.6 Process status

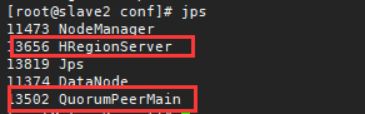

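5.7 View the monitoring UI (http://master:60010, per hbase.master.info.port above)

5.8 Stop the cluster

#master

stop-hbase.sh

6. Install Kafka

6.1 Extract

cd /usr/local/src

tar -xzvf kafka_2.11-0.10.2.1.tgz

6.2 Edit the Kafka configuration file

cd kafka_2.11-0.10.2.1/config

vim server.properties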

log.dirs=/tmp/kafka-logs

zookeeper.connect=master:2181,slave1:2181,slave2:2181

6.3 Add environment variables

#master, slave1/2

vim /etc/profile

KAFKA_HOME=/usr/local/src/kafka_2.11-0.10.2.1

export PATH=$KAFKA_HOME/bin:$PATH

#Reload the environment variables

source /etc/profile

6.4 Copy the package

scp -r /usr/local/src/kafka_2.11-0.10.2.1 slave1:/usr/local/src

scp -r /usr/local/src/kafka_2.11-0.10.2.1 slave2:/usr/local/src

6.5 Set the broker id in the Kafka configuration

#Master

vim config/server.properties

broker.id=0

#Slave1

vim config/server.properties

broker.id=1

#Slave2

vim config/server.properties

broker.id=2
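
Because the package was copied from the master, every node starts out with the master's broker.id, and only that one line has to differ per node. A minimal sketch of changing it non-interactively follows. The helper `set_broker_id` is a hypothetical name, and the demonstration runs on a scratch file standing in for config/server.properties.

```shell
# set_broker_id FILE ID: rewrite the broker.id line in FILE.
# Hypothetical helper; it edits FILE via a temporary copy.
set_broker_id() {
  local file=$1 id=$2 tmp
  tmp=$(mktemp)
  sed "s/^broker\.id=.*/broker.id=$id/" "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Demonstration on a scratch file.
props=$(mktemp)
printf 'broker.id=0\nlog.dirs=/tmp/kafka-logs\n' > "$props"
set_broker_id "$props" 2
cat "$props"
```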

6.6 Start the ZooKeeper bundled with Kafka

#Skip this step if the ZooKeeper cluster is already running

vim /usr/local/src/kafka_2.11-0.10.2.1/bin/start-kafka-zookeeper.sh

/usr/local/src/kafka_2.11-0.10.2.1/bin/zookeeper-server-start.sh /usr/local/src/kafka_2.11-0.10.2.1/config/zookeeper.properties

chmod +x /usr/local/src/kafka_2.11-0.10.2.1/bin/start-kafka-zookeeper.sh

start-kafka-zookeeper.sh

6.7 Start the Kafka cluster

#Master, Slave1, Slave2

vim /usr/local/src/kafka_2.11-0.10.2.1/bin/start-kafka.sh

/usr/local/src/kafka_2.11-0.10.2.1/bin/kafka-server-start.sh /usr/local/src/kafka_2.11-0.10.2.1/config/server.properties

chmod +x /usr/local/src/kafka_2.11-0.10.2.1/bin/start-kafka.sh

start-kafka.sh & #run in the background

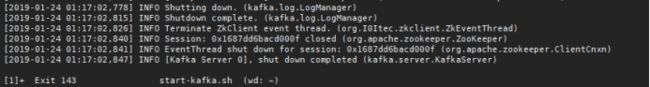

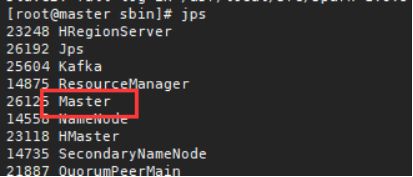

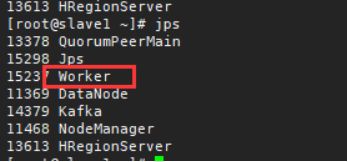

6.8 Check status

6.9 Stop the cluster

7. Install Scala 2.11.4

cd /usr/local/src

tar -xzvf scala-2.11.4.tar.gz

8. Install spark-1.6.3-bin-hadoop2.6

8.1 cd /usr/local/src

tar -xzvf spark-1.6.3-bin-hadoop2.6.tgz

8.2 Edit the configuration files

cd spark-1.6.3-bin-hadoop2.6/conf

cp spark-env.sh.template spark-env.sh

vim spark-env.sh

export SCALA_HOME=/usr/local/src/scala-2.11.4

export JAVA_HOME=/usr/local/src/jdk1.8.0_172

export HADOOP_HOME=/usr/local/src/hadoop-2.6.5

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

SPARK_MASTER_IP=master

SPARK_LOCAL_DIRS=/usr/local/src/spark-1.6.3-bin-hadoop2.6

SPARK_DRIVER_MEMORY=1G

vim slaves

slave1

slave2

8.3 Copy the installations

scp -r /usr/local/src/spark-1.6.3-bin-hadoop2.6 slave1:/usr/local/src

scp -r /usr/local/src/spark-1.6.3-bin-hadoop2.6 slave2:/usr/local/src

scp -r /usr/local/src/scala-2.11.4 slave1:/usr/local/src

scp -r /usr/local/src/scala-2.11.4 slave2:/usr/local/src

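8.4 Start the cluster

cd /usr/local/src/spark-1.6.3-bin-hadoop2.6

./sbin/start-all.sh

The errors printed at this point are harmless and can be ignored. (I do not yet understand the cause, so I cannot explain them.)

8.5 Check status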

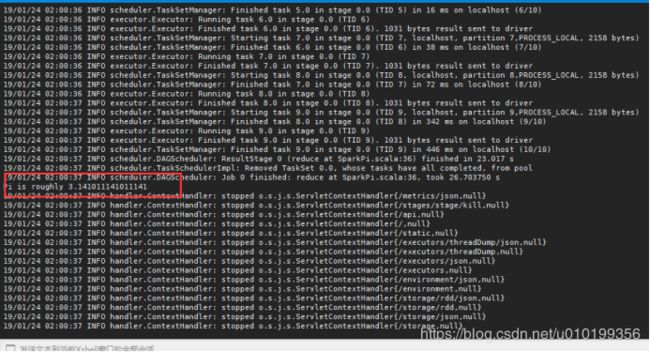

8.6 Verify:

#Local mode

MASTER=local[2] ./bin/run-example SparkPi 10

9. Install apache-hive-1.2.2-bin (server)

9.1 Extract

cd /usr/local/src

tar -xzvf apache-hive-1.2.2-bin.tar.gz

9.2 Edit the Hive configuration

cd /usr/local/src/apache-hive-1.2.2-bin/conf

vim hive-site.xml

Add the following properties:

javax.jdo.option.ConnectionURL

jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true

javax.jdo.option.ConnectionDriverName

com.mysql.jdbc.Driver

javax.jdo.option.ConnectionUserName

yarn

javax.jdo.option.ConnectionPassword

yarn

hive.metastore.warehouse.dir

/usr/hive/warehouse

hive.exec.scratchdir

/usr/hive/tmp

hive.querylog.location

/usr/hive/log

9.3 Create the directories specified in hive-site.xml

[root@master conf]# hadoop fs -mkdir -p /usr/hive/warehouse

[root@master conf]# hadoop fs -mkdir -p /usr/hive/tmp

[root@master conf]# hadoop fs -mkdir -p /usr/hive/log

9.4 Set permissions on the HDFS directories

[root@master conf]# hadoop fs -chmod 777 /usr/hive/warehouse

[root@master conf]# hadoop fs -chmod 777 /usr/hive/tmp

[root@master conf]# hadoop fs -chmod 777 /usr/hive/log

9.5 Install MySQL (MariaDB)

yum install mariadb mariadb-server python2-MySQL -y

systemctl start mariadb.service

Run mysql_secure_installation to set the root password:

[root@master yum.repos.d]# mysql_secure_installation

Set the root password to hadoop.

9.6 Configure the Hive environment variables

#master node

vim /etc/profile

HIVE_HOME=/usr/local/src/apache-hive-1.2.2-bin

export CLASSPATH=$CLASSPATH:${HIVE_HOME}/lib

export PATH=$PATH:$HIVE_HOME/bin

source /etc/profile

Because Hive relies on Hadoop, the Hadoop installation path must be set in hive-env.sh:

cp hive-env.sh.template hive-env.sh

vim hive-env.sh

export JAVA_HOME=/usr/local/src/jdk1.8.0_172

export HADOOP_HOME=/usr/local/src/hadoop-2.6.5

export HIVE_HOME=/usr/local/src/apache-hive-1.2.2-bin

export HIVE_CONF_DIR=/usr/local/src/apache-hive-1.2.2-bin/conf

vim hive-log4j.properties

hive.log.dir=/usr/local/src/apache-hive-1.2.2-bin/logs

mkdir /usr/local/src/apache-hive-1.2.2-bin/logs

9.7 Download the JDBC driver for MySQL:

cd /usr/local/src

wget http://ftp.ntu.edu.tw/MySQL/Downloads/Connector-J/mysql-connector-java-5.1.46.tar.gz

tar zxvf mysql-connector-java-5.1.46.tar.gz

Copy the connector jar into Hive's lib directory:

cp mysql-connector-java-5.1.46/mysql-connector-java-5.1.46-bin.jar /usr/local/src/apache-hive-1.2.2-bin/lib

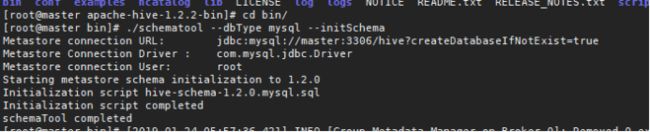

9.8 Initialize the metastore schema

[root@master bin]# ./schematool --dbType mysql --initSchema

Metastore connection URL: jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: root

Starting metastore schema initialization to 1.2.0

Initialization script hive-schema-1.2.0.mysql.sql

[ERROR] Terminal initialization failed; falling back to unsupported

java.lang.IncompatibleClassChangeError: Found class jline.Terminal, but interface was expected

at jline.TerminalFactory.create(TerminalFactory.java:101)

at jline.TerminalFactory.get(TerminalFactory.java:158)

at org.apache.hive.beeline.BeeLineOpts.<init>(BeeLineOpts.java:74)

at org.apache.hive.beeline.BeeLine.<init>(BeeLine.java:117)

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:346)

at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:326)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:266)

at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:243)

at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:473)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Exception in thread "main" java.lang.IncompatibleClassChangeError: Found class jline.Terminal, but interface was expected

This happens with Hive 1.2.2 on Hadoop 2.6.5 because an old jline jar exists under /usr/local/src/hadoop-2.6.5/share/hadoop/yarn/lib/:

[root@master lib]# ls -al /usr/local/src/hadoop-2.6.5/share/hadoop/yarn/lib/jline-0.9.94.jar

-rw-rw-r-- 1 1000 1000 87325 Oct 2 2016 /usr/local/src/hadoop-2.6.5/share/hadoop/yarn/lib/jline-0.9.94.jar

Fix: cp /usr/local/src/apache-hive-1.2.2-bin/lib/jline-2.12.jar /usr/local/src/hadoop-2.6.5/share/hadoop/yarn/lib/

Also move the original jline-0.9.94.jar out of the way as a backup; otherwise the error persists.
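
The two steps of the fix (set the old jar aside, copy the newer one in) can be sketched as a single function. The helper `swap_jline` is a hypothetical name, and renaming the old jar with a `.bak` suffix is one possible way to back it up, chosen here as an assumption. The demonstration uses scratch directories with empty stand-in files rather than the real installation.

```shell
# swap_jline YARN_LIB HIVE_LIB: move the old jline jar aside, then copy
# the newer one from Hive. Hypothetical helper; jar names as in the text.
swap_jline() {
  local yarn_lib=$1 hive_lib=$2
  if [ -f "$yarn_lib/jline-0.9.94.jar" ]; then
    mv "$yarn_lib/jline-0.9.94.jar" "$yarn_lib/jline-0.9.94.jar.bak"
  fi
  cp "$hive_lib/jline-2.12.jar" "$yarn_lib/"
}

# Demonstration in scratch directories with empty stand-in files.
demo=$(mktemp -d)
mkdir -p "$demo/yarn" "$demo/hive"
touch "$demo/yarn/jline-0.9.94.jar" "$demo/hive/jline-2.12.jar"
swap_jline "$demo/yarn" "$demo/hive"
ls "$demo/yarn"

# For the real installation the arguments would be:
#   swap_jline /usr/local/src/hadoop-2.6.5/share/hadoop/yarn/lib \
#              /usr/local/src/apache-hive-1.2.2-bin/lib
```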

[root@master bin]# ./schematool --dbType mysql --initSchema

Metastore connection URL: jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: root

Starting metastore schema initialization to 1.2.0

Initialization script hive-schema-1.2.0.mysql.sql

Initialization script completed

9.9 Start the metastore service

hive --service metastore &

ps aux | grep RunJar

10. Install apache-hive-1.2.2-bin (client), using slave1 as the client

10.1 Extract

cd /usr/local/src/

tar -xzvf apache-hive-1.2.2-bin.tar.gz

10.2 Edit the configuration files

cd /usr/local/src/apache-hive-1.2.2-bin/conf

vim hive-site.xml

hive.metastore.uris

thrift://master:9083

IP address (or fully-qualified domain name) and port of the metastore host

hive.metastore.warehouse.dir

/usr/hive/warehouse

hive.exec.scratchdir

/usr/hive/tmp

hive.querylog.location

/usr/hive/log

vim hive-env.sh

export JAVA_HOME=/usr/local/src/jdk1.8.0_172

export HADOOP_HOME=/usr/local/src/hadoop-2.6.5

export HIVE_HOME=/usr/local/src/apache-hive-1.2.2-bin

export HIVE_CONF_DIR=/usr/local/src/apache-hive-1.2.2-bin/conf

vim hive-log4j.properties

hive.log.dir=/usr/local/src/apache-hive-1.2.2-bin/logs

mkdir /usr/local/src/apache-hive-1.2.2-bin/logs

10.3 Set the environment variables

vim /etc/profile

HIVE_HOME=/usr/local/src/apache-hive-1.2.2-bin

export CLASSPATH=$CLASSPATH:${HIVE_HOME}/lib

export PATH=$PATH:$HIVE_HOME/bin

source /etc/profile

10.4 Verify from the client:

Running hive at first fails with an error.

The fix is the same as the one described for the server.

Run hive again:

hive> create database test;

OK

Time taken: 1.824 seconds

hive> create database CAU_DATA;

OK

Time taken: 0.173 seconds

hive> use CAU_DATA;

OK

hive> CREATE EXTERNAL TABLE SURF_CLI_CHN_MUL_DAY_EVP

> (id string comment 'station ID',

> col1 string comment 'temporary field 1',

> col2 string comment 'temporary field 2',

> col3 string comment 'temporary field 3',

> year_id string comment 'year',

> month_id string comment 'month',

> day_id string comment 'day',

> col4 string comment 'temporary field 4',

> col5 string comment 'temporary field 5',

> col6 string comment 'temporary field 6',

> col7 string comment 'temporary field 7')

> COMMENT 'table description'

> PARTITIONED BY (year int,month int)

> LOCATION '/CAU/SURF_CLI_CHN_MUL_DAY-EVP';

OK

Time taken: 0.886 seconds

hive> alter table SURF_CLI_CHN_MUL_DAY_EVP add partition(year='2017',month='01');

OK

Time taken: 0.335 seconds

hive> show tables;

OK

surf_cli_chn_mul_day_evp

Time taken: 0.064 seconds, Fetched: 1 row(s)

hive> show partitions SURF_CLI_CHN_MUL_DAY_EVP;

OK

year=2017/month=1

Time taken: 0.239 seconds, Fetched: 1 row(s)

Client verification is complete.

11. Install Storm 1.2.2

Prerequisite: ZooKeeper is installed

11.1 Download and extract

cd /usr/local/src

wget https://mirrors.aliyun.com/apache/storm/apache-storm-1.2.2/apache-storm-1.2.2.tar.gz

tar -xzvf apache-storm-1.2.2.tar.gz

11.2 Create the log directory:

cd /usr/local/src/apache-storm-1.2.2/

mkdir logs

11.3 Edit the configuration file

cd /usr/local/src/apache-storm-1.2.2/conf

vim storm.yaml

storm.zookeeper.servers:

- "master"

- "slave1"

- "slave2"

nimbus.seeds: ["master", "slave1", "slave2"]

storm.local.dir: /tmp/storm

supervisor.slots.ports:

- 6700

- 6701

- 6702

- 6703

11.4 Configure environment variables

vim /etc/profile

STORM_HOME=/usr/local/src/apache-storm-1.2.2

export PATH=$PATH:$STORM_HOME/bin

source /etc/profile

11.5 Copy the files to slave1 and slave2

#master

scp -r /usr/local/src/apache-storm-1.2.2 slave1:/usr/local/src/

scp -r /usr/local/src/apache-storm-1.2.2 slave2:/usr/local/src/

11.6 Start the cluster

Start ZooKeeper first

#master

vim /usr/local/src/apache-storm-1.2.2/bin/start-storm-master.sh

/usr/local/src/apache-storm-1.2.2/bin/storm nimbus &

/usr/local/src/apache-storm-1.2.2/bin/storm ui &

/usr/local/src/apache-storm-1.2.2/bin/storm logviewer &

chmod +x /usr/local/src/apache-storm-1.2.2/bin/start-storm-master.sh

start-storm-master.sh

#slave1/2

vim /usr/local/src/apache-storm-1.2.2/bin/start-storm-slave.sh

/usr/local/src/apache-storm-1.2.2/bin/storm supervisor &

/usr/local/src/apache-storm-1.2.2/bin/storm logviewer &

chmod +x /usr/local/src/apache-storm-1.2.2/bin/start-storm-slave.sh

start-storm-slave.sh

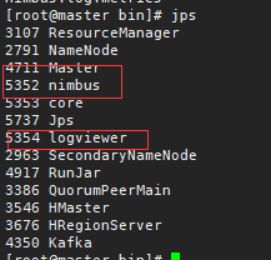

11.7 查看状态

jps

#master节点

#salve1/2

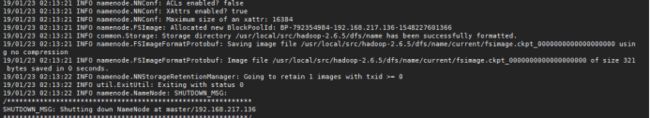

![]()

![]()

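View the UI

http://master:8080 (the Spark master web UI also defaults to port 8080, so stop Spark before opening this page).

(Alternatively, change the web UI port of either Spark or Storm.)

The Spark web UI port is set in ./sbin/start-master.sh, on line 70:

SPARK_MASTER_WEBUI_PORT=8080

For Storm, the only mention of port 8080 I have found so far is on line 31 of SECURITY.md; the UI port itself is controlled by the ui.port setting in storm.yaml.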

11.8 Stop the cluster

#master, Slave1, Slave2

vim /usr/local/src/apache-storm-1.2.2/bin/stop-storm.sh

kill $(ps aux | grep storm | grep -v 'grep' | awk '{print $2}')

chmod +x /usr/local/src/apache-storm-1.2.2/bin/stop-storm.sh

stop-storm.sh

12. Install Flume 1.6

12.1 Extract

cd /usr/local/src

tar -xzvf apache-flume-1.6.0-bin.tar.gz

12.2 Edit the Flume configuration

cd apache-flume-1.6.0-bin

#NetCat

vim conf/flume-netcat.conf

#Name the components on this agent

agent.sources = r1

agent.sinks = k1

agent.channels = c1

#Describe/configure the source

agent.sources.r1.type = netcat

agent.sources.r1.bind = 127.0.0.1

agent.sources.r1.port = 44444

#Describe the sink

agent.sinks.k1.type = logger

#Use a channel which buffers events in memory

agent.channels.c1.type = memory

agent.channels.c1.capacity = 1000

agent.channels.c1.transactionCapacity = 100

#Bind the source and sink to the channel

agent.sources.r1.channels = c1

agent.sinks.k1.channel = c1

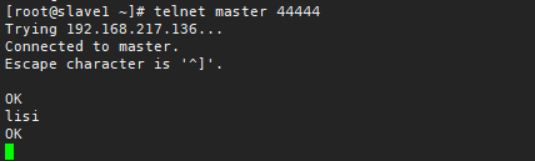

#Verify

#Server

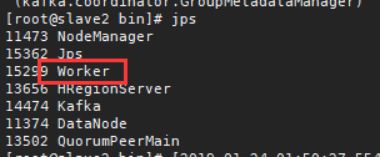

bin/flume-ng agent --conf conf --conf-file conf/flume-netcat.conf --name=agent -Dflume.root.logger=INFO,console

#Client

telnet master 44444

#Exec

vim conf/flume-exec.conf

#Name the components on this agent

agent.sources = r1

agent.sinks = k1

agent.channels = c1

#Describe/configure the source

agent.sources.r1.type = exec

agent.sources.r1.command = tail -f /data/hadoop/flume/test.txt

#Describe the sink

agent.sinks.k1.type = logger

#Use a channel which buffers events in memory

agent.channels.c1.type = memory

agent.channels.c1.capacity = 1000

agent.channels.c1.transactionCapacity = 100

#Bind the source and sink to the channel

agent.sources.r1.channels = c1

agent.sinks.k1.channel = c1

#Server

bin/flume-ng agent --conf conf --conf-file conf/flume-exec.conf --name=agent -Dflume.root.logger=INFO,console

#Client

while true;do echo `date` >> /data/hadoop/flume/test.txt ; sleep 1; done

Note: in this test the server and the client are the same machine.

#Avro

vim conf/flume-avro.conf

#Define a memory channel called c1 on agent

agent.channels.c1.type = memory

#Define an avro source called r1 on agent and connect it to channel c1

agent.sources.r1.channels = c1

agent.sources.r1.type = avro

agent.sources.r1.bind = 127.0.0.1

agent.sources.r1.port = 44444

#Describe/configure the sink

agent.sinks.k1.type = hdfs

agent.sinks.k1.channel = c1

agent.sinks.k1.hdfs.path = hdfs://master:9000/flume_data_pool

agent.sinks.k1.hdfs.filePrefix = events-

agent.sinks.k1.hdfs.fileType = DataStream

agent.sinks.k1.hdfs.writeFormat = Text

agent.sinks.k1.hdfs.rollSize = 0

agent.sinks.k1.hdfs.rollCount= 600000

agent.sinks.k1.hdfs.rollInterval = 600

agent.channels = c1

agent.sources = r1

agent.sinks = k1

#Verify

#Server

bin/flume-ng agent --conf conf --conf-file conf/flume-avro.conf --name=agent -Dflume.root.logger=DEBUG,console

#Client

telnet master 44444

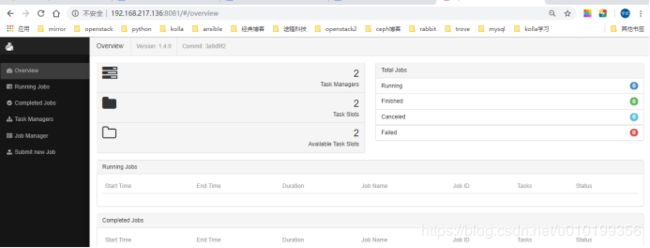

13. Install flink-1.4.0-bin-hadoop26-scala_2.11

13.1 Extract

cd /usr/local/src

tar -xzvf flink-1.4.0-bin-hadoop26-scala_2.11.tgz

13.2 Edit the configuration files

vim flink-1.4.0/conf/flink-conf.yaml

jobmanager.rpc.address: master

vim masters

master:8081

vim slaves

slave1

slave2

13.3 Copy the installation to the two slave nodes

scp -r /usr/local/src/flink-1.4.0 slave1:/usr/local/src

scp -r /usr/local/src/flink-1.4.0 slave2:/usr/local/src

13.4 Configure environment variables:

vim /etc/profile

FLINK_HOME=/usr/local/src/flink-1.4.0

export PATH=$PATH:$FLINK_HOME/bin

source /etc/profile

13.5 Start the cluster

cd /usr/local/src/flink-1.4.0/bin

./start-cluster.sh

13.6 Web UI

http://master:8081

jps

master:

#slave1/2

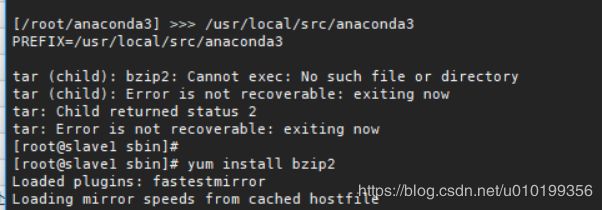

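14. Install Anaconda3 to handle Python 3 / Python 2 compatibility

14.1 Copy the installer script:

cd /usr/local/sbin/

Anaconda3-4.4.0-Linux-x86_64.sh

sh Anaconda3-4.4.0-Linux-x86_64.sh

The installer fails with an error; install bzip2 to fix it:

yum install -y bzip2

Remove the partially installed directory: rm -rf /usr/local/src/anaconda3

Run the installer again: sh Anaconda3-4.4.0-Linux-x86_64.sh

Wait until the installation completes.

14.2 Configure environment variables: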

vim /etc/profile

ANACONDA3_HOME=/usr/local/src/anaconda3

export PATH=$PATH:$ANACONDA3_HOME/bin

source /etc/profile

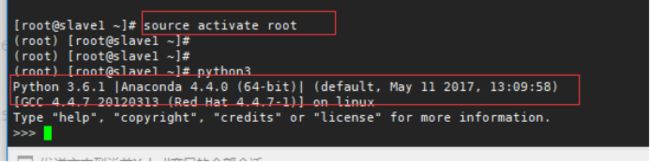

14.3 Create a Python 2.7 environment

conda create -n py27 python=2.7 -y -c https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/

source activate py27 (enter the Python 2.7 environment)

source deactivate py27 (leave the Python 2.7 environment)

Enter the Python 3.6 environment:

conda info -e

source activate root

python (starts Python 3.6)

source deactivate root (leave the Python 3.6 environment)