OpenStack Load Balancer LBaaS

Load-Balancer-as-a-Service (LBaaS)

The LBaaS extension enables OpenStack tenants to load-balance their VM traffic.

The extension enables you to:

• Load-balance client traffic from one network to application services, such as VMs, on the same or a different network.

• Load-balance several protocols, such as TCP and HTTP.

• Monitor the health of application services.

• Support session persistence.

1. Concepts

To use the OpenStack LBaaS APIs effectively, you should understand several key concepts:

| Concept | Description |
|---|---|
| Load Balancer | The primary load-balancing configuration object. It specifies the virtual IP address on which client traffic is received. A load balancer is a logical device that distributes workloads among multiple back-end systems or services, called nodes, based on the criteria defined in its configuration. |
| VIP | A VIP is the primary load-balancing configuration object that specifies the virtual IP address and port on which client traffic is received, as well as other details such as the load-balancing method to be used and the protocol. In some load-balancer products this entity is known as a "virtual server", a "vserver", or a "listener". A virtual IP is an IP address configured on the load balancer for use by clients connecting to a load-balanced service. Incoming connections and requests are distributed to back-end nodes based on the configuration of the load balancer. |
| Listener | Represents a single listening port. It defines the protocol and can optionally provide TLS termination. |
| Pool | A load-balancing pool is a logical set of devices, such as web servers, that you group together to receive and process traffic. The load-balancing function chooses a pool member, according to the configured load-balancing method, to handle new requests or connections received on the VIP address. Each VIP has exactly one pool. |
| Pool Member | The application running on a back-end server. |
| Health Monitor | A health monitor determines whether the back-end members of the VIP's pool are usable for processing requests. A pool can have several health monitors associated with it. The OpenStack LBaaS service supports four types of health monitor: PING, TCP, HTTP, and HTTPS, each of which probes members using the corresponding protocol. When a pool has several monitors, each member of the pool is checked by all of them. If any monitor declares a member unhealthy, the member's status changes to INACTIVE and it no longer participates in the pool's load balancing. In other words, ALL monitors must declare a member healthy for it to stay ACTIVE. |
| Session Persistence | Session persistence is a feature of the load-balancing service. It attempts to force connections or requests in the same session to be processed by the same member for as long as that member is active, so that incoming requests are routed to the same instance within a pool of multiple instances. The OpenStack LBaaS service supports three types of persistence: SOURCE_IP, HTTP_COOKIE, and APP_COOKIE. |
| Connection Limits | To control incoming traffic on the VIP address, as well as traffic for each pool member, you can set a connection limit on the VIP or on the pool. Beyond that limit, the load-balancing function refuses client requests or connections. This shapes ingress traffic, lets each pool member keep working within its limits, and helps mitigate denial-of-service (DoS) attacks. For HTTP and HTTPS, because several HTTP requests can be multiplexed on the same TCP connection, the connection limit is interpreted as the maximum number of requests allowed. |
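The health-monitor rule in the table, where a member stays ACTIVE only while every associated monitor reports it healthy, can be sketched in a few lines of Python together with member selection. This is a toy model, not LBaaS code. The monitors are hypothetical callables standing in for real PING/TCP/HTTP/HTTPS probes, and ROUND_ROBIN is assumed as the pool's load-balancing method.

```python
from dataclasses import dataclass, field
from itertools import cycle
from typing import Callable, List


@dataclass
class Member:
    address: str
    protocol_port: int
    status: str = "ACTIVE"


# A monitor is modeled as a callable returning True if the member looks
# healthy; real PING/TCP/HTTP/HTTPS monitors would probe over the network.
Monitor = Callable[[Member], bool]


@dataclass
class Pool:
    members: List[Member]
    monitors: List[Monitor] = field(default_factory=list)

    def refresh_status(self) -> None:
        # ALL monitors must declare a member healthy for it to stay ACTIVE;
        # a single failing monitor makes it INACTIVE.
        for m in self.members:
            healthy = all(check(m) for check in self.monitors)
            m.status = "ACTIVE" if healthy else "INACTIVE"

    def active_members(self) -> List[Member]:
        return [m for m in self.members if m.status == "ACTIVE"]


def round_robin(pool: Pool, n: int) -> List[str]:
    # Assign the next n requests to ACTIVE members in turn;
    # INACTIVE members take no part in load balancing.
    active = pool.active_members()
    if not active:
        raise RuntimeError("pool has no ACTIVE members")
    targets = cycle(active)
    return [next(targets).address for _ in range(n)]


pool = Pool(
    members=[Member("10.0.0.3", 80), Member("10.0.0.4", 80), Member("10.0.0.5", 80)],
    monitors=[
        lambda m: True,                     # e.g. a PING monitor: every member answers
        lambda m: m.address != "10.0.0.4",  # e.g. an HTTP monitor that fails on .4
    ],
)
pool.refresh_status()
print(round_robin(pool, 4))  # ['10.0.0.3', '10.0.0.5', '10.0.0.3', '10.0.0.5']
```

Because one monitor fails on 10.0.0.4, that member becomes INACTIVE even though the other monitor passes. Traffic then alternates between the two remaining members.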

2. High-Level Task Flow

The high-level task flow for using the LBaaS v1 (VIP-based) API to configure load balancing is as follows:

• The tenant creates a pool, which is initially empty.

• The tenant creates one or more members in the pool.

• The tenant creates one or more health monitors.

• The tenant associates the health monitors with the pool.

• Finally, the tenant creates a VIP with the pool.
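As a sketch, the five v1 steps above map onto a sequence of REST calls. The paths and field names used here (`lb_method`, `protocol_port`, `session_persistence`, `connection_limit`, and so on) reflect my understanding of the LBaaS v1 API and should be checked against the Networking API reference. `<pool_id>`, `<monitor_id>` and `<subnet_id>` are placeholders for IDs the server returns, and the names `web-pool` and `web-vip` are hypothetical. The function only builds a request plan and sends nothing.

```python
import json
from typing import Any, Dict, List, Tuple

Request = Tuple[str, str, Dict[str, Any]]  # (HTTP method, path, JSON body)


def v1_task_flow(subnet_id: str, addresses: List[str], port: int = 80) -> List[Request]:
    """Build LBaaS v1 calls in task-flow order (IDs are placeholders)."""
    plan: List[Request] = [
        # 1. Create an (initially empty) pool.
        ("POST", "/v2.0/lb/pools", {"pool": {
            "name": "web-pool", "subnet_id": subnet_id,
            "protocol": "HTTP", "lb_method": "ROUND_ROBIN"}}),
    ]
    # 2. Add one member per back-end address.
    for addr in addresses:
        plan.append(("POST", "/v2.0/lb/members", {"member": {
            "pool_id": "<pool_id>", "address": addr, "protocol_port": port}}))
    # 3. Create a health monitor.
    plan.append(("POST", "/v2.0/lb/health_monitors", {"health_monitor": {
        "type": "HTTP", "delay": 5, "timeout": 3, "max_retries": 3}}))
    # 4. Associate the health monitor with the pool.
    plan.append(("POST", "/v2.0/lb/pools/<pool_id>/health_monitors",
                 {"health_monitor": {"id": "<monitor_id>"}}))
    # 5. Finally, create the VIP in front of the pool.
    plan.append(("POST", "/v2.0/lb/vips", {"vip": {
        "name": "web-vip", "subnet_id": subnet_id, "protocol": "HTTP",
        "protocol_port": port, "pool_id": "<pool_id>",
        "session_persistence": {"type": "HTTP_COOKIE"},
        "connection_limit": 1000}}))
    return plan


for method, path, body in v1_task_flow("<subnet_id>", ["10.0.0.3", "10.0.0.4"]):
    print(method, path, json.dumps(body))
```

Keeping the plan as data makes the ordering constraint explicit: the VIP comes last because it refers to a pool that must already exist.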

To use the LBaaS v2 extension (load balancers and listeners) to configure load balancing:

1. Create a pool, which is initially empty.

2. Create one or more members in the pool.

3. Create a health monitor.

4. Associate the health monitor with the pool.

5. Create a load balancer object.

6. Create a listener.

7. Associate the listener with the load balancer.

8. Associate the pool with the listener.

9. Optional. If you use HTTPS termination, complete these tasks:

a. Add the TLS certificate, key, and optional chain to Barbican.

b. Associate the Barbican container with the listener.

10. Optional. If you use layer-7 HTTP switching, complete these tasks:

a. Create any additional pools, members, and health monitors that are used as non-default pools.

b. Create a layer-7 policy that associates the listener with the non-default pool.

c. Create rules for the layer-7 policy that describe the logic that selects the non-default pool for servicing some requests.
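The layer-7 step above can be illustrated with a small simulation of how a listener might choose a pool. It assumes the LBaaS v2/Octavia semantics as I understand them: policies are evaluated in `position` order, all rules inside a policy must match (logical AND), the first matching policy wins, and unmatched requests go to the listener's default pool. Only the REDIRECT_TO_POOL action and a few rule and compare types are modeled. The class and pool names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class L7Rule:
    type: str          # "PATH", "HOST_NAME" or "HEADER" in this sketch
    compare_type: str  # "STARTS_WITH", "ENDS_WITH", "CONTAINS" or "EQUAL_TO"
    value: str
    key: Optional[str] = None  # header name, used only by HEADER rules

    def matches(self, path: str, headers: Dict[str, str]) -> bool:
        if self.type == "PATH":
            subject = path
        elif self.type == "HOST_NAME":
            subject = headers.get("Host", "")
        else:  # HEADER
            subject = headers.get(self.key or "", "")
        if self.compare_type == "STARTS_WITH":
            return subject.startswith(self.value)
        if self.compare_type == "ENDS_WITH":
            return subject.endswith(self.value)
        if self.compare_type == "CONTAINS":
            return self.value in subject
        return subject == self.value  # EQUAL_TO


@dataclass
class L7Policy:
    position: int
    redirect_pool: str  # the non-default pool selected on a match
    rules: List[L7Rule]


def select_pool(policies: List[L7Policy], default_pool: str,
                path: str, headers: Dict[str, str]) -> str:
    # Check policies in position order; every rule in a policy must match.
    # A policy without rules is treated as non-matching in this sketch.
    for policy in sorted(policies, key=lambda p: p.position):
        if policy.rules and all(r.matches(path, headers) for r in policy.rules):
            return policy.redirect_pool
    return default_pool  # nothing matched: use the listener's default pool


policies = [
    L7Policy(1, "api-pool", [L7Rule("PATH", "STARTS_WITH", "/api")]),
    L7Policy(2, "static-pool", [L7Rule("PATH", "ENDS_WITH", ".png")]),
]
print(select_pool(policies, "web-pool", "/api/v1/users", {}))  # api-pool
print(select_pool(policies, "web-pool", "/img/logo.png", {}))  # static-pool
print(select_pool(policies, "web-pool", "/index.html", {}))    # web-pool
```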


http://blog.csdn.net/lynn_kong/article/details/8528512

https://www.ustack.com/blog/neutron_loadbalance/

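A Preliminary Analysis of LoadBalancer in Grizzly

Reposting of this blog is welcome, but please keep the original author's information:

Sina Weibo: @孔令贤HW

Blog: http://blog.csdn.net/lynn_kong

This content is the result of my own study, research, and summarization; any resemblance to other work is purely my honor!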

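In the Grizzly release, the Quantum component introduced a new network service, LoadBalancer (LBaaS), whose architecture follows the Service Insertion framework (also introduced in Grizzly). LoadBalancer provides tenants with load balancing of traffic to a group of virtual machines, similar to Amazon's ELB. Yesterday (2013-01-20), Grizzly_2 was released, implementing 10 blueprints (BPs) and fixing 82 bugs. After a quick pass through the code, these are the updates I can identify so far:

1. Added the servicetype extension (a prerequisite for service insertion), which represents a type of network service (LB, FW, VPN, etc.). For backward compatibility, a default servicetype is created at load time.

2. The security group feature was ported from Nova to Quantum.

3. Added the portbinding extension, which adds three attributes to a port: binding:vif_type, binding:host_id, and binding:profile (this last attribute is Cisco-specific).

4. The Ryu plugin supports the provider extension.

5. Added the loadbalancer extension to implement load balancing.

6. Added a new Quantum plugin (BigSwitch).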

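1. Basic Flow

The tenant creates a pool, which initially has 0 members;

The tenant creates one or more members in the pool;

The tenant creates one or more health monitors;

The tenant associates the health monitors with the pool;

The tenant creates a VIP using the pool.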

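2. Concepts

• VIP

A VIP can be thought of as a load balancer with a virtual IP address and a specified port, plus other attributes such as the balancing algorithm and the protocol.

• Pool

A pool represents a group of logical devices (usually homogeneous), such as web servers. The load-balancing algorithm selects one member of the pool to receive incoming traffic or connections. Currently, one VIP corresponds to one pool.

• Pool member

Represents one back-end application server.

• Health monitor

A health monitor checks the status of the members in a pool. A pool can be associated with multiple health monitors. There are four types:

PING, TCP, HTTP, and HTTPS. Each type probes the members using the corresponding protocol.

• Session persistence

This is what is commonly called "sticky sessions": it governs how connections or requests belonging to the same session are forwarded. Three types are currently supported:

SOURCE_IP: connections from the same IP address are received and handled by the same member;

HTTP_COOKIE: the load balancer generates a cookie on the client's first connection, and subsequent requests carrying that cookie are handled by the same member;

APP_COOKIE: the cookie generated by the back-end application server determines which member handles the request.

• Connection limits

This feature is mainly used to defend against DoS attacks.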
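The three session-persistence types can be contrasted with a toy balancer. This is an illustrative sketch, not the LBaaS implementation. SOURCE_IP is modeled as a stable hash of the client address, and HTTP_COOKIE as a cookie issued by the load balancer (the name `LB_SESSION` is hypothetical). APP_COOKIE is modeled as a table the balancer fills in when a member's response sets the application's own cookie (`JSESSIONID` is only an example name).

```python
import hashlib
import uuid
from typing import Dict, List, Tuple


def pick_by_hash(key: str, members: List[str]) -> str:
    # Stable hash: the same key always maps to the same member
    # (as long as the member list does not change).
    digest = hashlib.sha256(key.encode()).digest()
    return members[int.from_bytes(digest[:8], "big") % len(members)]


class StickyBalancer:
    """Toy model of SOURCE_IP, HTTP_COOKIE and APP_COOKIE persistence."""

    LB_COOKIE = "LB_SESSION"  # hypothetical name of the LB-issued cookie

    def __init__(self, members: List[str], mode: str, app_cookie: str = "JSESSIONID") -> None:
        self.members = members
        self.mode = mode                 # "SOURCE_IP", "HTTP_COOKIE" or "APP_COOKIE"
        self.app_cookie = app_cookie     # cookie name set by the back-end application
        self.table: Dict[str, str] = {}  # persistence key -> member
        self._rr = 0

    def _next(self) -> str:
        # Round-robin fallback for requests without a known persistence key.
        member = self.members[self._rr % len(self.members)]
        self._rr += 1
        return member

    def route(self, client_ip: str, cookies: Dict[str, str]) -> Tuple[str, Dict[str, str]]:
        """Return (chosen member, cookies the LB adds to the response)."""
        if self.mode == "SOURCE_IP":
            return pick_by_hash(client_ip, self.members), {}
        if self.mode == "HTTP_COOKIE":
            token = cookies.get(self.LB_COOKIE)
            if token in self.table:
                return self.table[token], {}
            token = uuid.uuid4().hex  # first request: the LB issues a cookie
            self.table[token] = self._next()
            return self.table[token], {self.LB_COOKIE: token}
        # APP_COOKIE: follow the cookie the application itself issued.
        session = cookies.get(self.app_cookie)
        if session in self.table:
            return self.table[session], {}
        return self._next(), {}

    def learn_app_cookie(self, session: str, member: str) -> None:
        # APP_COOKIE only: call when a member's response sets the app cookie.
        self.table[session] = member


lb = StickyBalancer(["10.0.0.3", "10.0.0.4"], "HTTP_COOKIE")
first, set_cookies = lb.route("192.0.2.10", {})
again, _ = lb.route("192.0.2.10", set_cookies)
print(first, again)  # 10.0.0.3 10.0.0.3
```

The difference between HTTP_COOKIE and APP_COOKIE lies in who creates the key. In the first case the balancer mints it; in the second the balancer only records a value the application chose.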

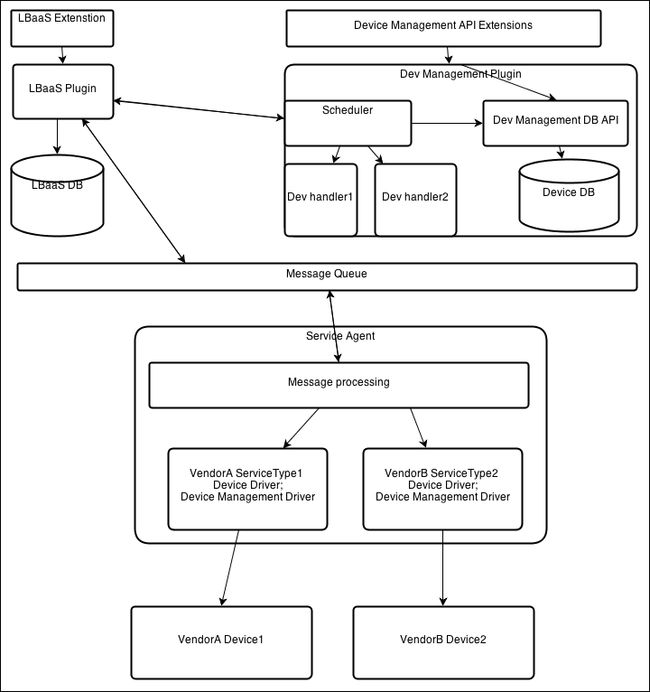

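3. Architecture Diagram

As of 2013-01-22, Grizzly_2 implements only the LBaaS Plugin part; the LBaaS Agent and Scheduler/DeviceManagement are still under development.

As the figure above shows, the LBaaS architecture is essentially the same as the Quantum plugin architecture: it separates the upper-layer logical concepts from the underlying implementation. The main modules are:

1. LBaaS Quantum Extension: handles REST API calls.

2. LBaaS Quantum AdvSvcPlugin: one of the core plugins. In Folsom, Quantum supported only a single plugin, but with Service Insertion implemented, plugins for different services can coexist.

3. LBaaS Agent: like the Quantum agent, a standalone process deployed on each compute node.

4. Driver: interacts with the actual device and implements the logical model.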

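4. Scheduler/DeviceManagement

Scheduler/DeviceManagement is a separate Quantum component with two main functions:

1. Implementing the logical model of the service.

2. Providing tenant-facing extension APIs for advanced services such as load balancers, firewalls, and gateways.

Taking pool creation as an example, the flow is as follows:

The LBaaS Plugin receives the request to create a pool;

The LBaaS Plugin adds a record to the DB and returns the pool_id;

The LBaaS Plugin calls the Scheduler, passing service_type, pool_id, pool, and other parameters;

The Scheduler selects a device that meets the requirements, saves the device-to-pool mapping, and returns device_info;

The Agent calls the Driver, which connects the device to the tenant network and implements the logical model;

The Agent notifies LBaaS, which updates the pool's status in the DB.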
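The six pool-creation steps above can be traced with a minimal in-process simulation. All class names (`LBaaSPlugin`, `Scheduler`, `Agent`, `Driver`, `Device`), the `LOADBALANCER` service-type string, and the least-loaded scheduling policy are assumptions made for illustration. In Grizzly the agent would talk to the plugin over RPC and the driver would configure a real device. Here both are direct calls, and the driver only prints a log line.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Device:
    name: str
    service_type: str
    capacity: int
    pools: List[str] = field(default_factory=list)


class Scheduler:
    """Picks a device for a new pool and remembers the pool -> device mapping."""

    def __init__(self, devices: List[Device]) -> None:
        self.devices = devices
        self.mapping: Dict[str, str] = {}

    def schedule(self, service_type: str, pool_id: str) -> Device:
        # Step 4: choose a matching device with spare capacity (least loaded).
        candidates = [d for d in self.devices
                      if d.service_type == service_type and len(d.pools) < d.capacity]
        if not candidates:
            raise RuntimeError(f"no device available for {service_type}")
        device = min(candidates, key=lambda d: len(d.pools))
        device.pools.append(pool_id)
        self.mapping[pool_id] = device.name
        return device


class Driver:
    def deploy(self, device: Device, pool: dict) -> None:
        # Step 5: a real driver would plug the device into the tenant
        # network and configure it; this one only logs the action.
        print(f"driver: configure pool {pool['name']} on {device.name}")


class Agent:
    def __init__(self, driver: Driver, plugin: "LBaaSPlugin") -> None:
        self.driver = driver
        self.plugin = plugin

    def deploy(self, device: Device, pool_id: str) -> None:
        self.driver.deploy(device, self.plugin.db[pool_id])
        # Step 6: report back so the plugin can update the pool status.
        self.plugin.update_status(pool_id, "ACTIVE")


class LBaaSPlugin:
    def __init__(self, scheduler: Scheduler, driver: Driver) -> None:
        self.db: Dict[str, dict] = {}
        self.scheduler = scheduler
        self.agent = Agent(driver, self)
        self._ids = itertools.count(1)

    def create_pool(self, pool: dict) -> str:
        # Steps 1-2: accept the request, store a record, allocate a pool_id.
        pool_id = f"pool-{next(self._ids)}"
        self.db[pool_id] = dict(pool, status="PENDING_CREATE")
        # Step 3: hand the service type and pool_id to the scheduler.
        device = self.scheduler.schedule("LOADBALANCER", pool_id)
        self.agent.deploy(device, pool_id)
        return pool_id

    def update_status(self, pool_id: str, status: str) -> None:
        self.db[pool_id]["status"] = status


scheduler = Scheduler([Device("haproxy-1", "LOADBALANCER", capacity=2)])
plugin = LBaaSPlugin(scheduler, Driver())
pid = plugin.create_pool({"name": "web-pool", "lb_method": "ROUND_ROBIN"})
print(pid, plugin.db[pid]["status"], scheduler.mapping[pid])
```

Running it prints the driver's log line followed by `pool-1 ACTIVE haproxy-1`. Storing the record as PENDING_CREATE before scheduling mirrors the order in the steps above: the pool_id exists before any device is chosen.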


Example reference: http://blog.csdn.net/zhaihaifei/article/details/74732547