First day of March

Today I'm writing up my reading notes on the ESRGAN paper~

ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks is a 2018 paper from CUHK (the Chinese University of Hong Kong).

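The abstract summarizes the main contributions: the paper improves SRGAN [1] in three respects, namely the network architecture, the adversarial loss, and the perceptual loss. (Changing the network architecture and the loss function are the two main directions for improvement in deep learning work at the moment.)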

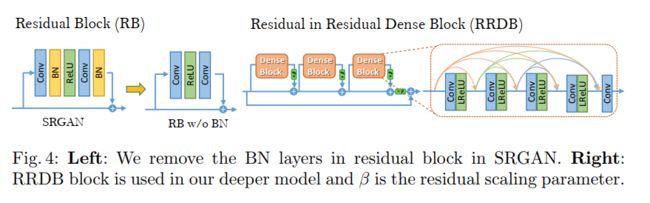

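On the architecture side, the authors introduce the RRDB (Residual-in-Residual Dense Block); the main changes are removing batch normalization and adding dense connections. On the adversarial loss side, the improvement is to use a relativistic GAN [2], so that the discriminator judges relative realness instead of the absolute value. On the perceptual loss side, the loss is computed on features before activation (previously, features after activation were used).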

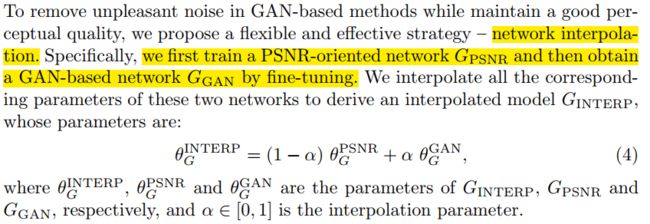

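The paper also describes network interpolation, i.e. combining the parameters of two networks to obtain a new network. This part is not mentioned in the abstract.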

1. Introduction

This section mainly points out that the PSNR metric conflicts with the subjective evaluation of human observers (which is what motivates the perceptual loss), among other things.

2. Related Work

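Network architecture improvements:

residual learning, Laplacian pyramid structure, residual blocks, recursive learning, densely connected network, deep back projection, and residual dense network.

There are also EDSR [3], which removes the BN layers, channel attention [4], reinforcement learning [5], and unsupervised learning [6].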

stabilizing the training of a very deep model:

residual path / residual scaling / robust initialization

perceptual-driven approaches:

perceptual loss / contextual loss

incorporating semantic priors [7]

improved regularization for discriminator:

gradient clipping / spectral normalization [8]

3. Proposed Methods

3.1 Network Architecture

The generator is based on the SRResNet architecture:

its basic block is improved:

in the RRDB, the authors use a dense block in the main path, as in [9].

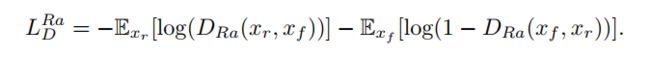

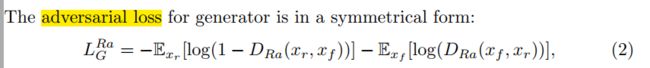
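To make the block structure concrete, here is a minimal pure-Python sketch of the residual-in-residual pattern. It is only an illustration: the residual scaling factor `beta = 0.2`, three dense blocks per RRDB, and five layers per dense block are assumptions on my part, and `toy_layer` is a hypothetical stand-in for a convolution followed by LeakyReLU. A real implementation would operate on feature maps with actual convolutions.

```python
from typing import Callable, List, Sequence

Vector = List[float]
# Hypothetical stand-in for "conv + LeakyReLU": maps the concatenation of
# all earlier features to a new feature vector.
Layer = Callable[[Vector], Vector]


def dense_block(x: Vector, layers: Sequence[Layer], beta: float = 0.2) -> Vector:
    """Dense block without BN: every layer sees all earlier features."""
    feats = [x]
    out = x
    for layer in layers:
        concat = [v for f in feats for v in f]  # dense connections
        out = layer(concat)
        feats.append(out)
    # Residual scaling: shrink the branch output before adding it back.
    # The last layer must return a vector with the same length as x.
    return [xi + beta * oi for xi, oi in zip(x, out)]


def rrdb(x: Vector, blocks: Sequence[Sequence[Layer]], beta: float = 0.2) -> Vector:
    """Residual-in-residual: dense blocks in the main path, plus an outer skip."""
    out = x
    for layers in blocks:
        out = dense_block(out, layers, beta)
    return [xi + beta * oi for xi, oi in zip(x, out)]


def toy_layer(width: int) -> Layer:
    """Toy layer (assumption): average the inputs, apply LeakyReLU, broadcast."""
    def layer(concat: Vector) -> Vector:
        mean = sum(concat) / len(concat)
        act = mean if mean > 0 else 0.2 * mean  # LeakyReLU with slope 0.2
        return [act] * width
    return layer


x = [1.0, -2.0, 0.5, 3.0]
blocks = [[toy_layer(len(x)) for _ in range(5)] for _ in range(3)]
y = rrdb(x, blocks)
print(y)
```

Note that there is no normalization step anywhere in the block; the small `beta` is what keeps the added branch from blowing up as blocks are stacked.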

3.2 Relativistic Discriminator

So the discriminator function is:

The discriminator loss is then defined as:
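For reference, as I recall them from the ESRGAN paper, the relativistic average discriminator and the corresponding losses can be written as follows. Here $C(x)$ is the raw (pre-sigmoid) discriminator output, $\sigma$ is the sigmoid, $x_r$ is a real image, and $x_f$ is a generated one; the generator loss is included for symmetry.

```latex
D_{Ra}(x_r, x_f) = \sigma\big(C(x_r) - \mathbb{E}_{x_f}[C(x_f)]\big)

L_D^{Ra} = -\mathbb{E}_{x_r}\big[\log D_{Ra}(x_r, x_f)\big]
           -\mathbb{E}_{x_f}\big[\log\big(1 - D_{Ra}(x_f, x_r)\big)\big]

L_G^{Ra} = -\mathbb{E}_{x_r}\big[\log\big(1 - D_{Ra}(x_r, x_f)\big)\big]
           -\mathbb{E}_{x_f}\big[\log D_{Ra}(x_f, x_r)\big]
```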

The advantage is that the generator benefits from the gradients of both generated data and real data in adversarial training, whereas in SRGAN only the generated part takes effect.
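A small numeric sketch may help show why both real and generated samples enter the generator loss. It assumes the relativistic average form, where each score is compared against the mean score of the opposite class; the function name `relativistic_losses` and the `eps` guard are my own choices, and the inputs are plain lists of raw discriminator scores rather than tensors.

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def mean(xs: List[float]) -> float:
    return sum(xs) / len(xs)


def relativistic_losses(
    c_real: List[float], c_fake: List[float], eps: float = 1e-12
) -> Tuple[float, float]:
    """Return (discriminator loss, generator loss) from raw scores C(x)."""
    mean_real = mean(c_real)
    mean_fake = mean(c_fake)
    # How much more realistic is a real image than the average fake one?
    d_real = [sigmoid(c - mean_fake) for c in c_real]
    # How much more realistic is a fake image than the average real one?
    d_fake = [sigmoid(c - mean_real) for c in c_fake]

    loss_d = -mean([math.log(p + eps) for p in d_real]) \
             - mean([math.log(1.0 - p + eps) for p in d_fake])
    # The generator loss swaps the roles, so it depends on c_real as well.
    loss_g = -mean([math.log(1.0 - p + eps) for p in d_real]) \
             - mean([math.log(p + eps) for p in d_fake])
    return loss_d, loss_g


loss_d, loss_g = relativistic_losses([2.0, 3.0], [-1.0, 0.0])
print(loss_d, loss_g)
```

When the discriminator scores real images well above fake ones, as in this example, `loss_d` is small and `loss_g` is large, which is the pressure that pushes the generator.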

3.3 Perceptual Loss

The improvement is: constraining on features before activation rather than after activation.

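The reasons: activated features are very sparse, especially in deeper networks, so the supervision they provide is weak. In addition, using features after activation causes inconsistent reconstructed brightness (I suspect this has something to do with the discontinuity of the activation function??).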
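The sparsity argument can be illustrated with a toy example. The numbers below are made up and merely stand in for one channel of deep VGG features of a super-resolved image and of the ground truth; the mean absolute difference is also just an assumed choice of distance. The point is that two feature vectors that differ only where they are negative look identical after ReLU.

```python
from typing import List


def relu(v: List[float]) -> List[float]:
    return [max(0.0, x) for x in v]


def mean_abs_diff(a: List[float], b: List[float]) -> float:
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Hypothetical pre-activation features (mostly negative, as in deep layers).
feat_sr = [-1.5, -0.2, 0.3, -2.0, -0.7, 1.1]  # super-resolved image
feat_hr = [-0.5, -1.2, 0.3, -0.1, -2.7, 1.1]  # ground-truth image

loss_before = mean_abs_diff(feat_sr, feat_hr)              # before activation
loss_after = mean_abs_diff(relu(feat_sr), relu(feat_hr))   # after activation
active_ratio = sum(1 for x in relu(feat_hr) if x > 0) / len(feat_hr)

print(loss_before, loss_after, active_ratio)
```

Here the loss after activation is zero even though the features clearly differ, so it gives the generator nothing to learn from, while the loss before activation still does.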

3.4 Network Interpolation

This is the first time I have come across this kind of approach; I think it more or less combines the strengths of the two networks.

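The authors also mention two alternatives: interpolating the output images of the two networks, and tuning the weights of content loss and adversarial loss. Neither works as well as interpolating the network parameters directly. Personally I feel this is worth exploring further!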
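As a sketch of what interpolating the parameters means, the snippet below blends two parameter sets elementwise as `(1 - alpha) * theta_psnr + alpha * theta_gan` with `alpha` in [0, 1], which is how I understand the paper's formulation. The dict-of-lists representation and the names `interpolate_params`, `psnr_params`, and `gan_params` are hypothetical; in practice these would be two checkpoints of the same architecture.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def interpolate_params(psnr_params: Params, gan_params: Params, alpha: float) -> Params:
    """Blend a PSNR-oriented and a GAN-based network, parameter by parameter."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if psnr_params.keys() != gan_params.keys():
        raise ValueError("both networks must share the same architecture")

    blended: Params = {}
    for name, w_psnr in psnr_params.items():
        w_gan = gan_params[name]
        if len(w_psnr) != len(w_gan):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        blended[name] = [(1.0 - alpha) * p + alpha * g for p, g in zip(w_psnr, w_gan)]
    return blended


# Toy parameters (made-up values) for a single layer.
psnr_params = {"conv.weight": [0.0, 2.0]}
gan_params = {"conv.weight": [1.0, 4.0]}

for alpha in (0.0, 0.5, 1.0):
    print(alpha, interpolate_params(psnr_params, gan_params, alpha))
```

With `alpha = 0` the result is the PSNR-oriented network and with `alpha = 1` the GAN-based one, so a single scalar controls the trade-off without any retraining.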

4. Experiments

In this part I mainly note the training tricks and the details the authors say need attention.

Pre-training with pixel-wise loss helps GAN-based methods to obtain more visually pleasing results. The reasons are that it can avoid undesired local optima for the generator; after pre-training, the discriminator receives relatively good super-resolved images instead of extreme fake ones (black or noisy images) at the very beginning, which helps it to focus more on texture discrimination. (In other words, pre-training with the L1 loss helps avoid bad local optima. Also, the improved adversarial loss shows that fake images affect the gradients too, so very poor fake images could hurt training.)

In contrast to SRGAN, which claimed that deeper models are increasingly difficult to train, our deeper model shows its superior performance with easy training, thanks to the improvements mentioned above especially the proposed RRDB without BN layers.

Interestingly, it is observed that the network interpolation strategy provides a smooth control of balancing perceptual quality and fidelity.

5. Conclusion

That's it for the paper, though it also comes with supplementary material~

Summary:

A. RRDB blocks without BN layers

B. residual scaling and smaller initialization

C. relativistic GAN

D. enhanced perceptual loss using features before activation

If you had the patience to read all the way to the end, you're the best-looking of all~

[1] Ledig, C., Theis, L., Huszár, F., Caballero, J., Cunningham, A., Acosta, A., Aitken, A., Tejani, A., Totz, J., Wang, Z., et al.: Photo-realistic single image super-resolution using a generative adversarial network. In: CVPR. (2017)

[2] Jolicoeur-Martineau, A.: The relativistic discriminator: a key element missing from standard GAN. arXiv preprint arXiv:1807.00734 (2018)

[3] Lim, B., Son, S., Kim, H., Nah, S., Lee, K.M.: Enhanced deep residual networks for single image super-resolution. In: CVPRW. (2017)

[4] Zhang, Y., Li, K., Li, K., Wang, L., Zhong, B., Fu, Y.: Image super-resolution using very deep residual channel attention networks. In: ECCV. (2018)

[5] Yu, K., Dong, C., Lin, L., Loy, C.C.: Crafting a toolchain for image restoration by deep reinforcement learning. In: CVPR. (2018)

[6] Yuan, Y., Liu, S., Zhang, J., Zhang, Y., Dong, C., Lin, L.: Unsupervised image super-resolution using cycle-in-cycle generative adversarial networks. In: CVPRW. (2018)

[7] Wang, X., Yu, K., Dong, C., Loy, C.C.: Recovering realistic texture in image super-resolution by deep spatial feature transform. In: CVPR. (2018)

[8] Miyato, T., Kataoka, T., Koyama, M., Yoshida, Y.: Spectral normalization for generative adversarial networks. arXiv preprint arXiv:1802.05957 (2018)

[9] Zhang, Y., Tian, Y., Kong, Y., Zhong, B., Fu, Y.: Residual dense network for image super-resolution. In: CVPR. (2018)