Day04 使用paddle框架进行口罩分类

由于这次项目还是跟上次的车牌识别、手势识别大同小异,所以我也不赘述太多。不同的是这次要求是复现VggNet来实现分类任务,但我个人觉得数据集样本数量太少,不适合用太深的网络来进行分类,所以还是那个网络,还是辣个AlexNet,还是那个微调~(实际是自己太懒不想搞那么麻烦)。没想到取得了当日的先锋榜第一名,这也证明了神经网络不是越深越好,而是越适合越好。不说了不说了,直接开始把。

还是那句话深度学习不外乎四个步骤:

1. 数据标签处理

2. 构建网络模型

3. 规划网络超参

4. 训练评估模型

首先导入我们需要的库

import os

import zipfile

import random

import json

import paddle

import sys

import numpy as np

from PIL import Image

from PIL import ImageEnhance

import paddle.fluid as fluid

from multiprocessing import cpu_count

import matplotlib.pyplot as plt

import time

import matplotlib.pyplot as plt

import paddle.fluid.layers as layers

from paddle.fluid.dygraph import Pool2D,Conv2D

from paddle.fluid.dygraph import Linear

设置配置参数

'''

参数配置

'''

train_parameters = {

"input_size": [3, 224, 224], #输入图片的shape

"class_dim": -1, #分类数

"src_path":"maskDetect.zip",#原始数据集路径

"target_path":"data/", #要解压的路径

"train_list_path": "data/train.txt", #train.txt路径

"eval_list_path": "data/eval.txt", #eval.txt路径

"readme_path": "data/readme.json", #readme.json路径

"label_dict":{}, #标签字典

"num_epochs": 50, #训练轮数

"train_batch_size": 32, #训练时每个批次的大小

"learning_strategy": { #优化函数相关的配置

"lr": 0.001 #超参数学习率

}

}

一、数据标签处理

paddle为大家准备的口罩分类数据集包含从网上爬取的116张带口罩的,70张不带口罩的图片,数据集地址

def unzip_data(src_path,target_path):

'''

解压原始数据集,将src_path路径下的zip包解压至data目录下

'''

if(not os.path.isdir(target_path + "maskDetect")):

z = zipfile.ZipFile(src_path, 'r')

z.extractall(path=target_path)

z.close()

def get_data_list(target_path,train_list_path,eval_list_path):

'''

生成数据列表

'''

#存放所有类别的信息

class_detail = []

#获取所有类别保存的文件夹名称

data_list_path=target_path+"maskDetect/"

class_dirs = os.listdir(data_list_path)

#总的图像数量

all_class_images = 0

#存放类别标签

class_label=0

#存放类别数目

class_dim = 0

#存储要写进eval.txt和train.txt中的内容

trainer_list=[]

eval_list=[]

#读取每个类别,['maskimages', 'nomaskimages']

for class_dir in class_dirs:

if class_dir != ".DS_Store":

class_dim += 1

#每个类别的信息

class_detail_list = {}

eval_sum = 0

trainer_sum = 0

#统计每个类别有多少张图片

class_sum = 0

#获取类别路径

path = data_list_path + class_dir

# 获取所有图片

img_paths = os.listdir(path)

for img_path in img_paths: # 遍历文件夹下的每个图片

name_path = path + '/' + img_path # 每张图片的路径

if class_sum % 10 == 0: # 每10张图片取一个做验证数据

eval_sum += 1 # test_sum为测试数据的数目

eval_list.append(name_path + "\t%d" % class_label + "\n")

else:

trainer_sum += 1

trainer_list.append(name_path + "\t%d" % class_label + "\n")#trainer_sum测试数据的数目

class_sum += 1 #每类图片的数目

all_class_images += 1 #所有类图片的数目

# 说明的json文件的class_detail数据

class_detail_list['class_name'] = class_dir #类别名称,如jiangwen

class_detail_list['class_label'] = class_label #类别标签

class_detail_list['class_eval_images'] = eval_sum #该类数据的测试集数目

class_detail_list['class_trainer_images'] = trainer_sum #该类数据的训练集数目

class_detail.append(class_detail_list)

#初始化标签列表

train_parameters['label_dict'][str(class_label)] = class_dir

class_label += 1

#初始化分类数

train_parameters['class_dim'] = class_dim

#乱序

random.shuffle(eval_list)

with open(eval_list_path, 'a') as f:

for eval_image in eval_list:

f.write(eval_image)

random.shuffle(trainer_list)

with open(train_list_path, 'a') as f2:

for train_image in trainer_list:

f2.write(train_image)

# 说明的json文件信息

readjson = {}

readjson['all_class_name'] = data_list_path #文件父目录

readjson['all_class_images'] = all_class_images

readjson['class_detail'] = class_detail

jsons = json.dumps(readjson, sort_keys=True, indent=4, separators=(',', ': '))

with open(train_parameters['readme_path'],'w') as f:

f.write(jsons)

print ('生成数据列表完成!')

def custom_reader(file_list):

'''

自定义reader

'''

def reader():

with open(file_list, 'r') as f:

lines = [line.strip() for line in f]

for line in lines:

img_path, lab = line.strip().split('\t')

img = Image.open(img_path)

if img.mode != 'RGB':

img = img.convert('RGB')

img = img.resize((224, 224), Image.BILINEAR)

img = np.array(img).astype('float32')

img = img.transpose((2, 0, 1)) # HWC to CHW

img = img/255 # 像素值归一化

yield img, int(lab)

return reader

'''

参数初始化

'''

src_path=train_parameters['src_path']

target_path=train_parameters['target_path']

train_list_path=train_parameters['train_list_path']

eval_list_path=train_parameters['eval_list_path']

batch_size=train_parameters['train_batch_size']

'''

解压原始数据到指定路径

'''

unzip_data(src_path,target_path)

'''

划分训练集与验证集,乱序,生成数据列表

'''

#每次生成数据列表前,首先清空train.txt和eval.txt

with open(train_list_path, 'w') as f:

f.seek(0)

f.truncate()

with open(eval_list_path, 'w') as f:

f.seek(0)

f.truncate()

#生成数据列表

get_data_list(target_path,train_list_path,eval_list_path)

'''

构造数据提供器

'''

print(train_list_path)

print(eval_list_path)

train_reader = paddle.batch(custom_reader(train_list_path),

batch_size=batch_size,

drop_last=True)

eval_reader = paddle.batch(custom_reader(eval_list_path),

batch_size=19,

drop_last=True)

构建神经网络

这里我们以典型的AlexNet构建我们的神经网络结构,并进行微调

构建代码如下:

class MyDNN(fluid.dygraph.Layer):

def __init__(self, name_scope, num_classes=2):

super(MyDNN, self).__init__(name_scope)

name_scope = self.full_name()

self.conv1 = Conv2D(num_channels=3, num_filters=96, filter_size=3, stride=2, padding=2, act='relu')

self.pool1 = Pool2D(pool_size=2, pool_stride=2, pool_type='max')

self.conv2 = Conv2D(num_channels=96, num_filters=256, filter_size=3, stride=2, padding=2, act='relu')

self.pool2 = Pool2D(pool_size=2, pool_stride=2, pool_type='max')

self.conv3 = Conv2D(num_channels=256, num_filters=384, filter_size=3, stride=2, padding=2, act='relu')

self.conv4 = Conv2D(num_channels=384, num_filters=384, filter_size=3, stride=1, padding=1, act='relu')

self.conv5 = Conv2D(num_channels=384, num_filters=256, filter_size=3, stride=1, padding=1, act='relu')

self.pool5 = Pool2D(pool_size=2, pool_stride=2, pool_type='max')

self.fc1 = Linear(input_dim=4096, output_dim=4096, act='relu')

self.drop_ratio1 = 0.5

self.fc2 = Linear(input_dim=4096, output_dim=4096, act='relu')

self.drop_ratio2 = 0.5

self.fc3 = Linear(input_dim=4096, output_dim=num_classes)

def forward(self, x):

x = self.conv1(x)

x = self.pool1(x)

x = self.conv2(x)

x = self.pool2(x)

x = self.conv3(x)

x = self.conv4(x)

x = self.conv5(x)

x = self.pool5(x)

x = fluid.layers.reshape(x, [x.shape[0], -1])

x = self.fc1(x)

# 在全连接之后使用dropout抑制过拟合

x= fluid.layers.dropout(x, self.drop_ratio1)

x = self.fc2(x)

# 在全连接之后使用dropout抑制过拟合

x = fluid.layers.dropout(x, self.drop_ratio2)

x = self.fc3(x)

return x

规划网络超参和训练评估模型

这里我们的优化器是

MomentumOptimizer

,损失函数是

softmax_with_cross_entropy

'''

模型训练

'''

def draw_train_process(title,iters,costs,accs,label_cost,lable_acc):

plt.title(title, fontsize=24)

plt.xlabel("iter", fontsize=20)

plt.ylabel("cost/acc", fontsize=20)

plt.plot(iters, costs,color='red',label=label_cost)

plt.plot(iters, accs,color='green',label=lable_acc)

plt.legend()

plt.grid()

plt.show()

def draw_process(title,color,iters,data,label):

plt.title(title, fontsize=24)

plt.xlabel("iter", fontsize=20)

plt.ylabel(label, fontsize=20)

plt.plot(iters, data,color=color,label=label)

plt.legend()

plt.grid()

plt.show()

all_train_iter=0

all_train_iters=[]

all_train_costs=[]

all_train_accs=[]

with fluid.dygraph.guard():

print(train_parameters['class_dim'])

print(train_parameters['label_dict'])

alex = MyDNN('alexnet')

alex.train()

optimizer = fluid.optimizer.Momentum(learning_rate=0.01,momentum=0.9,parameter_list=alex.parameters())

for epoch_num in range(train_parameters['num_epochs']):

for batch_id, data in enumerate(train_reader()):

dy_x_data = np.array([x[0] for x in data]).astype('float32')

y_data = np.array([x[1] for x in data]).astype('int64')

y_data = y_data[:, np.newaxis]

#将Numpy转换为DyGraph接收的输入

img = fluid.dygraph.to_variable(dy_x_data)

label = fluid.dygraph.to_variable(y_data)

out = alex(img)

loss = fluid.layers.softmax_with_cross_entropy(out, label)

avg_loss = fluid.layers.mean(loss)

acc=fluid.layers.accuracy(out,label)

#使用backward()方法可以执行反向网络

avg_loss.backward()

optimizer.minimize(avg_loss)

#将参数梯度清零以保证下一轮训练的正确性

alex.clear_gradients()

all_train_iter=all_train_iter+train_parameters['train_batch_size']

all_train_iters.append(all_train_iter)

all_train_costs.append(loss.numpy()[0])

all_train_accs.append(acc.numpy()[0])

if batch_id>=1 and batch_id % 2 == 0:

print("Loss at epoch {} step {}: avg_loss:{}, acc: {}".format(epoch_num, batch_id, avg_loss.numpy(), acc.numpy()))

draw_train_process("training",all_train_iters,all_train_costs,all_train_accs,"trainning cost","trainning acc")

draw_process("trainning loss","red",all_train_iters,all_train_costs,"trainning loss")

draw_process("trainning acc","green",all_train_iters,all_train_accs,"trainning acc")

#保存模型参数

fluid.save_dygraph(alex.state_dict(), "alex")

print("Final loss: {}".format(avg_loss.numpy()))

'''

模型校验

'''

with fluid.dygraph.guard():

model, _ = fluid.load_dygraph("alex")

#vgg = VGGNet()

alex = MyDNN('alexnet')

alex.load_dict(model)

alex.eval()

accs = []

for batch_id, data in enumerate(eval_reader()):

dy_x_data = np.array([x[0] for x in data]).astype('float32')

y_data = np.array([x[1] for x in data]).astype('int')

y_data = y_data[:, np.newaxis]

img = fluid.dygraph.to_variable(dy_x_data)

label = fluid.dygraph.to_variable(y_data)

out = alex(img)

acc=fluid.layers.accuracy(out,label)

lab = np.argsort(out.numpy())

accs.append(acc.numpy()[0])

print(np.mean(accs))

到此整个训练过程就结束了~

我训练了50个epoch的结果接近100%,大家可以尝试着更高的精度,比如换不同的优化器,多训练几个epoch,增大图像分辨率等等

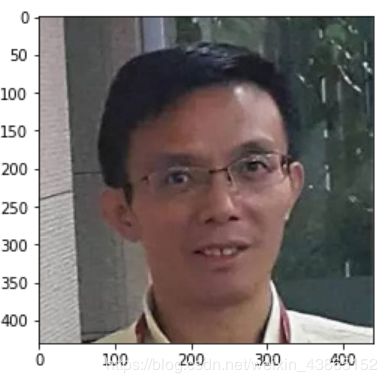

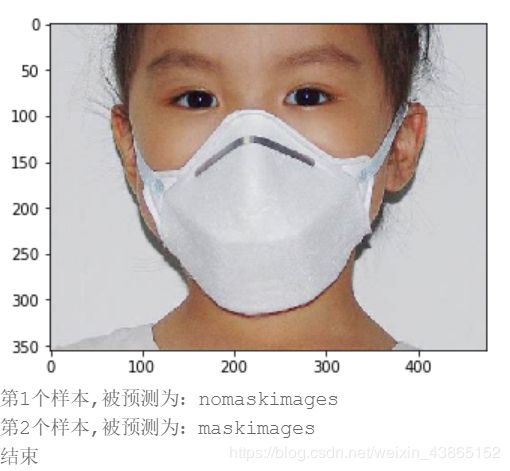

图形分类预测

def load_image(img_path):

'''

预测图片预处理

'''

img = Image.open(img_path)

if img.mode != 'RGB':

img = img.convert('RGB')

img = img.resize((224, 224), Image.BILINEAR)

img = np.array(img).astype('float32')

img = img.transpose((2, 0, 1)) # HWC to CHW

img = img/255 # 像素值归一化

return img

label_dic = train_parameters['label_dict']

'''

模型预测

'''

with fluid.dygraph.guard():

model, _ = fluid.dygraph.load_dygraph("alex")

#vgg = VGGNet()

alex = MyDNN('alexnet')

alex.load_dict(model)

alex.eval()

#展示预测图片

infer_path_mask='/home/aistudio/data/data23615/infer_mask01.jpg'

infer_path='/home/aistudio/nomask.jpg'

img = Image.open(infer_path)

img_mask = Image.open(infer_path_mask)

plt.imshow(img) #根据数组绘制图像

plt.show() #显示图像

plt.imshow(img_mask)

plt.show()

#对预测图片进行预处理

infer_imgs = []

infer_imgs.append(load_image(infer_path))

infer_imgs.append(load_image(infer_path_mask))

infer_imgs = np.array(infer_imgs)

for i in range(len(infer_imgs)):

data = infer_imgs[i]

dy_x_data = np.array(data).astype('float32')

dy_x_data=dy_x_data[np.newaxis,:, : ,:]

img = fluid.dygraph.to_variable(dy_x_data)

out = alex(img)

lab = np.argmax(out.numpy()) #argmax():返回最大数的索引

print("第{}个样本,被预测为:{}".format(i+1,label_dic[str(lab)]))

print("结束")

结束心得

这次训练能取得那么高的精度还是因为数据集数量太少了,如果数据集大的话,精度不会有这么高的,不过具体情况具体分析,如果是以打比赛来讲的话,这次的成果还是不错的。