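Kubernetes 1.13.1 Cluster Deployment, Part 01: Building a Kubernetes Cluster with kubeadm

Software package download

Link: https://pan.baidu.com/s/1lbGbHPphlawMMm9UnFv0dQ

Extraction code: 933z

Introduction

This article walks through setting up a K8S cluster, from building the cluster to installing Kubernetes Dashboard and configuring role permissions.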

I. Server environment

| Node | IP | OS | Installed software |
|---|---|---|---|
| Master | 192.168.22.141 | CentOS 7.6.1810 | kubernetes-cni-0.6.0-0.x86_64, Docker version 1.13.1, build b2f74b2/1.13.1 |
| Node1 | 192.168.22.142 | CentOS 7.6.1810 | Same as above |
| Node2 | 192.168.22.143 | CentOS 7.6.1810 | Same as above |
| Node3 | 192.168.22.144 | CentOS 7.6.1810 | Same as above |

Docker version:

[root@cloud01 k8s.soft]# docker -v

Docker version 1.13.1, build b2f74b2/1.13.1

II. Server configuration

Run the following steps on every node as the root user:

1. Disable the firewall

systemctl stop firewalld.service

systemctl disable firewalld.service

2. Disable SELinux (the change takes effect after the reboot in step 5)

vim /etc/selinux/config

SELINUX=disabled

3. Synchronize the server time

yum -y install ntp

ntpdate 0.asia.pool.ntp.org

Replace 0.asia.pool.ntp.org with your own time server if you have one.

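4. Reduce swapping and set kernel parameters

vi /etc/sysctl.conf

vm.swappiness = 0

vm.max_map_count = 655360

fs.file-max = 655360

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

Note that vm.swappiness = 0 only makes the kernel avoid swapping; swap itself is turned off with swapoff -a in section III.

5. Reboot the server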

reboot

III. Software installation and configuration (all servers)

Run the following steps on every node as the root user.

Yum repository configuration

1. Docker yum repository

cat >> /etc/yum.repos.d/docker.repo <

2. Kubernetes yum repository

cat >> /etc/yum.repos.d/kubernetes.repo <

Install the software (all servers)

1. Install the software

[root@cloud01 ~]# yum -y remove docker-ce*

[root@cloud01 ~]# yum -y remove kubeadm kubectl kubelet

[root@cloud01 ~]# yum -y install docker-1.13.1 kubeadm-1.13.1* kubectl-1.13.1* kubelet-1.13.1* kubernetes-cni-0.6.0

2. Turn off swap manually (swap must be off; by default kubelet refuses to start while swap is enabled)

swapoff -a
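swapoff -a only lasts until the next reboot. A minimal sketch for keeping swap off permanently, assuming a standard CentOS 7 /etc/fstab in which the swap entry contains swap as a whitespace-separated field; the function name disable_swap_in_fstab is hypothetical:

```bash
#!/usr/bin/env bash
# Comment out active swap entries in an fstab-style file so swap stays off
# after a reboot. The original file is kept as <file>.bak.
# Assumption: a swap entry contains the word "swap" surrounded by whitespace.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  sed -i.bak -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}
```

Run swapoff -a first, then disable_swap_in_fstab /etc/fstab; lines that are already commented out are left alone.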

3. Start Docker

systemctl start docker

systemctl enable docker

4. The kubelet cgroup driver must match Docker's (not required if the Docker version is 17 or later)

# Check Docker's cgroup driver

[root@bigman-s2 ~]# docker info |grep cgroup

Cgroup Driver: cgroupfs
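Rather than copying the driver name by hand, the Cgroup Driver line can be turned directly into the kubelet setting used below. A minimal sketch that reads docker info output on stdin; the helper name kubelet_cgroup_env is hypothetical:

```bash
#!/usr/bin/env bash
# Read `docker info` output on stdin and print the kubelet Environment line
# whose --cgroup-driver value matches Docker's cgroup driver.
kubelet_cgroup_env() {
  local driver
  driver="$(awk -F': *' '/Cgroup Driver:/ { print $2; exit }')"
  if [ -z "$driver" ]; then
    echo "no 'Cgroup Driver:' line found on stdin" >&2
    return 1
  fi
  printf 'Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=%s"\n' "$driver"
}
```

Usage: docker info | kubelet_cgroup_env, then paste the printed line into the kubelet drop-in file.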

# Edit the kubelet configuration

vi /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"

5. Enable kubelet at boot

systemctl daemon-reload

systemctl enable kubelet

IV. Starting the Kubernetes master (run on the master node)

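1. Download the Kubernetes Docker images

Compared with older releases, the main difference in current Kubernetes versions is that the core components run as containers, so the installer pulls their images automatically. These images are hosted on Google's registry (k8s.gcr.io), which cannot be reached from mainland China, so the installation hangs at "[init] This often takes around a minute; or longer if the control plane images have to be pulled". The workaround is to pull the same images from community mirrors and re-tag them with the k8s.gcr.io names that kubeadm expects: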

vi k8s.sh

docker pull mirrorgooglecontainers/kube-apiserver:v1.13.1

docker pull mirrorgooglecontainers/kube-controller-manager:v1.13.1

docker pull mirrorgooglecontainers/kube-scheduler:v1.13.1

docker pull mirrorgooglecontainers/kube-proxy:v1.13.1

docker pull mirrorgooglecontainers/pause:3.1

docker pull mirrorgooglecontainers/etcd:3.2.24

docker pull coredns/coredns:1.2.6

docker pull quay.io/calico/typha:v3.4.0

docker pull quay.io/calico/cni:v3.4.0

docker pull quay.io/calico/node:v3.4.0

docker pull anjia0532/google-containers.kubernetes-dashboard-amd64:v1.10.0

docker tag mirrorgooglecontainers/kube-proxy:v1.13.1 k8s.gcr.io/kube-proxy:v1.13.1

docker tag mirrorgooglecontainers/kube-scheduler:v1.13.1 k8s.gcr.io/kube-scheduler:v1.13.1

docker tag mirrorgooglecontainers/kube-apiserver:v1.13.1 k8s.gcr.io/kube-apiserver:v1.13.1

docker tag mirrorgooglecontainers/kube-controller-manager:v1.13.1 k8s.gcr.io/kube-controller-manager:v1.13.1

docker tag mirrorgooglecontainers/etcd:3.2.24 k8s.gcr.io/etcd:3.2.24

docker tag coredns/coredns:1.2.6 k8s.gcr.io/coredns:1.2.6

docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag anjia0532/google-containers.kubernetes-dashboard-amd64:v1.10.0 k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.0

docker rmi mirrorgooglecontainers/kube-apiserver:v1.13.1

docker rmi mirrorgooglecontainers/kube-controller-manager:v1.13.1

docker rmi mirrorgooglecontainers/kube-scheduler:v1.13.1

docker rmi mirrorgooglecontainers/kube-proxy:v1.13.1

docker rmi mirrorgooglecontainers/pause:3.1

docker rmi mirrorgooglecontainers/etcd:3.2.24

docker rmi anjia0532/google-containers.kubernetes-dashboard-amd64:v1.10.0

Run the script to pull the images: sh k8s.sh
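The script above repeats the same pull, tag and rmi sequence for every image. A minimal sketch of the same idea written as a loop, using the image names and versions from the script. The function names mirror_image and mirror_k8s_images and the DOCKER dry-run variable are hypothetical. The Calico images are left out because this cluster uses flannel.

```bash
#!/usr/bin/env bash
# Pull each image from a mirror, re-tag it under k8s.gcr.io (the name
# kubeadm v1.13.1 looks for), then remove the mirror tag.
# Set DOCKER=echo for a dry run that only prints the commands.
DOCKER="${DOCKER:-docker}"

mirror_image() {
  local src="$1" dst="$2"
  "$DOCKER" pull "$src" &&
    "$DOCKER" tag "$src" "$dst" &&
    "$DOCKER" rmi "$src"
}

mirror_k8s_images() {
  local v=v1.13.1 m=mirrorgooglecontainers img
  for img in kube-apiserver kube-controller-manager kube-scheduler kube-proxy; do
    mirror_image "$m/$img:$v" "k8s.gcr.io/$img:$v" || return 1
  done
  mirror_image "$m/pause:3.1" "k8s.gcr.io/pause:3.1" || return 1
  mirror_image "$m/etcd:3.2.24" "k8s.gcr.io/etcd:3.2.24" || return 1
  mirror_image "coredns/coredns:1.2.6" "k8s.gcr.io/coredns:1.2.6" || return 1
  mirror_image "anjia0532/google-containers.kubernetes-dashboard-amd64:v1.10.0" \
    "k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.0"
}
```

After sourcing the file, DOCKER=echo mirror_k8s_images prints the commands for review and mirror_k8s_images runs them. Because the re-tagged image keeps its k8s.gcr.io tag, docker rmi on the mirror name only removes that tag.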

Alternatively, load the tar packages from the k8skubeadm.soft directory into Docker:

Export command: docker save -o xxx.tar k8s.io/xxx:v1.0.x

Import command: docker load -i xxx.tar

2. Initialize the master

Without the NumCPU override, kubeadm init fails its preflight check on a machine that has only one CPU:

[root@cloud01 ~]# kubeadm init --kubernetes-version=1.13.1 --token-ttl 0 --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.13.1

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR NumCPU]: the number of available CPUs 1 is less than the required 2

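[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

Adding --ignore-preflight-errors='NumCPU' turns this error into a warning. In the full command, --kubernetes-version=1.13.1 sets the cluster version to v1.13.1, --token-ttl 0 makes the bootstrap token never expire, and --pod-network-cidr=10.244.0.0/16 sets the Pod network range.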
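Because the NumCPU override is only needed on small machines, the command can be assembled from the CPU count. A minimal sketch, assuming nproc (from coreutils) is available; the function name kubeadm_init_cmd is hypothetical, and it only prints the command for review rather than running it:

```bash
#!/usr/bin/env bash
# Print the kubeadm init command used in this article, adding
# --ignore-preflight-errors=NumCPU only when the machine has fewer than
# the 2 CPUs that kubeadm's preflight check requires.
# Usage: kubeadm_init_cmd [cpu_count]   (defaults to the output of nproc)
kubeadm_init_cmd() {
  local cpus="${1:-$(nproc)}"
  local cmd="kubeadm init --kubernetes-version=1.13.1 --token-ttl 0 --pod-network-cidr=10.244.0.0/16"
  if [ "$cpus" -lt 2 ]; then
    cmd="$cmd --ignore-preflight-errors=NumCPU"
  fi
  printf '%s\n' "$cmd"
}
```

Keeping the override conditional means the CPU check still protects machines where it matters.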

When the master is installed successfully, the output looks like this:

[root@cloud01 ~]# kubeadm init --kubernetes-version=1.13.1 --token-ttl 0 --pod-network-cidr=10.244.0.0/16 --ignore-preflight-errors='NumCPU'

[init] Using Kubernetes version: v1.13.1

[preflight] Running pre-flight checks

[WARNING NumCPU]: the number of available CPUs 1 is less than the required 2

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [cloud01 localhost] and IPs [192.168.22.141 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [cloud01 localhost] and IPs [192.168.22.141 127.0.0.1 ::1]

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [cloud01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.22.141]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 43.502199 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.13" in namespace kube-system with the configuration for the kubelets in the cluster

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "cloud01" as an annotation

[mark-control-plane] Marking the node cloud01 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node cloud01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 5qj564.lq9i87r26wla8ewp

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 192.168.22.141:6443 --token 5qj564.lq9i87r26wla8ewp --discovery-token-ca-cert-hash sha256:f5039ef03f7f10a7d9ba56d4326dc9009144157be30c4f74f22f3255c20648cf

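[root@cloud01 ~]#

In this output, the line

kubeadm join 192.168.22.141:6443 --token 5qj564.lq9i87r26wla8ewp --discovery-token-ca-cert-hash sha256:f5039ef03f7f10a7d9ba56d4326dc9009144157be30c4f74f22f3255c20648cf

is the command that nodes run later to join the cluster. Because --token-ttl 0 was set, it never expires, so keep it somewhere safe.

kubeadm token list shows the tokens but not the complete join command; the CA certificate hash has to be computed separately, as follows: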

How to join a node when the token has been lost

1. Create a token that never expires

# kubeadm token create --ttl 0

# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

dxnj79.rnj561a137ri76ym 2018-11-02T14:06:43+08:00 authentication,signing system:bootstrappers:kubeadm:default-node-token

o4avtg.65ji6b778nyacw68 authentication,signing system:bootstrappers:kubeadm:default-node-token

2. Compute the SHA-256 hash of the CA certificate's public key

# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

2cc3029123db737f234186636330e87b5510c173c669f513a9c0e0da395515b0

3. Join the node

# kubeadm join 10.167.11.153:6443 --token o4avtg.65ji6b778nyacw68 --discovery-token-ca-cert-hash sha256:2cc3029123db737f234186636330e87b5510c173c669f513a9c0e0da395515b0
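The three steps above can be combined into one helper that prints a complete join command. A minimal sketch, assuming an RSA CA key at the default kubeadm path /etc/kubernetes/pki/ca.crt; the function names discovery_hash and join_cmd are hypothetical. kubeadm 1.13 can also print the full command itself with kubeadm token create --print-join-command.

```bash
#!/usr/bin/env bash
# Compute the --discovery-token-ca-cert-hash value: the SHA-256 digest of the
# CA certificate's DER-encoded public key (the openssl pipeline from step 2,
# with -noout so only the public key is passed on).
discovery_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  if [ ! -r "$ca" ]; then
    echo "cannot read CA certificate: $ca" >&2
    return 1
  fi
  openssl x509 -pubkey -noout -in "$ca" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    sed 's/^.* //'
}

# Print a complete join command from the API server endpoint and a token.
join_cmd() {
  local endpoint="$1" token="$2" ca="${3:-/etc/kubernetes/pki/ca.crt}"
  local hash
  hash="$(discovery_hash "$ca")" || return 1
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$endpoint" "$token" "$hash"
}
```

Usage on the master: join_cmd 192.168.22.141:6443 <token-from-kubeadm-token-list>.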

3. Configure environment variables

vi /etc/profile

export KUBECONFIG=/etc/kubernetes/admin.conf

source /etc/profile

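4. Install the Pod network add-on (on the master node)

After the master has started, and before any worker nodes are added, a Pod network add-on must be installed. Kubernetes supports many network add-ons, such as Calico, Canal, flannel, Kube-router, Romana, and Weave Net.

Installation guides for each are in the official documentation; kubeadm init prints the page that lists them (https://kubernetes.io/docs/concepts/cluster-administration/addons/).

This article uses flannel v0.10.0 as the network add-on.

Edit /etc/sysctl.conf (vim /etc/sysctl.conf) and add the following (skip this if the settings from section II, step 4 are already in place):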

net.ipv4.ip_forward=1

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

Apply the change immediately:

sysctl -p
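Instead of appending to /etc/sysctl.conf, the same settings can live in their own drop-in file. A minimal sketch, assuming the hypothetical file name /etc/sysctl.d/k8s.conf and the hypothetical function name write_k8s_sysctl. The net.bridge.* keys only exist after the br_netfilter kernel module has been loaded.

```bash
#!/usr/bin/env bash
# Write the kernel settings used in this article to a sysctl drop-in file.
# Assumption: /etc/sysctl.d/k8s.conf is a hypothetical file name.
# The net.bridge.* keys exist only once br_netfilter is loaded (modprobe br_netfilter).
write_k8s_sysctl() {
  local file="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$file" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
vm.swappiness = 0
EOF
}
```

Usage: modprobe br_netfilter, then write_k8s_sysctl, then sysctl --system to load every file under /etc/sysctl.d.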

Create kube-flannel.yml:

# vi kube-flannel.yml

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: arm64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: arm
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: ppc64le
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: s390x
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
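The Network value in net-conf.json should match the --pod-network-cidr passed to kubeadm init (10.244.0.0/16 in this article). With --kube-subnet-mgr, flannel takes each node's subnet from the podCIDR that the controller manager allocates out of that range. A minimal sketch for checking the two values before applying the manifest; the function name check_flannel_cidr is hypothetical, and the sed pattern assumes the "Network": "..." line is formatted as in the file above:

```bash
#!/usr/bin/env bash
# Compare the "Network" value in a flannel manifest with the CIDR that was
# passed to kubeadm init --pod-network-cidr. Returns non-zero on mismatch.
check_flannel_cidr() {
  local manifest="$1" expected="$2" actual
  actual="$(sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$manifest" | head -n 1)"
  if [ "$actual" = "$expected" ]; then
    echo "OK: flannel Network = $actual"
  else
    echo "MISMATCH: flannel Network = '${actual}', expected '${expected}'" >&2
    return 1
  fi
}
```

Usage: check_flannel_cidr kube-flannel.yml 10.244.0.0/16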

Install it:

kubectl apply -f kube-flannel.yml

5. After installation, check the Pods:

[root@bigman-m2 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-86c58d9df4-2gg7v 1/1 Running 0 17h

kube-system coredns-86c58d9df4-cvlgn 1/1 Running 0 17h

kube-system etcd-bigman-m2 1/1 Running 0 17h

kube-system kube-apiserver-bigman-m2 1/1 Running 0 17h

kube-system kube-controller-manager-bigman-m2 1/1 Running 0 17h

kube-system kube-flannel-ds-amd64-5mln9 1/1 Running 0 17h

kube-system kube-flannel-ds-amd64-sbm75 1/1 Running 0 106m

kube-system kube-flannel-ds-amd64-skmg7 1/1 Running 0 17h

kube-system kube-flannel-ds-amd64-xmcqh 1/1 Running 0 17h

kube-system kube-proxy-5sbj2 1/1 Running 0 17h

kube-system kube-proxy-9jm6k 1/1 Running 0 106m

kube-system kube-proxy-qtv4d 1/1 Running 0 17h

kube-system kube-proxy-tjtwn 1/1 Running 0 17h

kube-system kube-scheduler-bigman-m2 1/1 Running 0 17h

Check the status of the Pods. Once the kube-flannel-ds Pods are Running, you can start adding nodes to the cluster.
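Instead of re-running the command by hand, the wait can be scripted. A minimal sketch that reads kubectl get pods --all-namespaces output on stdin, assuming the default column layout shown above (STATUS is the fourth column); the function name flannel_pods_running is hypothetical:

```bash
#!/usr/bin/env bash
# Read `kubectl get pods --all-namespaces` output on stdin and succeed only
# if at least one kube-flannel-ds Pod exists and every one of them is Running.
# Expected columns: NAMESPACE NAME READY STATUS RESTARTS AGE
flannel_pods_running() {
  awk '
    NR > 1 && $2 ~ /^kube-flannel-ds-/ {
      total++
      if ($4 != "Running") bad++
    }
    END { if (total > 0 && bad == 0) exit 0; exit 1 }
  '
}
```

Usage: until kubectl get pods --all-namespaces | flannel_pods_running; do sleep 5; done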

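V. Adding worker nodes (run on Node1-Node3)

Before continuing, make sure every step in sections II (Server configuration) and III (Software installation and configuration) has been completed correctly. Run the following on Node1-Node3.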

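1. Start kubelet (it restarts in a loop until kubeadm join configures it, which is expected):

systemctl enable kubelet

systemctl start kubelet

2. Copy the master's admin.conf to /etc/kubernetes/ and set the environment variable:

scp root@192.168.22.141:/etc/kubernetes/admin.conf /etc/kubernetes/

vi /etc/profile

export KUBECONFIG=/etc/kubernetes/admin.conf

source /etc/profile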

3. Run the join command saved earlier:

[root@cloud02 kubeadm.soft]# kubeadm join 192.168.22.141:6443 --token 5qj564.lq9i87r26wla8ewp --discovery-token-ca-cert-hash sha256:f5039ef03f7f10a7d9ba56d4326dc9009144157be30c4f74f22f3255c20648cf

[preflight] Running pre-flight checks

[discovery] Trying to connect to API Server "192.168.22.141:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://192.168.22.141:6443"

[discovery] Requesting info from "https://192.168.22.141:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.22.141:6443"

[discovery] Successfully established connection with API Server "192.168.22.141:6443"

[join] Reading configuration from the cluster...

[join] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.13" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "cloud02" as an annotation

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

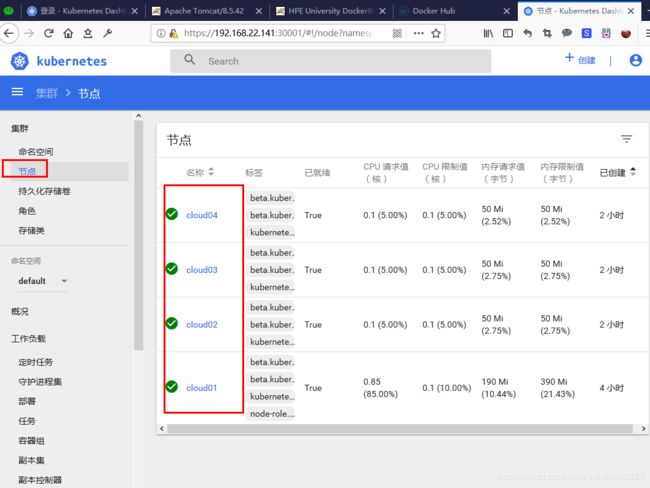

4. On the master, run kubectl get nodes to verify the cluster state; the output looks like this:

[root@bigman-m2 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

bigman-m2 Ready master 17h v1.13.1

bigman-s1 Ready 17h v1.13.1

bigman-s2 Ready 17h v1.13.1

bigman-s3 Ready 115m v1.13.1
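A minimal sketch for checking this from a script. It assumes the default kubectl get nodes column layout (STATUS is the second column) and the four-node cluster in this article. The function name nodes_ready is hypothetical.

```bash
#!/usr/bin/env bash
# Read `kubectl get nodes` output on stdin and succeed only when exactly the
# expected number of nodes is listed and every one reports STATUS "Ready".
nodes_ready() {
  awk -v want="$1" '
    NR > 1 { total++; if ($2 == "Ready") ready++ }
    END { want += 0; if (total == want && ready == want) exit 0; exit 1 }
  '
}
```

Usage: kubectl get nodes | nodes_ready 4 && echo "all 4 nodes are Ready"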

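VI. Installing Kubernetes Dashboard (web UI)

Like the network add-on, Dashboard v1.10.0 is a containerized application that is installed by applying a YAML file. Run the following on the master node.

1. Create the kubernetes-dashboard.yaml file:

vi kubernetes-dashboard.yaml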

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---

# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---

# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---

# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1beta2
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.0
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---

# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard

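Install it:

kubectl apply -f kubernetes-dashboard.yaml

Open the web UI on port 30001 (the nodePort set in the Service above) of any node, for example:

https://10.242.10.22:30001/#!/login

Click "Skip" to enter the dashboard.

6.2 Create an administrator role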

1. Create ClusterRole.yaml

vi ClusterRole.yaml

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: dashboard
rules:
- apiGroups: ["*"]
  resources: ["*"]
  verbs: ["get","watch","list","create","proxy","update"]
- apiGroups: ["*"]
  resources: ["pods"]
  verbs: ["delete"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: dashboard-extended
subjects:
- kind: ServiceAccount
  name: dashboard
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

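Save, exit, and apply the file:

kubectl create -f ClusterRole.yaml

Key parameters:

kind: ClusterRole                 # create a cluster-wide role
metadata:
  name: dashboard                 # role name
rules:
- apiGroups: ["*"]
  resources: ["*"]                # all resources
  verbs: ["get", "watch", "list", "create", "proxy", "update"]   # allow get, watch, list, create, proxy and update
- apiGroups: ["*"]
  resources: ["pods"]             # extra rule for Pods, on top of the all-resources rule
  verbs: ["delete"]               # allow delete

kind: ServiceAccount              # create a ServiceAccount

roleRef:
  name: dashboard                 # binds the restricted role above; the file uses cluster-admin instead, which grants all permissions

2. After applying the file, check that the service account was created:

kubectl get serviceaccount --all-namespaces

3. Find the name of the account's secret: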

[root@bigman-m2 software]# kubectl get secret -n kube-system

NAME TYPE DATA AGE

attachdetach-controller-token-l7gcp kubernetes.io/service-account-token 3 19h

bootstrap-signer-token-pkmkk kubernetes.io/service-account-token 3 19h

bootstrap-token-s3sunq bootstrap.kubernetes.io/token 6 19h

certificate-controller-token-h8xrc kubernetes.io/service-account-token 3 19h

clusterrole-aggregation-controller-token-k4s5q kubernetes.io/service-account-token 3 19h

coredns-token-fll4q kubernetes.io/service-account-token 3 19h

cronjob-controller-token-5xm54 kubernetes.io/service-account-token 3 19h

daemon-set-controller-token-wwbs6 kubernetes.io/service-account-token 3 19h

dashboard-token-pjrgb kubernetes.io/service-account-token 3 115s

default-token-tzdbx kubernetes.io/service-account-token 3 19h

deployment-controller-token-gpgfk kubernetes.io/service-account-token 3 19h

disruption-controller-token-8479z kubernetes.io/service-account-token 3 19h

endpoint-controller-token-5skc4 kubernetes.io/service-account-token 3 19h

expand-controller-token-b86w8 kubernetes.io/service-account-token 3 19h

flannel-token-lhl9s kubernetes.io/service-account-token 3 19h

generic-garbage-collector-token-vk9nf kubernetes.io/service-account-token 3 19h

horizontal-pod-autoscaler-token-lxdgw kubernetes.io/service-account-token 3 19h

job-controller-token-7649p kubernetes.io/service-account-token 3 19h

kube-proxy-token-rjx7f kubernetes.io/service-account-token 3 19h

kubernetes-dashboard-certs Opaque 0 15h

kubernetes-dashboard-key-holder Opaque 2 19h

kubernetes-dashboard-token-2v8cw kubernetes.io/service-account-token 3 15h

namespace-controller-token-9jblj kubernetes.io/service-account-token 3 19h

node-controller-token-n5zdn kubernetes.io/service-account-token 3 19h

persistent-volume-binder-token-6m9rm kubernetes.io/service-account-token 3 19h

pod-garbage-collector-token-zrcxt kubernetes.io/service-account-token 3 19h

pv-protection-controller-token-tjwbf kubernetes.io/service-account-token 3 19h

pvc-protection-controller-token-jcxqq kubernetes.io/service-account-token 3 19h

replicaset-controller-token-nrpxh kubernetes.io/service-account-token 3 19h

replication-controller-token-fhqcv kubernetes.io/service-account-token 3 19h

resourcequota-controller-token-4b824 kubernetes.io/service-account-token 3 19h

service-account-controller-token-kprp6 kubernetes.io/service-account-token 3 19h

service-controller-token-ngfcv kubernetes.io/service-account-token 3 19h

statefulset-controller-token-qdfkt kubernetes.io/service-account-token 3 19h

token-cleaner-token-qbmvh kubernetes.io/service-account-token 3 19h

ttl-controller-token-vtvwt kubernetes.io/service-account-token 3 19h

4. Look up the token by the secret name:

[root@bigman-m2 software]# kubectl -n kube-system describe secret dashboard-token-pjrgb

Name: dashboard-token-pjrgb

Namespace: kube-system

Labels:

Annotations: kubernetes.io/service-account.name: dashboard

kubernetes.io/service-account.uid: 6020b349-0f18-11e9-8c52-1866da8c1dba

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtdG9rZW4tcGpyZ2IiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNjAyMGIzNDktMGYxOC0xMWU5LThjNTItMTg2NmRhOGMxZGJhIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZCJ9.B-6et2C2-ettEgKfXgQ0blNSTDZfa3UnxB9xrnybukJO7TdGJ0rgmF8SIWagpw4TFPDJ_EWnFdZzZgon3W6O6c_2xCDXNA4R9yodR6V3sGN40OhO40VgYsFzgT2HQWQ6swNSeMehjHtez1TRbFPTM3PZY7jHY2o5FE6FrLnw98gm5QoHnkPYWNlcjc3HUikX5Z4exTqd3CL-ipGMShsVFNLhU8wPveLBmmKZA2rwaGsdtk44Y7tzA-e3YTqYEQxRy5tIFuWCmfG5n41fjxHcTtvtc5dTwxUNrcOPJEPykw7h-x-IWgJt6DTpHkmXCXgeyodFzCzfhFyGeEOo55WPiw

Here dashboard-token-pjrgb is the secret name obtained from kubectl get secret -n kube-system.

Then log in to the dashboard with this token to manage the cluster.
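Steps 3 and 4 can be scripted by parsing the two kubectl outputs shown above. A minimal sketch, assuming the <service-account>-token-<suffix> secret naming seen in the listing. The function names sa_secret_name and secret_token are hypothetical.

```bash
#!/usr/bin/env bash
# Given `kubectl -n kube-system get secret` output on stdin, print the first
# secret whose name starts with "<service-account>-token-". Matching on the
# start of the name keeps "dashboard" from matching "kubernetes-dashboard".
sa_secret_name() {
  local sa="$1"
  awk -v prefix="${sa}-token-" 'NR > 1 && index($1, prefix) == 1 { print $1; exit }'
}

# Given `kubectl describe secret` output on stdin, print the token value.
secret_token() {
  awk '$1 == "token:" { print $2; exit }'
}
```

Usage: secret=$(kubectl -n kube-system get secret | sa_secret_name dashboard), then kubectl -n kube-system describe secret "$secret" | secret_token.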

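VII. Common commands

List all Pods:

kubectl get pods --all-namespaces

Show more information about Pods (such as node and IP):

kubectl get pod -o wide

List ReplicationControllers (RC) and Services:

kubectl get rc,service --all-namespaces

List all nodes:

kubectl get node

Show the details of a node:

kubectl describe nodes <node-name>

Show the details of a Pod:

kubectl describe pod <pod-name>

Show the Pods managed by an RC:

kubectl describe pods <rc-name>

Delete the Pod defined (by name) in pod.yaml:

kubectl delete -f pod.yaml

Delete all Pods and Services that carry a given label:

kubectl delete pods,services -l name=<label-value>

Delete all Pods in the current namespace:

kubectl delete pods --all

Run the date command in a Pod (by default in the Pod's first container):

kubectl exec <pod-name> -- date

Run the date command in a specific container of a Pod:

kubectl exec <pod-name> -c <container-name> -- date

Open a TTY in a container with bash, equivalent to logging in to it:

kubectl exec -ti <pod-name> -c <container-name> -- /bin/bash

View a container's logs written to stdout:

kubectl logs <pod-name>

Follow a container's logs, like tail -f:

kubectl logs -f <pod-name> -c <container-name>

Reset a node: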

kubeadm reset

systemctl stop kubelet

systemctl stop docker

rm -rf /var/lib/cni/

rm -rf /var/lib/kubelet/*

rm -rf /etc/cni/

ifconfig cni0 down

ifconfig flannel.1 down

ifconfig docker0 down

ip link delete cni0

ip link delete flannel.1

systemctl start docker

systemctl start kubelet
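The reset steps above can be kept in one function. A minimal sketch with a hypothetical RUN=echo dry-run switch and the hypothetical function name reset_k8s_node. It uses ip link set <dev> down in place of ifconfig <dev> down, which has the same effect without needing net-tools. It also ignores errors for interfaces that do not exist on a given node.

```bash
#!/usr/bin/env bash
# Run the node reset steps in order. Set RUN=echo to print the commands
# instead of executing them (dry run). Interface errors are ignored because
# cni0 and flannel.1 may not exist on every node.
reset_k8s_node() {
  local run="${RUN:-}" dev
  $run kubeadm reset
  $run systemctl stop kubelet
  $run systemctl stop docker
  $run rm -rf /var/lib/cni/ /var/lib/kubelet/* /etc/cni/
  for dev in cni0 flannel.1 docker0; do
    $run ip link set "$dev" down 2>/dev/null || true
  done
  for dev in cni0 flannel.1; do
    $run ip link delete "$dev" 2>/dev/null || true
  done
  $run systemctl start docker
  $run systemctl start kubelet
}
```

RUN=echo reset_k8s_node prints the commands for review; reset_k8s_node runs them (kubeadm reset asks for confirmation).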
