对抗生成网络学习(十二)——MARTA-GAN实现遥感图像的场景生成(tensorflow实现)

一、背景

MARTA-GAN全称为multiple-layer feature-matching generative adversarial networks,是Daoyu Lin等人于16年12月发表的文章。我比较好奇的是,这个GAN为啥要叫MARTA-GAN,而不是MLFM-GAN...........由于我自身是做遥感出身的,所以还挺关注GAN在遥感方面的应用,这篇文章算是我看到的GAN最早应用在遥感领域的文章了,所以就打算来实现一下。作者这篇文章的题目为《MARTA GANs: Unsupervised Representation Learning for Remote Sensing Image Classification》,不过我感觉与其说是图像分类,不如说是场景实现更准确一些。

MARTA-GAN是基于DCGAN做的,我实验的结果非常不理想,所以这篇文章重点就放在对MARTA-GAN的解读了。不过实验过程还是要写的,可以为后面做这个模型的人提供参考。

[1]文章链接:https://arxiv.org/pdf/1612.08879.pdf

二、MARTA-GAN解读

搜了一下,网上几乎没有对这篇论文详细解读的文章,那我就根据自己的理解来写写。

先从摘要部分看MARTA-GAN的目的,作者提到现有模型的最大限制是非常有限的标记样本,因此作者提出了一个非监督模型MARTA-GAN,利用无标记样本来实现不同场景。

遥感图像与普通的数字图像还是有区别的,遥感图像具有非常强的空间性,不同地物会表现出不同的大小、颜色。早先用于场景分类的方法包括BoVW(bag of visual words)、SPM(spatial pyramid matching)、CNN等,但这些方法都是基于手工选取的特征或需要大量标记样本。而GAN是一种非监督的方法,因此作者基于DCGAN做了改进,提出MARTA-GAN,作者的改进之处主要表现在:

(1)在生成器中,DCGAN生成64*64的图,但是MARTA-GAN生成256*256的图

(2)核的大小不同,DCGAN为5*5,MARTA-GAN为4*4

(3)MARTA-GAN使用多特征层(multi-feature layer)来整合中级、高级信息(mid- and high-level)

(4)引入两种loss函数:感知loss(perceptual loss)和特征匹配loss(feature matching loss)

作者的主要贡献在于:

1)To our knowledge, this is the first time that GANs have been applied to classify unsupervised remote sensing images. (首次将GAN用于遥感图像)

2)The results of experiments on the UC-Merced Landuse and Brazilian Coffee Scenes datasets showed that the proposed algorithm outperforms state-of-the-art unsupervised algorithms in terms of overall classification accuracy. (效果很好)

3)We propose a multi-feature layer by combining perceptual loss and loss of feature matching to learn better image representations.(结合了感知loss和特征匹配loss的多特征层)

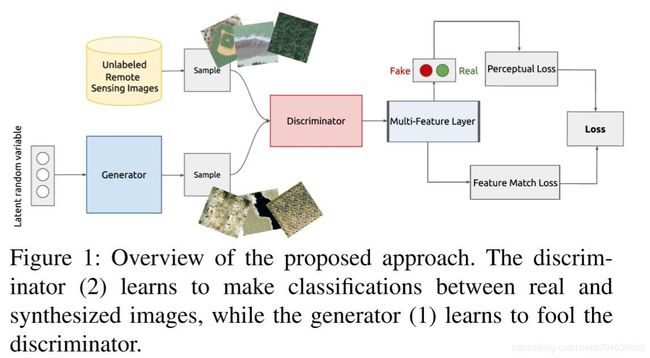

先来看一下作者提出MARTA-GAN的流程图:

流程图还是非常小清新的。模型的驱动函数这里不再过多介绍。关于网络结构,示意图为:

除过上图中标记的网络参数,作者还提到,激活函数使用了leakyReLU,leak值设为0.2,kernel sizes为4*4,步长为2,在判别器和生成器中使用了BN(batch normalization)层,衰减因子设置为0.9。正式进行实验之前,还需要对数据做预处理,将图像的值归一化至[-1 1],batch size设置为64,使用SGD(随机梯度下降),优化器为Adam,学习率为0.00002,动量为0.5。作者的实现过程是用的tensorlayer。

作者将MARTA-GAN用于两个不同的数据集: the UC Merced Land Use Dataset和the Brazilian Coffee Scenes Dataset,并都取得了不错的表现。我们的实验使用的是the UC Merced Land Use Dataset数据集,后面会详细介绍;关于the Brazilian Coffee Scenes Dataset数据集,一共有2876张影像,均来自SPOT卫星,图像尺寸为64*64,包含1438张Coffee影像和1438张non-Coffee影像。

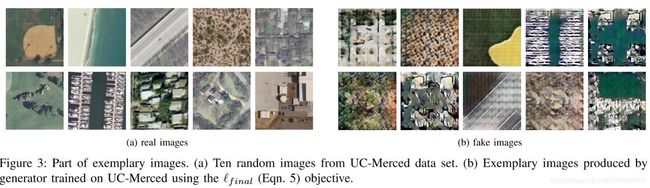

先来看看在the UC Merced Land Use Dataset数据集上的表现:

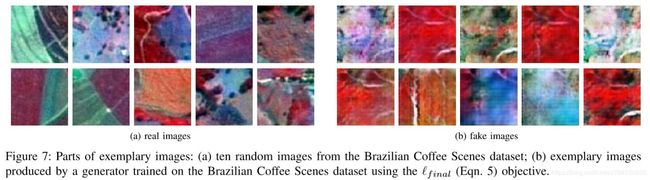

再来看看MARTA-GAN在the Brazilian Coffee Scenes Dataset数据集上的表现

虽然the Brazilian Coffee Scenes Dataset的结果比较抽象,不过原始数据集似乎效果也不是很好,所以作者还针对the UC Merced Land Use Dataset数据集的结果做了精度评定,并与DCGAN做了对比:

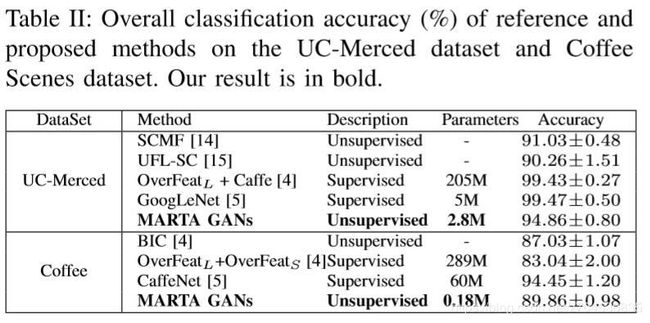

该数据集一共是21个类别,MARTA-GAN的平均精度约比DCGAN高7%,作者提到这是因为MARTA-GAN中引入了中级和高级特征信息。

最后作者还比对了不同方法用于这两个数据集的效果,可以直观的看到MARTA-GAN的参数量最少且效果较好;

三、MARTA-GAN实现过程

关于MARTA-GAN的实现过程,我主要参考了两个代码:

[2]https://github.com/BUPTLdy/MARTA-GAN

[3]https://github.com/ualiawan/MARTA-GANs

由于[2]是用tensorlayer写的,我的tensorlayer有点问题,而[3]是用tensorflow写的,但是有点小bug并少了一些功能,所以我主要使用[3]的代码,并做了一些改进。

1. 所有文件结构

所有文件结构为:

-- utilities.py

-- network.py

-- train_net.py

-- features.py

-- train_svm.py

-- data # 数据集需要自己准备

|------ uc_test_256

|------ image01.jpg

|------ image02.jpg

|------ ......

|------ uc_train_256_data

|------ image01.jpg

|------ image02.jpg

|------ ......

|------ uc_train_256_feat

|------ image01.jpg

|------ image02.jpg

|------ ......2. 数据准备

我们所使用的数据集为the UC Merced Land Use Dataset,论文中作者也对该数据集进行了介绍,下面引入论文中的介绍:

这个数据集有21种土地利用类型(21 land-use class),每种100张,尺寸为256*256。由于数据集比较少,我们使用数据增广的方式增加数据,这里使用了水平裁剪和垂直裁剪,90°旋转的方法,在GTX 1080的配置下用了4个小时。

那么,在哪里才能下载到这个数据集呢?作者给出了这个数据集的百度云下载地址:https://pan.baidu.com/s/1i5zQNdj

所以,我们可以直接下载好数据集,然后解压,放在'data/'路径下,一定要按照上面提到的文件结构放置才行。弄好了之后,我们可以大致来看看这个数据集:

可以看到很清晰的飞机还有棒球场,当然还有其他类别的:

建筑物和丛林也都非常清晰,数据集的质量还是不错的。

3. 辅助函数文件utilities.py文件

这个文件中主要放置了保存图像等相关操作,下面直接给出代码:

import scipy.misc

import numpy as np

def center_crop(x, crop_h, crop_w=None, resize_w=64):

if crop_w is None:

crop_w = crop_h

h, w = x.shape[:2]

j = int(round((h - crop_h)/2.))

i = int(round((w - crop_w)/2.))

return scipy.misc.imresize(x[j:j+crop_h, i:i+crop_w],

[resize_w, resize_w])

def load_image(image_path, image_size, is_crop=True, resize_w=64):

image = scipy.misc.imread(image_path).astype(np.float)

if is_crop:

cropped_image = center_crop(image, image_size, resize_w=resize_w)

else:

cropped_image = image

return np.array(cropped_image)/127.5 - 1.

def get_labels(num_labels, lables_file):

style_labels = list(np.loadtxt(lables_file, str, delimiter='\n'))

if num_labels > 0:

style_labels = style_labels[:num_labels]

return style_labels

# 用于得到batch张图像,关键函数get_image

def imread(path, is_grayscale = False):

if is_grayscale:

return scipy.misc.imread(path, flatten = True).astype(np.float)

else:

return scipy.misc.imread(path).astype(np.float)

def transform(image, npx=64, is_crop=True, resize_w=64):

if is_crop:

cropped_image = center_crop(image, npx, resize_w=resize_w)

else:

cropped_image = image

return np.array(cropped_image)/127.5 - 1.

def get_image(image_path, image_size, is_crop=True, resize_w=64, is_grayscale=False):

return transform(imread(image_path, is_grayscale), image_size, is_crop, resize_w)

# 用于保存图像,关键函数save_images

def merge(images, size):

h, w = images.shape[1], images.shape[2]

img = np.zeros((h * size[0], w * size[1], 3))

for idx, image in enumerate(images):

i = idx % size[1]

j = idx // size[1]

img[j*h:j*h+h, i*w:i*w+w, :] = image

return img

def inverse_transform(images):

return (images+1.)/2. * 255

def imsave(images, size, path):

return scipy.misc.imsave(path, merge(images, size))

def save_images(images, size, image_path):

return imsave(inverse_transform(images), size, image_path)4. 网络结构network.py文件

这个文件定义了生成器和判别器,下面直接给出代码:

import tensorflow as tf

def generator(inputs, is_train=True, reuse=False):

output_size = 256

kernel = 4

batch_size = 64

gf_dim = 16

c_dim = 3

weight_init = tf.random_normal_initializer(stddev=0.01)

s2, s4, s8, s16, s32, s64 = int(output_size/2), int(output_size/4), int(output_size/8), int(output_size/16), int(output_size/32), int(output_size/64)

with tf.variable_scope('generator', reuse=reuse):

h0 = tf.layers.dense(inputs, units=gf_dim*32*s64*s64, activation=tf.identity, kernel_initializer=weight_init)

h0 = tf.reshape(h0, [-1, s64, s64, gf_dim*32])

h0 = tf.contrib.layers.batch_norm(h0, scale=True, is_training=is_train, scope="g_bn0")

h0 = tf.nn.relu(h0)

output1_shape = [batch_size, s32, s32, gf_dim*16]

w_h1 = tf.get_variable('g_w_h1', [kernel, kernel, output1_shape[-1], int(h0.get_shape()[-1])],

initializer=weight_init)

b_h1 = tf.get_variable('g_b_h1', [output1_shape[-1]], initializer=tf.constant_initializer(0))

h1 = tf.nn.conv2d_transpose(h0, w_h1, output_shape=output1_shape, strides=[1, 2, 2, 1],

padding='SAME', name='g_h1_deconv2d') + b_h1

h1 = tf.contrib.layers.batch_norm(h1, scale=True, is_training=is_train, scope="g_bn1")

h1 = tf.nn.relu(h1)

output2_shape = [batch_size, s16, s16, gf_dim*8]

w_h2 = tf.get_variable('g_w_h2', [kernel, kernel, output2_shape[-1], int(h1.get_shape()[-1])],

initializer=weight_init)

b_h2 = tf.get_variable('g_b_h2', [output2_shape[-1]], initializer=tf.constant_initializer(0))

h2 = tf.nn.conv2d_transpose(h1, w_h2, output_shape=output2_shape, strides=[1, 2, 2, 1],

padding='SAME', name='g_h2_deconv2d') + b_h2

h2 = tf.contrib.layers.batch_norm(h2, scale=True, is_training=is_train, scope="g_bn2")

h2 = tf.nn.relu(h2)

output3_shape = [batch_size, s8, s8, gf_dim*4]

w_h3 = tf.get_variable('g_w_h3', [kernel, kernel, output3_shape[-1], int(h2.get_shape()[-1])],

initializer=weight_init)

b_h3 = tf.get_variable('g_b_h3', [output3_shape[-1]], initializer=tf.constant_initializer(0))

h3 = tf.nn.conv2d_transpose(h2, w_h3, output_shape=output3_shape, strides=[1, 2, 2, 1],

padding='SAME', name='g_h3_deconv2d') + b_h3

h3 = tf.contrib.layers.batch_norm(h3, scale=True, is_training=is_train, scope="g_bn3")

h3 = tf.nn.relu(h3)

output4_shape = [batch_size, s4, s4, gf_dim*2]

w_h4 = tf.get_variable('g_w_h4', [kernel, kernel, output4_shape[-1], int(h3.get_shape()[-1])],

initializer=weight_init)

b_h4 = tf.get_variable('g_b_h4', [output4_shape[-1]], initializer=tf.constant_initializer(0))

h4 = tf.nn.conv2d_transpose(h3, w_h4, output_shape=output4_shape, strides=[1, 2, 2, 1],

padding='SAME', name='g_h4_deconv2d') + b_h4

h4 = tf.contrib.layers.batch_norm(h4, scale=True, is_training=is_train, scope="g_bn4")

h4 = tf.nn.relu(h4)

output5_shape = [batch_size, s2, s2, gf_dim*1]

w_h5 = tf.get_variable('g_w_h5', [kernel, kernel, output5_shape[-1], int(h4.get_shape()[-1])],

initializer=weight_init)

b_h5 = tf.get_variable('g_b_h5', [output5_shape[-1]], initializer=tf.constant_initializer(0))

h5 = tf.nn.conv2d_transpose(h4, w_h5, output_shape=output5_shape, strides=[1, 2, 2, 1],

padding='SAME', name='g_h5_deconv2d') + b_h5

h5 = tf.contrib.layers.batch_norm(h5, scale=True, is_training=is_train, scope="g_bn5")

h5 = tf.nn.relu(h5)

output6_shape = [batch_size, output_size, output_size, c_dim]

w_h6 = tf.get_variable('g_w_h6', [kernel, kernel, output6_shape[-1], int(h5.get_shape()[-1])],

initializer=weight_init)

b_h6 = tf.get_variable('g_b_h6', [output6_shape[-1]], initializer=tf.constant_initializer(0))

h6 = tf.nn.conv2d_transpose(h5, w_h6, output_shape=output6_shape, strides=[1, 2, 2, 1],

padding='SAME', name='g_h6_deconv2d') + b_h6

#logits = h6.outputs

#h6.outputs = tf.nn.tanh(h6.outputs)

return tf.nn.tanh(h6)

def discriminator(inputs, is_train=True, reuse=False):

kernel = 5

df_dim = 16

weight_init = tf.random_normal_initializer(stddev=0.01)

alpha_lrelu = 0.2

with tf.variable_scope('discriminator', reuse=reuse):

w_h0 = tf.get_variable('d_w_h0', [kernel, kernel, 3, df_dim], initializer=weight_init)

b_h0 = tf.get_variable('d_b_h0', [df_dim], initializer=tf.constant_initializer(0))

h0 = tf.nn.conv2d(inputs, w_h0, strides=[1,2,2,1], padding='SAME', name='d_h0_conv2d') + b_h0

h0 = tf.nn.leaky_relu(h0, alpha_lrelu)

w_h1 = tf.get_variable('d_w_h1', [kernel, kernel, h0.get_shape()[-1], df_dim*2], initializer=weight_init)

b_h1 = tf.get_variable('d_b_h1', [df_dim*2], initializer=tf.constant_initializer(0))

h1 = tf.nn.conv2d(h0, w_h1, strides=[1,2,2,1], padding='SAME', name='d_h1_conv2d') + b_h1

h1 = tf.contrib.layers.batch_norm(h1, is_training=is_train, scope="d_bn1")

h1 = tf.nn.leaky_relu(h1, alpha_lrelu)

w_h2 = tf.get_variable('d_w_h2', [kernel, kernel, h1.get_shape()[-1], df_dim*4], initializer=weight_init)

b_h2 = tf.get_variable('d_b_h2', [df_dim*4], initializer=tf.constant_initializer(0))

h2 = tf.nn.conv2d(h1, w_h2, strides=[1,2,2,1], padding='SAME', name='d_h2_conv2d') + b_h2

h2 = tf.contrib.layers.batch_norm(h2, is_training=is_train, scope="d_bn2")

h2 = tf.nn.leaky_relu(h2, alpha_lrelu)

w_h3 = tf.get_variable('d_w_h3', [kernel, kernel, h2.get_shape()[-1], df_dim*8], initializer=weight_init)

b_h3 = tf.get_variable('d_b_h3', [df_dim*8], initializer=tf.constant_initializer(0))

h3 = tf.nn.conv2d(h2, w_h3, strides=[1,2,2,1], padding='SAME', name='d_h3_conv2d') + b_h3

h3 = tf.contrib.layers.batch_norm(h3, is_training=is_train, scope="d_bn3")

h3 = tf.nn.leaky_relu(h3, alpha_lrelu)

global_max_h3 = tf.nn.max_pool(h3, [1,4,4,1], strides=[1,4,4,1], padding='SAME', name='d_h3_maxpool')

global_max_h3 = tf.layers.flatten(global_max_h3, name='d_h3_flatten')

w_h4 = tf.get_variable('d_w_h4', [kernel, kernel, h3.get_shape()[-1], df_dim*16], initializer=weight_init)

b_h4 = tf.get_variable('d_b_h4', [df_dim*16], initializer=tf.constant_initializer(0))

h4 = tf.nn.conv2d(h3, w_h4, strides=[1,2,2,1], padding='SAME', name='d_h4_conv2d') + b_h4

h4 = tf.contrib.layers.batch_norm(h4, is_training=is_train, scope="d_bn4")

h4 = tf.nn.leaky_relu(h4, alpha_lrelu)

global_max_h4 = tf.nn.max_pool(h4, [1,2,2,1], strides=[1,2,2,1], padding='SAME', name='d_h4_maxpool')

global_max_h4 = tf.layers.flatten(global_max_h4, name='d_h4_flatten')

w_h5 = tf.get_variable('d_w_h5', [kernel, kernel, h4.get_shape()[-1], df_dim*32], initializer=weight_init)

b_h5 = tf.get_variable('d_b_h5', [df_dim*32], initializer=tf.constant_initializer(0))

h5 = tf.nn.conv2d(h4, w_h5, strides=[1,2,2,1], padding='SAME', name='d_h5_conv2d') + b_h5

h5 = tf.contrib.layers.batch_norm(h5, is_training=is_train, scope="d_bn5")

h5 = tf.nn.leaky_relu(h5, alpha_lrelu)

global_max_h5 = tf.layers.flatten(h5, name='d_h5_flatten')

features = tf.concat([global_max_h3, global_max_h4, global_max_h5], -1, name='d_concat')

h6 = tf.layers.dense(features, units=1, activation=tf.identity, kernel_initializer=weight_init, name='d_h6_dense')

#logits = h6.outputs

#h6.outputs = tf.nn.sigmoid(h6.outputs)

return tf.nn.sigmoid(h6), features5. 训练网络train_net.py文件

该文件用于编写网络结构,训练网络,直接给出代码;

import tensorflow as tf

import network

import sys

import os

import numpy as np

from glob import glob

from random import shuffle

import utilities

import time

flags = tf.app.flags

flags.DEFINE_integer("epoch", 10, "Epoch to train")

flags.DEFINE_float("learning_rate", 0.001, "Learning rate of for adam")

flags.DEFINE_float("beta1", 0.9, "Momentum term of adam")

flags.DEFINE_integer("train_size", sys.maxsize, "The size of train images")

flags.DEFINE_integer("batch_size", 64, "The number of batch images")

flags.DEFINE_integer("image_size", 256, "The size of image to use (will be center cropped)")

flags.DEFINE_integer("output_size", 256, "The size of the output images to produce")

flags.DEFINE_integer("sample_size", 64, "The number of sample images")

flags.DEFINE_integer("c_dim", 3, "Dimension of image color")

flags.DEFINE_integer("z_dim", 100, "Dimensions of input niose to generator")

flags.DEFINE_integer("sample_step", 500, "The interval of generating sample")

flags.DEFINE_string("dataset", "uc_train_256_data", "The name of dataset [celebA, mnist, lsun]")

flags.DEFINE_string("checkpoint_dir", "checkpoint", "Directory name to save the checkpoints [checkpoint]")

flags.DEFINE_string("summaries_dir", "logs", "Directory name to save the summaries")

flags.DEFINE_string("sample_dir", "samples", "Directory name to save the image samples [samples]")

flags.DEFINE_boolean("is_train", True, "True for training, False for testing [False]")

flags.DEFINE_boolean("is_crop", False, "True for training, False for testing [False]")

flags.DEFINE_boolean("visualize", False, "True for visualizing, False for nothing [False]")

FLAGS = flags.FLAGS

def main(_):

if not os.path.exists(FLAGS.checkpoint_dir):

os.makedirs(FLAGS.checkpoint_dir)

if not os.path.exists(FLAGS.sample_dir):

os.makedirs(FLAGS.sample_dir)

if not os.path.exists(FLAGS.summaries_dir):

os.makedirs(FLAGS.summaries_dir)

with tf.device("/gpu:0"):

#with tf.device("/cpu:0"):

z = tf.placeholder(tf.float32, [FLAGS.batch_size, FLAGS.z_dim], name="g_input_noise")

x = tf.placeholder(tf.float32, [FLAGS.batch_size, FLAGS.output_size, FLAGS.output_size, FLAGS.c_dim], name='d_input_images')

Gz = network.generator(z)

Dx, Dfx = network.discriminator(x)

Dz, Dfz = network.discriminator(Gz, reuse=True)

d_loss_real = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=Dx, labels=tf.ones_like(Dx)))

d_loss_fake = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=Dz, labels=tf.zeros_like(Dz)))

d_loss = d_loss_real + d_loss_fake

g_loss_perceptual = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits = Dz, labels = tf.ones_like(Dz)))

g_loss_features = tf.reduce_mean(tf.nn.l2_loss(Dfx-Dfz))/(FLAGS.image_size*FLAGS.image_size)

g_loss = g_loss_perceptual + g_loss_features

tvars = tf.trainable_variables()

d_vars = [var for var in tvars if 'd_' in var.name]

g_vars = [var for var in tvars if 'g_' in var.name]

print(d_vars)

print("---------------")

print(g_vars)

with tf.variable_scope(tf.get_variable_scope(), reuse=False):

print("reuse or not: {}".format(tf.get_variable_scope().reuse))

assert tf.get_variable_scope().reuse == False, "Houston tengo un problem"

d_trainer = tf.train.AdamOptimizer(FLAGS.learning_rate, FLAGS.beta1).minimize(d_loss, var_list=d_vars)

g_trainer = tf.train.AdamOptimizer(FLAGS.learning_rate, FLAGS.beta1).minimize(g_loss, var_list=g_vars)

tf.summary.scalar("generator_loss_percptual", g_loss_perceptual)

tf.summary.scalar("generator_loss_features", g_loss_features)

tf.summary.scalar("generator_loss_total", g_loss)

tf.summary.scalar("discriminator_loss", d_loss)

tf.summary.scalar("discriminator_loss_real", d_loss_real)

tf.summary.scalar("discriminator_loss_fake", d_loss_fake)

images_for_tensorboard = network.generator(z, reuse=True)

tf.summary.image('Generated_images', images_for_tensorboard, 2)

merged = tf.summary.merge_all()

gpu_options = tf.GPUOptions(per_process_gpu_memory_fraction=0.30)

gpu_options.allow_growth = True

saver = tf.train.Saver()

with tf.Session(config=tf.ConfigProto(gpu_options=gpu_options, allow_soft_placement=True)) as sess:

print("starting session")

summary_writer = tf.summary.FileWriter(FLAGS.summaries_dir + '/train', sess.graph)

sess.run(tf.global_variables_initializer())

data_files = glob(os.path.join("./data", FLAGS.dataset, "*.jpg"))

model_dir = "%s_%s_%s" % (FLAGS.dataset, 64, FLAGS.output_size)

save_dir = os.path.join(FLAGS.checkpoint_dir, model_dir)

sample_seed = np.random.uniform(low=-1, high=1, size=(FLAGS.batch_size, FLAGS.z_dim)).astype(np.float32)

if FLAGS.is_train:

for epoch in range(FLAGS.epoch):

d_total_cost = 0.

g_total_cost = 0.

shuffle(data_files)

num_batches = min(len(data_files), FLAGS.train_size) // FLAGS.batch_size

#num_batches = 2

for batch_i in range(num_batches):

batch_files = data_files[batch_i*FLAGS.batch_size:(batch_i+1)*FLAGS.batch_size]

batch = [utilities.load_image(batch_file, FLAGS.image_size, is_crop=FLAGS.is_crop, resize_w=FLAGS.output_size) for batch_file in batch_files]

batch_x = np.array(batch).astype(np.float32)

batch_z = np.random.normal(-1, 1, size=[FLAGS.batch_size, FLAGS.z_dim]).astype(np.float32)

start_time = time.time()

# print(batch[0])

d_err, _ = sess.run([d_loss, d_trainer], feed_dict={z: batch_z, x: batch_x})

g_err, _ = sess.run([g_loss, g_trainer], feed_dict={z: batch_z, x: batch_x})

d_total_cost += d_err

g_total_cost += g_err

if batch_i % 10 == 0:

summary = sess.run(merged, feed_dict={x: batch_x, z: batch_z})

summary_writer.add_summary(summary, (epoch-1)*(num_batches/30)+(batch_i/30))

print("Epoch: [%2d/%2d] [%4d/%4d] time: %4.4f, d_loss: %.8f, g_loss: %.8f" % (

epoch, FLAGS.epoch, batch_i, num_batches, time.time() - start_time, d_err, g_err))

# update sample files based on shuffled data

sample_files = batch_files[0:FLAGS.batch_size]

sample = [utilities.get_image(sample_file, FLAGS.image_size,

is_crop=FLAGS.is_crop,

resize_w=FLAGS.output_size,

is_grayscale=0) for sample_file in sample_files]

sample_images = np.array(sample).astype(np.float32)

if np.mod(batch_i, 10) == 0:

# generate and visualize generated images

# img, errD, errG = sess.run([net_g2.outputs, d_loss, g_loss], feed_dict={z : sample_seed, real_images: sample_images})

img = sess.run(Gz, feed_dict={z: sample_seed, x: sample_images})

utilities.save_images(img, [8, 8],

'./{}/train_{:02d}_{:03d}.png'.format(FLAGS.sample_dir, epoch, batch_i))

print("Epoch:", '%04d' % (epoch+1), "d_cost= {:.9f}".format(d_total_cost/num_batches),

"g_cost=", "{:.9f}".format(g_total_cost/num_batches))

save_path = saver.save(sess, save_dir)

print("Model saved in path: %s" % save_path)

sys.stdout.flush()

sess.close()

if __name__ == '__main__':

tf.app.run()

6. 提取特征feature.py文件

该文件用于提取训练好的网络特征,直接给出代码:

import sys

import os

import numpy as np

from glob import glob

from random import shuffle

import utilities

flags = tf.app.flags

flags.DEFINE_integer("train_size", sys.maxsize, "The size of train images")

flags.DEFINE_integer("batch_size", 64, "The number of batch images")

flags.DEFINE_integer("image_size", 256, "The size of image to use (will be center cropped)")

flags.DEFINE_integer("output_size", 256, "The size of the output images to produce [64]")

flags.DEFINE_integer("sample_size", 64, "The number of sample images [64]")

flags.DEFINE_integer("features_size", 14336, "Number of features for one image")

flags.DEFINE_integer("c_dim", 3, "Dimension of image color. [3]")

flags.DEFINE_integer("sample_step", 500, "The interval of generating sample. [500]")

flags.DEFINE_string("train_dataset", "uc_train_256_data", "The name of dataset")

flags.DEFINE_string("test_dataset", "uc_test_256", "The name of dataset")

flags.DEFINE_string("checkpoint_dir", "checkpoint", "Directory name to save the checkpoints [checkpoint]")

flags.DEFINE_string("sample_dir", "samples", "Directory name to save the image samples [samples]")

flags.DEFINE_string("feature_dir", "features", "Directory name to save features")

flags.DEFINE_boolean("is_train", False, "True for training, False for testing [False]")

flags.DEFINE_boolean("is_crop", False, "True for training, False for testing [False]")

flags.DEFINE_boolean("visualize", True, "True for visualizing, False for nothing [False]")

flags.DEFINE_integer("num_labels", 21, "Number of different labels")

flags.DEFINE_string("labels_file", "style_names.txt", "File containing a list of labels")

FLAGS = flags.FLAGS

def main(_):

if not os.path.exists(FLAGS.checkpoint_dir):

print("Houston tengo un problem: No checkPoint directory found")

return 0

if not os.path.exists(FLAGS.feature_dir):

os.makedirs(FLAGS.feature_dir)

if not os.path.exists(FLAGS.sample_dir):

os.makedirs(FLAGS.sample_dir)

#with tf.device("/gpu:0"):

with tf.device("/cpu:0"):

x = tf.placeholder(tf.float32, [FLAGS.batch_size, FLAGS.output_size, FLAGS.output_size, FLAGS.c_dim], name='d_input_images')

d_netx, Dfx = network.discriminator(x, is_train=FLAGS.is_train, reuse=False)

saver = tf.train.Saver()

with tf.Session() as sess:

print("starting session")

sess.run(tf.global_variables_initializer())

model_dir = "%s_%s_%s" % (FLAGS.train_dataset, 64, FLAGS.output_size)

save_dir = os.path.join(FLAGS.checkpoint_dir, model_dir)

labels = utilities.get_labels(FLAGS.num_labels, FLAGS.labels_file)

saver.restore(sess, save_dir)

print("Model restored from file: %s" % save_dir)

#extracting features from train dataset

extract_features(x, labels, sess, Dfx)

#extracting features from test dataset

extract_features(x, labels, sess, Dfx, training=False)

sess.close()

def extract_features(x, labels, sess, Dfx, training=True):

if training:

data_path = FLAGS.train_dataset

features_path = "features_train.npy"

labels_path = "labels_train.npy"

else:

data_path = FLAGS.test_dataset

features_path = "features_test.npy"

labels_path = "labels_test.npy"

data_files = glob(os.path.join("./data", data_path, "*.jpg"))

shuffle(data_files)

num_batches = min(len(data_files), FLAGS.train_size) // FLAGS.batch_size

#num_batches =2

num_examples = num_batches*FLAGS.batch_size

y = np.zeros(num_examples, dtype=np.uint8)

for i in range(num_examples):

for j in range(len(labels)):

if labels[j] in data_files[i]:

y[i] = j

break

features = np.zeros((num_examples, FLAGS.features_size))

for batch_i in range(num_batches):

batch_files = data_files[batch_i*FLAGS.batch_size:(batch_i+1)*FLAGS.batch_size]

batch = [utilities.load_image(batch_file, FLAGS.image_size, is_crop=FLAGS.is_crop, resize_w=FLAGS.output_size) for batch_file in batch_files]

batch_x = np.array(batch).astype(np.float32)

f = sess.run(Dfx, feed_dict={x: batch_x})

begin = FLAGS.batch_size*batch_i

end = FLAGS.batch_size + begin

features[begin:end, ...] = f

print("Features Extracted, Now saving")

np.save(os.path.join(FLAGS.feature_dir, features_path), features)

np.save(os.path.join(FLAGS.feature_dir, labels_path), y)

print("Features Saved")

sys.stdout.flush()

if __name__ == '__main__':

tf.app.run()7. SVM分类文件train_svm.py文件

作者最后是用svm做了一个简单的分类,这里直接给出代码

from sklearn.metrics import accuracy_score

from sklearn import svm

import numpy as np

accuracy = []

x_train = np.load('features/features_train.npy')

y_train = np.load('features/labels_train.npy')

x_test = np.load('features/features_test.npy')

y_test = np.load('features/labels_test.npy')

print("Fitting the classifier to the training set")

C = 1000.0 # SVM regularization parameter

clf = svm.SVC(kernel='linear', C=C).fit(x_train, y_train)

print("Predicting...")

y_pred = clf.predict(x_test)

print("Accuracy: %.3f" % (accuracy_score(y_test, y_pred)))

accuracy.append(accuracy_score(y_test, y_pred))

print(accuracy)四、实验结果

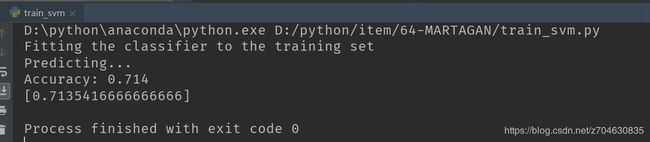

这个实验的实现过程还稍微有点复杂,首先先运行train_net.py文件,运行完毕之后运行feature.py文件,最后在运行train_svm.py文件。这个模型我是用cpu跑了,跑了一天,运行完train_svm.py文件的最终输出为:

精度为71.35%,跟论文里作者的结果差的还蛮多的,后面再看一下我的生成结果,几乎一片噪声:

就完全没有生成啥结果。。。。。

五、分析

1. 目前只有一个想法,我跑完模型没啥效果,有空我再好好检查一下自己的模型。

2. 关于the UC Merced Land Use Dataset数据集,目前用深度学习做遥感图像的用的还挺多,补充一个用caffe做的例子,参考链接:https://github.com/yangxue0827/CNN_UCMerced-LandUse_Caffe