Elasticsearch 7.1.x Study Notes

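Table of Contents

- 1. Single-Node Installation
- 2. Installing the head Plugin for ES
- 3. Elasticsearch REST Basic Operations
- Introduction to REST
- Creating an Index with CURL
- Querying Documents: GET
- DSL Queries
- MGET Queries
- Using HEAD
- Updating Documents in ES
- Deleting Documents in ES
- Bulk Operations in ES: bulk
- Version Control in ES
- 4. Elasticsearch Core Concepts
- Cluster
- Shards
- Replicas
- Recovery
- Gateway
- Discovery.zen
- Transport
- Create Index
- Mapping
- 5. Elasticsearch Java Client
- Java High Level REST Client
- Document APIs
- Index API
- Get API
- Exists API
- Update API
- Delete API
- Bulk API
- Multi-Get API
- SearchType
- Query Then Fetch
- Dfs, Query Then Fetch
- Queries (Query)
- Aggregations
- Pagination
- Multi-Index and Multi-Type Queries
- Fast Queries
- ElasticSearch Index Modules
- Components of the Index Module
- Integrating the IK Chinese Analyzer Plugin
- Custom IK Dictionary
- Hot-Reloading the IK Dictionary
- ES Cluster Installation and Deployment
- ES Cluster Planning
- ES Cluster Installation
- X-Pack Installation
- Kibana Installation
- ES Optimization
- Split-Brain Prevention Settings
- Increasing the System's Open File Limit
- Sizing JVM Memory Properly
- Locking Physical Memory
- Choosing the Number of Shards
- Choosing the Number of Replicas
- Merging Indices
- Closing Indices
- Purging Deleted Documents
- Importing Data Efficiently
- Configuring the Index _all Field
- Configuring the Index _source Field
- Keeping Versions Consistent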

Software versions

jdk-8u192-linux-x64.tar.gz

elasticsearch-7.1.0-linux-x86_64.tar.gz

1. Single-Node Installation

Download

# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.1.0-linux-x86_64.tar.gz

Extract

# tar -zxvf elasticsearch-7.1.0-linux-x86_64.tar.gz -C /opt/tools/elk/

# tar -zxvf jdk-8u192-linux-x64.tar.gz -C /opt/tools/

Configure environment variables (as root)

# vi /etc/profile

# set jdk path

export JAVA_HOME=/opt/tools/jdk1.8.0

#set es path

export ES_HOME=/opt/tools/elk/elasticsearch-7.1.0

export PATH=$PATH:$JAVA_HOME/bin:$ES_HOME/bin

Apply the configuration

# source /etc/profile

Verify

# echo $JAVA_HOME

/opt/tools/jdk1.8.0

# echo $ES_HOME

/opt/tools/elk/elasticsearch-7.1.0

Edit the configuration file $ES_HOME/config/elasticsearch.yml

network.host: 192.168.93.252 # IP address of the current host

Start

# bin/elasticsearch

# bin/elasticsearch -d (start in the background)

Errors

- Problem 1

[WARN ][o.e.b.ElasticsearchUncaughtExceptionHandler] [bigdatademo] uncaught exception in thread [main]

org.elasticsearch.bootstrap.StartupException: java.lang.RuntimeException: can not run elasticsearch as root

Cause: Elasticsearch refuses to run under the root account.

Solution: create a regular user

# useradd yskang

# passwd yskang

Changing password for user yskang.

New password:

BAD PASSWORD: it is too simplistic/systematic

BAD PASSWORD: is too simple

Retype new password:

passwd: all authentication tokens updated successfully.

Give the new user ownership:

# chown -R yskang:yskang /opt/*

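Then switch to the yskang user and start Elasticsearch again.

To give the regular user root privileges through sudo (use which to find where a command is located):

As root, edit the sudoers file with visudo: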

# visudo

Add the following lines:

## Allow root to run any commands anywhere

root ALL=(ALL) ALL

yskang ALL=(ALL) NOPASSWD: ALL


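Format: username  host-or-network=(run-as identity)  allowed commands. The (run-as identity) part may be omitted, in which case it defaults to root.

Usage: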

$ sudo service iptables status

- Problem 2

java.lang.UnsupportedOperationException: seccomp unavailable: requires kernel 3.5+ with CONFIG_SECCOMP and CONFIG_SECCOMP_FILTER compiled in at org.elasticsearch.bootstrap.SystemCallFilter

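Cause: the error output is long, but there is no need to panic. It is only a warning, and it is caused by an old Linux kernel.

Solution: (1) install a newer Linux release, or (2) ignore it, since the warning does not affect normal use.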

- Problem 3

ERROR: bootstrap checks failed

[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65535]

Cause: the maximum number of files the user may open is too low.

Solution:

As root, edit /etc/security/limits.conf and append the following:

# vi /etc/security/limits.conf

* soft nofile 65536

* hard nofile 262144

* soft nproc 32000

* hard nproc 32000

- Problem 4

[2]: max number of threads [1024] for user [yskang] is too low, increase to at least [4096]

Cause: the maximum number of threads the user may create is too low.

Solution: as root, edit /etc/security/limits.d/90-nproc.conf

# vi /etc/security/limits.d/90-nproc.conf

Find the line

* soft nproc 1024

and change it to:

* soft nproc 4096

- Problem 5

[3]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

Cause: the maximum number of virtual memory areas (vm.max_map_count) is too low.

Solution: as root, edit /etc/sysctl.conf and append the following:

vm.max_map_count=655360

Save the file, run the following command to apply the setting, then restart Elasticsearch:

# sysctl -p

- Problem 6

[4]: system call filters failed to install; check the logs and fix your configuration or disable system call filters at your own risk

Cause: CentOS 6 does not support seccomp, but ES runs this check by default (bootstrap.system_call_filter is true). The check fails, and the failure prevents ES from starting.

Solution: edit elasticsearch.yml and add the following:

$ vi elasticsearch.yml

bootstrap.memory_lock: false # do not lock memory (the ES node's memory may be swapped)

bootstrap.system_call_filter: false # disable the system call filter

- Problem 7

[5]: the default discovery settings are unsuitable for production use; at least one of [discovery.seed_hosts, discovery.seed_providers, cluster.initial_master_nodes] must be configured

Cause: no discovery settings are configured, and the defaults are not suitable for production. At least one of the three settings listed must be set.

Solution: edit elasticsearch.yml

$ vi elasticsearch.yml

Change #cluster.initial_master_nodes: ["node-1", "node-2"]

by removing the leading # and setting it to:

cluster.initial_master_nodes: ["bigdatademo"]

Note: bigdatademo is the hostname of the current node, which is also the default node name. Remember to save the file.

After startup, verify that the service is running:

$ curl http://192.168.93.252:9200

{

"name" : "bigdatademo",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "_na_",

"version" : {

"number" : "7.1.0",

"build_flavor" : "default",

"build_type" : "tar",

"build_hash" : "606a173",

"build_date" : "2019-05-16T00:43:15.323135Z",

"build_snapshot" : false,

"lucene_version" : "8.0.0",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

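2. Installing the head Plugin for ES

Install Node.js and npm

# yum -y install nodejs npm

Running yum install -y nodejs directly reports that the nodejs package cannot be found.

Install the NodeSource repository first, then run yum install -y nodejs:

$ curl --silent --location https://rpm.nodesource.com/setup_10.x | sudo bash -

Then:

$ sudo yum -y install nodejs

npm is installed along with it.

Check the versions: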

$ node -v

v10.16.0

$ npm -v

6.9.0

Install git

$ sudo yum -y install git

Download head (GitHub no longer serves the unauthenticated git:// protocol, so clone over https):

$ git clone https://github.com/mobz/elasticsearch-head.git

$ cd elasticsearch-head

$ npm install

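Error: npm install fails with Error: CERT_UNTRUSTED

This is an SSL verification problem. Disabling strict SSL verification with the following command fixes it:

npm config set strict-ssl false

Configure the head plugin

Edit Gruntfile.js and add the hostname: '*' option: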

$ vi Gruntfile.js

```javascript
connect: {
    server: {
        options: {
            port: 9100,
            base: '.',
            keepalive: true,
            hostname: '*'
        }
    }
}
```

Edit head/_site/app.js

Change the ES address that head connects to by replacing localhost with this machine's IP address:

$ vi app.js

this.base_uri = this.config.base_uri || this.prefs.get("app-base_uri") || "http://192.168.93.252:9200";

ES configuration

Edit elasticsearch.yml to allow cross-origin (CORS) requests. ES must be restarted for this to take effect:

$ vi config/elasticsearch.yml

http.cors.enabled: true

http.cors.allow-origin: "*"

Start the head plugin

$ cd elasticsearch-head/node_modules/grunt/

$ bin/grunt server &

Check the listening ports (head listens on 9100)

$ netstat -ntlp

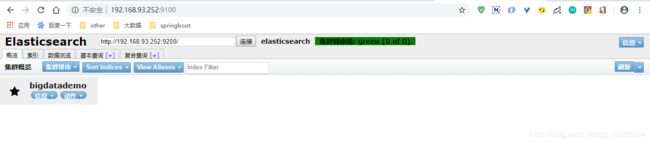

View the ES cluster status in head:

http://192.168.93.252:9100

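3. Elasticsearch REST Basic Operations

Introduction to REST

Definition of REST

REST (Representational State Transfer) is a software architectural style proposed by Dr. Roy Fielding in his doctoral dissertation in 2000.

It is an approach to designing and developing networked applications that reduces development complexity and improves scalability.

REST is a set of architectural constraints and principles. An application or design that satisfies them is called RESTful.

The most important REST principle for web applications is that client-server interactions are stateless between requests. Every request from the client must contain all the information needed to understand it. If the server restarts at any point between requests, the client is not notified. Stateless requests can also be answered by any available server, which suits environments such as cloud computing. Clients can cache data to improve performance.

On the server side, application state and functionality are divided into resources. Each resource has a unique address, given by a URI (Uniform Resource Identifier). All resources share a uniform interface for transferring state between client and server, using standard HTTP methods such as GET, PUT, POST, and DELETE.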

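REST resources

| Resource | GET | PUT | POST | DELETE |
|---|---|---|---|---|
| Collection URL, e.g. http://example.com/products/ | List the URLs of the members | Replace the entire collection with the given one | Create or append a new resource in this collection | Delete the entire collection |
| Element URL, e.g. http://example.com/products/1234 | Retrieve the details of the specified resource | Replace or create the specified resource | Create or append a new element under this resource | Delete the specified element |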

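Basic REST operations

| Method | Purpose |
|---|---|
| GET | Get the current state of an object |
| PUT | Change the state of an object |
| POST | Create an object |
| DELETE | Delete an object |
| HEAD | Get header information |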

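Common built-in ES REST endpoints

| URL | Description |
|---|---|
| /index/_search | Search the data in the specified index |
| /_aliases | Get or manage index aliases |
| /index/ | View the details of the specified index |
| /index/type/ | Create or operate on a type |
| /index/_mapping | Create or manage the mapping |
| /index/_settings | Create or manage the settings |
| /index/_open | Open the specified index |
| /index/_close | Close the specified index |
| /index/_refresh | Refresh the index (makes newly added content visible to search; does not guarantee the data has been written to disk) |
| /index/_flush | Flush the index (triggers a Lucene commit) |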

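The curl command

Simply put, curl is a tool for accessing URLs from the command line.

curl is an open-source file transfer tool that works on the command line using URL syntax. It makes common GET and POST requests easy.

Using curl

-X specifies the HTTP request method: GET, POST, PUT, DELETE

-d specifies the data (request body) to send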

Creating an Index with CURL

Example:

To create an index named test:

$ curl -XPUT 'http://192.168.93.252:9200/test/'

Use PUT here. The create index API is documented as PUT /<index>.

The following response means the index was created successfully:

{"acknowledged":true,"shards_acknowledged":true,"index":"test"}

Create a document

$ curl -XPOST http://192.168.93.252:9200/test/user/1 -d'{"name":"jack","age":26}'

{"error":"Content-Type header [application/x-www-form-urlencoded] is not supported","status":406}

Since ES 6.0, a Content-Type header is required for requests that send a body, otherwise the request is rejected as shown above. Older versions did not require it.

$ curl -H "Content-Type: application/json" -XPOST http://192.168.93.252:9200/test/user/1 -d'{"name":"jack","age":26}'

{"_index":"test","_type":"user","_id":"1","_version":1,"result":"created","_shards":{"total":2,"successful":1,"failed":0},"_seq_no":0,"_primary_term":1}

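Differences between PUT and POST

PUT is idempotent, while POST is not. PUT is therefore the better fit for updates, and POST for creating new resources.

PUT and DELETE are idempotent: no matter how many times the operation is performed, the result is the same.

POST is not idempotent, so repeated POSTs can be a problem. Sending the same POST request several times creates several resources.

Both POST and PUT can create resources. POST acts on a collection resource (/articles), while PUT acts on a specific resource (/articles/123). For example, when resources are identified by auto-increment database keys, only the server can determine the identifier of a new resource, so POST must be used.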
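The idempotency difference can be shown with a minimal Java sketch. InMemoryCollection is a hypothetical class, not an Elasticsearch API. put stores a document under a client-chosen ID, so repeating it still leaves one document. post generates a fresh ID on every call, so repeating it keeps adding documents.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.UUID;

/**
 * Hypothetical in-memory stand-in for a collection resource such as /test/user.
 * It only illustrates HTTP method semantics; it is not how Elasticsearch stores data.
 */
class InMemoryCollection {
    private final Map<String, String> docs = new LinkedHashMap<>();

    /** PUT /collection/{id}: the client chooses the ID, so repeating the call is idempotent. */
    String put(String id, String body) {
        docs.put(id, body);
        return id;
    }

    /** POST /collection/: the server generates a new ID on every call, so it is not idempotent. */
    String post(String body) {
        String id = UUID.randomUUID().toString();
        docs.put(id, body);
        return id;
    }

    int size() {
        return docs.size();
    }
}
```

Calling put("1", ...) twice leaves size() at 1. Calling post(...) twice adds two more entries.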

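Notes on creating indices

Index names must be all lowercase, must not start with an underscore, and must not contain commas.

If no document ID is specified, ES generates a random ID automatically. In that case the POST method must be used:

$ curl -H "Content-Type: application/json" -XPOST http://192.168.93.252:9200/test/user/ -d'{"name":"john","age":18}'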

Two ways to create brand-new content

(1) Use an auto-generated ID (POST)

$ curl -H "Content-Type: application/json" -XPOST http://192.168.93.252:9200/test/user/ -d'{"name":"john","age":18}'

(2) Add a parameter to the URL (op_type=create, or the _create endpoint). The request fails if a document with that ID already exists.

$ curl -H "Content-Type: application/json" -XPUT http://192.168.93.252:9200/test/user/2?op_type=create -d'{"name":"lucy","age":15}'

$ curl -H "Content-Type: application/json" -XPUT http://192.168.93.252:9200/test/user/3/_create -d'{"name":"alan","age":58}'

Querying Documents: GET

(1) Get a document by ID

$ curl -XGET http://192.168.93.252:9200/test/user/1

{"_index":"test","_type":"user","_id":"1","_version":1,"_seq_no":0,"_primary_term":1,"found":true,"_source":{"name":"jack","age":26}}

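(2) Add the pretty parameter to any query string to get human-readable, pretty-printed JSON from ES.

(1) Retrieve only part of a document, when you only need specific fields: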

$ curl -XGET 'http://192.168.93.252:9200/test/user/1?_source=name&pretty'

{

"_index" : "test",

"_type" : "user",

"_id" : "1",

"_version" : 1,

"_seq_no" : 0,

"_primary_term" : 1,

"found" : true,

"_source" : {

"name" : "jack"

}

}

(2) Get all documents of the specified type in the specified index

$ curl -XGET http://192.168.93.252:9200/test/user/_search?pretty

{

"took" : 80,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 4,

"relation" : "eq"

},

"max_score" : 1.0,

"hits" : [

{

"_index" : "test",

"_type" : "user",

"_id" : "1",

"_score" : 1.0,

"_source" : {

"name" : "jack",

"age" : 26

}

},

{

"_index" : "test",

"_type" : "user",

"_id" : "zeQEIWsBWJbm70w3S4EC",

"_score" : 1.0,

"_source" : {

"name" : "john",

"age" : 18

}

},

{

"_index" : "test",

"_type" : "user",

"_id" : "2",

"_score" : 1.0,

"_source" : {

"name" : "lucy",

"age" : 15

}

},

{

"_index" : "test",

"_type" : "user",

"_id" : "3",

"_score" : 1.0,

"_source" : {

"name" : "alan",

"age" : 58

}

}

]

}

}

(3) Query by condition (the q parameter)

$ curl -XGET 'http://192.168.93.252:9200/test/user/_search?q=name:john&pretty=true'

or

$ curl -XGET 'http://192.168.93.252:9200/test/user/_search?q=name:john&pretty'

{

"took" : 5,

"timed_out" : false,

"_shards" : {

"total" : 1,

"successful" : 1,

"skipped" : 0,

"failed" : 0

},

"hits" : {

"total" : {

"value" : 1,

"relation" : "eq"

},

"max_score" : 1.2039728,

"hits" : [

{

"_index" : "test",

"_type" : "user",

"_id" : "zeQEIWsBWJbm70w3S4EC",

"_score" : 1.2039728,

"_source" : {

"name" : "john",

"age" : 18

}

}

]

}

}
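The colon in name:john works unencoded, as shown above. Spaces and other reserved characters in the q value must be URL-encoded, and curl does not encode the URL for you. Here is a minimal Java sketch using java.net.URLEncoder. UriSearchUrl is a hypothetical helper that builds the same kind of URL.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

/** Hypothetical helper that builds a URI-search URL such as .../test/user/_search?q=name:john&pretty */
class UriSearchUrl {
    static String build(String baseUrl, String index, String type, String query, boolean pretty) {
        String encoded;
        try {
            // ':' becomes %3A and a space becomes '+'
            encoded = URLEncoder.encode(query, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // Every JVM is required to support UTF-8, so this should not happen
            throw new IllegalStateException(e);
        }
        StringBuilder url = new StringBuilder(baseUrl)
                .append('/').append(index)
                .append('/').append(type)
                .append("/_search?q=").append(encoded);
        if (pretty) {
            url.append("&pretty");
        }
        return url.toString();
    }
}
```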

DSL Queries

DSL: Domain Specific Language. The query is sent as a JSON request body.

Add a new document:

$ curl -H "Content-Type: application/json" -XPUT http://192.168.93.252:9200/test/user/4/_create -d'{"name":"zhangsan","age":18}'

{"_index":"test","_type":"user","_id":"4","_version":1,"result":"created","_shards":{"total":2,"successful":1,"failed":0},"_seq_no":4,"_primary_term":1}

$ curl -H "Content-Type: application/json" -XGET http://192.168.93.252:9200/test/user/_search -d'{"query":{"match":{"name":"zhangsan"}}}'

{"took":2,"timed_out":false,"_shards":{"total":1,"successful":1,"skipped":0,"failed":0},"hits":{"total":{"value":1,"relation":"eq"},"max_score":1.3862944,"hits":[{"_index":"test","_type":"user","_id":"4","_score":1.3862944,"_source":{"name":"zhangsan","age":18}}]}}

MGET Queries

Use the mget API to retrieve multiple documents

First create a new index named test2:

$ curl -XPUT 'http://192.168.93.252:9200/test2/'

$ curl -H "Content-Type: application/json" -XPOST http://192.168.93.252:9200/test2/user/1 -d'{"name":"marry","age":16}'

$ curl -H "Content-Type: application/json" -XGET http://192.168.93.252:9200/_mget?pretty -d'{"docs":[{"_index":"test","_type":"user","_id":2,"_source":"name"},{"_index":"test2","_type":"user","_id":1}]}'

{

"docs" : [

{

"_index" : "test",

"_type" : "user",

"_id" : "2",

"_version" : 1,

"_seq_no" : 2,

"_primary_term" : 1,

"found" : true,

"_source" : {

"name" : "lucy"

}

},

{

"_index" : "test2",

"_type" : "user",

"_id" : "1",

"_version" : 1,

"_seq_no" : 0,

"_primary_term" : 1,

"found" : true,

"_source" : {

"name" : "marry",

"age" : 16

}

}

]

}

If the documents you need are in the same _index, or the same _index and _type, you can specify a default /_index or /_index/_type in the URL:

$ curl -H "Content-Type: application/json" -XGET http://192.168.93.252:9200/test/user/_mget?pretty -d'{"docs":[{"_id":1},{"_id":2}]}'

{

"docs" : [

{

"_index" : "test",

"_type" : "user",

"_id" : "2",

"_version" : 1,

"_seq_no" : 2,

"_primary_term" : 1,

"found" : true,

"_source" : {

"name" : "lucy",

"age" : 15

}

},

{

"_index" : "test",

"_type" : "user",

"_id" : "1",

"_version" : 1,

"_seq_no" : 0,

"_primary_term" : 1,

"found" : true,

"_source" : {

"name" : "jack",

"age" : 26

}

}

]

}

If all documents share the same _index and _type, you can simply pass an array of ids in the request body:

$ curl -H "Content-Type: application/json" -XGET http://192.168.93.252:9200/test/user/_mget?pretty -d'{"ids":["1","2"]}'

{

"docs" : [

{

"_index" : "test",

"_type" : "user",

"_id" : "1",

"_version" : 1,

"_seq_no" : 0,

"_primary_term" : 1,

"found" : true,

"_source" : {

"name" : "jack",

"age" : 26

}

},

{

"_index" : "test",

"_type" : "user",

"_id" : "2",

"_version" : 1,

"_seq_no" : 2,

"_primary_term" : 1,

"found" : true,

"_source" : {

"name" : "lucy",

"age" : 15

}

}

]

}

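Using HEAD

If you only want to check whether a document exists, use HEAD instead of GET. Only the HTTP headers are returned: 200 if the document exists, 404 if it does not.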

$ curl -i -XHEAD http://192.168.93.252:9200/test/user/1

HTTP/1.1 200 OK

Warning: 299 Elasticsearch-7.1.0-606a173 "[types removal] Specifying types in document get requests is deprecated, use the /{index}/_doc/{id} endpoint instead."

content-type: application/json; charset=UTF-8

content-length: 133

$ curl -i -XHEAD http://192.168.93.252:9200/test/user/5

HTTP/1.1 404 Not Found

Warning: 299 Elasticsearch-7.1.0-606a173 "[types removal] Specifying types in document get requests is deprecated, use the /{index}/_doc/{id} endpoint instead."

content-type: application/json; charset=UTF-8

content-length: 56

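Updating Documents in ES

ES can update a document with PUT or POST; this is a full replacement. If a document with the specified ID already exists, the request replaces it.

Note: during an update, ES first marks the old document as deleted and then adds the new one. The old document does not disappear immediately, but you can no longer access it. ES cleans up documents marked as deleted in the background as you keep adding data.

Partial update: add new fields or update existing ones (this requires POST to the _update endpoint):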

$ curl -H "Content-Type: application/json" -XPOST http://192.168.93.252:9200/test/user/1/_update -d'{"doc":{"name":"baby","age":18}}'

{"_index":"test","_type":"user","_id":"1","_version":2,"result":"updated","_shards":{"total":2,"successful":1,"failed":0},"_seq_no":5,"_primary_term":1}

$ curl -XGET http://192.168.93.252:9200/test/user/1?pretty

{

"_index" : "test",

"_type" : "user",

"_id" : "1",

"_version" : 2,

"_seq_no" : 5,

"_primary_term" : 1,

"found" : true,

"_source" : {

"name" : "baby",

"age" : 18

}

}
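A partial update merges the fields under "doc" into the stored _source instead of replacing the whole document. The Java sketch below models that merge in memory. PartialUpdate is a hypothetical class, not Elasticsearch code. Top-level values are overwritten or added, and nested objects are merged recursively.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical in-memory model of merging a partial "doc" into an existing _source. */
class PartialUpdate {
    /**
     * Returns a new map with the fields of {@code partial} merged into {@code source}:
     * scalar values and arrays are overwritten, new fields are added, and
     * nested objects (maps) are merged recursively. {@code source} is not modified.
     */
    @SuppressWarnings("unchecked")
    static Map<String, Object> merge(Map<String, Object> source, Map<String, Object> partial) {
        Map<String, Object> result = new LinkedHashMap<>(source);
        for (Map.Entry<String, Object> entry : partial.entrySet()) {
            Object current = result.get(entry.getKey());
            Object incoming = entry.getValue();
            if (current instanceof Map && incoming instanceof Map) {
                result.put(entry.getKey(),
                        merge((Map<String, Object>) current, (Map<String, Object>) incoming));
            } else {
                result.put(entry.getKey(), incoming);
            }
        }
        return result;
    }
}
```

Merging {"name":"baby","age":18} into {"name":"jack","age":26} gives the _source shown above. A field that is not in the stored document, such as city, would simply be added.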

Deleting Documents in ES

ES uses DELETE to delete a document:

$ curl -XDELETE http://192.168.93.252:9200/test/user/1

{"_index":"test","_type":"user","_id":"1","_version":3,"result":"deleted","_shards":{"total":2,"successful":1,"failed":0},"_seq_no":6,"_primary_term":1}

Note: if the document exists, result is deleted and _version is incremented by 1.

$ curl -XDELETE http://192.168.93.252:9200/test/user/1

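{"_index":"test","_type":"user","_id":"1","_version":4,"result":"not_found","_shards":{"total":2,"successful":1,"failed":0},"_seq_no":11,"_primary_term":1}

Note: if the document does not exist, result is not_found, but _version is still incremented by 1. This is part of ES's internal bookkeeping. It ensures that the order of different operations across multiple nodes is recorded correctly.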

$ curl -XGET http://192.168.93.252:9200/test/user/1

{"_index":"test","_type":"user","_id":"1","found":false}

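Note: deletion in ES does not take effect immediately either; the document is only marked as deleted. ES cleans up the deleted content in the background later, as you add more data.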

Bulk Operations in ES: bulk

The bulk API lets you execute multiple requests in a single call.

Format:

action: index/create/update/delete

metadata: _index, _type, _id

request body: _source (not needed for delete)

{action:{metadata}}

{request body}

{action:{metadata}}

{request body}

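Difference between create and index

If the document already exists, create fails with an error saying that the document already exists. index succeeds and overwrites the document.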
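The hypothetical Java class BulkBodyBuilder below is a sketch of the format above. Each action produces one metadata line, followed by a source line except for delete. Every line ends with a newline, including the last one, because the bulk API requires the body to end with a newline. The sketch assumes that index, type and ID values need no JSON escaping and that each JSON argument is already a single-line JSON object.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical builder for a _bulk request body (newline-delimited JSON).
 * Assumes index/type/id need no JSON escaping and every JSON argument is a
 * single-line, already-serialized JSON object.
 */
class BulkBodyBuilder {
    private final List<String> lines = new ArrayList<>();

    private static String metadata(String action, String index, String type, String id) {
        return "{\"" + action + "\":{\"_index\":\"" + index + "\",\"_type\":\"" + type
                + "\",\"_id\":\"" + id + "\"}}";
    }

    /** index: create or overwrite; the next line is the full _source. */
    BulkBodyBuilder index(String index, String type, String id, String sourceJson) {
        lines.add(metadata("index", index, type, id));
        lines.add(sourceJson);
        return this;
    }

    /** create: like index, but the action fails if the document already exists. */
    BulkBodyBuilder create(String index, String type, String id, String sourceJson) {
        lines.add(metadata("create", index, type, id));
        lines.add(sourceJson);
        return this;
    }

    /** update: the next line wraps the partial document in {"doc": ...}. */
    BulkBodyBuilder update(String index, String type, String id, String partialJson) {
        lines.add(metadata("update", index, type, id));
        lines.add("{\"doc\":" + partialJson + "}");
        return this;
    }

    /** delete: metadata line only, no request body line. */
    BulkBodyBuilder delete(String index, String type, String id) {
        lines.add(metadata("delete", index, type, id));
        return this;
    }

    /** Joins the lines; every line, including the last, ends with a newline. */
    String build() {
        StringBuilder body = new StringBuilder();
        for (String line : lines) {
            body.append(line).append('\n');
        }
        return body.toString();
    }
}
```

Chaining index("test", "user", "6", "{\"name\":\"mayun\",\"age\":51}") and update("test", "user", "6", "{\"age\":52}") produces the same four lines as the requests file used in the next example.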

Using a file

Create a file named requests:

$ vi requests

{"index":{"_index":"test","_type":"user","_id":"6"}}

{"name":"mayun","age":51}

{"update":{"_index":"test","_type":"user","_id":"6"}}

{"doc":{"age":52}}

Run the bulk request:

$ curl -H "Content-Type: application/json" -XPOST http://192.168.93.252:9200/_bulk --data-binary @requests

{"took":31,"errors":false,"items":[{"index":{"_index":"test","_type":"user","_id":"6","_version":1,"result":"created","_shards":{"total":2,"successful":1,"failed":0},"_seq_no":12,"_primary_term":1,"status":201}},{"update":{"_index":"test","_type":"user","_id":"6","_version":2,"result":"updated","_shards":{"total":2,"successful":1,"failed":0},"_seq_no":13,"_primary_term":1,"status":200}}]}

$ curl -XGET http://192.168.93.252:9200/test/user/6?pretty

{

"_index" : "test",

"_type" : "user",

"_id" : "6",

"_version" : 2,

"_seq_no" : 13,

"_primary_term" : 1,

"found" : true,

"_source" : {

"name" : "mayun",

"age" : 52

}

}

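A bulk request can also declare a default /_index or /_index/_type in the URL.

How much data can a single bulk request handle?

(1) bulk loads the data to be processed into memory, so the amount of data per request is limited.

(2) There is no fixed optimal amount. It depends on your hardware, the size and complexity of your documents, and your indexing and search load.

(3) A common recommendation is 1,000-5,000 documents per request; with large documents, use fewer. A request size of 5-15 MB is recommended. By default a request cannot exceed 100 MB. You can change this limit in the ES configuration file with http.max_content_length: 100mb

http.max_content_length: The max content of an HTTP request. Defaults to 100mb.

(4) Official documentation: https://www.elastic.co/guide/en/elasticsearch/reference/current/modules-http.html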
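Following this sizing advice, a client usually splits a large import into several bulk requests. The hypothetical BulkBatcher below is a minimal Java sketch of that. It groups already-serialized bulk entries (each one action's metadata plus source lines) into batches limited both by entry count and by UTF-8 byte size. The limits you pass in, for example 1,000 entries and 10 MB, are tuning assumptions, not Elasticsearch defaults.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical helper: splits serialized bulk entries into batches that respect
 * both a maximum entry count and a maximum UTF-8 byte size.
 */
class BulkBatcher {
    static List<List<String>> batch(List<String> entries, int maxEntries, long maxBytes) {
        List<List<String>> batches = new ArrayList<>();
        List<String> current = new ArrayList<>();
        long currentBytes = 0;
        for (String entry : entries) {
            long size = entry.getBytes(StandardCharsets.UTF_8).length;
            boolean full = current.size() >= maxEntries || currentBytes + size > maxBytes;
            // Close the current batch before it would exceed either limit.
            // An oversized single entry still ends up alone in its own batch.
            if (full && !current.isEmpty()) {
                batches.add(current);
                current = new ArrayList<>();
                currentBytes = 0;
            }
            current.add(entry);
            currentBytes += size;
        }
        if (!current.isEmpty()) {
            batches.add(current);
        }
        return batches;
    }
}
```

Each resulting batch would then be joined into one NDJSON body and sent to _bulk.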

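Version Control in ES

(1) Traditional relational databases use pessimistic concurrency control (PCC)

A row is locked before it is modified, so that only the thread that read the data can modify that row.

(2) ES uses optimistic concurrency control (OCC)

ES does not block access to data. However, if the underlying data changes between our read and our write, the update fails. The application then decides how to handle the conflict: it can re-read the latest data and retry the update, or report the conflict back to the user.

(3) How ES implements version control (using the ES internal version number)

First get the document to be modified and read its version number (_version):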

$ curl -XGET http://192.168.93.252:9200/test/user/2

{"_index":"test","_type":"user","_id":"2","_version":1,"_seq_no":2,"_primary_term":1,"found":true,"_source":{"name":"lucy","age":15}}

Pass the version number along when performing the update:

$ curl -H "Content-Type: application/json" -XPOST http://192.168.93.252:9200/test/user/2/_update?version=1 -d'{"doc":{"age":30}}'

{"_index":"test","_type":"user","_id":"2","_version":2,"result":"updated","_shards":{"total":2,"successful":1,"failed":0},"_seq_no":14,"_primary_term":1}

$ curl -H "Content-Type: application/json" -XPUT http://192.168.93.252:9200/test/user/2?version=2 -d'{"name":"joy","age":20}'

If the version number passed in does not match the document's current version, the update fails with a version conflict.
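The check above amounts to a compare-and-set on a per-document version. Here is a minimal in-memory Java sketch of that idea. VersionedStore is hypothetical and is not Elasticsearch code. Note also that the Elasticsearch 7.x reference documentation describes the if_seq_no and if_primary_term parameters as the mechanism for optimistic concurrency control, so check how ?version=N behaves on your version.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Simplified model of optimistic concurrency control with per-document versions.
 * Hypothetical class for illustration; not Elasticsearch's implementation.
 */
class VersionedStore {
    private static final class Doc {
        final String body;
        final long version;

        Doc(String body, long version) {
            this.body = body;
            this.version = version;
        }
    }

    private final Map<String, Doc> docs = new HashMap<>();

    /** Current version of a document, or 0 if it does not exist. */
    synchronized long version(String id) {
        Doc doc = docs.get(id);
        return doc == null ? 0 : doc.version;
    }

    /** Unconditional write: creates the document with version 1 or bumps its version. */
    synchronized long index(String id, String body) {
        long next = version(id) + 1;
        docs.put(id, new Doc(body, next));
        return next;
    }

    /** Conditional write: succeeds only if the caller saw the current version. */
    synchronized long indexIfVersion(String id, String body, long expectedVersion) {
        long current = version(id);
        if (current != expectedVersion) {
            throw new IllegalStateException(
                    "version conflict: current=" + current + ", expected=" + expectedVersion);
        }
        return index(id, body);
    }
}
```

A second writer that still holds version 1 after the first update gets a conflict instead of silently overwriting. It then has to re-read and retry.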

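4. Elasticsearch Core Concepts

Cluster

(1) Represents a cluster. A cluster has multiple nodes, one of which is the master node. The master is elected, and the master/non-master distinction only matters inside the cluster. One of ES's core ideas is decentralization.

(2) The master node manages the cluster state, including the state of shards and replicas, as well as the discovery and removal of nodes.

(3) Note: the master node does not process create, read, update, or delete requests for data; it only maintains the cluster state.

Check the cluster health: http://192.168.93.252:9200/_cluster/health?pretty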

{

cluster_name: "elasticsearch",

status: "yellow",

timed_out: false,

number_of_nodes: 1,

number_of_data_nodes: 1,

active_primary_shards: 2,

active_shards: 2,

relocating_shards: 0,

initializing_shards: 0,

unassigned_shards: 2,

delayed_unassigned_shards: 0,

number_of_pending_tasks: 0,

number_of_in_flight_fetch: 0,

task_max_waiting_in_queue_millis: 0,

active_shards_percent_as_number: 50

}
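The status color in this output follows from shard allocation:

- red: at least one primary shard is unassigned.
- yellow: all primaries are assigned, but at least one replica is not.
- green: everything is assigned.

On this single-node cluster the replicas cannot be placed, hence yellow and 50% active shards. Below is a small Java sketch of that rule. ClusterHealthSketch is hypothetical and ignores initializing and relocating shards.

```java
/** Hypothetical sketch of how the health color follows from shard allocation. */
class ClusterHealthSketch {
    enum Status { GREEN, YELLOW, RED }

    static Status status(int unassignedPrimaries, int unassignedReplicas) {
        if (unassignedPrimaries > 0) {
            return Status.RED;     // some data is not available at all
        }
        if (unassignedReplicas > 0) {
            return Status.YELLOW;  // all data available, but redundancy is missing
        }
        return Status.GREEN;
    }

    /** Share of all shard copies that are active, as in active_shards_percent_as_number. */
    static double activeShardsPercent(int activeShards, int totalShards) {
        return totalShards == 0 ? 100.0 : 100.0 * activeShards / totalShards;
    }
}
```

With 2 active primaries and 2 unassigned replicas, status(0, 2) is YELLOW and activeShardsPercent(2, 4) is 50.0, which matches the output above.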

Shards

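(1) Represents index shards. ES can split a complete index into multiple shards, partitioning a large index horizontally and distributing the pieces across different nodes. This enables distributed search and improves performance and throughput.

(2) The number of primary shards is set when the index is created and cannot simply be changed afterwards; only the _shrink and _split APIs mentioned below can change it.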

curl -H "Content-Type:application/json" -XPUT 'http://192.168.93.252:9200/test3/' -d'{"settings":{"number_of_shards":3}}'

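Before 7.0, an index had 5 primary shards by default. In this version (7.1.0), an index has 1 primary shard and 1 replica by default.

Each shard can hold at most 2,147,483,519 documents.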
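One reason the primary shard count is fixed at creation time is the routing formula, roughly shard = hash(_routing) % number_of_primary_shards, where _routing defaults to the document's _id. Changing the shard count would send existing IDs to different shards, so documents could no longer be found where the formula points. The Java sketch below is simplified and uses a hypothetical ShardRouting class. String.hashCode() stands in for the Murmur3 hash that Elasticsearch actually uses.

```java
/** Hypothetical, simplified version of shard = hash(_routing) % number_of_primary_shards. */
class ShardRouting {
    static int shardFor(String routing, int numberOfPrimaryShards) {
        // Assumption: String.hashCode() replaces the Murmur3 hash Elasticsearch uses.
        // floorMod keeps the result in [0, numberOfPrimaryShards) even for negative hashes.
        return Math.floorMod(routing.hashCode(), numberOfPrimaryShards);
    }
}
```

For example, with String.hashCode() the ID "3" maps to shard 0 out of 3 but to shard 3 out of 4.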

Official documentation: https://www.elastic.co/guide/en/elasticsearch/reference/current/getting-started-concepts.html

To summarize, each index can be split into multiple shards. An index can also be replicated zero (meaning no replicas) or more times. Once replicated, each index will have primary shards (the original shards that were replicated from) and replica shards (the copies of the primary shards).


The number of shards and replicas can be defined per index at the time the index is created. After the index is created, you may also change the number of replicas dynamically anytime. You can change the number of shards for an existing index using the _shrink and _split APIs, however this is not a trivial task and pre-planning for the correct number of shards is the optimal approach.


By default, each index in Elasticsearch is allocated one primary shard and one replica which means that if you have at least two nodes in your cluster, your index will have one primary shard and another replica shard (one complete replica) for a total of two shards per index.


Each Elasticsearch shard is a Lucene index. There is a maximum number of documents you can have in a single Lucene index. As of LUCENE-5843, the limit is 2,147,483,519 (= Integer.MAX_VALUE - 128) documents. You can monitor shard sizes using the _cat/shards API.


Replicas

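Represents index replicas: ES can configure replicas for index shards.

Replicas serve two purposes:

First, they improve fault tolerance. When a shard on some node is corrupted or lost, it can be recovered from a replica.

Second, they improve query throughput. ES automatically load-balances search requests across the shard copies.

The number of replicas can be set when the index is created and changed at any time afterwards.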

curl -H "Content-Type:application/json" -XPUT 'http://192.168.93.252:9200/test3/' -d'{"settings":{"number_of_replicas":3}}'

By default each shard has 1 replica -> index.number_of_replicas: 1

Note: a primary shard and its replica are never allocated on the same node.
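Because copies of the same shard never share a node, replicas stay unassigned when there are too few nodes. That is why the single-node cluster health shown earlier is yellow. Below is a minimal Java sketch using a hypothetical ReplicaAllocation class. It models only this one rule, ignores other allocation constraints such as disk watermarks, and assumes at least one data node.

```java
/** Hypothetical sketch: only the "no two copies of a shard on one node" rule is modeled. */
class ReplicaAllocation {
    /**
     * Number of shard copies that cannot be assigned, assuming dataNodes >= 1
     * so that every primary can be placed.
     */
    static int unassignedCopies(int primaries, int replicas, int dataNodes) {
        int copiesPerShard = 1 + replicas;                            // primary + replicas
        int placeablePerShard = Math.min(copiesPerShard, dataNodes);  // at most one copy per node
        return primaries * (copiesPerShard - placeablePerShard);
    }
}
```

Take two indices with 1 primary and 1 replica each on one node. Each leaves 1 copy unassigned, 2 in total, which matches unassigned_shards: 2 in the health output. The test3 example (3 shards, 3 replicas) would leave 9 copies unassigned on a single node.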

Recovery

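Represents data recovery, also called data redistribution. When a node joins or leaves, ES reallocates index shards according to machine load. A failed node that restarts also goes through data recovery.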

Gateway

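Represents how ES persists indices. By default ES keeps the index in memory first and persists it to disk when memory fills up. When the ES cluster is shut down and restarted, it reads the index data back from the gateway.

ES supports several gateway types:

Local file system (default)

Distributed file systems

Hadoop HDFS and Amazon S3 cloud storage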

Discovery.zen

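Represents ES's automatic node discovery mechanism. ES is a P2P-based system: it first looks for existing nodes by broadcast, then uses multicast for communication between nodes. It also supports point-to-point interaction.

How do nodes on different network segments form an ES cluster?

Disable automatic (multicast) discovery:

discovery.zen.ping.multicast.enabled: false

Set the list of master nodes that a newly started node can discover:

discovery.zen.ping.unicast.hosts: ["192.168.93.252","192.168.93.251","192.168.93.250"]

Note: these settings come from older ES versions. Multicast discovery is not available in 7.x, and in 7.x discovery.zen.ping.unicast.hosts has been renamed discovery.seed_hosts.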

Transport

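Represents how ES nodes interact with each other and with clients. Internally, TCP is used by default. HTTP (JSON), thrift, servlet, memcached, zeroMQ and other transport protocols are also supported through plugins.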

Create Index

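Official documentation: https://www.elastic.co/guide/en/elasticsearch/reference/current/indices-create-index.html

The Create Index API is used to manually create an index in Elasticsearch. All documents in Elasticsearch are stored inside one index or another.

The most basic command is:

PUT twitter

This creates an index named twitter with all default settings.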

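Index naming restrictions

(1) Lowercase only

(2) Cannot include \, /, *, ?, ", <, >, |, the space character, the comma, or #

(3) Indices created before 7.0 could contain a colon (:), but that is deprecated and not supported in 7.0+

(4) Cannot start with -, _, or +

(5) Cannot be . or ..

(6) Cannot be longer than 255 bytes (note that this is bytes, so multi-byte characters count towards the 255 limit faster)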
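These rules can be checked on the client side before sending a create request. The hypothetical Java helper below is a sketch that encodes rules (1) to (6) and also rejects an empty name. Elasticsearch's own validation remains authoritative.

```java
import java.nio.charset.StandardCharsets;
import java.util.Locale;

/** Hypothetical client-side check of the index naming rules listed above. */
class IndexNameRules {
    // Characters from rule (2) plus the colon from rule (3)
    private static final String FORBIDDEN = "\\/*?\"<>| ,#:";

    static boolean isValid(String name) {
        if (name.isEmpty() || name.equals(".") || name.equals("..")) {  // rule (5), plus empty names
            return false;
        }
        if (!name.equals(name.toLowerCase(Locale.ROOT))) {  // rule (1)
            return false;
        }
        char first = name.charAt(0);
        if (first == '-' || first == '_' || first == '+') {  // rule (4)
            return false;
        }
        for (int i = 0; i < name.length(); i++) {  // rules (2) and (3)
            if (FORBIDDEN.indexOf(name.charAt(i)) >= 0) {
                return false;
            }
        }
        return name.getBytes(StandardCharsets.UTF_8).length <= 255;  // rule (6), counted in bytes
    }
}
```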

Index Settings

Each index can have its own settings, for example:

PUT twitter

{

"settings" : {

"index" : {

"number_of_shards" : 3,

"number_of_replicas" : 2

}

}

}

Number of shards: number_of_shards defaults to 1

Number of replicas: number_of_replicas defaults to 1

This can be simplified to:

PUT twitter

{

"settings" : {

"number_of_shards" : 3,

"number_of_replicas" : 2

}

}

Note: you do not need to explicitly specify the index section inside the settings section.

View an index's settings:

curl -XGET http://192.168.93.252:9200/test/_settings?pretty

For a nonexistent index (create it):

curl -H "Content-Type:application/json" -XPUT 'http://192.168.93.252:9200/test4/' -d'{"settings":{"number_of_shards":3,"number_of_replicas":2}}'

For an existing index (modify it):

curl -H "Content-Type:application/json" -XPUT 'http://192.168.93.252:9200/test4/_settings' -d'{"index":{"number_of_replicas":2}}'

Mapping

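Official documentation: https://www.elastic.co/guide/en/elasticsearch/reference/current/mapping.html

Mapping is the process of defining how a document, and the fields it contains, are stored and indexed. For instance, use mappings to define:

(1) which string fields should be treated as full-text fields;

(2) which fields contain numbers, dates, or geolocations;

(3) the format of date values;

(4) custom rules to control the mapping for dynamically added fields.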

Mapping Type

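Each index has one mapping type, which determines how its documents are indexed.

A mapping type has:

(1) Meta-fields

Meta-fields are used to customize how a document's associated metadata is treated. Examples of meta-fields include the document's _index, _type, _id, and _source fields.

(2) Fields or properties

A mapping type contains a list of fields or properties pertinent to the document.

Each field has a data type, which can be:

(1) a simple type like text, keyword, date, long, double, boolean, or ip;

(2) a type which supports the hierarchical nature of JSON, such as object or nested;

(3) or a specialised type like geo_point, geo_shape, or completion.

Mappings can be specified when creating an index, as follows: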

PUT my_index

{

"mappings": {

"properties": {

"title": { "type": "text" },

"name": { "type": "text" },

"age": { "type": "integer" },

"created": {

"type": "date",

"format": "strict_date_optional_time||epoch_millis"

}

}

}

}

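Explanation:

Create an index called my_index.

Specify the fields or properties in the mapping.

Specify that the title field contains text values.

Specify that the name field contains text values.

Specify that the age field contains integer values.

Specify that the created field contains date values in two possible formats.

View an index's mapping (in 7.x, without a type name in the path):

curl -XGET http://192.168.93.252:9200/test/_mapping?pretty

For a nonexistent index (create it). In 7.x the mapping is no longer nested under a type name such as user; a typed mapping is rejected unless include_type_name=true is passed:

curl -H "Content-Type:application/json" -XPUT 'http://192.168.93.252:9200/test5/' -d'{"mappings": {"properties": { "name": { "type": "text"},"age": { "type": "integer" }}}}'

For an existing index (modify it):

curl -H "Content-Type:application/json" -XPUT 'http://192.168.93.252:9200/test5/_mapping' -d'{"properties": { "name": { "type": "text"},"age": { "type": "integer" }}}'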

5. Elasticsearch Java Client

Java High Level REST Client

Java API 7.1 official documentation:

https://www.elastic.co/guide/en/elasticsearch/client/java-api/current/index.html

Add the Maven dependencies:

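```xml
<!-- junit5 -->
<dependency>
    <groupId>org.junit.jupiter</groupId>
    <artifactId>junit-jupiter-api</artifactId>
    <version>5.4.2</version>
    <scope>test</scope>
</dependency>
<!-- the JUnit 5 engine is needed to actually run the tests -->
<dependency>
    <groupId>org.junit.jupiter</groupId>
    <artifactId>junit-jupiter-engine</artifactId>
    <version>5.4.2</version>
    <scope>test</scope>
</dependency>
<!-- elasticsearch-rest-high-level-client -->
<dependency>
    <groupId>org.elasticsearch.client</groupId>
    <artifactId>elasticsearch-rest-high-level-client</artifactId>
    <version>7.1.0</version>
</dependency>
```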

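Ports

When started, ES listens on two ports: 9300 and 9200.

What is the difference between ES ports 9200 and 9300?

9300 is the TCP transport port. Nodes in an ES cluster communicate with each other over TCP on port 9300, and the Java TransportClient also talks to the cluster over TCP on this port.

9200 is the HTTP port. It is mainly used for external communication: clients access ES through the RESTful API on this port.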

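Official documentation: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/7.1/java-rest-high.html

The Java High Level REST Client is built on top of the low-level client. It adds more APIs that take request objects as arguments and return response objects. The client itself handles encoding requests and decoding responses.

Each API can be called synchronously or asynchronously. Synchronous methods return a response object. Asynchronous methods have names ending in async and take a listener argument. The listener is notified, on the thread pool managed by the low-level client, once a response or an error is received.

The Java High Level REST Client depends on the Elasticsearch core project. It accepts the same request arguments as the TransportClient and returns the same response objects.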

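Official documentation: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/7.1/java-rest-high-compatibility.html

Compatibility

The Java High Level REST Client requires Java 1.8 and depends on the Elasticsearch core project. The client version is the same as the Elasticsearch version the client was developed for. It accepts the same request arguments as the TransportClient and returns the same response objects.

The High Level Client is guaranteed to be able to communicate with any Elasticsearch node running on the same major version and a greater or equal minor version. It does not need to be on the same minor version as the nodes it talks to, because it is forward compatible: it supports communicating with later Elasticsearch versions than the one it was developed for.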
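The compatibility rule can be written as a tiny check. ClientCompatibility is a hypothetical Java helper that assumes plain major.minor[.patch] version strings. It encodes only the stated rule: same major version, and a node minor version greater than or equal to the client's.

```java
/** Hypothetical check of the stated rule: same major, node minor >= client minor. */
class ClientCompatibility {
    static boolean isCompatible(String clientVersion, String nodeVersion) {
        int[] client = parse(clientVersion);
        int[] node = parse(nodeVersion);
        return client[0] == node[0] && node[1] >= client[1];
    }

    /** Parses "major.minor[.patch]" into {major, minor}; the patch level is ignored. */
    private static int[] parse(String version) {
        String[] parts = version.split("\\.");
        return new int[] {Integer.parseInt(parts[0]), Integer.parseInt(parts[1])};
    }
}
```

By this rule, a 7.1.0 client may talk to 7.1.x or 7.5.x nodes, but not to 7.0.x or 6.8.x nodes.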

Initialization

Official documentation: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/7.1/java-rest-high-getting-started-initialization.html

Connecting to an ES cluster

A RestHighLevelClient instance is built from a low-level client builder, as follows:

```java
RestHighLevelClient client = new RestHighLevelClient(
        RestClient.builder(
                new HttpHost("localhost", 9200, "http"),
                new HttpHost("localhost", 9201, "http")));
```

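The high-level client internally creates, from the provided builder, the low-level client that performs the requests, and manages its lifecycle.

The low-level client maintains a connection pool and starts some threads. When you no longer need the high-level client, close it; it then closes the internal low-level client to free those resources. Call the close() method: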

client.close();

Test

```java
/**
 * Test: connect to the Elasticsearch cluster with RestHighLevelClient
 * @throws IOException
 */
@Test
public void test() throws IOException {
    RestHighLevelClient client = new RestHighLevelClient(
            RestClient.builder(
                    new HttpHost("192.168.93.252", 9200, "http")));
    // Check whether document 5 exists in index test
    GetRequest getRequest = new GetRequest("test", "5");
    boolean exists = client.exists(getRequest, RequestOptions.DEFAULT);
    if (exists) {
        System.out.println("Document exists");
    } else {
        System.out.println("Document does not exist");
    }
    client.close();
}
```

Document APIs

Index API

Official documentation: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/7.1/java-rest-high-document-index.html

Index API: the document source can be a JSON string, a Map, an XContentBuilder, or Object key-value pairs

```java
import java.io.IOException;
import java.util.Date;
import java.util.HashMap;
import java.util.Map;

import org.apache.http.HttpHost;
import org.elasticsearch.action.index.IndexRequest;
import org.elasticsearch.action.index.IndexResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.common.xcontent.XContentBuilder;
import org.elasticsearch.common.xcontent.XContentFactory;
import org.elasticsearch.common.xcontent.XContentType;
import org.junit.jupiter.api.BeforeEach;
import org.junit.jupiter.api.Test;

public class ESDocumentAPIsTest {

    public RestHighLevelClient client;

    /**
     * Connect to the Elasticsearch cluster with RestHighLevelClient before each test
     */
    @BeforeEach
    public void connect() {
        client = new RestHighLevelClient(
                RestClient.builder(
                        new HttpHost("192.168.93.252", 9200, "http")));
    }

    /**
     * Index API: document source as a JSON string
     * @throws IOException
     */
    @Test
    public void testIndexforJson() throws IOException {
        IndexRequest request = new IndexRequest("posts"); // index name
        request.id("1"); // document ID
        String jsonString = "{" +
                "\"user\":\"kimchy\"," +
                "\"postDate\":\"2019-06-10\"," +
                "\"message\":\"trying out Elasticsearch\"" +
                "}";
        request.source(jsonString, XContentType.JSON); // document source provided as a String
        // Execute
        IndexResponse indexResponse = client.index(request, RequestOptions.DEFAULT);
        System.out.println(indexResponse.getId());
        client.close();
    }

    /**
     * Index API: document source as a Map
     * @throws IOException
     */
    @Test
    public void testIndexforMap() throws IOException {
        Map<String, Object> jsonMap = new HashMap<>();
        jsonMap.put("user", "kimchy");
        jsonMap.put("postDate", new Date());
        jsonMap.put("message", "trying out Elasticsearch");
        // Document source provided as a Map, automatically converted to JSON
        IndexRequest request = new IndexRequest("posts").id("2").source(jsonMap);
        // Execute
        IndexResponse indexResponse = client.index(request, RequestOptions.DEFAULT);
        System.out.println(indexResponse.getId());
        client.close();
    }

    /**
     * Index API: document source as an XContentBuilder
     * @throws IOException
     */
    @Test
    public void testIndexforXContentBuilder() throws IOException {
        XContentBuilder builder = XContentFactory.jsonBuilder();
        builder.startObject();
        {
            builder.field("user", "kimchy");
            builder.timeField("postDate", new Date());
            builder.field("message", "trying out Elasticsearch");
        }
        builder.endObject();
        // Document source provided as an XContentBuilder; Elasticsearch's built-in helper generates the JSON
        IndexRequest request = new IndexRequest("posts").id("3").source(builder);
        // Execute
        IndexResponse indexResponse = client.index(request, RequestOptions.DEFAULT);
        System.out.println(indexResponse.getId());
        client.close();
    }

    /**
     * Index API: document source as Object key-value pairs
     * @throws IOException
     */
    @Test
    public void testIndexforObject() throws IOException {
        // Document source provided as Object key-value pairs, converted to JSON
        IndexRequest request = new IndexRequest("posts")
                .id("4")
                .source("user", "kimchy",
                        "postDate", new Date(),
                        "message", "trying out Elasticsearch");
        // Execute
        IndexResponse indexResponse = client.index(request, RequestOptions.DEFAULT);
        System.out.println(indexResponse.getId());
        client.close();
    }
}
```

Get API

Official documentation: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/7.1/java-rest-high-document-get.html

Get API:

```java
/**
 * Get API
 * @throws IOException
 */
@Test
public void testGetforSynchronous() throws IOException {
    GetRequest getRequest = new GetRequest("posts", "1");
    // Execute synchronously
    GetResponse getResponse = client.get(getRequest, RequestOptions.DEFAULT);
    System.out.println(getResponse.getSource());
    // Output: {postDate=2019-06-10, message=trying out Elasticsearch, user=kimchy}
    client.close();
}
```

Exists API

Official documentation: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/7.1/java-rest-high-document-exists.html

Exists API:

```java
/**
 * Exists API
 * @throws IOException
 */
@Test
public void testExists() throws IOException {
    GetRequest getRequest = new GetRequest("posts", "5");
    // Fetch neither _source nor stored fields: only existence matters
    getRequest.fetchSourceContext(new FetchSourceContext(false));
    getRequest.storedFields("_none_");
    // Execute synchronously
    boolean exists = client.exists(getRequest, RequestOptions.DEFAULT);
    if (exists) {
        System.out.println("Exists");
    } else {
        System.out.println("Does not exist");
    }
    client.close();
}
```

Update API

Official documentation: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/7.1/java-rest-high-document-update.html

Update API: the partial document can be a JSON string, a Map, an XContentBuilder, or Object key-value pairs

/**

* Update API json

* @throws IOException

*/

@Test

public void testUpdateforJson() throws IOException {

UpdateRequest request = new UpdateRequest("posts", "1");

String jsonString = "{" +

"\"updated\":\"2019-06-09\"," +

"\"reason\":\"daily update\"" +

"}";

request.doc(jsonString, XContentType.JSON);

//同步执行

UpdateResponse updateResponse = client.update(request, RequestOptions.DEFAULT);

System.out.println(updateResponse.getResult());

client.close();

}

/**

* Update API map

* @throws IOException

*/

@Test

public void testUpdateforMap() throws IOException {

Map<String, Object> jsonMap = new HashMap<>();

jsonMap.put("updated", new Date());

jsonMap.put("reason", "daily update");

UpdateRequest request = new UpdateRequest("posts", "1").doc(jsonMap);

//同步执行

UpdateResponse updateResponse = client.update( request, RequestOptions.DEFAULT);

System.out.println(updateResponse.getResult());

client.close();

}

/**

* Update API XContentBuilder

* @throws IOException

*/

@Test

public void testUpdateforXContentBuilder() throws IOException {

XContentBuilder builder = XContentFactory.jsonBuilder();

builder.startObject();

{

builder.timeField("updated", new Date());

builder.field("reason", "daily update");

}

builder.endObject();

UpdateRequest request = new UpdateRequest("posts", "1").doc(builder);

// synchronous execution

UpdateResponse updateResponse = client.update( request, RequestOptions.DEFAULT);

System.out.println(updateResponse.getResult());

client.close();

}

/**

* Update API Object

* @throws IOException

*/

@Test

public void testUpdateforObject() throws IOException {

UpdateRequest request = new UpdateRequest("posts", "1")

.doc("updated", new Date(),

"reason", "daily update");

// synchronous execution

UpdateResponse updateResponse = client.update(request, RequestOptions.DEFAULT);

System.out.println(updateResponse.getResult());

client.close();

}

Delete API

Official docs: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/7.1/java-rest-high-document-delete.html

Deleting a document (Delete API)

/**

* Delete API

* @throws IOException

*/

@Test

public void testDelete() throws IOException {

DeleteRequest request = new DeleteRequest("posts","1");

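// synchronous execution

DeleteResponse deleteResponse = client.delete(

request, RequestOptions.DEFAULT);

// DELETED if the document existed, NOT_FOUND otherwise

System.out.println(deleteResponse.getResult());

client.close();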

}

Bulk API

Official docs: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/7.1/java-rest-high-document-bulk.html

Batch operations (Bulk API)

/**

* Bulk API: batch operations

* @throws IOException

*/

@Test

public void testBulk() throws IOException {

BulkRequest request = new BulkRequest();

request.add(new IndexRequest("posts").id("5")

.source(XContentType.JSON,"field", "foo"));

request.add(new IndexRequest("posts").id("6")

.source(XContentType.JSON,"field", "bar"));

request.add(new IndexRequest("posts").id("7")

.source(XContentType.JSON,"field", "baz"));

// Different operation types can be mixed in the same BulkRequest

/*

request.add(new DeleteRequest("posts", "3"));

request.add(new UpdateRequest("posts", "2")

.doc(XContentType.JSON,"other", "test"));

request.add(new IndexRequest("posts").id("4")

.source(XContentType.JSON,"field", "baz"));

*/

// synchronous execution

BulkResponse bulkResponse = client.bulk(request, RequestOptions.DEFAULT);

for (BulkItemResponse bulkItemResponse : bulkResponse) {

if (bulkItemResponse.isFailed()) {

BulkItemResponse.Failure failure =

bulkItemResponse.getFailure();

System.out.println(failure.getMessage());

}

}

client.close();

}

Multi-Get API

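Official docs: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/7.1/java-rest-high-document-multi-get.html

Fetching multiple documents (Multi-Get API)

The multiGet API executes several get requests in parallel within a single HTTP request

/**

* Multi-Get API: fetch several documents in one request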

* @throws IOException

*/

@Test

public void testMultiGet() throws IOException {

MultiGetRequest request = new MultiGetRequest();

request.add(new MultiGetRequest.Item("test","2"));

request.add(new MultiGetRequest.Item("test", "3"));

request.add(new MultiGetRequest.Item("test", "4"));

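// synchronous execution

MultiGetResponse responses = client.mget(request, RequestOptions.DEFAULT);

for (MultiGetItemResponse itemResponse : responses) {

// a failed item (e.g. missing index) has no GetResponse, so check for failure first

if (itemResponse.isFailed()) {

Exception e = itemResponse.getFailure().getFailure();

System.out.println(e.getMessage());

continue;

}

GetResponse response = itemResponse.getResponse();

if (response.isExists()) {

String json = response.getSourceAsString();

//System.out.println(json);

Map<String, Object> sourceAsMap = response.getSourceAsMap();

System.out.println(sourceAsMap);

}

}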

client.close();

}

SearchType

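Official docs: https://www.elastic.co/guide/en/elasticsearch/reference/current/search-request-search-type.html

Problems with distributed search in ES

(1) Number of documents returned

Suppose the data is spread over 5 shards (the default before ES 7.0; 7.x creates 1 primary shard by default). ES sends the request to all 5 shards. If every shard returned its own top 10 documents, 5*10=50 documents would come back, far more than the user asked for.

Solution:

Step 1: collect the matching document IDs and scores from every shard, rank them together, and keep the top 10.

Step 2: fetch those 10 documents by ID from the shards that hold them.

(2) Ranking of the documents returned

Each shard picks its top 10 using scores computed only from its own data. The term frequency, document frequency and other inputs to the score are all shard-local. ES then ranks the merged results by these per-shard scores, so documents are compared on inconsistent scoring bases and the final ranking can be inaccurate.

Solution: make every shard score with the same (global) statistics.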

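SearchType 1: query and fetch

("fetch" means retrieving the documents.)

How it works:

The query is sent to all shards of the index. Each shard returns its matching documents together with their scores.

Pros:

This is the fastest mode. Unlike the others, it goes to each shard only once.

Cons:

The shards together may return up to n times the requested size (n = number of shards).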

Query Then Fetch

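SearchType 2: query then fetch

How it works:

Step 1 (query): send the query to all shards. Each shard returns only document IDs (not the documents themselves) plus ranking information, i.e. each document's score.

Step 2 (fetch): the coordinating node re-sorts all returned hits by score and keeps the top size. It then fetches only those documents from the shards that hold them.

Pros:

Returns exactly the requested number of documents.

Cons:

Ranking can still be inaccurate because scores are shard-local, and performance is moderate (two round trips).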
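The two-step merge described above can be sketched in plain Java. This is a simplified model, not Elasticsearch code. `ScoredId` and `mergeTopIds` are hypothetical names, and each inner list stands for one shard's local top (from + size) hits from the query phase. The fetch phase is represented only by returning the winning IDs.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

// Simplified model of the query-then-fetch merge (hypothetical names, not ES classes).
class QueryThenFetchSketch {

    /** What a shard returns in the query phase: a document ID and its score, no _source. */
    static final class ScoredId {
        final String id;
        final double score;

        ScoredId(String id, double score) {
            this.id = id;
            this.score = score;
        }
    }

    /**
     * Coordinating node: merge every shard's local top hits, sort by score (descending),
     * and keep only the global window [from, from + size). Only these IDs would then be
     * fetched from their shards in the fetch phase.
     */
    static List<String> mergeTopIds(List<List<ScoredId>> perShardTopHits, int from, int size) {
        List<ScoredId> all = new ArrayList<>();
        for (List<ScoredId> shardHits : perShardTopHits) {
            all.addAll(shardHits);
        }
        all.sort(Comparator.comparingDouble((ScoredId h) -> h.score).reversed());
        return all.stream()
                .skip(from)
                .limit(size)
                .map(h -> h.id)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<List<ScoredId>> shards = Arrays.asList(
                Arrays.asList(new ScoredId("a", 3.0), new ScoredId("b", 1.0)),
                Arrays.asList(new ScoredId("c", 2.5), new ScoredId("d", 0.5)),
                Arrays.asList(new ScoredId("e", 2.0)));
        // 5 candidate hits arrive at the coordinator, but only size = 3 IDs are fetched
        System.out.println(mergeTopIds(shards, 0, 3)); // [a, c, e]
    }
}
```

Only IDs and scores travel in the first round trip, which is why the number of documents finally fetched equals size. The scores being merged are still shard-local, which is the ranking problem the DFS variants address.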

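SearchType 3: DFS, query and fetch

Compared with type 1, this adds a DFS step that lets ES control scoring and ranking more precisely.

How it works:

Step 1: send a request to all shards first and gather their term frequencies, document frequencies and other scoring statistics into one global set.

Step 2: then run the remaining steps (query and fetch) using those global statistics.

Pros:

Accurate ranking.

Cons:

Moderate search performance, and the number of documents returned is still wrong: up to N × (number of shards).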

Dfs, Query Then Fetch

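SearchType 4: DFS, query then fetch

Compared with type 2, this adds the DFS step.

How it works:

Step 1: send a request to all shards first and gather their term frequencies, document frequencies and other scoring statistics into one global set.

Step 2: then run the query then fetch steps using those global statistics.

Pros:

Both the number of documents returned and the ranking are accurate.

Cons:

Slowest of the four modes. "Slowest" is only relative to the other three and is usually still acceptable. Consider it when query accuracy matters more than raw query speed.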
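To see why shard-local statistics skew scores, here is a small sketch of the IDF part of Lucene's BM25 score, the default similarity in ES 7.x. It computes the IDF once per shard, and once from the statistics summed across shards, which is what the DFS phase makes possible. The shard sizes and document frequencies are made-up numbers, and `DfsIdfSketch` is a hypothetical helper, not ES code. With these numbers, a match on shard A gets an IDF of about 2.0 and a match on shard B about 0.26, although the global value is about 0.79.

```java
// Illustration of shard-local vs. global IDF (made-up statistics, not ES code).
class DfsIdfSketch {

    /** IDF term of Lucene's BM25: ln(1 + (N - n + 0.5) / (n + 0.5)). */
    static double idf(long docCount, long docFreq) {
        return Math.log(1 + (docCount - docFreq + 0.5) / (docFreq + 0.5));
    }

    /** What the DFS phase enables: sum the per-shard statistics first, then compute one IDF. */
    static double globalIdf(long[] shardDocCounts, long[] shardDocFreqs) {
        long docCount = 0;
        long docFreq = 0;
        for (int i = 0; i < shardDocCounts.length; i++) {
            docCount += shardDocCounts[i];
            docFreq += shardDocFreqs[i];
        }
        return idf(docCount, docFreq);
    }

    public static void main(String[] args) {
        // Two shards of 10 documents each; the term is rare on shard A and common on shard B.
        double idfA = idf(10, 1);
        double idfB = idf(10, 8);
        double idfGlobal = globalIdf(new long[] {10, 10}, new long[] {1, 8});
        System.out.printf("shard A: %.3f, shard B: %.3f, global (DFS): %.3f%n", idfA, idfB, idfGlobal);
    }
}
```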

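Summary:

(1) Performance: query_and_fetch is the fastest and dfs_query_then_fetch the slowest.

(2) Accuracy: the DFS variants score more accurately than the non-DFS ones.

(3) Newer versions (including 6.x and 7.x) keep only types 2 and 4, i.e. query_then_fetch (the default) and dfs_query_then_fetch.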

SearchType example

Official docs: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/7.1/java-rest-high-search.html

@Test

public void testSearch() throws IOException {

SearchRequest searchRequest = new SearchRequest();

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

sourceBuilder.query(QueryBuilders.termQuery("user", "kimchy"));

searchRequest.indices("posts");

searchRequest.source(sourceBuilder);

searchRequest.searchType(SearchType.DFS_QUERY_THEN_FETCH);

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

SearchHits hits = searchResponse.getHits();

System.out.println(hits.getTotalHits());

client.close();

}

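Query

Paging: from/size

Sorting: sort

Filtering: filter (postFilter in the code below)

Score explanation: explain (returns how each hit's score was computed; it does not change the order of the hits)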

Test data

curl -XPUT 'http://192.168.93.252:9200/test6/'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test6/user/1 -d'{"name":"tom","age":18,"info":"tom"}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test6/user/2 -d'{"name":"jack","age":29,"info":"jack"}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test6/user/3 -d'{"name":"jessica","age":18,"info":"jessica"}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test6/user/4 -d'{"name":"dave","age":19,"info":"dave"}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test6/user/5 -d'{"name":"lilei","age":18,"info":"lilei"}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test6/user/6 -d'{"name":"lili","age":18,"info":"lili"}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test6/user/7 -d'{"name":"tom","age":29,"info":"tom"}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test6/user/8 -d'{"name":"tom1","age":16,"info":"tom1"}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test6/user/9 -d'{"name":"tom2","age":38,"info":"tom2"}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test6/user/10 -d'{"name":"tom3","age":28,"info":"tom3"}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test6/user/11 -d'{"name":"tom4","age":35,"info":"tom4"}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test6/user/12 -d'{"name":"tom5","age":24,"info":"tom5"}'

Test code

@Test

public void testQuery() throws IOException {

SearchRequest searchRequest = new SearchRequest();

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

sourceBuilder

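//.query(QueryBuilders.matchQuery("info", "marry and john"))

//.query(QueryBuilders.matchAllQuery())

//.query(QueryBuilders.multiMatchQuery("john", "name","info"))//match across multiple fields

//.query(QueryBuilders.queryStringQuery("name:tom*"))//wildcard match using query_string syntax

.query(QueryBuilders.termQuery("name","tom"))//exact term match (the query term is not analyzed)

.from(0)//paging: offset of the first hit

.size(2)//paging: number of hits per page

.sort("age", SortOrder.ASC)//sort by age, ascending

.postFilter(QueryBuilders.rangeQuery("age").from(20).to(40))//keep only hits with 20 <= age <= 40

.explain(true)//include a score explanation for each hit (does not change the order)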

;

searchRequest.indices("test6");

searchRequest.source(sourceBuilder);

searchRequest.searchType(SearchType.DFS_QUERY_THEN_FETCH);

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

SearchHits hits = searchResponse.getHits();

SearchHit[] hitsArray = hits.getHits();

for (SearchHit searchHit : hitsArray) {

System.out.println(searchHit.getSourceAsString());

}

client.close();

}

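Aggregations

Group documents by a field and count them

Group documents by a field and compute statistics over other fields

Aggregation example 1

Count the students of each age

| Name | Age |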

|---|---|

| tom | 18 |

| jack | 29 |

| jessica | 18 |

| dave | 19 |

| lilei | 18 |

| lili | 29 |
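To cross-check what the by_age terms aggregation computes, here is an in-memory equivalent over the six rows of the table. Buckets are ordered by document count, the terms aggregation's default order. It is an illustration only (`AgeBuckets` is a hypothetical helper). The test6 index loaded earlier holds 12 documents, so the real output of test1 will differ.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory equivalent of a terms aggregation on "age" (illustration only, not ES code).
class AgeBuckets {

    /** Counts documents per age and returns "key @ doc_count" lines, largest bucket first. */
    static List<String> bucketLines(int[] ages) {
        Map<Integer, Long> counts = new TreeMap<>(); // key-ascending
        for (int age : ages) {
            counts.merge(age, 1L, Long::sum);
        }
        List<Map.Entry<Integer, Long>> entries = new ArrayList<>(counts.entrySet());
        // doc_count descending; the sort is stable, so equal counts stay key-ascending
        entries.sort((a, b) -> Long.compare(b.getValue(), a.getValue()));
        List<String> lines = new ArrayList<>();
        for (Map.Entry<Integer, Long> e : entries) {
            lines.add(e.getKey() + " @ " + e.getValue());
        }
        return lines;
    }

    public static void main(String[] args) {
        int[] ages = {18, 29, 18, 19, 18, 29}; // the six rows of the table above
        bucketLines(ages).forEach(System.out::println);
        // 18 @ 3
        // 29 @ 2
        // 19 @ 1
    }
}
```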

Test code

@Test

public void test1() throws IOException {

SearchRequest searchRequest = new SearchRequest();

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

// terms aggregation named by_age: group documents by the age field

TermsAggregationBuilder aggregationBuilder = AggregationBuilders.terms("by_age").field("age");

sourceBuilder

.query(QueryBuilders.matchAllQuery())

.aggregation(aggregationBuilder);

searchRequest.indices("test6");// target index

searchRequest.source(sourceBuilder);

searchRequest.searchType(SearchType.DFS_QUERY_THEN_FETCH);

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// read the buckets of the by_age aggregation

Terms terms = searchResponse.getAggregations().get("by_age");

for (Terms.Bucket tmp : terms.getBuckets()) {

Object key = tmp.getKey();

long docCount = tmp.getDocCount();

System.out.println(key+" @ "+docCount);

}

client.close();

}

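Aggregation example 2

Total score of each student

| Name | Subject | Score |

|---|---|---|

| tom | Chinese | 59 |

| tom | Math | 89 |

| tom | English | 90 |

| jack | Chinese | 78 |

| jack | Math | 85 |

| jack | English | 80 |

| jessica | Chinese | 97 |

| jessica | Math | 68 |

| jessica | English | 85 |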

Test data

curl -XPUT 'http://192.168.93.252:9200/test7/'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test7/class/1 -d'{"name":"tom","type":"chinese","score":59}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test7/class/2 -d'{"name":"tom","type":"math","score":89}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test7/class/3 -d'{"name":"tom","type":"english","score":90}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test7/class/4 -d'{"name":"jack","type":"chinese","score":78}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test7/class/5 -d'{"name":"jack","type":"math","score":85}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test7/class/6 -d'{"name":"jack","type":"english","score":80}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test7/class/7 -d'{"name":"jessica","type":"chinese","score":97}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test7/class/8 -d'{"name":"jessica","type":"math","score":68}'

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test7/class/9 -d'{"name":"jessica","type":"english","score":85}'
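The expected output of test2 for the nine documents above can be computed with a few lines of plain Java. This mirrors a terms aggregation on name.keyword with a nested sum on score. It is an illustration, not ES code (`ScoreSums` is a hypothetical helper). ES prints the sums as doubles (e.g. 238.0) and orders buckets by document count, which is 3 for every student here.

```java
import java.util.Map;
import java.util.TreeMap;

// In-memory equivalent of terms(name.keyword) + sum(score) (illustration only).
class ScoreSums {

    static Map<String, Integer> sumByName(String[] names, int[] scores) {
        if (names.length != scores.length) {
            throw new IllegalArgumentException("names and scores must have the same length");
        }
        Map<String, Integer> sums = new TreeMap<>();
        for (int i = 0; i < names.length; i++) {
            sums.merge(names[i], scores[i], Integer::sum); // one bucket per name
        }
        return sums;
    }

    public static void main(String[] args) {
        String[] names = {"tom", "tom", "tom", "jack", "jack", "jack", "jessica", "jessica", "jessica"};
        int[] scores = {59, 89, 90, 78, 85, 80, 97, 68, 85};
        System.out.println(sumByName(names, scores)); // {jack=243, jessica=250, tom=238}
    }
}
```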

Test code

@Test

public void test2() throws IOException {

SearchRequest searchRequest = new SearchRequest();

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

// terms aggregation on name.keyword: one bucket per student name

TermsAggregationBuilder nameAggregation = AggregationBuilders.terms("by_name").field("name.keyword");

SumAggregationBuilder scoreAggregation = AggregationBuilders.sum("by_score").field("score");

// nest the sum of score inside each name bucket

nameAggregation.subAggregation(scoreAggregation);

sourceBuilder

.query(QueryBuilders.matchAllQuery())

.aggregation(nameAggregation);

searchRequest.indices("test7");// target index

searchRequest.source(sourceBuilder);

searchRequest.searchType(SearchType.DFS_QUERY_THEN_FETCH);

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// read each name bucket and its by_score sum

Terms terms = searchResponse.getAggregations().get("by_name");

for (Terms.Bucket tmp : terms.getBuckets()) {

Object key = tmp.getKey();

Sum sum = tmp.getAggregations().get("by_score");

System.out.println(key+" : "+sum.getValue());

}

client.close();

}

Error (raised when the terms aggregation is built on the text field name instead of name.keyword)

ElasticsearchStatusException[Elasticsearch exception [type=search_phase_execution_exception, reason=all shards failed]];

nested: ElasticsearchException[Elasticsearch exception [type=illegal_argument_exception, reason=Fielddata is disabled on text fields by default.

Set fielddata=true on [name] in order to load fielddata in memory by uninverting the inverted index.

Note that this can however use significant memory. Alternatively use a keyword field instead.]];

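Cause

Fielddata is disabled on text fields by default.

Fielddata can consume a lot of heap space, especially when loading high-cardinality text fields. Once fielddata has been loaded into the heap, it stays there for the lifetime of the segment. Loading fielddata is also expensive and can cause latency spikes for users. That is why fielddata is disabled by default.

Official reference: https://www.elastic.co/guide/en/elasticsearch/reference/current/fielddata.html

Solution

Aggregate on a keyword field instead (recommended). Alternatively, set fielddata=true on [name] so that fielddata is loaded into memory by uninverting the inverted index; note that this can use a lot of memory. With the default dynamic mapping, a string field such as name already gets a name.keyword sub-field, which is why test2 above aggregates on name.keyword.

For example, map name with a keyword sub-field: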

curl -H "Content-Type:application/json" -XPOST 'http://192.168.93.252:9200/test7/class/_mapping' -d'{"properties":{"name":{"type":"text","fields":{"keyword":{"type":"keyword"}}}}}'

Or enable fielddata on the text field:

curl -H "Content-Type:application/json" -XPOST http://192.168.93.252:9200/test7/class/_mapping -d'{"properties": {"name": { "type": "text","fielddata": true}}}'

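Paging

SQL pages with limit m,n (m: offset of the first row, n: number of rows to return).

ES uses two parameters, from and size:

from: offset of the first result, default 0

size: number of results to return, default 10

For example, with 5 results per page, pages 1 to 3 are requested as:

GET /_search?size=5

GET /_search?size=5&from=5

GET /_search?size=5&from=10

Note:

Avoid requesting too many results at once or very deep pages, because this puts heavy load on the cluster. Results must be sorted before they are returned. The request goes to several shards, and each shard produces its own sorted list of its top from + size hits. The coordinating node then merges and re-sorts all of them to guarantee a correct final result.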
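The paging arithmetic and its cost can be sketched as follows. `DeepPagingCost` is a hypothetical helper, and page numbers start at 1. The cost model only counts how many (ID, score) entries the coordinating node has to sort, i.e. number of shards × (from + size). The 10000 limit is the default of the index.max_result_window index setting; ES rejects requests where from + size exceeds it.

```java
// Paging arithmetic and deep-paging cost (hypothetical helper, illustration only).
class DeepPagingCost {

    /** Default of the index.max_result_window setting. */
    static final int DEFAULT_MAX_RESULT_WINDOW = 10_000;

    /** from for a 1-based page number, e.g. page 3 with size 5 -> from = 10. */
    static int fromForPage(int page, int size) {
        if (page < 1 || size < 1) {
            throw new IllegalArgumentException("page and size must be >= 1");
        }
        return (page - 1) * size;
    }

    /** Every shard returns its top (from + size) hits; the coordinator sorts all of them. */
    static long entriesSortedOnCoordinator(int shards, int from, int size) {
        return (long) shards * (from + size);
    }

    /** True if ES would reject the request under the default max_result_window. */
    static boolean exceedsDefaultWindow(int from, int size) {
        return (long) from + size > DEFAULT_MAX_RESULT_WINDOW;
    }

    public static void main(String[] args) {
        int size = 5;
        for (int page = 1; page <= 3; page++) {
            System.out.println("GET /_search?size=" + size + "&from=" + fromForPage(page, size));
        }
        // page 1000 (size 10) on 5 shards: each shard returns 10000 hits, the coordinator sorts 50000
        System.out.println(entriesSortedOnCoordinator(5, fromForPage(1000, 10), 10));
    }
}
```

The cost grows with from, not with size alone, which is why deep pages are expensive even when each page is small.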

Querying multiple indices and types

Example

/**

* Query across multiple indices (and types)

* @throws IOException

*/

@Test

public void test3() throws IOException {

SearchRequest searchRequest = new SearchRequest();

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

sourceBuilder.query(QueryBuilders.matchAllQuery())

.from(0)

.size(100);

//searchRequest.indices("test");// a single index

//searchRequest.indices("test","test2","posts");// an explicit list of indices

searchRequest.indices("test*");// wildcard pattern: every index whose name starts with test

searchRequest.source(sourceBuilder);

searchRequest.searchType(SearchType.DFS_QUERY_THEN_FETCH);

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

SearchHits hits = searchResponse.getHits();

SearchHit[] hitsArray = hits.getHits();

for (SearchHit searchHit : hitsArray) {

System.out.println(searchHit.getSourceAsString());

}

client.close();

}

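Fast queries with routing

ES spreads data across shards. An internal algorithm uses each document's routing value (its _id by default) to decide which shard stores it. If a query is sent only to the right shard, ES does not have to search every shard, which makes the query much faster.

Knowing which shard holds the data is the key.

Implementation: use the routing parameter to store related data on the same shard. In the high-level client this is IndexRequest.routing(...) and SearchRequest.routing(...); the old TransportClient used setRouting(...). Routing only selects the shard. Documents with other routing values can hash to the same shard, so a match_all search with routing may still return them. Combine routing with a query if exact filtering is needed.

Related source code: org.elasticsearch.cluster.routing.OperationRouting (indexShards)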
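A simplified model of how a routing value selects a shard. Assumption: real ES hashes the routing value with Murmur3 and also takes index.number_of_routing_shards into account, so the shard numbers below are not the ones ES would compute. `String.hashCode()` is used only to keep the sketch dependency-free, and `RoutingSketch` is a hypothetical helper. The point it shows holds either way: the same routing value always maps to the same shard, and different values can share a shard. With the 7.x default of one primary shard, every routing value maps to shard 0.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simplified shard selection: shard = hash(routing) mod number_of_primary_shards.
// ES uses Murmur3 rather than String.hashCode(), so real shard numbers differ.
class RoutingSketch {

    static int shardFor(String routing, int primaryShards) {
        // floorMod keeps the result in [0, primaryShards) even for negative hash codes
        return Math.floorMod(routing.hashCode(), primaryShards);
    }

    /** Groups routing values by the shard they map to. */
    static Map<Integer, List<String>> layout(List<String> routings, int primaryShards) {
        Map<Integer, List<String>> byShard = new TreeMap<>();
        for (String r : routings) {
            byShard.computeIfAbsent(shardFor(r, primaryShards), k -> new ArrayList<>()).add(r);
        }
        return byShard;
    }

    public static void main(String[] args) {
        // phone prefixes used as routing values in test4
        List<String> prefixes = Arrays.asList("133", "134", "188", "166", "185", "183");
        System.out.println(layout(prefixes, 5)); // {1=[133, 188, 183], 2=[134, 166], 3=[185]}
        System.out.println(layout(prefixes, 1)); // one primary shard: everything on shard 0
    }
}
```

Under this toy hash, a search routed with "183" would also see the 133 and 188 documents on 5 shards. The same effect, with different shard numbers, is what the note about combining routing with a query refers to.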

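Bulk-inserting test data

/**

* Fast query: bulk-insert test data, using the first 3 digits of each phone number as the routing value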

* @throws IOException

*/

@Test

public void test4() throws IOException {

Map<String, Object> jsonMap = new HashMap<>();

jsonMap.put("phone", "13322225555");

jsonMap.put("name", "zhangsan");

jsonMap.put("sex", "M");

jsonMap.put("age", "20");

Map<String, Object> jsonMap2 = new HashMap<>();

jsonMap2.put("phone", "13410252356");

jsonMap2.put("name", "lisi");

jsonMap2.put("sex", "M");

jsonMap2.put("age", "28");

Map<String, Object> jsonMap3 = new HashMap<>();

jsonMap3.put("phone", "18844662587");

jsonMap3.put("name", "wangwu");

jsonMap3.put("sex", "F");

jsonMap3.put("age", "18");

Map<String, Object> jsonMap4 = new HashMap<>();

jsonMap4.put("phone", "16655882345");

jsonMap4.put("name", "zhaoliu");

jsonMap4.put("sex", "F");

jsonMap4.put("age", "24");

Map<String, Object> jsonMap5 = new HashMap<>();

jsonMap5.put("phone", "18563248923");

jsonMap5.put("name", "tianqi");

jsonMap5.put("sex", "F");

jsonMap5.put("age", "22");

Map<String, Object> jsonMap6 = new HashMap<>();

jsonMap6.put("phone", "18325684532");

jsonMap6.put("name", "qiba");

jsonMap6.put("sex", "M");

jsonMap6.put("age", "26");

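// bulk insert; routing = first 3 digits of the phone number, so documents with the same prefix land on the same shard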

BulkRequest bulkRequest = new BulkRequest();

bulkRequest.add(new IndexRequest("test8").routing(jsonMap.get("phone").toString().substring(0, 3)).source(jsonMap))

.add(new IndexRequest("test8").routing(jsonMap2.get("phone").toString().substring(0, 3)).source(jsonMap2))

.add(new IndexRequest("test8").routing(jsonMap3.get("phone").toString().substring(0, 3)).source(jsonMap3))

.add(new IndexRequest("test8").routing(jsonMap4.get("phone").toString().substring(0, 3)).source(jsonMap4))

.add(new IndexRequest("test8").routing(jsonMap5.get("phone").toString().substring(0, 3)).source(jsonMap5))

.add(new IndexRequest("test8").routing(jsonMap6.get("phone").toString().substring(0, 3)).source(jsonMap6));

// synchronous execution

BulkResponse bulkResponse = client.bulk(bulkRequest, RequestOptions.DEFAULT);

for (BulkItemResponse bulkItemResponse : bulkResponse) {

if (bulkItemResponse.isFailed()) {

BulkItemResponse.Failure failure = bulkItemResponse.getFailure();

System.out.println(failure.getMessage());

}

}

client.close();

}

Fast query via routing

/**

* Fast query via routing: only the shard that routing value "183" maps to is searched

* @throws IOException

*/

@Test

public void test5() throws IOException {

SearchRequest searchRequest = new SearchRequest();

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

sourceBuilder.query(QueryBuilders.matchAllQuery())

.from(0)

.size(10);

searchRequest.indices("test8");

searchRequest.source(sourceBuilder);

searchRequest.searchType(SearchType.DFS_QUERY_THEN_FETCH);

searchRequest.routing("18325684532".substring(0, 3));

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

SearchHits hits = searchResponse.getHits();

SearchHit[] hitsArray = hits.getHits();

for (SearchHit searchHit : hitsArray) {

System.out.println(searchHit.getSourceAsString());

}

client.close();

}

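ElasticSearch index modules

Components of the index module

Official docs: https://www.elastic.co/guide/en/elasticsearch/reference/current/analysis.html

(1) Analysis module

An analyzer consists of character filters, a tokenizer and token filters.

Character filters

An analyzer can have zero or more character filters, which are applied in order.

Tokenizer

An analyzer must have exactly one tokenizer.

Token filters

An analyzer can have zero or more token filters, which are applied in order.

(2) Indexing module (Indexer)

During indexing, the analyzed documents are added to the index.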
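The analyzer structure described in (1) can be modelled as a small pipeline. It runs zero or more character filters, exactly one tokenizer, then zero or more token filters, each stage in order. This is a toy model in plain Java, not the Lucene/ES analysis API. The HTML-stripping character filter, whitespace tokenizer, and lowercase and stop token filters are simplified stand-ins for ES's html_strip, whitespace, lowercase and stop components.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;
import java.util.function.UnaryOperator;

// Toy model of an analyzer: char filters -> one tokenizer -> token filters.
class AnalyzerPipelineSketch {

    /** Character filter: replaces HTML-like tags with spaces (simplified html_strip). */
    static String htmlStrip(String text) {
        return text.replaceAll("<[^>]*>", " ");
    }

    /** Tokenizer: splits on whitespace (simplified whitespace tokenizer). */
    static List<String> whitespaceTokenize(String text) {
        List<String> tokens = new ArrayList<>();
        for (String t : text.trim().split("\\s+")) {
            if (!t.isEmpty()) {
                tokens.add(t);
            }
        }
        return tokens;
    }

    /** Token filter: lowercases every token. */
    static List<String> lowercase(List<String> tokens) {
        List<String> out = new ArrayList<>();
        for (String t : tokens) {
            out.add(t.toLowerCase(Locale.ROOT));
        }
        return out;
    }

    /** Token filter factory: drops tokens contained in the given stop-word set. */
    static UnaryOperator<List<String>> stop(Set<String> stopWords) {
        return tokens -> {
            List<String> out = new ArrayList<>();
            for (String t : tokens) {
                if (!stopWords.contains(t)) {
                    out.add(t);
                }
            }
            return out;
        };
    }

    static List<String> analyze(String text,
                                List<UnaryOperator<String>> charFilters,
                                List<UnaryOperator<List<String>>> tokenFilters) {
        String filtered = text;
        for (UnaryOperator<String> charFilter : charFilters) {         // zero or more, in order
            filtered = charFilter.apply(filtered);
        }
        List<String> tokens = whitespaceTokenize(filtered);           // exactly one tokenizer
        for (UnaryOperator<List<String>> tokenFilter : tokenFilters) { // zero or more, in order
            tokens = tokenFilter.apply(tokens);
        }
        return tokens;
    }

    public static void main(String[] args) {
        List<String> tokens = analyze(
                "The <b>Quick</b> brown FOX",
                Arrays.<UnaryOperator<String>>asList(AnalyzerPipelineSketch::htmlStrip),
                Arrays.<UnaryOperator<List<String>>>asList(
                        AnalyzerPipelineSketch::lowercase,
                        stop(new HashSet<>(Arrays.asList("the")))));
        System.out.println(tokens); // [quick, brown, fox]
    }
}
```

Order matters: if the stop filter ran before lowercase, "The" would not match the stop word "the" and would survive.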

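Integrating the IK Chinese analysis plugin

Download the IK plugin for ES (its version must match the ES version, here 7.1.0)

https://github.com/medcl/elasticsearch-analysis-ik

Build it from source with Maven (this can also be done on Windows)

mvn clean package

Copy the zip from target/releases into the ES plugin directory and unzip it there

mkdir -p $ES_HOME/plugins/ik

cp /opt/tools/elk/es-ik-7.1.0/target/releases/elasticsearch-analysis-ik-7.1.0.zip $ES_HOME/plugins/ik/

cd $ES_HOME/plugins/ik && unzip elasticsearch-analysis-ik-7.1.0.zip

Restart the ES service

Custom IK dictionary

Test a new word first: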

curl -H "Content-Type: application/json" -XGET http://192.168.93.252:9200/_analyze?pretty -d'{"analyzer":"ik_max_word","text":"蓝瘦香菇"}'

{

"tokens" : [

{

"token" : "蓝",

"start_offset" : 0,

"end_offset" : 1,

"type" : "CN_CHAR",

"position" : 0

},

{

"token" : "瘦",

"start_offset" : 1,

"end_offset" : 2,

"type" : "CN_CHAR",

"position" : 1

},

{

"token" : "香菇",

"start_offset" : 2,

"end_offset" : 4,

"type" : "CN_WORD",

"position" : 2

}

]

}

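Creating a custom dictionary

In the IK plugin's /opt/tools/elk/es-7.1.0/plugins/ik/config/custom directory (create custom first if it does not exist), create a file test.dic and add words to it, one word per line:

vi test.dic

蓝瘦香菇

Edit IK's config file es-7.1.0/plugins/ik/config/IKAnalyzer.cfg.xml

and register test.dic in it:

vi plugins/ik/config/IKAnalyzer.cfg.xml

<!-- users can configure their own extension dictionary here -->

<entry key="ext_dict">custom/test.dic</entry>

Restart the ES service

Test the tokenization again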

curl -H "Content-Type: application/json" -XGET http://192.168.93.252:9200/_analyze?pretty -d'{"analyzer":"ik_max_word","text":"蓝瘦香菇"}'

{

"tokens" : [

{

"token" : "蓝瘦香菇",

"start_offset" : 0,

"end_offset" : 4,

"type" : "CN_WORD",

"position" : 0

},

{

"token" : "香菇",

"start_offset" : 2,

"end_offset" : 4,

"type" : "CN_WORD",

"position" : 1

}

]

}
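Why adding 蓝瘦香菇 to the dictionary changes the output can be illustrated with a toy dictionary segmenter. Roughly like ik_max_word, it emits every dictionary word it finds and falls back to single characters for text that no word covers. This is not IK's actual algorithm (IK has more rules, e.g. for quantifiers and Latin letters). `MaxWordSegmenterToy` is a hypothetical helper that happens to reproduce the two outputs above. Compile the source as UTF-8, since it contains Chinese literals.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Toy "max word" segmenter (NOT the IK implementation): emit every dictionary word
// found in the text, plus single characters for positions no word covers.
class MaxWordSegmenterToy {

    static List<String> segment(String text, Set<String> dictionary) {
        int n = text.length();
        boolean[] covered = new boolean[n];
        List<int[]> spans = new ArrayList<>(); // each span is {start, end}, end exclusive
        for (int start = 0; start < n; start++) {
            for (int end = n; end > start + 1; end--) { // multi-character words, longest first
                if (dictionary.contains(text.substring(start, end))) {
                    spans.add(new int[] {start, end});
                    for (int k = start; k < end; k++) {
                        covered[k] = true;
                    }
                }
            }
        }
        for (int i = 0; i < n; i++) {
            if (!covered[i]) {
                spans.add(new int[] {i, i + 1}); // fallback: single character (like CN_CHAR)
            }
        }
        // order by start offset; a longer token first when two tokens start at the same place
        spans.sort((a, b) -> a[0] != b[0] ? Integer.compare(a[0], b[0]) : Integer.compare(b[1], a[1]));
        List<String> tokens = new ArrayList<>();
        for (int[] span : spans) {
            tokens.add(text.substring(span[0], span[1]));
        }
        return tokens;
    }

    public static void main(String[] args) {
        Set<String> dict = new HashSet<>(Arrays.asList("香菇", "司机"));
        System.out.println(segment("蓝瘦香菇", dict)); // [蓝, 瘦, 香菇]
        dict.add("蓝瘦香菇");                           // like adding it to test.dic
        System.out.println(segment("蓝瘦香菇", dict)); // [蓝瘦香菇, 香菇]
    }
}
```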

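Hot-reloading the IK dictionary

Deploy an HTTP service that serves the dictionary file (Tomcat is used here)

Go to /opt/tools/tomcat-9.0.21/webapps/ROOT

Create the hot-word file

vi hot.dic

么么哒

Tomcat must be running and the file must be reachable over HTTP

#Start Tomcat

bin/startup.sh

#URL of the file

http://192.168.93.252:8080/hot.dic

Edit IK's config file es-7.1.0/plugins/ik/config/IKAnalyzer.cfg.xml

vi config/IKAnalyzer.cfg.xml

#Add the following entry

<entry key="remote_ext_dict">http://192.168.93.252:8080/hot.dic</entry>

In a cluster, copy the modified config file to every other ES node

Restart ES once so that the new setting takes effect; the log then shows the remote hot-word dictionary being loaded. After that, words added to hot.dic are picked up without restarting ES.

Test adding a hot word dynamically and compare the tokenization before and after

Before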

curl -H "Content-Type: application/json" -XGET http://192.168.93.252:9200/_analyze?pretty -d'{"analyzer":"ik_max_word","text":"老司机"}'

{

"tokens" : [

{

"token" : "老",

"start_offset" : 0,

"end_offset" : 1,

"type" : "CN_CHAR",

"position" : 0

},

{

"token" : "司机",

"start_offset" : 1,

"end_offset" : 3,

"type" : "CN_WORD",

"position" : 1

}

]

}

vi hot.dic

么么哒

老司机

After (once IK has reloaded the updated hot.dic)

curl -H "Content-Type: application/json" -XGET http://192.168.93.252:9200/_analyze?pretty -d'{"analyzer":"ik_max_word","text":"老司机"}'

{

"tokens" : [

{

"token" : "老司机",

"start_offset" : 0,

"end_offset" : 3,

"type" : "CN_WORD",

"position" : 0

},

{

"token" : "司机",

"start_offset" : 1,

"end_offset" : 3,

"type" : "CN_WORD",

"position" : 1

}

]

}
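According to the IK README, the remote dictionary URL must return Last-Modified and ETag response headers, with one word per line in the body. IK polls the URL periodically and reloads the word list whenever either header changes. A static file served by Tomcat normally carries both headers, which is why this setup works. The sketch below shows that change check in plain Java. `HotDictCheck`, `fetchValidators` and `shouldReload` are hypothetical names, this is not the plugin's code, and the use of a HEAD request is an assumption.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.util.Objects;

// Sketch of the change check behind IK's remote_ext_dict (hypothetical helper, not IK code).
class HotDictCheck {

    /** Reads the Last-Modified and ETag headers of the dictionary URL with a HEAD request. */
    static String[] fetchValidators(String dictUrl) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(dictUrl).openConnection();
        conn.setRequestMethod("HEAD");
        try {
            return new String[] {conn.getHeaderField("Last-Modified"), conn.getHeaderField("ETag")};
        } finally {
            conn.disconnect();
        }
    }

    /** Reload when either header differs from the values seen at the previous poll. */
    static boolean shouldReload(String[] previous, String[] current) {
        return !Objects.equals(previous[0], current[0]) || !Objects.equals(previous[1], current[1]);
    }

    public static void main(String[] args) throws IOException {
        String[] before = fetchValidators("http://192.168.93.252:8080/hot.dic");
        // ... edit hot.dic on the Tomcat host, then poll again ...
        String[] after = fetchValidators("http://192.168.93.252:8080/hot.dic");
        System.out.println(shouldReload(before, after) ? "dictionary changed" : "unchanged");
    }
}
```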

ES cluster installation and deployment

ES cluster plan

| Hostname | ElasticSearch | Kibana | Head |

|---|---|---|---|

| master(192.168.93.7) | √ | √ | √ |

| slaver1(192.168.93.8) | √ |  |  |

| slaver2(192.168.93.9) | √ |  |  |

ES cluster installation

Official docs:

https://www.elastic.co/guide/en/elasticsearch/reference/current/setup.html

https://www.elastic.co/guide/en/elasticsearch/reference/current/important-settings.html

JDK installation (omitted)

ES download URL

https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.1.0-linux-x86_64.tar.gz

Extract (omitted)

Configuration (the hostnames master, slaver1 and slaver2 used below must resolve on every node, e.g. through /etc/hosts)

Master node: master

Edit $ES_HOME/config/elasticsearch.yml

vi config/elasticsearch.yml

cluster.name: ysk-es #cluster name (must be identical on all nodes)

node.name: master #node name

path.data: /opt/datas/elasticsearch/data #data directory

path.logs: /opt/datas/elasticsearch/logs #log directory

bootstrap.memory_lock: false #do not lock physical memory

network.host: 192.168.93.7 #IP address of this node

discovery.seed_hosts: ["master:9300","slaver1:9300","slaver2:9300"]

cluster.initial_master_nodes: ["master","slaver1","slaver2"]

Worker node: slaver1

Edit $ES_HOME/config/elasticsearch.yml

vi config/elasticsearch.yml

cluster.name: ysk-es #cluster name (must be identical on all nodes)

node.name: slaver1 #node name

path.data: /opt/datas/elasticsearch/data #data directory

path.logs: /opt/datas/elasticsearch/logs #log directory

bootstrap.memory_lock: false #do not lock physical memory

network.host: 192.168.93.8 #IP address of this node

discovery.seed_hosts: ["master:9300","slaver1:9300","slaver2:9300"]

cluster.initial_master_nodes: ["master","slaver1","slaver2"]

Worker node: slaver2

Edit $ES_HOME/config/elasticsearch.yml

vi config/elasticsearch.yml

cluster.name: ysk-es #cluster name (must be identical on all nodes)

node.name: slaver2 #node name

path.data: /opt/datas/elasticsearch/data #data directory

path.logs: /opt/datas/elasticsearch/logs #log directory

bootstrap.memory_lock: false #do not lock physical memory

network.host: 192.168.93.9 #IP address of this node

discovery.seed_hosts: ["master:9300","slaver1:9300","slaver2:9300"]

cluster.initial_master_nodes: ["master","slaver1","slaver2"]

Starting the ES cluster

Run on every node of the cluster:

bin/elasticsearch

Check the status of each node

http://master:9200/

{

name: "master",

cluster_name: "ysk-es",

cluster_uuid: "JqpKFeN5SJ-qSCPStZwCLw",

version: {

number: "7.1.0",

build_flavor: "default",

build_type: "tar",

build_hash: "606a173",

build_date: "2019-05-16T00:43:15.323135Z",

build_snapshot: false,

lucene_version: "8.0.0",

minimum_wire_compatibility_version: "6.8.0",

minimum_index_compatibility_version: "6.0.0-beta1"

},

tagline: "You Know, for Search"

}

http://slaver1:9200/

{

name: "slaver1",

cluster_name: "ysk-es",

cluster_uuid: "JqpKFeN5SJ-qSCPStZwCLw",

version: {

number: "7.1.0",

build_flavor: "default",

build_type: "tar",

build_hash: "606a173",

build_date: "2019-05-16T00:43:15.323135Z",

build_snapshot: false,

lucene_version: "8.0.0",

minimum_wire_compatibility_version: "6.8.0",

minimum_index_compatibility_version: "6.0.0-beta1"

},

tagline: "You Know, for Search"

}

http://slaver2:9200/

{

name: "slaver2",

cluster_name: "ysk-es",

cluster_uuid: "JqpKFeN5SJ-qSCPStZwCLw",

version: {

number: "7.1.0",

build_flavor: "default",

build_type: "tar",

build_hash: "606a173",

build_date: "2019-05-16T00:43:15.323135Z",

build_snapshot: false,

lucene_version: "8.0.0",

minimum_wire_compatibility_version: "6.8.0",

minimum_index_compatibility_version: "6.0.0-beta1"

},

tagline: "You Know, for Search"

}

Check the cluster health

http://master:9200/_cluster/health?pretty

{

cluster_name: "ysk-es",

status: "green",

timed_out: false,

number_of_nodes: 3,

number_of_data_nodes: 3,

active_primary_shards: 0,

active_shards: 0,

relocating_shards: 0,

initializing_shards: 0,

unassigned_shards: 0,

delayed_unassigned_shards: 0,

number_of_pending_tasks: 0,

number_of_in_flight_fetch: 0,

task_max_waiting_in_queue_millis: 0,

active_shards_percent_as_number: 100

}

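X-Pack installation

X-Pack is an Elastic Stack extension that bundles security, alerting, monitoring, reporting, graph and machine learning features into one easy-to-install package.

X-Pack protects and extends an Elastic cluster with the following features:

Security: keeps others from accessing your ports 5601 (Kibana) and 9200 (ES) directly

Real-time monitoring: monitors cluster CPU, disk and other load in real time

Reporting: presents cluster usage graphically

Advanced features: machine learning and more

Installing X-Pack for ES

On versions before 6.3, run the following in the ES home directory of every node:

bin/elasticsearch-plugin install x-pack

Note: since 6.3, X-Pack is bundled with the default distribution, so ES 7.1.0 already includes it and this step is not needed.

Enabling X-Pack for ES

(1) Start ES

bin/elasticsearch

(2) Activate the trial license (30-day trial)

Official docs: https://www.elastic.co/guide/en/elasticsearch/reference/current/setup-xpack.html

Run the following command on any one node

curl -H "Content-Type:application/json" -XPOST http://master:9200/_xpack/license/start_trial?acknowledge=true

The Elasticsearch console on every node shows that the license mode has changed to trial: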

[2019-06-17T15:21:20,732][INFO ][o.e.l.LicenseService ] [master] license [3b9fa711-de57-4c75-9fd7-98730e2fc7a1] mode [trial] - valid

[2019-06-17T15:21:20,033][INFO ][o.e.l.LicenseService ] [slaver1] license [3b9fa711-de57-4c75-9fd7-98730e2fc7a1] mode [trial] - valid

[2019-06-17T15:21:18,664][INFO ][o.e.l.LicenseService ] [slaver2] license [3b9fa711-de57-4c75-9fd7-98730e2fc7a1] mode [trial] - valid

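Configuring elasticsearch.yml

Add the following setting on every node

vi elasticsearch.yml

xpack.security.enabled: true

Then restart ES. Note: with a trial license on a multi-node cluster bound to a non-loopback address, ES 7.x is expected to refuse to start with security enabled unless TLS is also configured for the transport layer (xpack.security.transport.ssl.*). Check the official security setup docs if startup fails.

Set passwords for the built-in users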

$ bin/elasticsearch-setup-passwords interactive

Initiating the setup of passwords for reserved users elastic,apm_system,kibana,logstash_system,beats_system,remote_monitoring_user.

You will be prompted to enter passwords as the process progresses.

Please confirm that you would like to continue [y/N]y

Enter password for [elastic]:

Reenter password for [elastic]:

Enter password for [apm_system]:

Reenter password for [apm_system]:

Enter password for [kibana]:

Reenter password for [kibana]:

Enter password for [logstash_system]:

Reenter password for [logstash_system]:

Enter password for [beats_system]:

Reenter password for [beats_system]:

Enter password for [remote_monitoring_user]:

Reenter password for [remote_monitoring_user]:

Changed password for user [apm_system]

Changed password for user [kibana]

Changed password for user [logstash_system]

Changed password for user [beats_system]

Changed password for user [remote_monitoring_user]

Changed password for user [elastic]

Changing a password

curl -H "Content-Type:application/json" -XPOST -u elastic 'http://master:9200/_xpack/security/user/elastic/_password' -d'{"password":"123456"}'

Reference: https://www.elastic.co/guide/en/elasticsearch/reference/current/security-api-change-password.html

Accessing ES from a browser

http://master:9200/_cluster/health?pretty

Username: elastic (built-in superuser)

Password: 123456

Kibana installation

Download: https://artifacts.elastic.co/downloads/kibana/kibana-7.1.0-linux-x86_64.tar.gz

Extract

$ tar -zxvf kibana-7.1.0-linux-x86_64.tar.gz -C /opt/tools/elk/

Set the environment variable (the archive extracts to kibana-7.1.0-linux-x86_64; rename that directory to kibana-7.1.0 or adjust the path below)

#set kibana path

export KIBANA_HOME=/opt/tools/elk/kibana-7.1.0

Edit the config file $KIBANA_HOME/config/kibana.yml

$ vi kibana.yml

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.hosts: ["http://master:9200"]

elasticsearch.username: "elastic"

elasticsearch.password: "123456"

X-Pack

Kibana 7.1.0 ships with X-Pack already installed (older versions needed bin/kibana-plugin install x-pack)

Start Kibana

bin/kibana

Access Kibana from a browser

http://master:5601

Username: elastic (built-in superuser)

Password: 123456

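ES optimization

Avoiding split brain in the cluster

What is "split brain"?

When some nodes fail or the network between nodes is cut, part of the cluster can lose contact with the rest and form a second cluster with the same name as the original. This is called split brain.

It is very dangerous, because both resulting clusters keep indexing and modifying data independently.

What causes split brain?

(1) Network problems

They are rare inside an internal network (monitor internal traffic to be sure); problems on external networks are more likely.

(2) Node load

When master and data roles run on the same nodes, a heavily loaded node (and the load really can be heavy) may stop responding. If that node is currently the master, other nodes conclude that the master has failed and elect a new one, which can produce split brain.

(3) Memory reclamation

The ES process on a data node uses a lot of memory, and large-scale garbage collection can also make the ES process stop responding.

Ways to deal with split brain

(1) In the case analysed here, the likely cause was node load making the master stop responding, so that nodes disagreed about which node was master. The direct fix is to separate master nodes from data nodes. For this, 3 more servers were added to the ES cluster as dedicated, relatively lightweight master-eligible nodes, with their roles restricted by:

node.master: true

node.data: false

The other nodes are configured the opposite way (node.master: false, node.data: true) so they can no longer become master. This separates master nodes from data nodes.

(2) Tune timeouts

For example, discovery.probe.connect_timeout (default 3s) sets how long a node waits when trying to connect to each address during discovery. Whether the elected master is still alive is checked by the leader checks. These are configured with cluster.fault_detection.leader_check.timeout (default 10s) and cluster.fault_detection.leader_check.retry_count (default 3). Raising these values makes nodes wait longer before declaring the master dead, which reduces misjudgements caused by temporary stalls such as long GC pauses.

Summary

Split brain remains a hard problem in ElasticSearch, and the final solution is a compromise; every distributed system faces this problem.

Even so, ES is still chosen for full-text search because it works out of the box, is clustered by design, tolerates faults automatically and scales well.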
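The usual safeguard against split brain is a majority quorum. A group of master-eligible nodes may elect a master only if it contains more than half of them, so two disconnected halves can never both succeed. In 6.x this had to be configured by hand through discovery.zen.minimum_master_nodes, set to the quorum computed below. In 7.x that setting is ignored and ES maintains the voting configuration itself. `QuorumSketch` and its methods are hypothetical names used only for illustration.

```java
// Majority quorum for master election (illustration; helper names are hypothetical).
class QuorumSketch {

    /** Smallest majority of the master-eligible nodes: floor(n / 2) + 1. */
    static int quorum(int masterEligibleNodes) {
        return masterEligibleNodes / 2 + 1;
    }

    /** A partition can elect a master only if it still holds a majority. */
    static boolean canElectMaster(int nodesInPartition, int masterEligibleNodes) {
        return nodesInPartition >= quorum(masterEligibleNodes);
    }

    public static void main(String[] args) {
        int n = 3; // e.g. the 3 dedicated master-eligible nodes
        System.out.println("quorum = " + quorum(n));                       // 2
        System.out.println("2-node side elects: " + canElectMaster(2, n)); // true
        System.out.println("1-node side elects: " + canElectMaster(1, n)); // false
    }
}
```

With 4 master-eligible nodes the quorum is 3, so a 2/2 split leaves the cluster without a master rather than with two. This is one reason an odd number of master-eligible nodes is usually recommended.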

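Increase the maximum number of open files

Raise the maximum number of open file descriptors for the user running ES; the official recommendation is 65536 or higher

ulimit -a (view the current limits)

ulimit -n 65536 (set; this only applies to the current shell session, so make it permanent in /etc/security/limits.conf)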

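Set the JVM heap size sensibly

Adjust the ES heap size in the configuration file

Change -Xms and -Xmx in $ES_HOME/config/jvm.options

Set both to the same value to avoid heap resizing at runtime. The official guidance is no more than 50% of physical RAM (the rest is left to the file system cache), and below the compressed-oops threshold of roughly 32GB. The default is 1g: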

-Xms1g

-Xmx1g
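A sketch of that sizing rule: half of physical RAM, capped below the compressed-oops threshold. The 31 GB cap is an assumption; the exact threshold varies by JVM and OS, and the official docs only say it is near 32 GB. `HeapSizing` is a hypothetical helper that prints the two jvm.options lines.

```java
// Heap sizing rule of thumb (hypothetical helper; the 31 GB cap is an assumption).
class HeapSizing {

    static final long MB = 1024L * 1024;
    static final long GB = 1024 * MB;

    /** Half of physical RAM, but never above ~31 GB so compressed oops stay enabled. */
    static long suggestedHeapBytes(long physicalRamBytes) {
        return Math.min(physicalRamBytes / 2, 31 * GB);
    }

    /** jvm.options lines with Xms equal to Xmx, expressed in whole megabytes. */
    static String jvmOptions(long heapBytes) {
        long mb = heapBytes / MB;
        return "-Xms" + mb + "m\n-Xmx" + mb + "m";
    }

    public static void main(String[] args) {
        long ram = 16 * GB;
        System.out.println(jvmOptions(suggestedHeapBytes(ram)));
        // -Xms8192m
        // -Xmx8192m
    }
}
```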

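Lock physical memory

Set memory_lock to lock the process's memory in RAM so that it cannot be swapped out, which improves performance

Edit $ES_HOME/config/elasticsearch.yml

bootstrap.memory_lock: true

The user running ES must also be allowed to lock memory (e.g. memlock unlimited in /etc/security/limits.conf); otherwise the memory-lock bootstrap check fails at startup.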

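Choose the number of shards sensibly

Moderately increasing the number of shards can improve indexing throughput; 5-20 shards is usually appropriate

Both too few and too many shards slow down searches

Too many shards means more files are open during a search and more communication between servers

Too few shards makes each shard's index too large, which slows down searches

A rule of thumb is to keep at most about 20GB of index data per shard: number of shards = total data size / 20GB, rounded up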
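The rule of thumb above as code, rounding up so that no shard exceeds the target size. `ShardCountEstimate` is a hypothetical helper, and 20 GB is simply the figure used in this note. The number of primary shards cannot simply be changed after the index is created (that requires the split/shrink APIs or a reindex), so it has to be estimated up front.

```java
// Rule-of-thumb shard estimate (hypothetical helper; the per-shard target is an input).
class ShardCountEstimate {

    /** ceil(totalDataGb / maxShardGb), and at least one shard. */
    static int primaryShards(double totalDataGb, double maxShardGb) {
        if (maxShardGb <= 0) {
            throw new IllegalArgumentException("maxShardGb must be positive");
        }
        return Math.max(1, (int) Math.ceil(totalDataGb / maxShardGb));
    }

    public static void main(String[] args) {
        System.out.println(primaryShards(100, 20)); // 5
        System.out.println(primaryShards(101, 20)); // 6
    }
}
```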

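Choose the number of replicas sensibly

Adding replicas increases search capacity

Too many replicas put extra load on the servers, because the primary shard must replicate data to every replica; they also take up more disk space

Generally 2-3 replicas at most are enough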

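Force-merge indices

Merge index segments periodically: the more segments there are, the more segment memory is used and the worse query performance becomes. Only force-merge an index after you have finished writing to it

1) For small indices, the number of segments can be reduced to 1

2) Before ES 2.1.0 this was done with the _optimize API; it was later replaced by the _forcemerge API

For example: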

curl -u elastic:123456 -XPOST 'http://master:9200/test/_forcemerge?max_num_segments=1'

Close unused indices

Close indices that are not in use to reduce memory usage

As long as an index is open, its segments occupy memory; once it is closed it only takes up disk space

curl -u elastic:123456 -XPOST 'http://master:9200/test/_close'

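Purge deleted documents

When a document is deleted in Lucene, its data is not removed from disk immediately; it is only marked as deleted (in a .del file in older Lucene versions). Deleted documents still take part in searches and are only filtered out afterwards, which reduces search efficiency

Deleted documents can be purged with a force merge that only expunges deletes (the old _optimize endpoint no longer exists in 7.x):

curl -u elastic:123456 -XPOST 'http://master:9200/test/_forcemerge?only_expunge_deletes=true'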

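Import data sensibly

When loading a large amount of data at the start of a project, set the number of replicas to 0. While indexing, ES immediately replicates data to any existing replicas, which adds load

Restore the replica count after the import finishes; this speeds up indexing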

curl -XGET http://master:9200/test/_settings?pretty

curl -H "Content-Type:application/json" -XPUT 'http://master:9200/test/_settings' -d'{"index":{"number_of_replicas":0}}'

curl -H "Content-Type:application/json" -XPUT 'http://master:9200/test/_settings' -d'{"index":{"number_of_replicas":1}}'

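Disable the _all field

Remove the _all field from the mapping. In older versions an index had an _all field by default, which makes querying convenient but increases indexing time and index size. This tip only applies to those versions: _all was deprecated in 6.0 and removed in 7.0, so ES 7.1.0 no longer has it

Official docs: https://www.elastic.co/guide/en/elasticsearch/reference/current/mapping-fields.html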

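The _source field

The _source field is very important when searching

_source stores the original JSON of each document, and search and get responses return document contents from it. With {"enabled": false}, the original document can no longer be returned (only separately stored fields can). Features that rely on _source also stop working, such as the update, update_by_query and reindex APIs and highlighting. Disabling it saves disk space, so only do so if none of these are needed; the default is {"enabled": true}

Official docs: https://www.elastic.co/guide/en/elasticsearch/reference/current/mapping-source-field.html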

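Keep versions consistent

When operating an ES cluster from Java code, make sure the version of the local ES client dependency matches the ES version of the cluster

Make sure the JDK version and ES configuration are consistent on every node in the cluster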