Spark Deployment Modes: Standalone

Official documentation: http://spark.apache.org/docs/latest/spark-standalone.html

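1. Setting up a Standalone cluster

2. Starting the cluster manually

2-1) Start the Spark Master service on the master node: ./sbin/start-master.sh

Once the Master has started successfully, it prints a URL of the form spark://HOST:PORT. Workers use this URL to connect to the Master and register with it. The same URL is the value of the "master" parameter in application code (val conf = new SparkConf().setMaster("URL")). The URL is also shown in the Master's web UI.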

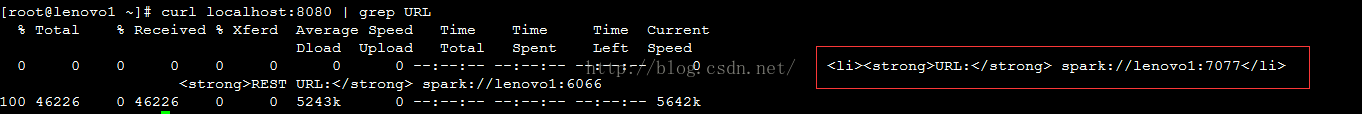

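If the Master runs on Linux, you can run the following command on the Master node: curl localhost:8080 | grep URL. The matching output line contains the spark:// URL.

Alternatively, open http://[Master hostname or IP]:8080 in a browser (http://localhost:8080 on the Master node itself) and read the URL there.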
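As a rough sketch of the curl approach, the helper below pulls the first spark:// address out of the Master page's HTML. The function name extract_master_url and the sample HTML line are illustrative assumptions; the exact markup of the web UI may differ between Spark versions, so the pattern matches only the spark://host:port form and ignores the surrounding tags.

```shell
# Hypothetical helper: read HTML on stdin, print the first spark://host:port found.
extract_master_url() {
  grep -o 'spark://[A-Za-z0-9._-]*:[0-9]*' | head -n 1
}

# Sample input standing in for the Master web UI page (assumed markup).
sample_html='<li><strong>URL:</strong> spark://node1:7077</li>'
master_url=$(printf '%s\n' "$sample_html" | extract_master_url)
echo "$master_url"

# Against a live Master the same helper would be fed by curl, for example:
#   curl -s localhost:8080 | extract_master_url
```

Matching on the URL shape instead of the literal text "URL" keeps the helper usable even if the page layout changes.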

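2-2) Start the Spark Worker service on each worker node, passing the Master URL: ./sbin/start-slave.sh <master-spark-URL>

Once a Worker has started successfully, it appears in the Master's web UI.

2-3) Startup options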

master:

-i HOST, --ip HOST Hostname to listen on (deprecated, please use --host or -h)

-h HOST, --host HOST Hostname to listen on

-p PORT, --port PORT Port to listen on (default: 7077)

--webui-port PORT Port for web UI (default: 8080)

--properties-file FILE Path to a custom Spark properties file. Default is conf/spark-defaults.conf.

worker:

-c CORES, --cores CORES Number of cores to use

-m MEM, --memory MEM Amount of memory to use (e.g. 1000M, 2G)

-d DIR, --work-dir DIR Directory to run apps in (default: SPARK_HOME/work)

-i HOST, --ip IP Hostname to listen on (deprecated, please use --host or -h)

-h HOST, --host HOST Hostname to listen on

-p PORT, --port PORT Port to listen on (default: random)

--webui-port PORT Port for web UI (default: 8081)

--properties-file FILE Path to a custom Spark properties file. Default is conf/spark-defaults.conf.

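3. Cluster launch scripts

To start a Spark cluster with the launch scripts, create a file named slaves in the Spark conf directory and list the hostnames of the worker nodes in it, one per line. If the slaves file does not exist, the launch scripts start a single-machine cluster, which is mostly useful for testing.

The master node sends commands to the other nodes over ssh. By default Spark runs the ssh commands in parallel, which requires password-less login (public/private key pair) from the master node to the worker nodes. If password-less login is not configured, set the SPARK_SSH_FOREGROUND environment variable and enter the password for each node in turn (in the order listed in slaves). Once these files are in place, the cluster can be started and stopped with the scripts. The commonly used scripts are: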

sbin/start-master.sh Starts a master instance on the machine the script is executed on.

sbin/start-slaves.sh Starts a slave instance on each machine specified in the conf/slaves file.

sbin/start-slave.sh Starts a slave instance on the machine the script is executed on.

sbin/start-all.sh Starts both a master and a number of slaves as described above.

sbin/stop-master.sh Stops the master that was started via the sbin/start-master.sh script.

sbin/stop-slaves.sh Stops all slave instances on the machines specified in the conf/slaves file.

sbin/stop-all.sh Stops both the master and the slaves as described above.

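Note that these scripts must be run on the machine that is to act as the Spark master, that is, on a node of the cluster, not on your local machine.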
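A minimal sketch of preparing the slaves file described above. The hostnames worker1 to worker3 are placeholders, and the file is written to a scratch directory (SPARK_CONF_DIR_DEMO is a made-up variable) so the snippet is safe to run anywhere; on a real cluster the file belongs in the conf directory of the Spark installation.

```shell
# Scratch directory standing in for the Spark conf directory (assumption for this demo).
SPARK_CONF_DIR_DEMO=$(mktemp -d)

# One worker hostname per line; worker1..worker3 are placeholder names.
cat > "$SPARK_CONF_DIR_DEMO/slaves" <<'EOF'
worker1
worker2
worker3
EOF

worker_count=$(grep -c . "$SPARK_CONF_DIR_DEMO/slaves")
echo "workers listed: $worker_count"

# Without password-less ssh, set SPARK_SSH_FOREGROUND (to a non-empty value)
# so each password can be typed in turn, then launch from the master node:
#   export SPARK_SSH_FOREGROUND=yes
#   ./sbin/start-all.sh
```

The launch commands are left as comments because they only make sense on a machine with Spark installed.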

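The cluster can be configured further through environment variables, including the one mentioned above, which are set in conf/spark-env.sh. If this file does not exist in the Spark conf directory, create it by copying or renaming spark-env.sh.template. After editing the file, distribute it to every node. Some commonly used settings are: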

| Environment Variable | Meaning |
|---|---|
| SPARK_MASTER_HOST | Bind the master to a specific hostname or IP address, for example a public one. |
| SPARK_MASTER_PORT | Start the master on a different port (default: 7077). |
| SPARK_MASTER_WEBUI_PORT | Port for the master web UI (default: 8080). |
| SPARK_MASTER_OPTS | Configuration properties that apply only to the master in the form "-Dx=y" (default: none). See below for a list of possible options. |
| SPARK_LOCAL_DIRS | Directory to use for "scratch" space in Spark, including map output files and RDDs that get stored on disk. This should be on a fast, local disk in your system. It can also be a comma-separated list of multiple directories on different disks. |
| SPARK_WORKER_CORES | Total number of cores to allow Spark applications to use on the machine (default: all available cores). |
| SPARK_WORKER_MEMORY | Total amount of memory to allow Spark applications to use on the machine, e.g. 1000m, 2g (default: total memory minus 1 GB); note that each application's individual memory is configured using its spark.executor.memory property. |
| SPARK_WORKER_PORT | Start the Spark worker on a specific port (default: random). |
| SPARK_WORKER_WEBUI_PORT | Port for the worker web UI (default: 8081). |
| SPARK_WORKER_DIR | Directory to run applications in, which will include both logs and scratch space (default: SPARK_HOME/work). |
| SPARK_WORKER_OPTS | Configuration properties that apply only to the worker in the form "-Dx=y" (default: none). See below for a list of possible options. |
| SPARK_DAEMON_MEMORY | Memory to allocate to the Spark master and worker daemons themselves (default: 1g). |
| SPARK_DAEMON_JAVA_OPTS | JVM options for the Spark master and worker daemons themselves in the form "-Dx=y" (default: none). |
| SPARK_PUBLIC_DNS | The public DNS name of the Spark master and workers (default: none). |
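For illustration, a conf/spark-env.sh using a few of these variables might look like the following. Every value here (hostname, core count, memory size, directory) is a placeholder chosen for the example, not a recommendation.

```shell
# Example conf/spark-env.sh fragment; all values are illustrative placeholders.
export SPARK_MASTER_HOST=master.example.com   # hypothetical hostname
export SPARK_MASTER_PORT=7077                 # same as the default
export SPARK_MASTER_WEBUI_PORT=8080           # same as the default
export SPARK_WORKER_CORES=4                   # cores offered to applications
export SPARK_WORKER_MEMORY=8g                 # memory offered to applications
export SPARK_WORKER_DIR=/tmp/spark-work       # hypothetical work directory
```

Because the file is sourced by the launch scripts on each node, the same copy has to be present on every machine for the settings to take effect cluster-wide.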

The following properties can be set through SPARK_WORKER_OPTS:

| Property Name | Default | Meaning |
|---|---|---|
| spark.worker.cleanup.enabled | false | Enable periodic cleanup of worker / application directories. Note that this only affects standalone mode, as YARN works differently. Only the directories of stopped applications are cleaned up. |
| spark.worker.cleanup.interval | 1800 (30 minutes) | Controls the interval, in seconds, at which the worker cleans up old application work dirs on the local machine. |
| spark.worker.cleanup.appDataTtl | 7 * 24 * 3600 (7 days) | The number of seconds to retain application work directories on each worker. This is a Time To Live and should depend on the amount of available disk space you have. Application logs and jars are downloaded to each application work dir. Over time, the work dirs can quickly fill up disk space, especially if you run jobs very frequently. |
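As a sketch of how these properties are passed, the fragment below builds a SPARK_WORKER_OPTS value in the "-Dx=y" form for use in conf/spark-env.sh. The three-day retention period is an arbitrary value picked for the example.

```shell
# Keep finished applications' work directories for 3 days (value chosen for the example).
ttl_seconds=$((3 * 24 * 3600))

# Each property is passed as a -D system property; the backslashes only wrap the line.
export SPARK_WORKER_OPTS="-Dspark.worker.cleanup.enabled=true \
-Dspark.worker.cleanup.interval=1800 \
-Dspark.worker.cleanup.appDataTtl=${ttl_seconds}"

echo "$SPARK_WORKER_OPTS"
```

Computing the TTL with shell arithmetic keeps the intent (days) readable while still handing Spark the value in seconds.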

4. Using spark-shell

Spark ships with the spark-shell command, which gives users an interactive shell. Start it as follows:

./bin/spark-shell --master spark://IP[HOSTNAME]:PORT

You can also pass options to it (these configure the driver):

--master MASTER_URL spark://host:port, mesos://host:port, yarn, or local.

--deploy-mode DEPLOY_MODE Whether to launch the driver program locally ("client") or on one of the worker machines inside the cluster ("cluster")

--class CLASS_NAME Your application's main class (for Java / Scala apps).

--name NAME A name of your application.

--jars JARS Comma-separated list of local jars to include on the driver and executor classpaths.

--packages Comma-separated list of maven coordinates of jars to include on the driver and executor classpaths. Will search the local maven repo, then maven central and any additional remote repositories given by --repositories. The format for the coordinates should be groupId:artifactId:version.

--exclude-packages Comma-separated list of groupId:artifactId, to exclude while resolving the dependencies provided in --packages to avoid dependency conflicts.

--repositories Comma-separated list of additional remote repositories to search for the maven coordinates given with --packages.

--py-files PY_FILES Comma-separated list of .zip, .egg, or .py files to place on the PYTHONPATH for Python apps.

--files FILES Comma-separated list of files to be placed in the working directory of each executor.

--conf PROP=VALUE Arbitrary Spark configuration property.

--properties-file FILE Path to a file from which to load extra properties. If not specified, this will look for conf/spark-defaults.conf.

--driver-memory MEM Memory for driver (e.g. 1000M, 2G) (Default: 1024M).

--driver-java-options Extra Java options to pass to the driver.

--driver-library-path Extra library path entries to pass to the driver.

--driver-class-path Extra class path entries to pass to the driver. Note that jars added with --jars are automatically included in the classpath.

--executor-memory MEM Memory per executor (e.g. 1000M, 2G) (Default: 1G).

--proxy-user NAME User to impersonate when submitting the application. This argument does not work with --principal / --keytab.

Some options apply only to particular cluster deployment modes (Standalone, Mesos, YARN):

Spark standalone with cluster deploy mode only:

--driver-cores NUM Cores for driver (Default: 1).

Spark standalone or Mesos with cluster deploy mode only:

--supervise If given, restarts the driver on failure.

--kill SUBMISSION_ID If given, kills the driver specified.

--status SUBMISSION_ID If given, requests the status of the driver specified.

Spark standalone and Mesos only:

--total-executor-cores NUM Total cores for all executors.

Spark standalone and YARN only:

--executor-cores NUM Number of cores per executor. (Default: 1 in YARN mode, or all available cores on the worker in standalone mode)

YARN-only:

--driver-cores NUM Number of cores used by the driver, only in cluster mode (Default: 1).

--queue QUEUE_NAME The YARN queue to submit to (Default: "default").

--num-executors NUM Number of executors to launch (Default: 2). If dynamic allocation is enabled, the initial number of executors will be at least NUM.

--archives ARCHIVES Comma separated list of archives to be extracted into the working directory of each executor.

--principal PRINCIPAL Principal to be used to login to KDC, while running on secure HDFS.

--keytab KEYTAB The full path to the file that contains the keytab for the principal specified above. This keytab will be copied to the node running the Application Master via the Secure Distributed Cache, for renewing the login tickets and the delegation tokens periodically.

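Standalone mode has no direct counterpart to the YARN-only --num-executors option, but the same effect can be achieved indirectly by combining two options:

--total-executor-cores m

--executor-cores n

The number of executors is then m / n rounded down, because each executor needs a full n cores, and it is further limited by the free cores and memory on the workers.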
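The arithmetic can be sketched as follows, with placeholder values chosen so that m is an exact multiple of n. The variable names are made up for the example, and spark://HOST:PORT stands for the real Master URL; the command is only printed, not executed.

```shell
# Placeholder values: m total cores, n cores per executor (m is a multiple of n here).
total_executor_cores=12
executor_cores=4

# Number of executors the application can receive, assuming the workers
# have enough free cores and memory to host them.
num_executors=$((total_executor_cores / executor_cores))
echo "expected executors: $num_executors"

# Print the corresponding spark-shell invocation.
echo "./bin/spark-shell --master spark://HOST:PORT" \
     "--total-executor-cores $total_executor_cores" \
     "--executor-cores $executor_cores"
```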

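In application code the total can be set with new SparkConf().set("spark.cores.max", "m"). The correspondence between some of the options and their configuration properties (and environment variables, where one exists) is listed below: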

driverExtraJavaOptions "spark.driver.extraJavaOptions"

driverExtraLibraryPath "spark.driver.extraLibraryPath"

driverMemory "spark.driver.memory" env.get("SPARK_DRIVER_MEMORY")

driverCores "spark.driver.cores"

executorMemory "spark.executor.memory" env.get("SPARK_EXECUTOR_MEMORY")

executorCores "spark.executor.cores" env.get("SPARK_EXECUTOR_CORES")

totalExecutorCores "spark.cores.max"

name "spark.app.name"

jars "spark.jars"

ivyRepoPath "spark.jars.ivy"

packages "spark.jars.packages"

packagesExclusions "spark.jars.excludes"

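5. Resource scheduling

Standalone mode currently supports only a simple FIFO scheduler across applications. If several users share the cluster, limit what each application can take by using the options above, for example the maximum number of cores. The official documentation gives the following simple example: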

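import org.apache.spark.{SparkConf, SparkContext}

// Cap this application at 10 cores across the whole cluster.
val conf = new SparkConf()
  .setMaster(...)
  .setAppName(...)
  .set("spark.cores.max", "10")
val sc = new SparkContext(conf)

6. Monitoring and logging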

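6-1) Monitoring: the Master web UI (port 8080 by default) shows the state of the cluster and of the applications running on it. The port can be changed. By default, if 8080 is already taken by another program, Spark adds 1 to the port and tries again (8081, 8082, and so on) until it finds a free port.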
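To make the port search concrete, the sketch below lists the ports that would be tried from a given starting port. The function name candidate_ports is made up for this example. The retry count mirrors Spark's spark.port.maxRetries property (16 by default), which bounds the search: after that many retries Spark gives up instead of probing indefinitely.

```shell
# Print the ports that would be tried: the base port, then +1 per retry.
# The second argument mirrors spark.port.maxRetries (16 by default).
candidate_ports() {
  base=$1
  retries=${2:-16}
  seq "$base" "$((base + retries))"
}

# Ports tried for the Master web UI if only 3 retries were allowed.
candidate_ports 8080 3
```

With the default retry count, a web UI configured for 8080 can therefore end up anywhere between 8080 and 8096.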

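6-2) Logs: logs can also be viewed through the web UI. Alternatively, log in to the relevant worker node, go to the SPARK_WORKER_DIR directory, and look for the stderr and stdout files in the folder of the corresponding application.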
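A small sketch of locating those files from the command line. It builds a mock work directory so that it runs anywhere; the layout (one folder per application, one subfolder per executor) and the application ID are assumptions made for the demo. On a real worker, point WORK_DIR at SPARK_WORKER_DIR (default SPARK_HOME/work) instead.

```shell
# Mock work directory standing in for SPARK_WORKER_DIR (assumption for this demo).
WORK_DIR=$(mktemp -d)
mkdir -p "$WORK_DIR/app-20240101000000-0000/0"   # made-up application ID, executor 0
echo "sample error output" > "$WORK_DIR/app-20240101000000-0000/0/stderr"
echo "sample standard output" > "$WORK_DIR/app-20240101000000-0000/0/stdout"

# List every stderr/stdout file below the work directory.
log_files=$(find "$WORK_DIR" -type f \( -name stderr -o -name stdout \) | sort)
echo "$log_files"
```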