Integrating Elasticsearch 6.8.1 with Spring Boot 2.x

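A few important Elasticsearch concepts:

- Document: a document is the basic unit of information that can be indexed, expressed as JSON.

- Index: an index is a collection of documents with similar characteristics. It is identified by a name, which must be all lowercase.

- Type: a logical category/partition inside an index. Before Elasticsearch 6.0 an index could define several types; an index created in 6.x (including the 6.8.1 used here) can hold only one.

By analogy, an Index can be seen as a database, a Type as a table in that database, and a Document as one row of the table.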
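To make the analogy concrete, here is a toy in-memory model in plain Java. It only mirrors the naming (index, then type, then id, then document). The class `EsAnalogy` and its methods are invented for this illustration and say nothing about how Elasticsearch actually stores data.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

// Hypothetical toy model: index -> type -> id -> document (a map of fields).
final class EsAnalogy {
    private final Map<String, Map<String, Map<String, Map<String, Object>>>> indices =
            new LinkedHashMap<>();

    // Store a document under index/type/id, like inserting a row into database/table.
    void index(String index, String type, String id, Map<String, Object> document) {
        if (!index.equals(index.toLowerCase(Locale.ROOT))) {
            throw new IllegalArgumentException("index name must be lowercase: " + index);
        }
        indices.computeIfAbsent(index, k -> new LinkedHashMap<>())
               .computeIfAbsent(type, k -> new LinkedHashMap<>())
               .put(id, document);
    }

    // Fetch a document again; returns null when nothing is stored at that position.
    Map<String, Object> get(String index, String type, String id) {
        Map<String, Map<String, Map<String, Object>>> types = indices.get(index);
        if (types == null) {
            return null;
        }
        Map<String, Map<String, Object>> documents = types.get(type);
        return documents == null ? null : documents.get(id);
    }

    public static void main(String[] args) {
        EsAnalogy es = new EsAnalogy();
        Map<String, Object> article = new LinkedHashMap<>();
        article.put("title", "How Google does code review");
        es.index("blog", "article", "1", article);
        System.out.println(es.get("blog", "article", "1"));
    }
}
```

The `blog` / `article` pair is the same index and type that the `Article` entity later in this post declares.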

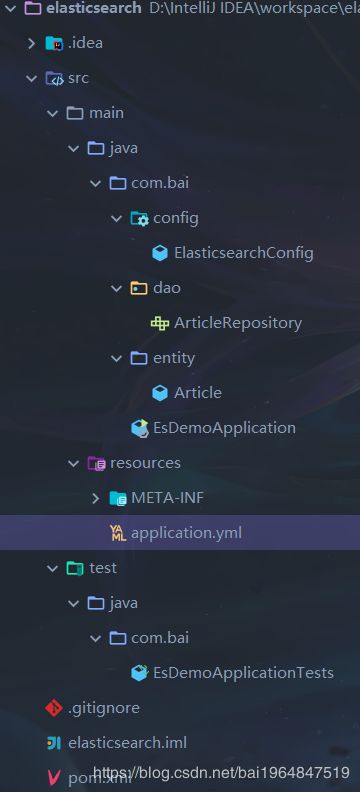

Project structure

pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.bai</groupId>
    <artifactId>elasticsearch</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <packaging>jar</packaging>

    <name>es-demo</name>
    <description>ElasticSearch Demo project for Spring Boot</description>

    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.2.6.RELEASE</version>
        <relativePath/>
    </parent>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
        <java.version>1.8</java.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>

        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>

        <dependency>
            <groupId>org.springframework.data</groupId>
            <artifactId>spring-data-elasticsearch</artifactId>
            <version>3.2.1.RELEASE</version>
        </dependency>

        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <version>1.18.12</version>
            <scope>provided</scope>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>

ElasticsearchConfig

import lombok.extern.slf4j.Slf4j;
import org.elasticsearch.client.Client;
import org.elasticsearch.common.settings.Settings;
import org.elasticsearch.common.transport.TransportAddress;
import org.elasticsearch.transport.client.PreBuiltTransportClient;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.elasticsearch.core.ElasticsearchOperations;
import org.springframework.data.elasticsearch.core.ElasticsearchTemplate;
import org.springframework.data.elasticsearch.repository.config.EnableElasticsearchRepositories;

import java.net.InetAddress;

@Slf4j
@Configuration
@EnableElasticsearchRepositories(basePackages = "com.bai.dao")
public class ElasticsearchConfig {

    @Value("${elasticsearch.host}")
    private String esHost;

    @Value("${elasticsearch.port}")
    private int esPort;

    @Value("${elasticsearch.clustername}")
    private String esClusterName;

    @Value("${elasticsearch.search.pool.size}")
    private Integer threadPoolSearchSize;

    @Bean
    public Client client() throws Exception {
        Settings esSettings = Settings.builder()
                .put("cluster.name", esClusterName)
                // Enable sniffing so the client discovers the nodes of the ES cluster; set to false when not running a cluster
                .put("client.transport.sniff", true)
                // Size of the search thread pool
                .put("thread_pool.search.size", threadPoolSearchSize)
                .build();
        return new PreBuiltTransportClient(esSettings)
                .addTransportAddress(new TransportAddress(InetAddress.getByName(esHost), esPort));
    }

    @Bean(name = "elasticsearchTemplate")
    public ElasticsearchOperations elasticsearchTemplateCustom() throws Exception {
        try {
            return new ElasticsearchTemplate(client());
        } catch (Exception e) {
            // Log the cause and rethrow; repeating the same call here would only fail again
            log.error("Failed to initialize ElasticsearchTemplate", e);
            throw e;
        }
    }
}

ArticleRepository

import com.bai.entity.Article;
import org.springframework.data.elasticsearch.repository.ElasticsearchRepository;
import org.springframework.stereotype.Repository;

@Repository
public interface ArticleRepository extends ElasticsearchRepository<Article, String> {
}

Article

import lombok.Data;
import lombok.ToString;
import org.springframework.data.elasticsearch.annotations.DateFormat;
import org.springframework.data.elasticsearch.annotations.Document;
import org.springframework.data.elasticsearch.annotations.Field;
import org.springframework.data.elasticsearch.annotations.FieldType;

@Data
@ToString
@Document(indexName = "blog", type = "article")
public class Article {

    /**
     * Primary key ID
     */
    @Field(type = FieldType.Keyword)
    private String id;

    /**
     * Article title
     */
    @Field(type = FieldType.Text, analyzer = "ik_max_word", searchAnalyzer = "ik_max_word")
    private String title;

    /**
     * Article content
     */
    @Field(type = FieldType.Text, analyzer = "ik_max_word", searchAnalyzer = "ik_max_word")
    private String content;

    /**
     * Creation time
     */
    @Field(type = FieldType.Date, pattern = "yyyy-MM-dd HH:mm:ss", format = DateFormat.custom)
    private String createTime;
}

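Notes on the spring-data-elasticsearch annotations used in the class above:

@Document marks the class as the definition of an ES document.

- indexName: the index name, normally all lowercase; think of it as the database name

- type: the type; think of it as the table name

- useServerConfiguration: whether to use the configuration defined on the server

- shards: number of shards the index is split into in cluster mode, default 5

- replicas: number of replica copies of the data, default 1

- refreshInterval: how often the index is refreshed, default 1s

- indexStoreType: index storage type, default fs

- createIndex: whether to create the index, default true, meaning the index is created automatically when no index named indexName exists

@Field describes a field of the document. It corresponds to the mappings of an ES document, i.e. it sets the field type.

- type: the field type. By default it is inferred from the Java type; it can also be set explicitly through the FieldType enum

- index: whether an inverted index is built for the field, default true. Set it to false if documents should not be found by searching on this field

- pattern: used on date fields together with a custom format, e.g. format = DateFormat.custom, pattern = "yyyy-MM-dd HH:mm:ss:SSS"

- searchAnalyzer: the analyzer used at search time. The ik analyzer has only two modes, ik_smart (coarse-grained) and ik_max_word (fine-grained); see the ik documentation for the exact differences

- analyzer: the analyzer used at index time; with ik this is either ik_smart or ik_max_word

- store: whether the field value is stored separately from the _source field, default false (not stored separately)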
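Because `createTime` is a `String` mapped as a date with `pattern = "yyyy-MM-dd HH:mm:ss"`, the text written into that field has to follow the pattern exactly. The sketch below uses only `java.time` to show what a conforming value looks like; the class name `CreateTimeFormat` is made up for this example, and `DateTimeFormatter` merely stands in for the date parsing Elasticsearch does on its side.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Hypothetical helper: formats and parses values for the createTime field.
final class CreateTimeFormat {
    // Same pattern string as in @Field(pattern = "yyyy-MM-dd HH:mm:ss") on Article.createTime.
    static final DateTimeFormatter FORMATTER = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // Turn a timestamp into the text form the mapping expects.
    static String format(LocalDateTime time) {
        return FORMATTER.format(time);
    }

    // Parse the text form back; throws DateTimeParseException if the text does not match the pattern.
    static LocalDateTime parse(String text) {
        return LocalDateTime.parse(text, FORMATTER);
    }

    public static void main(String[] args) {
        String text = format(LocalDateTime.of(2020, 4, 9, 14, 48, 47));
        System.out.println(text);                 // 2020-04-09 14:48:47
        System.out.println(parse(text).getHour()); // 14
    }
}
```

A value such as `2020-04-09T14:48:47` would not match this pattern, which is why the test class further down formats the date with the same pattern string before saving.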

application.yml

# Elasticsearch configuration
elasticsearch:
  host: 127.0.0.1
  port: 9300
  clustername: elasticsearch
  search:
    pool:
      size: 5
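The nesting in this file is what produces the dotted names used in the `@Value` placeholders of `ElasticsearchConfig`, such as `${elasticsearch.search.pool.size}`. As a rough illustration of that mapping (not Spring's actual implementation), the following plain-Java sketch flattens a nested map into dotted keys. `DottedKeys` and its methods are hypothetical names.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: flattens nested maps (as parsed from YAML) into dotted property names.
final class DottedKeys {
    static Map<String, Object> flatten(Map<String, Object> nested) {
        Map<String, Object> flat = new LinkedHashMap<>();
        flattenInto("", nested, flat);
        return flat;
    }

    @SuppressWarnings("unchecked")
    private static void flattenInto(String prefix, Map<String, Object> node, Map<String, Object> out) {
        for (Map.Entry<String, Object> entry : node.entrySet()) {
            String key = prefix.isEmpty() ? entry.getKey() : prefix + "." + entry.getKey();
            if (entry.getValue() instanceof Map) {
                // Descend one level and extend the dotted prefix.
                flattenInto(key, (Map<String, Object>) entry.getValue(), out);
            } else {
                out.put(key, entry.getValue());
            }
        }
    }

    public static void main(String[] args) {
        Map<String, Object> pool = new LinkedHashMap<>();
        pool.put("size", 5);
        Map<String, Object> search = new LinkedHashMap<>();
        search.put("pool", pool);
        Map<String, Object> elasticsearch = new LinkedHashMap<>();
        elasticsearch.put("host", "127.0.0.1");
        elasticsearch.put("port", 9300);
        elasticsearch.put("clustername", "elasticsearch");
        elasticsearch.put("search", search);
        Map<String, Object> root = new LinkedHashMap<>();
        root.put("elasticsearch", elasticsearch);

        // Prints one "key = value" line per leaf, e.g. elasticsearch.search.pool.size = 5
        flatten(root).forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

If the indentation of `search`, `pool` and `size` is lost, the key `elasticsearch.search.pool.size` no longer exists and the placeholder cannot be resolved at startup.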

EsDemoApplicationTests

import com.bai.dao.ArticleRepository;
import com.bai.entity.Article;
import lombok.extern.slf4j.Slf4j;
import org.elasticsearch.index.query.MatchPhraseQueryBuilder;
import org.elasticsearch.index.query.MatchQueryBuilder;
import org.elasticsearch.index.query.QueryBuilders;
import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.data.domain.PageRequest;
import org.springframework.data.domain.Pageable;
import org.springframework.data.domain.Sort;

import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Iterator;
import java.util.Optional;
import java.util.UUID;

@Slf4j
@SpringBootTest
public class EsDemoApplicationTests {

    @Autowired
    private ArticleRepository articleRepository;

    /**
     * Save articles to ES
     */
    @Test
    void saveArticle() {
        String title = "谷歌是如何做Code Review的";
        String content = "Code Review的主要目的是始终保证随着时间的推移,谷歌代码越来越健康,所有Code Review的工具和流程也是针对于此设计的。";
        Article article = createArticle(title, content);
        articleRepository.save(article);
        // 44db8516-1565-4fea-b19b-cc550b47f85d
        System.out.println(article.getId());

        title = "iOS 13大更新曝光:苹果手机或要调整位置权限";
        content = "据外媒报道称,苹果正在对iOS 13系统进行调整,主要是修复之前出现的Bug,并且还打算iOS 13的位置权限设置进行调整,因为这个细节,他们正在接受反垄断调查。";
        Article article2 = createArticle(title, content);
        articleRepository.save(article2);
        // 73a50b15-e1c9-4afd-b675-fba2ffdc6e3e
        System.out.println(article2.getId());

        title = "日媒:中国手机为何在东南亚受欢迎?";
        content = "人类可能地球上是最不珍惜粮食的物种之一,根据全球农业与食品营养问题委员会的统计数据,全球每年食物浪费总量达到 13 亿吨,其中超过一半的水果和蔬菜被浪费。";
        Article article3 = createArticle(title, content);
        articleRepository.save(article3);
        // feec732b-bf04-4c23-97fa-3d28ffd23a83
        System.out.println(article3.getId());
    }

    private static Article createArticle(String title, String content) {
        // Use a random UUID as a stand-in ID
        UUID uuid = UUID.randomUUID();
        String id = uuid.toString();
        // Build the Article
        Article article = new Article();
        article.setId(id);
        article.setTitle(title);
        article.setContent(content);
        article.setCreateTime(new SimpleDateFormat("yyyy-MM-dd HH:mm:ss").format(new Date()));
        return article;
    }

    /**
     * Find by ID
     */
    @Test
    void findArticleById() {
        Optional<Article> articleDaoById = articleRepository.findById("feec732b-bf04-4c23-97fa-3d28ffd23a83");
        /* Article(id=feec732b-bf04-4c23-97fa-3d28ffd23a83,
           title=日媒:中国手机为何在东南亚受欢迎?,
           content=人类可能地球上是最不珍惜粮食的物种之一,根据全球农业与食品营养问题委员会的统计数据,全球每年食物浪费总量达到 13 亿吨,其中超过一半的水果和蔬菜被浪费。,
           createTime=2020-04-09 14:48:47)
         */
        System.out.println(articleDaoById.get());
    }

    /**
     * Search article titles by keyword
     * (analyzed match)
     */
    @Test
    void findArticleByTitle() {
        String titleKeyWord = "谷歌中国";
        // matchQuery analyzes the keyword before searching: 谷歌中国 -> 谷歌 中国
        MatchQueryBuilder matchQueryBuilder = QueryBuilders.matchQuery("title", titleKeyWord);
        Iterable<Article> search = articleRepository.search(matchQueryBuilder);
        Iterator<Article> iterator = search.iterator();
        while (iterator.hasNext()) {
            Article next = iterator.next();
            /*
             * Article(id=44db8516-1565-4fea-b19b-cc550b47f85d,
             * title=谷歌是如何做Code Review的,
             * content=Code Review的主要目的是始终保证随着时间的推移,谷歌代码越来越健康,所有Code Review的工具和流程也是针对于此设计的。,
             * createTime=2020-04-09 14:01:00)
             * Article(id=f8df737a-fc46-4471-8b80-e2756ca8c85c,
             * title=日媒:中国手机为何在东南亚受欢迎?,
             * content=人类可能地球上是最不珍惜粮食的物种之一,根据全球农业与食品营养问题委员会的统计数据,全球每年食物浪费总量达到 13 亿吨,其中超过一半的水果和蔬菜被浪费。,
             * createTime=2020-04-09 14:02:55)
             * ... ...
             */
            System.out.println(next);
        }
    }

    /**
     * Search article titles by keyword
     * (phrase match)
     */
    @Test
    void findArticleByTitle2() {
        String titleKeyWord = "谷歌";
        // matchPhraseQuery treats the keyword as a phrase: the analyzed terms must all appear, adjacent and in the same order
        MatchPhraseQueryBuilder matchPhraseQueryBuilder = QueryBuilders.matchPhraseQuery("title", titleKeyWord);
        Iterable<Article> search = articleRepository.search(matchPhraseQueryBuilder);
        Iterator<Article> iterator = search.iterator();
        while (iterator.hasNext()) {
            Article next = iterator.next();
            /*
             * Article(id=cf7037d7-1b0e-4568-be94-221371903651,
             * title=谷歌是如何做Code Review的,
             * content=Code Review的主要目的是始终保证随着时间的推移,谷歌代码越来越健康,所有Code Review的工具和流程也是针对于此设计的。,
             * createTime=2020-04-09 11:00:00)
             * Article(id=1fb7c348-3fa2-4c05-aaf3-c677338afd31,
             * title=谷歌是如何做Code Review的,
             * content=Code Review的主要目的是始终保证随着时间的推移,谷歌代码越来越健康,所有Code Review的工具和流程也是针对于此设计的。,
             * createTime=2020-04-09 14:01:00)
             * ... ...
             */
            System.out.println(next);
        }
    }

    /**
     * Search article titles by keyword
     * with paging and sorting.
     * Deep paging should be avoided in ES wherever possible.
     */
    @Test
    void findArticleByTitlePage() {
        Sort createTime = Sort.by("createTime").ascending();
        Pageable pageable = PageRequest.of(0, 1, createTime);
        String titleKeyWord = "谷歌中国";
        // matchQuery analyzes the keyword before searching: 谷歌中国 -> 谷歌 中国
        MatchQueryBuilder matchQueryBuilder = QueryBuilders.matchQuery("title", titleKeyWord);
        Iterable<Article> search = articleRepository.search(matchQueryBuilder, pageable);
        Iterator<Article> iterator = search.iterator();
        while (iterator.hasNext()) {
            Article next = iterator.next();
            /*
             * Article(id=44db8516-1565-4fea-b19b-cc550b47f85d,
             * title=谷歌是如何做Code Review的,
             * content=Code Review的主要目的是始终保证随着时间的推移,谷歌代码越来越健康,所有Code Review的工具和流程也是针对于此设计的。,
             * createTime=2020-04-09 14:01:00)
             * ... ...
             */
            System.out.println(next);
        }
    }

    /**
     * Delete everything
     */
    @Test
    void deleteAllArticle() {
        articleRepository.deleteAll();
    }
}
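On the advice in `findArticleByTitlePage` to avoid deep paging: a page request such as `PageRequest.of(page, size)` ends up as an offset (`from = page * size`) plus a `size`, and Elasticsearch rejects a search whose `from + size` exceeds the `index.max_result_window` index setting, which defaults to 10000. The plain-Java sketch below only does that arithmetic; `PagingWindow` and its methods are names invented for this example, and it assumes the default window has not been changed on the index.

```java
// Hypothetical helper: checks whether a zero-based page fits inside the default result window.
final class PagingWindow {
    // Default value of the index.max_result_window index setting.
    static final int DEFAULT_MAX_RESULT_WINDOW = 10_000;

    // Offset of the first hit of a zero-based page.
    static long from(int page, int size) {
        return (long) page * size;
    }

    // True when from + size stays within the default window.
    static boolean fitsWindow(int page, int size) {
        return from(page, size) + size <= DEFAULT_MAX_RESULT_WINDOW;
    }

    public static void main(String[] args) {
        System.out.println(fitsWindow(0, 1));     // true:  0 + 1
        System.out.println(fitsWindow(999, 10));  // true:  9990 + 10 = 10000
        System.out.println(fitsWindow(1000, 10)); // false: 10000 + 10 = 10010
    }
}
```

Even inside the window, every shard has to collect and sort `from + size` hits for each request, so the cost grows with the page number. That is the practical reason to keep pages shallow.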