Consumer Liveness Detection Mechanism

Consumer liveness detection; an introduction to consumer livelock and how to avoid it

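As we know, many things can trigger a rebalance, including a consumer client joining or leaving the group. When a client disconnects deliberately, it notifies the coordinator, which removes it from the group. For every other case, Kafka provides several mechanisms for detecting consumer liveness, that is, whether a consumer is still active and able to consume.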

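The familiar ones are session.timeout.ms and heartbeat.interval.ms. The former is the longest the broker will wait without receiving a heartbeat before it treats the consumer as failed; the latter is how often the consumer client sends heartbeats to the coordinator. In my own experience, the effect of these two parameters on deciding whether a consumer is alive is not very direct or obvious in practice; they are more useful for keeping the frequency of rebalances under control.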
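As a rough illustration of how the two settings relate, the sketch below builds consumer properties in which heartbeat.interval.ms is one third of session.timeout.ms, the ratio suggested in Kafka's own description of heartbeat.interval.ms. HeartbeatSettings and forSessionTimeout are hypothetical names, and the keys are plain strings so that the snippet runs without the Kafka client on the classpath.

```java
import java.util.Properties;

/** Hypothetical helper, not part of Kafka: derives heartbeat.interval.ms from session.timeout.ms. */
final class HeartbeatSettings {

    private HeartbeatSettings() { }

    /** Returns properties with heartbeat.interval.ms set to one third of session.timeout.ms. */
    static Properties forSessionTimeout(int sessionTimeoutMs) {
        if (sessionTimeoutMs < 3) {
            throw new IllegalArgumentException("session.timeout.ms too small: " + sessionTimeoutMs);
        }
        Properties props = new Properties();
        props.setProperty("session.timeout.ms", Integer.toString(sessionTimeoutMs));
        // Integer division keeps the heartbeat interval at or below one third of the session timeout.
        props.setProperty("heartbeat.interval.ms", Integer.toString(sessionTimeoutMs / 3));
        return props;
    }

    public static void main(String[] args) {
        Properties props = forSessionTimeout(30_000);
        System.out.println(props.getProperty("session.timeout.ms"));    // 30000
        System.out.println(props.getProperty("heartbeat.interval.ms")); // 10000
    }
}
```

A longer session timeout tolerates more missed heartbeats before the coordinator removes the consumer, at the cost of slower failure detection.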

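Newer versions of Kafka send heartbeats from a dedicated background thread. A consumer client can therefore keep sending heartbeats successfully while the consumer itself is stuck in a livelock and is not actually processing any data. Kafka guards against this case with the max.poll.interval.ms parameter.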
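The idea behind max.poll.interval.ms can be pictured with a small model: heartbeats show that the process is running, while the time since the last poll() shows whether the application is still making progress. The class below is only a simplified sketch of that second check. PollDeadline and its methods are made-up names and do not reflect how the Kafka client is implemented.

```java
/** Simplified model of the max.poll.interval.ms check; not Kafka's actual implementation. */
final class PollDeadline {
    private final long maxPollIntervalMs;
    private long lastPollMs;

    PollDeadline(long maxPollIntervalMs, long nowMs) {
        this.maxPollIntervalMs = maxPollIntervalMs;
        this.lastPollMs = nowMs;
    }

    /** Called whenever the application invokes poll(). */
    void onPoll(long nowMs) {
        lastPollMs = nowMs;
    }

    /** True if more than maxPollIntervalMs has passed since the last poll(). */
    boolean expired(long nowMs) {
        return nowMs - lastPollMs > maxPollIntervalMs;
    }

    public static void main(String[] args) {
        PollDeadline deadline = new PollDeadline(300, 0);
        System.out.println(deadline.expired(300)); // false: exactly at the limit
        System.out.println(deadline.expired(301)); // true: processing took too long
        deadline.onPoll(301);
        System.out.println(deadline.expired(500)); // false: the timer restarted at 301
    }
}
```

Time is passed in explicitly so that the check can be exercised without sleeping or reading the system clock.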

The consumer's configuration parameters are defined in the ConsumerConfig class, which lists each parameter's default value and a description:

public class ConsumerConfig extends AbstractConfig {

private static final ConfigDef CONFIG;

/*

* NOTE: DO NOT CHANGE EITHER CONFIG STRINGS OR THEIR JAVA VARIABLE NAMES AS

* THESE ARE PART OF THE PUBLIC API AND CHANGE WILL BREAK USER CODE.

*/

/**

* group.id

*/

public static final String GROUP_ID_CONFIG = "group.id";

private static final String GROUP_ID_DOC = "A unique string that identifies the consumer group this consumer belongs to. This property is required if the consumer uses either the group management functionality by using subscribe(topic) or the Kafka-based offset management strategy.";

/**

* group.instance.id

*/

public static final String GROUP_INSTANCE_ID_CONFIG = "group.instance.id";

private static final String GROUP_INSTANCE_ID_DOC = "A unique identifier of the consumer instance provided by end user. " +

"Only non-empty strings are permitted. If set, the consumer is treated as a static member, " +

"which means that only one instance with this ID is allowed in the consumer group at any time. " +

"This can be used in combination with a larger session timeout to avoid group rebalances caused by transient unavailability " +

"(e.g. process restarts). If not set, the consumer will join the group as a dynamic member, which is the traditional behavior.";

/** max.poll.records */

public static final String MAX_POLL_RECORDS_CONFIG = "max.poll.records";

private static final String MAX_POLL_RECORDS_DOC = "The maximum number of records returned in a single call to poll().";

/** max.poll.interval.ms */

public static final String MAX_POLL_INTERVAL_MS_CONFIG = "max.poll.interval.ms";

private static final String MAX_POLL_INTERVAL_MS_DOC = "The maximum delay between invocations of poll() when using " +

"consumer group management. This places an upper bound on the amount of time that the consumer can be idle " +

"before fetching more records. If poll() is not called before expiration of this timeout, then the consumer " +

"is considered failed and the group will rebalance in order to reassign the partitions to another member. ";

/**

* session.timeout.ms

*/

public static final String SESSION_TIMEOUT_MS_CONFIG = "session.timeout.ms";

private static final String SESSION_TIMEOUT_MS_DOC = "The timeout used to detect consumer failures when using " +

"Kafka's group management facility. The consumer sends periodic heartbeats to indicate its liveness " +

"to the broker. If no heartbeats are received by the broker before the expiration of this session timeout, " +

"then the broker will remove this consumer from the group and initiate a rebalance. Note that the value " +

"must be in the allowable range as configured in the broker configuration by group.min.session.timeout.ms " +

"and group.max.session.timeout.ms.";

/**

* heartbeat.interval.ms

*/

public static final String HEARTBEAT_INTERVAL_MS_CONFIG = "heartbeat.interval.ms";

private static final String HEARTBEAT_INTERVAL_MS_DOC = "The expected time between heartbeats to the consumer " +

"coordinator when using Kafka's group management facilities. Heartbeats are used to ensure that the " +

"consumer's session stays active and to facilitate rebalancing when new consumers join or leave the group. " +

"The value must be set lower than session.timeout.ms, but typically should be set no higher " +

"than 1/3 of that value. It can be adjusted even lower to control the expected time for normal rebalances.";

/**

* bootstrap.servers

*/

public static final String BOOTSTRAP_SERVERS_CONFIG = CommonClientConfigs.BOOTSTRAP_SERVERS_CONFIG;

/** client.dns.lookup */

public static final String CLIENT_DNS_LOOKUP_CONFIG = CommonClientConfigs.CLIENT_DNS_LOOKUP_CONFIG;

/**

* enable.auto.commit

*/

public static final String ENABLE_AUTO_COMMIT_CONFIG = "enable.auto.commit";

private static final String ENABLE_AUTO_COMMIT_DOC = "If true the consumer's offset will be periodically committed in the background.";

/**

* auto.commit.interval.ms

*/

public static final String AUTO_COMMIT_INTERVAL_MS_CONFIG = "auto.commit.interval.ms";

private static final String AUTO_COMMIT_INTERVAL_MS_DOC = "The frequency in milliseconds that the consumer offsets are auto-committed to Kafka if enable.auto.commit is set to true.";

/**

* partition.assignment.strategy

*/

public static final String PARTITION_ASSIGNMENT_STRATEGY_CONFIG = "partition.assignment.strategy";

private static final String PARTITION_ASSIGNMENT_STRATEGY_DOC = "The class name of the partition assignment strategy that the client will use to distribute partition ownership amongst consumer instances when group management is used";

/**

* auto.offset.reset

*/

public static final String AUTO_OFFSET_RESET_CONFIG = "auto.offset.reset";

public static final String AUTO_OFFSET_RESET_DOC = "What to do when there is no initial offset in Kafka or if the current offset does not exist any more on the server (e.g. because that data has been deleted): " +

"- earliest: automatically reset the offset to the earliest offset " +

"- latest: automatically reset the offset to the latest offset " +

"- none: throw exception to the consumer if no previous offset is found for the consumer's group " +

"- anything else: throw exception to the consumer.";

/**

* fetch.min.bytes

*/

public static final String FETCH_MIN_BYTES_CONFIG = "fetch.min.bytes";

private static final String FETCH_MIN_BYTES_DOC = "The minimum amount of data the server should return for a fetch request. If insufficient data is available the request will wait for that much data to accumulate before answering the request. The default setting of 1 byte means that fetch requests are answered as soon as a single byte of data is available or the fetch request times out waiting for data to arrive. Setting this to something greater than 1 will cause the server to wait for larger amounts of data to accumulate which can improve server throughput a bit at the cost of some additional latency.";

/**

* fetch.max.bytes

*/

public static final String FETCH_MAX_BYTES_CONFIG = "fetch.max.bytes";

private static final String FETCH_MAX_BYTES_DOC = "The maximum amount of data the server should return for a fetch request. " +

"Records are fetched in batches by the consumer, and if the first record batch in the first non-empty partition of the fetch is larger than " +

"this value, the record batch will still be returned to ensure that the consumer can make progress. As such, this is not a absolute maximum. " +

"The maximum record batch size accepted by the broker is defined via message.max.bytes (broker config) or " +

"max.message.bytes (topic config). Note that the consumer performs multiple fetches in parallel.";

public static final int DEFAULT_FETCH_MAX_BYTES = 50 * 1024 * 1024;

/**

* fetch.max.wait.ms

*/

public static final String FETCH_MAX_WAIT_MS_CONFIG = "fetch.max.wait.ms";

private static final String FETCH_MAX_WAIT_MS_DOC = "The maximum amount of time the server will block before answering the fetch request if there isn't sufficient data to immediately satisfy the requirement given by fetch.min.bytes.";

/** metadata.max.age.ms */

public static final String METADATA_MAX_AGE_CONFIG = CommonClientConfigs.METADATA_MAX_AGE_CONFIG;

/**

* max.partition.fetch.bytes

*/

public static final String MAX_PARTITION_FETCH_BYTES_CONFIG = "max.partition.fetch.bytes";

private static final String MAX_PARTITION_FETCH_BYTES_DOC = "The maximum amount of data per-partition the server " +

"will return. Records are fetched in batches by the consumer. If the first record batch in the first non-empty " +

"partition of the fetch is larger than this limit, the " +

"batch will still be returned to ensure that the consumer can make progress. The maximum record batch size " +

"accepted by the broker is defined via message.max.bytes (broker config) or " +

"max.message.bytes (topic config). See " + FETCH_MAX_BYTES_CONFIG + " for limiting the consumer request size.";

public static final int DEFAULT_MAX_PARTITION_FETCH_BYTES = 1 * 1024 * 1024;

/** send.buffer.bytes */

public static final String SEND_BUFFER_CONFIG = CommonClientConfigs.SEND_BUFFER_CONFIG;

/** receive.buffer.bytes */

public static final String RECEIVE_BUFFER_CONFIG = CommonClientConfigs.RECEIVE_BUFFER_CONFIG;

/**

* client.id

*/

public static final String CLIENT_ID_CONFIG = CommonClientConfigs.CLIENT_ID_CONFIG;

/**

* client.rack

*/

public static final String CLIENT_RACK_CONFIG = CommonClientConfigs.CLIENT_RACK_CONFIG;

/**

* reconnect.backoff.ms

*/

public static final String RECONNECT_BACKOFF_MS_CONFIG = CommonClientConfigs.RECONNECT_BACKOFF_MS_CONFIG;

/**

* reconnect.backoff.max.ms

*/

public static final String RECONNECT_BACKOFF_MAX_MS_CONFIG = CommonClientConfigs.RECONNECT_BACKOFF_MAX_MS_CONFIG;

/**

* retry.backoff.ms

*/

public static final String RETRY_BACKOFF_MS_CONFIG = CommonClientConfigs.RETRY_BACKOFF_MS_CONFIG;

/**

* metrics.sample.window.ms

*/

public static final String METRICS_SAMPLE_WINDOW_MS_CONFIG = CommonClientConfigs.METRICS_SAMPLE_WINDOW_MS_CONFIG;

/**

* metrics.num.samples

*/

public static final String METRICS_NUM_SAMPLES_CONFIG = CommonClientConfigs.METRICS_NUM_SAMPLES_CONFIG;

/**

* metrics.log.level

*/

public static final String METRICS_RECORDING_LEVEL_CONFIG = CommonClientConfigs.METRICS_RECORDING_LEVEL_CONFIG;

/**

* metric.reporters

*/

public static final String METRIC_REPORTER_CLASSES_CONFIG = CommonClientConfigs.METRIC_REPORTER_CLASSES_CONFIG;

/**

* check.crcs

*/

public static final String CHECK_CRCS_CONFIG = "check.crcs";

private static final String CHECK_CRCS_DOC = "Automatically check the CRC32 of the records consumed. This ensures no on-the-wire or on-disk corruption to the messages occurred. This check adds some overhead, so it may be disabled in cases seeking extreme performance.";

/** key.deserializer */

public static final String KEY_DESERIALIZER_CLASS_CONFIG = "key.deserializer";

public static final String KEY_DESERIALIZER_CLASS_DOC = "Deserializer class for key that implements the org.apache.kafka.common.serialization.Deserializer interface.";

/** value.deserializer */

public static final String VALUE_DESERIALIZER_CLASS_CONFIG = "value.deserializer";

public static final String VALUE_DESERIALIZER_CLASS_DOC = "Deserializer class for value that implements the org.apache.kafka.common.serialization.Deserializer interface.";

/** connections.max.idle.ms */

public static final String CONNECTIONS_MAX_IDLE_MS_CONFIG = CommonClientConfigs.CONNECTIONS_MAX_IDLE_MS_CONFIG;

/** request.timeout.ms */

public static final String REQUEST_TIMEOUT_MS_CONFIG = CommonClientConfigs.REQUEST_TIMEOUT_MS_CONFIG;

private static final String REQUEST_TIMEOUT_MS_DOC = CommonClientConfigs.REQUEST_TIMEOUT_MS_DOC;

/** default.api.timeout.ms */

public static final String DEFAULT_API_TIMEOUT_MS_CONFIG = "default.api.timeout.ms";

public static final String DEFAULT_API_TIMEOUT_MS_DOC = "Specifies the timeout (in milliseconds) for consumer APIs that could block. This configuration is used as the default timeout for all consumer operations that do not explicitly accept a timeout parameter.";

/** interceptor.classes */

public static final String INTERCEPTOR_CLASSES_CONFIG = "interceptor.classes";

public static final String INTERCEPTOR_CLASSES_DOC = "A list of classes to use as interceptors. "

+ "Implementing the org.apache.kafka.clients.consumer.ConsumerInterceptor interface allows you to intercept (and possibly mutate) records "

+ "received by the consumer. By default, there are no interceptors.";

/** exclude.internal.topics */

public static final String EXCLUDE_INTERNAL_TOPICS_CONFIG = "exclude.internal.topics";

private static final String EXCLUDE_INTERNAL_TOPICS_DOC = "Whether internal topics matching a subscribed pattern should " +

"be excluded from the subscription. It is always possible to explicitly subscribe to an internal topic.";

public static final boolean DEFAULT_EXCLUDE_INTERNAL_TOPICS = true;

/**

* internal.leave.group.on.close

* Whether or not the consumer should leave the group on close. If set to false then a rebalance

* won't occur until session.timeout.ms expires.

*

*

* Note: this is an internal configuration and could be changed in the future in a backward incompatible way

*

*/

static final String LEAVE_GROUP_ON_CLOSE_CONFIG = "internal.leave.group.on.close";

/** isolation.level */

public static final String ISOLATION_LEVEL_CONFIG = "isolation.level";

public static final String ISOLATION_LEVEL_DOC = "Controls how to read messages written transactionally. If set to read_committed, consumer.poll() will only return" +

" transactional messages which have been committed. If set to read_uncommitted' (the default), consumer.poll() will return all messages, even transactional messages" +

" which have been aborted. Non-transactional messages will be returned unconditionally in either mode. Messages will always be returned in offset order. Hence, in " +

" read_committed mode, consumer.poll() will only return messages up to the last stable offset (LSO), which is the one less than the offset of the first open transaction." +

" In particular any messages appearing after messages belonging to ongoing transactions will be withheld until the relevant transaction has been completed. As a result, read_committed" +

" consumers will not be able to read up to the high watermark when there are in flight transactions. Further, when in read_committed the seekToEnd method will" +

" return the LSO";

public static final String DEFAULT_ISOLATION_LEVEL = IsolationLevel.READ_UNCOMMITTED.toString().toLowerCase(Locale.ROOT);

/** allow.auto.create.topics */

public static final String ALLOW_AUTO_CREATE_TOPICS_CONFIG = "allow.auto.create.topics";

private static final String ALLOW_AUTO_CREATE_TOPICS_DOC = "Allow automatic topic creation on the broker when" +

" subscribing to or assigning a topic. A topic being subscribed to will be automatically created only if the" +

" broker allows for it using `auto.create.topics.enable` broker configuration. This configuration must" +

" be set to `false` when using brokers older than 0.11.0";

public static final boolean DEFAULT_ALLOW_AUTO_CREATE_TOPICS = true;

static {

CONFIG = new ConfigDef().define(BOOTSTRAP_SERVERS_CONFIG,

Type.LIST,

Collections.emptyList(),

new ConfigDef.NonNullValidator(),

Importance.HIGH,

CommonClientConfigs.BOOTSTRAP_SERVERS_DOC)

.define(CLIENT_DNS_LOOKUP_CONFIG,

Type.STRING,

ClientDnsLookup.DEFAULT.toString(),

in(ClientDnsLookup.DEFAULT.toString(),

ClientDnsLookup.USE_ALL_DNS_IPS.toString(),

ClientDnsLookup.RESOLVE_CANONICAL_BOOTSTRAP_SERVERS_ONLY.toString()),

Importance.MEDIUM,

CommonClientConfigs.CLIENT_DNS_LOOKUP_DOC)

.define(GROUP_ID_CONFIG, Type.STRING, null, Importance.HIGH, GROUP_ID_DOC)

.define(GROUP_INSTANCE_ID_CONFIG,

Type.STRING,

null,

Importance.MEDIUM,

GROUP_INSTANCE_ID_DOC)

.define(SESSION_TIMEOUT_MS_CONFIG,

Type.INT,

10000,

Importance.HIGH,

SESSION_TIMEOUT_MS_DOC)

.define(HEARTBEAT_INTERVAL_MS_CONFIG,

Type.INT,

3000,

Importance.HIGH,

HEARTBEAT_INTERVAL_MS_DOC)

.define(PARTITION_ASSIGNMENT_STRATEGY_CONFIG,

Type.LIST,

Collections.singletonList(RangeAssignor.class),

new ConfigDef.NonNullValidator(),

Importance.MEDIUM,

PARTITION_ASSIGNMENT_STRATEGY_DOC)

.define(METADATA_MAX_AGE_CONFIG,

Type.LONG,

5 * 60 * 1000,

atLeast(0),

Importance.LOW,

CommonClientConfigs.METADATA_MAX_AGE_DOC)

.define(ENABLE_AUTO_COMMIT_CONFIG,

Type.BOOLEAN,

true,

Importance.MEDIUM,

ENABLE_AUTO_COMMIT_DOC)

.define(AUTO_COMMIT_INTERVAL_MS_CONFIG,

Type.INT,

5000,

atLeast(0),

Importance.LOW,

AUTO_COMMIT_INTERVAL_MS_DOC)

.define(CLIENT_ID_CONFIG,

Type.STRING,

"",

Importance.LOW,

CommonClientConfigs.CLIENT_ID_DOC)

.define(CLIENT_RACK_CONFIG,

Type.STRING,

"",

Importance.LOW,

CommonClientConfigs.CLIENT_RACK_DOC)

.define(MAX_PARTITION_FETCH_BYTES_CONFIG,

Type.INT,

DEFAULT_MAX_PARTITION_FETCH_BYTES,

atLeast(0),

Importance.HIGH,

MAX_PARTITION_FETCH_BYTES_DOC)

.define(SEND_BUFFER_CONFIG,

Type.INT,

128 * 1024,

atLeast(CommonClientConfigs.SEND_BUFFER_LOWER_BOUND),

Importance.MEDIUM,

CommonClientConfigs.SEND_BUFFER_DOC)

.define(RECEIVE_BUFFER_CONFIG,

Type.INT,

64 * 1024,

atLeast(CommonClientConfigs.RECEIVE_BUFFER_LOWER_BOUND),

Importance.MEDIUM,

CommonClientConfigs.RECEIVE_BUFFER_DOC)

.define(FETCH_MIN_BYTES_CONFIG,

Type.INT,

1,

atLeast(0),

Importance.HIGH,

FETCH_MIN_BYTES_DOC)

.define(FETCH_MAX_BYTES_CONFIG,

Type.INT,

DEFAULT_FETCH_MAX_BYTES,

atLeast(0),

Importance.MEDIUM,

FETCH_MAX_BYTES_DOC)

.define(FETCH_MAX_WAIT_MS_CONFIG,

Type.INT,

500,

atLeast(0),

Importance.LOW,

FETCH_MAX_WAIT_MS_DOC)

.define(RECONNECT_BACKOFF_MS_CONFIG,

Type.LONG,

50L,

atLeast(0L),

Importance.LOW,

CommonClientConfigs.RECONNECT_BACKOFF_MS_DOC)

.define(RECONNECT_BACKOFF_MAX_MS_CONFIG,

Type.LONG,

1000L,

atLeast(0L),

Importance.LOW,

CommonClientConfigs.RECONNECT_BACKOFF_MAX_MS_DOC)

.define(RETRY_BACKOFF_MS_CONFIG,

Type.LONG,

100L,

atLeast(0L),

Importance.LOW,

CommonClientConfigs.RETRY_BACKOFF_MS_DOC)

.define(AUTO_OFFSET_RESET_CONFIG,

Type.STRING,

"latest",

in("latest", "earliest", "none"),

Importance.MEDIUM,

AUTO_OFFSET_RESET_DOC)

.define(CHECK_CRCS_CONFIG,

Type.BOOLEAN,

true,

Importance.LOW,

CHECK_CRCS_DOC)

.define(METRICS_SAMPLE_WINDOW_MS_CONFIG,

Type.LONG,

30000,

atLeast(0),

Importance.LOW,

CommonClientConfigs.METRICS_SAMPLE_WINDOW_MS_DOC)

.define(METRICS_NUM_SAMPLES_CONFIG,

Type.INT,

2,

atLeast(1),

Importance.LOW,

CommonClientConfigs.METRICS_NUM_SAMPLES_DOC)

.define(METRICS_RECORDING_LEVEL_CONFIG,

Type.STRING,

Sensor.RecordingLevel.INFO.toString(),

in(Sensor.RecordingLevel.INFO.toString(), Sensor.RecordingLevel.DEBUG.toString()),

Importance.LOW,

CommonClientConfigs.METRICS_RECORDING_LEVEL_DOC)

.define(METRIC_REPORTER_CLASSES_CONFIG,

Type.LIST,

Collections.emptyList(),

new ConfigDef.NonNullValidator(),

Importance.LOW,

CommonClientConfigs.METRIC_REPORTER_CLASSES_DOC)

.define(KEY_DESERIALIZER_CLASS_CONFIG,

Type.CLASS,

Importance.HIGH,

KEY_DESERIALIZER_CLASS_DOC)

.define(VALUE_DESERIALIZER_CLASS_CONFIG,

Type.CLASS,

Importance.HIGH,

VALUE_DESERIALIZER_CLASS_DOC)

.define(REQUEST_TIMEOUT_MS_CONFIG,

Type.INT,

30000,

atLeast(0),

Importance.MEDIUM,

REQUEST_TIMEOUT_MS_DOC)

.define(DEFAULT_API_TIMEOUT_MS_CONFIG,

Type.INT,

60 * 1000,

atLeast(0),

Importance.MEDIUM,

DEFAULT_API_TIMEOUT_MS_DOC)

/* default is set to be a bit lower than the server default (10 min), to avoid both client and server closing connection at same time */

.define(CONNECTIONS_MAX_IDLE_MS_CONFIG,

Type.LONG,

9 * 60 * 1000,

Importance.MEDIUM,

CommonClientConfigs.CONNECTIONS_MAX_IDLE_MS_DOC)

.define(INTERCEPTOR_CLASSES_CONFIG,

Type.LIST,

Collections.emptyList(),

new ConfigDef.NonNullValidator(),

Importance.LOW,

INTERCEPTOR_CLASSES_DOC)

.define(MAX_POLL_RECORDS_CONFIG,

Type.INT,

500,

atLeast(1),

Importance.MEDIUM,

MAX_POLL_RECORDS_DOC)

.define(MAX_POLL_INTERVAL_MS_CONFIG,

Type.INT,

300000,

atLeast(1),

Importance.MEDIUM,

MAX_POLL_INTERVAL_MS_DOC)

.define(EXCLUDE_INTERNAL_TOPICS_CONFIG,

Type.BOOLEAN,

DEFAULT_EXCLUDE_INTERNAL_TOPICS,

Importance.MEDIUM,

EXCLUDE_INTERNAL_TOPICS_DOC)

.defineInternal(LEAVE_GROUP_ON_CLOSE_CONFIG,

Type.BOOLEAN,

true,

Importance.LOW)

.define(ISOLATION_LEVEL_CONFIG,

Type.STRING,

DEFAULT_ISOLATION_LEVEL,

in(IsolationLevel.READ_COMMITTED.toString().toLowerCase(Locale.ROOT), IsolationLevel.READ_UNCOMMITTED.toString().toLowerCase(Locale.ROOT)),

Importance.MEDIUM,

ISOLATION_LEVEL_DOC)

.define(ALLOW_AUTO_CREATE_TOPICS_CONFIG,

Type.BOOLEAN,

DEFAULT_ALLOW_AUTO_CREATE_TOPICS,

Importance.MEDIUM,

ALLOW_AUTO_CREATE_TOPICS_DOC)

// security support

.define(CommonClientConfigs.SECURITY_PROTOCOL_CONFIG,

Type.STRING,

CommonClientConfigs.DEFAULT_SECURITY_PROTOCOL,

Importance.MEDIUM,

CommonClientConfigs.SECURITY_PROTOCOL_DOC)

.withClientSslSupport()

.withClientSaslSupport();

}

@Override

protected Map<String, Object> postProcessParsedConfig(final Map<String, Object> parsedValues) {

return CommonClientConfigs.postProcessReconnectBackoffConfigs(this, parsedValues);

}

public static Map<String, Object> addDeserializerToConfig(Map<String, Object> configs,

Deserializer<?> keyDeserializer,

Deserializer<?> valueDeserializer) {

Map<String, Object> newConfigs = new HashMap<>(configs);

if (keyDeserializer != null)

newConfigs.put(KEY_DESERIALIZER_CLASS_CONFIG, keyDeserializer.getClass());

if (valueDeserializer != null)

newConfigs.put(VALUE_DESERIALIZER_CLASS_CONFIG, valueDeserializer.getClass());

return newConfigs;

}

public static Properties addDeserializerToConfig(Properties properties,

Deserializer<?> keyDeserializer,

Deserializer<?> valueDeserializer) {

Properties newProperties = new Properties();

newProperties.putAll(properties);

if (keyDeserializer != null)

newProperties.put(KEY_DESERIALIZER_CLASS_CONFIG, keyDeserializer.getClass().getName());

if (valueDeserializer != null)

newProperties.put(VALUE_DESERIALIZER_CLASS_CONFIG, valueDeserializer.getClass().getName());

return newProperties;

}

public ConsumerConfig(Properties props) {

super(CONFIG, props);

}

public ConsumerConfig(Map<String, Object> props) {

super(CONFIG, props);

}

protected ConsumerConfig(Map<?, ?> props, boolean doLog) {

super(CONFIG, props, doLog);

}

public static Set<String> configNames() {

return CONFIG.names();

}

public static ConfigDef configDef() {

return new ConfigDef(CONFIG);

}

public static void main(String[] args) {

System.out.println(CONFIG.toHtmlTable());

}

}

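Next, let's use a concrete example to look at two more parameters.

max.poll.interval.ms: the maximum time allowed between two consecutive calls to poll(). If the gap between calls exceeds this value, Kafka considers the consumer failed and triggers a rebalance. The default is 300000 ms (5 minutes).

max.poll.records: the maximum number of records returned by a single call to poll(). This is the total across all partitions assigned to the consumer, not a per-partition limit. The default is 500.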
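Taken together, the two parameters bound how slow per-record processing may be: in the worst case, a full batch of max.poll.records records has to be handled before the next poll(). The sketch below does that arithmetic. PollBudget and its methods are hypothetical names, and the model assumes nothing happens between polls except a fixed per-record processing time.

```java
/** Hypothetical helper for reasoning about max.poll.records and max.poll.interval.ms. */
final class PollBudget {

    private PollBudget() { }

    /** Worst-case time to process one full batch, in milliseconds. */
    static long batchMillis(int maxPollRecords, long perRecordMs) {
        return (long) maxPollRecords * perRecordMs;
    }

    /** True if a full batch can be processed before max.poll.interval.ms expires. */
    static boolean fits(int maxPollRecords, long perRecordMs, long maxPollIntervalMs) {
        return batchMillis(maxPollRecords, perRecordMs) <= maxPollIntervalMs;
    }

    /** Largest batch size whose worst-case processing time still fits into the interval. */
    static long maxSafeRecords(long perRecordMs, long maxPollIntervalMs) {
        if (perRecordMs <= 0) {
            throw new IllegalArgumentException("perRecordMs must be positive");
        }
        return maxPollIntervalMs / perRecordMs;
    }

    public static void main(String[] args) {
        // Defaults: 500 records per poll, 300000 ms between polls.
        System.out.println(fits(500, 100, 300_000));          // true: 50 s per batch
        System.out.println(fits(500, 1_000, 300_000));        // false: 500 s per batch
        System.out.println(maxSafeRecords(1_000, 300_000));   // 300
    }
}
```

If a batch does not fit, the options are to lower max.poll.records, raise max.poll.interval.ms, or make per-record processing faster.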

To see how the two parameters behave in practice, consider the following producer and consumer.

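Producer code

import java.util.Properties;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.Producer;
import org.apache.kafka.clients.producer.ProducerRecord;

public class Kproducer {

    public static void main(String[] args) throws InterruptedException {
        Properties props = new Properties();
        // Kafka broker address
        props.put("bootstrap.servers", "localhost:9092");
        // acks decides when a request counts as complete. "0" means the producer does not wait for
        // any acknowledgement; "all" waits for all in-sync replicas, which is slowest but most reliable.
        props.put("acks", "0");
        // retries: if a request fails the producer can retry automatically. It is set to 0 here;
        // enabling retries introduces the possibility of duplicate messages.
        props.put("retries", 0);
        // The producer buffers unsent records per partition, and batch.size sets the buffer size.
        // Larger values give larger batches but need more memory (one buffer per active partition).
        props.put("batch.size", 16384);
        // By default a batch can be sent immediately even if it is not full. Setting linger.ms above 0
        // makes the producer wait that long for more records to fill the batch, much like Nagle's
        // algorithm in TCP. With linger.ms=1 a request is delayed by up to 1 ms if the batch is not full.
        // Under heavy load, records that arrive close together are batched even with linger.ms=0;
        // under light load, a value above 0 trades a little latency for fewer, more efficient requests.
        props.put("linger.ms", 1);
        // buffer.memory bounds the total memory the producer may use for buffering. If records are
        // produced faster than they can be sent to the server, the buffer fills up and further send()
        // calls block. max.block.ms bounds the blocking time, after which a TimeoutException is thrown.
        props.put("buffer.memory", 33554432);
        // key.serializer and value.serializer turn the key and value of a ProducerRecord into bytes.
        // The bundled ByteArraySerializer and StringSerializer handle simple byte[] and String types.
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // Note: "kafka.partitions" is not a KafkaProducer setting. The client only logs a warning about
        // an unknown config; the number of partitions is decided when the topic is created.
        props.put("kafka.partitions", 12);

        // try-with-resources closes the producer, which also flushes any buffered records.
        try (Producer<String, String> producer = new KafkaProducer<>(props)) {
            for (int i = 0; i < 50; i++) {
                System.out.println("key-->key" + i + " value-->vvv" + i);
                producer.send(new ProducerRecord<>("testa", "key" + i, "vvv" + i));
                Thread.sleep(1000);
            }
        }
    }
}

Consumer code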

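import java.time.Duration;
import java.util.Collections;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

public class KConsumer {

    public static void main(String[] args) throws InterruptedException {
        Properties properties = new Properties();
        // Kafka broker address
        properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "127.0.0.1:9092");
        properties.put(ConsumerConfig.GROUP_ID_CONFIG, "group-1");
        properties.put("enable.auto.commit", "true");
        // properties.put("auto.commit.interval.ms", "1000");
        properties.put("auto.offset.reset", "earliest");
        // Return at most 3 records per poll()
        properties.put("max.poll.records", 3);
        // Allow at most 300 ms between two calls to poll()
        properties.put(ConsumerConfig.MAX_POLL_INTERVAL_MS_CONFIG, "300");
        // properties.put("session.timeout.ms", "30000");
        properties.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        properties.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        // properties.put("partition.assignment.strategy", "org.apache.kafka.clients.consumer.RangeAssignor");

        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(properties);
        consumer.subscribe(Collections.singletonList("testa"));
        while (true) {
            // poll(Duration) replaces the deprecated poll(long) overload
            ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));
            for (ConsumerRecord<String, String> record : records) {
                System.out.println(record.toString());
                // Simulate slow processing: 12 s per record, far longer than max.poll.interval.ms
                Thread.sleep(12000);
            }
        }
    }
}

Suppose for a moment that the code above ran with the default values for both parameters. Each call to poll() in the while loop would then return at most 500 records (assuming the backlog is large enough), and the loop would process those 500 records one by one. Because auto commit is enabled by default, once the batch has been processed the offsets of the processed records are committed automatically and poll() is called again. The logic is simple, but recall what max.poll.interval.ms means: the maximum time between two calls to poll(). If processing only prints the record, a batch easily finishes within five minutes. Now suppose each record takes 1 second to process: 500 records take 500 seconds, more than eight minutes, which exceeds the five-minute limit. The group will already have rebalanced before the batch is finished. When the consumer finishes the batch and tries to commit its offsets, the commit fails because of the rebalance, and consumption then resumes from the last committed offset, so the same records are consumed again.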

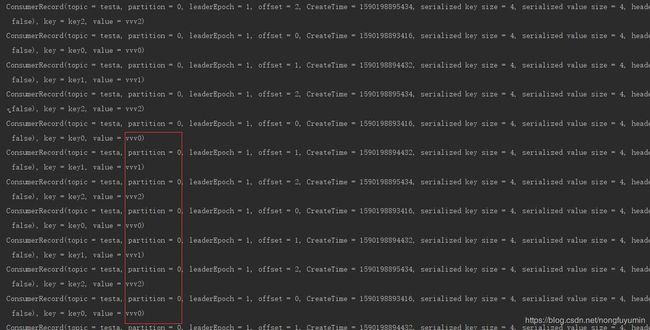
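The repeated consumption described above can be reproduced with a toy model that needs no broker. It assumes that a batch whose processing time exceeds max.poll.interval.ms always loses its commit, and that the consumer then resumes from the last committed offset. RedeliverySimulation is a made-up name, and the model leaves out everything else (heartbeats, other group members, the timing of auto commit).

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model of reprocessing after a missed poll deadline; not Kafka's actual behaviour in every detail. */
final class RedeliverySimulation {

    private RedeliverySimulation() { }

    /** Returns the offsets processed over the given number of poll cycles, in order. */
    static List<Long> run(int polls, int maxPollRecords, long perRecordMs, long maxPollIntervalMs) {
        List<Long> processed = new ArrayList<>();
        long committed = 0;
        for (int p = 0; p < polls; p++) {
            // Each cycle starts from the last committed offset.
            long position = committed;
            for (int i = 0; i < maxPollRecords; i++) {
                processed.add(position + i);
            }
            long batchMs = maxPollRecords * perRecordMs;
            if (batchMs <= maxPollIntervalMs) {
                // The batch finished in time, so its offsets are committed.
                committed = position + maxPollRecords;
            }
            // Otherwise the group has rebalanced, the commit is lost, and the batch is read again.
        }
        return processed;
    }

    public static void main(String[] args) {
        System.out.println(run(2, 3, 12_000, 300)); // [0, 1, 2, 0, 1, 2]
        System.out.println(run(2, 3, 10, 300));     // [0, 1, 2, 3, 4, 5]
    }
}
```

The first call uses the settings from the consumer above (3 records, 12 s each, 300 ms interval) and never advances; the second processes each record in 10 ms and makes normal progress.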

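Let's verify this in practice. Set max.poll.interval.ms to 300 ms and, to make the effect obvious, limit each poll to 3 records with properties.put("max.poll.records", 3). After printing each record, the thread sleeps for 12000 ms, which guarantees that processing a batch takes far longer than 300 ms. The result is shown below.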