Paper: "Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields": Translation and Commentary


Contents

Abstract

1. Introduction

2. Method

2.1. Simultaneous Detection and Association

2.2. Confidence Maps for Part Detection

2.3. Part Affinity Fields for Part Association

2.4. Multi-Person Parsing using PAFs

3. Results

3.1. Results on the MPII Multi-Person Dataset

3.2. Results on the COCO Keypoints Challenge

3.3. Runtime Analysis

4. Discussion

Acknowledgements

References

Paper: "Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields"

Abstract

| We present an approach to efficiently detect the 2D pose of multiple people in an image. The approach uses a nonparametric representation, which we refer to as Part Affinity Fields (PAFs), to learn to associate body parts with individuals in the image. The architecture encodes global context, allowing a greedy bottom-up parsing step that maintains high accuracy while achieving realtime performance, irrespective of the number of people in the image. The architecture is designed to jointly learn part locations and their association via two branches of the same sequential prediction process. Our method placed first in the inaugural COCO 2016 keypoints challenge, and significantly exceeds the previous state-of-the-art result on the MPII Multi-Person benchmark, both in performance and efficiency. |

1. Introduction

2. Method

| Fig. 2 illustrates the overall pipeline of our method. The system takes, as input, a color image of size $w \times h$ (Fig. 2a) and produces, as output, the 2D locations of anatomical keypoints for each person in the image (Fig. 2e). First, a feedforward network simultaneously predicts a set of 2D confidence maps $S$ of body part locations (Fig. 2b) and a set of 2D vector fields $L$ of part affinities, which encode the degree of association between parts (Fig. 2c). The set $S = (S_1, S_2, \ldots, S_J)$ has $J$ confidence maps, one per part, where $S_j \in \mathbb{R}^{w \times h}$, $j \in \{1 \ldots J\}$. The set $L = (L_1, L_2, \ldots, L_C)$ has $C$ vector fields, one per limb, where $L_c \in \mathbb{R}^{w \times h \times 2}$, $c \in \{1 \ldots C\}$; each image location in $L_c$ encodes a 2D vector (as shown in Fig. 1). Finally, the confidence maps and the affinity fields are parsed by greedy inference (Fig. 2d) to output the 2D keypoints for all people in the image. |
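
To make the shapes of the two network outputs concrete, here is a minimal sketch in plain Python. The function name `empty_outputs` and the nested-list layout (rows indexed by y, columns by x) are assumptions made for illustration; they are not taken from the paper or its released code.

```python
def empty_outputs(w, h, num_parts, num_limbs):
    """Allocate zero-filled containers with the shapes described in the text.

    S holds num_parts confidence maps, each h rows by w columns of scalars.
    L holds num_limbs affinity fields, each h rows by w columns of 2D vectors.
    """
    S = [[[0.0] * w for _ in range(h)] for _ in range(num_parts)]
    L = [[[[0.0, 0.0] for _ in range(w)] for _ in range(h)]
         for _ in range(num_limbs)]
    return S, L


# Toy sizes chosen only for illustration.
S, L = empty_outputs(w=4, h=3, num_parts=2, num_limbs=1)
print(len(S), len(S[0]), len(S[0][0]))      # parts, rows, columns
print(len(L), len(L[0][0]), len(L[0][0][0]))  # limbs, columns, vector size
```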

2.1. Simultaneous Detection and Association

| Our architecture, shown in Fig. 3, simultaneously predicts detection confidence maps and affinity fields that encode part-to-part association. The network is split into two branches: the top branch, shown in beige, predicts the confidence maps, and the bottom branch, shown in blue, predicts the affinity fields. Each branch is an iterative prediction architecture, following Wei et al. [31], which refines the predictions over successive stages, $t \in \{1, \ldots, T\}$, with intermediate supervision at each stage. |

| The image is first analyzed by a convolutional network (initialized by the first 10 layers of VGG-19 [26] and finetuned), generating a set of feature maps $F$ that is input to the first stage of each branch. At the first stage, the network produces a set of detection confidence maps $S^1 = \rho^1(F)$ and a set of part affinity fields $L^1 = \phi^1(F)$, where $\rho^1$ and $\phi^1$ are the CNNs for inference at Stage 1. In each subsequent stage, the predictions from both branches in the previous stage, along with the original image features $F$, are concatenated and used to produce refined predictions, $S^t = \rho^t(F, S^{t-1}, L^{t-1}), \forall t \ge 2$ (1) and $L^t = \phi^t(F, S^{t-1}, L^{t-1}), \forall t \ge 2$ (2), where $\rho^t$ and $\phi^t$ are the CNNs for inference at Stage $t$. |
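
The stage-wise data flow described above can be sketched as follows. This is only an illustration of the wiring: `run_stages` is a hypothetical name, channels are modeled as plain Python lists, and the per-stage CNNs are passed in as arbitrary callables rather than real networks.

```python
def run_stages(F, stages):
    """Run a two-branch, multi-stage predictor.

    F: list of feature channels from the backbone.
    stages: list of (rho_t, phi_t) callables, one pair per stage.
    Stage 1 sees only F; every later stage sees F concatenated with
    the previous stage's confidence maps S and affinity fields L.
    Returns the (S, L) pair produced at every stage.
    """
    rho_1, phi_1 = stages[0]
    S, L = rho_1(F), phi_1(F)
    outputs = [(S, L)]
    for rho_t, phi_t in stages[1:]:
        x = F + S + L                  # channel-wise concatenation
        S, L = rho_t(x), phi_t(x)      # both branches read the same input
        outputs.append((S, L))
    return outputs


# Toy stand-ins for the CNNs: they only record how many channels they saw.
def toy_rho(x):
    return ["s%d" % len(x)] * 2       # 2 confidence-map channels

def toy_phi(x):
    return ["l%d" % len(x)] * 3       # 3 affinity-field channels

outputs = run_stages(["f"] * 4, [(toy_rho, toy_phi)] * 2)
print(outputs[0])   # stage 1 saw 4 channels
print(outputs[1])   # stage 2 saw 4 + 2 + 3 = 9 channels
```

Returning the outputs of every stage, not just the last, mirrors the intermediate supervision mentioned in the text, where a loss is attached at the end of each stage.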

| Figure 4. Confidence maps of the right wrist (first row) and PAFs (second row) of the right forearm across stages. Although there is confusion between left and right body parts and limbs in early stages, the estimates are increasingly refined through global inference in later stages, as shown in the highlighted areas. |

| Fig. 4 shows the refinement of the confidence maps and affinity fields across stages. To guide the network to iteratively predict confidence maps of body parts in the first branch and PAFs in the second branch, we apply two loss functions at the end of each stage, one at each branch respectively. We use an L2 loss between the estimated predictions and the groundtruth maps and fields. Here, we weight the loss functions spatially to address a practical issue that some datasets do not completely label all people. Specifically, the loss functions at both branches at stage $t$ are $f_S^t = \sum_{j=1}^{J} \sum_{p} W(p) \cdot \lVert S_j^t(p) - S_j^*(p) \rVert_2^2$ (3) and $f_L^t = \sum_{c=1}^{C} \sum_{p} W(p) \cdot \lVert L_c^t(p) - L_c^*(p) \rVert_2^2$ (4), where $S_j^*$ is the groundtruth part confidence map, $L_c^*$ is the groundtruth part affinity vector field, and $W$ is a binary mask with $W(p) = 0$ when the annotation is missing at an image location $p$. The mask is used to avoid penalizing the true positive predictions during training. The intermediate supervision at each stage addresses the vanishing gradient problem by replenishing the gradient periodically [31]. The overall objective is $f = \sum_{t=1}^{T} (f_S^t + f_L^t)$ (5). |
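
A minimal sketch of the spatially masked L2 loss for one branch at one stage, written in plain Python. The function name `masked_l2_loss` and the nested-list layout are assumptions for illustration. For the affinity-field branch, the x and y components of each vector field can be passed as two separate channels, since the squared L2 norm of a vector is the sum of its squared components.

```python
def masked_l2_loss(pred, target, mask):
    """Sum of squared errors over all channels and pixels, weighted by mask.

    pred, target: lists of channels, each channel h rows by w columns.
    mask: h rows by w columns of 0/1; 0 marks pixels with missing
    annotation, which then contribute nothing to the loss.
    """
    total = 0.0
    for pred_map, target_map in zip(pred, target):
        for y, mask_row in enumerate(mask):
            for x, weight in enumerate(mask_row):
                diff = pred_map[y][x] - target_map[y][x]
                total += weight * diff * diff
    return total


pred = [[[1.0, 2.0], [0.0, 0.0]]]      # one channel, 2 x 2
target = [[[0.0, 0.0], [0.0, 3.0]]]
mask = [[1, 0], [1, 1]]                # top-right pixel is unlabeled
print(masked_l2_loss(pred, target, mask))  # 1 + 0 (masked) + 0 + 9 = 10.0
```

Summing this quantity for both branches over all stages gives the overall objective described in the text.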

2.2. Confidence Maps for Part Detection

2.3. Part Affinity Fields for Part Association

2.4. Multi-Person Parsing using PAFs

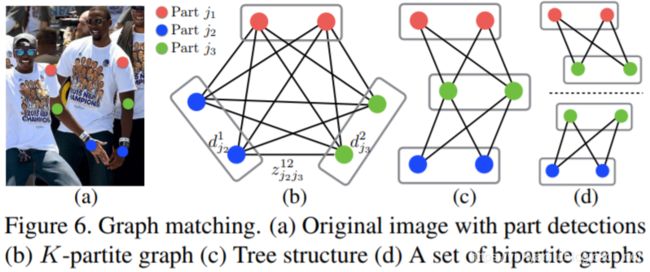

| We perform non-maximum suppression on the detection confidence maps to obtain a discrete set of part candidate locations. For each part, we may have several candidates, due to multiple people in the image or false positives (shown in Fig. 6b). These part candidates define a large set of possible limbs. We score each candidate limb using the line integral computation on the PAF, defined in Eq. 10. The problem of finding the optimal parse corresponds to a K-dimensional matching problem that is known to be NP-Hard [32] (shown in Fig. 6c). In this paper, we present a greedy relaxation that consistently produces high-quality matches. We speculate the reason is that the pair-wise association scores implicitly encode global context, due to the large receptive field of the PAF network. | |
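
As an illustration of the first step, the following plain-Python sketch extracts part candidates from one confidence map by keeping pixels that exceed a threshold and are strictly greater than their four direct neighbors. The function name `find_peaks`, the 4-neighborhood, and the threshold value 0.1 are assumptions; the text does not specify how the non-maximum suppression is configured.

```python
def find_peaks(conf_map, threshold=0.1):
    """Return (x, y, score) for every local maximum above threshold.

    conf_map: h rows by w columns of confidence scores.
    A pixel is a peak if it is strictly greater than its up, down,
    left and right neighbors (neighbors outside the map are ignored).
    """
    h, w = len(conf_map), len(conf_map[0])
    peaks = []
    for y in range(h):
        for x in range(w):
            value = conf_map[y][x]
            if value < threshold:
                continue
            neighbors = [
                conf_map[ny][nx]
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                if 0 <= ny < h and 0 <= nx < w
            ]
            if all(value > n for n in neighbors):
                peaks.append((x, y, value))
    return peaks


conf_map = [
    [0.0, 0.2, 0.0, 0.0],
    [0.2, 0.9, 0.2, 0.0],
    [0.0, 0.2, 0.0, 0.7],
]
print(find_peaks(conf_map))  # [(1, 1, 0.9), (3, 2, 0.7)]
```

Two peaks in the same map correspond to two candidates for the same part type, which may be two different people or one person plus a false positive.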

| Formally, we first obtain a set of body part detection candidates $D_J$ for multiple people, where $D_J = \{d_j^m : \text{for } j \in \{1 \ldots J\}, m \in \{1 \ldots N_j\}\}$, with $N_j$ the number of candidates of part $j$, and $d_j^m \in \mathbb{R}^2$ is the location of the $m$-th detection candidate of body part $j$. These part detection candidates still need to be associated with other parts from the same person; in other words, we need to find the pairs of part detections that are in fact connected limbs. We define a variable $z_{j_1 j_2}^{mn} \in \{0, 1\}$ to indicate whether two detection candidates $d_{j_1}^m$ and $d_{j_2}^n$ are connected, and the goal is to find the optimal assignment for the set of all possible connections, $Z = \{z_{j_1 j_2}^{mn} : \text{for } j_1, j_2 \in \{1 \ldots J\}, m \in \{1 \ldots N_{j_1}\}, n \in \{1 \ldots N_{j_2}\}\}$. | |

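| If we consider a single pair of parts $j_1$ and $j_2$ (e.g., neck and right hip) for the $c$-th limb, finding the optimal association reduces to a maximum weight bipartite graph matching problem [32]. This case is shown in Fig. 5b. In this graph matching problem, nodes of the graph are the body part detection candidates $D_{j_1}$ and $D_{j_2}$, and the edges are all possible connections between pairs of detection candidates. Additionally, each edge is weighted by Eq. 10, the part affinity aggregate. A matching in a bipartite graph is a subset of the edges chosen in such a way that no two edges share a node. Our goal is to find a matching with maximum weight for the chosen edges, $\max_{Z_c} E_c = \max_{Z_c} \sum_{m \in D_{j_1}} \sum_{n \in D_{j_2}} E_{mn} \cdot z_{j_1 j_2}^{mn}$ (12), subject to every candidate being used by at most one edge: $\forall m \in D_{j_1}, \sum_{n \in D_{j_2}} z_{j_1 j_2}^{mn} \le 1$ (13) and $\forall n \in D_{j_2}, \sum_{m \in D_{j_1}} z_{j_1 j_2}^{mn} \le 1$ (14), where $E_{mn}$ is the edge weight given by Eq. 10 and $Z_c$ is the subset of $Z$ for limb type $c$. |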
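
To illustrate the single-limb matching problem, the sketch below finds a maximum-weight matching by exhaustive search over all one-to-one assignments. Brute force is used here only because it is short and exact; it is an assumption of this example, practical for a handful of candidates, and a polynomial-time solver such as the Hungarian algorithm would be the usual choice for larger sets. The function name `best_matching` and the rule that non-positive edges are left unmatched are also assumptions.

```python
from itertools import permutations


def best_matching(E):
    """Exhaustively search for a maximum-weight bipartite matching.

    E[m][n]: score between candidate m of part j1 and candidate n of part j2.
    Each candidate is used by at most one edge; edges with a non-positive
    score are never selected. Returns (total_score, sorted list of (m, n)).
    """
    num_m = len(E)
    num_n = len(E[0]) if E else 0
    if num_m <= num_n:
        # Assign every m to a distinct n.
        assignments = (list(enumerate(p))
                       for p in permutations(range(num_n), num_m))
    else:
        # Assign every n to a distinct m.
        assignments = ([(m, n) for n, m in enumerate(p)]
                       for p in permutations(range(num_m), num_n))
    best_score, best_pairs = 0.0, []
    for assignment in assignments:
        pairs = [(m, n) for m, n in assignment if E[m][n] > 0]
        score = sum(E[m][n] for m, n in pairs)
        if score > best_score:
            best_score, best_pairs = score, sorted(pairs)
    return best_score, best_pairs


# Two candidates for each of the two parts (scores are made up).
E = [[0.9, 0.8],
     [0.85, 0.1]]
score, pairs = best_matching(E)
print(pairs)   # [(0, 1), (1, 0)]
```

In this toy example, a highest-score-first heuristic would take the 0.9 edge and then be forced onto the 0.1 edge, for a total of 1.0, whereas the exact matching reaches about 1.65 by pairing (0, 1) and (1, 0).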

| When it comes to finding the full body pose of multiple people, determining $Z$ is a K-dimensional matching problem. This problem is NP-Hard [32] and many relaxations exist. In this work, we add two relaxations to the optimization, specialized to our domain. First, we choose a minimal number of edges to obtain a spanning tree skeleton of human pose rather than using the complete graph, as shown in Fig. 6c. Second, we further decompose the matching problem into a set of bipartite matching subproblems and determine the matching in adjacent tree nodes independently, as shown in Fig. 6d. We show detailed comparison results in Section 3.1, which demonstrate that minimal greedy inference closely approximates the global solution at a fraction of the computational cost. The reason is that the relationship between adjacent tree nodes is modeled explicitly by PAFs, but internally, the relationship between nonadjacent tree nodes is implicitly modeled by the CNN. This property emerges because the CNN is trained with a large receptive field, and PAFs from non-adjacent tree nodes also influence the predicted PAF. | |

| With these two relaxations, the optimization is decomposed simply as $\max_{Z} E = \sum_{c=1}^{C} \max_{Z_c} E_c$ (15). We therefore obtain the limb connection candidates for each limb type independently using Eqs. 12-14. With all limb connection candidates, we can assemble the connections that share the same part detection candidates into full-body poses of multiple people. Our optimization scheme over the tree structure is orders of magnitude faster than the optimization over the fully connected graph [22, 11]. |
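
The final assembly step, merging limb connections that share a part detection candidate, can be sketched as below. This is a simplified illustration: the function name `assemble_people`, the `(part_name, candidate_index)` identifiers, and the part names in the example are hypothetical, and the sketch does not handle conflicts such as two candidates of the same part type ending up in one person.

```python
def assemble_people(connections):
    """Group limb connections into people by shared detection candidates.

    connections: iterable of (a, b) pairs, where a and b identify part
    detection candidates, e.g. ("neck", 0). Connections that share a
    candidate end up in the same person.
    Returns a sorted list of people, each a sorted list of candidates.
    """
    person_of = {}   # candidate -> person id
    people = {}      # person id -> set of candidates
    next_id = 0
    for a, b in connections:
        pa, pb = person_of.get(a), person_of.get(b)
        if pa is None and pb is None:
            # Neither end seen before: start a new person.
            people[next_id] = {a, b}
            person_of[a] = person_of[b] = next_id
            next_id += 1
        elif pb is None:
            people[pa].add(b)
            person_of[b] = pa
        elif pa is None:
            people[pb].add(a)
            person_of[a] = pb
        elif pa != pb:
            # The connection bridges two partial people: merge them.
            for candidate in people[pb]:
                person_of[candidate] = pa
            people[pa] |= people.pop(pb)
    return sorted(sorted(members) for members in people.values())


connections = [
    (("neck", 0), ("r_hip", 0)),
    (("neck", 1), ("r_hip", 1)),
    (("r_hip", 0), ("r_knee", 0)),
]
for person in assemble_people(connections):
    print(person)
```

Because each limb type was matched independently, this step only needs to follow shared endpoints along the tree skeleton, which is what keeps the parsing cheap.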

3. Results

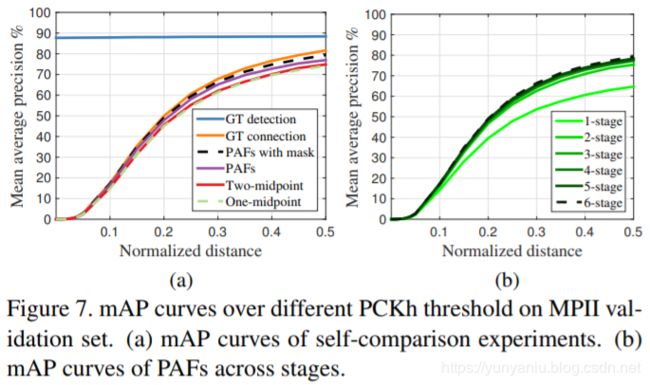

| We evaluate our method on two benchmarks for multi-person pose estimation: (1) the MPII human multi-person dataset [2] and (2) the COCO 2016 keypoints challenge dataset [15]. These two datasets collect images in diverse scenarios that contain many real-world challenges such as crowding, scale variation, occlusion, and contact. Our approach set the state of the art on the inaugural COCO 2016 keypoints challenge [1], and significantly exceeds the previous state-of-the-art result on the MPII multi-person benchmark. We also provide runtime analysis to quantify the efficiency of the system. Fig. 10 shows some qualitative results from our algorithm. |

3.1. Results on the MPII Multi-Person Dataset

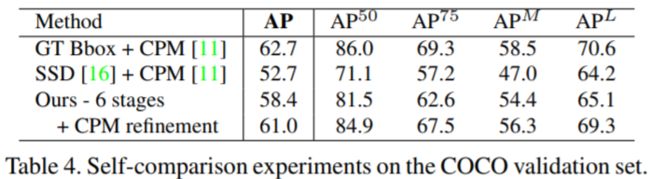

3.2. Results on the COCO Keypoints Challenge

3.3. Runtime Analysis

| To analyze the runtime performance of our method, we collect videos with a varying number of people. The original frame size is 1080×1920, which we resize to 368×654 during testing to fit in GPU memory. The runtime analysis is performed on a laptop with one NVIDIA GeForce GTX-1080 GPU. In Fig. 8d, we use person detection and single-person CPM as a top-down comparison, where the runtime is roughly proportional to the number of people in the image. In contrast, the runtime of our bottom-up approach increases relatively slowly with the increasing number of people. The runtime consists of two major parts: (1) CNN processing time, whose runtime complexity is $O(1)$, constant with varying number of people; (2) multi-person parsing time, whose runtime complexity is $O(n^2)$, where $n$ represents the number of people. However, the parsing time does not significantly influence the overall runtime because it is two orders of magnitude less than the CNN processing time, e.g., for 9 people, the parsing takes 0.58 ms while the CNN takes 99.6 ms. Our method has achieved the speed of 8.8 fps for a video with 19 people. |
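
A quick back-of-the-envelope check of the 9-person figures quoted above. The helper name `frames_per_second` is hypothetical, and the estimate counts only the two components named in the text, ignoring any other per-frame overhead.

```python
def frames_per_second(cnn_ms, parsing_ms):
    """Frame rate implied by the two per-frame costs, in frames per second."""
    return 1000.0 / (cnn_ms + parsing_ms)


cnn_ms, parsing_ms = 99.6, 0.58          # 9-person timings from the text
fps = frames_per_second(cnn_ms, parsing_ms)
parsing_share = parsing_ms / (cnn_ms + parsing_ms)
print(round(fps, 2))                      # just under 10 fps
print(round(100 * parsing_share, 2))      # parsing is well under 1% of the total
```

With parsing at well under one percent of the per-frame cost, even quadratic growth in the number of people leaves the CNN forward pass as the dominant term for the crowd sizes considered here.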

4. Discussion

| Moments of social significance, more than anything else, compel people to produce photographs and videos. Our photo collections tend to capture moments of personal significance: birthdays, weddings, vacations, pilgrimages, sports events, graduations, family portraits, and so on. To enable machines to interpret the significance of such photographs, they need to have an understanding of people in images. Machines, endowed with such perception in real time, would be able to react to and even participate in the individual and social behavior of people. In this paper, we consider a critical component of such perception: realtime algorithms to detect the 2D pose of multiple people in images. First, we present an explicit nonparametric representation of the keypoints association that encodes both position and orientation of human limbs. Second, we design an architecture for jointly learning parts detection and parts association. Third, we demonstrate that a greedy parsing algorithm is sufficient to produce high-quality parses of body poses and that it maintains efficiency even as the number of people in the image increases. We show representative failure cases in Fig. 9. We have publicly released our code (including the trained models) to ensure full reproducibility and to encourage future research in the area. |

Acknowledgements

| We acknowledge the effort from the authors of the MPII and COCO human pose datasets. These datasets make 2D human pose estimation in the wild possible. This research was supported in part by ONR Grants N00014-15-1-2358 and N00014-14-1-0595. |

References

[1] MSCOCO keypoint evaluation metric. http://mscoco.org/dataset/#keypoints-eval.

[2] M. Andriluka, L. Pishchulin, P. Gehler, and B. Schiele. 2D human pose estimation: new benchmark and state of the art analysis. In CVPR, 2014.

[3] M. Andriluka, S. Roth, and B. Schiele. Pictorial structures revisited: people detection and articulated pose estimation. In CVPR, 2009.

[4] M. Andriluka, S. Roth, and B. Schiele. Monocular 3D pose estimation and tracking by detection. In CVPR, 2010.

[5] V. Belagiannis and A. Zisserman. Recurrent human pose estimation. In 12th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), 2017.

[6] A. Bulat and G. Tzimiropoulos. Human pose estimation via convolutional part heatmap regression. In ECCV, 2016.

[7] X. Chen and A. Yuille. Articulated pose estimation by a graphical model with image dependent pairwise relations. In NIPS, 2014.

[8] P. F. Felzenszwalb and D. P. Huttenlocher. Pictorial structures for object recognition. In IJCV, 2005.

[9] G. Gkioxari, B. Hariharan, R. Girshick, and J. Malik. Using k-poselets for detecting people and localizing their keypoints. In CVPR, 2014.

[10] K. He, X. Zhang, S. Ren, and J. Sun. Deep residual learning for image recognition. In CVPR, 2016.

[11] E. Insafutdinov, L. Pishchulin, B. Andres, M. Andriluka, and B. Schiele. Deepercut: A deeper, stronger, and faster multi-person pose estimation model. In ECCV, 2016.

[12] U. Iqbal and J. Gall. Multi-person pose estimation with local joint-to-person associations. In ECCV Workshops, Crowd Understanding, 2016.

[13] S. Johnson and M. Everingham. Clustered pose and nonlinear appearance models for human pose estimation. In BMVC, 2010.

[14] H. W. Kuhn. The Hungarian method for the assignment problem. In Naval research logistics quarterly. Wiley Online Library, 1955.

[15] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick. Microsoft COCO: common objects in context. In ECCV, 2014.

[16] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, and S. Reed. SSD: Single shot multibox detector. In ECCV, 2016.

[17] A. Newell, K. Yang, and J. Deng. Stacked hourglass networks for human pose estimation. In ECCV, 2016.

[18] W. Ouyang, X. Chu, and X. Wang. Multi-source deep learning for human pose estimation. In CVPR, 2014.

[19] G. Papandreou, T. Zhu, N. Kanazawa, A. Toshev, J. Tompson, C. Bregler, and K. Murphy. Towards accurate multi-person pose estimation in the wild. arXiv preprint arXiv:1701.01779, 2017.

[20] T. Pfister, J. Charles, and A. Zisserman. Flowing convnets for human pose estimation in videos. In ICCV, 2015.

[21] L. Pishchulin, M. Andriluka, P. Gehler, and B. Schiele. Poselet conditioned pictorial structures. In CVPR, 2013.

[22] L. Pishchulin, E. Insafutdinov, S. Tang, B. Andres, M. Andriluka, P. Gehler, and B. Schiele. Deepcut: Joint subset partition and labeling for multi person pose estimation. In CVPR, 2016.

[23] L. Pishchulin, A. Jain, M. Andriluka, T. Thormählen, and B. Schiele. Articulated people detection and pose estimation: Reshaping the future. In CVPR, 2012.

[24] V. Ramakrishna, D. Munoz, M. Hebert, J. A. Bagnell, and Y. Sheikh. Pose machines: Articulated pose estimation via inference machines. In ECCV, 2014.

[25] D. Ramanan, D. A. Forsyth, and A. Zisserman. Strike a Pose: Tracking people by finding stylized poses. In CVPR, 2005.

[26] K. Simonyan and A. Zisserman. Very deep convolutional networks for large-scale image recognition. In ICLR, 2015.

[27] M. Sun and S. Savarese. Articulated part-based model for joint object detection and pose estimation. In ICCV, 2011.

[28] J. Tompson, R. Goroshin, A. Jain, Y. LeCun, and C. Bregler. Efficient object localization using convolutional networks. In CVPR, 2015.

[29] J. J. Tompson, A. Jain, Y. LeCun, and C. Bregler. Joint training of a convolutional network and a graphical model for human pose estimation. In NIPS, 2014.

[30] A. Toshev and C. Szegedy. Deeppose: Human pose estimation via deep neural networks. In CVPR, 2014.

[31] S.-E. Wei, V. Ramakrishna, T. Kanade, and Y. Sheikh. Convolutional pose machines. In CVPR, 2016.

[32] D. B. West et al. Introduction to graph theory, volume 2. Prentice Hall, Upper Saddle River, 2001.

[33] Y. Yang and D. Ramanan. Articulated human detection with flexible mixtures of parts. In TPAMI, 2013.