Flink in Practice (XI): Applying Flink SideOutput to Risk Warning Scenarios

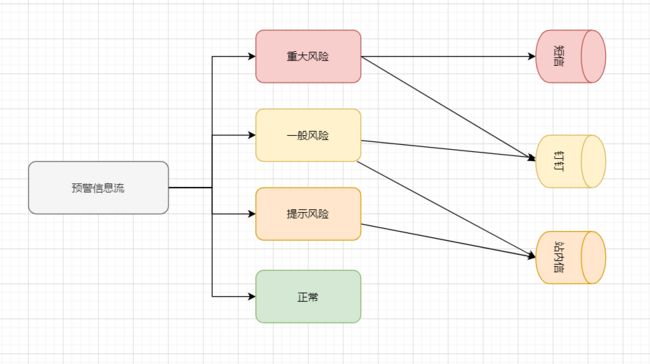

Risk warning scenario

Background

In a risk warning scenario, when a warning has to be delivered to users, it is usually sent through different channels depending on the warning level.

| Warning level | Notification channels |
|---|---|
| Major risk | SMS, DingTalk |
| General risk | SMS, in-app message |
| Prompt risk | In-app message |
| Normal | - |
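The table is effectively a routing configuration. Below is a minimal, Flink-free Java sketch that holds it as data, assuming the level codes used later in the implementation ("3" major, "2" general, "1" prompt, "0" normal). The `Channel` enum and the `RiskRouting` class are hypothetical names chosen for illustration.

```java
import java.util.Collections;
import java.util.EnumSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical notification channels, mirroring the table above. */
enum Channel { SMS, DINGTALK, IN_APP }

/** Plain-Java sketch of the level-to-channel table; not part of Flink. */
final class RiskRouting {
    // Level codes follow the article's constants: "3" major, "2" general, "1" prompt, "0" normal.
    private static final Map<String, Set<Channel>> ROUTES = Map.of(
            "3", EnumSet.of(Channel.SMS, Channel.DINGTALK),
            "2", EnumSet.of(Channel.SMS, Channel.IN_APP),
            "1", EnumSet.of(Channel.IN_APP));

    private RiskRouting() {}

    /** Returns the channels for a level; "0" (normal) and unknown levels get no channel. */
    static Set<Channel> channelsFor(String level) {
        return Collections.unmodifiableSet(ROUTES.getOrDefault(level, EnumSet.noneOf(Channel.class)));
    }

    public static void main(String[] args) {
        for (String level : List.of("3", "2", "1", "0")) {
            System.out.println(level + " -> " + channelsFor(level));
        }
    }
}
```

Keeping the mapping as data rather than as branching code means a level's channels can change without touching the stream logic.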

We usually split the warning stream first and then write each part to a separate Kafka topic or to a database, where a sender program picks it up.

Send priority and message templates are not covered in detail here.

The overall flow is illustrated in the schematic diagram.

In Flink, splitting one stream into several is done with a feature called Side Output.

Besides the main stream produced by a DataStream operation, you can produce any number of additional side output streams.

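The data type of a side output stream does not have to match that of the main stream, and different side outputs can have different types as well.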

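This is useful whenever you want to split a data stream. Without side outputs you would typically have to replicate the stream and then filter out the unwanted records from each copy.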
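To make that trade-off concrete, here is a plain-Java analogy rather than Flink code. `replicateAndFilter` gives every target level its own full copy of the input, as separate `filter` operators would, while `singlePass` reads each record once and routes it, as a side-output process function does. The class, the method names and the `reads` counter are hypothetical and exist only to compare the two approaches.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java analogy (not Flink code) of two ways to split a stream of level codes. */
final class SplitComparison {
    /** Records read by the most recent split call; only used to compare the approaches. */
    static int reads;

    private SplitComparison() {}

    /** Replicate-and-filter: every target level scans its own full copy of the input. */
    static Map<String, List<Integer>> replicateAndFilter(List<String> levels, List<String> targets) {
        reads = 0;
        Map<String, List<Integer>> out = new LinkedHashMap<>();
        for (String target : targets) {
            List<Integer> kept = new ArrayList<>();
            for (int i = 0; i < levels.size(); i++) {
                reads++;
                if (target.equals(levels.get(i))) {
                    kept.add(i); // the record's position stands in for the record itself
                }
            }
            out.put(target, kept);
        }
        return out;
    }

    /** Side-output style: a single scan that routes each record to its level's bucket. */
    static Map<String, List<Integer>> singlePass(List<String> levels, List<String> targets) {
        reads = 0;
        Map<String, List<Integer>> out = new LinkedHashMap<>();
        for (String target : targets) {
            out.put(target, new ArrayList<>());
        }
        for (int i = 0; i < levels.size(); i++) {
            reads++;
            List<Integer> bucket = out.get(levels.get(i));
            if (bucket != null) { // levels with no target, such as "0", are not emitted
                bucket.add(i);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> levels = List.of("3", "2", "1", "0", "3");
        List<String> targets = List.of("3", "2", "1");
        System.out.println(replicateAndFilter(levels, targets) + " reads=" + reads);
        System.out.println(singlePass(levels, targets) + " reads=" + reads);
    }
}
```

With three target levels and five records, both methods produce `{3=[0, 4], 2=[1], 1=[2]}`, but the filter approach reads 15 records and the single pass reads 5.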

A single Side Output example

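Inside a process-style function such as `ProcessFunction` or `KeyedProcessFunction`, you can use the `Context` parameter to emit data to a side output identified by an `OutputTag`: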

```java
ctx.output(outputTag, "sideout-" + String.valueOf(value));
```

Here is an example:

```java
DataStream<Integer> input = ...;

// The trailing {} creates an anonymous subclass so that Flink can capture the generic type String
final OutputTag<String> outputTag = new OutputTag<String>("side-output"){};

SingleOutputStreamOperator<Integer> mainDataStream = input
    .process(new ProcessFunction<Integer, Integer>() {

        @Override
        public void processElement(
                Integer value,
                Context ctx,
                Collector<Integer> out) throws Exception {
            // emit data to regular output
            out.collect(value);

            // emit data to side output
            ctx.output(outputTag, "sideout-" + String.valueOf(value));
        }
    });
```

To obtain the side output stream, call `getSideOutput(OutputTag)` on the result of the DataStream operation. This returns a `DataStream` typed to the elements of the side output stream:

```java
// Use a tag with the same id and type as the one used to emit the records
final OutputTag<String> outputTag = new OutputTag<String>("side-output"){};

SingleOutputStreamOperator<Integer> mainDataStream = ...;

DataStream<String> sideOutputStream = mainDataStream.getSideOutput(outputTag);
```
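To make the tag mechanics concrete outside Flink, here is a toy, single-threaded model. The hypothetical `Tag<T>` and `SideOutputs<M>` classes play the roles of `OutputTag`, `ctx.output` and `getSideOutput`; they only mirror the ideas above (each tag names a typed output, and a side output may carry a different type than the main output) and are not how Flink implements side outputs. In this model, records are looked up by the tag's id.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical stand-in for Flink's OutputTag: a named, typed output identifier. */
final class Tag<T> {
    final String id;
    final Class<T> type;

    Tag(String id, Class<T> type) {
        this.id = id;
        this.type = type;
    }
}

/** Toy model of a function with one main output and any number of side outputs. */
final class SideOutputs<M> {
    private final List<M> main = new ArrayList<>();
    private final Map<String, List<Object>> sides = new HashMap<>();

    /** Analogous to out.collect(value). */
    void collect(M value) {
        main.add(value);
    }

    /** Analogous to ctx.output(tag, value); the side type T is independent of M. */
    <T> void output(Tag<T> tag, T value) {
        sides.computeIfAbsent(tag.id, k -> new ArrayList<>()).add(value);
    }

    List<M> mainOutput() {
        return Collections.unmodifiableList(main);
    }

    /** Analogous to getSideOutput(tag): records are looked up by the tag's id. */
    <T> List<T> sideOutput(Tag<T> tag) {
        List<T> result = new ArrayList<>();
        for (Object o : sides.getOrDefault(tag.id, Collections.emptyList())) {
            result.add(tag.type.cast(o));
        }
        return result;
    }

    public static void main(String[] args) {
        Tag<String> sideTag = new Tag<>("side-output", String.class);
        SideOutputs<Integer> fn = new SideOutputs<>();
        for (int value : new int[] {1, 2, 3}) {
            fn.collect(value);                      // main output carries Integer
            fn.output(sideTag, "sideout-" + value); // side output carries String
        }
        System.out.println(fn.mainOutput());        // [1, 2, 3]
        System.out.println(fn.sideOutput(sideTag)); // [sideout-1, sideout-2, sideout-3]
    }
}
```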

Implementation

```java
import com.alibaba.fastjson.JSON;
import lombok.AllArgsConstructor;
import lombok.Data;
import org.apache.flink.api.common.serialization.SimpleStringSchema;
import org.apache.flink.api.java.functions.KeySelector;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.KeyedProcessFunction;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer011;
import org.apache.flink.util.Collector;
import org.apache.flink.util.OutputTag;

import java.util.List;

// KafkaSourceUtils.getKafkaSourceProp() is a project-specific helper (not shown) that returns the Kafka client Properties.
public class WarningSender {

    public static final String HIGH_RISK_LEVEL = "3";
    public static final String GENERAL_RISK_LEVEL = "2";
    public static final String PROMPT_RISK_LEVEL = "1";
    public static final String NO_RISK_LEVEL = "0";

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.createLocalEnvironment();

        // major-risk stream
        final OutputTag<WarningResult> highRiskTag = new OutputTag<WarningResult>("high-risk") {};
        // general-risk stream
        final OutputTag<WarningResult> generalRiskTag = new OutputTag<WarningResult>("general-risk") {};
        // prompt-risk stream
        final OutputTag<WarningResult> promptRiskTag = new OutputTag<WarningResult>("prompt-risk") {};

        // one side output per notification channel
        final OutputTag<WarningResult> smsChannel = new OutputTag<WarningResult>("sms-channel") {};
        final OutputTag<WarningResult> dingChannel = new OutputTag<WarningResult>("ding-channel") {};
        final OutputTag<WarningResult> innerMsgChannel = new OutputTag<WarningResult>("inner-msg-channel") {};

        // Producers: one Kafka topic per channel
        FlinkKafkaProducer011<String> smsProducer =
                new FlinkKafkaProducer011<>("topic_sender_sms", new SimpleStringSchema(), KafkaSourceUtils.getKafkaSourceProp());
        FlinkKafkaProducer011<String> dingProducer =
                new FlinkKafkaProducer011<>("topic_sender_ding", new SimpleStringSchema(), KafkaSourceUtils.getKafkaSourceProp());
        FlinkKafkaProducer011<String> innerMsgProducer =
                new FlinkKafkaProducer011<>("topic_sender_inner_msg", new SimpleStringSchema(), KafkaSourceUtils.getKafkaSourceProp());

        DataStream<String> warningResultDataStream = env.addSource(
                new FlinkKafkaConsumer011<>("warning_result", new SimpleStringSchema(), KafkaSourceUtils.getKafkaSourceProp()));

        // Stage 1: split the warning stream by risk level
        SingleOutputStreamOperator<WarningResult> mainDataStream = warningResultDataStream
                .map(v -> JSON.parseObject(v, WarningResult.class))
                .keyBy((KeySelector<WarningResult, String>) WarningResult::getMainId)
                .process(new KeyedProcessFunction<String, WarningResult, WarningResult>() {
                    @Override
                    public void processElement(WarningResult value, Context ctx, Collector<WarningResult> out) throws Exception {
                        // emit data to regular output
                        out.collect(value);
                        // emit data to the side output of its level; level "0" (normal) is not routed
                        if (HIGH_RISK_LEVEL.equals(value.getLevel())) {
                            ctx.output(highRiskTag, value);
                        } else if (GENERAL_RISK_LEVEL.equals(value.getLevel())) {
                            ctx.output(generalRiskTag, value);
                        } else if (PROMPT_RISK_LEVEL.equals(value.getLevel())) {
                            ctx.output(promptRiskTag, value);
                        }
                    }
                });

        // Stage 2a: major-risk handling -> SMS and DingTalk
        SingleOutputStreamOperator<WarningResult> highRiskTagStream = mainDataStream
                .getSideOutput(highRiskTag)
                .keyBy((KeySelector<WarningResult, String>) WarningResult::getMainId)
                .process(new KeyedProcessFunction<String, WarningResult, WarningResult>() {
                    @Override
                    public void processElement(WarningResult value, Context ctx, Collector<WarningResult> out) throws Exception {
                        out.collect(value);
                        // a type conversion per channel could also be done here
                        ctx.output(smsChannel, value);
                        ctx.output(dingChannel, value);
                    }
                });

        highRiskTagStream.getSideOutput(smsChannel).map(JSON::toJSONString).addSink(smsProducer);
        highRiskTagStream.getSideOutput(dingChannel).map(JSON::toJSONString).addSink(dingProducer);

        // Stage 2b: general-risk handling -> DingTalk and in-app message
        SingleOutputStreamOperator<WarningResult> generalRiskTagStream = mainDataStream
                .getSideOutput(generalRiskTag)
                .keyBy((KeySelector<WarningResult, String>) WarningResult::getMainId)
                .process(new KeyedProcessFunction<String, WarningResult, WarningResult>() {
                    @Override
                    public void processElement(WarningResult value, Context ctx, Collector<WarningResult> out) throws Exception {
                        out.collect(value);
                        ctx.output(dingChannel, value);
                        ctx.output(innerMsgChannel, value);
                    }
                });

        generalRiskTagStream.getSideOutput(dingChannel).map(JSON::toJSONString).addSink(dingProducer);
        generalRiskTagStream.getSideOutput(innerMsgChannel).map(JSON::toJSONString).addSink(innerMsgProducer);

        // Stage 2c: prompt-risk handling -> in-app message
        SingleOutputStreamOperator<WarningResult> promptRiskTagStream = mainDataStream
                .getSideOutput(promptRiskTag)
                .keyBy((KeySelector<WarningResult, String>) WarningResult::getMainId)
                .process(new KeyedProcessFunction<String, WarningResult, WarningResult>() {
                    @Override
                    public void processElement(WarningResult value, Context ctx, Collector<WarningResult> out) throws Exception {
                        out.collect(value);
                        ctx.output(innerMsgChannel, value);
                    }
                });

        promptRiskTagStream.getSideOutput(innerMsgChannel).map(JSON::toJSONString).addSink(innerMsgProducer);

        // print the execution plan as JSON
        System.out.println("<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<");
        System.out.println(env.getExecutionPlan());
        System.out.println("<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<");

        env.execute("job-warning-dispatcher");
    }

    /**
     * TODO: drive the routing from configuration (e.g. a GUI) instead of hard-coding it
     *
     * WarningConfig highConfig = new WarningConfig(HIGH_RISK_LEVEL, highRiskTag, Arrays.asList(smsChannel, dingChannel));
     * WarningConfig generalConfig = new WarningConfig(GENERAL_RISK_LEVEL, generalRiskTag, Arrays.asList(dingChannel, innerMsgChannel));
     * WarningConfig promptConfig = new WarningConfig(PROMPT_RISK_LEVEL, promptRiskTag, Arrays.asList(innerMsgChannel));
     */
    @AllArgsConstructor
    private static class WarningConfig {
        private String level;
        private OutputTag<WarningResult> outputTag;
        private List<OutputTag<WarningResult>> channels;
    }

    @Data
    public static class WarningResult {
        private String mainId;
        private String level;
        private String content;
        private Long ts;
    }
}
```
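The TODO above hints at driving the routing from configuration instead of hard-coding one second-stage function per level. As a Flink-free sketch of that idea, the hypothetical `LevelRoute` and `RouteTable` classes hold the same level-to-topic wiring the job builds by hand (major: SMS and DingTalk topics; general: DingTalk and in-app topics, where the table lists SMS instead of DingTalk; prompt: in-app topic) and count how many messages each topic would receive. In a real job this lookup would presumably sit inside one process function that calls `ctx.output` once per configured channel tag.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical config entry: a warning level and the Kafka topics it is sent to. */
final class LevelRoute {
    final String level;
    final List<String> topics;

    LevelRoute(String level, List<String> topics) {
        this.level = level;
        this.topics = topics;
    }
}

/** Sketch of config-driven fan-out that mirrors the job's hard-coded wiring; not Flink code. */
final class RouteTable {
    static final List<LevelRoute> ROUTES = List.of(
            new LevelRoute("3", List.of("topic_sender_sms", "topic_sender_ding")),
            new LevelRoute("2", List.of("topic_sender_ding", "topic_sender_inner_msg")),
            new LevelRoute("1", List.of("topic_sender_inner_msg")));

    private RouteTable() {}

    /** Counts messages per topic; each record goes once to every topic configured for its level. */
    static Map<String, Integer> countPerTopic(List<String> levels) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String level : levels) {
            for (LevelRoute route : ROUTES) {
                if (route.level.equals(level)) {
                    for (String topic : route.topics) {
                        counts.merge(topic, 1, Integer::sum);
                    }
                }
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Same levels as the test producer: (i % 4) for i = 0..99
        List<String> levels = new ArrayList<>();
        for (int i = 0; i < 100; i++) {
            levels.add(String.valueOf(i % 4));
        }
        System.out.println(countPerTopic(levels));
    }
}
```

For the test producer's data (25 records per level), this prints `{topic_sender_ding=50, topic_sender_inner_msg=50, topic_sender_sms=25}`, so a consumer reading `topic_sender_ding` from a fresh topic should eventually see 50 records.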

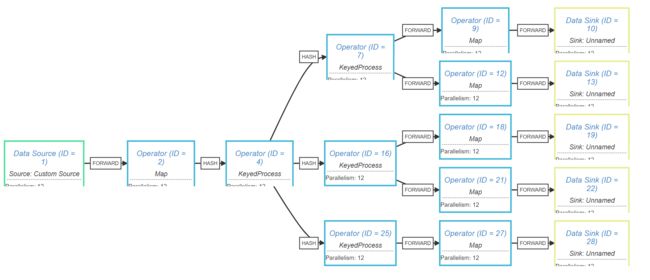

Execution plan

Testing

Producer

```java
import com.alibaba.fastjson.JSON;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.Producer;
import org.apache.kafka.clients.producer.ProducerRecord;

import java.util.Properties;

// Test data generator (class name is illustrative); assumes WarningSender is in the same package
public class WarningResultProducer {

    public static void main(String[] args) throws InterruptedException {
        Properties props = new Properties();
        props.put("bootstrap.servers", "flinkhadoop:9092");
        props.put("acks", "1");
        props.put("retries", 0);
        props.put("batch.size", 10);          // measured in bytes
        props.put("linger.ms", 10000);
        props.put("buffer.memory", 33554432);
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

        Producer<String, String> producer = new KafkaProducer<>(props);

        long start = System.currentTimeMillis();
        for (int i = 0; i < 100; i++) {
            WarningSender.WarningResult warningResult = new WarningSender.WarningResult();
            warningResult.setMainId("main" + i);
            warningResult.setContent("up to no good");
            // levels cycle through "0", "1", "2", "3"
            warningResult.setLevel(String.valueOf(i % 4));
            warningResult.setTs(System.currentTimeMillis());

            String eventStr = JSON.toJSONString(warningResult);
            producer.send(new ProducerRecord<>("warning_result", "wr" + i, eventStr), (recordMetadata, e) -> {
                if (e != null) {
                    e.printStackTrace();
                } else {
                    System.out.println(recordMetadata);
                }
            });
            // Thread.sleep(1000L);
        }
        long end = System.currentTimeMillis();
        System.out.println("send use time : [" + (end - start) + "]");

        // keep the process alive for a while before closing the producer
        Thread.sleep(40000);
        producer.close();
    }
}
```

Consumer

```java
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

import java.util.Collections;
import java.util.Properties;

// Reads one of the sender topics (class name is illustrative)
public class SenderTopicConsumer {

    public static void main(String[] args) {
        Properties props = new Properties();
        // Kafka broker address
        props.put("bootstrap.servers", "flinkhadoop:9092");
        // consumer group
        props.put("group.id", "tes23t1");
        // commit offsets automatically at a fixed interval
        props.put("enable.auto.commit", "true");
        // start from the earliest offset when the group has no committed offset
        props.put("auto.offset.reset", "earliest");
        // interval of the automatic offset commit above
        props.put("auto.commit.interval.ms", "1000");
        // if the group coordinator receives no heartbeat within this time, the consumer is considered dead and the group rebalances
        props.put("session.timeout.ms", "30000");
        // key deserializer
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        // value deserializer
        props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
        // consumer.subscribe(Collections.singletonList(KafkaConstants.DEMO_TOPIC + "_flink"));
        consumer.subscribe(Collections.singletonList("topic_sender_ding"));

        while (true) {
            // poll(long) is deprecated in Kafka clients 2.0+, where poll(Duration) replaces it
            ConsumerRecords<String, String> records = consumer.poll(100);
            records.forEach(record -> System.out.printf("partition = %d, offset = %d, key = %s, value = %s%n",
                    record.partition(), record.offset(), record.key(), record.value()));
        }
    }
}
```