Building a k8s cluster spanning multiple public clouds with kubeadm, using Aliyun mirrors

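Table of Contents

- Introduction
- Preparation:
  - (1)【Configure yum repositories】
  - (2)【Disable firewalld and SELinux】
  - (3)【Disable swap】
  - (4)【Clock synchronization】
  - (5)【Disable unneeded system services, e.g. postfix】
  - (6)【Make the hosts resolve each other】
  - (7)【SSH trust from the master to the nodes】
  - (8)【Make sure the required kernel parameters are enabled】
  - (9)【Set kube-proxy to use IPVS mode】
- Getting to Work
  - (1)【Install the packages】
  - (2)【Modify the Docker daemon configuration】
  - (3)【Run kubeadm init on the master node】
  - (4)【Join the nodes and configure the network】
  - (5)【Deploy the dashboard (on the master)】

【1 Video walkthrough: 1.16.2 + flannel 0.11.0 + iptables】

【2 Operation log: 1.15.2 + flannel 0.11.0 + iptables】

【3 Notes by the 日知录 blogger: k8s 1.18.0 + calico 3.13 + iptables】

【4 Creating a single control-plane cluster with kubeadm】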

Introduction

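Deploying k8s feels like a big deal, but the difficulty is mostly psychological. With kubeadm, deploying a Kubernetes cluster really comes down to two commands: kubeadm init on the master and kubeadm join on each node.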

Preparation:

(1)【Configure yum repositories】

Configure the Aliyun Base repository (plus the docker-ce and kubernetes repositories) on every node, see https://developer.aliyun.com/mirror/

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

cd /etc/yum.repos.d

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Check that the repositories are usable: yum repolist

(2)【Disable firewalld and SELinux】

systemctl stop firewalld && systemctl disable firewalld && setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
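On CentOS 7, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config, and sed -i replaces a symlink with a regular file instead of editing its target, so editing /etc/selinux/config directly is the safer choice. A minimal sketch for confirming that the setting landed in the file read at boot; selinux_mode is a hypothetical helper name:

```shell
#!/bin/bash
# Hypothetical helper: print the value of the active SELINUX= line.
# Defaults to /etc/selinux/config, the file SELinux reads at boot on CentOS 7.
selinux_mode() {
    local cfg=${1:-/etc/selinux/config}
    # Anchored at line start: skips comments and does not match SELINUXTYPE=
    sed -n 's/^SELINUX=\(.*\)$/\1/p' "$cfg"
}
```

selinux_mode should print disabled; until the next reboot, getenforce reports Permissive because of the earlier setenforce 0.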

(3)【Disable swap】

swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
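kubelet refuses to start while swap is enabled (unless it runs with --fail-swap-on=false), so it is worth confirming that swap is really off. A minimal sketch based on /proc/swaps, which lists one active swap area per line after a header line; swap_is_off is a hypothetical helper name:

```shell
#!/bin/bash
# Hypothetical helper: succeed only if no swap area is active.
# /proc/swaps = one header line + one line per active swap device/file.
swap_is_off() {
    local swaps=${1:-/proc/swaps}
    # Count non-empty lines after the header
    [ "$(tail -n +2 "$swaps" | grep -c .)" -eq 0 ]
}
```

For example, swap_is_off && echo "swap is off"; free -h should also show the Swap row as zero.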

(4)【Clock synchronization】

yum -y install ntpdate

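Set the Shanghai time zone:

timedatectl set-timezone Asia/Shanghai

Synchronize the time periodically, e.g. once an hour, with a root cron job (crontab -e):

0 * * * * /usr/sbin/ntpdate time1.aliyun.com >/dev/null 2>&1 && /usr/sbin/hwclock -w >/dev/null 2>&1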
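Adding the cron line by hand every time the setup is re-run leaves duplicate entries. A minimal sketch of an idempotent alternative; ensure_cron_line is a hypothetical helper that filters a crontab passed on stdin:

```shell
#!/bin/bash
# Hypothetical helper: read a crontab on stdin and print it with LINE appended,
# unless an identical line is already present (re-running setup stays harmless).
ensure_cron_line() {
    local line=$1 current
    current=$(cat)
    # Keep the existing entries, if any
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    # -x: whole-line match, -F: fixed string (the '*' fields are not regex)
    if ! printf '%s\n' "$current" | grep -qxF -- "$line"; then
        printf '%s\n' "$line"
    fi
}
```

Usage: LINE='0 * * * * /usr/sbin/ntpdate time1.aliyun.com >/dev/null 2>&1 && /usr/sbin/hwclock -w >/dev/null 2>&1'; crontab -l 2>/dev/null | ensure_cron_line "$LINE" | crontab -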

Restart the system services that depend on the time:

systemctl restart rsyslog && systemctl restart crond

(5)【Disable unneeded system services, e.g. postfix】

systemctl stop postfix && systemctl disable postfix

(6)【Make the hosts resolve each other】

Set each machine's hostname (run the matching command on that host):

hostnamectl set-hostname master01

hostnamectl set-hostname master02

hostnamectl set-hostname node01

hostnamectl set-hostname node02

cat >> /etc/hosts << EOF

192.168.187.141 master01

192.168.187.142 master02

192.168.187.143 node01

192.168.187.144 node02

192.168.187.145 node03

EOF
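cat >> /etc/hosts appends the whole block again every time it runs. A minimal idempotent sketch; add_host_entry is a hypothetical helper that only appends a hostname that is not listed yet:

```shell
#!/bin/bash
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless HOSTNAME
# already appears as a name or alias on a non-comment line.
add_host_entry() {
    local ip=$1 name=$2 file=${3:-/etc/hosts}
    # Fields 2..NF of each non-comment line are hostnames/aliases
    if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, add_host_entry 192.168.187.141 master01, repeated for master02, node01, node02 and node03 with the addresses listed above.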

(7)【SSH trust from the master to the nodes】

[root@master01 ~]# ssh-keygen

[root@master01 ~]# ssh-copy-id node01

[root@master01 ~]# ssh-copy-id node02

(8)【Make sure the required kernel parameters are enabled】

cat /proc/sys/net/bridge/bridge-nf-call-iptables

cat /proc/sys/net/bridge/bridge-nf-call-ip6tables

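If either value is not 1 (or the files do not exist yet because the br_netfilter module is not loaded), load the module and add the settings to the kernel configuration file /etc/sysctl.conf: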

modprobe br_netfilter

echo -e "net.ipv4.ip_forward = 1\nnet.bridge.bridge-nf-call-iptables = 1\nnet.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

sysctl -p
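modprobe does not survive a reboot; on CentOS 7, listing the module name in a file such as /etc/modules-load.d/br_netfilter.conf has systemd load it at boot. After sysctl -p the values can be re-checked. A minimal sketch; check_k8s_sysctls is a hypothetical helper that reads the same three keys set above:

```shell
#!/bin/bash
# Hypothetical helper: print every kernel parameter from this step that is not 1.
# ROOT defaults to /proc/sys; returns 1 if anything is off.
check_k8s_sysctls() {
    local root=${1:-/proc/sys} key val bad=0
    for key in net/bridge/bridge-nf-call-iptables \
               net/bridge/bridge-nf-call-ip6tables \
               net/ipv4/ip_forward; do
        val=$(cat "$root/$key" 2>/dev/null)
        if [ "$val" != "1" ]; then
            # A missing net/bridge file usually means br_netfilter is not loaded
            echo "${key//\//.}=${val:-missing}"
            bad=1
        fi
    done
    return "$bad"
}
```

check_k8s_sysctls && echo OK prints OK when all three are 1; otherwise it lists the offending keys, e.g. net.ipv4.ip_forward=0.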

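(9)【Set kube-proxy to use IPVS mode】

IPVS mode became GA in 1.11, but kube-proxy still defaults to iptables unless IPVS is configured explicitly.

Beginners can stick with iptables for now; the commands below only load the kernel modules that IPVS needs.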

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4
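Loading the ipvs modules only prepares the kernel; with kubeadm, kube-proxy's mode is chosen in its KubeProxyConfiguration. A minimal sketch, assuming kubeadm 1.16 (config API kubeadm.k8s.io/v1beta2) and the same version and CIDRs as the kubeadm init command used later; write_kubeadm_ipvs_config and the file name kubeadm-config.yaml are hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: write a kubeadm config that mirrors the init flags used
# later in this post and switches kube-proxy to IPVS mode.
# Assumes kubeadm 1.16 and that the ipvs kernel modules are already loaded.
write_kubeadm_ipvs_config() {
    local out=${1:-kubeadm-config.yaml}
    cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.16.2
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}
```

kubeadm init --config kubeadm-config.yaml then replaces the flag-based command (kubeadm does not allow mixing --config with most of those flags). The IPVS proxier also expects the ipset package (yum install -y ipset); ipvsadm -Ln, from the ipvsadm package, shows the resulting virtual servers.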

Getting to Work

(1)【Install the packages】

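master & nodes: every machine needs kubelet, kubeadm and docker (kubectl is installed as well so the cluster can be managed)

master: runs kubeadm init to create the cluster

nodes: run kubeadm join to join the cluster

Remove any existing kubeadm installation first:

yum remove -y kubelet kubeadm kubectl

If an old Docker version is installed, remove it as well:

yum remove -y docker docker-common docker-selinux docker-engine docker-io

To list the versions available in the repositories:

yum list kubeadm --showduplicates | sort -r

Install on all nodes. Pin the versions so every node matches the 1.16.2 images used below (a plain yum install -y docker-ce kubelet kubeadm kubectl installs the latest releases instead):

yum install -y docker-ce-18.09.9-3.el7 kubelet-1.16.2-0 kubeadm-1.16.2-0 kubectl-1.16.2-0

After installing, check that the versions are right:

kubeadm version -o short

kubelet --version

docker --version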

(2)【Modify the Docker daemon configuration】

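Check Docker's cgroup driver first (docker info | grep -i cgroup): kubelet and Docker must use the same cgroup driver. On CentOS, Docker defaults to cgroupfs; the configuration below switches Docker to systemd. Adjust it to your own environment.

Edit the daemon configuration file. The first registry mirror is a personal Aliyun accelerator address; replace it with your own:

mkdir -p /etc/docker
cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": ["https://uxgnsw6d.mirror.aliyuncs.com", "https://registry.docker-cn.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": ["overlay2.override_kernel_check=true"]
}
EOF

systemctl daemon-reload && systemctl enable docker && systemctl restart docker

systemctl enable kubelet   # only enable it at boot for now; kubelet keeps restarting until kubeadm init/join writes its configuration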
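A single missing comma in daemon.json is enough to keep Docker from starting, so validating the file before restarting is cheap insurance. A minimal sketch; validate_daemon_json is a hypothetical helper that relies on Python's json.tool module (python3 if present, otherwise the python 2.7 shipped with CentOS 7):

```shell
#!/bin/bash
# Hypothetical helper: fail if daemon.json is not valid JSON.
validate_daemon_json() {
    local file=${1:-/etc/docker/daemon.json} py
    py=$(command -v python3 || command -v python) || { echo "no python found" >&2; return 2; }
    # json.tool exits non-zero and prints the parse error on malformed input
    if ! "$py" -m json.tool "$file" > /dev/null; then
        echo "invalid JSON in $file" >&2
        return 1
    fi
}
```

Usage: validate_daemon_json && systemctl restart docker; afterwards docker info --format '{{.CgroupDriver}}' should print systemd.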

(3)【Run kubeadm init on the master node】

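# Pull the images through a domestic source: running this script is all it takes. If the link cannot be found, the script is also reproduced further down.

wget https://raw.githubusercontent.com/byte-edu/k8s/master/ImageProcess/ImageProcess.sh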

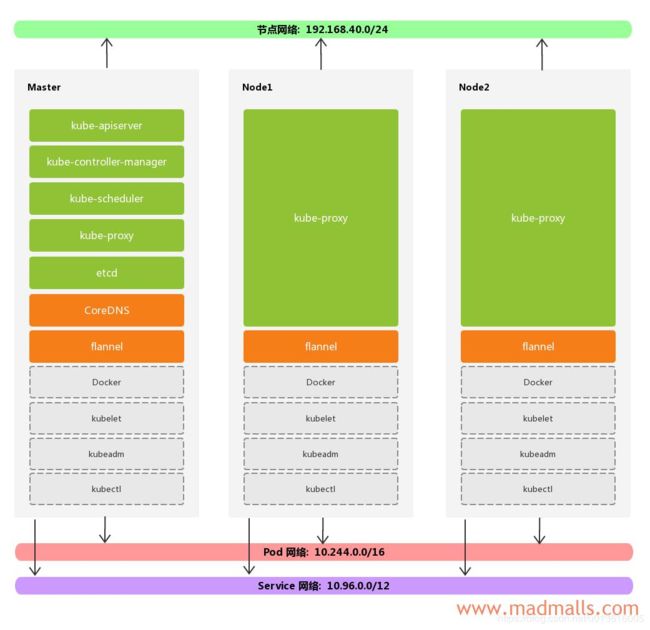

kubeadm init --kubernetes-version=1.16.2 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12

# After a successful init, kubeadm prints the following commands; run them as shown:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

ImageProcess.sh, the script referenced above:

#!/bin/bash
# Author: ****
# Description: simple script that pulls the images kubeadm needs from a personal
# Docker Hub repository and re-tags them with their original names

# Images needed on the master node
MasterImageList="
k8s.gcr.io/kube-apiserver:v1.16.2
k8s.gcr.io/kube-controller-manager:v1.16.2
k8s.gcr.io/kube-scheduler:v1.16.2
k8s.gcr.io/kube-proxy:v1.16.2
k8s.gcr.io/pause:3.1
k8s.gcr.io/etcd:3.3.15-0
k8s.gcr.io/coredns:1.6.2
quay.io/coreos/flannel:v0.11.0-amd64
"

# Images needed on worker nodes
NodeImageList="
k8s.gcr.io/kube-proxy:v1.16.2
k8s.gcr.io/pause:3.1
quay.io/coreos/flannel:v0.11.0-amd64
quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1
"

# Personal image repository on Docker Hub
PrivateReg="byteedu"

# Pull each image in the given list from the personal repository, tag it with its
# original name and drop the temporary tag. Stops with status 1 at the first failure.
function ImageProcess()
{
    for IMAGE in $1; do
        Image=${IMAGE##*/}                  # keep only "name:tag"
        PrivateImage=${PrivateReg}/${Image}
        docker pull "${PrivateImage}" || return 1
        docker tag "${PrivateImage}" "${IMAGE}" || return 1
        docker rmi "${PrivateImage}" || return 1
    done
}

# Main function
function MAIN()
{
    read -p "Is this machine a master or a node? [master|node] " -t 30 CHOICE
    case ${CHOICE} in
        "master"|"m"|"MASTER"|"M")
            if ImageProcess "${MasterImageList}"; then
                echo -e "Master node images \033[32m[OK]\033[0m"
            else
                echo -e "Master node images \033[31m[FAILED]\033[0m, please check manually!"
                exit 1
            fi
            ;;
        "node"|"n"|"NODE"|"N")
            if ImageProcess "${NodeImageList}"; then
                echo -e "Node images \033[32m[OK]\033[0m"
            else
                echo -e "Node images \033[31m[FAILED]\033[0m, please check manually!"
                exit 1
            fi
            ;;
        *)
            echo "Invalid input, please enter master or node."
            exit 1
            ;;
    esac
}

MAIN
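Before running kubeadm init, it can help to confirm that every image kubeadm expects is already on the machine. A minimal sketch; missing_images is a hypothetical helper that compares two newline-separated image lists:

```shell
#!/bin/bash
# Hypothetical helper: print every image in REQUIRED that does not appear
# (as an exact "repository:tag" line) in PRESENT.
missing_images() {
    local required=$1 present=$2 img
    for img in $required; do
        if ! printf '%s\n' "$present" | grep -qxF -- "$img"; then
            echo "$img"
        fi
    done
}
```

On the master: missing_images "$(kubeadm config images list --kubernetes-version v1.16.2)" "$(docker images --format '{{.Repository}}:{{.Tag}}')". Empty output means kubeadm init should not need to reach k8s.gcr.io. kubeadm init also accepts --image-repository; pointing it at an Aliyun mirror such as registry.aliyuncs.com/google_containers is a commonly used alternative to re-tagging, though whether that mirror still carries a given version is an assumption to verify.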

(4)【Join the nodes and configure the network】

After kubeadm init succeeds, the end of its output also shows:

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.255.20.99:6443 --token h772y7.wsuzsxj5s6vkjt7a --discovery-token-ca-cert-hash sha256:1d3dce892bc9815726d10a7ba7b8e54d436012113287ae8306def417af503d94

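Install the network add-on (flannel; its default Pod network 10.244.0.0/16 matches the --pod-network-cidr passed to kubeadm init):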

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
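The join token printed by kubeadm init expires after 24 hours by default; running kubeadm token create --print-join-command on the master prints a fresh join command. Nodes report NotReady until the network add-on is running on them. A minimal sketch for waiting on that; not_ready_nodes and wait_nodes_ready are hypothetical helpers, and the 5-second polling interval is an arbitrary choice:

```shell
#!/bin/bash
# Hypothetical helper: read "kubectl get nodes --no-headers" output on stdin and
# print the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Hypothetical helper: poll up to TRIES times (default 60), 5 s apart,
# until kubectl lists at least one node and every node is Ready.
wait_nodes_ready() {
    local tries=${1:-60} nodes pending
    while :; do
        nodes=$(kubectl get nodes --no-headers 2>/dev/null)
        pending=$(printf '%s\n' "$nodes" | not_ready_nodes)
        if [ -n "$nodes" ] && [ -z "$pending" ]; then
            return 0
        fi
        tries=$((tries - 1))
        [ "$tries" -gt 0 ] || break
        sleep 5
    done
    echo "nodes not Ready yet: ${pending:-<none listed>}" >&2
    return 1
}
```

wait_nodes_ready 60 polls for roughly five minutes before giving up.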

(5)【Deploy the dashboard (on the master)】

#1 kubectl create -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta1/aio/deploy/recommended.yaml

#2 kubectl get pods --namespace=kubernetes-dashboard   # check the pods in the newly created namespace

NAME                                          READY   STATUS    RESTARTS   AGE
kubernetes-dashboard-5c8f9556c4-w6pzj         1/1     Running   0          7m46s
kubernetes-metrics-scraper-86456cdd8f-7js7v   1/1     Running   0          7m46s

#3 kubectl get service --namespace=kubernetes-dashboard   # check the port mappings

NAME                        TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
dashboard-metrics-scraper   ClusterIP   10.98.83.31     <none>        8000/TCP        55m
kubernetes-dashboard        NodePort    10.107.192.48   <none>        443:30520/TCP   55m

#4 Edit the service and change type: ClusterIP to NodePort (the listing above was captured after this change)

# kubectl edit service kubernetes-dashboard --namespace=kubernetes-dashboard

spec:
  clusterIP: 10.107.192.48
  externalTrafficPolicy: Cluster
  ports:
  - nodePort: 30520
    port: 443
    protocol: TCP
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  sessionAffinity: None
  type: NodePort   # note this line
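Instead of editing the service interactively, kubectl -n kubernetes-dashboard patch service kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}' makes the same change, and Kubernetes assigns the node port (30520 in the listing above). A minimal sketch that builds the login URL from whatever port was assigned; dashboard_url is a hypothetical helper:

```shell
#!/bin/bash
# Hypothetical helper: print the dashboard URL for NODE_IP, reading the NodePort
# that Kubernetes assigned to the kubernetes-dashboard service.
dashboard_url() {
    local node_ip=$1 port
    port=$(kubectl -n kubernetes-dashboard get service kubernetes-dashboard \
        -o jsonpath='{.spec.ports[0].nodePort}') || return 1
    # An empty nodePort means the service is still of type ClusterIP
    [ -n "$port" ] || { echo "service has no nodePort (still ClusterIP?)" >&2; return 1; }
    echo "https://${node_ip}:${port}/"
}
```

dashboard_url 192.168.187.141 prints the https://IP:PORT/ address to open in a browser.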

#5 Create a dashboard admin token (run on the master only)

cat >/root/admin-token.yaml <<EOF
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
EOF

#6 Create the user

# kubectl create -f /root/admin-token.yaml

clusterrolebinding.rbac.authorization.k8s.io/admin created

serviceaccount/admin created

#7 Get the token

# kubectl describe secret/$(kubectl get secret -nkube-system | grep admin-token | awk '{print $1}') -nkube-system

Name: admin-token-lzkfl

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin

kubernetes.io/service-account.uid: 6f194e13-2f09-4800-887d-99a183b3d04d

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi10b2tlbi1semtmbCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjZmMTk0ZTEzLTJmMDktNDgwMC04ODdkLTk5YTE4M2IzZDA0ZCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTphZG1pbiJ9.vlSVy45l42tzpm2ppSJIBMSKm_OcL_f-miwOV6M37R3b3nda48FNvMQ1oTWFKRM4VZugJcrkSMg7OVBQqyZ7CVV94xaMiiV49eaDFfd9dKGmihHVAfQuywvwcA4wYnspf0TVQYmkQPOagPzPxQGzNZvulsB9LeCCvVUSnKXeW3O0U8rSL13WplBwwcjC2J91vZpljgNaBMiuemafR1kqRmdZ7CLGuaLNCLw5LGLeTi0OQKZhI15Wjhs2juIqP7ZI6qtgDsSeYe8Y3tUxD4Htqa5VtYxhZKll-5jFWgNXWE3xnzdUVCW8t4iLMoFbxrs5ar-vQOIL8C11zBAmeWdJeQ
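Filtering kubectl get secret through grep can match more than one secret. A minimal sketch that looks the secret up through the ServiceAccount instead and decodes only the token; admin_token is a hypothetical helper, and it relies on the token Secret that Kubernetes 1.16 creates automatically for each ServiceAccount (from 1.24 on these are no longer auto-created, and kubectl create token is used instead):

```shell
#!/bin/bash
# Hypothetical helper: print the decoded bearer token of a ServiceAccount
# (defaults: namespace kube-system, account admin, as created in step #5).
admin_token() {
    local ns=${1:-kube-system} sa=${2:-admin} secret
    # Name of the token Secret attached to the ServiceAccount
    secret=$(kubectl -n "$ns" get serviceaccount "$sa" -o jsonpath='{.secrets[0].name}') || return 1
    [ -n "$secret" ] || return 1
    # Secret data is base64-encoded
    kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}
```

admin_token prints only the token string, ready to paste into the dashboard login page.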

#8 Log in to the dashboard: https://192.168.187.141:30520/#/overview?namespace=default

Choose Token as the login method and paste the token from step #7.