
1 默认IO模型

1.1 配置项的解析

Tomcat 7.0.35 的配置文件是$CATALINA_HOME/conf/server.xml.

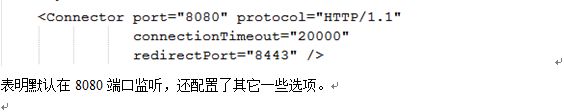

可以查看默认配置

在其上方还有这么一段配置

|

大部分IO配置都是在这里面配置的。

首先需要解决的问题---配置项如何解析的。

The function org.apache.catalina.startup.Catalina.load(), declared as

    /**
     * Start a new server instance.
     */
    public void load() {

contains the part that parses this XML file.

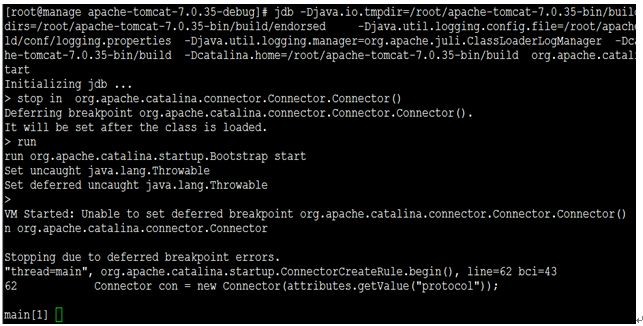

A fragment captured with jdb while stepping through load():

    610    digester.parse(inputSource);

Parsing starts here, so let us step in and follow it.

The source is:

    /**
     * Parse the content of the specified input source using this Digester.
     * Returns the root element from the object stack (if any).
     *
     * @param input Input source containing the XML data to be parsed
     *
     * @exception IOException if an input/output error occurs
     * @exception SAXException if a parsing exception occurs
     */
    public Object parse(InputSource input) throws IOException, SAXException {

        configure();
        getXMLReader().parse(input);
        return (root);

    }

The actual parsing is done by the second statement: getXMLReader().parse(input);

What does getXMLReader() return here?

    main[1] print reader
     reader = "com.sun.org.apache.xerces.internal.jaxp.SAXParserImpl$JAXPSAXParser@880a8"

In other words, reader has the type com.sun.org.apache.xerces.internal.jaxp.SAXParserImpl$JAXPSAXParser. The $ in the name shows that it is a nested class.

Next, the parse(input) function of this class is executed.

Below, in execution order, are functions of the Digester class (org.apache.tomcat.util.digester.Digester) that get called during that parse.

These are only a few examples.

setDocumentLocator()

The source is:

    @Override
    public void setDocumentLocator(Locator locator) {

        if (saxLog.isDebugEnabled()) {
            saxLog.debug("setDocumentLocator(" + locator + ")");
        }

        this.locator = locator;

    }

Result of the execution:

    1,171    this.locator = locator;

    main[1] print locator
     locator = "com.sun.org.apache.xerces.internal.parsers.AbstractSAXParser$LocatorProxy@1b57dcc"

startDocument()

The source is:

    @Override
    public void startDocument() throws SAXException {

        if (saxLog.isDebugEnabled()) {
            saxLog.debug("startDocument()");
        }

        // ensure that the digester is properly configured, as
        // the digester could be used as a SAX ContentHandler
        // rather than via the parse() methods.
        configure();
    }

All this does is call configure(). No variable of interest is set here, so it can be skipped.

startElement()

Located at line 1227 of the file.

    @Override
    public void startElement(String namespaceURI, String localName,
                             String qName, Attributes list)

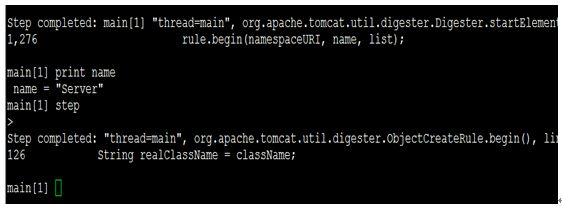

The Server element is parsed first.

Keep stepping to see exactly how the Server element is handled.

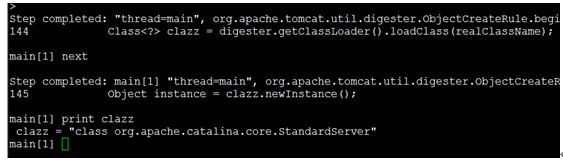

As the figure above shows, execution next enters org.apache.tomcat.util.digester.ObjectCreateRule.begin() to do the actual work. The source is:

    @Override
    public void begin(String namespace, String name, Attributes attributes)
            throws Exception {

        // Identify the name of the class to instantiate
        String realClassName = className;
        if (attributeName != null) {
            String value = attributes.getValue(attributeName);
            if (value != null) {
                realClassName = value;
            }
        }
        if (digester.log.isDebugEnabled()) {
            digester.log.debug("[ObjectCreateRule]{" + digester.match +
                    "}New " + realClassName);
        }

        if (realClassName == null) {
            throw new NullPointerException("No class name specified for " +
                    namespace + " " + name);
        }

        // Instantiate the new object and push it on the context stack
        Class clazz = digester.getClassLoader().loadClass(realClassName);
        Object instance = clazz.newInstance();
        digester.push(instance);
    }

Continuing the trace with jdb shows that this is where the class org.apache.catalina.core.StandardServer is instantiated.

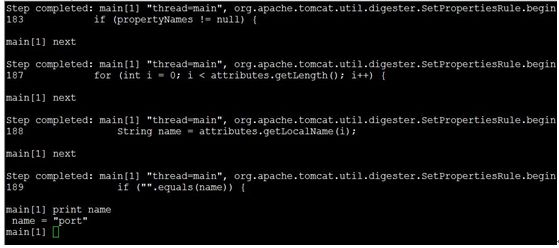

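Next, the attributes of Server are parsed.

The trace shows that the port 8005 item is handled first.

What this step really does is set two properties of org.apache.catalina.core.StandardServer: port and shutdown.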
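The two steps traced above (instantiate a class by name, then copy the XML attributes onto it) come down to a few lines of reflection. The following is a simplified sketch, not Tomcat's code: DemoServer is a hypothetical stand-in for StandardServer, and create, setProperties and build are helper names made up for the illustration.

```java
import java.lang.reflect.Method;
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.Map;

final class CreateAndSetSketch {

    /** Hypothetical stand-in for StandardServer with just the two properties discussed. */
    public static final class DemoServer {
        private int port;
        private String shutdown;
        public void setPort(int port) { this.port = port; }
        public void setShutdown(String shutdown) { this.shutdown = shutdown; }
        public int getPort() { return port; }
        public String getShutdown() { return shutdown; }
    }

    /** Object-create step: load the class by name, instantiate it, push it on the stack. */
    static Object create(String className, Deque<Object> stack)
            throws ReflectiveOperationException {
        Object instance = Class.forName(className).getDeclaredConstructor().newInstance();
        stack.push(instance);
        return instance;
    }

    /** Set-properties step: call setXxx(value) on the target for every attribute xxx. */
    static void setProperties(Object target, Map<String, String> attributes)
            throws ReflectiveOperationException {
        for (Map.Entry<String, String> attr : attributes.entrySet()) {
            String key = attr.getKey();
            String setter = "set" + Character.toUpperCase(key.charAt(0)) + key.substring(1);
            for (Method m : target.getClass().getMethods()) {
                if (m.getName().equals(setter) && m.getParameterCount() == 1) {
                    // Only int and String parameters are converted in this sketch.
                    Object value = attr.getValue();
                    if (m.getParameterTypes()[0] == int.class) {
                        value = Integer.valueOf(attr.getValue());
                    }
                    m.invoke(target, value);
                }
            }
        }
    }

    /** Runs both steps and returns the configured object (checked exceptions wrapped). */
    static Object build(String className, Map<String, String> attributes) {
        try {
            Deque<Object> stack = new ArrayDeque<>();
            Object instance = create(className, stack);
            setProperties(stack.peek(), attributes);
            return instance;
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        Map<String, String> attrs = new LinkedHashMap<>();
        attrs.put("port", "8005");
        attrs.put("shutdown", "SHUTDOWN");
        DemoServer server = (DemoServer) build(DemoServer.class.getName(), attrs);
        System.out.println(server.getPort() + " " + server.getShutdown()); // prints "8005 SHUTDOWN"
    }
}
```

The object stack matters once elements nest: a child element's rule can find its parent one position below the top of the stack.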

characters()

    @Override
    public void characters(char buffer[], int start, int length)
            throws SAXException {

        if (saxLog.isDebugEnabled()) {
            saxLog.debug("characters(" + new String(buffer, start, length) + ")");
        }

        bodyText.append(buffer, start, length);

    }
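The callback sequence traced above (setDocumentLocator, startDocument, startElement, characters) is ordinary SAX behaviour and can be observed outside Tomcat. The sketch below uses only the JDK's SAX API; the class name SaxCallbackTrace and the tiny XML string are made up for the illustration and are not part of Tomcat.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.Attributes;
import org.xml.sax.InputSource;
import org.xml.sax.Locator;
import org.xml.sax.XMLReader;
import org.xml.sax.helpers.DefaultHandler;

final class SaxCallbackTrace {

    /** Records every SAX callback in the order in which the parser fires it. */
    static final class TraceHandler extends DefaultHandler {
        final List<String> events = new ArrayList<>();

        @Override public void setDocumentLocator(Locator locator) {
            events.add("setDocumentLocator");
        }
        @Override public void startDocument() {
            events.add("startDocument");
        }
        @Override public void startElement(String uri, String local, String qName,
                                           Attributes attrs) {
            StringBuilder sb = new StringBuilder("startElement ").append(qName);
            for (int i = 0; i < attrs.getLength(); i++) {
                sb.append(' ').append(attrs.getQName(i)).append('=').append(attrs.getValue(i));
            }
            events.add(sb.toString());
        }
        @Override public void characters(char[] ch, int start, int length) {
            String text = new String(ch, start, length).trim();
            if (!text.isEmpty()) {
                events.add("characters " + text);
            }
        }
        @Override public void endElement(String uri, String local, String qName) {
            events.add("endElement " + qName);
        }
        @Override public void endDocument() {
            events.add("endDocument");
        }
    }

    /** Parses the XML the same way Digester.parse() does: reader.parse(inputSource). */
    static List<String> trace(String xml) {
        try {
            XMLReader reader = SAXParserFactory.newInstance().newSAXParser().getXMLReader();
            TraceHandler handler = new TraceHandler();
            reader.setContentHandler(handler);
            reader.parse(new InputSource(new StringReader(xml)));
            return handler.events;
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        String xml = "<Server port=\"8005\" shutdown=\"SHUTDOWN\">"
                + "<Service name=\"Catalina\"/></Server>";
        trace(xml).forEach(System.out::println);
    }
}
```

Digester plays the role of TraceHandler here: it is the ContentHandler, and instead of recording the events it fires the rules that match the current element.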

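In fact, anyone already familiar with the Digester class does not need to trace the XML parsing step by step like this.

http://www.oschina.net/search?scope=project&q=Digester

As can be seen there, Digester works from a set of parsing rules, so we can go straight to the rule configuration that Tomcat sets up for it.

In the class org.apache.catalina.startup.Catalina, the function createStartDigester() contains the following: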

    /**
     * Create and configure the Digester we will be using for startup.
     */
    protected Digester createStartDigester() {
        long t1=System.currentTimeMillis();
        // Initialize the digester
        Digester digester = new Digester();
        digester.setValidating(false);
        digester.setRulesValidation(true);
        HashMap<Class<?>, List<String>> fakeAttributes = new HashMap<Class<?>, List<String>>();
        ArrayList<String> attrs = new ArrayList<String>();
        attrs.add("className");
        fakeAttributes.put(Object.class, attrs);
        digester.setFakeAttributes(fakeAttributes);
        digester.setClassLoader(StandardServer.class.getClassLoader());

        // Configure the actions we will be using
        digester.addObjectCreate("Server",
                                 "org.apache.catalina.core.StandardServer",
                                 "className");
        digester.addSetProperties("Server");
        digester.addSetNext("Server",
                            "setServer",
                            "org.apache.catalina.Server");

        digester.addObjectCreate("Server/GlobalNamingResources",
                                 "org.apache.catalina.deploy.NamingResources");
        digester.addSetProperties("Server/GlobalNamingResources");
        digester.addSetNext("Server/GlobalNamingResources",
                            "setGlobalNamingResources",
                            "org.apache.catalina.deploy.NamingResources");

        digester.addObjectCreate("Server/Listener",
                                 null, // MUST be specified in the element
                                 "className");
        digester.addSetProperties("Server/Listener");
        digester.addSetNext("Server/Listener",
                            "addLifecycleListener",
                            "org.apache.catalina.LifecycleListener");

        digester.addObjectCreate("Server/Service",
                                 "org.apache.catalina.core.StandardService",
                                 "className");
        digester.addSetProperties("Server/Service");
        digester.addSetNext("Server/Service",
                            "addService",
                            "org.apache.catalina.Service");

        digester.addObjectCreate("Server/Service/Listener",
                                 null, // MUST be specified in the element
                                 "className");
        digester.addSetProperties("Server/Service/Listener");
        digester.addSetNext("Server/Service/Listener",
                            "addLifecycleListener",
                            "org.apache.catalina.LifecycleListener");

        //Executor
        digester.addObjectCreate("Server/Service/Executor",
                                 "org.apache.catalina.core.StandardThreadExecutor",
                                 "className");
        digester.addSetProperties("Server/Service/Executor");

        digester.addSetNext("Server/Service/Executor",
                            "addExecutor",
                            "org.apache.catalina.Executor");

        digester.addRule("Server/Service/Connector",
                         new ConnectorCreateRule());
        digester.addRule("Server/Service/Connector",
                         new SetAllPropertiesRule(new String[]{"executor"}));
        digester.addSetNext("Server/Service/Connector",
                            "addConnector",
                            "org.apache.catalina.connector.Connector");

        digester.addObjectCreate("Server/Service/Connector/Listener",
                                 null, // MUST be specified in the element
                                 "className");
        digester.addSetProperties("Server/Service/Connector/Listener");
        digester.addSetNext("Server/Service/Connector/Listener",
                            "addLifecycleListener",
                            "org.apache.catalina.LifecycleListener");

        // Add RuleSets for nested elements
        digester.addRuleSet(new NamingRuleSet("Server/GlobalNamingResources/"));
        digester.addRuleSet(new EngineRuleSet("Server/Service/"));
        digester.addRuleSet(new HostRuleSet("Server/Service/Engine/"));
        digester.addRuleSet(new ContextRuleSet("Server/Service/Engine/Host/"));
        addClusterRuleSet(digester, "Server/Service/Engine/Host/Cluster/");
        digester.addRuleSet(new NamingRuleSet("Server/Service/Engine/Host/Context/"));

        // When the 'engine' is found, set the parentClassLoader.
        digester.addRule("Server/Service/Engine",
                         new SetParentClassLoaderRule(parentClassLoader));
        addClusterRuleSet(digester, "Server/Service/Engine/Cluster/");

        long t2=System.currentTimeMillis();
        if (log.isDebugEnabled()) {
            log.debug("Digester for server.xml created " + ( t2-t1 ));
        }
        return (digester);

    }

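From these rules we can read off which class each configuration element produces.

For example, the class behind Connector is org.apache.catalina.connector.Connector.

Tomcat's XML parsing is built on Digester: the org.apache.tomcat.util.digester package used above is Tomcat's own bundled derivative of Commons Digester, and the upstream Commons Digester in turn depends on Commons BeanUtils and Commons Collections. Readers who are interested can study the source of these components. To get to the Tomcat problem quickly, we move straight on to solving it.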
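How a pattern such as "Server/Service" selects a rule can be shown in miniature. The sketch below is a hedged illustration, not Tomcat's matcher: it tracks the current element path in a plain SAX handler and looks it up in a map whose three entries are copied from the addObjectCreate calls above. The class name PatternRuleSketch, the sample XML, and com.example.MyExecutor are hypothetical.

```java
import java.io.StringReader;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.Attributes;
import org.xml.sax.InputSource;
import org.xml.sax.helpers.DefaultHandler;

final class PatternRuleSketch {

    /** Pattern -> default class, copied from three of the rules registered above. */
    static final Map<String, String> RULES = new LinkedHashMap<>();
    static {
        RULES.put("Server", "org.apache.catalina.core.StandardServer");
        RULES.put("Server/Service", "org.apache.catalina.core.StandardService");
        RULES.put("Server/Service/Executor", "org.apache.catalina.core.StandardThreadExecutor");
    }

    /** Returns "pattern -> class" for every element whose path matches a rule. */
    static List<String> match(String xml) {
        List<String> hits = new ArrayList<>();
        Deque<String> path = new ArrayDeque<>();
        DefaultHandler handler = new DefaultHandler() {
            @Override
            public void startElement(String uri, String local, String qName, Attributes attrs) {
                path.addLast(qName);
                String pattern = String.join("/", path);
                String defaultClass = RULES.get(pattern);
                if (defaultClass != null) {
                    // Third argument of addObjectCreate: a className attribute overrides the default.
                    String override = attrs.getValue("className");
                    hits.add(pattern + " -> " + (override != null ? override : defaultClass));
                }
            }

            @Override
            public void endElement(String uri, String local, String qName) {
                path.removeLast();
            }
        };
        try {
            SAXParserFactory.newInstance().newSAXParser()
                    .parse(new InputSource(new StringReader(xml)), handler);
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
        return hits;
    }

    public static void main(String[] args) {
        String xml = "<Server port=\"8005\"><Service name=\"Catalina\">"
                + "<Executor name=\"pool\" className=\"com.example.MyExecutor\"/>"
                + "</Service></Server>";
        match(xml).forEach(System.out::println);
    }
}
```

Connector is deliberately left out of the map: as the listing shows, it is created by a dedicated ConnectorCreateRule rather than by addObjectCreate.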

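1.2 The Connector's BIO processing model

In 1.1 we saw that the corresponding class is org.apache.catalina.connector.Connector.

Now we trace how it is set up.

We go straight to the part that creates the I/O threads: startInternal() of the BIO endpoint (org.apache.tomcat.util.net.JIoEndpoint) and the helper functions it calls.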

    @Override
    public void startInternal() throws Exception {

        if (!running) {
            running = true;
            paused = false;

            // Create worker collection
            if (getExecutor() == null) {
                createExecutor();
            }

            initializeConnectionLatch();

            startAcceptorThreads();

            // Start async timeout thread
            Thread timeoutThread = new Thread(new AsyncTimeout(),
                    getName() + "-AsyncTimeout");
            timeoutThread.setPriority(threadPriority);
            timeoutThread.setDaemon(true);
            timeoutThread.start();
        }
    }

    public void createExecutor() {
        internalExecutor = true;
        TaskQueue taskqueue = new TaskQueue();
        TaskThreadFactory tf = new TaskThreadFactory(getName() + "-exec-", daemon, getThreadPriority());
        executor = new ThreadPoolExecutor(getMinSpareThreads(), getMaxThreads(), 60, TimeUnit.SECONDS,taskqueue, tf);
        taskqueue.setParent( (ThreadPoolExecutor) executor);
    }

    protected final void startAcceptorThreads() {
        int count = getAcceptorThreadCount();
        acceptors = new Acceptor[count];

        for (int i = 0; i < count; i++) {
            acceptors[i] = createAcceptor();
            Thread t = new Thread(acceptors[i], getName() + "-Acceptor-" + i);
            t.setPriority(getAcceptorThreadPriority());
            t.setDaemon(getDaemon());
            t.start();
        }
    }


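From this code and the code that follows it, we can draw these conclusions:

1) An executor thread pool (ThreadPoolExecutor) is created, with a thread factory of type TaskThreadFactory.

2) Acceptor threads of type org.apache.tomcat.util.net.JIoEndpoint.Acceptor are created: getAcceptorThreadCount() of them, which is one by default.

3) One asynchronous timeout thread (AsyncTimeout) is created.

In effect, under the default I/O model a single thread accepts client connections, and each client socket is then served by a worker thread dedicated to it for as long as threads are available (the pool is capped at the maximum thread count).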
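The blocking model described above can be condensed into a small, self-contained sketch: one acceptor thread blocks in accept(), and every accepted socket occupies one worker from a bounded pool until the client disconnects. This is an illustration under simplifying assumptions, not Tomcat's JIoEndpoint; the class BlockingEchoServer, its echo protocol, and the thread name are made up.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class BlockingEchoServer implements AutoCloseable {
    private final ServerSocket serverSocket;
    private final ExecutorService workers;

    BlockingEchoServer(int maxThreads) {
        try {
            serverSocket = new ServerSocket(0, 50, InetAddress.getLoopbackAddress());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        workers = Executors.newFixedThreadPool(maxThreads);   // plays the role of maxThreads
        Thread acceptor = new Thread(this::acceptLoop, "demo-bio-Acceptor-0");
        acceptor.setDaemon(true);
        acceptor.start();
    }

    int port() {
        return serverSocket.getLocalPort();
    }

    private void acceptLoop() {
        while (!serverSocket.isClosed()) {
            try {
                Socket client = serverSocket.accept();    // blocks until a client connects
                workers.execute(() -> handle(client));    // this worker is tied up until the client leaves
            } catch (IOException | RuntimeException e) {
                // accept() fails once close() is called; the loop condition then ends the thread
            }
        }
    }

    private static void handle(Socket client) {
        try (Socket s = client;
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(s.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(s.getOutputStream(), StandardCharsets.UTF_8), true)) {
            String line;
            while ((line = in.readLine()) != null) {      // blocking read: the thread waits here when idle
                out.println("echo: " + line);
            }
        } catch (IOException ignored) {
            // connection dropped; nothing to clean up beyond the try-with-resources
        }
    }

    @Override
    public void close() {
        try {
            serverSocket.close();
        } catch (IOException ignored) {
            // already closed
        }
        workers.shutdownNow();
    }

    /** Starts a server, sends one line and returns the reply line. */
    static String roundTrip(String message) {
        try (BlockingEchoServer server = new BlockingEchoServer(4);
             Socket client = new Socket(InetAddress.getLoopbackAddress(), server.port());
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(client.getOutputStream(), StandardCharsets.UTF_8), true);
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(client.getInputStream(), StandardCharsets.UTF_8))) {
            out.println(message);
            return in.readLine();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("ping")); // prints "echo: ping"
    }
}
```

The weak point is visible in handle(): a worker that is only waiting for the next line from a slow or idle client is still unavailable to everyone else.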

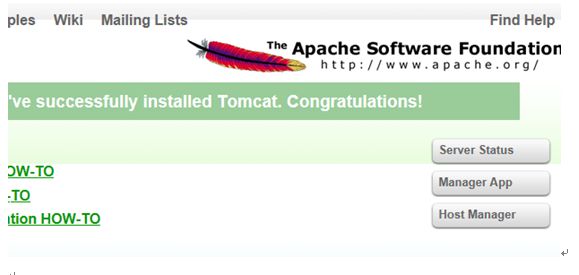

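To look at the state of the process, open http://IP:8080/ in a browser.

Click "Server Status". If a user name and password are requested, make sure that the file ...../conf/tomcat-users.xml defines a suitable role and a user that has it (in Tomcat 7 the status page is available to the manager-gui and manager-status roles). The user name and password are up to you.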

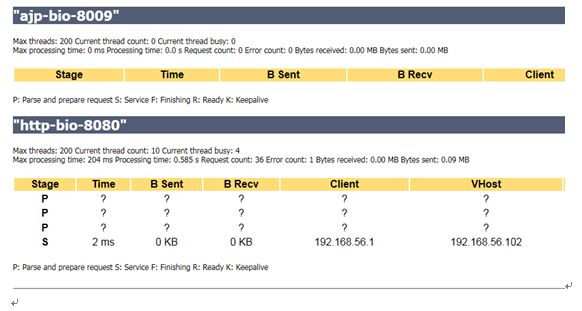

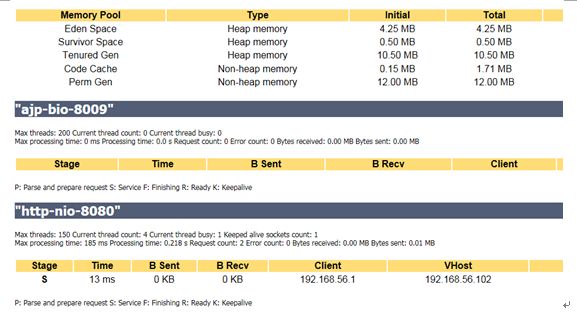

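Once logged in, the page shows information about the JVM as well as the state of the threads.

For port 8080 the thread figures are: Max threads: 200, Current thread count: 10, Current thread busy: 4.

BIO uses threads inefficiently, because a thread stays occupied while its connection is idle. Most network server frameworks today have moved to non-blocking, multiplexed I/O instead, for example Redis, Thrift, Netty and Mina.

So from here on the focus is on switching the I/O model to NIO and on studying how NIO runs inside Tomcat in order to tune the relevant parameters.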

1.3 Connector的nio处理模型

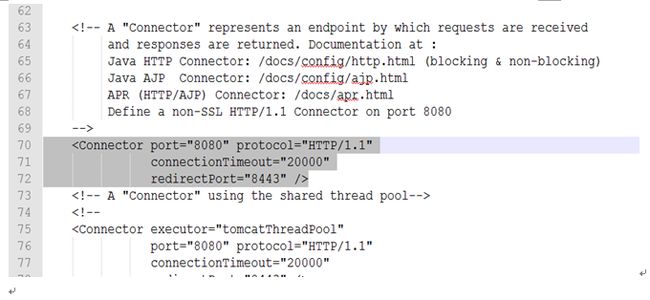

为了修改Tomcat的处理模型为nio,首先需要修改.../conf/server.xml文件。

具体修改如下:

之前默认的Connector配置为

修改的地方有2个

1)

    <Connector executor="tomcatThreadPool"
               port="8080" protocol="org.apache.coyote.http11.Http11NioProtocol"
               connectionTimeout="20000"
               redirectPort="8443" />

2)

    <Executor name="tomcatThreadPool" namePrefix="catalina-exec-"
              maxThreads="150" minSpareThreads="4"/>

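Note that 1) uses an executor. What does that mean?

Anyone who has looked at Thrift's I/O model will recognize it: the work is split into two parts, [receiving requests and sending responses] and [processing a request to produce the response content], and the two parts are handled by separate threads, which speeds up request handling in Tomcat.

Restart Tomcat and look at Server Status again.

It shows that the I/O model has changed to NIO.

The threads at this point, as reported by jstack, are listed below.

The threads to focus on are the NIO threads (http-nio-8080-Acceptor-0, http-nio-8080-ClientPoller-0 and NioBlockingSelector.BlockPoller-1, marked in red in the original).

All remaining threads (marked in blue in the original) are other threads.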

    [root@manage bin]# jstack 1878
    2015-06-17 07:42:19
    Full thread dump OpenJDK Client VM (23.25-b01 mixed mode):

    "Attach Listener" daemon prio=10 tid=0x91336800 nid=0x77e runnable [0x00000000]
       java.lang.Thread.State: RUNNABLE

    "catalina-exec-4" daemon prio=10 tid=0x912ddc00 nid=0x76b waiting on condition [0x9100b000]
       java.lang.Thread.State: WAITING (parking)
            at sun.misc.Unsafe.park(Native Method)
            - parking to wait for <0x9cdc2260> (a java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject)
            at java.util.concurrent.locks.LockSupport.park(LockSupport.java:186)
            at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.await(AbstractQueuedSynchronizer.java:2043)
            at java.util.concurrent.LinkedBlockingQueue.take(LinkedBlockingQueue.java:386)
            at org.apache.tomcat.util.threads.TaskQueue.take(TaskQueue.java:104)
            at org.apache.tomcat.util.threads.TaskQueue.take(TaskQueue.java:32)
            at java.util.concurrent.ThreadPoolExecutor.getTask(ThreadPoolExecutor.java:1069)
            at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1131)
            at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
            at java.lang.Thread.run(Thread.java:701)

    "catalina-exec-3" daemon prio=10 tid=0x912d9c00 nid=0x76a waiting on condition [0x9105c000]
       java.lang.Thread.State: WAITING (parking)
            at sun.misc.Unsafe.park(Native Method)
            - parking to wait for <0x9cdc2260> (a java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject)
            at java.util.concurrent.locks.LockSupport.park(LockSupport.java:186)
            at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.await(AbstractQueuedSynchronizer.java:2043)
            at java.util.concurrent.LinkedBlockingQueue.take(LinkedBlockingQueue.java:386)
            at org.apache.tomcat.util.threads.TaskQueue.take(TaskQueue.java:104)
            at org.apache.tomcat.util.threads.TaskQueue.take(TaskQueue.java:32)
            at java.util.concurrent.ThreadPoolExecutor.getTask(ThreadPoolExecutor.java:1069)
            at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1131)
            at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
            at java.lang.Thread.run(Thread.java:701)

    "catalina-exec-2" daemon prio=10 tid=0x91332800 nid=0x769 waiting on condition [0x90dc6000]
       java.lang.Thread.State: WAITING (parking)
            at sun.misc.Unsafe.park(Native Method)
            - parking to wait for <0x9cdc2260> (a java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject)
            at java.util.concurrent.locks.LockSupport.park(LockSupport.java:186)
            at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.await(AbstractQueuedSynchronizer.java:2043)
            at java.util.concurrent.LinkedBlockingQueue.take(LinkedBlockingQueue.java:386)
            at org.apache.tomcat.util.threads.TaskQueue.take(TaskQueue.java:104)
            at org.apache.tomcat.util.threads.TaskQueue.take(TaskQueue.java:32)
            at java.util.concurrent.ThreadPoolExecutor.getTask(ThreadPoolExecutor.java:1069)
            at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1131)
            at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
            at java.lang.Thread.run(Thread.java:701)

    "catalina-exec-1" daemon prio=10 tid=0x912f5c00 nid=0x768 waiting on condition [0x90e25000]
       java.lang.Thread.State: WAITING (parking)
            at sun.misc.Unsafe.park(Native Method)
            - parking to wait for <0x9cdc2260> (a java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject)
            at java.util.concurrent.locks.LockSupport.park(LockSupport.java:186)
            at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.await(AbstractQueuedSynchronizer.java:2043)
            at java.util.concurrent.LinkedBlockingQueue.take(LinkedBlockingQueue.java:386)
            at org.apache.tomcat.util.threads.TaskQueue.take(TaskQueue.java:104)
            at org.apache.tomcat.util.threads.TaskQueue.take(TaskQueue.java:32)
            at java.util.concurrent.ThreadPoolExecutor.getTask(ThreadPoolExecutor.java:1069)
            at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1131)
            at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
            at java.lang.Thread.run(Thread.java:701)

    "ajp-bio-8009-AsyncTimeout" daemon prio=10 tid=0x912f3800 nid=0x767 waiting on condition [0x90e76000]
       java.lang.Thread.State: TIMED_WAITING (sleeping)
            at java.lang.Thread.sleep(Native Method)
            at org.apache.tomcat.util.net.JIoEndpoint$AsyncTimeout.run(JIoEndpoint.java:148)
            at java.lang.Thread.run(Thread.java:701)

    "ajp-bio-8009-Acceptor-0" daemon prio=10 tid=0x912f2000 nid=0x766 runnable [0x90ec7000]
       java.lang.Thread.State: RUNNABLE
            at java.net.PlainSocketImpl.socketAccept(Native Method)
            at java.net.AbstractPlainSocketImpl.accept(AbstractPlainSocketImpl.java:375)
            at java.net.ServerSocket.implAccept(ServerSocket.java:478)
            at java.net.ServerSocket.accept(ServerSocket.java:446)
            at org.apache.tomcat.util.net.DefaultServerSocketFactory.acceptSocket(DefaultServerSocketFactory.java:60)
            at org.apache.tomcat.util.net.JIoEndpoint$Acceptor.run(JIoEndpoint.java:216)
            at java.lang.Thread.run(Thread.java:701)

    "http-nio-8080-Acceptor-0" daemon prio=10 tid=0x912f0800 nid=0x765 runnable [0x90f18000]
       java.lang.Thread.State: RUNNABLE
            at sun.nio.ch.ServerSocketChannelImpl.accept0(Native Method)
            at sun.nio.ch.ServerSocketChannelImpl.accept(ServerSocketChannelImpl.java:165)
            - locked <0x9c92f098> (a java.lang.Object)
            at org.apache.tomcat.util.net.NioEndpoint$Acceptor.run(NioEndpoint.java:787)
            at java.lang.Thread.run(Thread.java:701)

    "http-nio-8080-ClientPoller-0" daemon prio=10 tid=0x912e1400 nid=0x764 runnable [0x90f69000]
       java.lang.Thread.State: RUNNABLE
            at sun.nio.ch.EPollArrayWrapper.epollWait(Native Method)
            at sun.nio.ch.EPollArrayWrapper.poll(EPollArrayWrapper.java:228)
            at sun.nio.ch.EPollSelectorImpl.doSelect(EPollSelectorImpl.java:83)
            at sun.nio.ch.SelectorImpl.lockAndDoSelect(SelectorImpl.java:87)
            - locked <0x9cd73ac8> (a sun.nio.ch.Util$1)
            - locked <0x9cd73ad8> (a java.util.Collections$UnmodifiableSet)
            - locked <0x9cd73a88> (a sun.nio.ch.EPollSelectorImpl)
            at sun.nio.ch.SelectorImpl.select(SelectorImpl.java:98)
            at org.apache.tomcat.util.net.NioEndpoint$Poller.run(NioEndpoint.java:1156)
            at java.lang.Thread.run(Thread.java:701)

    "ContainerBackgroundProcessor[StandardEngine[Catalina]]" daemon prio=10 tid=0x912e0400 nid=0x763 waiting on condition [0x90fba000]
       java.lang.Thread.State: TIMED_WAITING (sleeping)
            at java.lang.Thread.sleep(Native Method)
            at org.apache.catalina.core.ContainerBase$ContainerBackgroundProcessor.run(ContainerBase.java:1508)
            at java.lang.Thread.run(Thread.java:701)

    "NioBlockingSelector.BlockPoller-1" daemon prio=10 tid=0x912cf000 nid=0x760 runnable [0x910ad000]
       java.lang.Thread.State: RUNNABLE
            at sun.nio.ch.EPollArrayWrapper.epollWait(Native Method)
            at sun.nio.ch.EPollArrayWrapper.poll(EPollArrayWrapper.java:228)
            at sun.nio.ch.EPollSelectorImpl.doSelect(EPollSelectorImpl.java:83)
            at sun.nio.ch.SelectorImpl.lockAndDoSelect(SelectorImpl.java:87)
            - locked <0x9c9739b8> (a sun.nio.ch.Util$1)
            - locked <0x9c9739c8> (a java.util.Collections$UnmodifiableSet)
            - locked <0x9c973978> (a sun.nio.ch.EPollSelectorImpl)
            at sun.nio.ch.SelectorImpl.select(SelectorImpl.java:98)
            at org.apache.tomcat.util.net.NioBlockingSelector$BlockPoller.run(NioBlockingSelector.java:327)

    "GC Daemon" daemon prio=10 tid=0x91250000 nid=0x75f in Object.wait() [0x910fe000]
       java.lang.Thread.State: TIMED_WAITING (on object monitor)
            at java.lang.Object.wait(Native Method)
            - waiting on <0x9c7fe9a0> (a sun.misc.GC$LatencyLock)
            at sun.misc.GC$Daemon.run(GC.java:117)
            - locked <0x9c7fe9a0> (a sun.misc.GC$LatencyLock)

    "Low Memory Detector" daemon prio=10 tid=0xb76c6c00 nid=0x75d runnable [0x00000000]
       java.lang.Thread.State: RUNNABLE

    "C1 CompilerThread0" daemon prio=10 tid=0xb76c4c00 nid=0x75c waiting on condition [0x00000000]
       java.lang.Thread.State: RUNNABLE

    "Signal Dispatcher" daemon prio=10 tid=0xb76c3000 nid=0x75b runnable [0x00000000]
       java.lang.Thread.State: RUNNABLE

    "Finalizer" daemon prio=10 tid=0xb7683c00 nid=0x75a in Object.wait() [0x91979000]
       java.lang.Thread.State: WAITING (on object monitor)
            at java.lang.Object.wait(Native Method)
            - waiting on <0x9c68a0c8> (a java.lang.ref.ReferenceQueue$Lock)
            at java.lang.ref.ReferenceQueue.remove(ReferenceQueue.java:133)
            - locked <0x9c68a0c8> (a java.lang.ref.ReferenceQueue$Lock)
            at java.lang.ref.ReferenceQueue.remove(ReferenceQueue.java:149)
            at java.lang.ref.Finalizer$FinalizerThread.run(Finalizer.java:189)

    "Reference Handler" daemon prio=10 tid=0xb7682400 nid=0x759 in Object.wait() [0x919ca000]
       java.lang.Thread.State: WAITING (on object monitor)
            at java.lang.Object.wait(Native Method)
            - waiting on <0x9c68a158> (a java.lang.ref.Reference$Lock)
            at java.lang.Object.wait(Object.java:502)
            at java.lang.ref.Reference$ReferenceHandler.run(Reference.java:133)
            - locked <0x9c68a158> (a java.lang.ref.Reference$Lock)

    "main" prio=10 tid=0xb7606800 nid=0x757 runnable [0xb77de000]
       java.lang.Thread.State: RUNNABLE
            at java.net.PlainSocketImpl.socketAccept(Native Method)
            at java.net.AbstractPlainSocketImpl.accept(AbstractPlainSocketImpl.java:375)
            at java.net.ServerSocket.implAccept(ServerSocket.java:478)
            at java.net.ServerSocket.accept(ServerSocket.java:446)
            at org.apache.catalina.core.StandardServer.await(StandardServer.java:452)
            at org.apache.catalina.startup.Catalina.await(Catalina.java:766)
            at org.apache.catalina.startup.Catalina.start(Catalina.java:712)
            at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
            at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
            at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
            at java.lang.reflect.Method.invoke(Method.java:622)
            at org.apache.catalina.startup.Bootstrap.start(Bootstrap.java:322)
            at org.apache.catalina.startup.Bootstrap.main(Bootstrap.java:456)

    "VM Thread" prio=10 tid=0xb7676c00 nid=0x758 runnable

    "VM Periodic Task Thread" prio=10 tid=0xb76c9000 nid=0x75e waiting on condition

    JNI global references: 232

    [root@manage bin]#

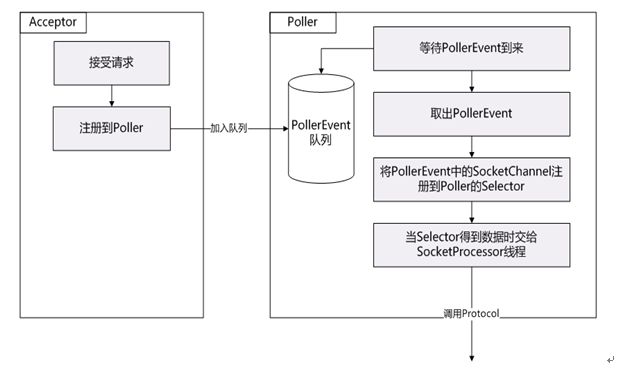

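This shows that the NIO model is essentially the same as Thrift's three-layer model.

There are still some differences, though.

1.4 Tuning the Connector's NIO parameters

Overall, the thread model of the NIO framework inside Tomcat looks like this:

Acceptor: calls accept, obtains the SocketChannel of a client, and hands it to the Selector.

Selector (the Poller thread in Tomcat's naming): handles the actual I/O operations.

Executor: runs the servlet processing and returns the response to the Selector.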
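The three roles above can be sketched with plain java.nio. This is a deliberately simplified illustration, not Tomcat's NioEndpoint: the class NioPipelineSketch, the echo protocol, the thread names and the pool size are assumptions, and unlike the description above the worker writes its small response directly instead of handing it back to the selector thread.

```java
import java.io.*;
import java.net.*;
import java.nio.ByteBuffer;
import java.nio.channels.*;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;
import java.util.Queue;
import java.util.concurrent.*;

final class NioPipelineSketch implements AutoCloseable {
    private final ServerSocketChannel server;
    private final Selector selector;
    private final Queue<SocketChannel> accepted = new ConcurrentLinkedQueue<>();
    private final ExecutorService executor = Executors.newFixedThreadPool(4);
    private volatile boolean running = true;

    NioPipelineSketch() {
        try {
            server = ServerSocketChannel.open();
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            selector = Selector.open();
        } catch (IOException e) { throw new UncheckedIOException(e); }
        startDaemon("demo-nio-Acceptor-0", this::acceptLoop);
        startDaemon("demo-nio-ClientPoller-0", this::pollLoop);
    }

    private static void startDaemon(String name, Runnable body) {
        Thread thread = new Thread(body, name);
        thread.setDaemon(true);
        thread.start();
    }

    private void acceptLoop() { // Acceptor: blocking accept(), then hand over to the poller
        while (running) {
            try {
                SocketChannel channel = server.accept();
                channel.configureBlocking(false);
                accepted.add(channel);
                selector.wakeup();
            } catch (IOException | RuntimeException e) { /* expected during shutdown */ }
        }
    }

    private void pollLoop() { // Poller: owns the selector and dispatches readable channels
        while (running) {
            try {
                for (SocketChannel c; (c = accepted.poll()) != null; ) {
                    c.register(selector, SelectionKey.OP_READ);
                }
                selector.select(200);
                Iterator<SelectionKey> keys = selector.selectedKeys().iterator();
                while (keys.hasNext()) {
                    SelectionKey key = keys.next();
                    keys.remove();
                    if (key.isValid() && key.isReadable()) {
                        key.interestOps(0); // a worker owns the channel until it re-arms the key
                        executor.execute(() -> process(key));
                    }
                }
            } catch (IOException | RuntimeException e) { /* expected during shutdown */ }
        }
    }

    private void process(SelectionKey key) { // Executor: read request, write response, re-arm
        SocketChannel channel = (SocketChannel) key.channel();
        try {
            ByteBuffer buffer = ByteBuffer.allocate(1024);
            int n = channel.read(buffer);
            if (n < 0) { channel.close(); return; } // client closed the connection
            if (n > 0) {
                buffer.flip();
                String request = StandardCharsets.UTF_8.decode(buffer).toString();
                // Small payloads only: a real server must cope with partial writes.
                channel.write(StandardCharsets.UTF_8.encode("echo: " + request));
            }
            key.interestOps(SelectionKey.OP_READ);
            selector.wakeup();
        } catch (IOException | RuntimeException e) {
            try { channel.close(); } catch (IOException ignored) { }
        }
    }

    @Override
    public void close() { // client channels are left to their peers in this sketch
        running = false;
        executor.shutdownNow();
        try { server.close(); selector.close(); } catch (IOException ignored) { }
    }

    static String roundTrip(String message) {
        try (NioPipelineSketch pipeline = new NioPipelineSketch();
             Socket client = new Socket(InetAddress.getLoopbackAddress(), pipeline.server.socket().getLocalPort())) {
            client.getOutputStream().write((message + "\n").getBytes(StandardCharsets.UTF_8));
            return new BufferedReader(new InputStreamReader(
                    client.getInputStream(), StandardCharsets.UTF_8)).readLine();
        } catch (IOException e) { throw new UncheckedIOException(e); }
    }
}
```

Calling NioPipelineSketch.roundTrip("ping") should come back with "echo: ping". The point of the split is that an idle connection costs only a registered key in the selector, while worker threads are occupied only for the time a request is actually being processed.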

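1.4.1 Acceptor thread tuning [nothing to improve]

The Acceptor thread handles client connection requests: it calls accept and passes the accepted client SocketChannel on to the Selector thread behind it.

How can this thread be tuned? First, an analysis of the source: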

    protected class Acceptor extends AbstractEndpoint.Acceptor {

        @Override
        public void run() {

            int errorDelay = 0;

            // Loop until we receive a shutdown command
            while (running) {

                // Loop if endpoint is paused
                while (paused && running) {
                    state = AcceptorState.PAUSED;
                    try {
                        Thread.sleep(50);
                    } catch (InterruptedException e) {
                        // Ignore
                    }
                }

                if (!running) {
                    break;
                }
                state = AcceptorState.RUNNING;

                try {
                    //if we have reached max connections, wait
                    countUpOrAwaitConnection();

                    SocketChannel socket = null;
                    try {
                        // Accept the next incoming connection from the server
                        // socket
                        socket = serverSock.accept();
                    } catch (IOException ioe) {
                        //we didn't get a socket
                        countDownConnection();
                        // Introduce delay if necessary
                        errorDelay = handleExceptionWithDelay(errorDelay);
                        // re-throw
                        throw ioe;
                    }
                    // Successful accept, reset the error delay
                    errorDelay = 0;

                    // setSocketOptions() will add channel to the poller
                    // if successful
                    if (running && !paused) {
                        if (!setSocketOptions(socket)) {
                            countDownConnection();
                            closeSocket(socket);
                        }
                    } else {
                        countDownConnection();
                        closeSocket(socket);
                    }
                } catch (SocketTimeoutException sx) {
                    // Ignore: Normal condition
                } catch (IOException x) {
                    if (running) {
                        log.error(sm.getString("endpoint.accept.fail"), x);
                    }
                } catch (OutOfMemoryError oom) {
                    try {
                        oomParachuteData = null;
                        releaseCaches();
                        log.error("", oom);
                    }catch ( Throwable oomt ) {
                        try {
                            try {
                                System.err.println(oomParachuteMsg);
                                oomt.printStackTrace();
                            }catch (Throwable letsHopeWeDontGetHere){
                                ExceptionUtils.handleThrowable(letsHopeWeDontGetHere);
                            }
                        }catch (Throwable letsHopeWeDontGetHere){
                            ExceptionUtils.handleThrowable(letsHopeWeDontGetHere);
                        }
                    }
                } catch (Throwable t) {
                    ExceptionUtils.handleThrowable(t);
                    log.error(sm.getString("endpoint.accept.fail"), t);
                }
            }
            state = AcceptorState.ENDED;
        }
    }

The key part is this:

    if (running && !paused) {
        if (!setSocketOptions(socket)) {
            countDownConnection();
            closeSocket(socket);
        }
    }

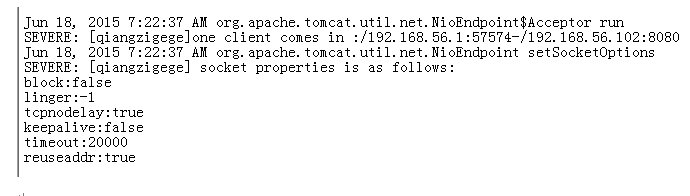

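I stepped into setSocketOptions() and added logging there to see which socket properties are set.

For an explanation of these parameters see: http://elf8848.iteye.com/blog/1739598

Tomcat's socket parameters appear to be tuned well enough already, so there is nothing to improve here.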
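The kind of logging described above can be reproduced with the standard java.net.Socket getters. The sketch below is only an illustration: the class SocketOptionDump is made up, the choice of options is an assumption about what is worth printing, and setting TCP_NODELAY on the accepted socket stands in for whatever values a server applies.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.LinkedHashMap;
import java.util.Map;

final class SocketOptionDump {

    /** Collects the commonly tuned options of a connected socket. */
    static Map<String, Object> describe(Socket socket) {
        try {
            Map<String, Object> options = new LinkedHashMap<>();
            options.put("tcpNoDelay", socket.getTcpNoDelay());
            options.put("keepAlive", socket.getKeepAlive());
            options.put("soLinger", socket.getSoLinger());          // -1 means disabled
            options.put("soTimeout", socket.getSoTimeout());        // 0 means no timeout
            options.put("receiveBufferSize", socket.getReceiveBufferSize());
            options.put("sendBufferSize", socket.getSendBufferSize());
            options.put("reuseAddress", socket.getReuseAddress());
            options.put("oobInline", socket.getOOBInline());
            return options;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Accepts one loopback connection, applies one option, and dumps the result. */
    static Map<String, Object> sample() {
        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            try (SocketChannel client = SocketChannel.open(server.getLocalAddress());
                 SocketChannel accepted = server.accept()) {
                accepted.configureBlocking(false);       // NIO channels are switched to non-blocking
                accepted.socket().setTcpNoDelay(true);   // example of an option set after accept
                return describe(accepted.socket());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        sample().forEach((name, value) -> System.out.println(name + " = " + value));
    }
}
```

Buffer sizes and most defaults depend on the operating system, so the printed values will differ between machines.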

1.4.2 Selector thread tuning

1.4.2.1 Adjusting the number of Selector threads

The startup code is:

    // Start poller threads
    pollers = new Poller[getPollerThreadCount()];
    for (int i=0; i<pollers.length; i++) {
        pollers[i] = new Poller();
        Thread pollerThread = new Thread(pollers[i], getName() + "-ClientPoller-"+i);
        pollerThread.setPriority(threadPriority);
        pollerThread.setDaemon(true);
        pollerThread.start();
    }

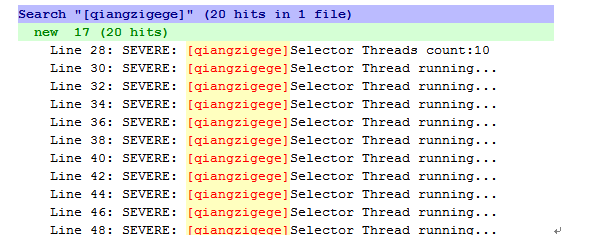

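How many Selector threads are actually started here? I added a log statement to find out.

On my virtual machine the log shows that a single thread is created.

Why only one thread?

The code is as follows [NioEndpoint.java, line 336]:

    protected int pollerThreadCount = Math.min(2,Runtime.getRuntime().availableProcessors());

    public void setPollerThreadCount(int pollerThreadCount) { this.pollerThreadCount = pollerThreadCount; }

    public int getPollerThreadCount() { return pollerThreadCount; }

In other words, no matter how many CPU cores there are, at most 2 selector threads are created by default, and at least 1 (which is what a single-core virtual machine gets). This is something of a trap: Netty and Thrift, at least, have no such cap and decide the number of Selector threads from the number of CPUs. So this is the first place to tune.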

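The concrete change is to replace

    protected int pollerThreadCount = Math.min(2,Runtime.getRuntime().availableProcessors());

with

    protected int pollerThreadCount = Math.max(2,Runtime.getRuntime().availableProcessors());

and recompile. Since the field has a public setter, the same value should also be settable without patching the source, through the pollerThreadCount attribute of the NIO Connector in server.xml.

On a machine with 10 cores the log then shows 10 poller threads, which may speed up the return of responses. Note that with Math.max a single-core machine gets 2 threads.

Tomcat's default is at most 2 threads. Whether the number of selector threads should really be increased here has to be decided by testing.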
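The effect of swapping Math.min for Math.max is easiest to see with a few core counts side by side. The class and method names below are made up for the illustration; the two expressions are the ones quoted above, with the core count passed in instead of read from Runtime.

```java
final class PollerCountDemo {

    /** Stock default: one poller per core, but never more than 2. */
    static int stockDefault(int cores) {
        return Math.min(2, cores);
    }

    /** Patched default from the text: one poller per core, but never fewer than 2. */
    static int patchedDefault(int cores) {
        return Math.max(2, cores);
    }

    public static void main(String[] args) {
        for (int cores : new int[] {1, 2, 4, 10}) {
            System.out.println(cores + " cores: stock=" + stockDefault(cores)
                    + " patched=" + patchedDefault(cores));
        }
        // 1 cores: stock=1 patched=2
        // 2 cores: stock=2 patched=2
        // 4 cores: stock=2 patched=4
        // 10 cores: stock=2 patched=10
    }
}
```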

1.4.3 Executor thread tuning

1.4.3.1 Executor parameter tuning

    private Executor executor = null;

It is initialized as follows:

    public void createExecutor() {
        internalExecutor = true;
        TaskQueue taskqueue = new TaskQueue();
        TaskThreadFactory tf = new TaskThreadFactory(getName() + "-exec-", daemon, getThreadPriority());
        executor = new ThreadPoolExecutor(getMinSpareThreads(), getMaxThreads(), 60, TimeUnit.SECONDS,taskqueue, tf);
        taskqueue.setParent( (ThreadPoolExecutor) executor);
    }

The task queue's offer() decides whether a task is queued or a new thread is started:

    @Override
    public boolean offer(Runnable o) {
        //we can't do any checks
        if (parent==null) return super.offer(o);
        //we are maxed out on threads, simply queue the object
        if (parent.getPoolSize() == parent.getMaximumPoolSize()) return super.offer(o);
        //we have idle threads, just add it to the queue
        if (parent.getSubmittedCount()<(parent.getPoolSize())) return super.offer(o);
        //if we have less threads than maximum force creation of a new thread
        if (parent.getPoolSize()<parent.getMaximumPoolSize()) return false;
        //if we reached here, we need to add it to the queue
        return super.offer(o);
    }

Class hierarchy:

    public class TaskQueue
            extends LinkedBlockingQueue<Runnable>

    public class ThreadPoolExecutor
            extends java.util.concurrent.ThreadPoolExecutor {
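Why the queue overrides offer() becomes clear when the two behaviours are compared: a java.util.concurrent.ThreadPoolExecutor with an unbounded queue never grows past its core size, whereas a queue that refuses tasks while the pool can still grow forces new threads up to the maximum. The sketch below is a simplified take on that idea and not Tomcat's TaskQueue: EagerQueue omits the idle-thread check, and the class and method names are made up.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

final class EagerQueueDemo {

    /** Refuses to queue while the pool is below its maximum, which makes the pool add a thread. */
    static final class EagerQueue extends LinkedBlockingQueue<Runnable> {
        private transient volatile ThreadPoolExecutor parent;

        void setParent(ThreadPoolExecutor parent) {
            this.parent = parent;
        }

        @Override
        public boolean offer(Runnable task) {
            if (parent == null) {
                return super.offer(task);
            }
            if (parent.getPoolSize() < parent.getMaximumPoolSize()) {
                return false;                 // "queue full": the executor starts a new thread
            }
            return super.offer(task);         // pool is at its maximum: really queue the task
        }
    }

    /** Submits blocking tasks and reports how many threads the pool created for them. */
    static int poolSizeAfter(BlockingQueue<Runnable> queue, int core, int max, int tasks) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(core, max, 60, TimeUnit.SECONDS, queue);
        if (queue instanceof EagerQueue) {
            ((EagerQueue) queue).setParent(pool);
        }
        CountDownLatch release = new CountDownLatch(1);
        try {
            for (int i = 0; i < tasks; i++) {
                pool.execute(() -> {
                    try {
                        release.await();      // keep the worker busy until the measurement is done
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            return pool.getPoolSize();
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        // minSpareThreads=2, maxThreads=5, five concurrent tasks
        System.out.println(poolSizeAfter(new LinkedBlockingQueue<>(), 2, 5, 5)); // prints 2
        System.out.println(poolSizeAfter(new EagerQueue(), 2, 5, 5));            // prints 5
    }
}
```

With the plain queue, three of the five tasks wait in the queue although three more threads would be allowed. This is the reason minSpareThreads and maxThreads only behave as expected together with the custom queue.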

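The parameter configuration defaults to:

    <Executor name="tomcatThreadPool" namePrefix="catalina-exec-"
              maxThreads="150" minSpareThreads="4"/>

For background on java.util.concurrent.ThreadPoolExecutor see http://blog.csdn.net/wangwenhui11/article/details/6760474

http://825635381.iteye.com/blog/2184680

The two articles are best read together.

The result of the tuning here:

    <Executor name="tomcatThreadPool" namePrefix="catalina-exec-"
              maxThreads="150" minSpareThreads="20"/>

The key setting is maxThreads. Too small and too large are both bad; a suitable value has to be found by testing under the actual workload.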