机器学习支持向量机简介

文章目录

- 一、支持向量机

- 1、SVM简介

- 2、SVM算法原理

- 3、非线性SVM算法原理

- 二、通过代码理解

- 1、绘制决策边界

- 2、使用多项式特征和核函数

- 3、高斯核函数

- 4、超参数 γ

- 三、参考链接

一、支持向量机

1、SVM简介

支持向量机(support vector machines, SVM)是一种二分类模型,它的基本模型是定义在特征空间上的间隔最大的线性分类器,间隔最大使它有别于感知机;SVM还包括核技巧,这使它成为实质上的非线性分类器。SVM的的学习策略就是间隔最大化,可形式化为一个求解凸二次规划的问题,也等价于正则化的合页损失函数的最小化问题。SVM的的学习算法就是求解凸二次规划的最优化算法。

2、SVM算法原理

SVM学习的基本想法是求解能够正确划分训练数据集并且几何间隔最大的分离超平面。如下图所示,w.x+b=0 即为分离超平面,对于线性可分的数据集来说,这样的超平面有无穷多个(即感知机),但是几何间隔最大的分离超平面却是唯一的。

详细原理请看https://zhuanlan.zhihu.com/p/31886934

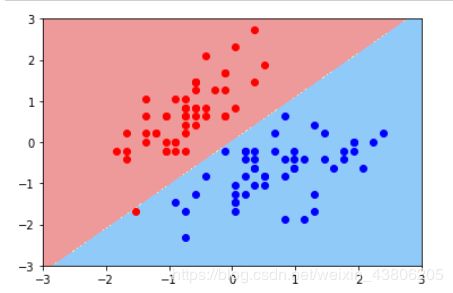

3、非线性SVM算法原理

对于输入空间中的非线性分类问题,可以通过非线性变换将它转化为某个维特征空间中的线性分类问题,在高维特征空间中学习线性支持向量机。由于在线性支持向量机学习的对偶问题里,目标函数和分类决策函数都只涉及实例和实例之间的内积,所以不需要显式地指定非线性变换,而是用核函数替换当中的内积。核函数表示,通过一个非线性转换后的两个实例间的内积。具体地,K(x,z)是一个函数,或正定核,意味着存在一个从输入空间到特征空间的映射φ(x),对任意输入空间中的x,z有K(x,z)=φ(x).(z)

在线性支持向量机学习的对偶问题中,用核函数K(x,z)替代内积,求解得到的就是非线性支持向量机

二、通过代码理解

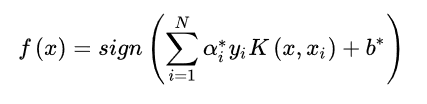

1、绘制决策边界

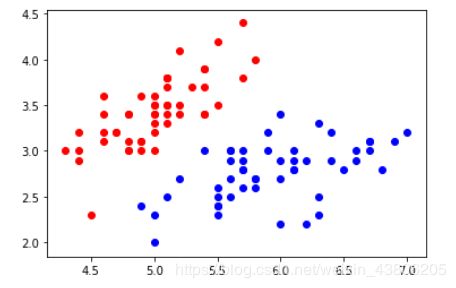

①未经标准化的原始数据点分布

import numpy as np

import matplotlib.pyplot as plt

from sklearn import datasets

from sklearn.preprocessing import StandardScaler

from sklearn.svm import LinearSVC

iris = datasets.load_iris()

X = iris.data

y = iris.target

X = X[y<2,:2]

y = y[y<2]

plt.scatter(X[y==0,0],X[y==0,1],color='red')

plt.scatter(X[y==1,0],X[y==1,1],color='blue')

plt.show()

import numpy as np

from sklearn.datasets import load_iris

import matplotlib.pyplot as plt

from sklearn.preprocessing import StandardScaler

from sklearn.svm import LinearSVC

from matplotlib.colors import ListedColormap

import warnings

def plot_decision_boundary(model,axis):

x0,x1=np.meshgrid(

np.linspace(axis[0],axis[1],int((axis[1]-axis[0])*100)).reshape(-1,1),

np.linspace(axis[2],axis[3],int((axis[3]-axis[2])*100)).reshape(-1,1)

)

x_new=np.c_[x0.ravel(),x1.ravel()]

y_predict=model.predict(x_new)

zz=y_predict.reshape(x0.shape)

custom_cmap=ListedColormap(['#EF9A9A','#FFF59D','#90CAF9'])

plt.contourf(x0,x1,zz,linewidth=5,cmap=custom_cmap)

warnings.filterwarnings("ignore")

data = load_iris()

x = data.data

y = data.target

x = x[y<2,:2]

y = y[y<2]

scaler = StandardScaler()

scaler.fit(x)

x = scaler.transform(x)

svc = LinearSVC(C=1e9)

svc.fit(x,y)

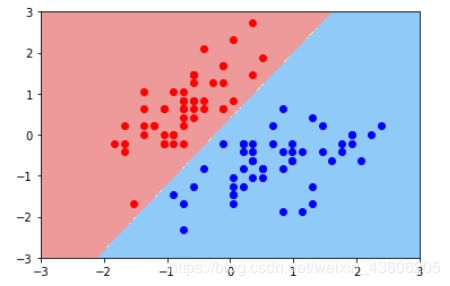

plot_decision_boundary(svc,axis=[-3,3,-3,3])

plt.scatter(x[y==0,0],x[y==0,1],c='r')

plt.scatter(x[y==1,0],x[y==1,1],c='b')

plt.show()

再次实例化一个svc,并传入一个较小的 C

import numpy as np

from sklearn.datasets import load_iris

import matplotlib.pyplot as plt

from sklearn.preprocessing import StandardScaler

from sklearn.svm import LinearSVC

from matplotlib.colors import ListedColormap

import warnings

def plot_decision_boundary(model,axis):

x0,x1=np.meshgrid(

np.linspace(axis[0],axis[1],int((axis[1]-axis[0])*100)).reshape(-1,1),

np.linspace(axis[2],axis[3],int((axis[3]-axis[2])*100)).reshape(-1,1)

)

x_new=np.c_[x0.ravel(),x1.ravel()]

y_predict=model.predict(x_new)

zz=y_predict.reshape(x0.shape)

custom_cmap=ListedColormap(['#EF9A9A','#FFF59D','#90CAF9'])

plt.contourf(x0,x1,zz,linewidth=5,cmap=custom_cmap)

warnings.filterwarnings("ignore")

data = load_iris()

x = data.data

y = data.target

x = x[y<2,:2]

y = y[y<2]

scaler = StandardScaler()

scaler.fit(x)

x = scaler.transform(x)

svc = LinearSVC(C=0.01)

svc.fit(x,y)

plot_decision_boundary(svc,axis=[-3,3,-3,3])

plt.scatter(x[y==0,0],x[y==0,1],c='r')

plt.scatter(x[y==1,0],x[y==1,1],c='b')

plt.show()

可以很明显的看到和第一个决策边界的不同,在这个决策边界汇总,有一个红点是分类错误的,所以 C 越小容错空间越大。

2、使用多项式特征和核函数

①处理非线性的数据并绘制图像

import numpy as np

import matplotlib.pyplot as plt

from sklearn import datasets

X, y = datasets.make_moons() #使用生成的数据

print(X.shape) # (100,2)

print(y.shape) # (100,)

plt.scatter(X[y==0,0],X[y==0,1])

plt.scatter(X[y==1,0],X[y==1,1])

plt.show()

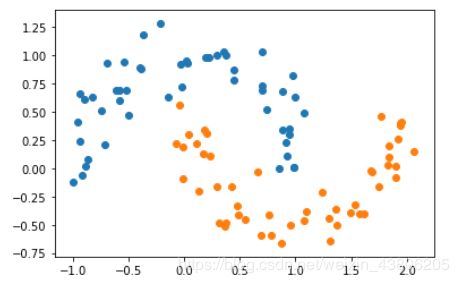

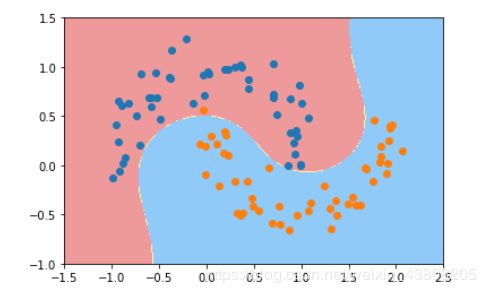

②生成的数据像月亮,这就是它函数名称的由来,但是生成的数据集太规范了,增加一些噪声点

import numpy as np

import matplotlib.pyplot as plt

from sklearn import datasets

X, y = datasets.make_moons(noise=0.15,random_state=777) #随机生成噪声点,random_state是随机种子,noise是方差

plt.scatter(X[y==0,0],X[y==0,1])

plt.scatter(X[y==1,0],X[y==1,1])

plt.show()

import numpy as np

from sklearn import datasets

import matplotlib.pyplot as plt

from sklearn.preprocessing import PolynomialFeatures,StandardScaler

from sklearn.svm import LinearSVC

from sklearn.pipeline import Pipeline

from matplotlib.colors import ListedColormap

import warnings

def plot_decision_boundary(model,axis):

x0,x1=np.meshgrid(

np.linspace(axis[0],axis[1],int((axis[1]-axis[0])*100)).reshape(-1,1),

np.linspace(axis[2],axis[3],int((axis[3]-axis[2])*100)).reshape(-1,1)

)

x_new=np.c_[x0.ravel(),x1.ravel()]

y_predict=model.predict(x_new)

zz=y_predict.reshape(x0.shape)

custom_cmap=ListedColormap(['#EF9A9A','#FFF59D','#90CAF9'])

plt.contourf(x0,x1,zz,linewidth=5,cmap=custom_cmap)

def PolynomialSVC(degree,C=1.0):

return Pipeline([

('poly',PolynomialFeatures(degree=degree)),

('std_scaler',StandardScaler()),

('linearSVC',LinearSVC(C=C))

])

warnings.filterwarnings("ignore")

poly_svc = PolynomialSVC(degree=3)

X, y = datasets.make_moons(noise=0.15,random_state=777) #随机生成噪声点,random_state是随机种子,noise是方差

poly_svc.fit(X,y)

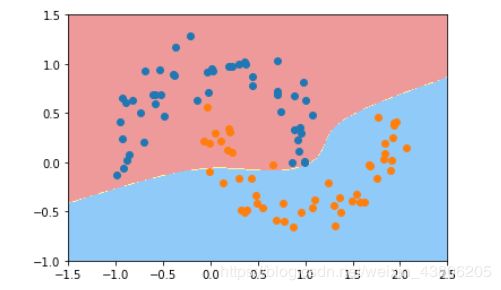

plot_decision_boundary(poly_svc,axis=[-1.5,2.5,-1.0,1.5])

plt.scatter(X[y==0,0],X[y==0,1])

plt.scatter(X[y==1,0],X[y==1,1])

plt.show()

④使用核技巧来对数据进行处理,使其维度提升,使原本线性不可分的数据,在高维空间变成线性可分的,再用线性SVM来进行处理。

import numpy as np

from sklearn import datasets

import matplotlib.pyplot as plt

from sklearn.preprocessing import PolynomialFeatures,StandardScaler

from sklearn.svm import SVC

from sklearn.pipeline import Pipeline

from matplotlib.colors import ListedColormap

import warnings

def plot_decision_boundary(model,axis):

x0,x1=np.meshgrid(

np.linspace(axis[0],axis[1],int((axis[1]-axis[0])*100)).reshape(-1,1),

np.linspace(axis[2],axis[3],int((axis[3]-axis[2])*100)).reshape(-1,1)

)

x_new=np.c_[x0.ravel(),x1.ravel()]

y_predict=model.predict(x_new)

zz=y_predict.reshape(x0.shape)

custom_cmap=ListedColormap(['#EF9A9A','#FFF59D','#90CAF9'])

plt.contourf(x0,x1,zz,linewidth=5,cmap=custom_cmap)

def PolynomialKernelSVC(degree,C=1.0):

return Pipeline([ ("std_scaler",StandardScaler()),

("kernelSVC",SVC(kernel="poly")) # poly代表多项式特征

])

poly_kernel_svc = PolynomialKernelSVC(degree=3)

X, y = datasets.make_moons(noise=0.15,random_state=777)

poly_kernel_svc.fit(X,y)

plot_decision_boundary(poly_kernel_svc,axis=[-1.5,2.5,-1.0,1.5])

plt.scatter(X[y==0,0],X[y==0,1])

plt.scatter(X[y==1,0],X[y==1,1])

plt.show()

3、高斯核函数

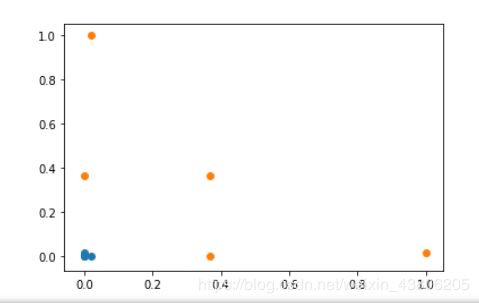

①它的升维式子比较复杂,我们用代码来模拟一下

import numpy as np

import matplotlib.pyplot as plt

x = np.arange(-4,5,1)#生成测试数据

y = np.array((x >= -2 ) & (x <= 2),dtype='int')

plt.scatter(x[y==0],[0]*len(x[y==0]))# x取y=0的点, y取0,有多少个x,就有多少个y

plt.scatter(x[y==1],[0]*len(x[y==1]))

plt.show()

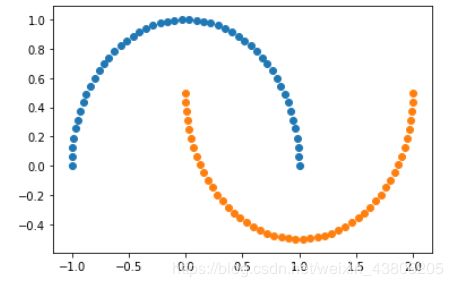

②接下来使用高斯核函数,看如何将一个一维的数据映射到二维的空间

# 高斯核函数

def gaussian(x,l):

gamma = 1.0

return np.exp(-gamma * (x -l)**2)

l1,l2 = -1,1

X_new = np.empty((len(x),2)) #len(x) ,2

for i,data in enumerate(x):

X_new[i,0] = gaussian(data,l1)

X_new[i,1] = gaussian(data,l2)

plt.scatter(X_new[y==0,0],X_new[y==0,1])

plt.scatter(X_new[y==1,0],X_new[y==1,1])

plt.show()

4、超参数 γ

①生成数据

import numpy as np

import matplotlib.pyplot as plt

from sklearn import datasets

X,y = datasets.make_moons(noise=0.15,random_state=777)

plt.scatter(X[y==0,0],X[y==0,1])

plt.scatter(X[y==1,0],X[y==1,1])

plt.show()

import numpy as np

from sklearn import datasets

import matplotlib.pyplot as plt

from sklearn.preprocessing import PolynomialFeatures,StandardScaler

from sklearn.svm import LinearSVC

from sklearn.pipeline import Pipeline

from matplotlib.colors import ListedColormap

import warnings

def plot_decision_boundary(model,axis):

x0,x1=np.meshgrid(

np.linspace(axis[0],axis[1],int((axis[1]-axis[0])*100)).reshape(-1,1),

np.linspace(axis[2],axis[3],int((axis[3]-axis[2])*100)).reshape(-1,1)

)

x_new=np.c_[x0.ravel(),x1.ravel()]

y_predict=model.predict(x_new)

zz=y_predict.reshape(x0.shape)

custom_cmap=ListedColormap(['#EF9A9A','#FFF59D','#90CAF9'])

plt.contourf(x0,x1,zz,linewidth=5,cmap=custom_cmap)

def PolynomialSVC(degree,C=1.0):

return Pipeline([

('poly',PolynomialFeatures(degree=degree)),

('std_scaler',StandardScaler()),

('linearSVC',LinearSVC(C=C))

])

warnings.filterwarnings("ignore")

poly_svc = PolynomialSVC(degree=3)

X, y = datasets.make_moons(noise=0.15,random_state=777) #随机生成噪声点,random_state是随机种子,noise是方差

poly_svc.fit(X,y)

plot_decision_boundary(poly_svc,axis=[-1.5,2.5,-1.0,1.5])

plt.scatter(X[y==0,0],X[y==0,1])

plt.scatter(X[y==1,0],X[y==1,1])

plt.show()

③设置γ的值并比较结果

a、svc = RBFKernelSVC(100)

from sklearn.preprocessing import StandardScaler

from sklearn.svm import SVC

from sklearn.pipeline import Pipeline

from sklearn import datasets

from matplotlib.colors import ListedColormap

import numpy as np

import matplotlib.pyplot as plt

import warnings

def plot_decision_boundary(model,axis):

x0,x1=np.meshgrid(

np.linspace(axis[0],axis[1],int((axis[1]-axis[0])*100)).reshape(-1,1),

np.linspace(axis[2],axis[3],int((axis[3]-axis[2])*100)).reshape(-1,1)

)

x_new=np.c_[x0.ravel(),x1.ravel()]

y_predict=model.predict(x_new)

zz=y_predict.reshape(x0.shape)

custom_cmap=ListedColormap(['#EF9A9A','#FFF59D','#90CAF9'])

plt.contourf(x0,x1,zz,linewidth=5,cmap=custom_cmap)

def RBFKernelSVC(gamma=1.0):

return Pipeline([

('std_scaler',StandardScaler()),

('svc',SVC(kernel='rbf',gamma=gamma))

])

warnings.filterwarnings("ignore")

X,y = datasets.make_moons(noise=0.15,random_state=777)

svc = RBFKernelSVC(gamma=100)

svc.fit(X,y)

plot_decision_boundary(svc,axis=[-1.5,2.5,-1.0,1.5])

plt.scatter(X[y==0,0],X[y==0,1])

plt.scatter(X[y==1,0],X[y==1,1])

plt.show()

b、svc = RBFKernelSVC(10),其他代码不变

c、svc = RBFKernelSVC(0.1),其他代码不变

此时它是欠拟合的,因此,我们可以看出 γ 值相当于在调整模型的复杂度。

三、参考链接

1、http://blog.sina.com.cn/s/blog_6c3438600102yn9x.html

2、https://zhuanlan.zhihu.com/p/31886934