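- Hive (Advanced), Part 1

数据牧马人

hive, hadoop, data warehouse

Chapter 6: Differences between Hive internal and external tables. Hive is a Hadoop-based data warehouse tool for storing, querying, and analyzing large datasets. Hive supports two table types, internal (managed) tables and external tables. They differ significantly in how data is stored, how it is managed, and its lifecycle. The main differences are: 1. Data storage location. Internal tables: data is stored under Hive's default storage directory, usually on HDFS (Hadoop Di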

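- Big Data Handbook (Spark): Installing and Configuring Spark

WilenWu

data analysis, big data, spark, distributed

This article assumes installation and configuration in a zsh terminal. If you use bash, use bash's environment-variable configuration file instead. If a package downloads slowly, you can copy the link into the Xunlei (Thunder) download manager, which was very fast in my tests. Preparation: installing Spark is fairly simple. With Hadoop already installed, it is ready to use after a little configuration. Assume Hadoop (pseudo-distributed) and Hive are already installed, with the following environment variables: JAVA_HOME=/usr/opt/jdk HADOOP_HOME=/usr/local/hadoop HIVE_HO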

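- Analyzing and Fixing a Missing NameNode Process in a Hadoop Cluster Running on Virtual Machines

申朝先生

hadoop, big data, distributed, linux

Contents: problem overview, problem analysis, solution, summary. Problem overview: when running a Hadoop cluster on virtual machines, checking the processes with the jps command shows that the NameNode process is missing. This usually prevents the Hadoop cluster from running properly and affects data storage and access. Problem analysis: possible causes of the missing NameNode process include the following. The cluster was not stopped correctly: before the VMs were shut down or the Hadoop cluster was restarted, stop-all.sh was not run to stop the cluster cleanly, so the Hadoop services exited abnormally and left residual data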

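- Big Data Learning (67): Comparing Flume, Sqoop, Kafka, and DataX

viperrrrrrr

big data learning, flume, kafka, sqoop, datax

Big Data Learning series column.

| Tool | Main purpose | Data flow | Real-time | Sources / targets | Use cases |
|---|---|---|---|---|---|
| Flume | Real-time log collection and transport | From data sources to storage systems | Real-time | Log files, network traffic, etc. → HDFS, HBase, Kafka, etc. | Log collection, real-time monitoring, real-time analysis |
| Sqoop | Data synchronization between relational databases and Hadoop | Relational databases → Hadoop ecosystem (HDFS, Hive, | | | |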

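- Upgrading a Kubernetes Cluster Version

程序员Realeo

Java, backend, kubernetes, containers, cloud native

Cluster upgrade notes: upgrade the cluster one version at a time, for example v1.20.1 → v1.21.1 → v1.22.1 → v1.23.1 → v1.24.1. Do not jump too far in one step, or the upgrade will fail with errors. Upgrade steps: check the cluster version: [root@hadoop102 ~]# kubectl get nodes NAME STATUS ROLES AGE VERSION hadoop102 Ready,SchedulingDisabled control-plane,maste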
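The one-minor-version-at-a-time rule can be expressed as a small planning helper. This is a hypothetical Java sketch and not part of kubeadm or kubectl. It assumes versions of the form vMAJOR.MINOR.PATCH and reuses the target's patch number for every intermediate hop, as the example above does. In practice you would pick an available patch release for each minor version. The kubeadm upgrade documentation likewise states that skipping minor versions is unsupported.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: plans an upgrade path that moves one minor version per step. */
class KubeUpgradePath {

    // Parses "v1.20.1" or "1.20.1" into {major, minor, patch}.
    static int[] parse(String version) {
        String v = version.startsWith("v") ? version.substring(1) : version;
        String[] parts = v.split("\\.");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected MAJOR.MINOR.PATCH: " + version);
        }
        return new int[] {
            Integer.parseInt(parts[0]), Integer.parseInt(parts[1]), Integer.parseInt(parts[2])
        };
    }

    // Returns every hop from 'from' to 'to'. Assumption: intermediate hops reuse
    // the target's patch number, mirroring the v1.20.1 -> ... -> v1.24.1 example.
    static List<String> path(String from, String to) {
        int[] a = parse(from);
        int[] b = parse(to);
        if (a[0] != b[0] || a[1] >= b[1]) {
            throw new IllegalArgumentException("target must be a later minor version of the same major");
        }
        List<String> hops = new ArrayList<>();
        hops.add("v" + a[0] + "." + a[1] + "." + a[2]);
        for (int minor = a[1] + 1; minor <= b[1]; minor++) {
            hops.add("v" + b[0] + "." + minor + "." + b[2]);
        }
        return hops;
    }

    public static void main(String[] args) {
        // Prints: v1.20.1 -> v1.21.1 -> v1.22.1 -> v1.23.1 -> v1.24.1
        System.out.println(String.join(" -> ", path("v1.20.1", "v1.24.1")));
    }
}
```

Each hop in the returned list would then be one full upgrade round: control plane first, then the worker nodes.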

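- Advanced Hive SQL Techniques and Practical Use Cases

小技工丨

big data notes, sql, hive, data warehouse, big data

Advanced Hive SQL Techniques and Practical Use Cases. Introduction: Apache Hive is a data warehouse infrastructure built on top of Hadoop. It provides a mechanism for querying and managing large datasets held in distributed storage. With an SQL-like language (HiveQL), Hive makes data analysis simpler and more efficient. This article explores several advanced Hive SQL techniques in detail and illustrates them with practical use cases. Advanced HiveSQL techniques: 1. Window functions. Description: window functions let us, without using GR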
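The following is an in-memory analogy of what a window function such as `ROW_NUMBER() OVER (PARTITION BY dept ORDER BY salary DESC)` computes. It is a hypothetical Java sketch: the table, columns, and class names are made up, and Hive itself evaluates this inside its query engine. The point it shows is the contrast with GROUP BY. Every input row survives, and each row only gains a rank within its partition.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Emulates, for a hypothetical emp(dept, name, salary) table:
 *   SELECT dept, name, salary,
 *          ROW_NUMBER() OVER (PARTITION BY dept ORDER BY salary DESC) AS rn
 *   FROM emp;
 */
class RowNumberDemo {

    record Emp(String dept, String name, int salary) {}

    record Ranked(Emp emp, int rn) {}

    static List<Ranked> rowNumber(List<Emp> rows) {
        // PARTITION BY dept (LinkedHashMap keeps partitions in first-seen order).
        Map<String, List<Emp>> partitions = new LinkedHashMap<>();
        for (Emp e : rows) {
            partitions.computeIfAbsent(e.dept(), k -> new ArrayList<>()).add(e);
        }
        List<Ranked> out = new ArrayList<>();
        for (List<Emp> part : partitions.values()) {
            // ORDER BY salary DESC inside each partition.
            part.sort(Comparator.comparingInt(Emp::salary).reversed());
            for (int i = 0; i < part.size(); i++) {
                out.add(new Ranked(part.get(i), i + 1)); // ROW_NUMBER() starts at 1
            }
        }
        return out; // same number of rows as the input, unlike GROUP BY
    }

    public static void main(String[] args) {
        List<Emp> emp = List.of(
            new Emp("sales", "a", 300), new Emp("sales", "b", 500),
            new Emp("dev", "c", 700), new Emp("dev", "d", 600));
        for (Ranked r : rowNumber(emp)) {
            System.out.println(r.emp().dept() + " " + r.emp().name() + " rn=" + r.rn());
        }
    }
}
```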

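- Hive: Converting Numbers to Strings; Hive Architecture and the Hive SQL Execution Flow Explained

weixin_39756416

hive, converting numbers to strings

1. Why Hive was created: MapReduce programming is inconvenient, and files on HDFS lack a schema (table name, names, IDs, etc.; a schema is a collection of database objects). 2. What is Hive, and what is it used for? It is used on Hadoop for data cleansing (ETL), reporting, and data analysis. Hive maps structured data files to database tables and provides SQL-like querying. Hive is an SQL parsing engine: it translates SQL statements into MapReduce jobs and runs them on Hadoop. Open-sourced by Facebook,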

- Running Python on Hadoop: Running a Python Program on Hadoop

廷哥带你小路超车

Data source: http://www.nber.org/patents/acite75_99.zip. First, upload the test data to HDFS: [root@localhost:/usr/local/hadoop/hadoop-0.19.2]# bin/hadoop fs -ls /user/root/test-in Found 5 items -rw-r--r-- 1 root supergroup 101 2010-10-24 14:39 /us

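- Error When Integrating Ranger with StarRocks

蘑菇丁

big data + machine learning + oracle, big data

org.apache.ranger.plugin.client.HadoopException: initConnection: Unable to connect to StarRocks instance, please provide valid value of field: {jdbc.driverClassName}.. com.mysql.cj.jdbc.Driver. Possible causes: missing JDBC driver: the runtime environment does not have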
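A quick way to confirm the "missing JDBC driver" cause is to check whether the class named in jdbc.driverClassName can be loaded at all. This is a hypothetical diagnostic sketch. Which JVM and classpath Ranger actually uses depends on your deployment and is an assumption here. com.mysql.cj.jdbc.Driver is the MySQL Connector/J driver class named in the error.

```java
/** Hypothetical diagnostic: is a JDBC driver class loadable from this JVM's classpath? */
class DriverCheck {

    static boolean driverAvailable(String className) {
        try {
            // initialize=false: only check that the class is present; don't run its static init.
            Class.forName(className, false, DriverCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String driver = args.length > 0 ? args[0] : "com.mysql.cj.jdbc.Driver";
        System.out.println(driver + (driverAvailable(driver) ? " found" : " NOT found on classpath"));
    }
}
```

Run it with the same classpath as the failing process. If the driver is not found, the Connector/J JAR is probably missing from that classpath.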

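- Into the World of Big Data: Kontext.TECH's Hadoop Journey

钱桦实Emery

Into the World of Big Data: Kontext.TECH's Hadoop Journey. winutils project: https://gitcode.com/gh_mirrors/winut/winutils. In the vast universe of big data, Hadoop, like a brilliant star, has always played a crucial role. For developers and learners eager to explore this field, Kontext.TECH offers a unique and convenient gateway that makes the learning journey smoother. Project introduction: Kontext.Ha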

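- Big Data Learning (61): Impala and the Hive Compute Engine

viperrrrrrr

learning, impala, hive, yarn, hadoop

Big Data Learning series column. 1. Impala and YARN resource management: YARN is the resource manager of the Apache Hadoop ecosystem. It uses a master/slave architecture that lets multiple processing frameworks share resources on the same cluster. As a component of the Hadoop ecosystem, Impala can integrate with YARN to better manage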

- Big Data Learning (62): Hadoop YARN

viperrrrrrr

big data, yarn

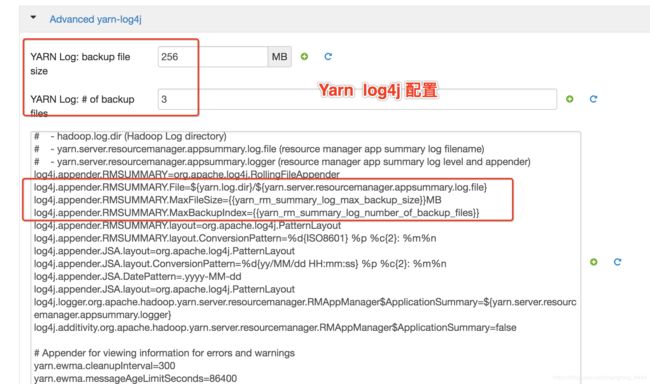

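Big Data Learning series column. I. YARN overview. 1. Introduction to YARN: Hadoop YARN is the cluster resource manager of the Apache Hadoop ecosystem. As Hadoop's second-generation resource management framework, it manages and allocates the cluster's compute resources. YARN was designed as a general-purpose resource management framework so that a Hadoop cluster can simultaneously run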

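- HBase 2.6.1 Deployment Guide

CXH728

zookeeper, hbase

1. HBase overview: Apache HBase is a distributed, column-oriented NoSQL database built on top of the Hadoop Distributed File System (HDFS). It suits structured and semi-structured data, can store billions of rows and millions of columns, and supports real-time reads and writes. HBase is typically used where fast random reads and writes, low-latency access, and high throughput are needed, for example large-scale log processing and social-network data storage. HBase features: column-oriented storage model: HBase stores data by column family, which suits highly sparse data. Row key partition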

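- Hive 4.0.1 Deployment Guide

CXH728

hive, hadoop, data warehouse

1. Prerequisites. Operating system: CentOS 7 or Ubuntu 20.04 is recommended (this lab used CentOS Linux release 7.9.2009 (Core)). Java: Java 8 or later is recommended. Hadoop: Hive depends on Hadoop for distributed storage; Hadoop 3.x is recommended (this lab used hadoop 3.3.6). Database: the Hive Metastore needs a backing database; MySQL, Pos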

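- Hive 3.1.3 Deployment Guide

CXH728

hive, hadoop, data warehouse

Prepare in advance: a working Hadoop cluster, a Java environment, the Hive installation package (download: https://archive.apache.org/dist/hive/hive-3.1.3/apache-hive-3.1.3-bin.tar.gz), and the MySQL installation package. 1. Embedded mode: embedded mode has very few use cases (it is almost never used), so it is installed here only for practice and to explore the basic features. [root@master ~]# tar xf apache-hive-3.1.3-bin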

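- Differences Between MySQL and Hive

iijik55

interviews, learning path, Alibaba, hive, mysql, big data, tomcat, interviews

Differences between SQL and HQL, overall: 1. Storage location: Hive stores its data on Hadoop; MySQL stores data on the device or the local file system. 2. Data updates: Hive does not support rewriting or appending data, which is fixed at load time, whereas a database supports full CRUD (this describes early Hive; later releases added INSERT INTO and, for transactional tables, UPDATE and DELETE). 3. Indexes: Hive has no indexes and scans all of the data on every query; underneath it runs MR jobs in parallel, which suits large data volumes. MySQL has indexes and suits online queries. 4. Execution: Hive runs on MapReduce underneath; MySQL runs on its own execution engine. 5. Scalability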

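- Real-Time Incremental Sync of MySQL Data to Elasticsearch with flink-cdc

大数据技术派

#Flink, elasticsearch, flink, mysql

What is CDC? CDC stands for Change Data Capture. The core idea is to monitor and capture database changes, such as INSERT, UPDATE, and DELETE on data or tables. These changes are recorded completely in the order in which they occurred and written to message middleware, where other services can subscribe to and consume them. 1. Environment preparation: mysql, elasticsearch, flink on yarn. Note: if Hadoop is not installed, you can skip yarn and directly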
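The core CDC idea is that captured changes are replayed in the order they occurred against a downstream copy. It can be illustrated without Flink. This is a hypothetical Java sketch and not the Flink CDC API. A Map keyed by primary key stands in for an Elasticsearch index, and INSERT/UPDATE are treated as upserts, the way re-indexing a document by id behaves.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical model: replay captured change events, in order, into a target store. */
class CdcReplay {

    enum Op { INSERT, UPDATE, DELETE }

    record ChangeEvent(Op op, String id, String row) {}

    static Map<String, String> replay(List<ChangeEvent> events) {
        Map<String, String> target = new HashMap<>(); // stand-in for an index keyed by primary key
        for (ChangeEvent e : events) {                 // order matters: apply exactly as captured
            switch (e.op()) {
                case INSERT, UPDATE -> target.put(e.id(), e.row()); // upsert the document
                case DELETE -> target.remove(e.id());
            }
        }
        return target;
    }

    public static void main(String[] args) {
        List<ChangeEvent> log = List.of(
            new ChangeEvent(Op.INSERT, "1", "{name: a}"),
            new ChangeEvent(Op.INSERT, "2", "{name: b}"),
            new ChangeEvent(Op.UPDATE, "1", "{name: a2}"),
            new ChangeEvent(Op.DELETE, "2", null));
        System.out.println(replay(log)); // {1={name: a2}}
    }
}
```

Swapping the DELETE ahead of the INSERT for id "2" would leave a stale document behind. That is why the changes must be recorded and consumed in their original order.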

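- Building a Distributed Hive Cluster

逸曦玥泱

big data, operations, distributed, hive, hadoop

title: Building a Distributed Hive Cluster date: 2024-11-29 23:39:00 categories: - servers tags: - Hive - big data. Building a Distributed Hive Cluster. Lab environment: CentOS 7-2009, Hadoop-3.1.4, JDK8, Zookeeper-3.6.3, Mysql-5.7.38, Hive-3.1.2. Functional plan, option 1 (local mode): Master node (Mysql + Hive) 192.168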

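- The Relationship Between Hadoop, Spark, and Hive in Detail

夜行容忍

hadoop, spark, hive

The relationship between Hadoop, Spark, and Hive in detail. 1. Apache Hadoop: Hadoop is an open-source framework for the distributed storage and processing of large datasets. Core components: HDFS (Hadoop Distributed File System): a distributed file system that provides high-throughput data access. YARN (Yet Another Resource Negotiator): a cluster resource management and job scheduling system. MapReduce: a YARN-based parallel processing framework, used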

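- Error Extracting a ".tar" File on Windows: "Cannot create symbolic link; you may need to run WinRAR as administrator"

ruangaoyan

1. The extraction fails with the following message: D:\tools\hadoop-3.1.2.tar.gz: Cannot create symbolic link D:\tools\hadoop-3.1.2\hadoop-3.1.2\lib\native\libhadoop.so. You may need to run WinRAR as administrator! A required privilege is not held by the client. 2. The fix: press WIN+R to open the Run dialog, type CMD, then enter: cd /d D:\tools\hadoop-3.1.2. This is my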

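- The Big Data Ecosystem: Differences and Relationships Between Hadoop, Hive, and Spark

雨中徜徉的思绪漫溢

big data, hadoop, hive

The big data ecosystem: differences and relationships between Hadoop, Hive, and Spark. In the big data field, Hadoop, Hive, and Spark are three commonly used open-source technologies that play important roles in big data processing and analysis. Although all three are designed to handle large datasets, they differ in functionality and usage. This article explains the differences and relationships between Hadoop, Hive, and Spark in detail and provides accompanying source code examples. Hadoop: Hadoop is used for the distributed storage and processing of large-sc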

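- ZooKeeper Study Notes (1): Introduction to ZooKeeper

一杯甜酒

ZooKeeper study notes, Zookeeper

1. Overview: ZooKeeper started as a Hadoop subproject and is now a top-level Apache project. It is a coordination system for distributed systems. Its main services include configuration, naming, distributed synchronization, and group services. Its characteristics are as follows. Simple: the core of ZooKeeper is a streamlined file system that supports a few simple operations plus some abstract operations, such as ordering and notification. Rich: ZooKeeper's primitives are rich and can be used to build coordination data structures and protocols, for example distributed queues, distributed locks, and "leader election" among a group of peer nodes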

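- Zookeeper + Kafka Study Notes

CHR_YTU

Zookeeper

ZooKeeper is an Apache Java project that belongs to the Hadoop ecosystem and acts as an administrator. Configuration management: a distributed system has many machines. For example, when I set up Hadoop HDFS, I had to prepare all of the configuration files HDFS needs on one main machine (the Master node) and then copy them to the other nodes with scp. Only then does every machine have consistent configuration, so that HDFS can run. ZooKeeper provides this kind of service: a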

- Kylin ARM System: Installing nginx-1.27.0 and Fixing "500 Internal Server Error" (13: Permission denied), Linux Work Notes 072

添柴程序猿

java, nginx-1.27.0, installing the latest nginx, Kylin v10 ARM architecture, installing nginx on Kylin v10

[root@hadoop173 nginx1.27.0]# wget -c http://nginx.org/download/nginx-1.27.0.tar.gz --2024-07-05 09:47:00-- http://nginx.org/download/nginx-1.27.0.tar.gz Resolving nginx.org (nginx.org)... 3.125.197.172, 52.58.19

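- Zookeeper and Kafka Study Notes

上海研博数据

zookeeper, kafka, learning

I. ZooKeeper essentials. 1. Core characteristics: a distributed coordination service that maintains metadata such as configuration, naming, and synchronization state. It uses a hierarchical data model (a tree of znodes), and each node can store less than 1 MB of data. Typical use cases: Hadoop NameNode high availability, HBase metadata management, Kafka cluster election and state management. 2. Design limitations: storage is in memory, so it is unsuited to large data volumes. Data changes are controlled by a version number, which implements optimistic locking. The ZAB protocol guarantees data consistency. II. Kafka core architecture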
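Version-based optimistic locking, as described above, means a write states which version it expects and is rejected if another writer got there first. The following is a hypothetical in-memory imitation in Java, not the ZooKeeper client API. In the real client, `setData(path, data, version)` behaves this way: version -1 matches any version, and a mismatch raises `KeeperException.BadVersionException`. This sketch returns false instead.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical imitation of znode versioning; not the ZooKeeper client API. */
class VersionedStore {

    record Node(byte[] data, int version) {}

    private final Map<String, Node> nodes = new HashMap<>();

    synchronized void create(String path, byte[] data) {
        if (nodes.containsKey(path)) {
            throw new IllegalStateException("node exists: " + path);
        }
        nodes.put(path, new Node(data, 0)); // a new znode starts at version 0
    }

    synchronized Node get(String path) {
        return nodes.get(path);
    }

    /**
     * Writes only if expectedVersion still matches (or is -1, meaning "any").
     * Returns false on a version conflict; the caller should re-read and retry.
     */
    synchronized boolean setData(String path, byte[] data, int expectedVersion) {
        Node cur = nodes.get(path);
        if (cur == null) {
            throw new IllegalStateException("no node: " + path);
        }
        if (expectedVersion != -1 && cur.version() != expectedVersion) {
            return false; // another writer updated the node first
        }
        nodes.put(path, new Node(data, cur.version() + 1)); // each successful write bumps the version
        return true;
    }
}
```

A typical client loop reads the node, computes the new value, and calls setData with the version it read, retrying from the read if that call fails.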

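- Phoenix Cannot Connect to HBase and Creating a Table from the Shell Fails: PleaseHoldException: Master is initializing (Record 020, Big Data Work Notes 0180)

添柴程序猿

hbase connection error, phoenix connecting to hbase, phoenix, PleaseHoldExcep

Today I found that my Phoenix could no longer connect to the HBase cluster at all. Strange... I spent the whole evening on it. org.apache.hadoop.hbase.PleaseHoldException: Master is initializing [root@hadoop120 bin]# ll total 184 -rwxr-xr-x. 1 root root 3637 Jan 22 2020 chaos-daemon.sh -rwxr-xr-x. 1 root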

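- Hadoop Run Modes

对许

#Hadoop, hadoop, big data, distributed

Hadoop run modes: 1. Local mode 2. Pseudo-distributed mode 3. Fully distributed mode 4. Differences and summary. Hadoop can run in three modes: local mode, pseudo-distributed mode, and fully distributed mode. 1. Local mode: local mode needs no daemons. Everything runs on a single machine, with all programs executing in the same JVM. Hadoop is in local mode by default after installation, and data is stored on the local Linux file system. Debugging MapReduce programs in local mode is efficient and convenient, and this mode is generally used mainly in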

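- The MapReduce Execution Process in Hadoop

画纸仁

big data, hadoop, mapreduce, big data

I. Execution of the map phase. Step 1: logically split the files in the input directory, one by one, according to a fixed rule, producing a split plan. By default split size = block size (128 MB), and each split is processed by one MapTask (getSplits). Step 2: read and parse the data in each split according to a fixed rule, returning <key, value> pairs. By default data is read line by line: the key is the starting byte offset of each line and the value is the line's text (TextInputFormat). Step 3: call Mapp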
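Steps 1 and 2 can be modeled in a few lines of plain Java. This is a simplified, hypothetical model and not Hadoop's classes. The split-size formula `max(minSize, min(maxSize, blockSize))` and the 1.1 "slop" factor are an assumption about how Hadoop's FileInputFormat plans splits, so check the source of your Hadoop version. The slop means a tail slightly larger than one block is not cut into a tiny extra split. The line reader assumes ASCII text with '\n' line endings, so character offsets equal byte offsets.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified model of split planning and line-offset keys; not Hadoop's classes. */
class MapPhaseModel {

    static final double SPLIT_SLOP = 1.1; // assumed, mirrors FileInputFormat

    record Split(long start, long length) {}

    static List<Split> planSplits(long fileLength, long blockSize, long minSize, long maxSize) {
        long splitSize = Math.max(minSize, Math.min(maxSize, blockSize));
        List<Split> splits = new ArrayList<>();
        long remaining = fileLength;
        // Cut full-size splits while what is left is clearly larger than one split.
        while ((double) remaining / splitSize > SPLIT_SLOP) {
            splits.add(new Split(fileLength - remaining, splitSize));
            remaining -= splitSize;
        }
        if (remaining != 0) {
            splits.add(new Split(fileLength - remaining, remaining)); // tail split
        }
        return splits;
    }

    record LineRecord(long key, String value) {}

    /** key = offset where the line starts, value = line text (like TextInputFormat). */
    static List<LineRecord> readLines(String text) {
        List<LineRecord> out = new ArrayList<>();
        long offset = 0;
        for (String line : text.split("\n", -1)) {
            if (line.isEmpty() && offset >= text.length()) {
                break; // trailing empty piece after the final '\n'
            }
            out.add(new LineRecord(offset, line));
            offset += line.length() + 1; // +1 for the '\n'
        }
        return out;
    }
}
```

With 128 MB blocks (sizes in MB), a 300 MB file yields splits of 128, 128 and 44. A 140 MB file stays one split because 140/128 is below 1.1.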

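- Hadoop: A First Look at a Distributed Computing Platform

dccrtbn6261333

big data, operations, java

Hadoop is a software platform for developing and running applications that process large-scale data. It is an open-source Apache framework, implemented in Java, that performs distributed computation over massive datasets on clusters of many computers. The core of the Hadoop framework is MapReduce and HDFS: MapReduce provides computation over the data, and HDFS provides storage for massive amounts of data. MapReduce: the idea of MapReduce became widely known through a Google paper. Explained in one sentence, M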
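The MapReduce idea can be shown in a single process with the classic word count. This is a hypothetical sketch that uses no Hadoop APIs. map emits (word, 1) pairs, a shuffle groups the pairs by key in sorted order, and reduce sums each group. On a real cluster, each of these stages runs distributed across many machines.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Single-process word count illustrating map -> shuffle -> reduce (no Hadoop APIs). */
class WordCountSketch {

    record Pair(String key, int value) {}

    static List<Pair> map(String line) {
        List<Pair> out = new ArrayList<>();
        for (String w : line.toLowerCase().split("\\W+")) {
            if (!w.isEmpty()) out.add(new Pair(w, 1)); // emit (word, 1)
        }
        return out;
    }

    static Map<String, List<Integer>> shuffle(List<Pair> pairs) {
        Map<String, List<Integer>> groups = new TreeMap<>(); // grouped and sorted by key
        for (Pair p : pairs) {
            groups.computeIfAbsent(p.key(), k -> new ArrayList<>()).add(p.value());
        }
        return groups;
    }

    static int reduce(List<Integer> values) {
        int sum = 0;
        for (int v : values) sum += v;
        return sum;
    }

    static Map<String, Integer> wordCount(List<String> lines) {
        List<Pair> all = new ArrayList<>();
        for (String line : lines) all.addAll(map(line));            // map phase
        Map<String, Integer> result = new TreeMap<>();
        shuffle(all).forEach((k, vs) -> result.put(k, reduce(vs))); // shuffle + reduce
        return result;
    }

    public static void main(String[] args) {
        // Prints: {data=2, hadoop=1, mapreduce=1, processes=1, stores=1}
        System.out.println(wordCount(List.of("Hadoop stores data", "MapReduce processes data")));
    }
}
```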

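- The Java Concurrency Package: Thread Pools and Atomic Counters

lijingyao8206

Java, counting, ThreadPool, concurrency package, java, thread pool

For business logic that joins large volumes of data, the most direct approach is to use the JDK's concurrency package to process the business data with multiple threads. A thread pool provides a good way to manage threads and improves thread utilization. The atomic counters in the concurrency package let us avoid writing synchronization code in multithreaded code.

Here I will start with the JDK concurrency package's thread pool executor ThreadPoolExecutor, the atomic counter class AtomicInteger, and the countdown latch C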
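Here is a minimal sketch that combines the classes named above. It assumes the countdown latch the text starts to name is java.util.concurrent.CountDownLatch. The pool size of 4 and the "records" being counted are hypothetical. A ThreadPoolExecutor runs the tasks, an AtomicInteger counts processed records without any synchronized block, and the latch lets the caller wait until every task has finished.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Sketch: thread pool + atomic counter + countdown latch. */
class PoolCounterDemo {

    static int processAll(int tasks, int recordsPerTask) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
            4, 4, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>()); // fixed 4 threads
        AtomicInteger processed = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(tasks);
        try {
            for (int t = 0; t < tasks; t++) {
                pool.execute(() -> {
                    try {
                        for (int i = 0; i < recordsPerTask; i++) {
                            processed.incrementAndGet(); // thread-safe without a lock
                        }
                    } finally {
                        done.countDown(); // always count down, even if the task throws
                    }
                });
            }
            done.await(); // block until every task has counted down
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        } finally {
            pool.shutdown();
        }
        return processed.get();
    }

    public static void main(String[] args) {
        System.out.println(processAll(10, 1000)); // 10000
    }
}
```

With a plain `int` field and `count++`, concurrent increments could be lost. incrementAndGet makes each increment atomic.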

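- Thinking in Java: Abstract Classes and Interfaces

百合不是茶

java, abstract classes, interfaces

Interfaces: C++ supports interfaces and inner classes only indirectly, whereas Java supports them directly.

1. Abstract class: if a class contains one or more abstract methods, the class itself must be declared abstract (otherwise the compiler reports an error).

Abstract method: a method that has only a declaration and no method body.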

package com.wj.Interface;
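The rules above fit into one small example. This is an illustrative sketch with hypothetical names, not the article's code. It declares an interface, an abstract class that implements it but leaves the abstract method unimplemented, and a concrete subclass that supplies the body.

```java
/** Interface methods are implicitly public and abstract. */
interface Shape {
    double area();
}

abstract class NamedShape implements Shape {
    private final String name;

    NamedShape(String name) { this.name = name; }

    // A concrete method shared by all subclasses.
    String describe() { return name + " with area " + area(); }

    // Declaration only, no body: any class with an abstract method must itself be abstract.
    public abstract double area();
}

class Rectangle extends NamedShape {
    private final double w, h;

    Rectangle(double w, double h) { super("rectangle"); this.w = w; this.h = h; }

    @Override
    public double area() { return w * h; }
}

class ShapeDemo {
    public static void main(String[] args) {
        Shape s = new Rectangle(2, 3);
        System.out.println(((NamedShape) s).describe()); // rectangle with area 6.0
        // new NamedShape("x") would not compile: abstract classes cannot be instantiated.
    }
}
```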

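- [Real Estate and Big Data] A Real Estate Data Mining System

comsci

data mining

With the breakthrough in one key core technology, we can now develop certain advanced modules on our own, but completing the whole system will still take some time...

So, besides the coding work, we also need to pay attention to events outside the technical field... for example, real estate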

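- Array Queue Summary

沐刃青蛟

array queue

An array queue is an array-like utility whose size can change and whose element type is not fixed in advance. Compared with a plain array it is more flexible: you need not worry about going out of bounds, and thanks to generics (similar to templates in C++) it supports any element type.

Here is the code implementing the array queue: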

import List.Student;

public class
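The following is a minimal generic array queue of the kind described: a growable, array-backed list. It is an illustrative sketch and not the article's code. The class name, the initial capacity of 4, doubling on growth, and the method names are all assumptions. Generics let one implementation hold any type, for example `ArrayQueue<Student>`.

```java
import java.util.Arrays;

/** Illustrative generic, growable array-backed queue (not the article's code). */
class ArrayQueue<E> {
    private Object[] data = new Object[4];
    private int size;

    void add(E e) {
        if (size == data.length) {
            data = Arrays.copyOf(data, data.length * 2); // grow instead of going out of bounds
        }
        data[size++] = e;
    }

    @SuppressWarnings("unchecked")
    E get(int index) {
        if (index < 0 || index >= size) {
            throw new IndexOutOfBoundsException("index " + index + ", size " + size);
        }
        return (E) data[index];
    }

    E remove(int index) {
        E old = get(index);                                    // also checks bounds
        System.arraycopy(data, index + 1, data, index, size - index - 1);
        data[--size] = null;                                   // let the GC reclaim the slot
        return old;
    }

    int size() { return size; }
}
```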

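- Fixing Oracle Stored Procedures That Fail to Compile

IT独行者

oracle, stored procedures

Today a colleague modified some Oracle stored procedures, and once again two procedures would no longer compile. Dave won't say much here about process and conventions; let's look at how to fix the problem.

1. View the invalid objects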

XEZF@xezf(qs-xezf-db1)> select object_name,object_type,status from all_objects where status='IN

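- Recovering Oracle After Reinstalling the Operating System

文强chu

oracle

A few days ago I was using my computer without having stopped the various Oracle services.

Suddenly my Windows 8.1 system crashed beyond repair. At boot it said it was collecting error information, then restarted and showed the same message again, in an endless loop.

With no alternative, I took out my system USB drive to reinstall the OS, but it would not boot from the USB drive.

In the evening I found a computer repair shop and had them install the system. Before I could react, the technician

formatted my C drive outright, cleaned the registry, and then installed the system.

As a result, my oracl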

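- Learning Python, Part 2 (Some Basic Syntax)

小桔子

python basic syntax

Continuing from yesterday: I kept reading the Django official tutorial that I had not finished, and learned Python's basic syntax, which differs slightly from C-like languages:

1. Python source files are encoded in UTF-8 (the default source encoding in Python 3)

2.

/ division, the result is a float

// floor division, the result is an integer when both operands are integers

% modulo, the remainder of a division

* multiplication

** exponentiation, e.g. 5**2 is 5 to the power of 2, i.e. 25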

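- Common svn Commands

aichenglong

SVN, reverting to an earlier version

1 Reverting to an earlier svn version

1) In Windows (TortoiseSVN), open the log and, depending on what you want to undo, choose "Revert to this revision" or "Revert changes from this revision"

The difference between the two:

Revert to this revision: goes back to the selected revision (the changes from all later revisions are undone)

Revert changes from this revision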

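- Back from an Interview at a Small Company

alafqq

interviews

First they wanted me to fill in a form and take a written test. I was short on time, so I declined. Time is precious.

They keep trying to intimidate me with the material they use on new graduates...

The interviewer was tough. Here are a few of the questions he asked:

1. The scope of the access modifiers: public, private, protected. I blew this one.

2. The difference between the hashCode and equals methods, and which one overrides which. He said I had it backwards.

3. The most annoying question: can an abstract class extend another abstract class? (Come on, abstract classes are usually the ones being extended!) In fact it can, and it does not have to implement the parent's abstract methods.

4. stru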
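Question 2 is a common trap. Neither method "overrides" the other: both are inherited from Object. Their contract requires that objects which are equal under equals() return the same hashCode(). So whenever you override equals, you must override hashCode too, built from the same fields. The sketch below uses a hypothetical Point class to show the consequence in a HashSet.

```java
import java.util.HashSet;
import java.util.Objects;
import java.util.Set;

/** Hypothetical value class that overrides equals and hashCode consistently. */
final class Point {
    private final int x, y;

    Point(int x, int y) { this.x = x; this.y = y; }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof Point)) return false;
        Point p = (Point) o;
        return x == p.x && y == p.y;
    }

    @Override
    public int hashCode() {
        return Objects.hash(x, y); // built from the same fields that equals() compares
    }

    public static void main(String[] args) {
        Set<Point> set = new HashSet<>();
        set.add(new Point(1, 2));
        // Without the hashCode override, this lookup would usually fail.
        System.out.println(set.contains(new Point(1, 2))); // true
    }
}
```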

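- Comparing the Storage Speed of Dynamic Arrays: The Collections Framework

百合不是茶

collections framework

Collections framework:

A custom data structure (add, delete, update, query, etc.)

package 数组;

/**

* Creates a dynamic array

* @author 百合

*

*/

public class ArrayDemo{

// Array that holds the data

String[] src = new String[0];

/**

* Adds an element to the container

* @param s the element to add to the container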
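The starting point `new String[0]` suggests that ArrayDemo grows by exactly one slot on every add, copying the whole array each time. The hypothetical sketch below counts element copies to compare that policy with doubling the capacity, which is one reason the storage speeds differ. The class and method names are made up for illustration.

```java
import java.util.Arrays;

/** Hypothetical comparison of two growth policies by counting element copies. */
class GrowthDemo {

    /** Grow by exactly one slot per add (copies the whole array every time). */
    static long growByOne(int n) {
        String[] src = new String[0];
        long copies = 0;
        for (int i = 0; i < n; i++) {
            String[] dest = new String[src.length + 1];
            System.arraycopy(src, 0, dest, 0, src.length);
            copies += src.length;
            dest[src.length] = "s" + i;
            src = dest;
        }
        return copies;
    }

    /** Double the capacity only when full. */
    static long doubling(int n) {
        String[] src = new String[1];
        int size = 0;
        long copies = 0;
        for (int i = 0; i < n; i++) {
            if (size == src.length) {
                src = Arrays.copyOf(src, src.length * 2);
                copies += size;
            }
            src[size++] = "s" + i;
        }
        return copies;
    }

    public static void main(String[] args) {
        System.out.println(growByOne(1000)); // 499500 = 0 + 1 + ... + 999
        System.out.println(doubling(1000));  // 1023 = 1 + 2 + 4 + ... + 512
    }
}
```

Growing by one costs O(n) copies per add, which adds up to O(n²) in total. Doubling keeps the total number of copies below 2n.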

- Implementing a JS Object with Two Properties and One Method

bijian1013

js objects

<html>

<head>

</head>

<body>

Implement a JS object in JS code, with two properties and one method

</body>

<script>

var obj={a:'1234567',b:'bbbbbbbbbb',c:function(x){

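- Exploring JUnit 4 Extensions: Using Rule

bijian1013

java, unit testing, JUnit, Rule

The previous article discussed extending JUnit 4 with a Runner, that is, by directly modifying the Test Runner implementation (BlockJUnit4ClassRunner). That approach clearly makes it awkward to add or remove extension features flexibly. Below, the same extension is implemented with Rule, an extension mechanism introduced in JUnit 4.7.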

1. Rule

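- [Gson 1] Deserializing Non-Generic POJOs

bit1129

POJO

To deserialize a JSON string into a POJO that is not itself generic, use the Gson.fromJson(String, Class) method. POJOs that are not themselves generic come in two kinds:

1. The POJO contains no generic fields

2. The POJO contains generic fields, such as generic collections or instances of generic classes

The Data class: a. is not a generic class; b. the List and Map collections inside Data are generic; c. Data contains no other POJOs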

- [Kafka 5] Basic Use of the Kafka Producer and Consumer

bit1129

kafka

0. Kafka server configuration:

one broker,

one topic,

and only one partition in the topic.

1. Producer:

package kafka.examples.producers;

import kafka.producer.KeyedMessage;

import kafka.javaapi.producer.Producer;

impor

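- lsyncd Real-Time Sync Setup Guide: Replacing rsync + inotify

ronin47

1. Comparison of several real-time sync tools

1.1 inotify + rsync

I have recently been looking for an alternative sync solution for our production servers. We previously used inotify + rsync. But once the number of files grew past one million, the directory's file list alone reached 20 MB. On a poor or throttled network, the changed files might be only a dozen or so and a few MB in total, yet the 20 MB file list still had to be sent. This seriously reduced bandwidth utilization and sync efficiency. More importantly, adding inotify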

- java-9. Determine Whether an Integer Sequence Is the Post-Order Traversal of a Binary Search Tree

bylijinnan

java

public class IsBinTreePostTraverse{

static boolean isBSTPostOrder(int[] a){

if(a==null){

return false;

}

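/*1. With only one node, it is always a search tree

*2. With only two nodes, it is always a search tree. For example, the BST for {5,6} is 6 with 5 as its left child; the BST for {6,5} is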
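Here is a complete version of the check, as a sketch of one common approach that is not necessarily the article's. It assumes distinct keys. In a BST's post-order sequence the last element is the root. The elements before it must consist of a run of values smaller than the root (the left subtree) followed by values larger than the root (the right subtree). Check that split, then recurse on both parts.

```java
/** Checks whether a sequence can be the post-order traversal of a BST (distinct keys assumed). */
class BstPostOrder {

    static boolean isBstPostOrder(int[] a) {
        if (a == null) return false;
        return check(a, 0, a.length - 1);
    }

    private static boolean check(int[] a, int lo, int hi) {
        if (lo >= hi) return true;                  // 0 or 1 element is always valid
        int root = a[hi];
        int i = lo;
        while (i < hi && a[i] < root) i++;          // left subtree: values < root
        for (int j = i; j < hi; j++) {
            if (a[j] < root) return false;          // right subtree must be all > root
        }
        return check(a, lo, i - 1) && check(a, i, hi - 1);
    }

    public static void main(String[] args) {
        System.out.println(isBstPostOrder(new int[] {5, 7, 6, 9, 11, 10, 8})); // true
        System.out.println(isBstPostOrder(new int[] {7, 4, 6, 5}));            // false
    }
}
```

Consistent with the comment above, both {5,6} and {6,5} are accepted. In the worst case (a degenerate tree) the check takes O(n²) time.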

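- The Return Type of MySQL's SUM Function

bylijinnan

java, spring, sql, mysql, jdbc

Today, when the project switched databases, an error occurred.

The data-access code looked roughly like this: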

String sql = "select sum(number) as sumNumberOfOneDay from tableName";

List<Map> rows = getJdbcTemplate().queryForList(sql);

for (Map row : rows
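A likely cause, stated as an assumption because the article is cut off here, is the type of the summed value. MySQL documents that SUM() over exact-value arguments (INT, DECIMAL) returns DECIMAL, which JDBC drivers typically expose as java.math.BigDecimal. Another database may return Long or Integer for the same query, so casting the map value to one specific wrapper type can throw ClassCastException after a switch. The hypothetical helper below reads the value through java.lang.Number instead. The column alias matches the query above.

```java
import java.math.BigDecimal;
import java.util.Map;

/** Hypothetical helper: reads a SUM() column regardless of the driver's numeric type. */
class SumValue {

    static long sumAsLong(Map<String, ?> row, String column) {
        Object v = row.get(column);
        if (v == null) return 0L;              // SUM over zero rows yields NULL
        if (v instanceof BigDecimal bd) {
            return bd.longValueExact();        // throws if there is a fractional part or overflow
        }
        return ((Number) v).longValue();       // Long, Integer, etc.
    }
}
```

Inside the loop, `sumAsLong(row, "sumNumberOfOneDay")` then behaves the same whichever numeric type the driver returns.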

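- Java Design Patterns: The Singleton Pattern

chicony

java, design patterns

Dr. Yan Hong's book Java与模式 (Java and Patterns) opens its description of the singleton pattern like this:

As an object creational pattern, the singleton pattern ensures that a class has only one instance, instantiates that instance itself, and provides it to the whole system. Such a class is called a singleton class. Structure of the singleton pattern

Characteristics of the singleton pattern:

A singleton class can have only one instance.

A singleton class must create its own unique instance.

A singleton class must provide this instance to all other objects.

Eager singleton class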

publ
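Here is a minimal eager ("hungry") singleton that matches the three characteristics above. The class name AppConfig is hypothetical. The instance is created when the class is initialized, which the JVM does exactly once and in a thread-safe way. The private constructor prevents anyone else from creating a second instance.

```java
/** Eager singleton: one instance, created by the class itself, shared with everyone. */
final class AppConfig {
    private static final AppConfig INSTANCE = new AppConfig(); // created at class initialization

    private AppConfig() {
        // private constructor: no other class can call new AppConfig()
    }

    static AppConfig getInstance() {
        return INSTANCE; // every caller receives the same object
    }
}
```

The trade-off of the eager form is that the instance is built even if it is never used. In exchange, the code needs no locking.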

- Getting the Last Day of the Current Month in JavaScript

ctrain

JavaScript

<!-- JavaScript: get the last day of the current month -->

<script language=javascript>

var current = new Date();

var year = current.getFullYear(); // getYear() is deprecated and returns the year minus 1900 in modern browsers

var month = current.getMonth();

showMonthLastDay(year, mont
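The Java equivalent of this task is short with java.time (Java 8+). YearMonth knows the length of every month, including February in leap years. For comparison, a common JavaScript idiom is `new Date(year, month + 1, 0).getDate()`, where day 0 means the day before the 1st of the following month. The class and method names below are illustrative.

```java
import java.time.LocalDate;
import java.time.YearMonth;

/** Last day of a month with java.time; note month is 1-12 here, unlike JavaScript's 0-11. */
class MonthEnd {

    static LocalDate lastDayOf(int year, int month) {
        return YearMonth.of(year, month).atEndOfMonth();
    }

    static LocalDate lastDayOfCurrentMonth() {
        return YearMonth.now().atEndOfMonth(); // uses the system clock and default time zone
    }

    public static void main(String[] args) {
        System.out.println(lastDayOfCurrentMonth());
        System.out.println(lastDayOf(2024, 2)); // 2024-02-29
    }
}
```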

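- The Linux tune2fs Command in Detail

daizj

linux, tune2fs, viewing file system block information

I. Introduction:

tune2fs adjusts and displays the tunable parameters of ext2/ext3/ext4 file systems. On Windows, after an unexpected power loss or crash, the system usually runs a self-check at the next boot. Linux also has file system self-checks, and with the tune2fs command you can define the check interval and behavior yourself.

II. Usage: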

Usage: tune2fs [-c max_mounts_count] [-e errors_behavior] [-g grou

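- Being a Programmer with Chinese Characteristics

dcj3sjt126com

programmers

Starting with publishing: online works that rank near the top are never too bad to read. If someone dislikes a particular work, it is usually because they don't like that genre, not because they dislike online works in general. The reason is that readers can read online works for free first, and only the good ones rise to the top. Printed books are different: the top of the bestseller lists holds both good works and junk. Many experts first wrote blogs and later published books, and these books are not bad either. Some people may think otherwise because technical books are not like novels: in a novel you follow a story, whereas in a technical book you learn or review knowledge, and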

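- Android: A Complete List of TextView Attributes

dcj3sjt126com

textview

android:autoLink sets whether text that is a URL, email address, phone number, or map address is displayed as a clickable link. Possible values: none/web/email/phone/map/all. android:autoText: if set, automatically performs spelling correction on the input. It has no effect here; when the input method is shown and input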

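- Installing and Configuring Tomcat Virtual Directories

eksliang

tomcat configuration notes, deploying web applications on tomcat, tomcat virtual directory installation

Please credit the source when reposting: http://eksliang.iteye.com/blog/2097184

1. Tomcat directory structure

conf: holds Tomcat's configuration files

temp: holds temporary files created while Tomcat is running

work: when a JSP in an application is first accessed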

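- A Brief Discussion: Security Vulnerabilities in Apps Commonly Exploited by Hackers

gg163

APP

App security vulnerabilities should be familiar to every programmer. Setting aside the problems that stem from Android being open source, they arise mainly from carelessness or sloppy code during development. Programmers cannot take all the blame, though: there are often objective reasons as well, such as the boss imposing tight deadlines. The CTO of ineice.com (爱内测), a Chinese mobile app security testing team, gives us a brief look at Android's open-source design and its ecosystem.

1. App decompilation vulnerability: APK packages are very easily decompiled into readable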

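- Generating Static Pages from a URL in C#

hvt

Web, .net, C#, asp.net, hovertree

In the HoverTree open-source project, the Index.aspx file in HoverTreeWeb.HVTPanel is the home page of the admin backend. It includes features such as generating the message board's home page, showing the user name, and logging out. The method that generates a page from a URL: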

bool CreateHtmlFile(string url, string path)

{

//http://keleyi.com/a/bjae/3d10wfax.htm

stri

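- SVG Tutorial (Part 1)

天梯梦

svg

Introduction to SVG

SVG is a language that uses XML to describe two-dimensional graphics and graphical applications. What you should know before starting:

Before continuing, you should have a basic understanding of the following:

HTML

XML basics

If you want to study these first, choose the corresponding tutorial on this site's home page. What is SVG?

SVG stands for Scalable Vector Graphics

SVG is used to define vector-based graphics for the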

- A Simple Java Stack

luyulong

java, data structures, stack

public class MyStack {

private long[] arr;

private int top;

public MyStack() {

arr = new long[10];

top = -1;

}

public MyStack(int maxsize) {

arr = new long[maxsize];

top
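The listing above stops inside the constructors. Below is a hypothetical completion of MyStack that keeps its fields and its `top = -1` convention for "empty". The push/pop/peek/isEmpty/isFull methods and the choice to throw on overflow and underflow are assumptions, not the author's code.

```java
/** Hypothetical completion of MyStack: a fixed-capacity stack of longs. */
class MyStack {
    private final long[] arr;
    private int top;

    MyStack() { this(10); }

    MyStack(int maxsize) {
        arr = new long[maxsize];
        top = -1;                               // -1 means the stack is empty
    }

    void push(long value) {
        if (isFull()) throw new IllegalStateException("stack is full");
        arr[++top] = value;                     // advance top, then store
    }

    long pop() {
        if (isEmpty()) throw new IllegalStateException("stack is empty");
        return arr[top--];                      // read, then move top down
    }

    long peek() {
        if (isEmpty()) throw new IllegalStateException("stack is empty");
        return arr[top];
    }

    boolean isEmpty() { return top == -1; }

    boolean isFull() { return top == arr.length - 1; }
}
```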

- Basic Data Structures and Algorithms, Part 8: Binary Search

sunwinner

Algorithm, Binary search

Binary search needs an ordered array so that it can use array indexing to dramatically reduce the number of compares required for each search, using the classic and venerable binary search algori
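Here is a minimal iterative binary search over a sorted int array, as an illustrative sketch with a hypothetical class name. Each iteration halves the remaining range, so a search takes at most about log2(n) + 1 iterations. The method returns the key's index, or -1 if the key is absent.

```java
/** Iterative binary search; the input array must already be sorted ascending. */
class BinarySearch {

    static int indexOf(int[] sorted, int key) {
        int lo = 0, hi = sorted.length - 1;
        while (lo <= hi) {
            int mid = lo + (hi - lo) / 2;        // avoids int overflow of (lo + hi) / 2
            if (key < sorted[mid]) hi = mid - 1;  // key can only be in the left half
            else if (key > sorted[mid]) lo = mid + 1; // key can only be in the right half
            else return mid;
        }
        return -1;                                // range is empty: key not present
    }
}
```

For example, `indexOf(new int[] {10, 11, 12, 16, 18, 23, 29}, 23)` returns 5.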

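- 12 C Interview Questions on Pointers, Processes, Operators, Structs, Functions, and Memory: How Many Can You Solve?

刘星宇

c, interviews

12 C interview questions on pointers, processes, operators, structs, functions, and memory: how many can you solve?

1. The gets() function

Q: Find the problem in the following code: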

#include<stdio.h>

int main(void)

{

char buff[10];

memset(buff,0,sizeof(buff));

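- ITeye July Technical Book Trial-Reading Contest: Winners Announced

ITeye管理员

events, ITeye, trial reading

The July technical book trial-reading contest, held jointly by ITeye and the Turing imprint of Posts & Telecom Press, has concluded successfully. Many thanks to all users for your attention and participation.

July trial-reading contest recap:

http://webmaster.iteye.com/blog/2092746

The excellence-award winners of this contest and their entries are listed below (there were many excellent articles but only a limited number of awards; not winning does not mean an entry was not excellent):

《Java性能优化权威指南》 ("The Definitive Guide to Java Performance Optimization")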