Connecting Spring Boot to HBase: create, read, update and delete with the Java client

Introduction

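- HBase plays an indispensable role in the Hadoop big-data ecosystem, especially for real-time data queries;

- Once a distributed HBase cluster is running on Linux servers, we need a Java client to connect to it and create, read, update and delete data;

- This post shows how to connect to and call HBase from Spring Boot. Versions used: Hadoop 2.7.6, HBase 1.3.3, Spring Boot 1.5.9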

Practice

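- First, edit the hosts file on the local machine and add the host mappings for HBase. The entries are much the same as in the Hadoop configuration, except that the IP addresses are replaced with the Alibaba Cloud public IP addresses;

- On Windows, the hosts file is located at C:\Windows\System32\drivers\etc\hosts;

- The entries look like this: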

# 127.0.0.1 localhost

# ::1 localhost

47.100.200.200 hadoop-master

47.100.201.201 hadoop-slave

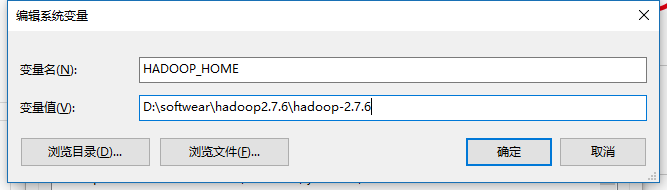
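Before starting the application, it can be worth confirming that the hosts entries are what the HBase client will actually see. The sketch below is a minimal standalone check that parses hosts-file text into a hostname-to-IP map and skips comments. The class `HostsFile` and its `parse` method are made up for this illustration, and the sample addresses are the ones from the entries above.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper for illustration; not part of HBase or Hadoop.
final class HostsFile {

    /** Parses hosts-file text into a hostname -> IP map, ignoring comments and blank lines. */
    static Map<String, String> parse(String content) {
        Map<String, String> hosts = new LinkedHashMap<>();
        for (String line : content.split("\\R")) {
            int hash = line.indexOf('#');
            if (hash >= 0) {
                line = line.substring(0, hash); // drop full-line and trailing comments
            }
            String[] parts = line.trim().split("\\s+");
            if (parts.length < 2) {
                continue; // blank line, or an IP without any hostname
            }
            // parts[0] is the IP; every following token is a name mapped to it
            for (int i = 1; i < parts.length; i++) {
                hosts.put(parts[i], parts[0]);
            }
        }
        return hosts;
    }

    public static void main(String[] args) {
        String sample = "# 127.0.0.1 localhost\n"
                + "47.100.200.200 hadoop-master\n"
                + "47.100.201.201 hadoop-slave\n";
        System.out.println(parse(sample));
    }
}
```

On a real machine the text could be read from the hosts path with `Files.readAllBytes`, and `InetAddress.getByName("hadoop-master")` can then confirm that the operating system resolves the name to the same address.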

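- Next, set up a Hadoop environment on the Windows client (this is required; without it the client will fail);

- Download the package hadoop-2.7.6.tar.gz, then configure the Hadoop environment variables in much the same way as for Java;

- Download winutils (hadoop-winutils-2.2.0.zip), unzip it, and copy everything in the bin folder of the hadoop-winutils-2.2.0 directory into the bin directory under the Hadoop root, overwriting any conflicting files;

- Then restart the computer, open cmd and run the command hadoop version; if it prints the Hadoop version information, the environment has been configured successfully;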
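If the hadoop version command fails, or the HBase client later complains that it cannot locate winutils, a quick programmatic check can narrow the problem down. The following is a rough sketch, assuming the usual layout in which Hadoop on Windows looks for `bin\winutils.exe` under the directory named by the `hadoop.home.dir` system property or, failing that, the `HADOOP_HOME` environment variable. The class `HadoopEnvCheck` and its method names are hypothetical.

```java
import java.io.File;

// Hypothetical diagnostic helper; not part of Hadoop.
final class HadoopEnvCheck {

    /** Picks the Hadoop home: the system property wins, then the environment variable. */
    static String resolveHadoopHome(String sysProp, String envVar) {
        if (sysProp != null && !sysProp.trim().isEmpty()) {
            return sysProp.trim();
        }
        if (envVar != null && !envVar.trim().isEmpty()) {
            return envVar.trim();
        }
        return null; // nothing configured
    }

    /** Builds the expected location of winutils.exe under the given Hadoop home. */
    static File winutilsFile(String hadoopHome) {
        return new File(new File(hadoopHome, "bin"), "winutils.exe");
    }

    public static void main(String[] args) {
        String home = resolveHadoopHome(
                System.getProperty("hadoop.home.dir"), System.getenv("HADOOP_HOME"));
        if (home == null) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set");
            return;
        }
        File winutils = winutilsFile(home);
        System.out.println(winutils + (winutils.isFile() ? " found" : " is missing"));
    }
}
```

Running it from the same terminal or IDE that launches the Spring Boot application matters, because environment variables changed after that process started are not visible to it.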

- With the environment in place, start your IDE and first add the HBase-related dependencies to the pom file:

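<dependency>
    <groupId>org.apache.hbase</groupId>
    <artifactId>hbase-client</artifactId>
    <version>1.3.0</version>
    <exclusions>
        <exclusion>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
        </exclusion>
        <exclusion>
            <groupId>log4j</groupId>
            <artifactId>log4j</artifactId>
        </exclusion>
        <exclusion>
            <groupId>javax.servlet</groupId>
            <artifactId>servlet-api</artifactId>
        </exclusion>
    </exclusions>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-common</artifactId>
    <version>2.6.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-mapreduce-client-core</artifactId>
    <version>2.6.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-mapreduce-client-common</artifactId>
    <version>2.6.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-hdfs</artifactId>
    <version>2.6.0</version>
</dependency>
<dependency>
    <groupId>jdk.tools</groupId>
    <artifactId>jdk.tools</artifactId>
    <version>1.8</version>
    <scope>system</scope>
    <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-configuration-processor</artifactId>
    <optional>true</optional>
</dependency>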

- In the yml configuration file, add the ZooKeeper quorum that manages the HBase cluster:

# HBase configuration; the ZooKeeper port defaults to 2181
hbase:
  conf:
    confMaps:
      'hbase.zookeeper.quorum': 'hadoop-master:2181,hadoop-slave:2181'
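The quorum value is a comma-separated list of ZooKeeper hosts, each followed here by `:port`. To make that format concrete, the standalone sketch below splits such a string into `host:port` entries and falls back to 2181 when an entry carries no port. `QuorumParser` is an illustrative name rather than an HBase class (the HBase client does its own parsing internally), and the sketch only handles hostnames and IPv4 addresses.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative only; shows the shape of the quorum string, not HBase's own parser.
final class QuorumParser {
    static final int DEFAULT_CLIENT_PORT = 2181;

    /** Turns "a:2181, b" into ["a:2181", "b:2181"], skipping empty entries. */
    static List<String> normalize(String quorum) {
        List<String> servers = new ArrayList<>();
        for (String entry : quorum.split(",")) {
            String server = entry.trim();
            if (server.isEmpty()) {
                continue;
            }
            int colon = server.lastIndexOf(':');
            if (colon < 0) {
                // No explicit port: assume the ZooKeeper default
                servers.add(server + ":" + DEFAULT_CLIENT_PORT);
            } else {
                // Integer.parseInt throws NumberFormatException on a malformed port
                int port = Integer.parseInt(server.substring(colon + 1));
                servers.add(server.substring(0, colon) + ":" + port);
            }
        }
        return servers;
    }

    public static void main(String[] args) {
        System.out.println(normalize("hadoop-master:2181,hadoop-slave:2181"));
    }
}
```

Every hostname in the list has to resolve from the client machine, which is why the hosts entries earlier in the post use the same names.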

- Add the HbaseConfig class:

import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.context.annotation.Configuration;

import java.util.Map;

/**
 * HBase configuration class
 *
 * @author sixmonth
 * @Date May 13, 2019
 */
@Configuration
@ConfigurationProperties(prefix = HbaseConfig.CONF_PREFIX)
public class HbaseConfig {

    public static final String CONF_PREFIX = "hbase.conf";

    // HBase client settings bound from hbase.conf.confMaps in the yml file
    private Map<String, String> confMaps;

    public Map<String, String> getconfMaps() {
        return confMaps;
    }

    public void setconfMaps(Map<String, String> confMaps) {
        this.confMaps = confMaps;
    }
}

- Add the SpringContextHolder class, used to fetch beans from the Spring container:

import org.springframework.beans.BeansException;
import org.springframework.context.ApplicationContext;
import org.springframework.context.ApplicationContextAware;
import org.springframework.stereotype.Component;

/**
 * Holder for Spring's ApplicationContext; lets callers fetch beans from the Spring container through static methods
 */
@Component
public class SpringContextHolder implements ApplicationContextAware {

    private static ApplicationContext applicationContext;

    @Override
    public void setApplicationContext(ApplicationContext applicationContext) throws BeansException {
        SpringContextHolder.applicationContext = applicationContext;
    }

    public static ApplicationContext getApplicationContext() {
        assertApplicationContext();
        return applicationContext;
    }

    @SuppressWarnings("unchecked")
    public static <T> T getBean(String beanName) {
        assertApplicationContext();
        return (T) applicationContext.getBean(beanName);
    }

    public static <T> T getBean(Class<T> requiredType) {
        assertApplicationContext();
        return applicationContext.getBean(requiredType);
    }

    private static void assertApplicationContext() {
        if (SpringContextHolder.applicationContext == null) {
            throw new RuntimeException("applicationContext is null; check that SpringContextHolder has been registered as a bean!");
        }
    }
}
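The pattern here is a static field that Spring fills in once at startup, after which any code can look beans up without injection. The stripped-down sketch below mimics that idea without Spring, using a plain `Map` as a stand-in for `ApplicationContext`, to show how the two generic `getBean` signatures behave and why a lookup made before initialization has to fail loudly. Everything in it (`MiniContextHolder`, `init`, `clear`) is invented for illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Stand-in for SpringContextHolder; a Map plays the role of the ApplicationContext.
final class MiniContextHolder {
    private static Map<String, Object> beans; // null until init() is called

    static void init(Map<String, Object> registry) {
        beans = new HashMap<>(registry);
    }

    static void clear() {
        beans = null;
    }

    /** Lookup by name; the type expected by the caller decides T. */
    @SuppressWarnings("unchecked")
    static <T> T getBean(String name) {
        assertInitialized();
        return (T) beans.get(name);
    }

    /** Lookup by type; returns the first bean that is an instance of the requested class. */
    static <T> T getBean(Class<T> type) {
        assertInitialized();
        for (Object bean : beans.values()) {
            if (type.isInstance(bean)) {
                return type.cast(bean);
            }
        }
        throw new IllegalArgumentException("No bean of type " + type.getName());
    }

    private static void assertInitialized() {
        if (beans == null) {
            throw new IllegalStateException("Holder used before it was initialized");
        }
    }

    public static void main(String[] args) {
        Map<String, Object> registry = new HashMap<>();
        registry.put("greeting", "hello");
        init(registry);
        String byName = getBean("greeting");   // T inferred as String
        String byType = getBean(String.class); // T taken from the Class argument
        System.out.println(byName + " " + byType);
    }
}
```

The ordering concern is the same in the real class: the holder has to be populated before any static lookup runs, so beans that call it during their own initialization need to be created after it.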

- Add the HBaseUtils utility class:

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.*;
import org.apache.hadoop.hbase.client.*;
import org.apache.hadoop.hbase.client.coprocessor.AggregationClient;
import org.apache.hadoop.hbase.client.coprocessor.LongColumnInterpreter;
import org.apache.hadoop.hbase.filter.*;
import org.apache.hadoop.hbase.util.Bytes;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.context.annotation.DependsOn;
import org.springframework.stereotype.Component;
import org.springframework.util.StopWatch;

import com.springboot.sixmonth.common.config.hbase.HbaseConfig;
import com.springboot.sixmonth.common.filter.SpringContextHolder;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.NavigableMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/**
 * HBase utility class
 *
 * @author sixmonth
 * @Date May 13, 2019
 */
@DependsOn("springContextHolder") // Controls initialization order: the springContextHolder bean must be loaded first
@Component
public class HBaseUtils {

    private Logger logger = LoggerFactory.getLogger(this.getClass());

    // Fetch the HbaseConfig bean manually from the Spring container
    private static HbaseConfig hbaseConfig = SpringContextHolder.getBean("hbaseConfig");

    private static Configuration conf = HBaseConfiguration.create();

    // Thread pool handed to the HBase connection
    private static ExecutorService pool = Executors.newScheduledThreadPool(20);

    private static Connection connection = null;

    private static HBaseUtils instance = null;

    private static Admin admin = null;

    private HBaseUtils() {
        if (connection == null) {
            try {
                // Copy the settings defined in HbaseConfig into the HBase Configuration
                Map<String, String> confMap = hbaseConfig.getconfMaps();
                for (Map.Entry<String, String> confEntry : confMap.entrySet()) {
                    conf.set(confEntry.getKey(), confEntry.getValue());
                }
                connection = ConnectionFactory.createConnection(conf, pool);
                admin = connection.getAdmin();
            } catch (IOException e) {
                logger.error("Failed to initialize HBaseUtils! Error: " + e.getMessage(), e);
            }
        }
    }

    // Simple singleton accessor; not needed if the bean is injected with @Autowired
    public static synchronized HBaseUtils getInstance() {
        if (instance == null) {
            instance = new HBaseUtils();
        }
        return instance;
    }

    /**
     * Creates a table
     *
     * @param tableName    table name
     * @param columnFamily column families (array)
     */
    public void createTable(String tableName, String[] columnFamily) throws IOException {
        TableName name = TableName.valueOf(tableName);
        // Leave an existing table untouched and report the conflict
        if (admin.tableExists(name)) {
            logger.error("create htable error! this table {} already exists!", name);
        } else {
            HTableDescriptor desc = new HTableDescriptor(name);
            for (String cf : columnFamily) {
                desc.addFamily(new HColumnDescriptor(cf));
            }
            admin.createTable(desc);
        }
    }

    /**
     * Inserts a record (one row, one column family, multiple columns and values)
     *
     * @param tableName     table name
     * @param row           row key
     * @param columnFamilys column family name
     * @param columns       column names (array)
     * @param values        values (array), in the same order as the columns
     */
    public void insertRecords(String tableName, String row, String columnFamilys, String[] columns, String[] values) throws IOException {
        TableName name = TableName.valueOf(tableName);
        // Close the Table when done; the shared Connection stays open
        try (Table table = connection.getTable(name)) {
            Put put = new Put(Bytes.toBytes(row));
            for (int i = 0; i < columns.length; i++) {
                put.addColumn(Bytes.toBytes(columnFamilys), Bytes.toBytes(columns[i]), Bytes.toBytes(values[i]));
            }
            // Send all columns of the row in a single Put
            table.put(put);
        }
    }

    /**
     * Inserts a record (one row, one column family, one column and value)
     *
     * @param tableName    table name
     * @param row          row key
     * @param columnFamily column family name
     * @param column       column name
     * @param value        value
     */
    public void insertOneRecord(String tableName, String row, String columnFamily, String column, String value) throws IOException {
        TableName name = TableName.valueOf(tableName);
        try (Table table = connection.getTable(name)) {
            Put put = new Put(Bytes.toBytes(row));
            put.addColumn(Bytes.toBytes(columnFamily), Bytes.toBytes(column), Bytes.toBytes(value));
            table.put(put);
        }
    }

    /**
     * Deletes a whole row
     *
     * @param tablename table name
     * @param rowkey    row key
     */
    public void deleteRow(String tablename, String rowkey) throws IOException {
        TableName name = TableName.valueOf(tablename);
        try (Table table = connection.getTable(name)) {
            Delete d = new Delete(Bytes.toBytes(rowkey));
            table.delete(d);
        }
    }

    /**
     * Deletes one column family of a row
     *
     * @param tablename    table name
     * @param rowkey       row key
     * @param columnFamily column family name
     */
    public void deleteColumnFamily(String tablename, String rowkey, String columnFamily) throws IOException {
        TableName name = TableName.valueOf(tablename);
        try (Table table = connection.getTable(name)) {
            Delete d = new Delete(Bytes.toBytes(rowkey)).addFamily(Bytes.toBytes(columnFamily));
            table.delete(d);
        }
    }

    /**
     * Deletes one column of one column family of a row
     *
     * @param tablename    table name
     * @param rowkey       row key
     * @param columnFamily column family name
     * @param column       column name
     */
    public void deleteColumn(String tablename, String rowkey, String columnFamily, String column) throws IOException {
        TableName name = TableName.valueOf(tablename);
        try (Table table = connection.getTable(name)) {
            Delete d = new Delete(Bytes.toBytes(rowkey)).addColumn(Bytes.toBytes(columnFamily), Bytes.toBytes(column));
            table.delete(d);
        }
    }

    /**
     * Looks up one row
     *
     * @param tablename table name
     * @param rowKey    row key
     * @return one line per cell: row key, column family, column and value, separated by tabs
     */
    public static String selectRow(String tablename, String rowKey) throws IOException {
        StringBuilder record = new StringBuilder();
        TableName name = TableName.valueOf(tablename);
        try (Table table = connection.getTable(name)) {
            Get g = new Get(Bytes.toBytes(rowKey));
            Result rs = table.get(g);
            for (Cell cell : rs.rawCells()) {
                record.append(Bytes.toString(cell.getRowArray(), cell.getRowOffset(), cell.getRowLength())).append("\t")
                        .append(Bytes.toString(cell.getFamilyArray(), cell.getFamilyOffset(), cell.getFamilyLength())).append("\t")
                        .append(Bytes.toString(cell.getQualifierArray(), cell.getQualifierOffset(), cell.getQualifierLength())).append("\t")
                        .append(Bytes.toString(cell.getValueArray(), cell.getValueOffset(), cell.getValueLength())).append("\n");
            }
        }
        return record.toString();
    }

    /**
     * Looks up a single column value of one column family of a row
     *
     * @param tablename    table name
     * @param rowKey       row key
     * @param columnFamily column family name
     * @param column       column name
     * @return the cell value as a string
     */
    public static String selectValue(String tablename, String rowKey, String columnFamily, String column) throws IOException {
        TableName name = TableName.valueOf(tablename);
        try (Table table = connection.getTable(name)) {
            Get g = new Get(Bytes.toBytes(rowKey));
            g.addColumn(Bytes.toBytes(columnFamily), Bytes.toBytes(column));
            Result rs = table.get(g);
            return Bytes.toString(rs.value());
        }
    }

    /**
     * Reads every row in the table (using Scan)
     *
     * @param tablename table name
     * @return one line per cell: row key, column family, column and value, separated by tabs
     */
    public String scanAllRecord(String tablename) throws IOException {
        StringBuilder record = new StringBuilder();
        TableName name = TableName.valueOf(tablename);
        Scan scan = new Scan();
        // Both the Table and the scanner are closed automatically
        try (Table table = connection.getTable(name);
             ResultScanner scanner = table.getScanner(scan)) {
            for (Result result : scanner) {
                for (Cell cell : result.rawCells()) {
                    record.append(Bytes.toString(cell.getRowArray(), cell.getRowOffset(), cell.getRowLength())).append("\t")
                            .append(Bytes.toString(cell.getFamilyArray(), cell.getFamilyOffset(), cell.getFamilyLength())).append("\t")
                            .append(Bytes.toString(cell.getQualifierArray(), cell.getQualifierOffset(), cell.getQualifierLength())).append("\t")
                            .append(Bytes.toString(cell.getValueArray(), cell.getValueOffset(), cell.getValueLength())).append("\n");
                }
            }
        }
        return record.toString();
    }

    /**
     * Queries report records by a keyword in the row key
     *
     * @param tablename  table name
     * @param rowKeyword keyword to look for in the row key
     * @return
     */

public List scanReportDataByRowKeyword(String tablename, String rowKeyword) throws IOException {

ArrayList
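Every row key, column family, column name and value in the utility class above reaches HBase as a `byte[]`, so how a `String` is converted matters. `String.getBytes()` without arguments uses the JVM's default charset, which can differ between a Windows development machine and a Linux server, whereas HBase's `Bytes.toBytes(String)` always encodes as UTF-8. The standalone sketch below uses only the JDK to show the difference, with `StandardCharsets.UTF_8` standing in for what `Bytes.toBytes` does. The class name `RowKeyBytes` is made up, and ISO-8859-1 is just one example of a non-UTF-8 charset.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Illustration only; in the HBase client, Bytes.toBytes(String) plays the role of encode().
final class RowKeyBytes {

    /** Encodes a row key as UTF-8, independent of the platform default charset. */
    static byte[] encode(String rowKey) {
        return rowKey.getBytes(StandardCharsets.UTF_8);
    }

    /** Decodes UTF-8 bytes back into a String. */
    static String decode(byte[] bytes) {
        return new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String rowKey = "caf\u00e9-001"; // contains one non-ASCII character
        byte[] utf8 = encode(rowKey);
        byte[] latin1 = rowKey.getBytes(StandardCharsets.ISO_8859_1);
        System.out.println("UTF-8 length: " + utf8.length);
        System.out.println("ISO-8859-1 length: " + latin1.length);
        System.out.println("Same bytes? " + Arrays.equals(utf8, latin1));
    }
}
```

A key written with one encoding and read with another addresses a different row, so it is safest to use the same explicit conversion for writes, reads and deletes.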

- Usage:

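@Autowired
private HBaseUtils hBaseUtils;

@RequestMapping(value = "/test")
public Map<String, Object> test() {
    // Declared outside the try block so it is still in scope when building the response
    String str = null;
    try {
        str = hBaseUtils.scanAllRecord("sixmonth"); // scan the whole table
        System.out.println("Content fetched from HBase: " + str);
    } catch (IOException e) {
        e.printStackTrace();
    }
    Map<String, Object> map = new HashMap<>();
    map.put("hbaseContent", str);
    return map;
}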
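The string returned by `scanAllRecord` holds one line per cell, made of four tab-separated fields: row key, column family, column name and value. For an HTTP endpoint it is often handier to return structured data instead. As a sketch of one way to do that, the hypothetical `RecordParser` below turns that string into a nested map of row key -> `family:column` -> value. It assumes that keys and values contain no tabs or newlines themselves.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper; parses the tab/newline format produced by scanAllRecord and selectRow.
final class RecordParser {

    /** Returns row key -> ("family:column" -> value), preserving the input order. */
    static Map<String, Map<String, String>> parse(String record) {
        Map<String, Map<String, String>> rows = new LinkedHashMap<>();
        for (String line : record.split("\n")) {
            if (line.isEmpty()) {
                continue;
            }
            String[] fields = line.split("\t", -1); // -1 keeps an empty trailing value
            if (fields.length != 4) {
                throw new IllegalArgumentException("Expected 4 tab-separated fields: " + line);
            }
            rows.computeIfAbsent(fields[0], k -> new LinkedHashMap<>())
                    .put(fields[1] + ":" + fields[2], fields[3]);
        }
        return rows;
    }

    public static void main(String[] args) {
        String sample = "row1\tinfo\tname\tTom\n"
                + "row1\tinfo\tage\t20\n"
                + "row2\tinfo\tname\tAnn\n";
        System.out.println(parse(sample));
    }
}
```

In the controller above, the parsed map could be put into the response in place of the raw string, which lets Spring serialize it as nested JSON.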

Summary

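- Debugging a Java client connection to HBase involves quite a few environment settings, so you may run into many problems, but most of them have straightforward, targeted fixes;

- Practice is the sole criterion of truth, so get your hands dirty and try it yourself~~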

Reference blog

https://blog.csdn.net/abysscarry/article/details/83317868