Implementing the KNN Classification Algorithm in Native Python on the Iris Dataset

Reference: https://zhuanlan.zhihu.com/p/55960501

I. Task:

Implement the KNN classification algorithm in native Python, using the iris dataset.

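II. Algorithm design

The idea behind KNN: find the k training instances most similar to a sample (its nearest neighbors in feature space); the sample is then assigned to the class that the majority of those k instances belong to.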

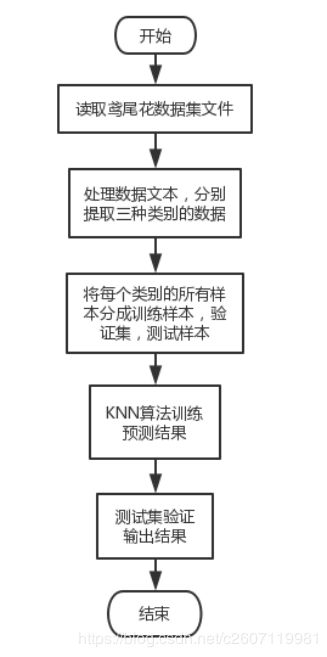
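
To make the voting idea concrete, here is a minimal sketch in pure Python. The five training points, the query point, and the helper name `knn_vote` are made up for illustration and are not part of the original post. It shows that the predicted class can change with k.

```python
from collections import Counter
import math

# Hypothetical 2-D training points with labels "A" and "B" (made-up data).
train = [
    ((1.5, 2.0), "A"),
    ((1.0, 1.2), "A"),
    ((3.0, 3.0), "B"),
    ((3.5, 2.5), "B"),
    ((4.0, 4.0), "B"),
]
query = (2.0, 2.0)

def knn_vote(train, query, k):
    """Return the majority label among the k training points closest to query."""
    # Sort the training points by Euclidean distance to the query.
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    # Count the labels of the k nearest points and return the most common one.
    labels = [label for _, label in by_distance[:k]]
    return Counter(labels).most_common(1)[0][0]

print(knn_vote(train, query, k=3))  # two "A" neighbors outvote one "B"
print(knn_vote(train, query, k=5))  # all five points vote: three "B" vs two "A"
```

With k=3 the two nearby "A" points win the vote; with k=5 every point votes and "B" wins, which is why the choice of k matters.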

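Algorithm workflow:

- Read the downloaded iris dataset;

- Preprocess the text: map the strings in the species column to the numeric labels 0, 1, 2, then extract the feature data of each of the three classes by slicing;

- Split the samples of each class into a training set, a validation set, and a test set, making up 60%, 20%, and 20% of the samples respectively;

- Standardize the feature data of the training and test sets;

- Design the KNN algorithm to train on and predict the data;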
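
The per-class 60%/20%/20% split described above can be sketched with one small helper instead of repeated slicing. This is only an illustration under assumptions: `split_per_class` and its parameters are hypothetical names, and the toy array stands in for the real iris features. Like the slicing approach, it keeps the original sample order and does not shuffle.

```python
import numpy as np

def split_per_class(X, y, train_frac=0.6, val_frac=0.2):
    """Split each class into train/validation/test parts, keeping sample order.

    Hypothetical helper for illustration; not taken from the original post.
    """
    parts = {"train": [], "val": [], "test": []}
    for label in np.unique(y):
        idx = np.flatnonzero(y == label)  # row positions of this class
        n_train = int(round(train_frac * len(idx)))
        n_val = int(round(val_frac * len(idx)))
        parts["train"].append(idx[:n_train])
        parts["val"].append(idx[n_train:n_train + n_val])
        parts["test"].append(idx[n_train + n_val:])
    # Concatenate the per-class indices and index X and y for each subset.
    return {name: (X[np.concatenate(ix)], y[np.concatenate(ix)])
            for name, ix in parts.items()}

# Toy stand-in for the iris data: 3 classes x 50 samples x 4 features.
X = np.arange(150 * 4, dtype=float).reshape(150, 4)
y = np.repeat([0, 1, 2], 50)

splits = split_per_class(X, y)
for name, (X_part, y_part) in splits.items():
    print(name, X_part.shape, np.bincount(y_part))
```

Each subset contains the same number of samples from every class, which matches the intent of splitting each class separately.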

Class initialization:

def __init__(self):
    pass

Training function, which simply stores all the training samples:

# Training function
def train(self, X, y):
    self.X_train = X
    self.y_train = y

Prediction function:

# Prediction function
def predict(self, X, k=1):
    num_test = X.shape[0]
    # Squared Euclidean distances via ||x||^2 - 2 x.t + ||t||^2
    d1 = -2 * np.dot(X, self.X_train.T)
    d2 = np.sum(np.square(X), axis=1, keepdims=True)
    d3 = np.sum(np.square(self.X_train), axis=1)
    # Clamp tiny negative values caused by rounding before taking the square root
    dist = np.sqrt(np.maximum(d1 + d2 + d3, 0))
    # Pick the most likely class according to k
    y_pred = np.zeros(num_test)
    for i in range(num_test):
        dist_k_min = np.argsort(dist[i])[:k]  # indices of the k nearest neighbors
        y_kclose = self.y_train[dist_k_min]  # labels of the k nearest neighbors
        y_pred[i] = np.argmax(np.bincount(y_kclose.tolist()))  # majority label among the k neighbors
    return y_pred

- Feed in the training and test sets and output the results.
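
The prediction function computes all pairwise distances without an explicit double loop by expanding the squared distance as ||a||^2 - 2 a·b + ||b||^2. The sketch below checks that expansion against a straightforward loop version on random data. The function names and the random inputs are assumptions made for this illustration only.

```python
import numpy as np

def pairwise_dist_loops(A, B):
    """Reference version: Euclidean distances computed with two explicit loops."""
    out = np.zeros((A.shape[0], B.shape[0]))
    for i in range(A.shape[0]):
        for j in range(B.shape[0]):
            out[i, j] = np.sqrt(np.sum((A[i] - B[j]) ** 2))
    return out

def pairwise_dist_vectorized(A, B):
    """Same distances via ||a||^2 - 2 a.b + ||b||^2, relying on broadcasting."""
    cross = -2 * np.dot(A, B.T)                         # shape (m, n)
    a_sq = np.sum(np.square(A), axis=1, keepdims=True)  # shape (m, 1)
    b_sq = np.sum(np.square(B), axis=1)                 # shape (n,)
    sq = cross + a_sq + b_sq                            # broadcasts to (m, n)
    # Rounding can leave tiny negative values; clamp them before the square root.
    return np.sqrt(np.maximum(sq, 0.0))

rng = np.random.default_rng(0)
A = rng.normal(size=(5, 4))  # e.g. 5 test samples with 4 features
B = rng.normal(size=(7, 4))  # e.g. 7 training samples with 4 features

print(np.allclose(pairwise_dist_loops(A, B), pairwise_dist_vectorized(A, B)))
```

The `keepdims=True` on the first sum is what lets a column of shape (m, 1) and a row of shape (n,) broadcast into the full (m, n) distance matrix.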

- Source code

import numpy as np
import pandas as pd
# from 鸢尾花分类.KNN import *
from sklearn.preprocessing import StandardScaler

data = pd.read_csv('iris.data', header=None)  # read the dataset
data.columns = ['sepal length', 'sepal width', 'petal length', 'petal width', 'species']  # feature and class names
# Preprocess the text: map the strings in the species column to numeric labels
data['species'] = data['species'].map({'Iris-virginica': 0, 'Iris-setosa': 1, 'Iris-versicolor': 2})
# Extract the features of the three species
X = data.iloc[0:150, 0:4].values
y = data.iloc[0:150, 4].values
X_setosa, y_setosa = X[0:50], y[0:50]  # Iris-setosa, 4 features
X_versicolor, y_versicolor = X[50:100], y[50:100]  # Iris-versicolor, 4 features
X_virginica, y_virginica = X[100:150], y[100:150]  # Iris-virginica, 4 features

# Training set: the first 30 samples of each class
X_setosa_train = X_setosa[:30, :]
y_setosa_train = y_setosa[:30]
X_versicolor_train = X_versicolor[:30, :]
y_versicolor_train = y_versicolor[:30]
X_virginica_train = X_virginica[:30, :]
y_virginica_train = y_virginica[:30]
X_train = np.vstack([X_setosa_train, X_versicolor_train, X_virginica_train])
y_train = np.hstack([y_setosa_train, y_versicolor_train, y_virginica_train])

# Validation set: samples 30 to 39 of each class
X_setosa_val = X_setosa[30:40, :]
y_setosa_val = y_setosa[30:40]
X_versicolor_val = X_versicolor[30:40, :]
y_versicolor_val = y_versicolor[30:40]
X_virginica_val = X_virginica[30:40, :]
y_virginica_val = y_virginica[30:40]
X_val = np.vstack([X_setosa_val, X_versicolor_val, X_virginica_val])
y_val = np.hstack([y_setosa_val, y_versicolor_val, y_virginica_val])

# Test set: the last 10 samples of each class
X_setosa_test = X_setosa[40:50, :]
y_setosa_test = y_setosa[40:50]
X_versicolor_test = X_versicolor[40:50, :]
y_versicolor_test = y_versicolor[40:50]
X_virginica_test = X_virginica[40:50, :]
y_virginica_test = y_virginica[40:50]
X_test = np.vstack([X_setosa_test, X_versicolor_test, X_virginica_test])
y_test = np.hstack([y_setosa_test, y_versicolor_test, y_virginica_test])

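# Standardize the feature data of the training and test sets
# (the scaler is fit on the training set only, then applied to the test set)
std = StandardScaler()
X_train = std.fit_transform(X_train)
X_test = std.transform(X_test)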

# Train and predict with the KNN algorithm
class KNN(object):
    def __init__(self):
        pass

    # Training function: store all training samples
    def train(self, X, y):
        self.X_train = X
        self.y_train = y

    # Prediction function
    def predict(self, X, k=1):
        num_test = X.shape[0]
        # Squared Euclidean distances via ||x||^2 - 2 x.t + ||t||^2
        d1 = -2 * np.dot(X, self.X_train.T)
        d2 = np.sum(np.square(X), axis=1, keepdims=True)
        d3 = np.sum(np.square(self.X_train), axis=1)
        # Clamp tiny negative values caused by rounding before taking the square root
        dist = np.sqrt(np.maximum(d1 + d2 + d3, 0))
        # Pick the most likely class according to k
        y_pred = np.zeros(num_test)
        for i in range(num_test):
            dist_k_min = np.argsort(dist[i])[:k]  # indices of the k nearest neighbors
            y_kclose = self.y_train[dist_k_min]  # labels of the k nearest neighbors
            y_pred[i] = np.argmax(np.bincount(y_kclose.tolist()))  # majority label among the k neighbors
        return y_pred

knn = KNN()
# Feed in the training set
knn.train(X_train, y_train)
# Predict on the test set and compare with the true labels
y_pred = knn.predict(X_test, k=3)
r_result = np.mean(y_pred == y_test)
print("Predicted labels:", y_pred)
print("True labels:", y_test)
print("Accuracy:", r_result)

- Test case design and debugging screenshots

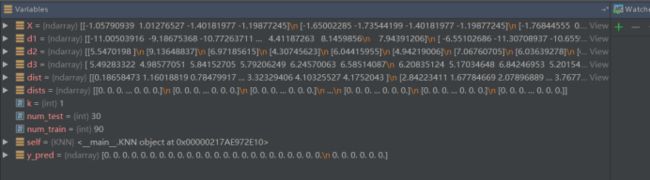

Debugging:

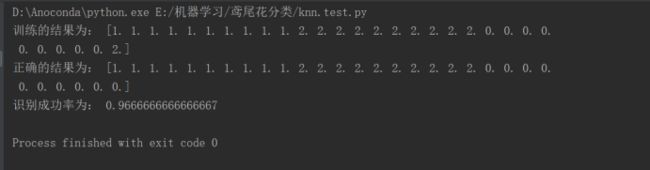

Result:

The final result shows a prediction accuracy of 96.7% on the test set (29 of the 30 test samples).
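
The listing builds a validation set but never uses it. One plausible use, sketched below, is choosing k by validation accuracy before touching the test set. Everything here is an assumption for illustration: the data are synthetic Gaussian blobs rather than iris, and `knn_predict` and `make_split` are hypothetical helpers, not the author's code.

```python
import numpy as np

def knn_predict(X_train, y_train, X, k):
    """Predict labels for X by majority vote among the k nearest training rows."""
    # Squared distances are enough for ranking neighbors, so no square root here.
    sq = (np.sum(X ** 2, axis=1, keepdims=True)
          - 2 * X @ X_train.T
          + np.sum(X_train ** 2, axis=1))
    nearest = np.argsort(sq, axis=1)[:, :k]  # indices of the k nearest neighbors
    return np.array([np.bincount(y_train[row]).argmax() for row in nearest])

# Synthetic stand-in for the iris splits (assumption: three Gaussian blobs).
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [3.0, 3.0], [0.0, 4.0]])

def make_split(n_per_class):
    X = np.vstack([c + rng.normal(size=(n_per_class, 2)) for c in centers])
    y = np.repeat(np.arange(3), n_per_class)
    return X, y

X_train, y_train = make_split(30)
X_val, y_val = make_split(10)

# Standardize with training statistics only, then apply them to the validation set.
mean, std = X_train.mean(axis=0), X_train.std(axis=0)
X_train_s = (X_train - mean) / std
X_val_s = (X_val - mean) / std

# Evaluate a few candidate k values on the validation set and keep the best one.
val_acc = {k: np.mean(knn_predict(X_train_s, y_train, X_val_s, k) == y_val)
           for k in (1, 3, 5, 7, 9)}
best_k = max(val_acc, key=val_acc.get)
print(val_acc, best_k)
```

The test set would then be scored once with `best_k`, so the reported accuracy is not influenced by the choice of k.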

- Summary

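- At first I read the dataset with a for loop, but that took a lot of code and the results kept coming out wrong. Fix: extract the data from the dataset by slicing, which is simple and efficient.

- Classifying the iris dataset with KNN gave me a better understanding of what the algorithm really does: it stores the inputs and output labels of all training samples. At test time, it computes the distance between the test sample and every training sample and selects the k training samples closest to the test sample. The labels of these k training samples are then put to a vote, and the class with the most votes becomes the class of the test sample.

- KNN is a foundational machine learning algorithm, and it helps us understand and learn more advanced machine learning algorithms.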
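
One detail of the voting step is worth a small check: when two classes receive the same number of votes, `np.argmax(np.bincount(...))` as used in the listing returns the smallest tied label, because `np.argmax` reports the first maximum. The neighbor labels below are made up for illustration.

```python
import numpy as np

# Labels of k=4 nearest neighbors: two votes for class 2 and two for class 0.
y_kclose = np.array([2, 0, 2, 0])
counts = np.bincount(y_kclose)  # votes per label: index 0, 1, 2
winner = np.argmax(counts)      # first maximum wins, so the tie goes to label 0
print(counts, winner)

# With three classes, an odd k does not rule out ties either.
three_way = np.array([2, 1, 0])
three_way_winner = np.argmax(np.bincount(three_way))  # all labels have one vote
print(three_way_winner)
```

So ties are silently resolved toward the lower class index, regardless of which neighbor is actually closest.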