Open vSwitch + VXLAN in Practice on CentOS 7

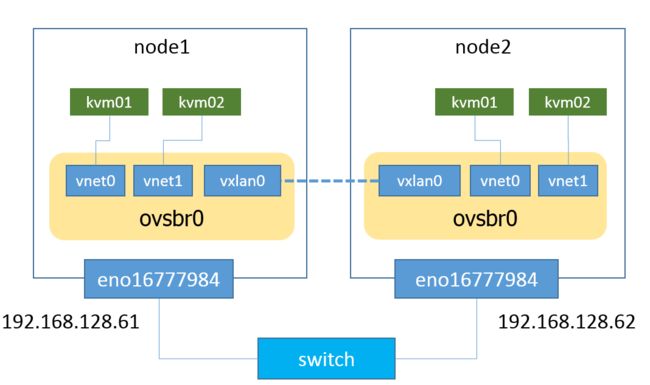

Diagram of the lab environment

node1 and node2 both run CentOS 7.1 (the Server with GUI installation).

For installing CentOS 7, see: http://blog.csdn.net/dylloveyou/article/details/53096170

For installing KVM, see: http://blog.csdn.net/dylloveyou/article/details/53223746

1. Install Open vSwitch

[root@rhel-kvm-ovs-01 ~]# yum -y install openssl-devel kernel-devel

[root@rhel-kvm-ovs-01 ~]# yum groupinstall "Development Tools"

[root@rhel-kvm-ovs-01 ~]# adduser ovswitch

[root@rhel-kvm-ovs-01 ~]# su - ovswitch

Download openvswitch-2.5.1.tar.gz in advance and place it under /home/ovswitch:

[ovswitch@rhel-kvm-ovs-01 ~]$ ls

openvswitch-2.5.1.tar.gz

[ovswitch@rhel-kvm-ovs-01 ~]$ tar xfz openvswitch-2.5.1.tar.gz

[ovswitch@rhel-kvm-ovs-01 ~]$ mkdir -p ~/rpmbuild/SOURCES

[ovswitch@rhel-kvm-ovs-01 ~]$ cp openvswitch-2.5.1.tar.gz ~/rpmbuild/SOURCES

Remove the dependency on the Nicira-provided openvswitch-kmod package by generating a new spec file:

[ovswitch@rhel-kvm-ovs-01 ~]$ sed 's/openvswitch-kmod, //g' openvswitch-2.5.1/rhel/openvswitch.spec > openvswitch-2.5.1/rhel/openvswitch_no_kmod.spec

[ovswitch@rhel-kvm-ovs-01 ~]$ rpmbuild -bb --without check ~/openvswitch-2.5.1/rhel/openvswitch_no_kmod.spec
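To see what the sed substitution above does, it can be tried on a single line of text first. This is a minimal sketch: strip_kmod is a hypothetical helper name, and the sample Requires line in the comment is an assumed illustration, not a line copied from the spec file.

```shell
#!/bin/sh
# Sketch: apply the same substitution as the sed command above to text on stdin.
# strip_kmod is a hypothetical helper name used only for illustration.
strip_kmod() {
    sed 's/openvswitch-kmod, //g'
}

# Example (the input line is an assumed sample, not taken from the real spec):
#   echo 'Requires: openvswitch-kmod, logrotate, python' | strip_kmod
```

On the build host, running grep -n 'openvswitch-kmod' against openvswitch-2.5.1/rhel/openvswitch_no_kmod.spec is one way to check whether any reference to the package remains.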

[ovswitch@rhel-kvm-ovs-01 ~]$ exit

[root@rhel-kvm-ovs-01 ~]# yum localinstall /home/ovswitch/rpmbuild/RPMS/x86_64/openvswitch-2.5.1-1.x86_64.rpm

Installation is complete. Verify it:

[root@rhel-kvm-ovs-01 ~]# rpm -qf `which ovs-vsctl`

openvswitch-2.5.1-1.x86_64

Start the service:

[root@rhel-kvm-ovs-01 ~]# systemctl start openvswitch.service

[root@rhel-kvm-ovs-01 ~]# systemctl enable openvswitch.service

Check the result:

[root@rhel-kvm-ovs-01 ~]# systemctl -l status openvswitch.service

2. Configure Open vSwitch

[root@rhel-kvm-ovs-01 ~]# ovs-vsctl add-br ovsbr0

Disable NetworkManager:

[root@rhel-kvm-ovs-01 ~]# systemctl stop NetworkManager.service

[root@rhel-kvm-ovs-01 ~]# systemctl disable NetworkManager.service

Removed symlink /etc/systemd/system/multi-user.target.wants/NetworkManager.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.NetworkManager.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.nm-dispatcher.service.

Switch to network.service instead, and edit the configuration files under /etc/sysconfig/network-scripts/:

/etc/sysconfig/network-scripts/ifcfg-mgmt0

DEVICE=mgmt0

ONBOOT=yes

DEVICETYPE=ovs

TYPE=OVSIntPort

OVS_BRIDGE=ovsbr0

USERCTL=no

BOOTPROTO=none

HOTPLUG=no

IPADDR0=192.168.57.2

PREFIX0=24

/etc/sysconfig/network-scripts/ifcfg-ovsbr0

DEVICE=ovsbr0

ONBOOT=yes

DEVICETYPE=ovs

TYPE=OVSBridge

HOTPLUG=no

USERCTL=no

/etc/sysconfig/network-scripts/ifcfg-eno16777984

......

IPADDR=192.168.128.61

......

Restart the network service:

[root@rhel-kvm-ovs-01 ~]# systemctl restart network.service

3. Start the virtual machines

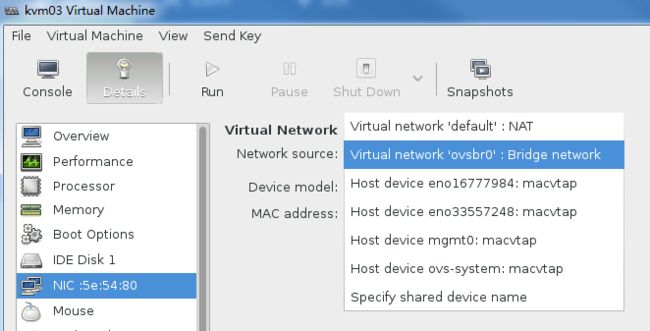

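First, create two virtual machines, kvm01 and kvm02, with virt-manager, and configure their network as shown.

Manually set the VM IP addresses to 192.168.57.11 and 192.168.57.12 respectively.

4. Configure VXLAN

Add the interface vxlan0 to ovsbr0.

Configuration on node1 (note that remote_ip is the IP of node2, 192.168.128.62):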

[root@rhel-kvm-ovs-01 ~]# ovs-vsctl add-port ovsbr0 vxlan0 -- set interface vxlan0 type=vxlan options:remote_ip=192.168.128.62

[root@rhel-kvm-ovs-01 ~]# ovs-vsctl show

8e3f7c3f-3d0d-4068-92d0-c0bd0c6651ce
    Bridge "ovsbr0"
        Port "vxlan0"
            Interface "vxlan0"
                type: vxlan
                options: {remote_ip="192.168.128.62"}
        Port "ovsbr0"
            Interface "ovsbr0"
                type: internal
        Port "mgmt0"
            Interface "mgmt0"
                type: internal
    ovs_version: "2.5.1"

Output of ip addr:

[root@rhel-kvm-ovs-01 ~]# ip addr

1: lo: mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno16777736: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:b7:2d:7f brd ff:ff:ff:ff:ff:ff

inet 192.168.128.61/24 brd 192.168.128.255 scope global eno16777736

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:feb7:2d7f/64 scope link

valid_lft forever preferred_lft forever

3: virbr0: mtu 1500 qdisc noqueue state DOWN

link/ether 00:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: mtu 1500 qdisc pfifo_fast state DOWN qlen 500

link/ether 52:54:00:fc:80:90 brd ff:ff:ff:ff:ff:ff

5: ovs-system: mtu 1500 qdisc noop state DOWN

link/ether ea:e8:f2:40:1f:55 brd ff:ff:ff:ff:ff:ff

6: ovsbr0: mtu 1500 qdisc noqueue state UNKNOWN

link/ether a6:ba:78:70:5b:4e brd ff:ff:ff:ff:ff:ff

inet6 fe80::a4ba:78ff:fe70:5b4e/64 scope link

valid_lft forever preferred_lft forever

7: mgmt0: mtu 1500 qdisc noqueue state UNKNOWN

link/ether b2:b8:67:7d:58:41 brd ff:ff:ff:ff:ff:ff

inet 192.168.57.2/24 brd 192.168.57.255 scope global mgmt0

valid_lft forever preferred_lft forever

inet6 fe80::b0b8:67ff:fe7d:5841/64 scope link

valid_lft forever preferred_lft forever

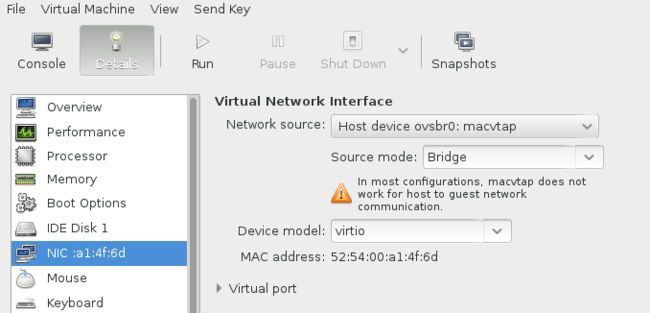

10: macvtap0@ovsbr0: mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 500

link/ether 52:54:00:b5:99:36 brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:feb5:9936/64 scope link

valid_lft forever preferred_lft forever

12: macvtap1@ovsbr0: mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 500

link/ether 52:54:00:8f:83:bc brd ff:ff:ff:ff:ff:ff

inet6 fe80::5054:ff:fe8f:83bc/64 scope link

valid_lft forever preferred_lft forever

For the VM NIC mode that produces interfaces such as macvtap0@ovsbr0, see: http://blog.csdn.net/dylloveyou/article/details/53283598

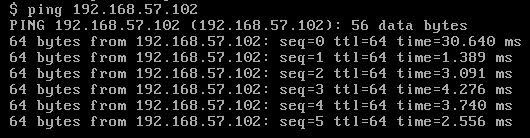

5. Test

The configuration above shows only the steps for node1; node2 is configured in the same way.
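Since only the node1 side is listed, the mirrored VXLAN command for node2 can be sketched as follows. The sketch assumes node2 uses the same bridge and port names (ovsbr0, vxlan0); vxlan_cmd is a hypothetical helper that only prints the command so it can be reviewed before being run.

```shell
#!/bin/sh
# Sketch: print the ovs-vsctl command that adds a VXLAN port pointing at the peer host.
# vxlan_cmd is a hypothetical helper; it prints the command and does not run it.
# Arguments: bridge name, port name, IP of the remote tunnel endpoint.
vxlan_cmd() {
    bridge=$1; port=$2; remote_ip=$3
    printf 'ovs-vsctl add-port %s %s -- set interface %s type=vxlan options:remote_ip=%s\n' \
        "$bridge" "$port" "$port" "$remote_ip"
}

# node1 (192.168.128.61) points at node2:
#   vxlan_cmd ovsbr0 vxlan0 192.168.128.62
# node2 (192.168.128.62) points back at node1 (names assumed identical on node2):
#   vxlan_cmd ovsbr0 vxlan0 192.168.128.61
```

Each side's remote_ip is the other host's address on the 192.168.128.0/24 network, so the two commands differ only in that one value.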

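Communication between VMs within node1

kvm01 ping kvm02:

Communication between a VM on node1 and a VM on node2

kvm01 on node1 pings kvm02 on node2:

The ping fails.

Investigation showed that the firewall was the cause, so disable the firewall: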

systemctl stop firewalld

systemctl disable firewalld
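A narrower alternative to disabling firewalld entirely would be to open only the VXLAN port. This is a sketch under an assumption: the test above only confirmed that stopping firewalld fixes the ping, so it is assumed here that the blocked traffic was the VXLAN encapsulation on UDP port 4789 (the default destination port for VXLAN tunnels in Open vSwitch). open_udp_port_cmds is a hypothetical helper that prints the firewall-cmd commands rather than running them.

```shell
#!/bin/sh
# Sketch: print firewalld commands that open one UDP port instead of disabling firewalld.
# open_udp_port_cmds is a hypothetical helper; it prints commands and does not run them.
# Argument: UDP port number (defaults to 4789, the standard VXLAN port).
open_udp_port_cmds() {
    port=${1:-4789}
    printf 'firewall-cmd --permanent --add-port=%s/udp\n' "$port"
    printf 'firewall-cmd --reload\n'
}

# Review the output first, then run it as root on both nodes, for example:
#   open_udp_port_cmds | sh
```

This was not part of the original test, so it is worth confirming with a ping between the VMs after applying it on both nodes.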

Packets captured on ovsbr0:

[root@rhel-kvm-ovs-01 ~]# tcpdump -i ovsbr0

tcpdump: WARNING: ovsbr0: no IPv4 address assigned

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on ovsbr0, link-type EN10MB (Ethernet), capture size 65535 bytes

16:24:30.924042 IP 192.168.57.102 > 192.168.57.11: ICMP echo request, id 27905, seq 130, length 64

16:24:30.926714 IP 192.168.57.11 > 192.168.57.102: ICMP echo reply, id 27905, seq 130, length 64

16:24:31.925557 IP 192.168.57.102 > 192.168.57.11: ICMP echo request, id 27905, seq 131, length 64

16:24:31.926446 IP 192.168.57.11 > 192.168.57.102: ICMP echo reply, id 27905, seq 131, length 64

6. Other network configuration modes for virtual machines

A newly created virtual machine uses the following default network configuration:

[root@rhel-kvm-ovs-01 ~]# cd /etc/libvirt/qemu/networks

[root@rhel-kvm-ovs-01 networks]# cat default.xml

<network>
  <name>default</name>
  <uuid>1bd83fc4-bced-4d3b-9ac1-71754d1ea851</uuid>
  <forward mode='nat'/>
  <bridge name='virbr0' stp='on' delay='0'/>
  <mac address='52:54:00:80:1a:b4'/>
  <ip address='192.168.122.1' netmask='255.255.255.0'>
    <dhcp>
      <range start='192.168.122.2' end='192.168.122.254'/>
    </dhcp>
  </ip>
</network>

IP addresses in 192.168.122.0/24 are assigned via DHCP, and the outside network is reached in NAT mode.

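Start the kvm03 virtual machine with its network set to default.

It is assigned the IP 192.168.122.229.

Through NAT mode it can also reach the outside network.

We have already created ovsbr0. There are two ways to use this bridge: 1) the setup described earlier in this article, or 2) creating a new libvirt network. Option 2 is described in detail below.

Modeled on default, create a new network that includes ovsbr0. Create ovsnet.xml with the following content:

<network>
  <name>ovsbr0</name>
  <forward mode='bridge'/>
  <bridge name='ovsbr0'/>
  <virtualport type='openvswitch'/>
</network>

Use the following commands to define the network in libvirt, start it, and set it to start automatically: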

virsh net-define ovsnet.xml

virsh net-start ovsbr0

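virsh net-autostart ovsbr0

The network of kvm03 can now be changed from default to ovsbr0.

Because no DHCP is configured, the IP of kvm03 must be set manually (192.168.57.13, in the same subnet as kvm01 and kvm02).

Likewise, kvm01, kvm02, and the VMs on node2 are switched to this network mode.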
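Because the same libvirt network also has to be defined on node2, writing the definition file can be scripted. The following is a minimal sketch: write_ovs_net is a hypothetical helper name, and it simply reproduces the ovsnet.xml content described in this section for a given bridge name.

```shell
#!/bin/sh
# Sketch: write a libvirt network definition for an Open vSwitch bridge to a file.
# write_ovs_net is a hypothetical helper. Arguments: bridge name, output path.
# The network is given the same name as the bridge, as in this article.
write_ovs_net() {
    bridge=$1; out=$2
    cat > "$out" <<EOF
<network>
  <name>$bridge</name>
  <forward mode='bridge'/>
  <bridge name='$bridge'/>
  <virtualport type='openvswitch'/>
</network>
EOF
}

# Example on each node:
#   write_ovs_net ovsbr0 ovsnet.xml
#   virsh net-define ovsnet.xml
```

After defining and starting the network, virsh net-list --all shows whether it is active and set to autostart.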

[root@rhel-kvm-ovs-01 ~]# tcpdump -i vnet2

tcpdump: WARNING: vnet2: no IPv4 address assigned

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on vnet2, link-type EN10MB (Ethernet), capture size 65535 bytes

16:52:46.772500 IP 192.168.57.13 > 192.168.57.102: ICMP echo request, id 25601, seq 0, length 64

16:52:46.773750 IP 192.168.57.102 > 192.168.57.13: ICMP echo reply, id 25601, seq 0, length 64

16:52:47.774675 IP 192.168.57.13 > 192.168.57.102: ICMP echo request, id 25601, seq 1, length 64

16:52:47.775324 IP 192.168.57.102 > 192.168.57.13: ICMP echo reply, id 25601, seq 1, length 64

Now look at OVS again; it matches the lab environment diagram at the beginning:

[root@rhel-kvm-ovs-01 ~]# ovs-vsctl show

8e3f7c3f-3d0d-4068-92d0-c0bd0c6651ce
    Bridge "ovsbr0"
        Port "vnet0"
            Interface "vnet0"
        Port "vnet1"
            Interface "vnet1"
        Port "mgmt0"
            Interface "mgmt0"
                type: internal
        Port "vxlan0"
            Interface "vxlan0"
                type: vxlan
                options: {remote_ip="192.168.128.62"}
        Port "ovsbr0"
            Interface "ovsbr0"
                type: internal
        Port "vnet2"
            Interface "vnet2"
    ovs_version: "2.5.1"

This differs from the earlier output: this time there are additional vnet* ports.
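To pick out just the VM-facing vnet* ports without reading the full ovs-vsctl show output, the port list of the bridge can be filtered. This is a small sketch; only_vnet_ports is a hypothetical helper name, and it assumes one port name per line on stdin, which is how ovs-vsctl list-ports prints them.

```shell
#!/bin/sh
# Sketch: keep only the vnet* port names from a list of port names on stdin.
# only_vnet_ports is a hypothetical helper name used only for illustration.
only_vnet_ports() {
    grep '^vnet[0-9][0-9]*$'
}

# On the host, feed it the port list of the bridge:
#   ovs-vsctl list-ports ovsbr0 | only_vnet_ports
```

Ports such as mgmt0, vxlan0, and the bridge's own internal port are filtered out, leaving one line per running VM interface attached through the libvirt network.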