Kafka-connect-jdbc-source: connecting to a MySQL database in practice


1. Create the MySQL database

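To avoid accidentally modifying or deleting data when Kafka connects to the database, create a dedicated read-only user (kafka) that is used only for reading.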

Run the following as root:

```sql
-- Create the database
create database test;

-- Create the read-only user
create user 'kafka'@'127.0.0.1' identified by '123456';

-- Grant SELECT only
grant select on test.* to 'kafka'@'127.0.0.1';

-- Reload the privilege tables (not strictly needed after GRANT, but harmless)
flush privileges;

-- Create the test table in the test database
use test;

CREATE TABLE `sys_config` (
  `cfg_id` int(11) NOT NULL,
  `cfg_name` varchar(20) DEFAULT NULL,
  `cfg_notes` varchar(50) DEFAULT NULL,
  `cfg_value` varchar(20) DEFAULT NULL,
  `modified` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP,
  PRIMARY KEY (`cfg_id`)
);
```

Log in to MySQL as kafka to verify that the database is accessible:

```
mysql -u kafka -p -h 127.0.0.1
```
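The same check can be run from Java over JDBC, which is closer to how the connector itself connects. This is a minimal sketch, assuming MySQL Connector/J is on the classpath; the class `ReadOnlyUserCheck` and its `buildUrl` helper are hypothetical. `buildUrl` reproduces the `connection.url` used in the connector configuration, and the write test uses `WHERE 1 = 0` so that nothing changes even if the user turns out to have write access.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

// Hypothetical helper: checks that the "kafka" user can read but not write.
public class ReadOnlyUserCheck {

    /** Builds a MySQL JDBC URL with the credentials passed as query parameters. */
    static String buildUrl(String host, int port, String db, String user, String password, String timezone) {
        return "jdbc:mysql://" + host + ":" + port + "/" + db
                + "?useUnicode=true&characterEncoding=utf8"
                + "&user=" + user + "&password=" + password
                + "&serverTimezone=" + timezone;
    }

    public static void main(String[] args) throws SQLException {
        String url = buildUrl("127.0.0.1", 3306, "test", "kafka", "123456", "Asia/Shanghai");
        try (Connection conn = DriverManager.getConnection(url);
             Statement st = conn.createStatement()) {
            // Reading should succeed
            try (ResultSet rs = st.executeQuery("SELECT COUNT(*) FROM sys_config")) {
                rs.next();
                System.out.println("rows in sys_config: " + rs.getLong(1));
            }
            // Writing should be rejected, since only SELECT was granted (WHERE 1 = 0 touches no rows anyway)
            try {
                st.executeUpdate("UPDATE sys_config SET cfg_value = cfg_value WHERE 1 = 0");
                System.out.println("WARNING: UPDATE was allowed, the user is not read-only");
            } catch (SQLException e) {
                System.out.println("write rejected as expected: " + e.getMessage());
            }
        }
    }
}
```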

2. MySQL source connector configuration

Source connector configuration file `mysql-whitelist-timestamp-source.properties` (the startup script below loads it from the `config` directory):

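```properties
name=mysql-whitelist-timestamp-source
connector.class=io.confluent.connect.jdbc.JdbcSourceConnector
# The connector uses at most one task per table, so with a single table only one task does any work
tasks.max=10
connection.url=jdbc:mysql://127.0.0.1:3306/test?useUnicode=true&characterEncoding=utf8&user=kafka&password=123456&serverTimezone=Asia/Shanghai
table.whitelist=sys_config
# Detect new and changed rows through the timestamp column below
mode=timestamp
timestamp.column.name=modified
# Topic name = prefix + table name, i.e. mysql-sys_config
topic.prefix=mysql-
```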
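Because the topic name is `topic.prefix` followed by the table name, `sys_config` ends up in the topic `mysql-sys_config`, which is the topic the consumers in the later steps subscribe to. A small sketch that loads the properties above with `java.util.Properties` and derives the topic names; `TopicNames` is a hypothetical helper and only handles a comma-separated `table.whitelist`.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical helper: derives the Kafka topic names a JDBC source config will write to.
public class TopicNames {

    static List<String> topicsFor(Properties cfg) {
        String prefix = cfg.getProperty("topic.prefix", "");
        List<String> topics = new ArrayList<>();
        // table.whitelist is a comma-separated list of table names
        for (String table : cfg.getProperty("table.whitelist", "").split(",")) {
            if (!table.isBlank()) {
                topics.add(prefix + table.trim());
            }
        }
        return topics;
    }

    public static void main(String[] args) throws IOException {
        String config = String.join("\n",
                "name=mysql-whitelist-timestamp-source",
                "table.whitelist=sys_config",
                "mode=timestamp",
                "timestamp.column.name=modified",
                "topic.prefix=mysql-");
        Properties cfg = new Properties();
        cfg.load(new StringReader(config));
        System.out.println(topicsFor(cfg)); // [mysql-sys_config]
    }
}
```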

3. Start the source connector

Startup script `8.1-kafka-connect-jdbc.bat` (run from the Kafka installation directory):

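```bat
@echo off
rem Start 8.1-connect-jdbc
title connect-jdbc-[%date% %time%]
rem Put the kafka-connect-jdbc jars on the classpath
rem (the MySQL JDBC driver jar must be reachable too, e.g. copied into the same directory)
set CLASSPATH=%cd%\plugins\kafka-connect-jdbc\*
rem Start Connect in standalone mode with the MySQL source connector
bin\windows\connect-standalone.bat config/connect-standalone.properties config/mysql-whitelist-timestamp-source.properties
pause
```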

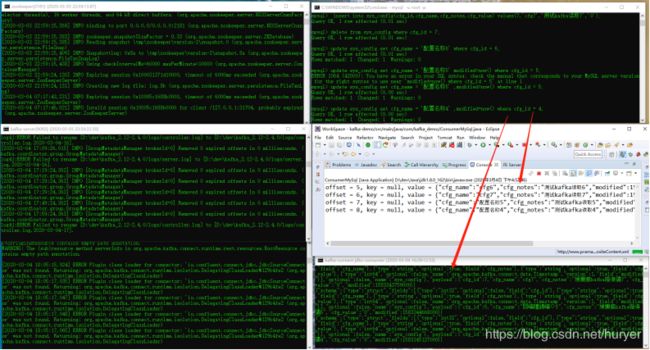

Screenshot of the run

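Don't get hung up on the class-loader error below for now. In my tests, even the official file source connector example printed a similar "plugin not found" message, and the connector still worked. (I tried adding a pile of jar files to make it go away, but because of the dependency layering the error persisted; it does not affect functionality.) The message most likely appears because the connector is loaded through `CLASSPATH` rather than from a directory listed in `plugin.path` in `connect-standalone.properties`, so Connect has no isolated plugin class loader for it and falls back to its default loader, as the "Returning: ...DelegatingClassLoader" part of the message indicates.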

```
ERROR Plugin class loader for connector: 'io.confluent.connect.jdbc.JdbcSourceConnector' was not found. Returning: org.apache.kafka.connect.runtime.isolation.DelegatingClassLoader@129b4fe2 (org.apache.kafka.connect.runtime.isolation.DelegatingClassLoader)
```
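To tell this harmless loader message apart from a jar that is genuinely missing, you can check whether the classes load at all from the same classpath. A minimal sketch; `ClasspathCheck` is hypothetical, and the driver class name `com.mysql.cj.jdbc.Driver` is an assumption (it is the Connector/J 8.x name; 5.x uses `com.mysql.jdbc.Driver`). Run it with the same jars, e.g. `java -cp "plugins\kafka-connect-jdbc\*;." ClasspathCheck`.

```java
// Hypothetical diagnostic: reports whether the connector and driver classes can be loaded.
public class ClasspathCheck {

    /** Returns true if the class (and the classes it links against) can be loaded, without initializing it. */
    static boolean isAvailable(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            // ClassNotFoundException: jar missing; LinkageError: jar present but a dependency is missing
            return false;
        }
    }

    public static void main(String[] args) {
        String[] classes = {
            "io.confluent.connect.jdbc.JdbcSourceConnector", // from plugins\kafka-connect-jdbc
            "com.mysql.cj.jdbc.Driver"                       // assumed Connector/J 8.x driver class
        };
        for (String c : classes) {
            System.out.println(c + " -> " + (isAvailable(c) ? "found" : "MISSING"));
        }
    }
}
```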

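4. View connector plugin information

Open http://127.0.0.1:8083/connector-plugins (the Kafka Connect REST API, which listens on port 8083 by default); `io.confluent.connect.jdbc.JdbcSourceConnector` should appear in the list.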
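The same check can be scripted. A minimal sketch using the JDK 11 `java.net.http.HttpClient`; it assumes the response is a JSON array of objects with a `"class"` field, and `ConnectorPlugins`/`listsConnector` are hypothetical names. The string match is deliberately naive; a JSON library would be more robust.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Sketch: query the Connect REST API and check whether the JDBC source connector is installed.
public class ConnectorPlugins {

    static final String JDBC_SOURCE = "io.confluent.connect.jdbc.JdbcSourceConnector";

    /** Naive check: looks for the quoted class name anywhere in the JSON body. */
    static boolean listsConnector(String body, String className) {
        return body.contains("\"" + className + "\"");
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest
                .newBuilder(URI.create("http://127.0.0.1:8083/connector-plugins"))
                .GET()
                .build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.body());
        System.out.println("JDBC source installed: " + listsConnector(response.body(), JDBC_SOURCE));
    }
}
```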

5. Start the Kafka console consumer

To verify that data is being read from MySQL into Kafka, start a consumer:

File `8.2-kafka-connect-jdbc-consumer.bat`:

```bat
@echo off
rem 8.2-kafka-connect-jdbc-consumer
title kafka-connect-jdbc-consumer-[%date% %time%]
rem Read the connector's topic (topic.prefix + table name) from the beginning
bin\windows\kafka-console-consumer.bat --bootstrap-server localhost:9092 --topic mysql-sys_config --from-beginning
pause
```

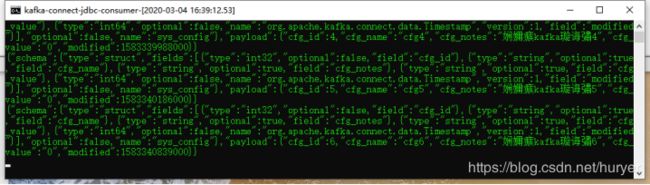

Screenshot of the run

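During testing, Chinese characters were garbled in the console consumer's output (cause unknown); they display correctly when the same messages are consumed from Java.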
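One common cause of garbled Chinese text, not confirmed for this setup, is bytes written in one charset being decoded in another (for example UTF-8 message bytes shown with a GBK console code page). A small illustration of the effect; `MojibakeDemo` is a hypothetical name and the string is written with Unicode escapes so the source file's own encoding does not matter.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

// Illustration only: decoding bytes with a different charset than they were written in.
public class MojibakeDemo {

    static String decodeAs(String text, Charset written, Charset read) {
        return new String(text.getBytes(written), read);
    }

    public static void main(String[] args) {
        String original = "\u4e2d\u6587"; // the two characters zhong wen ("Chinese")
        Charset gbk = Charset.forName("GBK");
        // Same charset on both sides: the text survives unchanged
        System.out.println(decodeAs(original, StandardCharsets.UTF_8, StandardCharsets.UTF_8).equals(original)); // true
        // UTF-8 bytes read as GBK: different characters come out
        System.out.println(decodeAs(original, StandardCharsets.UTF_8, gbk).equals(original)); // false
    }
}
```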

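6. Start a Java consumer

The consumer below needs the `kafka-clients` and `fastjson` libraries on its classpath.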

```java
import java.time.Duration;
import java.util.Arrays;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONObject;

public class ConsumerMySql {

    public static void main(String[] args) {
        autoCommitConsumer();
    }

    /**
     * Consume the topic with automatic offset commits.
     */
    public static void autoCommitConsumer() {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", "localhost:9092");
        props.setProperty("group.id", "test");
        // Commit offsets automatically once per second
        props.setProperty("enable.auto.commit", "true");
        props.setProperty("auto.commit.interval.ms", "1000");
        // Start from the earliest offset when the group has no committed offset yet (like --from-beginning)
        props.setProperty("auto.offset.reset", "earliest");
        props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

        // try-with-resources closes the consumer if the poll loop exits with an exception
        try (KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props)) {
            // Topic name = topic.prefix + table name
            consumer.subscribe(Arrays.asList("mysql-sys_config"));
            System.out.println("Start receiving messages...");
            while (true) {
                ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));
                for (ConsumerRecord<String, String> record : records) {
                    if (record.value() == null) {
                        continue; // skip records without a value
                    }
                    // To print the raw message instead:
                    // System.out.printf("offset = %d, key = %s, value = %s%n", record.offset(), record.key(), record.value());

                    // With the JsonConverter and schemas enabled, each message is {"schema": ..., "payload": ...};
                    // print only the row data in "payload"
                    JSONObject jo = JSON.parseObject(record.value());
                    String v = jo.getString("payload");
                    System.out.printf("offset = %d, key = %s, value = %s%n", record.offset(), record.key(), v);
                }
            }
        }
    }
}
```

Screenshot of the complete setup running

Open issues

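1. Deletions are not synchronized. The JDBC source connector only runs SELECT queries against the table, so a deleted row simply stops being returned and no message is produced for it. I'm still looking into this; more in a later post.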

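2. The timestamp column currently has to be updated by hand for a change to be synchronized, because in timestamp mode the connector only picks up rows whose `modified` value has advanced. I plan to have it updated automatically by a trigger; declaring the column with MySQL's `ON UPDATE CURRENT_TIMESTAMP` attribute would achieve the same without one.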
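Both open issues follow from how timestamp mode works: on each poll the connector, roughly speaking, selects the rows whose `modified` value is later than the largest value it has already emitted. The simplified in-memory simulation below (it ignores details such as `timestamp.delay.interval.ms` and the actual SQL the connector generates) shows why an UPDATE that leaves `modified` unchanged and a DELETE both go unnoticed. `TimestampModeSim` and its `Row` type are hypothetical.

```java
import java.time.LocalDateTime;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified model of JDBC source "timestamp" mode, for illustration only (not the connector's code).
public class TimestampModeSim {

    /** One row of a hypothetical sys_config table (only the columns that matter here). */
    static final class Row {
        final int cfgId;
        final String cfgValue;
        final LocalDateTime modified;

        Row(int cfgId, String cfgValue, LocalDateTime modified) {
            this.cfgId = cfgId;
            this.cfgValue = cfgValue;
            this.modified = modified;
        }

        @Override
        public String toString() {
            return cfgId + "=" + cfgValue;
        }
    }

    private final Map<Integer, Row> table = new LinkedHashMap<>();
    // The offset the connector remembers: the largest "modified" value emitted so far
    private LocalDateTime lastSeen = LocalDateTime.MIN;

    void upsert(Row row) {
        table.put(row.cfgId, row);
    }

    void delete(int cfgId) {
        table.remove(cfgId);
    }

    /** Emits rows with modified > lastSeen, oldest first, then advances the offset. */
    List<Row> poll() {
        List<Row> emitted = new ArrayList<>();
        for (Row r : table.values()) {
            if (r.modified.isAfter(lastSeen)) {
                emitted.add(r);
            }
        }
        emitted.sort(Comparator.comparing((Row r) -> r.modified));
        if (!emitted.isEmpty()) {
            lastSeen = emitted.get(emitted.size() - 1).modified;
        }
        return emitted;
    }

    public static void main(String[] args) {
        TimestampModeSim sim = new TimestampModeSim();
        LocalDateTime t0 = LocalDateTime.of(2020, 1, 1, 10, 0);

        sim.upsert(new Row(1, "a", t0));
        System.out.println(sim.poll()); // [1=a]  the initial row is picked up

        sim.upsert(new Row(1, "b", t0)); // UPDATE that leaves "modified" unchanged
        System.out.println(sim.poll()); // []  the change is missed

        sim.upsert(new Row(1, "c", t0.plusMinutes(1))); // UPDATE that also bumps "modified"
        System.out.println(sim.poll()); // [1=c]

        sim.delete(1); // DELETE
        System.out.println(sim.poll()); // []  nothing is emitted for a delete
    }
}
```

This is why bumping `modified` on every UPDATE (by trigger or column attribute) fixes the second issue, while deletes need a different mechanism altogether.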