Architecture

- The deployment environment is three CentOS 7 virtual machines running on VMware.

Basic Environment

Disable the firewall (all nodes)

systemctl stop firewalld.service # stop firewalld

systemctl disable firewalld.service # keep firewalld from starting at boot

Time synchronization

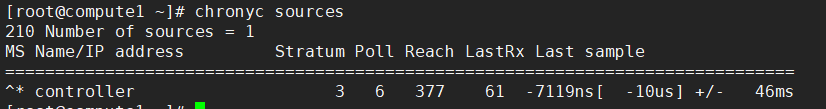

Synchronize time against the controller node.

Controller node

yum install chrony

Edit /etc/chrony.conf

Set this option: allow 192.168.200.0/24

systemctl enable chronyd.service

systemctl start chronyd.service

Other nodes (synchronization is verified on the compute and network nodes)

yum install chrony

Edit /etc/chrony.conf

Set this option: server controller iburst

systemctl enable chronyd.service

systemctl start chronyd.service

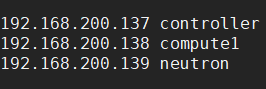

- Keep /etc/hosts identical on all nodes
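
Every node must resolve the same host names, because the configuration files in this guide refer to nodes by name (controller, neutron). The following is a minimal sketch that collects the entries in a scratch file for review. The addresses 192.168.200.137 and 192.168.200.138 come from this guide; the host names compute1 and neutron and the address 192.168.200.139 for the network node are assumptions and need to be replaced with the real values.

```shell
#!/bin/sh
# Sketch: collect the shared host entries in a scratch file for review.
# ASSUMPTIONS: the compute1 and neutron host names, and 192.168.200.139
# for the network node; replace them with the real values.
HOSTS_SNIPPET="$(mktemp)"

cat > "$HOSTS_SNIPPET" <<'EOF'
192.168.200.137 controller
192.168.200.138 compute1
192.168.200.139 neutron
EOF

# After review, append the same lines to /etc/hosts on every node:
#   cat "$HOSTS_SNIPPET" >> /etc/hosts
cat "$HOSTS_SNIPPET"
```

Writing to a scratch file first keeps a typo from breaking name resolution on a live node.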

Install the OpenStack packages on all nodes

yum install centos-release-openstack-pike

yum upgrade

yum install python-openstackclient

SQL database (installed on the controller node)

yum install mariadb mariadb-server python2-PyMySQL

edit /etc/my.cnf.d/openstack.cnf
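
The contents of openstack.cnf are not shown here. As a rough sketch, the settings below follow the upstream Pike installation guide, with bind-address set to the controller address used in this guide (192.168.200.137). The file is generated in a scratch location; the scratch path and the CNF and BIND_ADDRESS variable names are illustrative only.

```shell
#!/bin/sh
# Sketch: generate a candidate openstack.cnf in a scratch file.
# The settings follow the upstream install guide; this guide does not list them.
CNF="$(mktemp)"
BIND_ADDRESS="192.168.200.137"   # controller management IP from this guide

cat > "$CNF" <<EOF
[mysqld]
bind-address = $BIND_ADDRESS
default-storage-engine = innodb
innodb_file_per_table = on
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8
EOF

# After review: cp "$CNF" /etc/my.cnf.d/openstack.cnf
cat "$CNF"
```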

systemctl restart mariadb.service

systemctl enable mariadb.service

mysql_secure_installation

Message queue (controller node)

yum install rabbitmq-server

systemctl enable rabbitmq-server.service

systemctl start rabbitmq-server.service

Add the openstack user:

rabbitmqctl add_user openstack RABBIT_PASS

Grant the openstack user configure, write, and read access:

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/" ...

The Identity service authentication mechanism for services uses Memcached to cache tokens. The memcached service typically runs on the controller node. For production deployments, we recommend enabling a combination of firewalling, authentication, and encryption to secure it.

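yum install memcached python-memcached

Edit /etc/sysconfig/memcached and change the listen address from 127.0.0.1 to 192.168.200.137 (the controller node)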
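
The address change can be rehearsed on a scratch copy before touching the live file. The sketch below assumes the stock file contains the OPTIONS line shown (an assumption about the default CentOS 7 package) and uses illustrative variable names.

```shell
#!/bin/sh
# Sketch: rehearse the edit on a scratch copy instead of the live file.
# ASSUMPTION: the stock file carries the OPTIONS line shown below.
MEMCACHED_CONF="$(mktemp)"
cat > "$MEMCACHED_CONF" <<'EOF'
PORT="11211"
USER="memcached"
MAXCONN="1024"
CACHESIZE="64"
OPTIONS="-l 127.0.0.1,::1"
EOF

CONTROLLER_IP="192.168.200.137"

# Replace the loopback listen address with the controller address.
sed "s/127\.0\.0\.1/$CONTROLLER_IP/" "$MEMCACHED_CONF" > "$MEMCACHED_CONF.new"
mv "$MEMCACHED_CONF.new" "$MEMCACHED_CONF"

grep '^OPTIONS=' "$MEMCACHED_CONF"
```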

systemctl enable memcached.service

systemctl start memcached.service

Database setup (create the databases for all services in one pass)

mysql -u root -p

mysql> CREATE DATABASE keystone;

Allow the keystone user to access the keystone database from the local host:

mysql> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';

Allow access from any host:

mysql> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';

mysql> CREATE DATABASE nova_api;

mysql> CREATE DATABASE nova;

mysql> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

mysql> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

mysql> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

mysql> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

The nova-manage cell_v2 map_cell0 step used later expects a nova_cell0 database as well:

mysql> CREATE DATABASE nova_cell0;

mysql> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

mysql> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

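mysql -u root -p

mysql> CREATE DATABASE neutron;

mysql> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';

mysql> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';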
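
The database statements above repeat one pattern per service: create the database, then grant access from localhost and from any host. A small sketch of generating them is shown below. grant_sql is a hypothetical helper written for this illustration, not part of OpenStack or MariaDB, and it uses CREATE DATABASE IF NOT EXISTS (unlike the plain CREATE DATABASE above) so the output can be replayed safely.

```shell
#!/bin/sh
# Print the CREATE DATABASE and GRANT statements for one service database.
# grant_sql is a hypothetical helper, not an OpenStack or MariaDB tool.
grant_sql() {
    db="$1"; user="$2"; pass="$3"
    printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
    # One grant for local connections, one for connections from any host.
    for host in localhost %; do
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$db" "$user" "$host" "$pass"
    done
}

grant_sql keystone keystone KEYSTONE_DBPASS
grant_sql nova_api nova     NOVA_DBPASS
grant_sql nova     nova     NOVA_DBPASS
grant_sql neutron  neutron  NEUTRON_DBPASS
```

The script only prints SQL. Once the output looks right, it could be piped into mysql -u root -p on the controller node.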

Identity service

- Install the keystone service and the Apache server

yum install openstack-keystone httpd mod_wsgi

Edit the /etc/keystone/keystone.conf

[database]

...

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

Remove any other connection options from the [database] section.

[token]

provider = fernet

Populate the Identity service database:

su -s /bin/sh -c "keystone-manage db_sync" keystone

Initialize the Fernet key repositories:

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Bootstrap the Identity service:

keystone-manage bootstrap --bootstrap-password ADMIN_PASS \
  --bootstrap-admin-url http://controller:35357/v3/ \
  --bootstrap-internal-url http://controller:35357/v3/ \
  --bootstrap-public-url http://controller:5000/v3/ \
  --bootstrap-region-id RegionOne

Configure the Apache HTTP server

Edit the /etc/httpd/conf/httpd.conf file

Set ServerName to point to the controller node:

ServerName controller

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

Finalize the installation

Start the HTTP service

systemctl enable httpd.service

systemctl start httpd.service

Configure environment variables

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

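Save the environment variables above to an admin-openrc file and run . admin-openrc before issuing commands so that they take effect.

Create a service project to verify that the installation succeeded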
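
A minimal sketch of creating and loading the admin-openrc file follows. The scratch directory and the OPENRC variable are illustrative, and ADMIN_PASS stands for the password passed to keystone-manage bootstrap earlier; in practice the file would live in the operator's home directory.

```shell
#!/bin/sh
# Sketch: write admin-openrc into a scratch directory and load it.
# ASSUMPTION: ADMIN_PASS is a placeholder for the real admin password.
OPENRC_DIR="$(mktemp -d)"
OPENRC="$OPENRC_DIR/admin-openrc"

cat > "$OPENRC" <<'EOF'
export OS_USERNAME=admin
export OS_PASSWORD=ADMIN_PASS
export OS_PROJECT_NAME=admin
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://controller:35357/v3
export OS_IDENTITY_API_VERSION=3
EOF

# The file holds a password, so restrict it to the owner.
chmod 600 "$OPENRC"

# Load the variables into the current shell.
. "$OPENRC"
echo "auth URL: $OS_AUTH_URL"
```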

openstack project create --domain default --description "Service Project" service

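With keystone installed, the users, services, and endpoints for every service can be registered in one pass. Repeating the keystone steps once per service, as the official guide does, wastes a lot of time.

- Authenticate as the admin user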

. admin-openrc

Create the nova user

openstack user create --domain default --password-prompt nova

Add the admin role to the nova user

openstack role add --project service --user nova admin

Create the nova service

openstack service create --name nova \
  --description "OpenStack Compute" compute

Create the API endpoints

openstack endpoint create --region RegionOne \
  compute public http://controller:8774/v2.1

openstack endpoint create --region RegionOne \
  compute internal http://controller:8774/v2.1

openstack endpoint create --region RegionOne \
  compute admin http://controller:8774/v2.1

Create the placement user

openstack user create --domain default --password-prompt placement

openstack role add --project service --user placement admin

Create the placement service and API endpoints

openstack service create --name placement --description "Placement API" placement

openstack endpoint create --region RegionOne placement public http://controller:8778

openstack endpoint create --region RegionOne placement internal http://controller:8778

openstack endpoint create --region RegionOne placement admin http://controller:8778

Create the neutron user

openstack user create --domain default --password-prompt neutron

openstack role add --project service --user neutron admin

Create the neutron service and API endpoints

openstack service create --name neutron \
  --description "OpenStack Networking" network

openstack endpoint create --region RegionOne \
  network public http://neutron:9696

openstack endpoint create --region RegionOne \
  network internal http://neutron:9696

openstack endpoint create --region RegionOne \
  network admin http://neutron:9696
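
Each service registers the same URL for its public, internal, and admin interfaces, so the endpoint commands above can be generated rather than typed nine times. The sketch below only prints the commands (a dry run). endpoint_cmds is a hypothetical helper defined here for illustration, not an OpenStack CLI feature.

```shell
#!/bin/sh
# Print (dry run) the three endpoint-creation commands for one service.
# endpoint_cmds is a hypothetical helper defined for this sketch.
endpoint_cmds() {
    service_type="$1"; url="$2"
    for iface in public internal admin; do
        echo "openstack endpoint create --region RegionOne $service_type $iface $url"
    done
}

endpoint_cmds compute   http://controller:8774/v2.1
endpoint_cmds placement http://controller:8778
endpoint_cmds network   http://neutron:9696
```

After sourcing admin-openrc, the printed lines could be reviewed and then piped to sh to run them.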

Compute service

Each service can now be installed and configured.

Configure the controller node first (host 192.168.200.137)

yum install openstack-nova-api openstack-nova-conductor \
  openstack-nova-console openstack-nova-novncproxy \
  openstack-nova-scheduler openstack-nova-placement-api

- Edit /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:RABBIT_PASS@controller

auth_strategy = keystone

my_ip = 192.168.200.137

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api_database]

# ...

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

[database]

# ...

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

# ...

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = NOVA_PASS

[vnc]

enabled = true

# ...

vncserver_listen = $my_ip

vncserver_proxyclient_address = $my_ip

[glance]

# ...

api_servers = http://controller:9292

[placement]

# ...

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

password = PLACEMENT_PASS

[oslo_concurrency]

...

lock_path = /var/lib/nova/tmp

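Edit /etc/httpd/conf.d/00-nova-placement-api.conf and add the following to enable access to the Placement API:

<Directory /usr/bin>
   <IfVersion >= 2.4>
      Require all granted
   </IfVersion>
   <IfVersion < 2.4>
      Order allow,deny
      Allow from all
   </IfVersion>
</Directory>

systemctl restart httpd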

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

109e1d4b-536a-40d0-83c6-5f121b82b650

su -s /bin/sh -c "nova-manage db sync" nova

- Verify

nova-manage cell_v2 list_cells

+-------+--------------------------------------+
| Name  | UUID                                 |
+-------+--------------------------------------+
| cell1 | 109e1d4b-536a-40d0-83c6-5f121b82b650 |
| cell0 | 00000000-0000-0000-0000-000000000000 |
+-------+--------------------------------------+

- Finalize installation

systemctl enable openstack-nova-api.service \
  openstack-nova-consoleauth.service openstack-nova-scheduler.service \
  openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl start openstack-nova-api.service \
  openstack-nova-consoleauth.service openstack-nova-scheduler.service \
  openstack-nova-conductor.service openstack-nova-novncproxy.service

Configure the compute node (run on host 192.168.200.138)

yum install openstack-nova-compute

Edit the /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:RABBIT_PASS@controller

auth_strategy = keystone

my_ip = 192.168.200.138

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = NOVA_PASS

[glance]

# ...

api_servers = http://controller:9292

[vnc]

# ...

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

[placement]

# ...

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

password = PLACEMENT_PASS

[libvirt]

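# Run egrep -c '(vmx|svm)' /proc/cpuinfo to check for hardware virtualization support. A result of 1 or more means it is supported and kvm can be used; if the result is 0, use qemu instead.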

virt_type = kvm
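
The choice between kvm and qemu described above can be scripted. The following is a minimal sketch; pick_virt_type is a hypothetical helper name, and it takes an optional path argument only so that it can be tried against a sample file.

```shell
#!/bin/sh
# Count the cpuinfo lines advertising Intel VT-x (vmx) or AMD-V (svm).
# Prints "kvm" when at least one is found, otherwise "qemu".
# pick_virt_type is a hypothetical helper defined for this sketch.
pick_virt_type() {
    cpuinfo="${1:-/proc/cpuinfo}"
    count=$(grep -E -c '(vmx|svm)' "$cpuinfo" 2>/dev/null)
    # An unreadable file leaves count empty, which is treated as 0.
    if [ "${count:-0}" -ge 1 ]; then
        echo kvm
    else
        echo qemu
    fi
}

echo "virt_type = $(pick_virt_type)"
```

Inside a VMware guest the flags only appear when nested virtualization is exposed to the virtual machine, so qemu is a likely result in this environment.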

Finalize installation

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl start libvirtd.service openstack-nova-compute.service

Verify (run on the controller node)

. admin-openrc

openstack compute service list --service nova-compute

Networking service

- Configure self-service networks (run on the network node)

yum install openstack-neutron openstack-neutron-ml2 \
  openstack-neutron-linuxbridge ebtables

Edit the /etc/neutron/neutron.conf

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

transport_url = rabbit://openstack:RABBIT_PASS@controller

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[database]

# ...

connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

[keystone_authtoken]

# ...

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

[nova]

# ...

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = NOVA_PASS

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp

Edit the /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

extension_drivers = port_security

mechanism_drivers = linuxbridge,l2population

[ml2_type_flat]

# ...

flat_networks = provider

[ml2_type_vxlan]

# ...

vni_ranges = 1:1000

[securitygroup]

# ...

enable_ipset = true

Edit the /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

[vxlan]

enable_vxlan = true

local_ip = OVERLAY_INTERFACE_IP_ADDRESS

l2_population = true

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Edit the /etc/neutron/l3_agent.ini

[DEFAULT]

# ...

interface_driver = linuxbridge

Edit the /etc/neutron/dhcp_agent.ini

[DEFAULT]

# ...

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

Edit the /etc/neutron/metadata_agent.ini

[DEFAULT]

# ...

nova_metadata_host = controller

metadata_proxy_shared_secret = METADATA_SECRET

Edit the /etc/nova/nova.conf on the controller node

[neutron]

# ...

url = http://neutron:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

service_metadata_proxy = true

metadata_proxy_shared_secret = METADATA_SECRET

Finalize installation

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
  --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Restart the Compute API service (on the controller node):

systemctl restart openstack-nova-api.service

systemctl enable neutron-server.service \
  neutron-linuxbridge-agent.service neutron-dhcp-agent.service \
  neutron-metadata-agent.service

systemctl start neutron-server.service \
  neutron-linuxbridge-agent.service neutron-dhcp-agent.service \
  neutron-metadata-agent.service

systemctl enable neutron-l3-agent.service

systemctl start neutron-l3-agent.service

Configure networking on the compute node

yum install openstack-neutron-linuxbridge ebtables ipset

Edit the /etc/neutron/neutron.conf

[DEFAULT]

transport_url = rabbit://openstack:RABBIT_PASS@controller

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = NEUTRON_PASS

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp

Edit the /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

[vxlan]

enable_vxlan = true

local_ip = OVERLAY_INTERFACE_IP_ADDRESS

l2_population = true

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Edit the /etc/nova/nova.conf

[neutron]

# ...

url = http://neutron:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

Finalize installation

systemctl restart openstack-nova-compute.service

systemctl enable neutron-linuxbridge-agent.service

systemctl start neutron-linuxbridge-agent.service

Verify the Networking service (run on the controller node)

openstack network agent list

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
| ID                                   | Agent Type         | Host       | Availability Zone | Alive | State | Binary                    |
+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
| f49a4b81-afd6-4b3d-b923-66c8f0517099 | Metadata agent     | controller | None              | True  | UP    | neutron-metadata-agent    |
| 27eee952-a748-467b-bf71-941e89846a92 | Linux bridge agent | controller | None              | True  | UP    | neutron-linuxbridge-agent |
| 08905043-5010-4b87-bba5-aedb1956e27a | Linux bridge agent | compute1   | None              | True  | UP    | neutron-linuxbridge-agent |
| 830344ff-dc36-4956-84f4-067af667a0dc | L3 agent           | controller | nova              | True  | UP    | neutron-l3-agent          |
| dd3644c9-1a3a-435a-9282-eb306b4b0391 | DHCP agent         | controller | nova              | True  | UP    | neutron-dhcp-agent        |
+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

- The dashboard, image, and block storage services must be installed separately.